Submitted: 30 October 2023

Posted: 31 October 2023


Abstract

Keywords:

1. Introduction

2. State of the Art

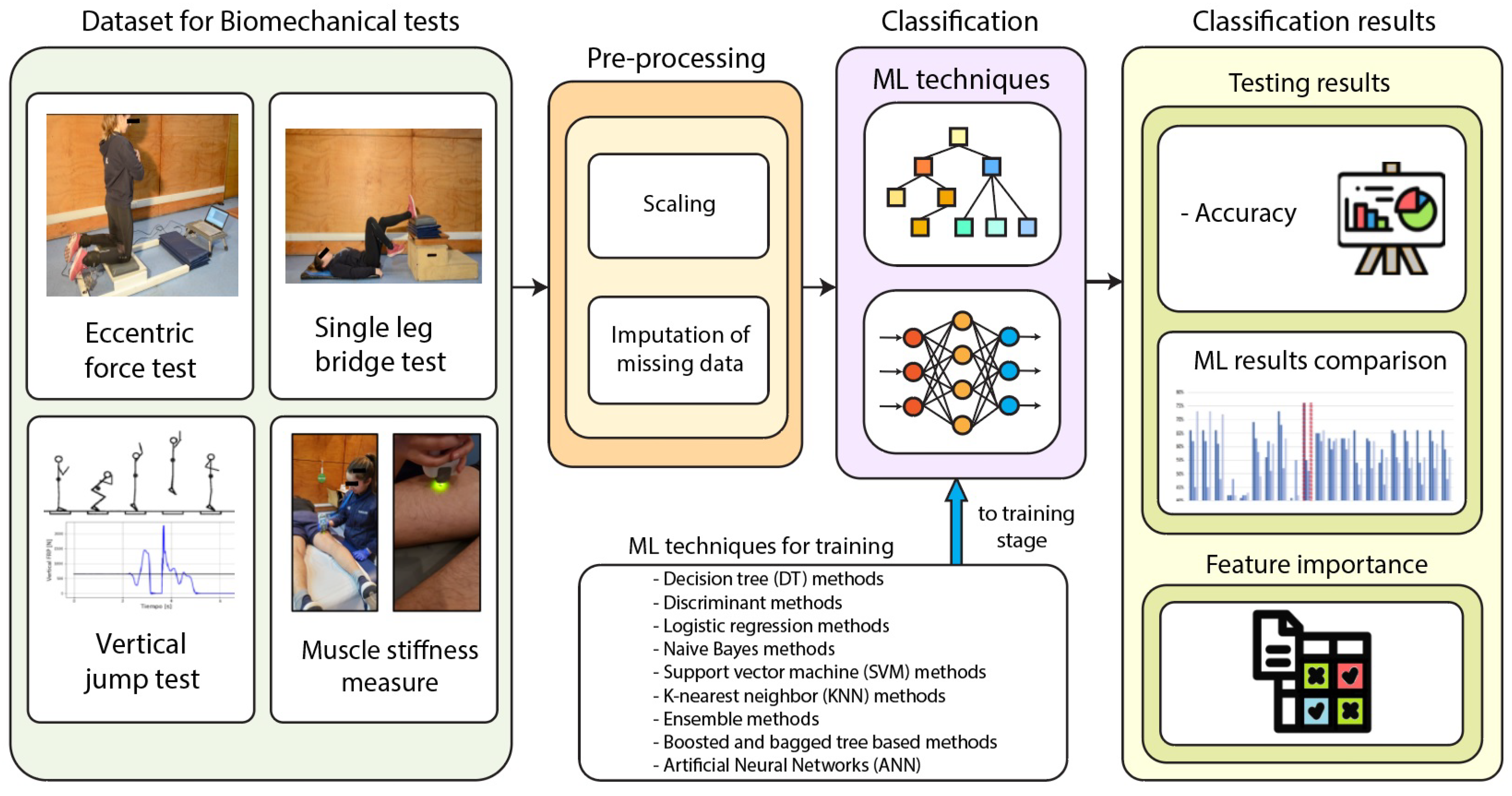

3. Soccer player injury classification architecture

3.1. Dataset for Biomechanical tests

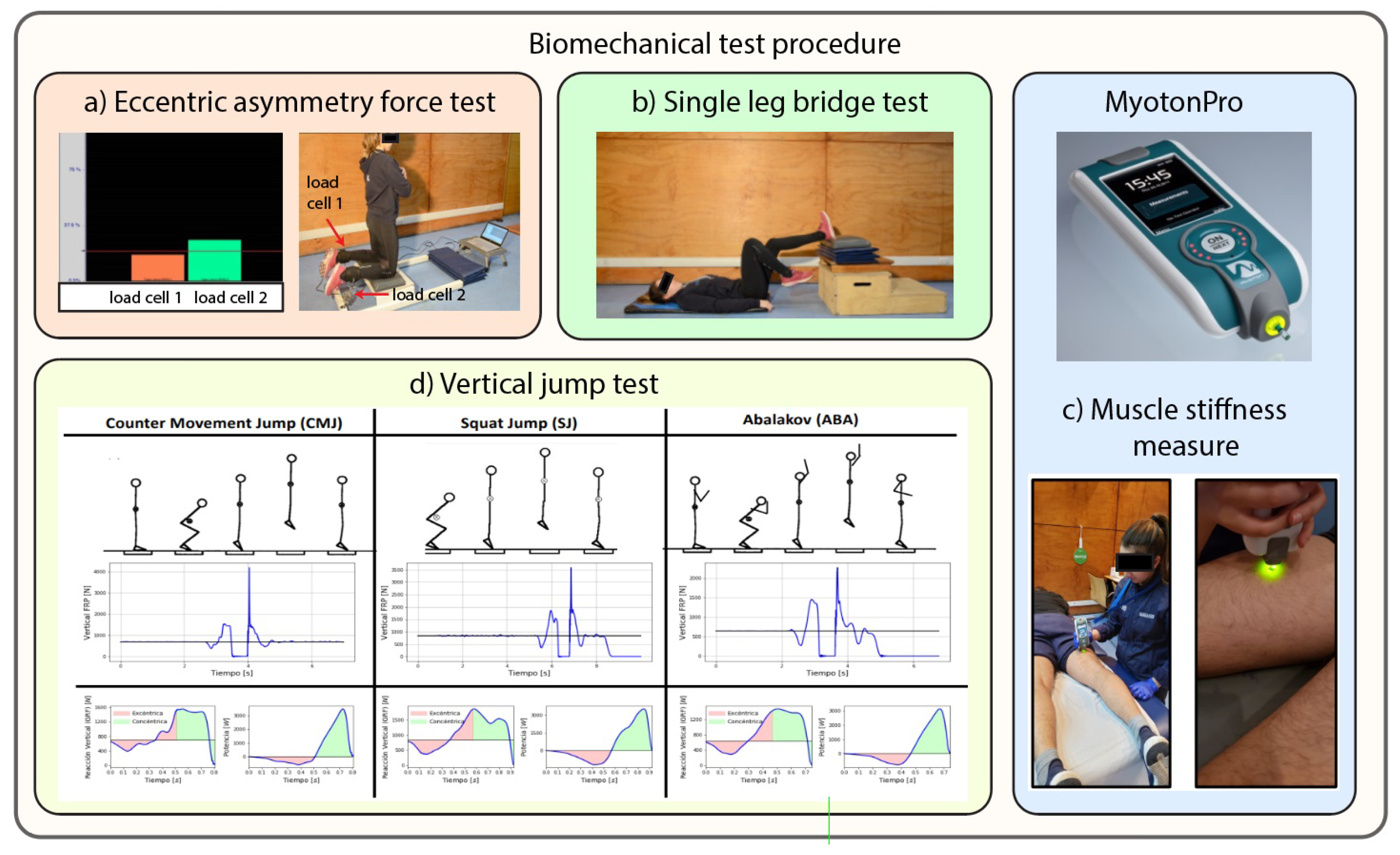

3.1.1. Biomechanical tests

- Eccentric Asymmetry force test (Nordic Hamstring): Participants assume a kneeling position with aligned hips and trunk support (see Figure 2a). The heels are secured, normally by an assistant and in this case by load cells, so that they stay in contact with the ground throughout the exercise. The load cells measure the eccentric activation of the hamstring muscles. The test yields two parameters: maximum right hamstring eccentric force (N) and maximum left hamstring eccentric force (N).

- Single leg bridge test: This clinical test assesses susceptibility to hamstring injury. The participant lies supine on the floor with the heel of the tested leg placed on a 60 cm high box. With the hands crossed over the chest, the participant pushes through the heel to lift the glutes off the ground. In each repetition the glutes must touch the ground before being raised again, without resting (see Figure 2b). The test yields two parameters: the number of repetitions for the right leg and the number of repetitions for the left leg.

- Muscle stiffness measure (Myotonometry): This technique is an objective, non-invasive digital palpation method for superficial skeletal muscles. The measurement specifically targets the hamstring muscles (see Figure 2c) and is performed with the MyotonPRO device. The parameter obtained for both limbs is S, stiffness (N/m), which reflects the muscle's resistance to a force or contraction that deforms its structure or tissue.

- Vertical jump test (Bosco test): This series of vertical jumps evaluates the lower limb extensor muscles from the jump heights and mechanical power attained in different types of vertical jump. It covers morphophysiological characteristics (muscle fiber types), functional attributes (jump heights and mechanical jump power), and neuromuscular features (use of elastic energy and the myotatic reflex, fatigue resistance). The Bosco test uses three jumps performed on a force platform (see Figure 2d): the Countermovement Jump, the Squat Jump, and the Abalakov jump, each recorded both two-legged and one-legged.
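
The tests above produce paired left/right values and jump measurements that are often turned into derived quantities before modelling. The following is a minimal sketch under stated assumptions, not the processing used in this study: the asymmetry index (difference relative to the stronger side) and the function names are hypothetical, and the jump-height formula is the standard flight-time relation h = g·t²/8, which the text does not state was applied here.

```python
G = 9.81  # gravitational acceleration in m/s^2


def asymmetry_percent(left: float, right: float) -> float:
    """Inter-limb asymmetry as a percentage of the stronger side.

    Assumed index for illustration; other definitions exist.
    """
    stronger = max(left, right)
    if stronger <= 0:
        raise ValueError("at least one value must be positive")
    return abs(left - right) / stronger * 100.0


def jump_height_from_flight_time(flight_time_s: float) -> float:
    """Jump height in metres from flight time, using h = g * t^2 / 8."""
    if flight_time_s < 0:
        raise ValueError("flight time must be non-negative")
    return G * flight_time_s ** 2 / 8.0


if __name__ == "__main__":
    # Hypothetical example values, not data from the study.
    print(asymmetry_percent(left=400.0, right=360.0))   # eccentric force in N
    print(jump_height_from_flight_time(0.5))            # flight time in s
```

The same `asymmetry_percent` helper could be applied to any of the bilateral parameters (eccentric force, repetitions, stiffness), since each test reports one value per leg.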

3.2. Pre-processing

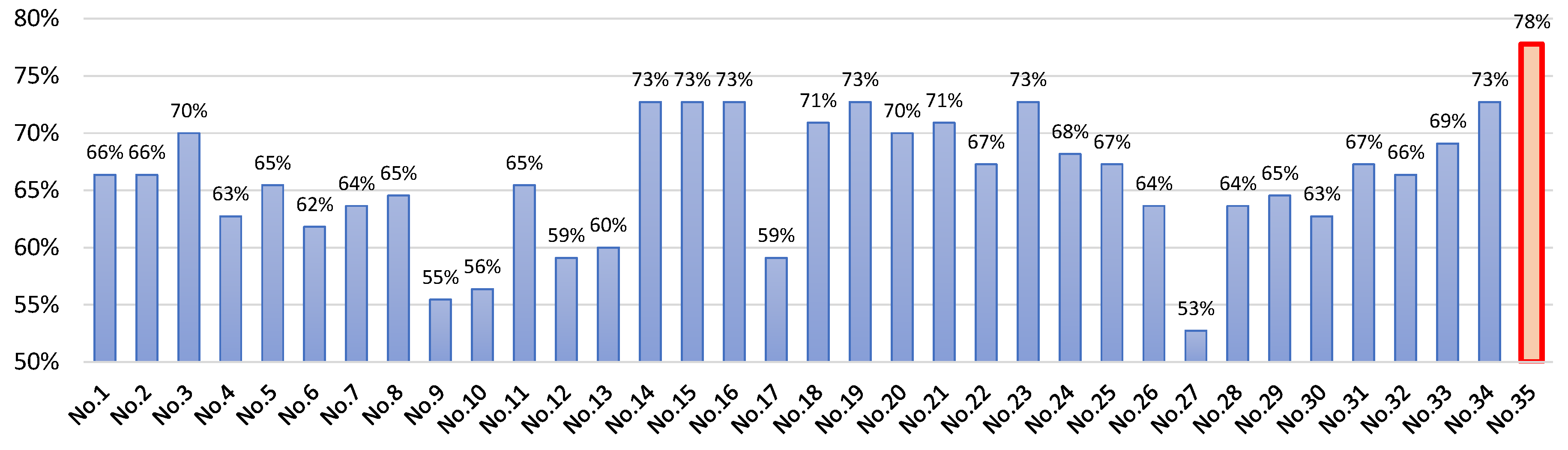

3.3. Classification

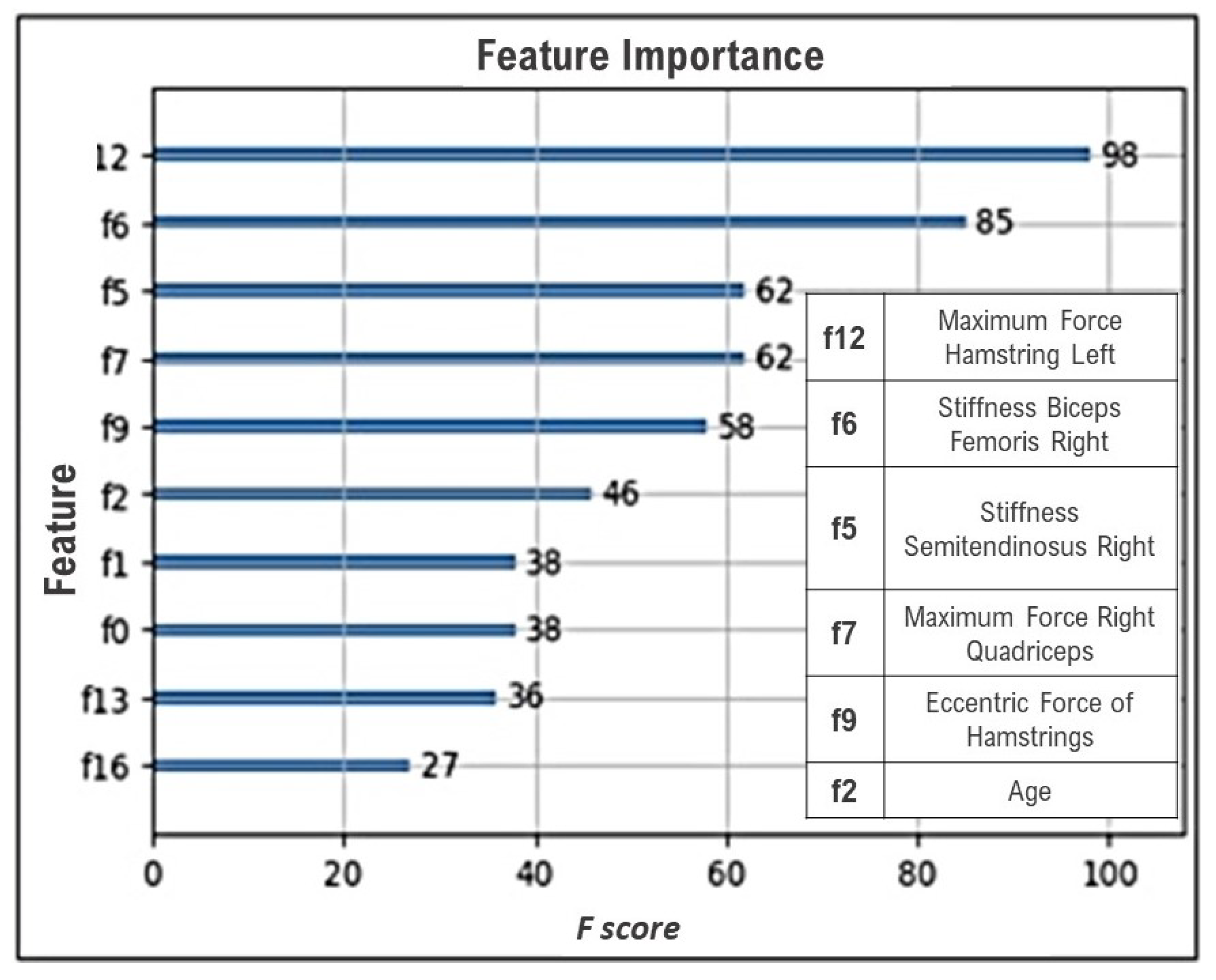

3.4. Most important features

3.5. Results

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments


| No. | Model name | Model configuration | Model description |
|---|---|---|---|
| No.1 | Tree | 100 splits | A flowchart-like structure in which each internal node represents a feature, each branch represents a decision rule, and each leaf node represents the outcome. |
| No.2 | Tree | 20 splits | |
| No.3 | Tree | 4 splits | |
| No.4 | Linear discriminant | Full covariance structure | A statistical technique for binary and multiclass classification, finding the linear combination of features that best separates classes. |
| No.5 | Quadratic discriminant | Full covariance structure | A method similar to linear discriminant analysis, but it assumes that the features follow a Gaussian distribution and estimates the covariance between the classes. |
| No.6 | Binary GLM Logistic Regression | Binomial distribution | Logistic regression with binary outcomes for estimating the probability of a binary outcome using a logistic function. |
| No.7 | Efficient Logistic Regression | L2 regularization, alpha = 0.001, one-vs-one coding | A regression analysis similar to binary logistic regression but implemented efficiently to handle large datasets or high-dimensional data. |
| No.8 | Efficient Linear SVM | L2 regularization, alpha = 0.001, one-vs-one coding | A supervised machine learning algorithm used for classification and regression analysis, finding a hyperplane that best separates classes. |
| No.9 | Gaussian Naive Bayes | Gaussian distribution | A probabilistic classifier assuming that the presence of a particular feature in a class is unrelated to the presence of other features. |
| No.10 | Kernel Naive Bayes | Normal kernel, data standardization | A version of the Naive Bayes classifier that can handle non-linear classification by using kernel methods, transforming data into a higher-dimensional space. |
| No.11 | Linear SVM | Linear kernel, one-vs-one coding, data standardization | A supervised machine learning algorithm used for classification, finding a hyperplane that best separates classes in a linearly separable dataset. |
| No.12 | Quadratic SVM | Quadratic kernel, one-vs-one coding, data standardization | An extension of the SVM algorithm that uses a quadratic kernel to handle non-linearly separable data by mapping it into a higher-dimensional space. |
| No.13 | Cubic SVM | Cubic kernel, one-vs-one coding, data standardization | An extension of the SVM algorithm that uses a cubic kernel to handle highly non-linearly separable data by mapping it into an even higher-dimensional space. |
| No.14 | Fine Gaussian SVM | Kernel scale = 1.6, one-vs-one coding, data standardization | An SVM with a fine Gaussian kernel, suitable for datasets requiring high precision and accuracy. |
| No.15 | Medium Gaussian SVM | Kernel scale = 6.5, one-vs-one coding, data standardization | An SVM with a medium Gaussian kernel, suitable for datasets with moderate complexity and dimensionality. |
| No.16 | Coarse Gaussian SVM | Kernel scale = 26, one-vs-one coding, data standardization | An SVM with a coarse Gaussian kernel, suitable for datasets with lower complexity and dimensionality. |
| No.17 | Fine KNN | Number of neighbors = 1, Euclidean distance | A non-parametric classification algorithm that classifies a data point based on the majority vote of its neighbors, with a fine-tuned distance metric. |
| No.18 | Medium KNN | Number of neighbors = 10, Euclidean distance | A non-parametric classification algorithm that classifies a data point based on the majority vote of its neighbors, with a moderately adjusted distance metric. |
| No.19 | Coarse KNN | Number of neighbors = 100, Euclidean distance | A non-parametric classification algorithm that classifies a data point based on the majority vote of its neighbors, with a roughly adjusted distance metric. |
| No.20 | Cosine KNN | Number of neighbors = 10, Euclidean distance | A variation of the K-Nearest Neighbors algorithm that computes the cosine similarity between data points to measure their similarity. |
| No.21 | Cubic KNN | Number of neighbors = 10, Euclidean distance | A non-parametric classification algorithm that classifies a data point based on the majority vote of its neighbors, with a cubic distance metric. |
| No.22 | Weighted KNN | Number of neighbors = 10, Euclidean distance | A variant of the K-Nearest Neighbors algorithm that assigns weights to the contributions of the neighbors based on their distances. |
| No.23 | Boosted Trees with AdaBoost ensemble | Decision tree learner, maximum splits = 20, learning rate = 0.1 | An ensemble learning method that constructs a strong classifier by combining multiple weak classifiers, such as decision trees, using the AdaBoost algorithm. |
| No.24 | Bagged trees with bag ensemble | Decision tree learner, maximum splits = 109, number of learners = 30 | An ensemble learning technique that combines multiple models, such as decision trees, to improve classification accuracy and stability. |
| No.25 | Subspace discriminant ensemble | Discriminant learner, number of learners = 30, subspace dimension = 10 | An ensemble approach that combines multiple discriminant analysis models to improve the classification performance of the system. |
| No.26 | Subspace KNN ensemble | Subspace ensemble method, decision tree learner, number of learners = 30, learning rate = 0.1 | An ensemble learning technique that combines multiple K-Nearest Neighbors models operating in different subspaces to improve classification accuracy. |
| No.27 | RUSBoosted Trees | RUSBoost ensemble method, decision tree learner, number of learners = 30, learning rate = 0.1 | A variant of the AdaBoost algorithm that incorporates random under-sampling to address class imbalance, particularly in binary classification problems. |
| No.28 | Neural Network | 1 layer - 10 neurons, 1k iterations | A network of interconnected nodes inspired by the structure of the human brain, capable of learning complex patterns and relationships in data. |
| No.29 | Neural Network | 1 layer - 25 neurons, 1k iterations | |
| No.30 | Neural Network | 1 layer - 100 neurons, 1k iterations | |
| No.31 | Neural Network | 2 layers - 10 neurons, 1k iterations | |
| No.32 | Neural Network | 3 layers - 10 neurons, 1k iterations | |
| No.33 | SVM Kernel | SVM learner, lambda regularization = 0.01, one-vs-one coding, iteration limit = 1000 | A variant of the SVM algorithm that uses kernel methods to handle non-linear data by transforming it into a higher-dimensional space. |
| No.34 | Logistic regression kernel | Logistic regression learner, lambda regularization = 0.01, one-vs-one coding | A variant of logistic regression that uses kernel methods to handle non-linear data. |
| No.35 | XGBoost | learning rate = 0.3, L2 regularization alpha = 0.001, sampling method = uniform | An optimized gradient boosting library designed for speed and performance, effective for classification and regression. |
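
To make the KNN rows of the table concrete, the following is a minimal sketch of majority-vote nearest-neighbor classification with Euclidean distance, where the number of neighbors `k` plays the role of the Fine (1), Medium (10), and Coarse (100) settings. It is an illustration only, not the toolbox implementation used in the study; the function name, the two-feature toy data, and the class labels are all hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k):
    """Classify `query` by majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training points")
    # Euclidean distance from the query to every training point, nearest first.
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical two-feature toy data, not values from the study.
    X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (3.0, 3.2), (3.1, 2.9), (2.0, 2.0)]
    y = ["non-injured", "non-injured", "non-injured", "injured", "injured", "injured"]
    query = (1.9, 1.9)
    print(knn_predict(X, y, query, k=1))  # → injured
    print(knn_predict(X, y, query, k=3))  # → non-injured
```

The toy query shows why the table lists several neighborhood sizes: a single nearest neighbor follows local detail, while a larger `k` smooths the decision toward the surrounding majority.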

| Feature | Number of repetitions |
|---|---|
| Maximum Force Hamstring Left | 28 |
| Stiffness Biceps Femoris Right | 28 |
| Stiffness Semitendinosus Right | 24 |
| Maximum Force Right Quadriceps | 21 |
| Eccentric Force of Hamstrings | 17 |
| Age | 16 |
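
A tally like the one in this table can be produced by counting how often each feature appears among the selected features of each model. The sketch below assumes that "Number of repetitions" means the number of models (or runs) that ranked a feature as important; the source does not define the count, so this interpretation, the function name, and the example lists are hypothetical.

```python
from collections import Counter


def count_feature_repetitions(top_features_per_model):
    """Count in how many models each feature appears, most frequent first."""
    counts = Counter()
    for features in top_features_per_model:
        # Count each feature at most once per model, preserving order.
        for name in dict.fromkeys(features):
            counts[name] += 1
    return counts.most_common()


if __name__ == "__main__":
    # Hypothetical selections from three models, not results from the study.
    runs = [
        ["Age", "Stiffness Biceps Femoris Right"],
        ["Maximum Force Hamstring Left", "Age"],
        ["Maximum Force Hamstring Left", "Age", "Age"],
    ]
    for feature, repetitions in count_feature_repetitions(runs):
        print(feature, repetitions)
```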

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).