2.2. Detailed phases

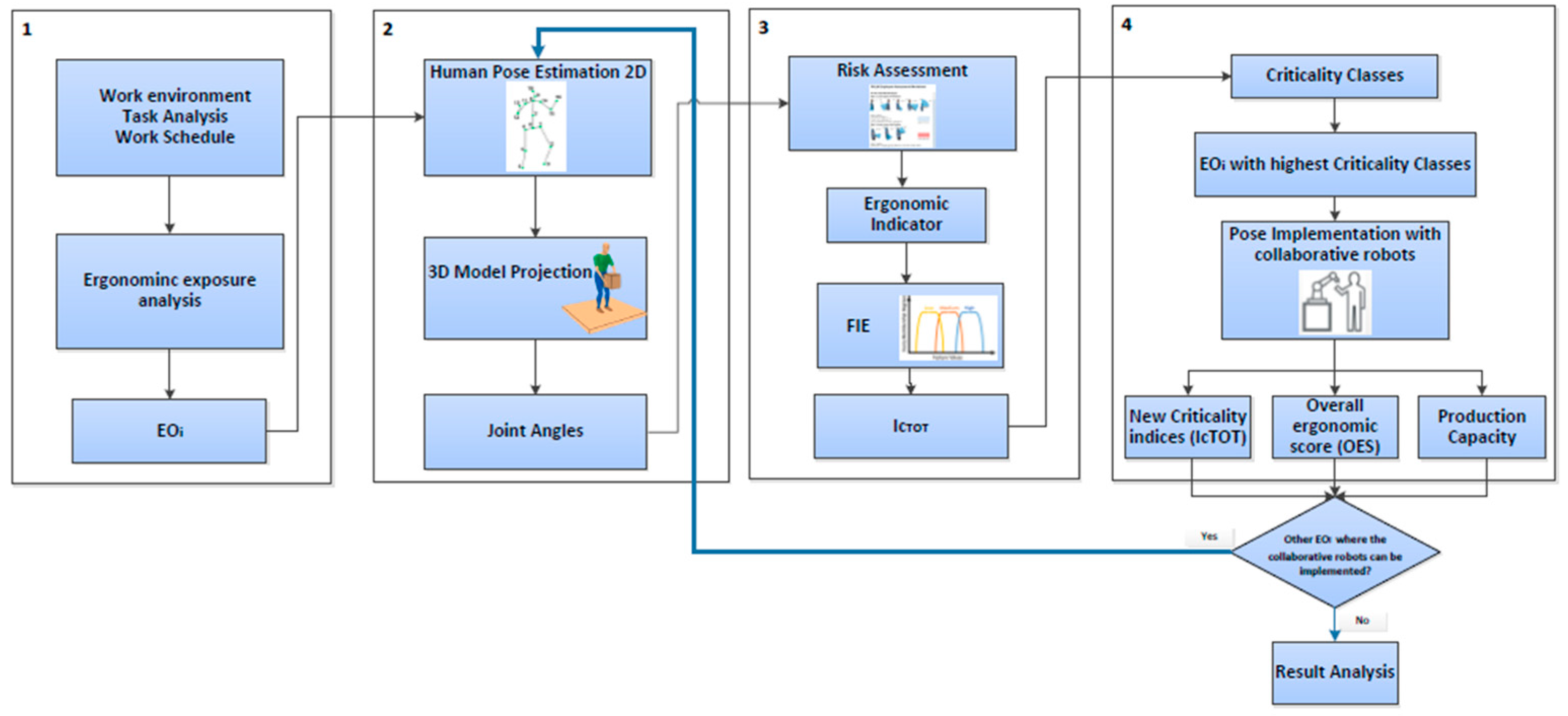

The main steps are the following:

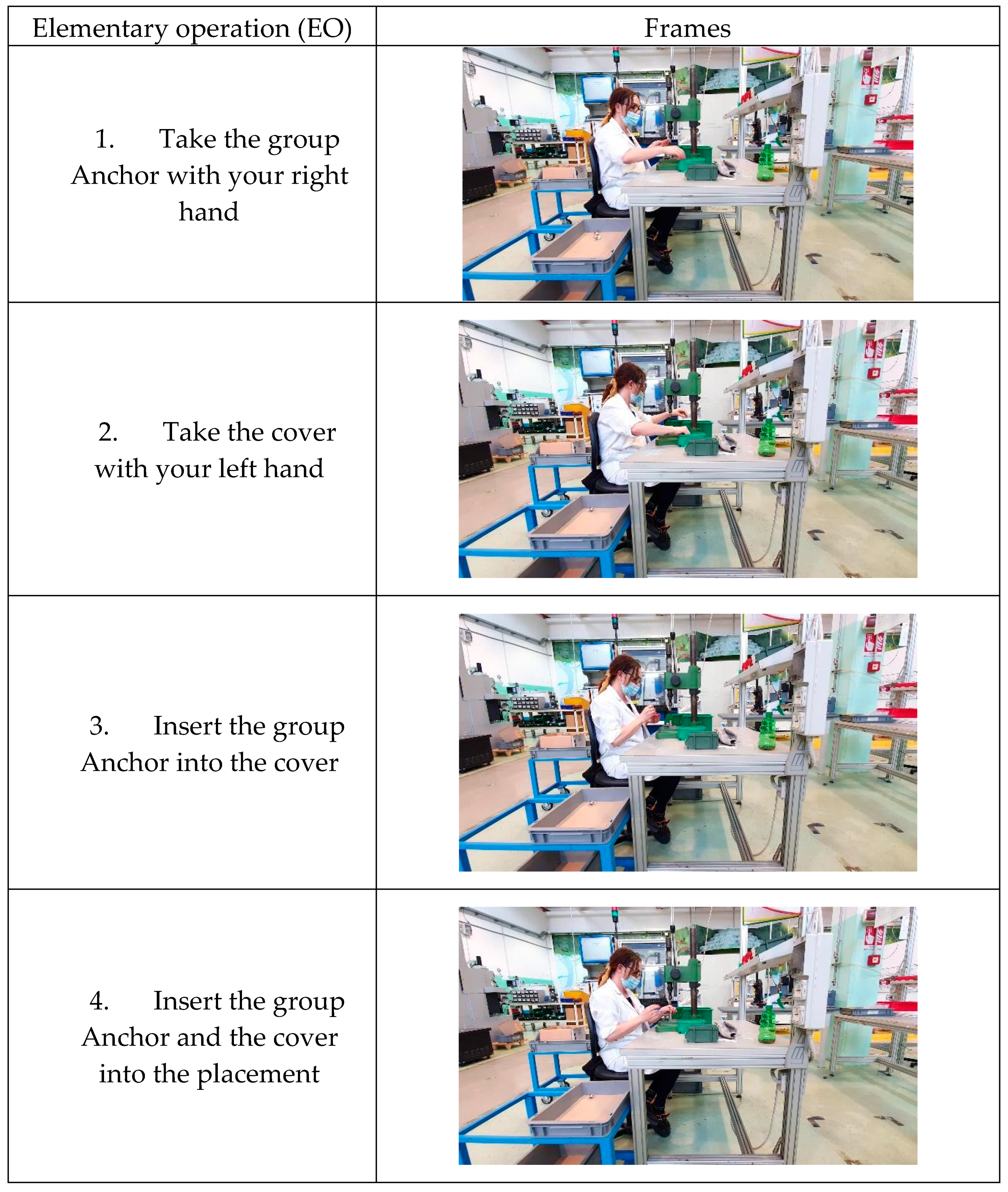

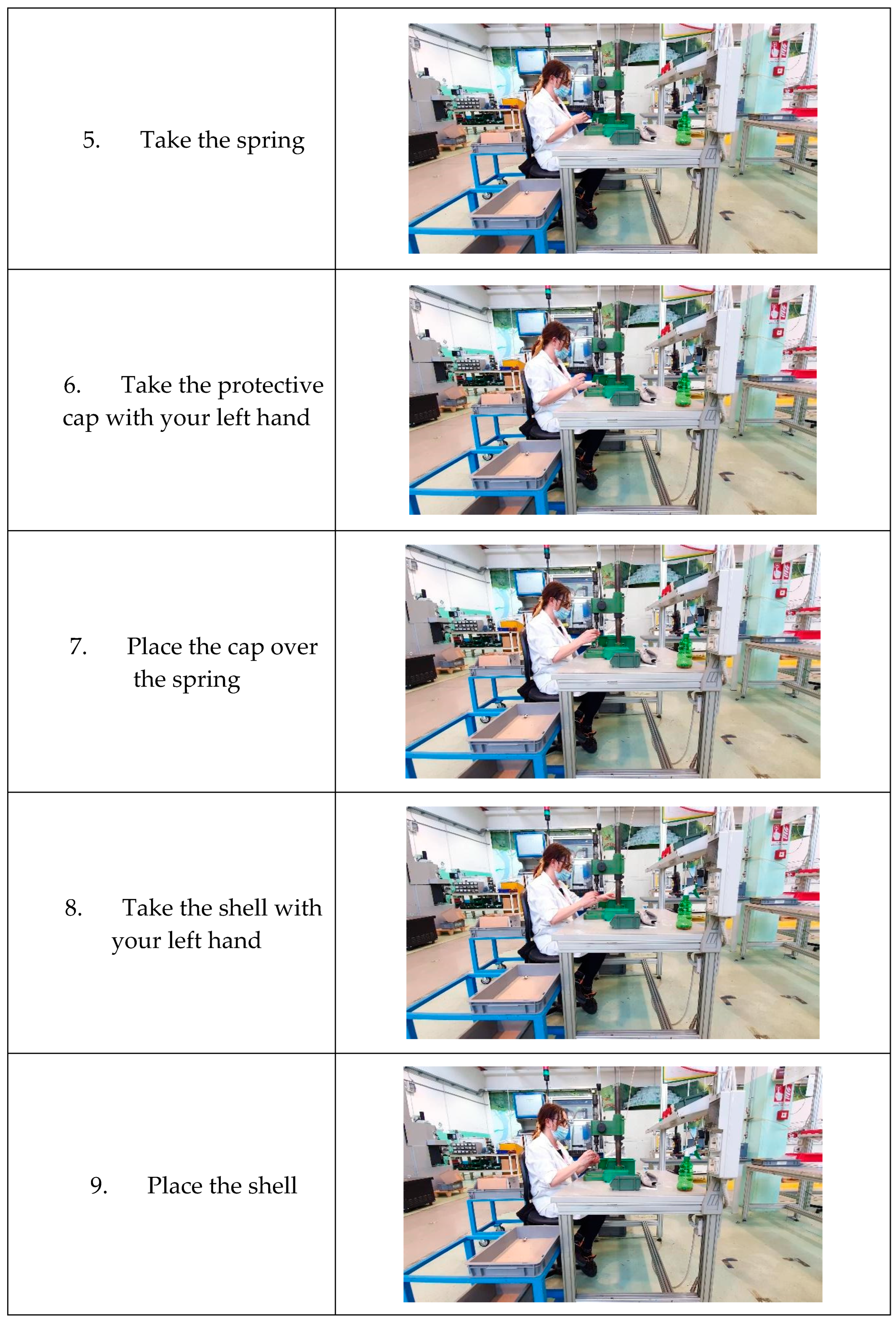

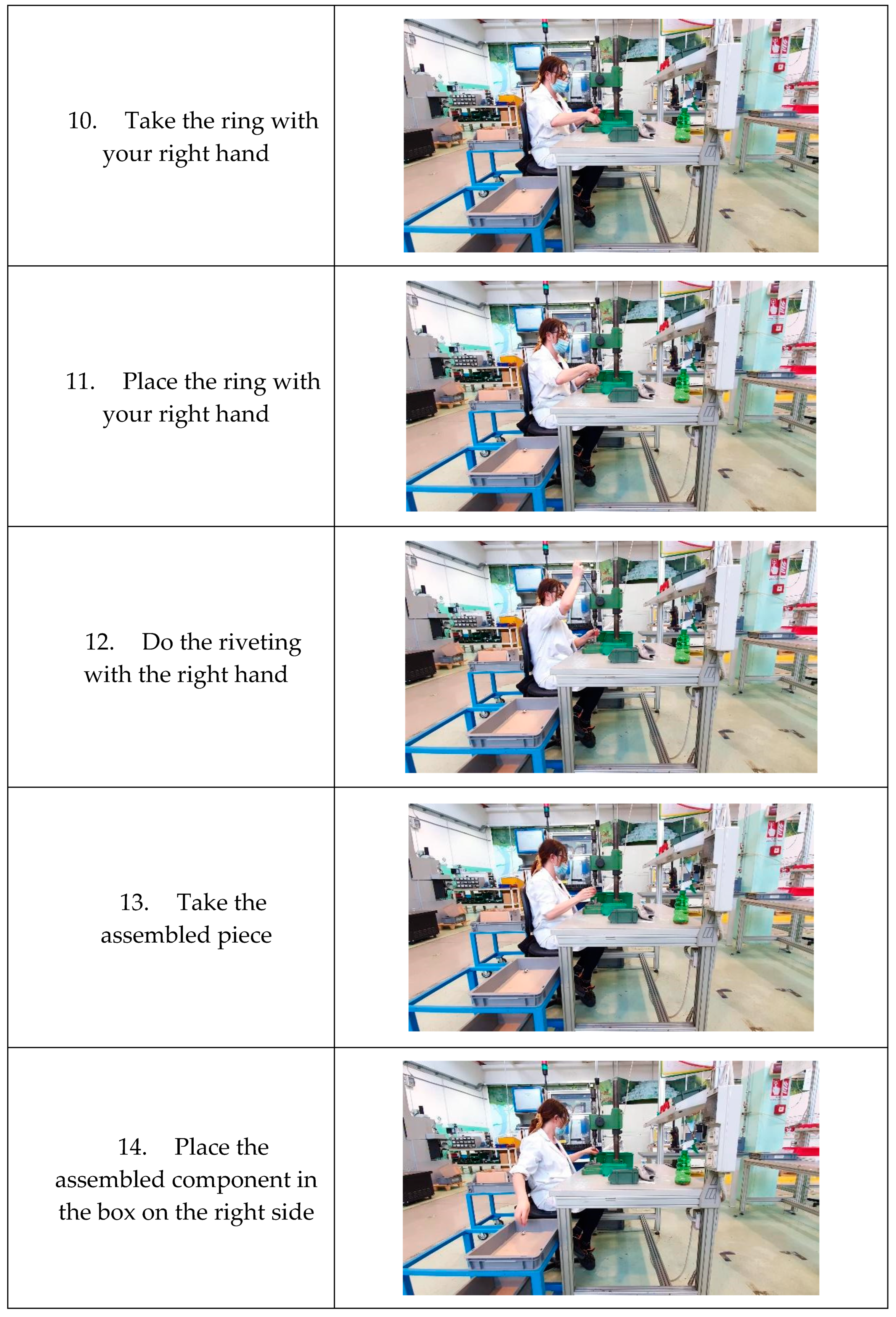

Worker activities are divided into elementary operations (EOi) [35]. These EOi define the input of the ergonomic analysis.

A computer vision-based system for 3D human pose estimation is introduced. It overcomes the partial findings of [1, 36] and the related limitations in computing the joint angles, since 2D pose estimation models yield less accurate angle computations.

Total criticality indices for the EOi are balanced through the Fuzzy interface. These indices summarize the worker's ergonomic stress during manufacturing operations.

Cobot implementation for elementary operations with higher criticality class.

Step 1. EOi Identification

In this phase, elementary operations [37] are identified through a video recording of production activities during a work shift [35].

The main task is to analyze the EOi to appraise the joint angles, considering both the cyclic operations conducted within the cycle time and the non-cyclic operations. Repetitive operations are also considered in this phase, as they can cause ergonomic problems [38].

Step 2. Human Pose Assessment

The 3D CV is explored to assess the ergonomic risk assessment for each EO

i. Through the application of the approach presented in Video Pose3D [

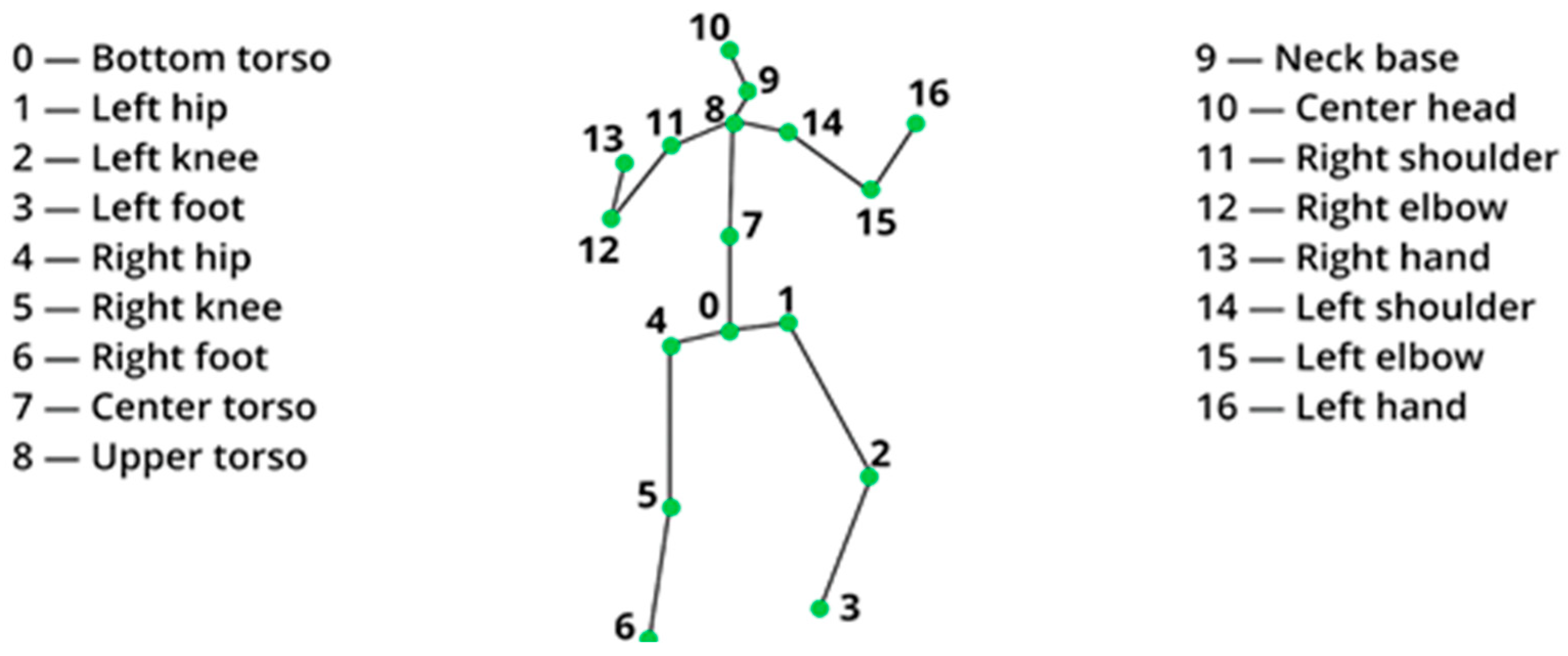

32] the keypoints of human body are detected from digital images or videos. VideoPose3D uses a system based on dilated temporal convolution on 2D keypoints trajectories to estimate 3D keypoints coordinates. Given an input image or a video, the network provides a list of detected body keypoints, referenced as in

Table 1 and

Figure 2.

The model used in this study is a 17-joint skeleton, whose keypoints are shown in Figure 2. For each joint, VideoPose3D provides i) a vector with its relative position in the image and ii) the confidence of the estimation, ranging from 0 (null) to 1 (complete). From this information, we calculate the overall confidence of the skeletal detection as the average of the joint confidences, which is used to filter out noisy or spurious detections.
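The confidence-based filtering described above can be sketched as follows. This is a minimal illustration, not the study's implementation: the function names and the cut-off `min_confidence=0.5` are hypothetical, since the text does not report the threshold actually used.

```python
from statistics import mean


def skeleton_confidence(joint_confidences):
    """Average the per-joint confidences (each in [0, 1]) into one score."""
    return mean(joint_confidences)


def filter_detections(detections, min_confidence=0.5):
    """Keep only skeletons whose average confidence reaches the cut-off.

    `min_confidence` is a hypothetical value chosen for illustration.
    """
    return [d for d in detections if skeleton_confidence(d) >= min_confidence]


# Two made-up 17-joint detections: one clean, one noisy.
frames = [
    [0.9] * 17,
    [0.2] * 10 + [0.6] * 7,
]
kept = filter_detections(frames)
```

With these made-up values only the first skeleton survives the filter, because the second one averages well below the assumed cut-off.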

An example of this step is given in Table 1. The left elbow angle (EL), for instance, is calculated from the positions of the left shoulder, elbow, and wrist, which correspond to skeleton joints #11, #12, and #13.
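One common way to obtain such an angle from three 3D keypoints is to take the angle between the two limb vectors that meet at the middle joint. The sketch below assumes this convention (the included angle at the elbow); the text does not state which angle convention the study uses, and the coordinates are invented for illustration.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.sqrt(sum(x * x for x in v1)) * math.sqrt(sum(x * x for x in v2))
    if norm == 0:
        raise ValueError("degenerate joint: coincident keypoints")
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


# Hypothetical 3D positions of the left shoulder, elbow, and wrist.
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, -0.3, 0.0)
wrist = (0.25, -0.3, 0.0)
left_elbow_angle = joint_angle(shoulder, elbow, wrist)
```

Here the forearm is perpendicular to the upper arm, so the included angle is a right angle; a fully extended arm would give 180°.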

To compute the ergonomic risk value, the threshold values of each joint angle of the skeleton must be defined. The RULA method gives explicit thresholds for some joint angles (e.g. elbows and neck), but not for others. Thus, the approach of [14, 38] has been used to define these threshold values. The results are shown in Table 2.

Step 3. The total criticality indices

This portion of the research explores the use of a fuzzy inference engine (FIE) to calculate the total criticality indices from a set of ergonomic indicators.

According to the literature, fuzzy logic makes it possible to simulate complicated processes and to tackle problems with qualitative, vague, or uncertain information [40]. Recently, this methodology has seen several applications in the field of safety and risk analysis, such as system reliability and risk assessment [41, 42, 43] and the analysis of human reliability [44, 45, 46, 47]. In [48] the methodology is used to assess the risk of human error, while [49] proposes a framework based on fuzzy logic to address the inaccuracy of ergonomic evaluation input data caused by human subjectivity in field observation. A further ergonomic assessment based on fuzzy logic is proposed by [50], with the aim of assessing and defining the level of risk for the manual handling of loads and the severity of the impact on workers' health. Therefore, in this study we use this methodology to determine the total criticality index.

A fuzzy system consists of four basic units: a knowledge base and three computational units (fuzzification, inference, and defuzzification).

The knowledge base contains all the information about the system and allows the other units to process the input data and obtain the output. This information can be divided into i) the database and ii) the rule base. The first contains the descriptions of all variables, including their membership functions, while the second contains the inference rules.

Since the input data is almost always crisp and the fuzzy system works on fuzzy sets, a conversion is required to translate a standard numeric value into a fuzzy one. The operation that implements this transformation is called fuzzification. It is conducted using the membership functions of the variables being processed. To fuzzify an input value, its membership degree is determined for each linguistic term of the variable.

The phase in which the returned fuzzy values are converted into usable numbers is called defuzzification. This phase starts from the fuzzy set obtained by inference, which is often irregularly shaped because it is the union of the results of various rules [51], and finds the single crisp value that best represents this set. The resulting values represent the final output of the system and are used as control actions or decision parameters.

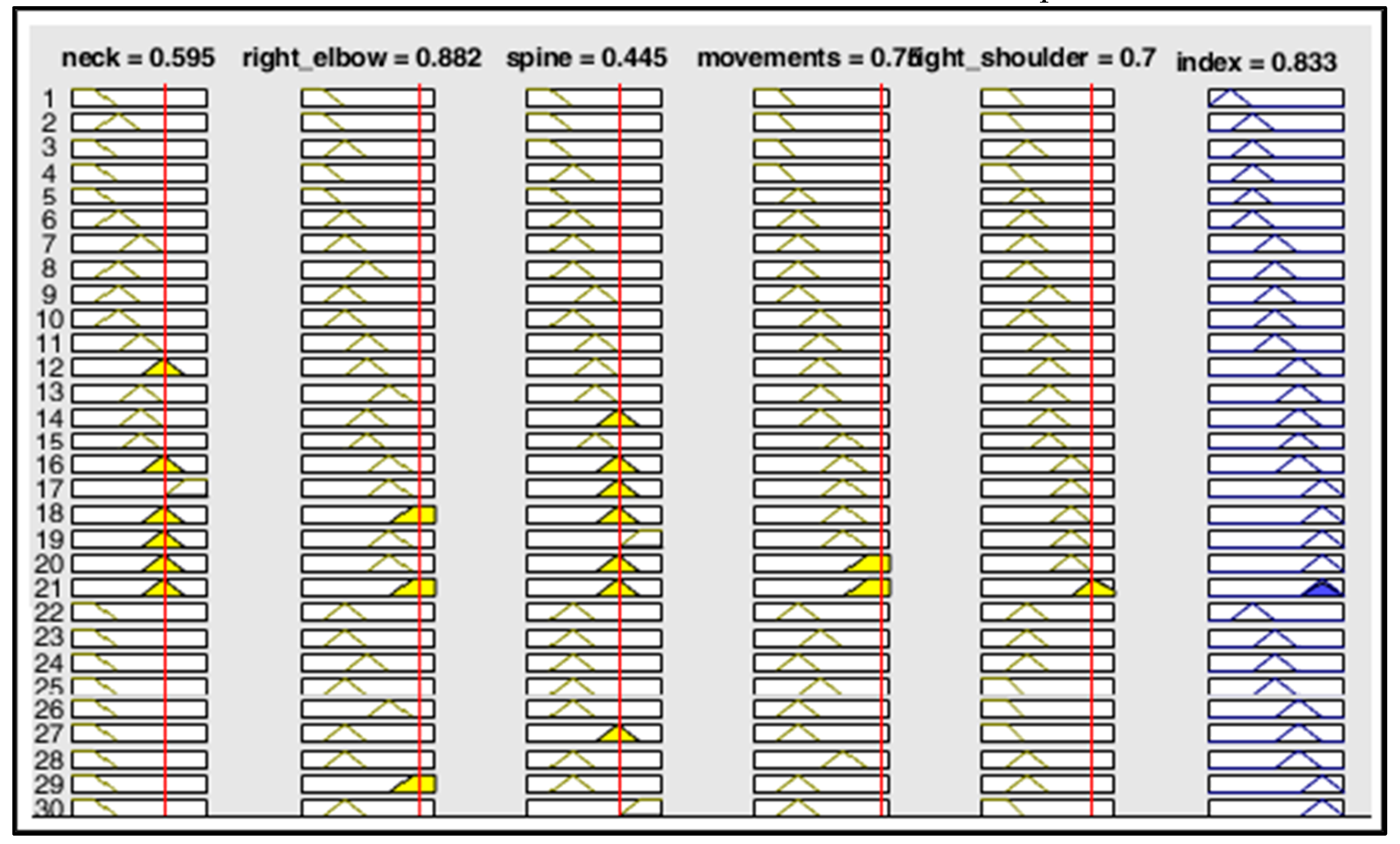

The fuzzy engine (FE) has been implemented with the Fuzzy Logic Toolbox of Matlab. The FE processes five variables, according to [39] and Table 2. Four of these variables measure the postural stress on the upper body, in particular on the elbow, shoulder, neck and trunk, while the fifth refers to the high repetition of the same movements.

After the identification of the EOi, the human pose for each EOi is analysed and the joint angle is automatically evaluated considering the RULA method and the data of Table 2. This joint angle is the input value for the criticality index calculation. The ergonomic indicators in this study have been evaluated through VideoPose3D, which uses a system based on temporal convolution over the trajectories of 2D keypoints to estimate the coordinates of 3D keypoints. The main advantage of this phase is that, unlike the RULA or REBA methods, no training is required to obtain the final result, because all calculation steps are performed by the FIE.

The fuzzy interface translates numerical values into linguistic values that are associated with fuzzy sets.

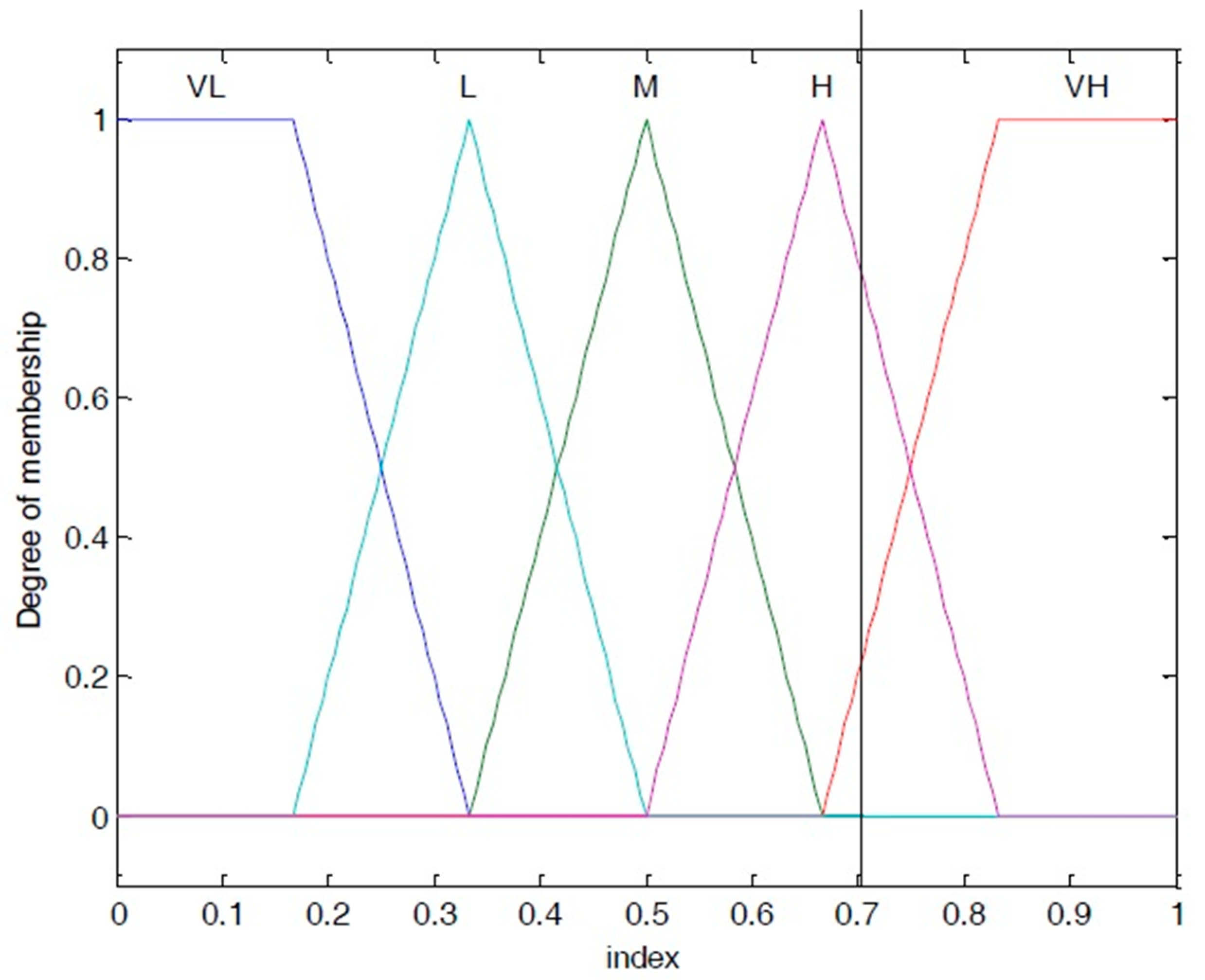

For each ergonomic input parameter, a membership function with five labels is defined [39]. Thus, the input domain is divided into regions where crisp values can be associated with a fuzzy number using the membership function.

This fuzzy number is expressed using three triangular membership functions in the centre of the domain and two trapezoidal functions at its boundaries. The membership functions are symmetrical with respect to the value 0.5 [52]. The membership degree indicates to what extent each variable belongs to the various fuzzy sets.
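As an illustration of this layout, the sketch below builds five labels (VL, L, M, H, VH) on the normalized [0, 1] domain, with trapezoids at the boundaries and triangles in the centre, mirrored around 0.5. The breakpoints are assumptions chosen for readability; they are not the ones plotted in Figure 3, so the resulting degrees will not match the figures quoted in the text.

```python
def trapezoidal(x, a, b, c, d):
    """Trapezoid rising on [a, b], flat on [b, c], falling on [c, d]."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def triangular(x, a, b, c):
    """Triangle with feet at a and c and peak at b."""
    return trapezoidal(x, a, b, b, c)


# Hypothetical breakpoints, symmetric with respect to 0.5.
LABELS = {
    "VL": lambda x: trapezoidal(x, 0.0, 0.0, 0.1, 0.3),
    "L": lambda x: triangular(x, 0.1, 0.3, 0.5),
    "M": lambda x: triangular(x, 0.3, 0.5, 0.7),
    "H": lambda x: triangular(x, 0.5, 0.7, 0.9),
    "VH": lambda x: trapezoidal(x, 0.7, 0.9, 1.0, 1.0),
}


def fuzzify(x):
    """Return the membership degree of a normalized value for every label."""
    return {label: mf(x) for label, mf in LABELS.items()}


degrees = fuzzify(0.6)
```

With these assumed breakpoints, a normalized value of 0.6 belongs partly to M and partly to H and to no other label, which is the kind of overlap the inference rules then act on.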

Since the input values have different ranges, they are normalized by their maximum value to the [0,1] range before the fuzzy conversion. The value of the ergonomic index is classified as low, medium, or high. Hence, if the value of the ergonomic index is in the high range, the normalization routine always returns the value 1. The fuzzy interface uses the knowledge base to process the input variables.

The input values are interpreted in terms of fuzzy sets (Figure 3).

For example, consider the ergonomic indicator for the right shoulder variable. Since the measured angle is 63.151°, the normalized value is 0.7. Through the membership function in Figure 3, the fuzzy interface associates the ergonomic indicator to the VH set with degree 0.23 and to the H set with degree 0.8.
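The normalization step in this example can be sketched as a division by the maximum value, saturated at 1. The 90° upper bound for the shoulder is an assumption: it is not stated in the text, but it is consistent with a measured angle of about 63.15° mapping to a normalized value of about 0.70.

```python
def normalize(value, max_value):
    """Scale a measurement to [0, 1], saturating at 1 above the maximum."""
    if max_value <= 0:
        raise ValueError("max_value must be positive")
    return min(max(value / max_value, 0.0), 1.0)


# Assumed upper bound of the shoulder range (hypothetical, see lead-in).
SHOULDER_MAX_DEG = 90.0
shoulder_norm = normalize(63.151, SHOULDER_MAX_DEG)
```

Any angle at or beyond the assumed maximum returns exactly 1, mirroring the behaviour described for indices in the high range.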

In the second step all the rules are considered in which our variable is associated with VH or H as shown in the following example:

Rule #1 if (neck is VL) and (right_elbow is VL) and (right_Shoulder is VH) and (Spine is VL) and (Repetition of the same movements is VL) then (IcTOT is L)

Rule #2 if (neck is VH) and (right_elbow is VH) and (right_Shoulder is H) and (Spine is VH) and (Repetition of the same movements is VH) then (IcTOT is VH)

Consider Rule #2 with the following input parameters:

Ic neck = 1

Ic right_elbow = 1

Ic spine = 1

Ic Repetition of the same movements = 1

Ic right_Shoulder = 0.702
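To see how a rule such as Rule #2 is weighted, one common choice is to combine the antecedents joined by "and" with the minimum operator. The text does not state which operator the study uses, so the minimum is an assumption here. The degree 0.8 for the shoulder belonging to H comes from the example in the text; the degree 1.0 for the other four inputs belonging to VH is assumed, on the grounds that a normalized value of 1 sits at the upper boundary of the domain.

```python
def firing_strength(antecedent_degrees):
    """Combine 'and'-joined antecedents with the minimum operator."""
    return min(antecedent_degrees.values())


# Membership degrees of each input in the label required by Rule #2.
rule2_antecedents = {
    "neck is VH": 1.0,                # assumed for a normalized input of 1
    "right_elbow is VH": 1.0,         # assumed
    "right_shoulder is H": 0.8,       # degree quoted in the text
    "spine is VH": 1.0,               # assumed
    "repetition is VH": 1.0,          # assumed
}
rule2_strength = firing_strength(rule2_antecedents)
```

Under these assumptions the rule fires with the strength of its weakest antecedent, the right shoulder.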

Based on the membership function output, a numerical value is obtained for each index by reading the value on the x-axis corresponding to the assigned membership degree and assigned label.

To get a single IcTOT output value, a weighted average is performed with respect to the membership grades:
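A weighted average of this kind is commonly written as IcTOT = Σ(μk · xk) / Σ(μk), where xk is the value read on the x-axis for label k and μk is its membership degree. The sketch below assumes this form, which the text does not spell out, and uses invented x-axis values for illustration.

```python
def weighted_average(values, degrees):
    """Average the crisp values, weighting each by its membership degree."""
    total = sum(degrees)
    if total == 0:
        raise ValueError("at least one membership degree must be non-zero")
    return sum(v * d for v, d in zip(values, degrees)) / total


# Hypothetical x-axis readings for two active labels and their degrees.
label_values = [0.75, 1.0]
label_degrees = [0.8, 0.23]
ic_tot = weighted_average(label_values, label_degrees)
```

The result always lies between the smallest and largest label value, and moves towards the label with the higher membership degree.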

The rules are built to express, in linguistic terms, the requirement to strongly flag the case in which even a single domain is critical. The defuzzification interface translates the result of the inference process, expressed as a degree of membership of a fuzzy set, into numeric form.

The operative fuzzy implementation consists of the following steps:

i) loading the fuzzy inference file .fis (which contains all the system settings saved through the Fuzzy Logic Toolbox) into the Matlab workspace;

ii) reading and normalizing the input array from the workspace (collecting the ergonomic parameters measured for each elementary operation, e.g. neck bending angle and shoulder angle);

iii) computing IcTOT through the fuzzy inference engine for each variable analysed.

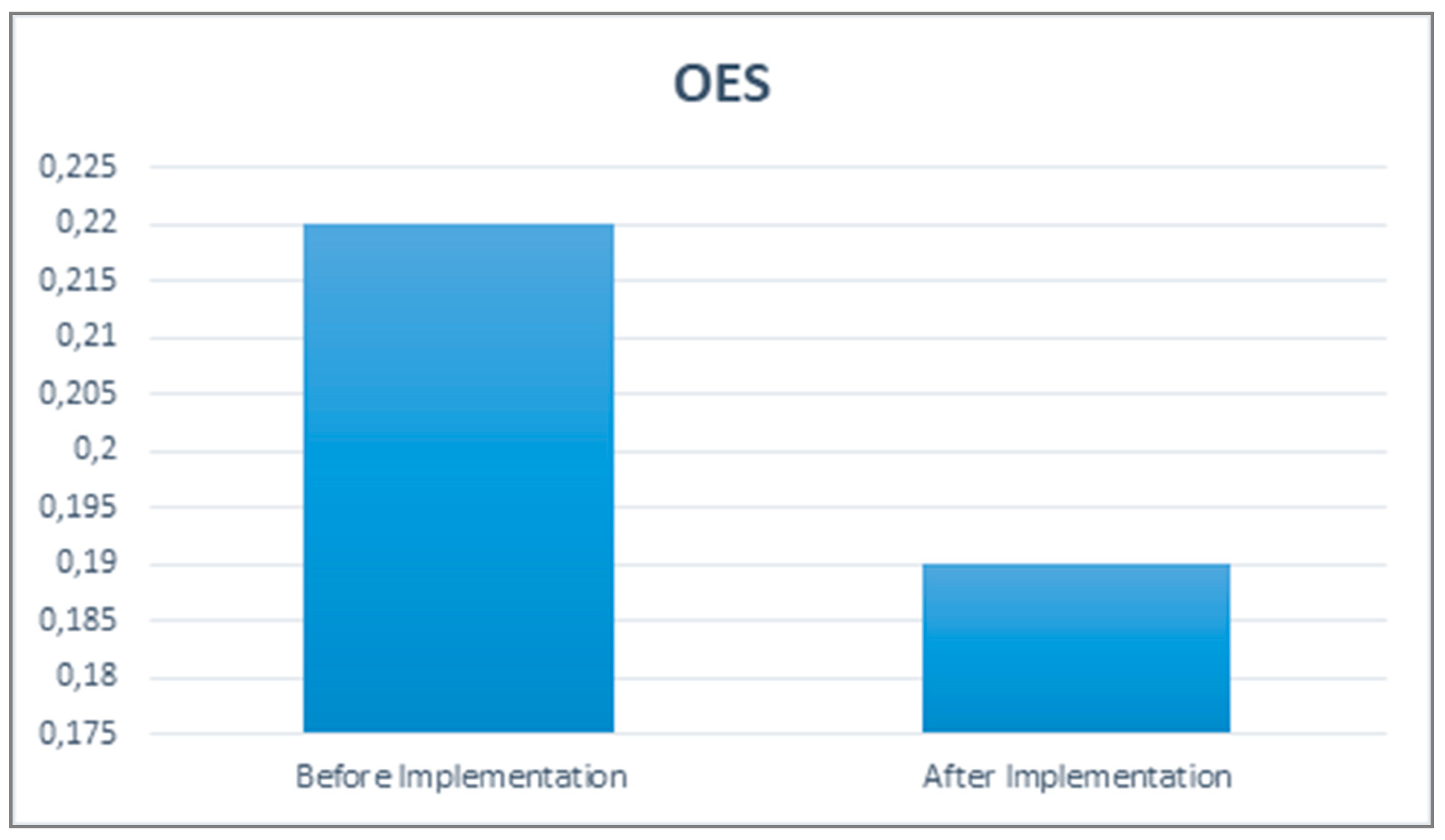

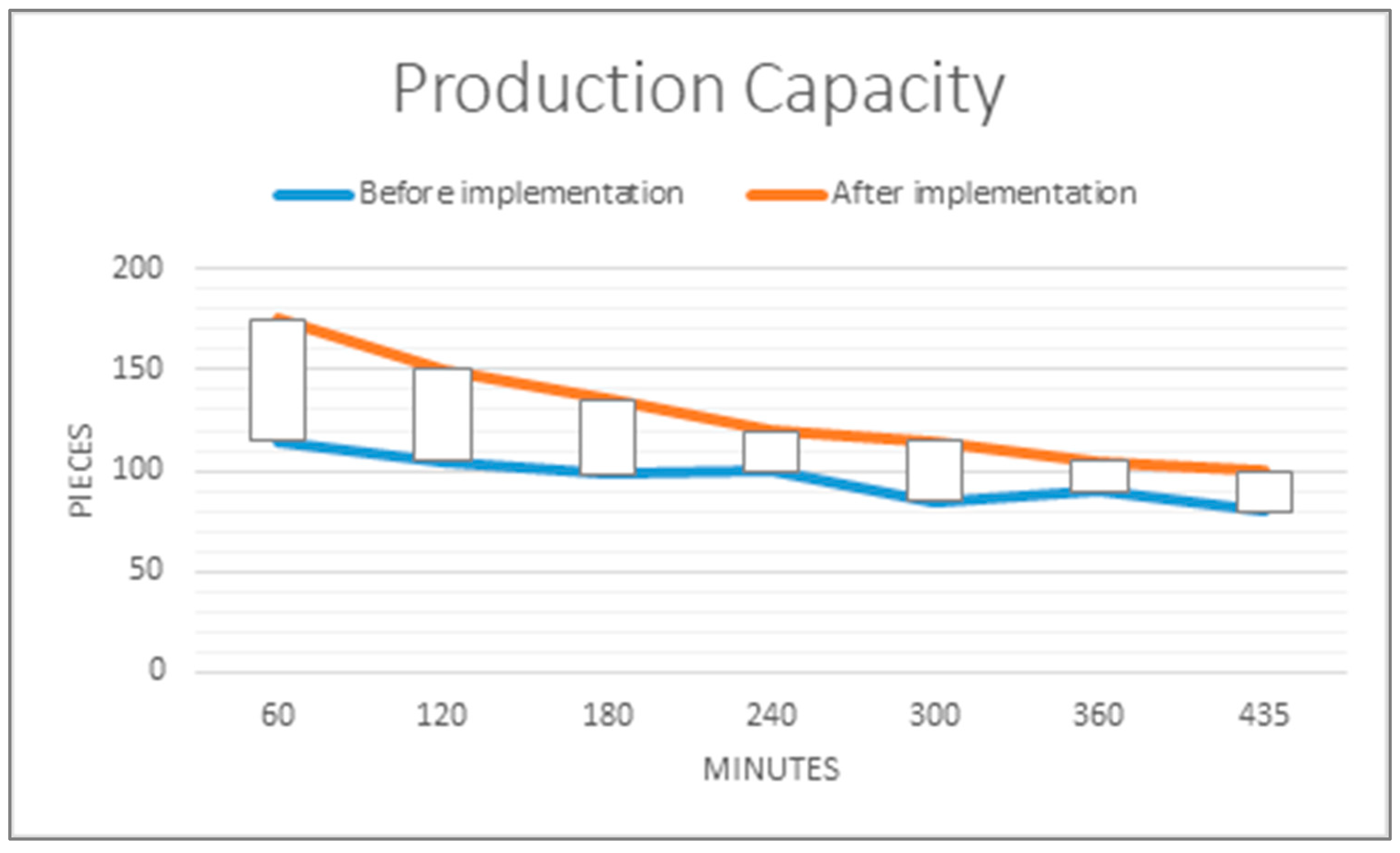

Step 4. Criticality classes and Cobot implementation

The outputs of step 4 are the EOi with the highest criticality classes.

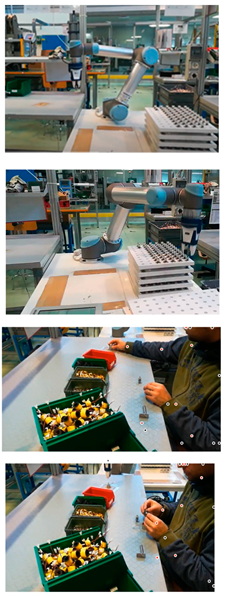

In this portion of the study, the cobot is implemented for the operations with the highest criticality classes.

The total criticality index is associated with a criticality class, and the EOi in which the workers assume a critical posture are identified. Three criticality classes are defined through triangular and/or trapezoidal membership functions with three labels, each associated with one color. Green corresponds to a low criticality index, yellow to a medium criticality index and red to a high criticality index.
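A minimal sketch of such a class assignment is given below. It assumes that IcTOT lies in [0, 1] and that the class with the highest membership degree is selected; both the breakpoints and the selection rule are hypothetical, since the text does not report them.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership; with b == c it degenerates to a triangle."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical breakpoints for the three criticality classes.
CLASSES = {
    "green": (0.0, 0.0, 0.25, 0.5),    # low criticality
    "yellow": (0.25, 0.5, 0.5, 0.75),  # medium criticality (triangle)
    "red": (0.5, 0.75, 1.0, 1.0),      # high criticality
}


def criticality_class(ic_tot):
    """Return the color whose membership degree is highest for ic_tot."""
    degrees = {color: trapezoid(ic_tot, *p) for color, p in CLASSES.items()}
    return max(degrees, key=degrees.get)


classes = [criticality_class(v) for v in (0.1, 0.5, 0.9)]
```

Elementary operations landing in the red class would then be the candidates for cobot support.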

For the improvement of working conditions, [53, 54, 55] proposed human-robot collaboration, with promising results in reducing the workload and the risks associated with WMSD. In particular, [54] highlights the importance of industrial collaborative robots, known as cobots, for reducing workplace ergonomic problems deriving from physical and cognitive stress and for improving safety, productivity and quality. Thanks to the close interaction between machine and operator, these tools provide high precision, speed and repeatability, which have a positive impact on productivity and flexibility.

Therefore, in this step, after the implementation of the cobot, the values of the criticality indices are calculated, along with the assessment of certain production parameters, including the impact on production capacity and ergonomic stress. The methodology is iterated if there are other EOi in which the cobot can be implemented. Automatic ergonomic assessments in various working environments will aid in the prevention of occupational injuries. Furthermore, rather than reviewing the entire operation period, the high-risk elementary operations can be identified immediately and passed to the inspector for further evaluation.