Submitted: 14 October 2023

Posted: 17 October 2023


Abstract

Keywords:

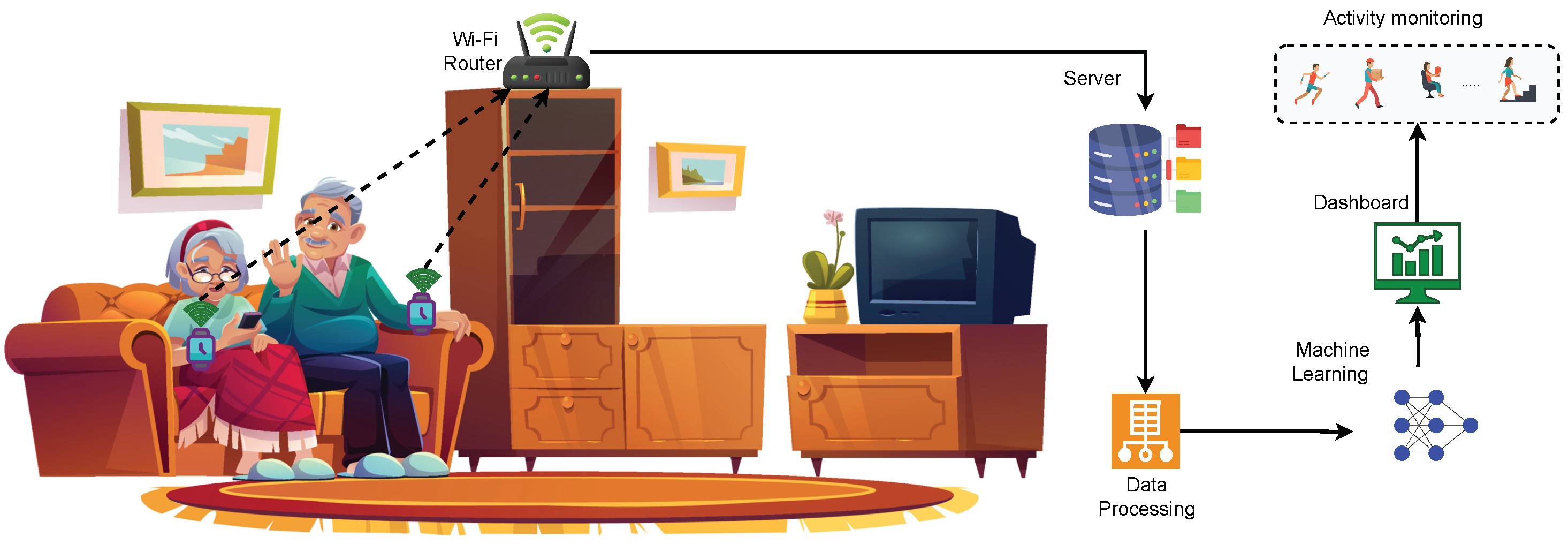

1. Introduction

- We introduce a novel HNFL framework tailored for HAR using wearable sensing technology. The hybrid design of S-LSTM seamlessly integrates the strengths of both LSTM and SNN in a federated setting, offering privacy preservation and computational efficiency.

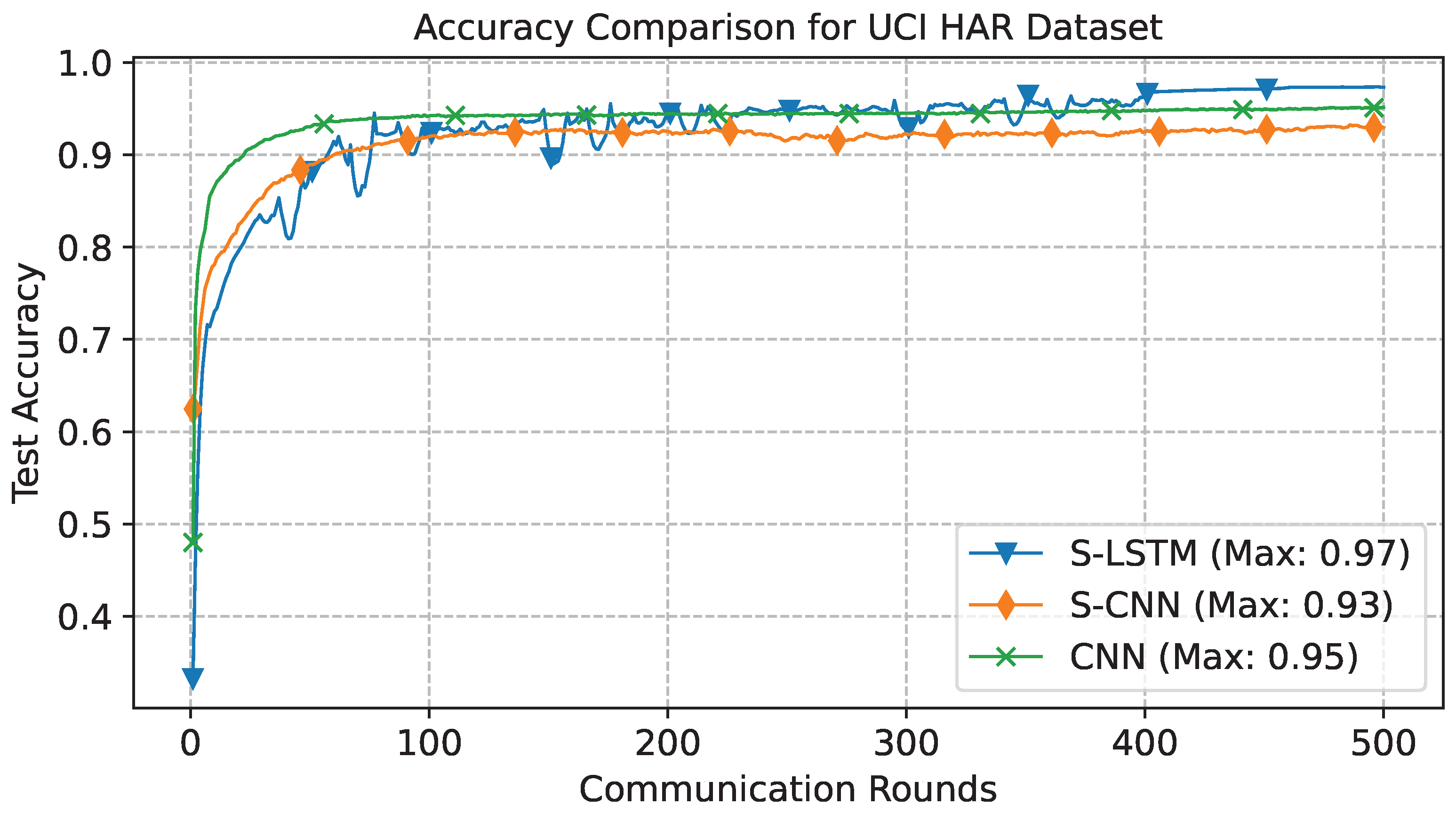

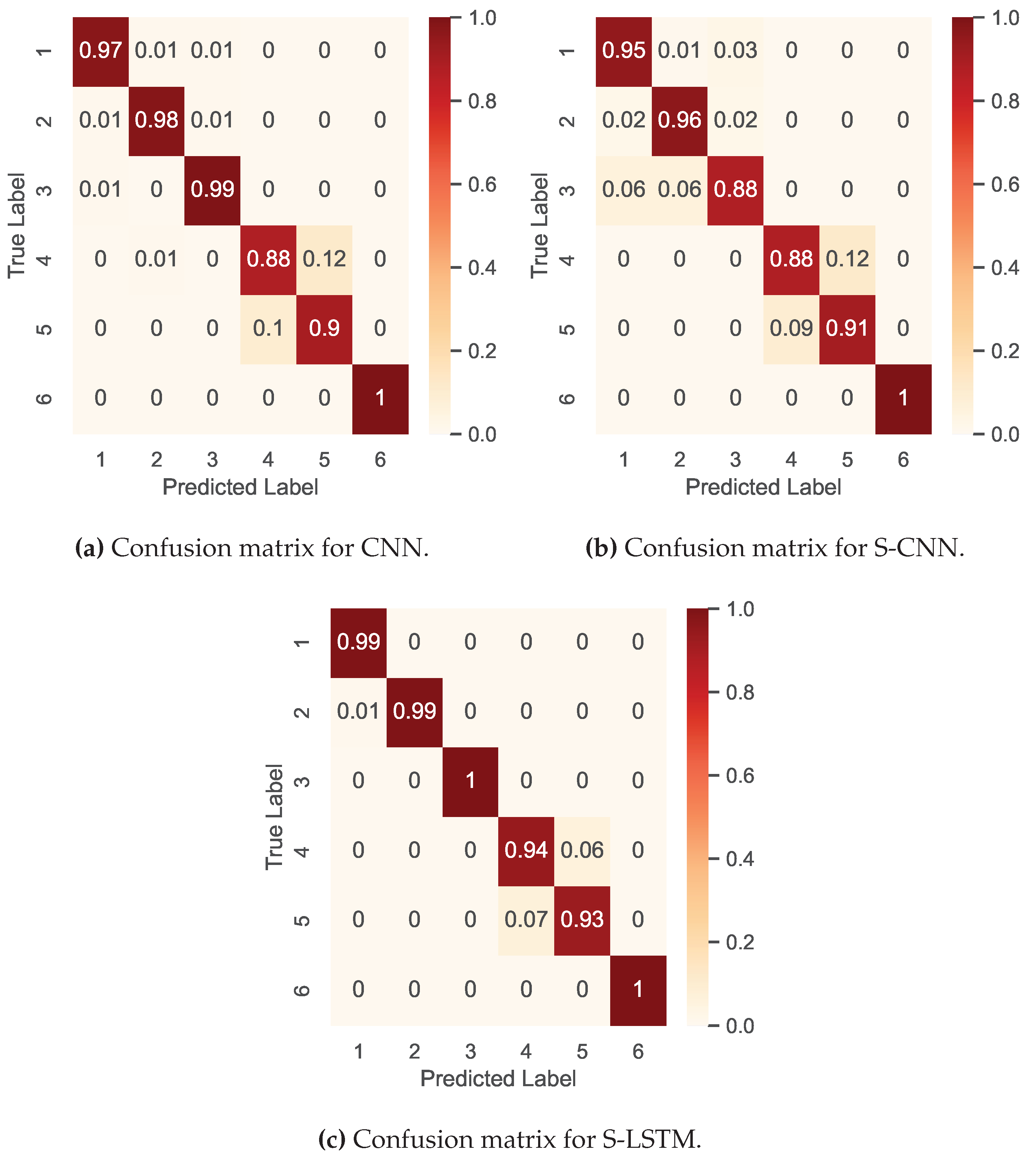

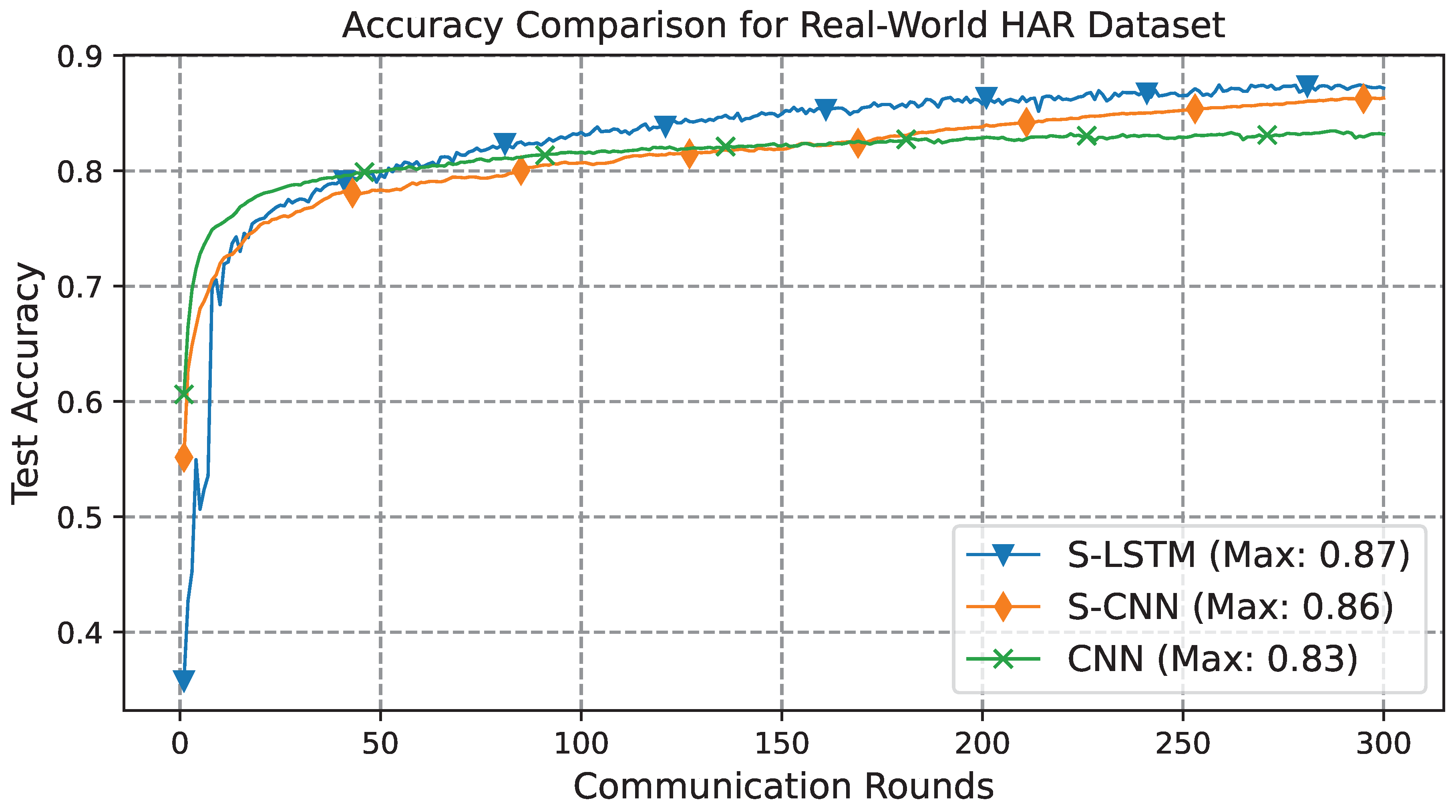

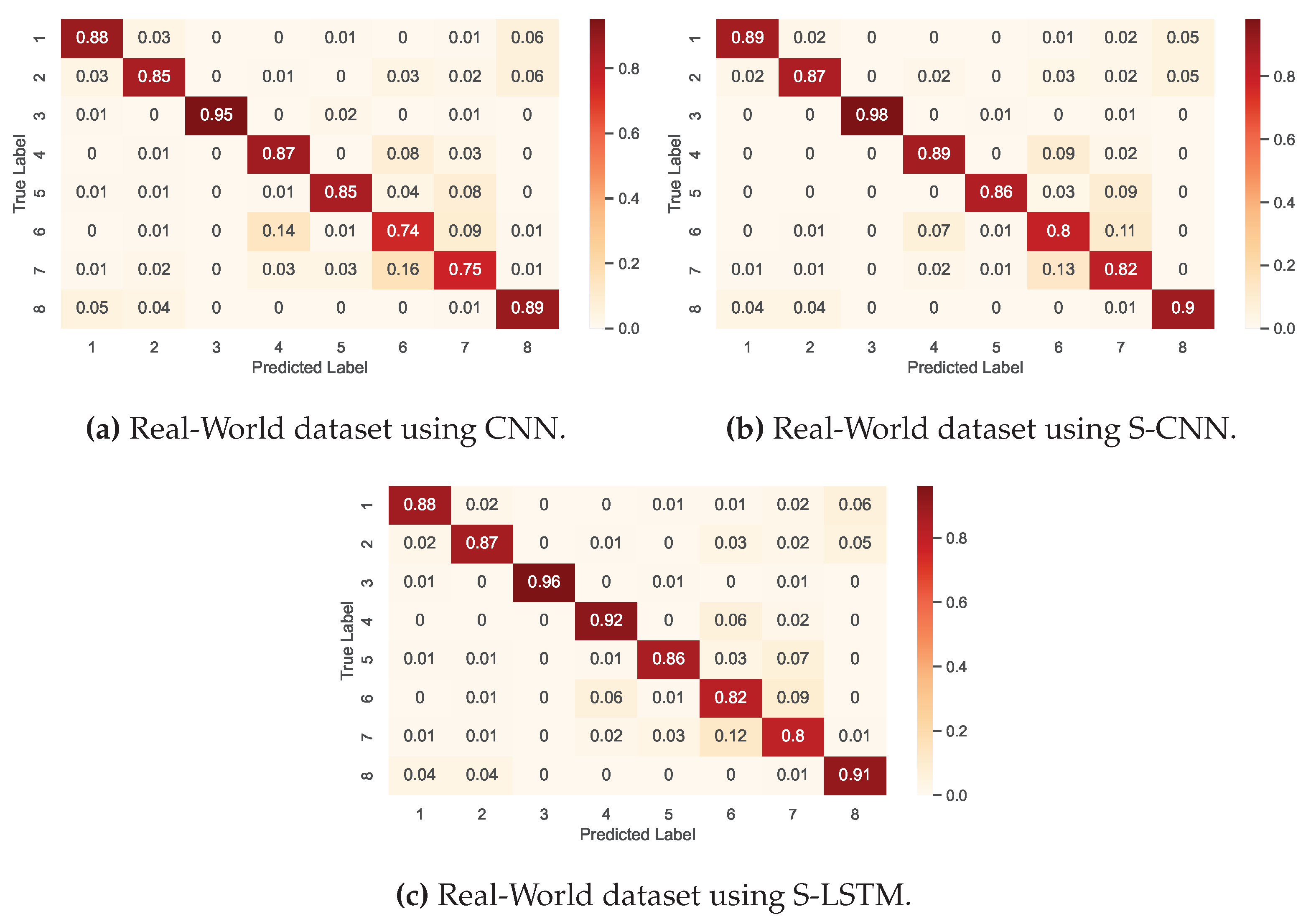

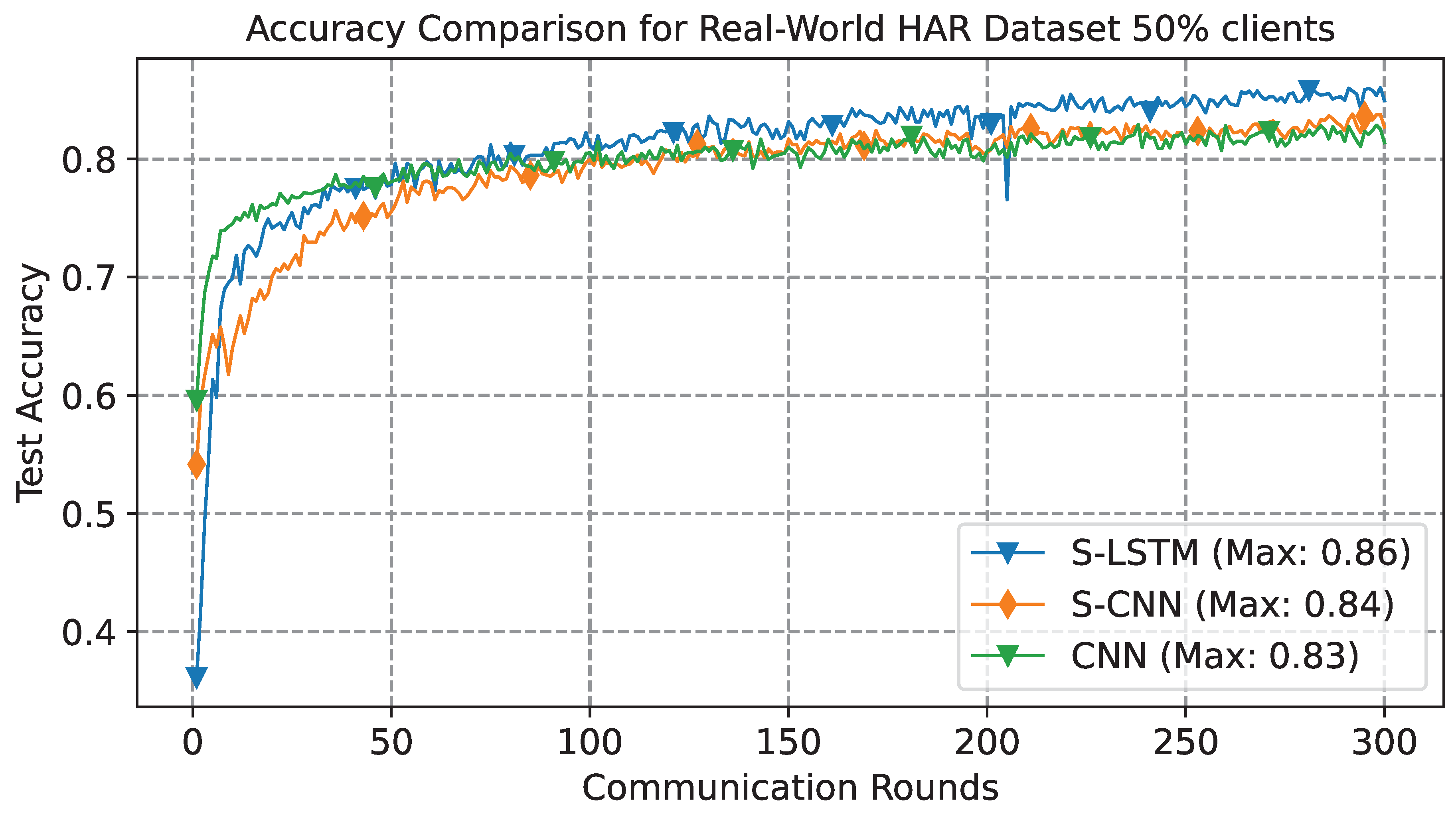

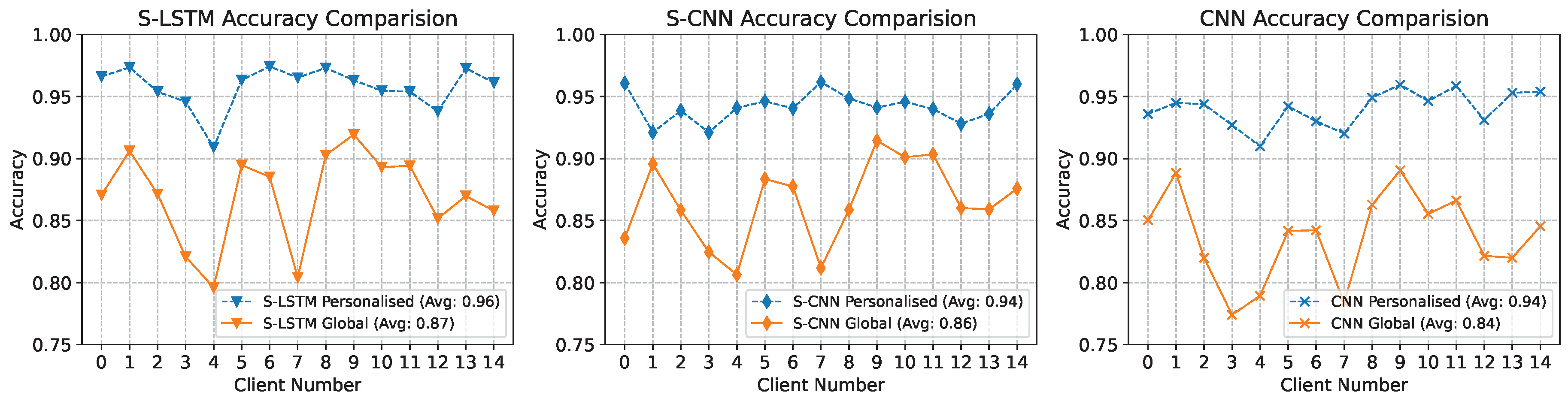

- A comprehensive analysis is conducted using two distinct publicly available datasets, and the results of the S-LSTM are compared with those of a spiking CNN (S-CNN) and a conventional CNN. This dual-dataset testing approach validates the robustness of the proposed framework and provides valuable insights into its performance in varied environments and scenarios.

- This study addresses the significant issue of client selection in federated HAR applications. We conduct a thorough investigation into random client selection and its impact on the overall performance of the HAR model. This analysis provides valuable insights into balancing computational efficiency, communication efficiency, and model precision, which guides the choice of client selection strategy in federated scenarios.
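As a rough illustration of the random client selection discussed in the last contribution, the sketch below samples a fraction of clients for one communication round and combines their updates with a sample-weighted average in the style of federated averaging. The function names, the 30-client pool, the selection fraction, and the toy weight vectors are all assumptions made for illustration; they are not taken from the paper's experimental setup.

```python
import random


def select_clients(client_ids, fraction, rng):
    """Pick a random subset of clients for one communication round."""
    k = max(1, round(fraction * len(client_ids)))
    return rng.sample(client_ids, k)


def fedavg(updates):
    """Sample-weighted average of client weight vectors.

    updates: list of (num_samples, weights) pairs, weights being a list of floats.
    """
    total = sum(n for n, _ in updates)
    dim = len(updates[0][1])
    return [sum(n * w[i] for n, w in updates) / total for i in range(dim)]


rng = random.Random(0)                 # fixed seed so the draw is repeatable
clients = list(range(30))              # hypothetical pool of 30 clients
chosen = select_clients(clients, fraction=0.5, rng=rng)

# Toy updates from two clients holding 100 and 300 samples respectively.
updates = [(100, [1.0, 2.0]), (300, [3.0, 4.0])]
global_weights = fedavg(updates)       # the larger client dominates the average
```

A smaller selection fraction reduces per-round communication and computation, at the possible cost of a noisier global update, which is the trade-off this contribution investigates.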

2. Related Work

2.1. Centralised Learning-Based HAR Systems

2.2. Federated Learning-Based HAR

3. Preliminaries and System Model

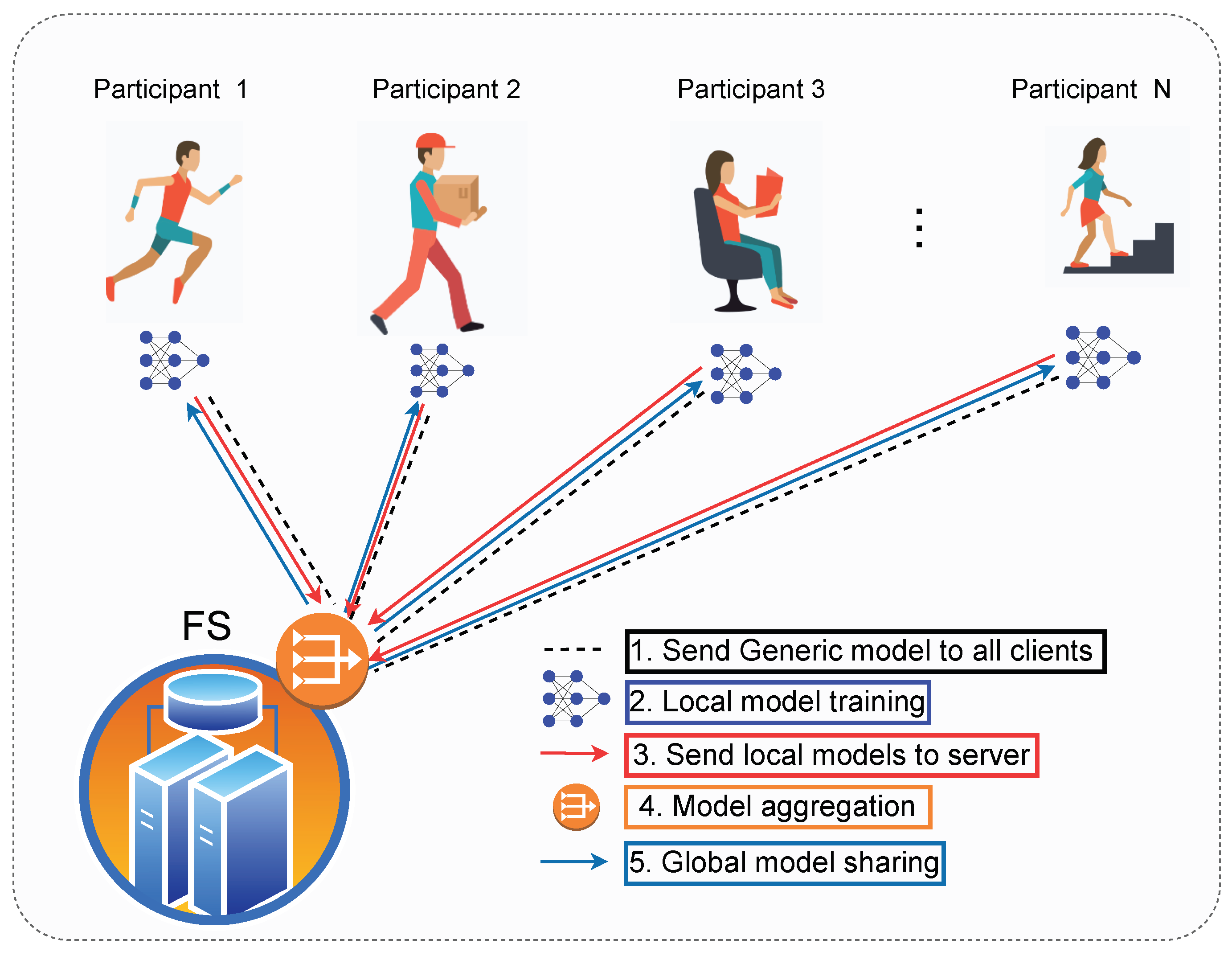

3.1. Federated Learning

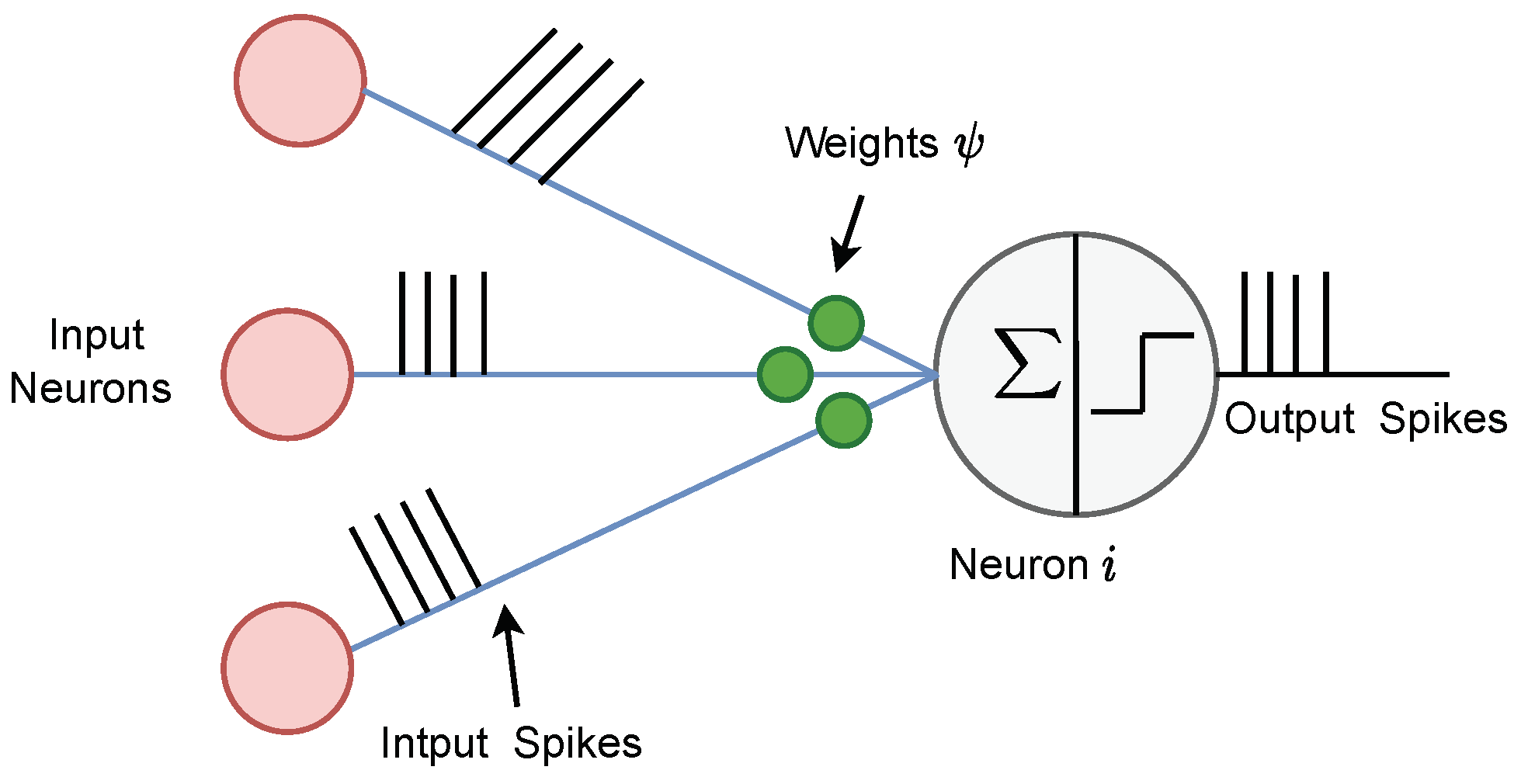

3.2. Spiking Neural Network

3.3. Training of SNN

Algorithm 1. Federated S-LSTM training with surrogate gradient and BPTT
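To give a feel for why a surrogate gradient is needed, the following sketch simulates a single spiking neuron over time. The spike itself is a hard threshold whose derivative is zero almost everywhere, so backpropagation through time replaces that derivative with a smooth stand-in. The leaky integrate-and-fire dynamics, the soft reset, the fast-sigmoid surrogate, and the values of `beta`, `threshold`, and `slope` are all assumptions chosen for illustration; this extract does not specify which neuron model or surrogate function the paper uses.

```python
def heaviside(x):
    """Hard threshold used in the forward pass: spike if x > 0."""
    return 1.0 if x > 0 else 0.0


def fast_sigmoid_grad(x, slope=25.0):
    """Smooth stand-in for the Heaviside derivative, used only in the backward pass."""
    return 1.0 / (1.0 + slope * abs(x)) ** 2


def lif_forward(inputs, beta=0.9, threshold=1.0):
    """Run an assumed leaky integrate-and-fire neuron over a sequence of input currents.

    Returns the spike train and, per time step, the surrogate derivative of the
    spike with respect to the membrane potential.
    """
    mem = 0.0
    spikes, surrogate = [], []
    for current in inputs:
        mem = beta * mem + current                  # leaky integration
        spike = heaviside(mem - threshold)          # non-differentiable spike
        surrogate.append(fast_sigmoid_grad(mem - threshold))
        spikes.append(spike)
        mem -= spike * threshold                    # soft reset after a spike
    return spikes, surrogate


spikes, surrogate = lif_forward([0.6, 0.6, 0.6, 0.0])
```

In a full training loop, the per-step surrogate values would be chained through time by BPTT in place of the true spike derivative.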

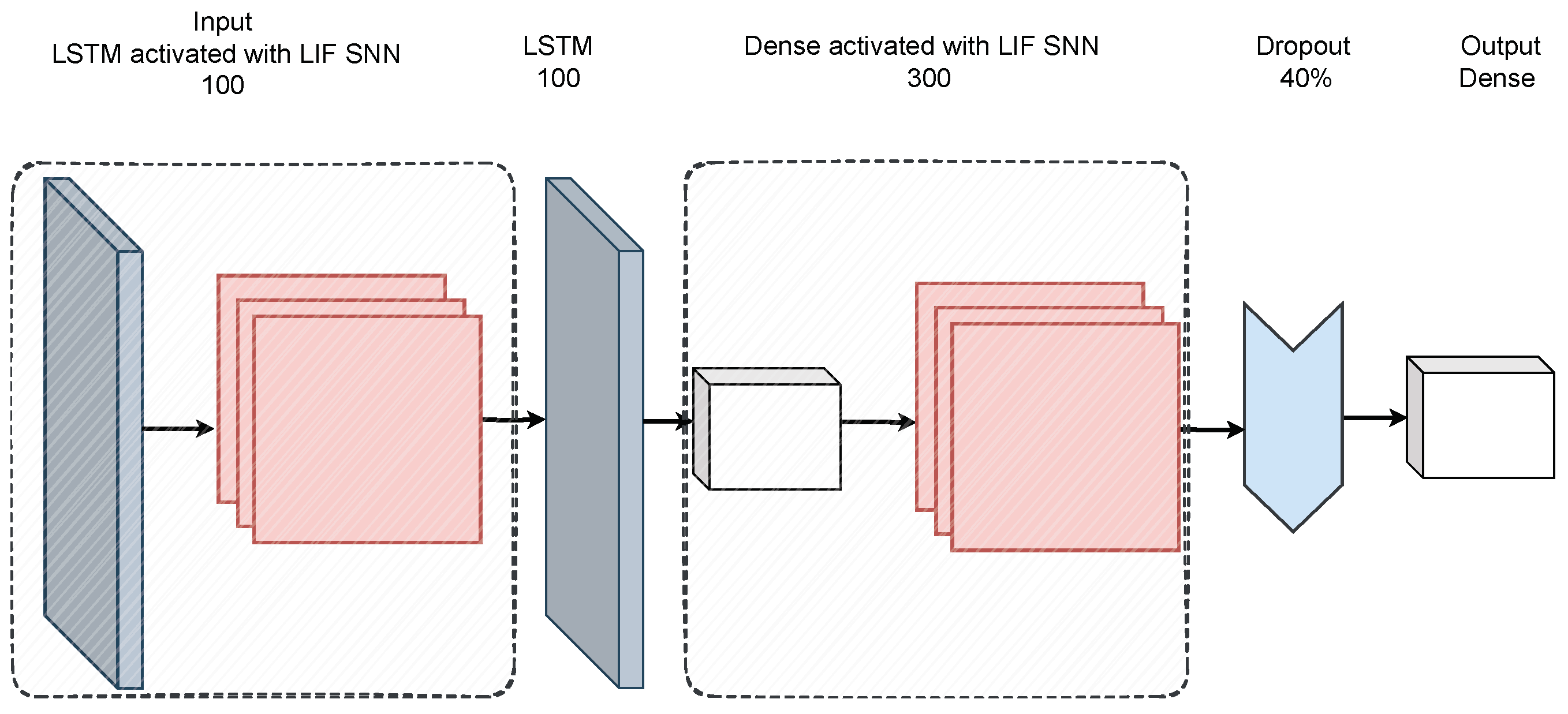

3.4. Proposed S-LSTM Model

4. Simulation Setup

4.1. Dataset Description

- The UCI dataset was collected using Samsung Galaxy S II smartphones worn by 30 volunteers of different ages and genders. The volunteers performed six daily activities: sitting, standing, lying down, walking, walking downstairs, and walking upstairs. Each subject repeated these activities multiple times under two distinct device-placement scenarios: the smartphone fixed on the left side of the waist belt, and the smartphone placed in the subject’s preferred position. The smartphone’s embedded accelerometer and gyroscope captured triaxial linear acceleration and angular velocity at 50 Hz. The raw signals were pre-processed to minimise noise interference, and 17 distinct signals were extracted, covering time- and frequency-domain quantities such as magnitude, jerk, and the Fast Fourier Transform (FFT). For analysis, the signals were segmented into windows of 2.56 seconds (128 samples at 50 Hz) with 50% overlap, yielding 561 features per window derived from statistical and frequency measures. The dataset contains 10,299 instances, split 70% for training and 30% for testing.

- Although the UCI dataset is widely used in HAR studies, it has limitations because it was collected in a controlled laboratory environment. Additionally, its sample size is too small to explore the full potential of FL. Hence, we chose a more realistic dataset collected by Sztyler and Stuckenschmidt [32]. The data was gathered from 15 participants (eight male, seven female) performing eight common real-world activities: climbing down stairs, climbing up stairs, jumping, lying, running (jogging), sitting, standing, and walking. Accelerometer data was collected at seven locations on the body: the head, chest, upper arm, waist, forearm, thigh, and shin. Smartphones and a smartwatch were mounted at these locations and recorded data at 50 Hz. The dataset comprises 1065 minutes of accelerometer measurements per on-body position per axis.
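The windowing described for the UCI dataset (2.56-second windows at 50 Hz with 50% overlap) corresponds to 128-sample windows advanced by 64 samples. The sketch below shows that arithmetic on a synthetic one-dimensional signal; the function name and the toy signal are illustrative assumptions, and the original pre-processing pipeline may differ in its details.

```python
def sliding_windows(signal, rate_hz=50, window_s=2.56, overlap=0.5):
    """Split a 1-D signal into fixed-length, overlapping windows."""
    size = round(rate_hz * window_s)          # 50 Hz * 2.56 s = 128 samples
    step = round(size * (1.0 - overlap))      # 50% overlap -> hop of 64 samples
    return [signal[i:i + size]
            for i in range(0, len(signal) - size + 1, step)]


# Synthetic signal: 320 samples, i.e. 6.4 s of data at 50 Hz.
signal = list(range(320))
windows = sliding_windows(signal)
```

With 320 samples the windows start at samples 0, 64, 128, and 192; any trailing samples that do not fill a complete window are dropped in this sketch.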

4.2. Performance Metrics

5. Results and Discussion

5.1. UCI Results

5.2. Real-World Dataset Results

6. Conclusions

Author Contributions

Data Availability Statement

Conflicts of Interest

References

- Diraco, G.; Rescio, G.; Siciliano, P.; Leone, A. Review on Human Action Recognition in Smart Living: Sensing Technology, Multimodality, Real-Time Processing, Interoperability, and Resource-Constrained Processing. Sensors 2023, 23, 5281.

- Kalabakov, S.; Jovanovski, B.; Denkovski, D.; Rakovic, V.; Pfitzner, B.; Konak, O.; Arnrich, B.; Gjoreski, H. Federated Learning for Activity Recognition: A System Level Perspective. IEEE Access 2023.

- Yu, H.; Chen, Z.; Zhang, X.; Chen, X.; Zhuang, F.; Xiong, H.; Cheng, X. FedHAR: Semi-supervised online learning for personalized federated human activity recognition. IEEE Transactions on Mobile Computing 2021.

- Bokhari, S.M.; Sohaib, S.; Khan, A.R.; Shafi, M.; et al. DGRU based human activity recognition using channel state information. Measurement 2021, 167, 108245.

- Ashleibta, A.M.; Taha, A.; Khan, M.A.; Taylor, W.; Tahir, A.; Zoha, A.; Abbasi, Q.H.; Imran, M.A. 5G-enabled contactless multi-user presence and activity detection for independent assisted living. Scientific Reports 2021, 11, 17590.

- Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Computers in Biology and Medicine 2022, 106060.

- Sannara, E.; Portet, F.; Lalanda, P.; German, V. A federated learning aggregation algorithm for pervasive computing: Evaluation and comparison. 2021 IEEE International Conference on Pervasive Computing and Communications (PerCom). IEEE, 2021, pp. 1–10.

- Yao, S.; Hu, S.; Zhao, Y.; Zhang, A.; Abdelzaher, T. DeepSense: A unified deep learning framework for time-series mobile sensing data processing. Proceedings of the 26th International Conference on World Wide Web, 2017, pp. 351–360.

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognition Letters 2019, 119, 3–11.

- Guan, Y.; Plötz, T. Ensembles of deep LSTM learners for activity recognition using wearables. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2017, 1, 1–28.

- Singh, M.S.; Pondenkandath, V.; Zhou, B.; Lukowicz, P.; Liwicki, M. Transforming sensor data to the image domain for deep learning: An application to footstep detection. 2017 International Joint Conference on Neural Networks (IJCNN). IEEE, 2017, pp. 2665–2672.

- Qi, P.; Chiaro, D.; Piccialli, F. FL-FD: Federated learning-based fall detection with multimodal data fusion. Information Fusion 2023, 101890.

- Hafeez, S.; Khan, A.R.; Al-Quraan, M.; Mohjazi, L.; Zoha, A.; Imran, M.A.; Sun, Y. Blockchain-Assisted UAV Communication Systems: A Comprehensive Survey. IEEE Open Journal of Vehicular Technology 2023.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. Artificial Intelligence and Statistics. PMLR 2017, 1273–1282.

- Venkatesha, Y.; Kim, Y.; Tassiulas, L.; Panda, P. Federated learning with spiking neural networks. IEEE Transactions on Signal Processing 2021, 69, 6183–6194.

- Wang, Y.; Duan, S.; Chen, F. Efficient asynchronous federated neuromorphic learning of spiking neural networks. Neurocomputing 2023, 557, 126686.

- Khatun, M.A.; Yousuf, M.A.; Ahmed, S.; Uddin, M.Z.; Alyami, S.A.; Al-Ashhab, S.; Akhdar, H.F.; Khan, A.; Azad, A.; Moni, M.A. Deep CNN-LSTM with self-attention model for human activity recognition using wearable sensor. IEEE Journal of Translational Engineering in Health and Medicine 2022, 10, 1–16.

- Han, C.; Zhang, L.; Tang, Y.; Huang, W.; Min, F.; He, J. Human activity recognition using wearable sensors by heterogeneous convolutional neural networks. Expert Systems with Applications 2022, 198, 116764.

- Uddin, M.Z.; Soylu, A. Human activity recognition using wearable sensors, discriminant analysis, and long short-term memory-based neural structured learning. Scientific Reports 2021, 11, 16455.

- Jain, V.; Gupta, G.; Gupta, M.; Sharma, D.K.; Ghosh, U. Ambient intelligence-based multimodal human action recognition for autonomous systems. ISA Transactions 2023, 132, 94–108.

- Qi, W.; Su, H.; Yang, C.; Ferrigno, G.; De Momi, E.; Aliverti, A. A fast and robust deep convolutional neural networks for complex human activity recognition using smartphone. Sensors 2019, 19, 3731.

- Laitrakun, S. Merging-Squeeze-Excitation Feature Fusion for Human Activity Recognition Using Wearable Sensors. Applied Sciences 2023, 13, 2475.

- Cheng, D.; Zhang, L.; Bu, C.; Wang, X.; Wu, H.; Song, A. ProtoHAR: Prototype Guided Personalized Federated Learning for Human Activity Recognition. IEEE Journal of Biomedical and Health Informatics 2023.

- Ouyang, X.; Xie, Z.; Zhou, J.; Xing, G.; Huang, J. ClusterFL: A Clustering-based Federated Learning System for Human Activity Recognition. ACM Transactions on Sensor Networks 2022, 19, 1–32.

- Gad, G.; Fadlullah, Z. Federated Learning via Augmented Knowledge Distillation for Heterogenous Deep Human Activity Recognition Systems. Sensors 2022, 23, 6.

- Aouedi, O.; Piamrat, K.; Südholt, M. HFedSNN: Efficient Hierarchical Federated Learning using Spiking Neural Networks. 21st ACM International Symposium on Mobility Management and Wireless Access (MobiWac 2023) 2023.

- Xie, K.; Zhang, Z.; Li, B.; Kang, J.; Niyato, D.; Xie, S.; Wu, Y. Efficient federated learning with spike neural networks for traffic sign recognition. IEEE Transactions on Vehicular Technology 2022, 71, 9980–9992.

- Roy, K.; Jaiswal, A.; Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 2019, 575, 607–617.

- Gerstner, W.; Kistler, W.M.; Naud, R.; Paninski, L. Neuronal dynamics: From single neurons to networks and models of cognition; Cambridge University Press, 2014.

- Lee, C.; Srinivasan, G.; Panda, P.; Roy, K. Deep spiking convolutional neural network trained with unsupervised spike-timing-dependent plasticity. IEEE Transactions on Cognitive and Developmental Systems 2018, 11, 384–394.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L.; et al. A public domain dataset for human activity recognition using smartphones. ESANN 2013, 3, 3.

- Sztyler, T.; Stuckenschmidt, H. On-body localization of wearable devices: An investigation of position-aware activity recognition. 2016 IEEE International Conference on Pervasive Computing and Communications (PerCom). IEEE, 2016, pp. 1–9.

| Class | CNN Precision | CNN Recall | CNN F1-Score | S-CNN Precision | S-CNN Recall | S-CNN F1-Score | S-LSTM Precision | S-LSTM Recall | S-LSTM F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| Walking | 0.98 | 0.97 | 0.98 | 0.93 | 0.95 | 0.94 | 0.99 | 0.99 | 0.99 |
| Walking upstairs | 0.98 | 0.98 | 0.98 | 0.93 | 0.96 | 0.95 | 0.99 | 0.99 | 0.99 |
| Walking downstairs | 0.98 | 0.99 | 0.98 | 0.93 | 0.88 | 0.91 | 1.00 | 1.00 | 1.00 |
| Sitting | 0.89 | 0.88 | 0.88 | 0.90 | 0.88 | 0.89 | 0.93 | 0.94 | 0.93 |
| Standing | 0.89 | 0.90 | 0.89 | 0.89 | 0.91 | 0.90 | 0.94 | 0.93 | 0.94 |
| Laying | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

| Class | CNN Precision | CNN Recall | CNN F1-Score | S-CNN Precision | S-CNN Recall | S-CNN F1-Score | S-LSTM Precision | S-LSTM Recall | S-LSTM F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| Climbing down | 0.86 | 0.88 | 0.87 | 0.91 | 0.89 | 0.90 | 0.90 | 0.88 | 0.89 |
| Climbing up | 0.87 | 0.85 | 0.86 | 0.91 | 0.87 | 0.89 | 0.90 | 0.87 | 0.88 |
| Jumping | 0.95 | 0.95 | 0.95 | 0.96 | 0.98 | 0.97 | 0.98 | 0.96 | 0.97 |
| Lying | 0.82 | 0.87 | 0.84 | 0.88 | 0.89 | 0.88 | 0.90 | 0.92 | 0.91 |
| Running | 0.95 | 0.85 | 0.90 | 0.97 | 0.86 | 0.91 | 0.94 | 0.86 | 0.90 |
| Sitting | 0.70 | 0.74 | 0.72 | 0.73 | 0.80 | 0.76 | 0.77 | 0.82 | 0.80 |
| Standing | 0.75 | 0.75 | 0.75 | 0.75 | 0.82 | 0.78 | 0.77 | 0.80 | 0.78 |
| Walking | 0.88 | 0.89 | 0.89 | 0.91 | 0.90 | 0.90 | 0.89 | 0.91 | 0.90 |
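The F1-score columns in the tables above are the harmonic mean of the corresponding precision and recall. As a quick check, the sketch below recomputes the CNN F1-scores for three rows of the second table from their reported precision and recall. The helper name `f1_score` is an assumption for illustration. Because the published values are rounded to two decimals, recomputing other rows from the rounded inputs may differ from the table by 0.01.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the CNN columns of the second table.
rows = {
    "Lying": (0.82, 0.87),
    "Running": (0.95, 0.85),
    "Sitting": (0.70, 0.74),
}

# Round to two decimals to match the precision reported in the table.
f1 = {name: round(f1_score(p, r), 2) for name, (p, r) in rows.items()}
```

For these three rows the recomputed values agree with the reported F1-scores of 0.84, 0.90, and 0.72.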

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).