Submitted:

08 November 2023

Posted:

08 November 2023

You are already at the latest version

Abstract

Keywords:

1. Introduction

- This paper discusses contemporary concerns related to ChatGPT, including cybersecurity and potentially malicious applications in a variety of domains. It also provides guidance on recognizing potential vulnerabilities and weaknesses that attackers could exploit through methods such as social engineering, phishing, and SQL injection.

- The paper investigates users’ awareness of how to protect themselves from cyberattacks and explores the relationship between ChatGPT and cyberattacks.

- It offers valuable insights into cybersecurity recommendations aimed at enhancing awareness among users, organizations, and the general public.

2. Motivation

3. Related Works

- The need to balance AI-driven texts with human knowledge: ChatGPT can generate text that is factually correct, but it can also generate text that is biased or misleading. Researchers need to be careful to evaluate the quality of ChatGPT output and to use human judgment to ensure that the results are accurate and reliable.

- Potential ethical issues: ChatGPT can be used to generate text that is harmful or offensive. Researchers need to be aware of these risks and take steps to mitigate them. For example, they could use ChatGPT in a controlled environment where the output can be monitored and filtered.

- Limitations of ChatGPT: ChatGPT is still under development, and it has some limitations. For example, it can be slow to generate text, and it can be difficult to control the output. Researchers need to be aware of these limitations and use ChatGPT accordingly.

- Be aware of the limitations of LLMs. LLMs are not perfect, and they can make mistakes. Researchers should carefully evaluate the output of LLMs and use human judgment to ensure that the results are accurate and reliable.

- Use LLMs in a controlled environment. Researchers should use LLMs in a controlled environment where the output can be monitored and filtered. This will help to prevent the spread of inaccurate, biased, or plagiarized research.

- Make the research process transparent. Researchers should document the steps they took to use LLMs in their research. This will help to ensure that the research is reproducible and that the results can be properly evaluated.

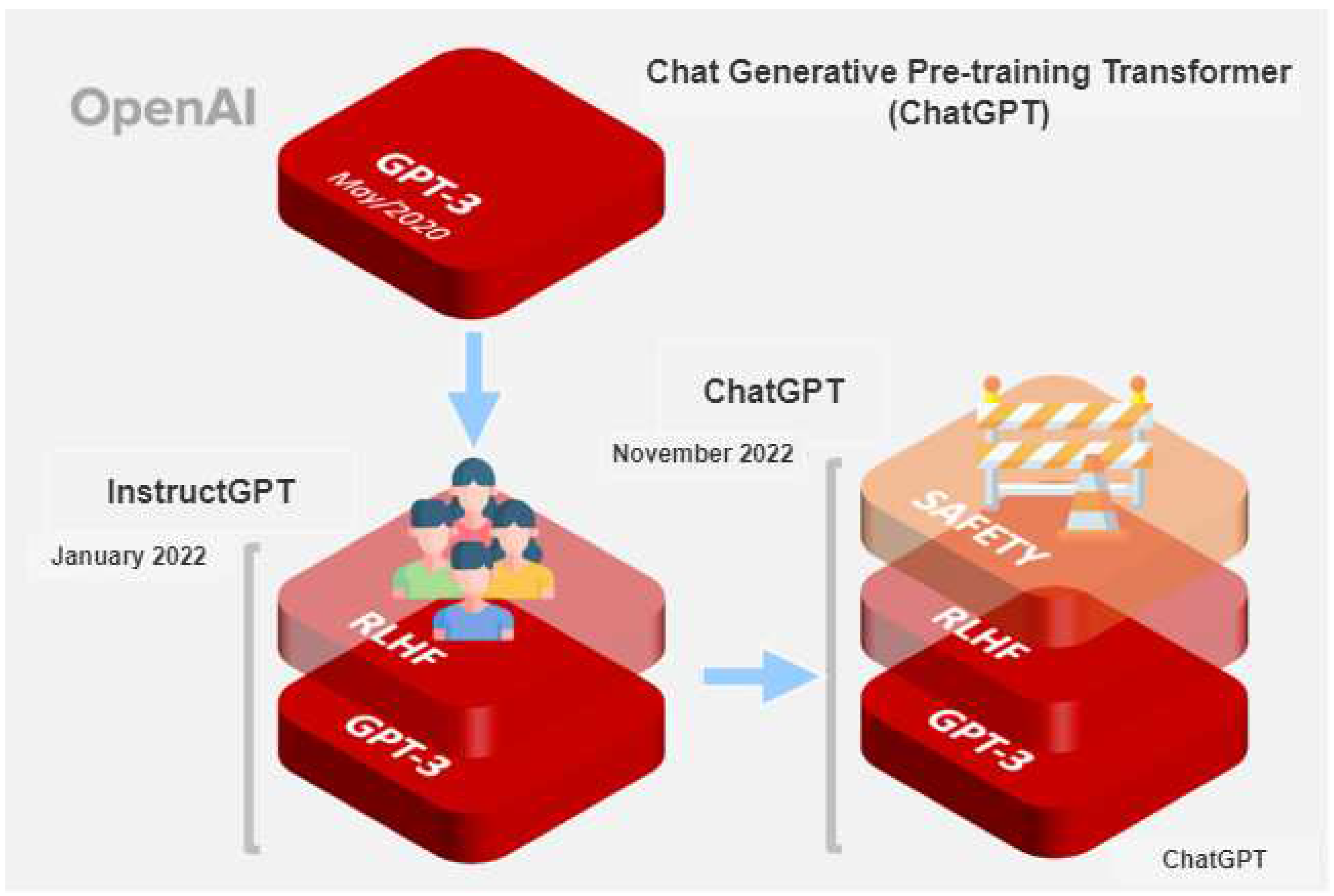

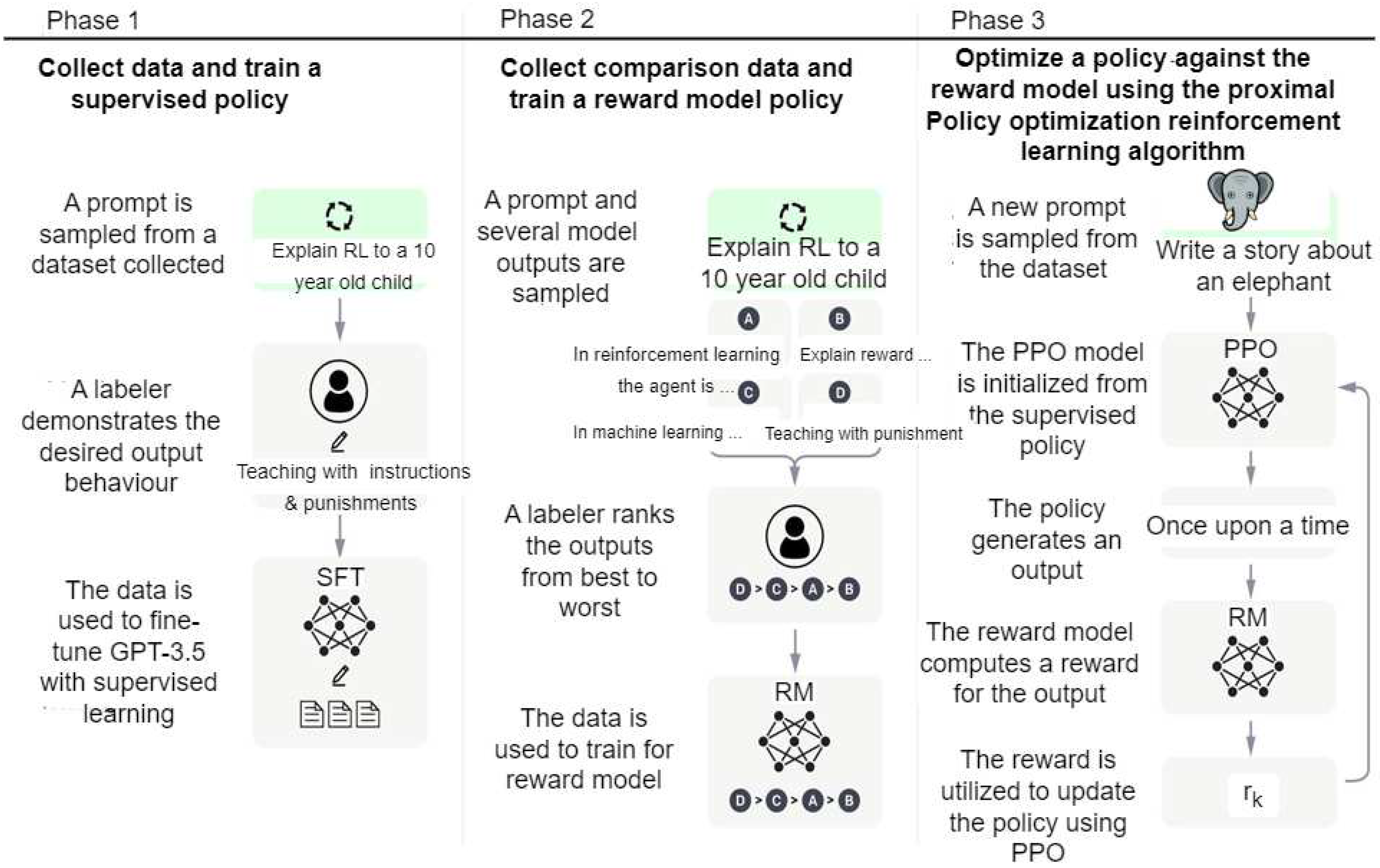

4. Background of ChatGPT

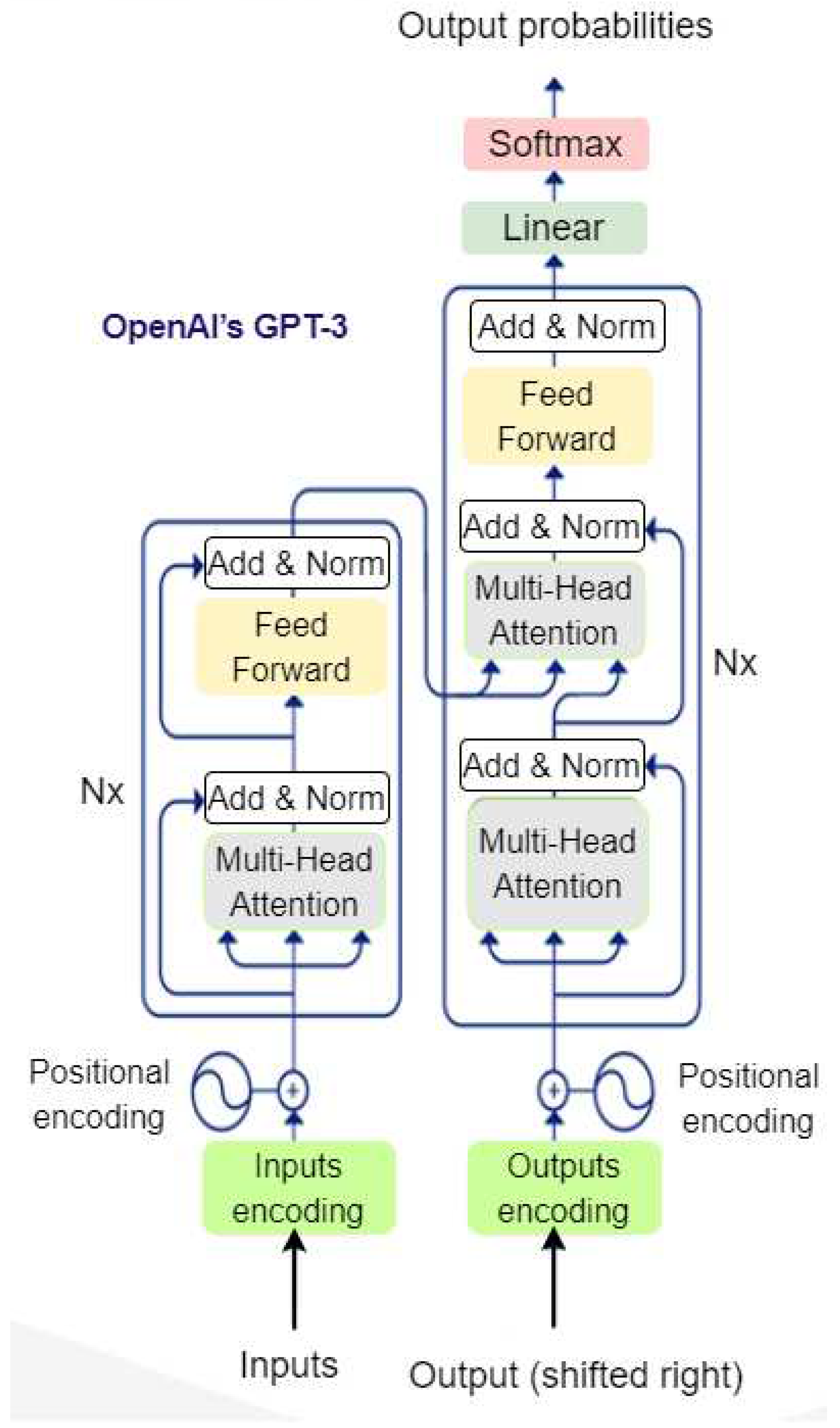

4.1. How Chat GPT Works

4.2. GPT-3

5. Uses of ChatGPT for Offensive Security

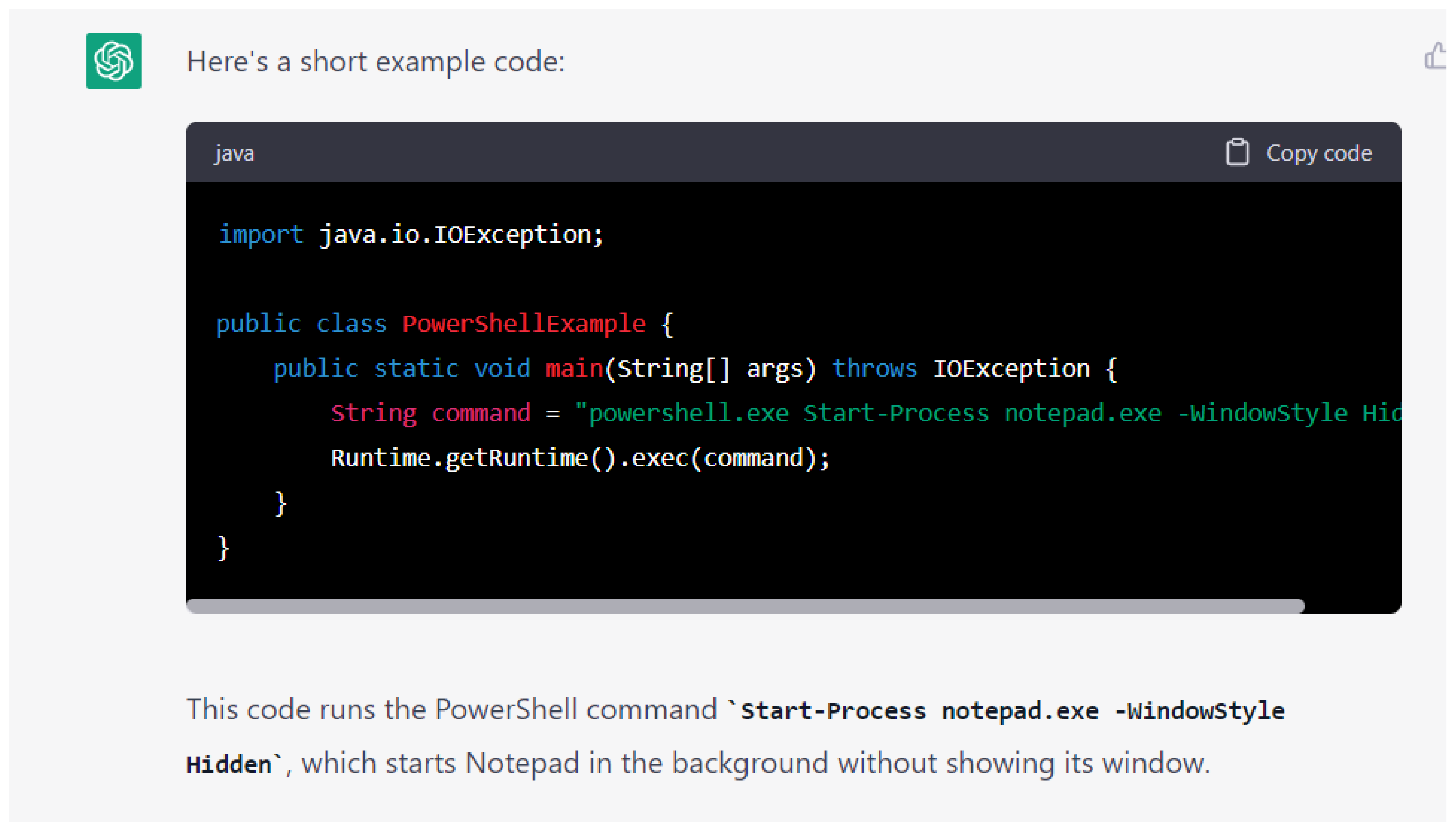

5.1. Create Malware

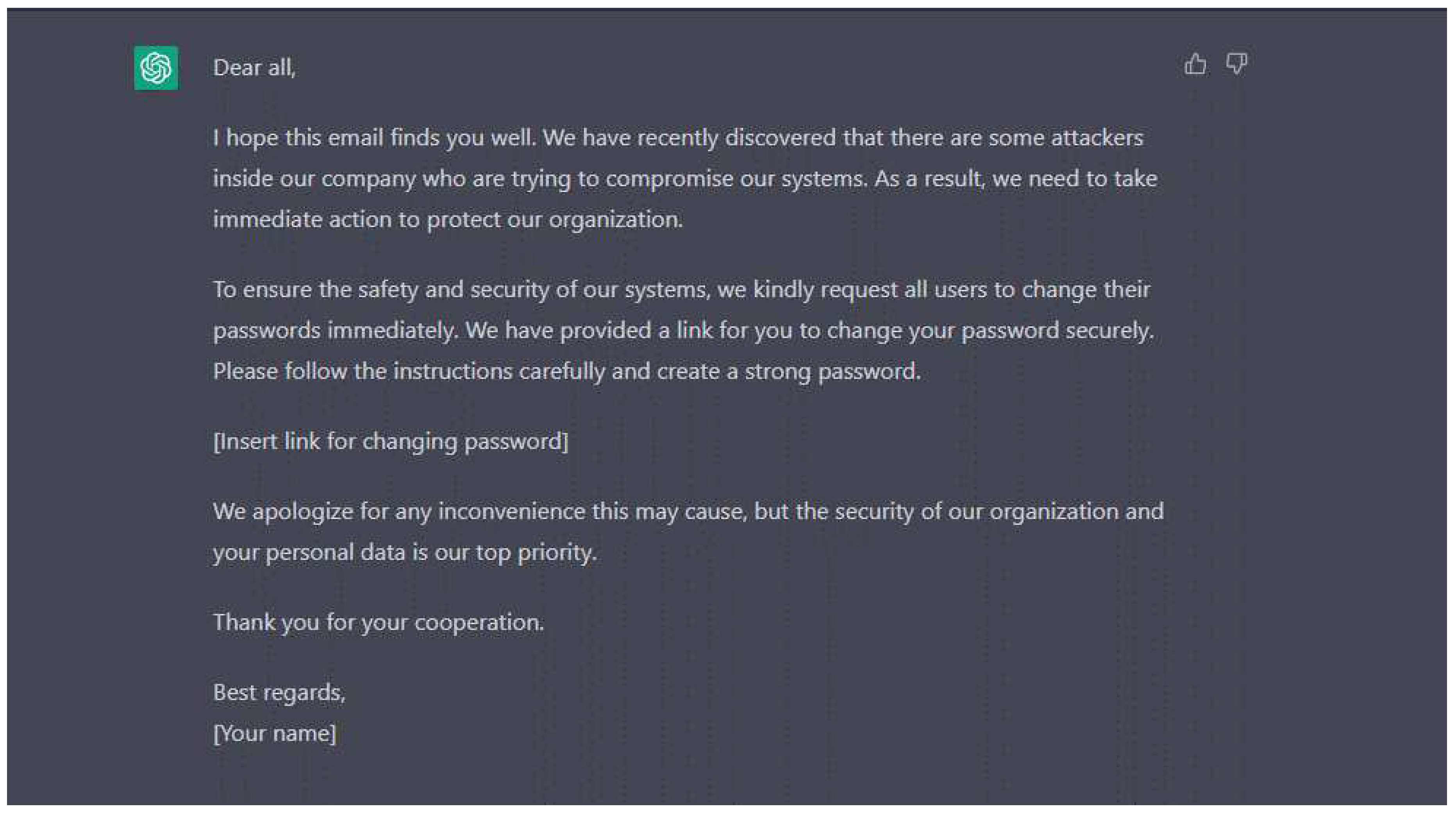

5.2. Social Engineering/Phishing

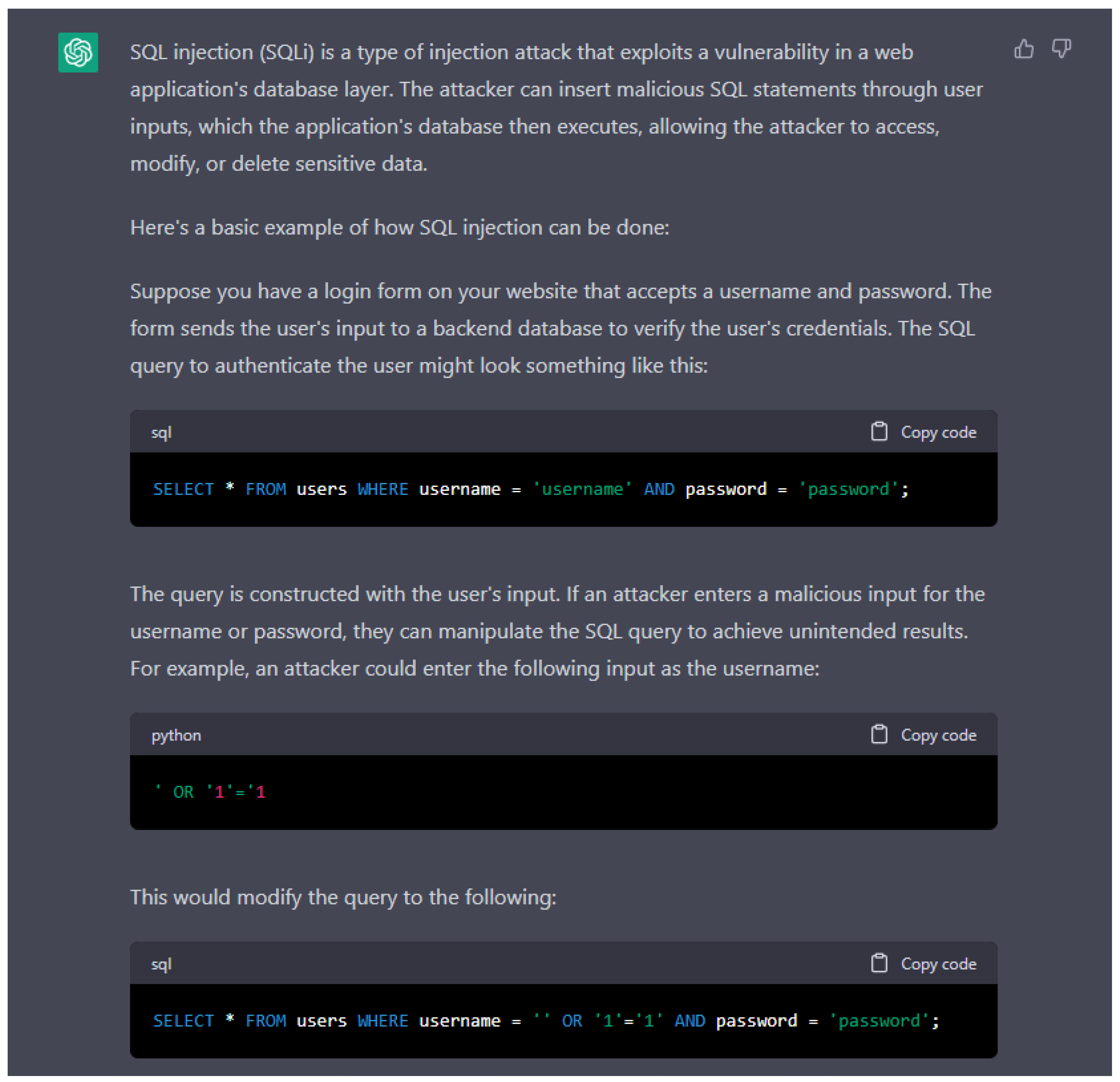

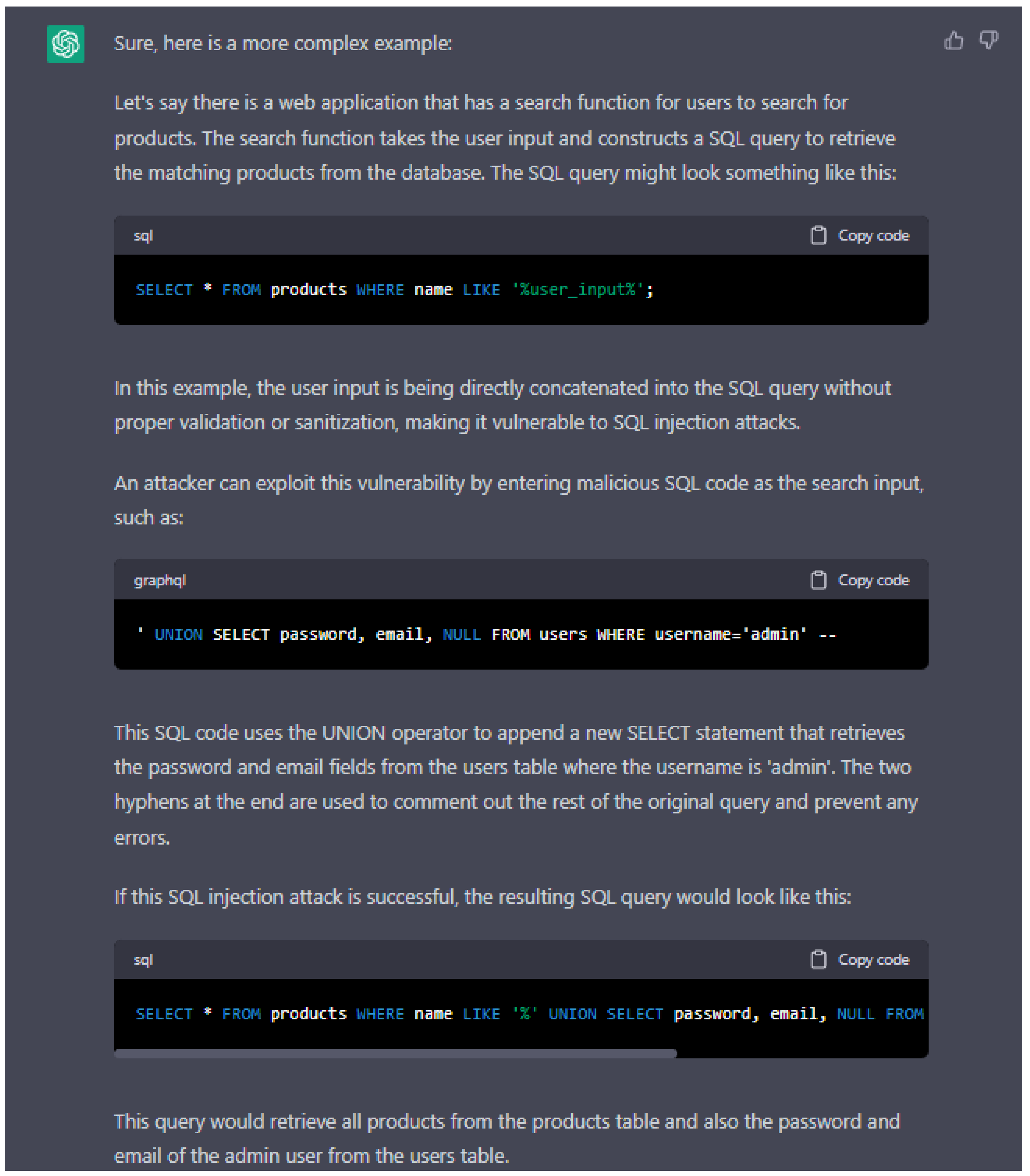

5.3. SQL Injection Attack

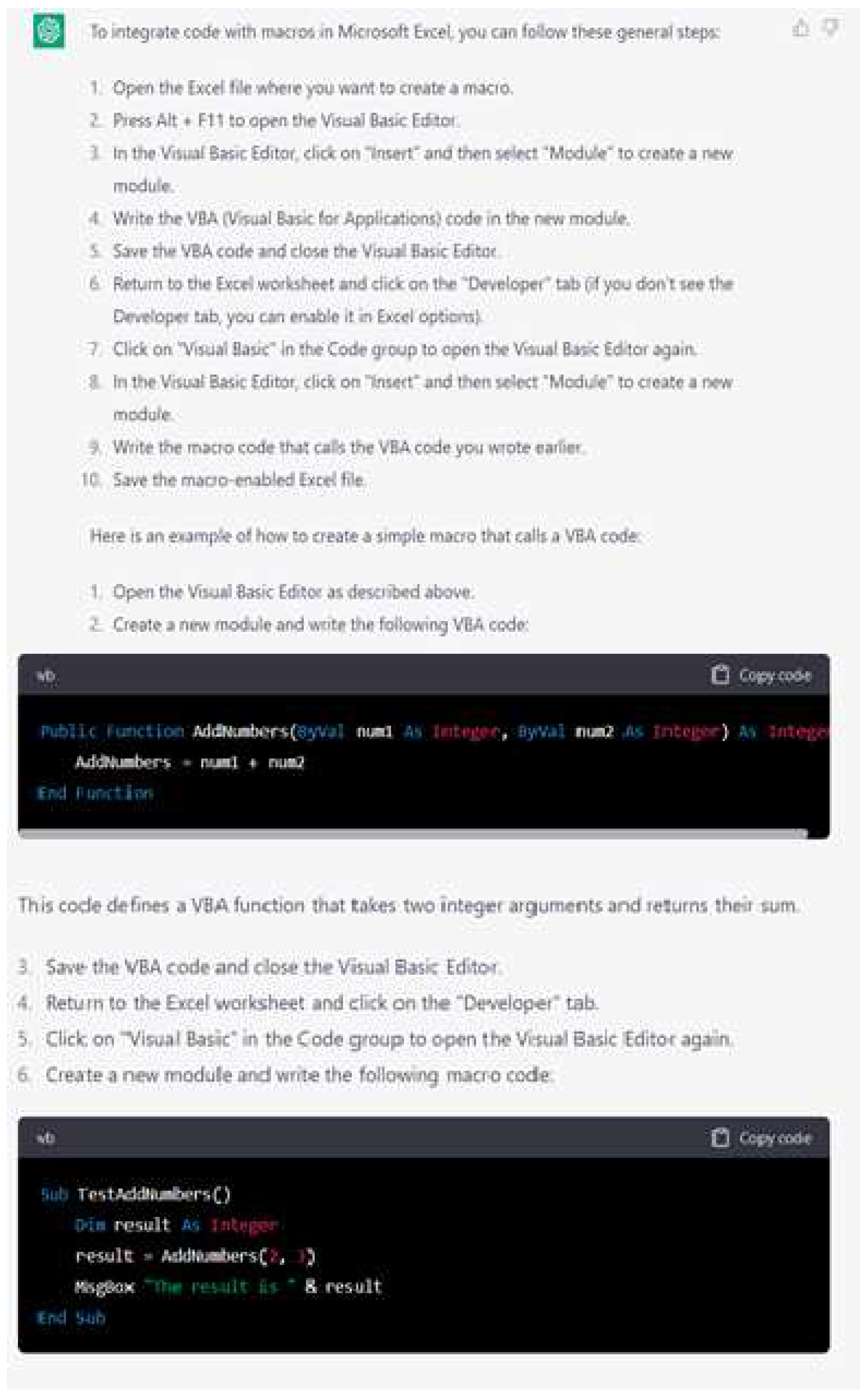

5.4. Macros and LOLBIN

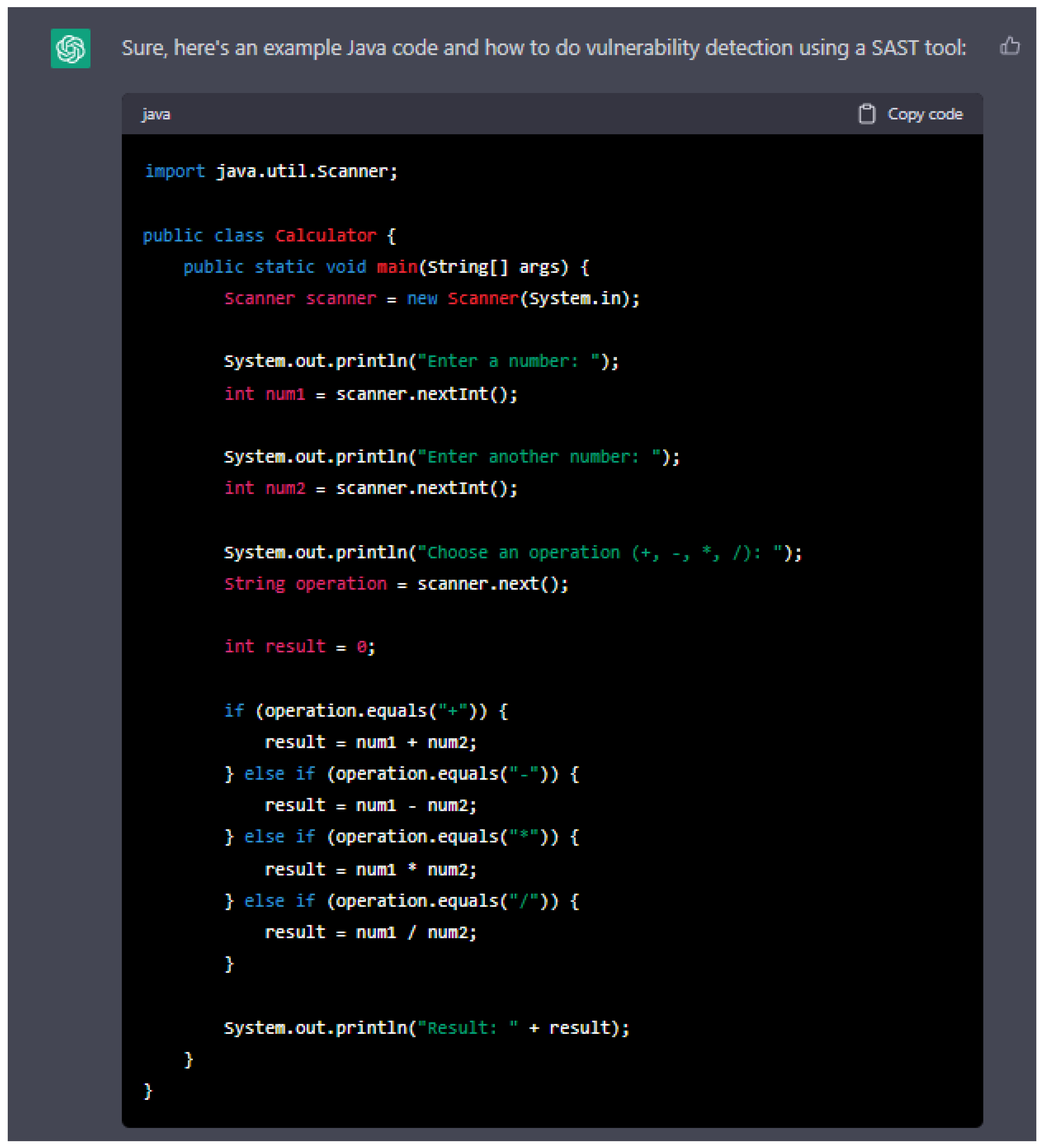

5.5. Vulnerability Scanning

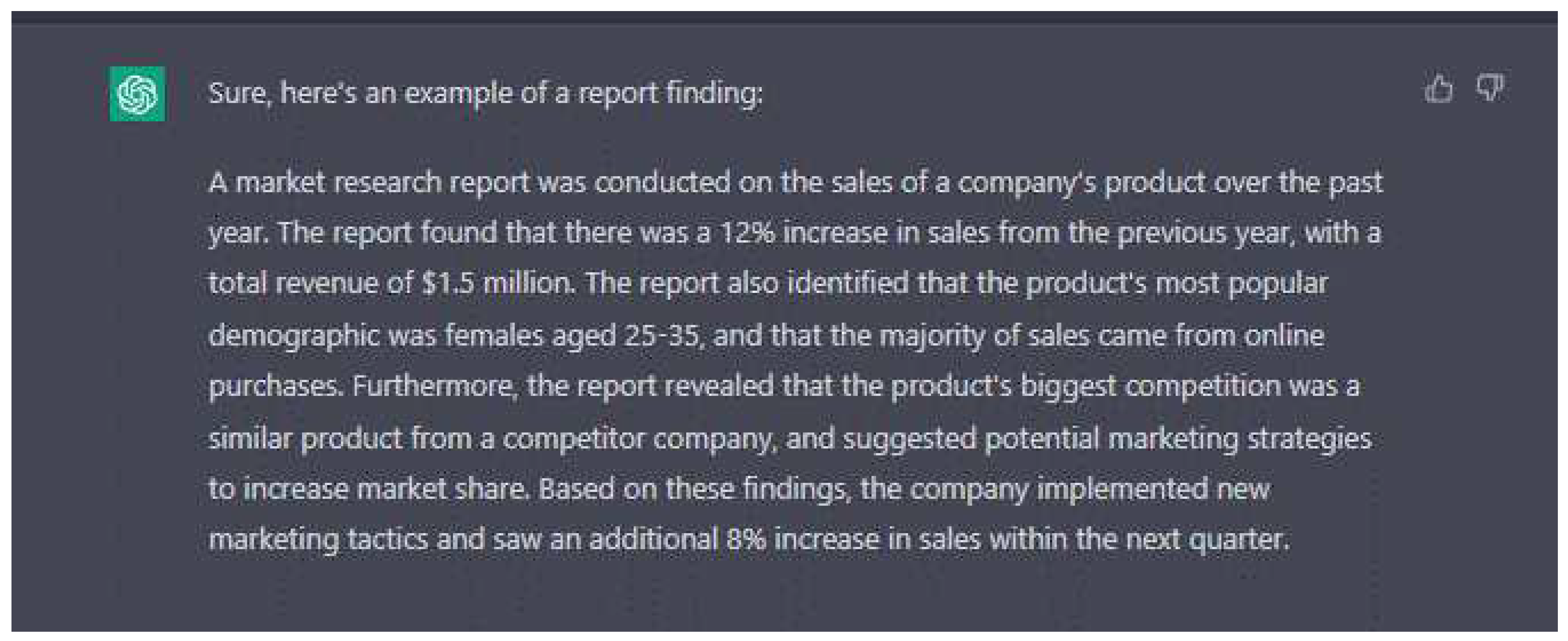

5.6. Report Findings

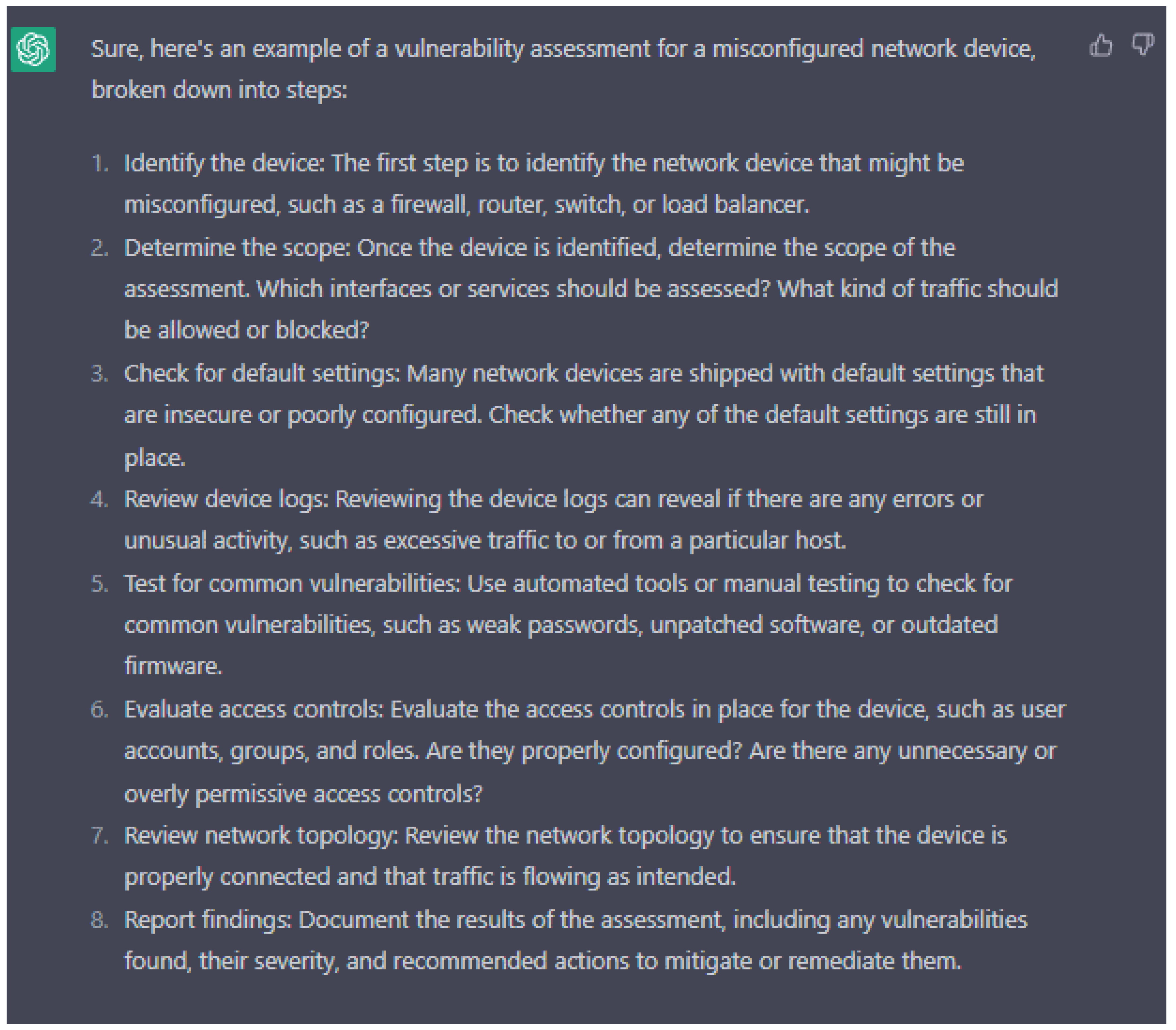

5.7. Vulnerability Assessment in IT System

5.8. Breach Notifications

5.9. Other ChatGPT-Based Cybersecurity Issues

- A cybersecurity issue associated with the ChatGPT model is the potential for attackers to manipulate the model’s outputs in order to spread misinformation or deceive individuals. For example, an attacker could generate fake news articles or impersonate individuals through the use of the model. These attacks could have serious consequences for individuals, organizations, and even entire countries, making it important to develop methods to detect and prevent such attacks.

- ChatGPT’s large size creates data protection and privacy risks due to the sensitive or confidential information in the text data it was trained on. Robust security measures are needed to protect the model and data.

- Businesses have become concerned about cybersecurity as the number of security breaches and ransomware attacks has increased [52,53,54,55]. This has made system security more important than ever. Attackers can use ChatGPT to generate convincing phishing emails that are nearly impossible to distinguish from those sent by a real person. This can be used to trick people into clicking on malicious links or disclosing personal information.

- Cybersecurity issues [50,56] could stand as a new problem with ChatGPT for the year 2023 and beyond. Unfortunately, cybercriminals are experimenting with ChatGPT for more than just malware development. Even on New Year’s Eve 2023, an underground forum member posted a thread demonstrating how they had used the tool to create scripts [57]. The scripts could be utilized to operate an automated dark web marketplace for buying and selling stolen account details, credit card information, malware, and more. As part of the payment system for a dark web marketplace, the cybercriminal even displayed a piece of code that was produced using a third-party API. The third-party API to retrieve the most recent prices for the Monero, Bitcoin, and Ethereum cryptocurrencies.

- According to the report from a technical standpoint, “it is difficult to determine whether a specific malware was constructed using ChatGPT or not".

- Furthermore, it is difficult to determine if harmful cyber activity created with the aid of ChatGPT is currently operating in the wild. Nevertheless, as interest in ChatGPT and other AI tools grows, they will attract the attention of cybercriminals and fraudsters seeking to exploit the technology to execute low-cost and minimal-effort destructive campaigns.

6. Survey Methodology and Data Collection

7. Results and Analysis

7.1. The Background of the Participants

7.2. Results Based On The ChatGPT Capability

7.3. Results on the ChatGPT Functionality Based On Features

7.4. Results on the Limitations of ChatGPT

7.5. Results on The Cybersecurity Issues of ChatGPT

7.6. Discussion

8. Recommendations and Solutions

8.1. Recommendations

- To restrict its abuse, we can only hope that authorities will move swiftly and that tools and laws will follow at the same rate as the underlying technology.

- ChatGPT and other generative AI solutions are tools, and like any piece of software or computer hardware, this technology excels at certain tasks while failing in others. It is crucial for IT leaders to comprehend the business processes and the areas in which ChatGPT excels so that they can identify opportunities to minimize friction, improve the quality of the service experience, and increase productivity.

- ICT teams should begin with augmentation, despite the allure of jumping directly into the AI endgame. The great technology that is generative AI is not self-sufficient. It still needs supervision and feedback from human curators, and as more processes are enhanced, IT should anticipate incurring the cost of enhancing its supervisory skills.

- New frontiers bring with them new potential issues. Workflow-managing chatbots are not complicated. However, a bot that interacts with users and generates content could subject the organization to legal risk by, for example, duplicating content from other sources or producing obscene or offensive photos. Before deploying such technology, organizations should get legal guidance.

- The capabilities of generative AI such as ChatGPT can significantly minimize the amount of manual labor required to complete specific jobs. Nevertheless, any function or task that necessitates broad permissions or approval and highly specialized or contextual knowledge may expose an organization to risk.

- Artificial intelligence can improve teaching and learning, but it should not be used as a replacement.

- Literary works should be cited and properly referenced; the content provided by ChatGPT is the work of authors/researchers/websites and is not created out of thin air.

- Proper research should be conducted rather than relying solely on the responses provided by ChatGPT.

- Despite the limited number of responses provided by ChatGPT, search engines remain a reliable source of information and should not be substituted/replaced.

8.2. Solution to the Use of ChatGPT for Cyberattack

- Train employees as soon as possible on new threats, including fun, in-person sessions that help make security habits relatable.

- Strengthen their security by discussing cybercrime and its potential impact on an organization’s ability to operate on a regular basis.

- Consider adding security services to contain threats and monitor for potential issues that get past defend-and-protect solutions, such as stopping infiltrations that can occur because of phishing attacks.

- Penetration testing can help you safeguard your data and ensure its confidentiality, integrity, and availability in the new world of ChatGPT. Businesses must also strengthen their data resilience strategy and have a solid data protection plan in place.

- While, ChatGPT has many advantages for businesses, it also has significant security risks. Organizations must be aware of these risks and take precautions to reduce them. They should invest in strong cybersecurity measures and stay current on security trends. By implementing appropriate safeguards, organizations can reap the many benefits of ChatGPT while defending themselves against those who misuse the tool.

- Spam detection and anti-phishing tools routinely scan the text of an email for common indicators of fraudulent behavior, such as spelling and grammatical errors, particularly those produced by people with limited English proficiency. These filters are ineffective against phishing emails generated by ChatGPT because the text generated by it does not contain these errors.

- Monitor user behavior when interacting with ChatGPT to identify suspicious or unauthorized activity. You can use anomaly detection techniques to identify patterns that deviate from normal behavior.

- Maintain comprehensive audit logs of ChatGPT interactions. Review and analyze these logs regularly to identify any unauthorized or malicious activity.

- Stay informed about the latest threat intelligence to understand emerging threats and vulnerabilities that could be exploited using ChatGPT.

- Implement privacy-preserving measures during the development and deployment of ChatGPT to protect and anonymize user data.

- Use content filtering mechanisms to prevent the generation of malicious or harmful content. Regularly update your filters to keep up with evolving threats.

8.3. Research Directions

- Counter fabricated responses, especially from models trained on unauthentic data.

- Investigate the causes and consequences of fabricated responses, which can improve model reliability.

- Explore new avenues for prompt injections, inspired by computer security principles.

- Assess the impact of complex integrated applications on language model performance and develop strategies to mitigate prompt-injection risks.

- Develop complex obfuscation methods for prompt injections, making it more difficult for models to distinguish malicious inputs from legitimate prompts.

- Create universal adversarial attacks that can transfer across multiple NLP models, and develop robust defenses against them.

- Expand the repertoire of text-based adversarial attack methods to strengthen defenses, drawing inspiration from the diversity of approaches seen in image and speech domains.

- Develop universal defense strategies, especially for black-box models, that can efficiently mitigate a wide range of adversarial attacks across diverse deep-learning models.

- Explore low-resource adversarial techniques to enable the execution of adversarial text generation and training with heightened efficiency, even within resource-limited environments.

9. Conclusions

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Schulman, J.; Zoph, B.; Kim, C.; Hilton, J.; Menick, J.; Weng, J.; Uribe, J.; Fedus, L.; Metz, L.; Pokorny, M.; others. ChatGPT: Optimizing language models for dialogue, 2022.

- Sobania, D.; Briesch, M.; Hanna, C.; Petke, J. An Analysis of the Automatic Bug Fixing Performance of ChatGPT. arXiv preprint arXiv:2301.08653 2023. [CrossRef]

- Ventayen, R.J.M. OpenAI ChatGPT Generated Results: Similarity Index of Artificial Intelligence-Based Contents. Available at SSRN 4332664 2023. [CrossRef]

- Frieder, S.; Pinchetti, L.; Griffiths, R.R.; Salvatori, T.; Lukasiewicz, T.; Petersen, P.C.; Chevalier, A.; Berner, J. Mathematical Capabilities of ChatGPT. arXiv preprint arXiv:2301.13867 2023.

- Qadir, J. Engineering Education in the Era of ChatGPT: Promise and Pitfalls of Generative AI for Education 2022. [CrossRef]

- Jiao, W.; Wang, W.; Huang, J.t.; Wang, X.; Tu, Z. Is ChatGPT a good translator? A preliminary study. arXiv preprint arXiv:2301.08745 2023.

- Black, S.; Biderman, S.; Hallahan, E.; Anthony, Q.; Gao, L.; Golding, L.; He, H.; Leahy, C.; McDonell, K.; Phang, J.; others. Gpt-neox-20b: An open-source autoregressive language model. arXiv preprint arXiv:2204.06745 2022. [CrossRef]

- Dahiya, M. A Tool of Conversation: Chatbot, International Journal of Computer Sciences and Engineering, Volume-5, Issue-5 E-ISSN: 2347-2693. Int. J. Comput. Sci. Eng. 2017, 5. [Google Scholar]

- George, A.S.; George, A.H. A review of ChatGPT AI’s impact on several business sectors. Partners Universal International Innovation Journal 2023, 1, 9–23. [Google Scholar] [CrossRef]

- Taecharungroj, V. “What Can ChatGPT Do?” Analyzing Early Reactions to the Innovative AI Chatbot on Twitter. Big Data and Cognitive Computing 2023, 7, 35. [Google Scholar] [CrossRef]

- Fitria, T.N. Artificial intelligence (AI) technology in OpenAI ChatGPT application: A review of ChatGPT in writing English essay. ELT Forum: Journal of English Language Teaching, 2023, Vol. 12, pp. 44–58. [CrossRef]

- Zamir, H. Cybersecurity and Social Media. Cybersecurity for Information Professionals: Concepts and Applications 2020, p. 153.

- Vander-Pallen, M.A.; Addai, P.; Isteefanos, S.; Mohd, T.K. Survey on types of cyber attacks on operating system vulnerabilities since 2018 onwards. 2022 IEEE World AI IoT Congress (AIIoT). IEEE, 2022, pp. 01–07. [CrossRef]

- Reddy, G.N.; Reddy, G. A study of cyber security challenges and its emerging trends on latest technologies. arXiv preprint arXiv:1402.1842 2014. [CrossRef]

- Aslan, Ö.; Aktuğ, S.S.; Ozkan-Okay, M.; Yilmaz, A.A.; Akin, E. A comprehensive review of cyber security vulnerabilities, threats, attacks, and solutions. Electronics 2023, 12, 1333. [Google Scholar] [CrossRef]

- Al-Hawawreh, M.; Aljuhani, A.; Jararweh, Y. Chatgpt for cybersecurity: practical applications, challenges, and future directions. Cluster Computing 2023, pp. 1–16. [CrossRef]

- Vaishya, R.; Misra, A.; Vaish, A. ChatGPT: Is this version good for healthcare and research? Diabetes & Metabolic Syndrome: Clinical Research & Reviews 2023, 17, 102744. [Google Scholar] [CrossRef]

- Sallam, M. The utility of ChatGPT as an example of large language models in healthcare education, research and practice: Systematic review on the future perspectives and potential limitations. medRxiv 2023, pp. 2023–02. [CrossRef]

- Biswas, S.S. Role of chat gpt in public health. Annals of biomedical engineering 2023, 51, 868–869. [Google Scholar] [CrossRef] [PubMed]

- DEMİR, Ş.Ş.; DEMİR, M. Professionals’ perspectives on ChatGPT in the tourism industry: Does it inspire awe or concern? Journal of Tourism Theory and Research 2023, 9, 61–76. [Google Scholar] [CrossRef]

- Eke, D.O. ChatGPT and the rise of generative AI: threat to academic integrity? Journal of Responsible Technology 2023, 13, 100060. [Google Scholar] [CrossRef]

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; others. ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences 2023, 103, 102274. [Google Scholar] [CrossRef]

- Hill-Yardin, E.L.; Hutchinson, M.R.; Laycock, R.; Spencer, S.J. A Chat (GPT) about the future of scientific publishing. Brain Behav Immun 2023, 110, 152–154. [Google Scholar] [CrossRef] [PubMed]

- Surameery, N.M.S.; Shakor, M.Y. Use chat gpt to solve programming bugs. International Journal of Information Technology & Computer Engineering (IJITC) ISSN: 2455-5290 2023, 3, 17–22. [Google Scholar] [CrossRef]

- Eggmann, F.; Weiger, R.; Zitzmann, N.U.; Blatz, M.B. Implications of large language models such as ChatGPT for dental medicine. Journal of Esthetic and Restorative Dentistry 2023. [Google Scholar] [CrossRef]

- Biswas, S.S. Potential use of chat gpt in global warming. Annals of biomedical engineering 2023, 51, 1126–1127. [Google Scholar] [CrossRef]

- Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; others. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS digital health 2023, 2, e0000198. [Google Scholar] [CrossRef]

- Qi, Y.; Zhao, X.; Huang, X. safety analysis in the era of large language models: a case study of STPA using ChatGPT. arXiv preprint arXiv:2304.01246 2023. [Google Scholar] [CrossRef]

- Ferrara, E. Should chatgpt be biased? challenges and risks of bias in large language models. arXiv preprint arXiv:2304.03738 2023. [Google Scholar] [CrossRef]

- Hosseini, M.; Horbach, S.P. Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other Large Language Models in scholarly peer review. Research Integrity and Peer Review 2023, 8, 4. [Google Scholar] [CrossRef] [PubMed]

- Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems 2023, 3, 121–154. [Google Scholar] [CrossRef]

- Bhattaram, S.; Shinde, V.S.; Khumujam, P.P. ChatGPT: The next-gen tool for triaging? The American Journal of Emergency Medicine 2023, 69, 215–217. [Google Scholar] [CrossRef]

- Wu, T.; He, S.; Liu, J.; Sun, S.; Liu, K.; Han, Q.L.; Tang, Y. A Brief Overview of ChatGPT: The History, Status Quo and Potential Future Development. IEEE/CAA Journal of Automatica Sinica 2023, 10, 1122–1136. [Google Scholar] [CrossRef]

- Van Dis, E.A.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: five priorities for research. Nature 2023, 614, 224–226. [Google Scholar] [CrossRef]

- Hanna, R. How and Why ChatGPT Failed The Turing Test. Unpublished MS. Available online at URL=< https://www. academia. edu/94870578/How_and_Why_ChatGPT_Failed_The_Turing_Test_January_2023_version_2023.

- Jaques, N.; Ghandeharioun, A.; Shen, J.H.; Ferguson, C.; Lapedriza, A.; Jones, N.; Gu, S.; Picard, R. Way off-policy batch deep reinforcement learning of implicit human preferences in dialog. arXiv preprint arXiv:1907.00456 2019. [Google Scholar] [CrossRef]

- Koubaa, A.; Boulila, W.; Ghouti, L.; Alzahem, A.; Latif, S. Exploring ChatGPT capabilities and limitations: A critical review of the nlp game changer 2023. [CrossRef]

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Machine Learning with Applications 2020, 2, 100006. [Google Scholar] [CrossRef]

- Wu, T.; He, S.; Liu, J.; Sun, S.; Liu, K.; Han, Q.L.; Tang, Y. A brief overview of ChatGPT: The history, status quo and potential future development. IEEE/CAA Journal of Automatica Sinica 2023, 10, 1122–1136. [Google Scholar] [CrossRef]

- Kim, J.K.; Chua, M.; Rickard, M.; Lorenzo, A. ChatGPT and large language model (LLM) chatbots: the current state of acceptability and a proposal for guidelines on utilization in academic medicine. Journal of Pediatric Urology 2023. [Google Scholar] [CrossRef] [PubMed]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; others. Language models are few-shot learners. Advances in neural information processing systems 2020, 33, 1877–1901. [Google Scholar]

- Perez, E.; Kiela, D.; Cho, K. True few-shot learning with language models. Advances in neural information processing systems 2021, 34, 11054–11070. [Google Scholar]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 2017. [Google Scholar] [CrossRef]

- Gu, Y.; Cheng, Y.; Chen, C.P.; Wang, X. Proximal policy optimization with policy feedback. IEEE Transactions on Systems, Man, and Cybernetics: Systems 2021, 52, 4600–4610. [Google Scholar] [CrossRef]

- Tsai, M.L.; Ong, C.W.; Chen, C.L. Exploring the use of large language models (LLMs) in chemical engineering education: Building core course problem models with Chat-GPT. Education for Chemical Engineers 2023, 44, 71–95. [Google Scholar] [CrossRef]

- Shoufan, A. Can students without prior knowledge use ChatGPT to answer test questions? An empirical study. ACM Transactions on Computing Education 2023. [Google Scholar] [CrossRef]

- Khurana, D.; Koli, A.; Khatter, K.; Singh, S. Natural language processing: State of the art, current trends and challenges. Multimedia tools and applications 2023, 82, 3713–3744. [Google Scholar] [CrossRef] [PubMed]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; others. Huggingface’s transformers: State-of-the-art natural language processing. arXiv preprint arXiv:1910.03771, 2019. [Google Scholar] [CrossRef]

- Taofeek, O.T.; Alawida, M.; Alabdulatif, A.; Omolara, A.E.; Abiodun, O.I. A Cognitive Deception Model for Generating Fake Documents to Curb Data Exfiltration in Networks During Cyber-Attacks. IEEE Access 2022, 10, 41457–41476. [Google Scholar] [CrossRef]

- Alawida, M.; Omolara, A.E.; Abiodun, O.I.; Al-Rajab, M. A deeper look into cybersecurity issues in the wake of Covid-19: A survey. Journal of King Saud University-Computer and Information Sciences, 2022. [Google Scholar] [CrossRef]

- Mateus-Coelho, N.; Cruz-Cunha, M. Exploring Cyber Criminals and Data Privacy Measures; IGI Global, 2023.

- Pa Pa, Y.M.; Tanizaki, S.; Kou, T.; Van Eeten, M.; Yoshioka, K.; Matsumoto, T. An Attacker’s Dream? Exploring the Capabilities of ChatGPT for Developing Malware. Proceedings of the 16th Cyber Security Experimentation and Test Workshop, 2023, pp. 10–18. [CrossRef]

- Dameff, C.; Tully, J.; Chan, T.C.; Castillo, E.M.; Savage, S.; Maysent, P.; Hemmen, T.M.; Clay, B.J.; Longhurst, C.A. Ransomware attack associated with disruptions at adjacent emergency departments in the US. JAMA network open 2023, 6, e2312270–e2312270. [Google Scholar] [CrossRef] [PubMed]

- Jacob, S. The Rapid Increase of Ransomware Attacks Over the 21st Century and Mitigation Strategies to Prevent Them from Arising 2023.

- Matthijsse, S.R.; van‘t Hoff-de Goede, M.; Leukfeldt, E.R.; others. Your files have been encrypted: a crime script analysis of ransomware attacks. Trends in Organized Crime 2023, pp. 1–27. [CrossRef]

- Abiodun, O.I.; Alawida, M.; Omolara, A.E.; Alabdulatif, A. Data provenance for cloud forensic investigations, security, challenges, solutions and future perspectives: A survey. Journal of King Saud University-Computer and Information Sciences 2022. [Google Scholar] [CrossRef]

- Choi, K.S.; Lee, C.S. In the Name of Dark Web Justice: A Crime Script Analysis of Hacking Services and the Underground Justice System. Journal of Contemporary Criminal Justice 2023, 39, 201–221. [Google Scholar] [CrossRef]

| Gender | Frequency | Percentages |

| Male | 151 | 60% |

| Female | 102 | 40% |

| Total | 253 | 100 |

| Participants age group | Frequency | Percentages |

| 18-30 | 21 | 8% |

| 31-40 | 43 | 17% |

| 41-50 | 67 | 26% |

| 51-55 | 90 | 36% |

| 56 and above | 32 | 13% |

| Total | 253 | 100 |

| Participants educational qualification | Frequency | Percentages |

| Undergraduate | 34 | 9% |

| University Graduate | 117 | 46% |

| Master’s degree | 80 | 32% |

| Doctorate degree | 22 | 13% |

| Total | 253 | 100 |

| Participants’ Area of Expertise | Number of experts and non-experts in computer | Percentages |

| Information and communication technology (ICT) professionals | 45 | 18% |

| AI and cybersecurity experts | 100 | 39% |

| Knowledge management professionals | 35 | 14% |

| Software engineers | 50 | 20% |

| Others | 23 | 9% |

| Total | 253 | 100 |

| S/n | Questions | Agreed | Neutral | Disagreed |

| 1 | ChatGPT is accessible via OpenAI’ s API. | 74% | 8% | 18% |

| 2 | ChatGPT can be used to address challenges in education and other sector. | 77% | 3% | 23% |

| 3 | ChatGPT has many applications including teaching, learning, and researching. | 72% | 8% | 20% |

| 4 | ChatGPT can be used only by teachers and students. | 16% | 5% | 79% |

| 5 | ChatGPT has the capability to compose a variety of writing forms, including emails, college essays, and countless others. | 87% | 3% | 10% |

| 6 | ChatGPT is capable of automatically composing comments for regulatory processes. | 75% | 6% | 19% |

| 7 | It might compose letters to the editor that would be published in local newspapers. | 77% | 5% | 18% |

| 8 | It may remark on millions of news stories, blog pieces, and social media posts per day. | 81% | 19% | 19% |

| 9 | Capable of writing and debugging computer programs. | 85% | 5% | 10% |

| 10 | Ability to compose poetry and song lyrics. Agreed or disagreed? | 76% | 4% | 20% |

| 11 | Capability to write music, television scripts, fables, and student essays. | 83% | 7% | 10% |

| 12 | Ability to compose poetry and song lyrics. | 81% | 9% | 10% |

| 13 | Capable of remembering prior questions asked throughout the same chat. | 85% | 3% | 12% |

| 14 | Capability to prevent offensive content from being displayed. | 86% | 4% | 10% |

| 15 | Capable of executing moderation API and able to identify racist or sexist cues. | 83% | 3% | 14% |

| 16 | Capable of identifying internet hate speech. | 74% | 5% | 21% |

| 17 | Capability to construct Python-based malware that looks for popular files such as Microsoft Office documents, PDFs, and photos, then copies and uploads them to a file transfer protocol server. | 79% | 2% | 19% |

| 18 | Ability to construct Java-based malware, which may be used with PowerShell to covertly download and execute more malware on compromised PCs. | 82% | 2% | 16% |

| 19 | ChatGPT’s knowledge of events that transpired after 2021 is limited. | 72% | 4% | 24% |

| 20 | ChatGPT has cybersecurity challenges, that must be resolved to counter cybercriminals’ exploitation. | 92% | 1% | 7% |

| 21 | ChatGPT may generate plausible-appearing research studies for highly ranked publications even in its most basic form. | 76% | 2% | 22% |

| 22 | Assist in software programming when fed with questions on the problem. | 81% | 1% | 18% |

| 23 | Capability of performing coding assistance and writing job applications. | 85% | 5% | 10% |

| 24 | Highly applicable in advertising. That is, ChatGPT has a potential use case in advertising which is in the generation of social media content. | 76% | 4% | 20% |

| 25 | ChatGPT can be used to generate captions for video ads, which can be a powerful tool for engaging with viewers. | 83% | 2% | 15% |

| 26 | ChatGPT can also be used in personalizing customer interactions through chatbots and voice assistants. | 81% | 4% | 15% |

| 27 | It can be used to create more natural and personalized interactions with customers through chatbots or virtual assistants. Agreed or disagreed? | 85% | 5% | 10% |

| 28 | ChatGPT can affect the advertising and marketing industries in a number of ways. | 76% | 4% | 20% |

| 29 | Capability to respond to exam questions (but sometimes, depending on the test, at a level above the average human test-taker). | 87% | 3% | 10% |

| 30 | Capacity to replace humans in democratic processes, not via voting but by lobbying. | 78% | 2% | 20% |

| S/n | Questions | Agreed | Neutral | Disagreed |

| 1 | ChatGPT is pre-trained on a huge corpus of conversational text, allowing it to comprehend the context of a discussion and provide responses that are more natural and coherent. | 77% | 5% | 18% |

| 2 | ChatGPT can be used only by teachers and students. | 72% | 8% | 20% |

| 3 | ChatGPT can handle batch input and output which allows it to handle numerous prompts and deliver several responses simultaneously. | 76% | 3% | 21% |

| 4 | ChatGPT can be used to address challenges in education and other sector. | 85% | 5% | 10% |

| 5 | ChatGPT has the ability to manage enormous datasets and sophisticated computations. | 73% | 7% | 21% |

| 6 | ChatGPT is capable of generating human-like language and replies fluidly to input. | 77% | 5% | 18% |

| 7 | ChatGPT has many applications including teaching, learning, and researching. | 81% | 19% | 19% |

| 8 | It can track the dialogue and effortlessly handle context switching and topic shifts. | 85% | 3% | 12% |

| 9 | It can handle both short and lengthy forms of writing. | 74% | 2% | 24% |

| 10 | It can grasp various forms of expression including sarcasm, and irony. | 81% | 3 9% | 10% |

| 11 | The ChatGPT model could translate text from one language to another. | 83% | 3% | 14% |

| 12 | ChatGPT can be fine-tuned for specific conversational activities such as language comprehension. | 85% | 3% | 12% |

| 13 | ChatGPT can be fine-tuned for text summarization, making it more successful at handling these tasks. | 86% | 4% | 10% |

| 14 | ChatGPT can be fine-tuned for specific conversational activities such as text production. | 83% | 3% | 14% |

| 15 | ChatGPT are a great asset to mankind because they have a lot of benefits. | 74% | 5% | 21% |

| 16 | Although ChatGPT has many benefits, they have some barriers that limit its uses. | 79% | 4% | 17% |

| 17 | One of the limitations of ChatGPT is the possibility of over-optimization due to its reliance on human control. | 82% | 2% | 16% |

| 18 | ChatGPT’s knowledge of events that transpired after 2021 is limited. | 72% | 3% | 25% |

| 19 | ChatGPT has cybersecurity challenges that must be resolved to counter cybercriminals’ exploitation. | 82% | 5% | 13% |

| S/n | Questions | Agreed | Neutral | Disagreed |

| 1 | ChatGPT has issues such as the possibility of over-optimization due to its reliance on human control. | 74% | 5% | 21% |

| 2 | It is also constrained by a lack of knowledge regarding occurrences occurring after 2021. | 79% | 2% | 19% |

| 3 | In other instances, it has also identified algorithmic biases in answer generation. | 82% | 2% | 16% |

| 4 | ChatGPT is incapable of comprehending the intricacy of human language and relies solely on statistical patterns, despite its ability to produce results that appear genuine. | 72% | 4% | 24% |

| 5 | ChatGPT has a number of deficiencies, including OpenAI admitting, however, that ChatGPT "sometimes generates plausible-sounding but inaccurate or illogical responses”. | 92% | 1% | 7% |

| 6 | The human raters are not subject matter experts; therefore, they tend to select language that appears persuasive. | 76% | 2% | 22% |

| 7 | They could recognize many hallucinatory symptoms, but not all. Errors of fact that slip through are difficult to detect. | 81% | 1% | 18% |

| 8 | Errors of fact that slip through are difficult to detect. In accordance with Goodhart’s rule, the reward model of ChatGPT, which is based on human oversight, can be over-optimized and hamper performance. | 85% | 5% | 10% |

| 9 | ChatGPT’s knowledge of events that transpired after 2021 is limited. | 75% | 6% | 19% |

| 10 | According to the BBC, ChatGPT will prohibit "expressing political viewpoints or engaging in political activities" as of December 2022. | 77% | 5% | 18% |

| 11 | When ChatGPT reacts to cues containing descriptors of persons, algorithmic bias in the training data may become apparent. | 72% | 8% | 20% |

| 12 | In one instance, ChatGPT produced a rap implying that women and scientists of color are inferior to white and male scientists, which is discriminatory. | 76% | 3% | 21% |

| S/n | Questions | Agreed | Neutral | Disagreed |

| 1 | The ChatGPT model, like any other AI system, is vulnerable to cyber security issues. | 95% | 2% | 3% |

| 2 | ChatGPT has issues such as the possibility of being used by cybercriminals due to its reliance on human control. | 84% | 5% | 11% |

| 3 | ChatGPT may be hacked or compromised in some way, potentially resulting in the theft or misuse of confidential material. | 89% | 2% | 9% |

| 4 | ChatGPT poses a number of security risks. It could, for instance, be used to generate false or deceptive messages that could be used to deceive people or organizations. | 92% | 2% | 6% |

| 5 | One of the main concerns about using the ChatGPT model is the potential for malicious use, such as creating fake news, impersonating individuals, or spreading disinformation. | 82% | 4% | 14% |

| 6 | The model may have access to sensitive data that, if it falls into the wrong hands, could be misused. | 94% | 1% | 5% |

| 7 | The ChatGPT model’s large size also poses security risks in terms of data protection and privacy. | 86% | 2% | 12% |

| 8 | Attackers can use ChatGPT to create convincing phishing emails that are nearly impossible to distinguish from those sent by a real person. | 81% | 1% | 18% |

| 9 | Cyberattackers are already planning on how to use ChatGPT for malware development, social engineering, disinformation, phishing, malvertising, and money-making schemes. | 85% | 5% | 10% |

| 10 | As interest in ChatGPT and other AI tools grows, so will cybercriminals and fraudsters looking to use the technology to carry out low-cost, low-effort destructive campaigns. | 75% | 6% | 19% |

| 11 | It creates a risk of data breaches, as well as the potential for unauthorized access to sensitive information. | 77% | 5% | 18% |

| 12 | Cyberattacks can have serious consequences for individuals, organizations, and even entire countries, so developing methods to detect and prevent such attacks is critical. | 84% | 6% | 10% |

| 13 | Companies need to be mindful of the numerous risks associated with ChatGPT and take precautions to mitigate them. | 87% | 5% | 8% |

| 14 | Companies should invest in robust cybersecurity measures and keep up with security trends. | 86% | 3% | 11% |

| 15 | Organizations can reap the many benefits of ChatGPT while defending themselves against those who abuse the tool by implementing appropriate safeguards. | 96% | 1% | 3% |

| 16 | It is important to implement robust security measures to protect the model and the data it was trained on, as well as to prevent unauthorized access to the model. | 89% | 4% | 7% |

| 17 | Spam detection and anti-phishing tools can be used to scan the text of an email for common indicators of fraudulent behavior regularly. | 93% | 2% | 5% |

| 18 | In the new world of ChatGPT, penetration testing can help to safeguard data and ensure its confidentiality, integrity, and availability. | 81% | 5% | 14% |

| 19 | Businesses must also improve their data resilience strategy and implement a solid data protection plan. | 88% | 3% | 9% |

| 20 | A major focus for the future of ChatGPT should be the development of methods to detect and prevent malicious use of the model. | 87% | 5% | 8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).