1. Introduction

Weather and climate prediction have played an important role throughout human history. Weather forecasting is a critical tool that underpins many facets of human life and societal operations, from individual decision-making to large-scale industrial planning. At the individual level, it guides personal safety measures, from avoiding hazardous outdoor activities during inclement weather to taking health precautions in extreme temperatures. This decision-making extends into agriculture, where forecasts inform the timing of planting, harvesting, and irrigation, ultimately contributing to maximized crop yields and stable food supply chains [4]. Accurate forecasting also benefits the energy sector, where it helps manage demand fluctuations efficiently, allowing for optimized power generation and distribution. The same holds for the transportation industry, where the planning and scheduling of flights, train routes, and maritime activities hinge on weather conditions. Precise weather predictions are key to mitigating delays and enhancing safety protocols [3]. Beyond these sectors, weather forecasting plays an integral role in construction and infrastructure development. Adverse conditions can cause project delays and degrade quality, making accurate forecasts a cornerstone of effective project management. Moreover, the capacity to forecast extreme weather events such as hurricanes and typhoons is instrumental in disaster management, enabling early warnings and thereby mitigating loss of life and property [2].

Although climate prediction is often overlooked in the short term, it is closely tied to life on Earth. Global warming and the subsequent rise in sea levels constitute critical challenges with far-reaching implications for the future of our planet. Through sophisticated climate modelling and forecasting techniques, we stand to gain valuable insights into the potential ramifications of these phenomena, thereby enabling the development of targeted mitigation strategies. For instance, precise estimations of sea-level changes in future decades could inform rational urban planning and disaster prevention measures in coastal cities. On an extended temporal scale, climate change is poised to cause considerable shifts in the geographical distribution of numerous species, thereby jeopardizing biodiversity. State-of-the-art climate models integrate an array of variables (atmospheric conditions, oceanic currents, terrestrial ecosystems, and biospheric interactions) to provide a nuanced understanding of environmental transformations [7]. This integrative approach is indispensable for the formulation of effective global and regional policies aimed at preserving ecological diversity. Economic sectors such as agriculture, fisheries, and tourism are highly susceptible to climate change. Elevated temperatures may cause a decline in crop yields, while an increase in extreme weather events stands to impact tourism adversely. Long-term climate forecasts are instrumental in guiding governmental and business strategies to adapt to these changes. Furthermore, sustainable resource management, encompassing water, land, and forests, benefits significantly from long-term climate projections. Accurate predictive models can forecast potential water scarcity in specific regions, thereby allowing for the preemptive implementation of judicious water management policies. Climate change is also implicated in a range of public health crises, from the proliferation of infectious diseases to an increase in heatwave incidents. Comprehensive long-term climate models can equip public health agencies with the data necessary to allocate resources and devise effective response strategies.

Table 1 elucidates the diverse applications of weather forecasting across multiple sectors and time frames. In the short-term context, weather forecasts are instrumental for agricultural activities such as determining the optimal timing for sowing and harvesting crops, as well as formulating irrigation and fertilization plans. In the energy sector, short-term forecasts facilitate accurate predictions of output levels for wind and solar energy production. For transportation, which encompasses road, rail, aviation, and maritime industries, real-time weather information is vital for operational decisions affecting safety and efficiency. Similarly, construction projects rely on short-term forecasts for planning and ensuring safe operations. In the retail and sales domain, weather forecasts enable businesses to make timely inventory adjustments. For tourism and entertainment, particularly those involving outdoor activities and attractions, short-term forecasts provide essential guidance for day-to-day operations. Furthermore, short-term weather forecasts play a pivotal role in environment and disaster management by providing early warnings for floods, fires, and other natural calamities. In the medium-to-long-term scenario, weather forecasts have broader implications for strategic planning and risk assessment. In agriculture, these forecasts are used for long-term land management and planning. The insurance industry utilizes medium-to-long-term forecasts to prepare for prospective increases in specific types of natural disasters, such as floods and droughts. Real estate sectors also employ these forecasts for evaluating the long-term impact of climate-related factors like sea-level rise. Urban planning initiatives benefit from these forecasts for effective water resource management. For the tourism industry, medium-to-long-term weather forecasts are integral for long-term investments and for identifying regions that may become popular tourist destinations in the future. 
Additionally, in the realm of public health, long-term climate changes projected through these forecasts can inform strategies for controlling the spread of diseases. In summary, weather forecasts serve as a vital tool for both immediate and long-term decision-making across a diverse range of sectors.

Short-term weather prediction. Short-term weather forecasting primarily targets weather conditions that span from a few hours up to seven days, aiming to deliver highly accurate and actionable information that empowers individuals to make timely decisions like carrying an umbrella or postponing outdoor activities. These forecasts typically lose their reliability as they stretch further into the future. Essential elements of these forecasts include maximum and minimum temperatures, the likelihood and intensity of various forms of precipitation like rain, snow, or hail, wind speed and direction, levels of relative humidity or dew point temperature, and types of cloud cover such as sunny, cloudy, or overcast conditions [1]. Visibility distance in foggy or smoky conditions and warnings about extreme weather events like hurricanes or heavy rainfall are also often included. The methodologies for generating these forecasts comprise numerical simulations run on high-performance computers, the integration of observational data from multiple sources like satellites and ground-based stations, and statistical techniques that involve pattern recognition and probability calculations based on historical weather data. While generally more accurate than long-term forecasts, short-term predictions are not without their limitations, often influenced by the quality of the input data, the resolution of the numerical models, and the sensitivity to initial atmospheric conditions. These forecasts play a crucial role in various sectors including decision-making processes, transportation safety, and agriculture, despite the inherent complexities and uncertainties tied to predicting atmospheric behavior.

Medium-to-long term climate prediction. Medium-to-Long-Term Climate Forecasting (MLTF) concentrates on projecting climate conditions over periods extending from several months to multiple years, in contrast to short-term weather forecasts, which focus on immediate atmospheric conditions. The time frame of these climate forecasts can be divided into medium-term, which generally ranges from a single season up to a year, and long-term, which can span years to decades or even longer [5]. Unlike weather forecasts, which may provide information on imminent rainfall or snowfall, MLTF centers on the average states or trends of climate variables, such as average temperature and precipitation, ocean-atmosphere interactions like El Niño or La Niña conditions, and the likelihood of extreme weather events like droughts or floods, as well as anticipated hurricane activity [6]. The projection also encompasses broader climate trends, such as global warming or localized climatic shifts. These forecasts employ a variety of methods, including statistical models based on historical data and seasonal patterns, dynamical models built on physics-based mathematical equations, and integrated models that combine multiple data sources and methodologies. However, the accuracy of medium-to-long-term climate forecasting often falls short of short-term weather prediction because of the intricate, multi-scale, and multi-process interactions that constitute the climate system, as well as the lack of exhaustive long-term data. The forecasts' reliability can also be influenced by socio-economic variables, human activities, and shifts in policy. Despite these complexities, medium-to-long-term climate projections serve pivotal roles in areas such as resource management, agricultural planning, disaster mitigation, and energy policy formulation, making them not only a multi-faceted, multi-disciplinary challenge but also a crucial frontier in both climate science and applied research.

Survey Scope. In recent years, machine learning has emerged as a potent tool in meteorology, displaying strong capabilities in feature abstraction and trend prediction. Numerous studies have employed machine learning as the principal methodology for weather forecasting [9, 10, 11]. Our survey extends this understanding by including recent advances in the application of machine learning techniques, such as High-Resolution Neural Networks and 3D neural networks, which represent the state of the art in this multidisciplinary domain. This survey aims to serve as a comprehensive review of machine learning techniques applied to meteorology and climate prediction. Previous studies have substantiated the efficacy of machine learning methods in short-term weather forecasting [16]. However, there is a conspicuous lack of detailed research on medium-to-long-term climate predictions [17]. The primary objective of this survey is to offer a comprehensive analysis of nearly 20 diverse machine learning methods applied in meteorology and climate science. We categorize these methods by their temporal applicability: short-term weather forecasting and medium-to-long-term climate prediction. This dual focus situates our survey as a bridge between immediate weather forecasts and longer climatic trends, thereby filling existing research gaps, summarized as follows:

Limited Scope: Existing surveys predominantly focus on either short-term weather forecasting or medium-to-long-term climate predictions. There is a notable absence of comprehensive surveys that attempt to bridge these two time scales. In addition, current investigations tend to focus narrowly on specific methods, such as simple neural networks, thereby neglecting combinations of methods.

Lack of Model Details: Many existing studies offer only generalized viewpoints and lack a systematic analysis of the specific models employed in weather and climate prediction. This absence creates a barrier for researchers aiming to understand the intricacies and efficacy of individual methods.

Neglect of Recent Advances: Despite rapid developments in machine learning and computational techniques, existing surveys have not kept pace with these advancements. The paucity of information on cutting-edge technologies stymies the progression of research in this interdisciplinary field.

By addressing these key motivations, this survey aims to serve as a roadmap for future research endeavours in this rapidly evolving, interdisciplinary field.

Contributions of the Survey. The contributions of this paper are as follows.

Comprehensive Scope: Unlike research that restricts its inquiry to a single temporal scale, our survey provides a comprehensive analysis that combines short-term weather forecasting with medium- and long-term climate predictions. In total, 20 models were surveyed, from which a subset of eight was chosen for in-depth scrutiny. These models are regarded as the state of the art in the field, thereby serving as valuable references for researchers. For instance, the PanGu model exhibits a remarkable congruence with actual observational results, illustrating the caliber of models included in our analysis.

In-Depth Analysis: This study delves into the operational mechanisms of the eight focal models, distinguishing the differences in their approaches and summarizing the commonalities in their methods through comparison. This comparison helps readers gain a deeper understanding of the efficacy and applicability of each model and provides a reference for choosing the most appropriate model for a given scenario.

Identification of Contemporary Challenges and Future Work: The survey identifies pressing challenges currently facing the field, such as limited datasets covering chronological seasons and complex climate change effects, and suggests directions for future work, including simulated datasets and physics-based constraint models. These recommendations not only add a forward-looking dimension to our research but also act as a catalyst for further research and development in climate prediction.

Outline of the paper. This paper consists of eight sections.

Section 1 describes our motivation and innovations compared to other weather prediction surveys.

Section 2 introduces some weather-related background knowledge.

Section 3 broadly introduces relevant methods for weather prediction other than machine learning.

Section 4 highlights the milestones of forecasting models using machine learning and their categorization.

Sections 5 and 6 analyze representative methods at the short-term and the medium-to-long-term time scales, respectively.

Sections 7 and 8 summarize the challenges faced, present promising future work, and conclude the paper.

2. Background

In this section, the objective is to provide a thorough understanding of key meteorological principles, tailored to be accessible even to readers outside the meteorological domain. The section commences with an overview of Reanalysis Data, the cornerstone for data inputs in weather forecasting and climate projection models. Following this, the focus shifts to the vital aspect of model output validation. It is necessary to identify appropriate benchmarks and key performance indicators for assessing the model’s predictive accuracy. Without well-defined standards, the evaluation of a model’s effectiveness remains nebulous. Further, two essential concepts—bias correction and downscaling—are introduced. These become particularly relevant when discussing the role of machine learning in augmenting physical models. Finally, the text offers an in-depth explanation of predicting extreme events, clearly defining "extreme events" and differentiating them from routine occurrences.

Data source. Observed data undergo a series of rigorous processing steps (known as the reanalysis data generation process) before entering the predictive model. They are gathered from heterogeneous sources, such as ground-based networks like the Global Historical Climatology Network (GHCN), atmospheric instruments like Next-Generation Radar (NEXRAD), and satellite systems like the Geostationary Operational Environmental Satellites (GOES). Oceanic measurements are captured through the specialized ARGO float network, focusing on key parameters like temperature and salinity. These raw datasets are then subjected to quality control, spatial and temporal interpolation, and unit standardization.

Despite meticulous preprocessing, observational data exhibit challenges such as spatial-temporal heterogeneity, inherent measurement errors, and discrepancies with numerical models. To mitigate these issues, data assimilation techniques are employed. These techniques combine observations with model forecasts using mathematical and statistical algorithms like Kalman filtering, Three-Dimensional Variational Analysis (3D-Var), and Four-Dimensional Variational Analysis (4D-Var) [8].

Additionally, data assimilation can be used to improve the initial model conditions and correct systematic model biases. The scope of data assimilation extends beyond single meteorological models to complex Earth System Models that integrate dynamics from atmospheric, oceanic, and terrestrial subsystems. The assimilation step, in which the model state is updated, produces what is known as "reanalysis data". Popular reanalysis datasets include ERA5 from the European Centre for Medium-Range Weather Forecasts (ECMWF), NCEP/NCAR Reanalysis from the National Centers for Environmental Prediction and the National Center for Atmospheric Research, JRA-55 from the Japan Meteorological Agency, and MERRA-2 from NASA.
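To make the idea of combining a model forecast with an observation concrete, the following is a minimal sketch of a single scalar Kalman analysis step. It is an illustration only: the function name `kalman_analysis` and the numerical values are assumptions made for this example, and operational systems work with high-dimensional states and covariance matrices rather than single numbers.

```python
def kalman_analysis(background, background_var, observation, observation_var):
    """One scalar Kalman analysis step (illustrative, hypothetical helper).

    background      : model forecast of the quantity (the "first guess")
    background_var  : error variance assumed for the forecast
    observation     : measured value of the same quantity
    observation_var : error variance assumed for the measurement
    """
    # The gain weights the observation by the relative uncertainty of the forecast.
    gain = background_var / (background_var + observation_var)
    # The analysis moves the forecast toward the observation by that weight.
    analysis = background + gain * (observation - background)
    # The analysis is more certain than the forecast alone.
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var


# Example values (assumed): a 20.0 degree forecast with variance 4.0 and a
# 22.0 degree observation with variance 1.0.
analysis, analysis_var = kalman_analysis(20.0, 4.0, 22.0, 1.0)
print(analysis, analysis_var)
```

Because the observation is assumed to be more reliable than the forecast here, the analysis lands closer to the observation, and its variance is smaller than either input variance.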

Result evaluation. Result evaluation is a critical stage in the iterative process of predictive modeling. It involves comparing forecasted outcomes against observed data to gauge the model's reliability and accuracy. The temporal dimension is a critical factor in result evaluation. Short-term predictive models, like those used in weather forecasting, benefit from near-real-time feedback, which allows for frequent recalibration using data assimilation algorithms such as Ensemble Kalman Filters. Long-term models, such as climate projections based on General Circulation Models (GCMs), are constrained by the absence of an immediate validation period.

In weather forecasting, meteorologists employ a variety of numerical models like the Weather Research and Forecasting (WRF) model, which are evaluated against short-term observational data. Standard evaluation metrics include Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and skill scores. The high-frequency availability of data from sources like weather radars and satellites facilitates rapid iterations and refinements.

In contrast, climate models are scrutinized using different methodologies. Given their long-term nature, climate models are often validated using historical and paleoclimatic data. Statistical techniques like Empirical Orthogonal Functions (EOF) and Principal Component Analysis (PCA) are employed to identify and validate overarching climatic patterns. These models often have to account for high levels of uncertainty and are cross-validated against geological or even astronomical records, making immediate validation impractical. For weather forecasts, predictive accuracy within the scope of hours to days is paramount. Climate models, conversely, are evaluated on their ability to accurately reproduce decadal and centennial patterns.
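The metrics named above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function names and sample values are assumptions, and the skill score shown is the common MSE-based form that compares a forecast against a reference forecast (here, a constant climatological mean).

```python
import numpy as np


def mae(forecast, observed):
    """Mean Absolute Error between forecast and observed values."""
    return float(np.mean(np.abs(np.asarray(forecast) - np.asarray(observed))))


def rmse(forecast, observed):
    """Root Mean Square Error between forecast and observed values."""
    diff = np.asarray(forecast, dtype=float) - np.asarray(observed, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def mse_skill_score(forecast, observed, reference):
    """MSE-based skill score: 1 is perfect, 0 matches the reference, < 0 is worse."""
    f, o, r = (np.asarray(a, dtype=float) for a in (forecast, observed, reference))
    mse_forecast = np.mean((f - o) ** 2)
    mse_reference = np.mean((r - o) ** 2)
    return float(1.0 - mse_forecast / mse_reference)


# Assumed sample temperatures in degrees Celsius.
observed = np.array([12.0, 14.0, 15.0, 13.0])
forecast = np.array([11.0, 15.0, 15.0, 12.0])
climatology = np.full_like(observed, observed.mean())  # constant reference forecast

print(mae(forecast, observed))
print(rmse(forecast, observed))
print(mse_skill_score(forecast, observed, climatology))
```

A positive skill score indicates that the forecast improves on the reference; which reference is appropriate (climatology, persistence, or an operational model) depends on the study.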

Bias correction. In the context of meteorology, climate science, machine learning, and statistical modeling, bias correction (or bias adjustment) refers to a set of techniques used to correct systematic errors (biases) in model simulations or predictions. These biases may arise due to various factors such as model limitations, uncertainties in parameterization, or discrepancies between model assumptions and real-world data. Bias Correction (Bias Adjustment) can be formally defined as the process of modifying the output of predictive models to align more closely with observed data. The primary objective is to minimize the difference between the model’s estimates and the observed values, thereby improving the model’s accuracy and reliability.

In more formal terms, let $M$ represent the model output and $O$ represent the observed data. The bias $B$ is defined as:

$$B = M - O$$

The aim of bias correction is to find a function $f$ such that:

$$f(M) \approx O$$

Various methods can be employed for bias correction, including simple linear adjustments, quantile mapping, and more complex machine-learning techniques. The choice of method often depends on the specific characteristics of the data and the overarching objectives of the study.
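Two of the approaches mentioned above, a simple additive adjustment and empirical quantile mapping, can be sketched as follows. This is a minimal example under stated assumptions: the function names are hypothetical, the data are synthetic (a gamma-distributed "observation" series and a model series that distorts it), and bias is taken as model output minus observation.

```python
import numpy as np


def mean_bias(model, observed):
    """Mean bias, taken here as model output minus observation."""
    return float(np.mean(np.asarray(model, dtype=float) - np.asarray(observed, dtype=float)))


def linear_correction(model, bias):
    """Simplest adjustment: subtract a constant bias from the model output."""
    return np.asarray(model, dtype=float) - bias


def quantile_mapping(model_hist, obs_hist, model_new, n_quantiles=101):
    """Empirical quantile mapping.

    Each new model value is located within the historical model distribution
    and replaced by the observed value at the same quantile.
    """
    probs = np.linspace(0.0, 1.0, n_quantiles)
    model_q = np.quantile(model_hist, probs)
    obs_q = np.quantile(obs_hist, probs)
    return np.interp(model_new, model_q, obs_q)


# Synthetic data (assumed): the "model" stretches and shifts the observations.
rng = np.random.default_rng(0)
obs_hist = rng.gamma(shape=2.0, scale=3.0, size=5000)
model_hist = 1.5 * obs_hist + 2.0

bias = mean_bias(model_hist, obs_hist)
shifted = linear_correction(model_hist, bias)
mapped = quantile_mapping(model_hist, obs_hist, model_hist)

print(bias, mean_bias(shifted, obs_hist), mean_bias(mapped, obs_hist))
```

The constant shift removes the mean bias but leaves the stretched spread untouched, whereas quantile mapping adjusts the whole distribution. In practice the mapping is fitted on a historical period and applied to a separate projection period.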

Down-scaling. Down-scaling in meteorology and climate science is a computational technique employed to bridge the gap between the spatial and temporal resolutions offered by General Circulation Models (GCMs) or Regional Climate Models (RCMs) and the scale at which specific applications, such as local weather predictions or hydrological assessments, operate. Given that GCMs and RCMs typically operate at a coarse resolution—spanning tens or hundreds of kilometres—downscaling aims to refine these projections to a more localized level, potentially down to single kilometres or less.
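As a point of reference, the simplest way to move a field from a coarse grid to a finer one is plain bilinear interpolation, sketched below. This is only a baseline under assumed names and values: it adds no new local information, which is precisely the gap that statistical, dynamical, and machine-learning downscaling methods aim to fill.

```python
import numpy as np


def bilinear_downscale(coarse, factor):
    """Interpolate a 2-D coarse field onto a grid `factor` times finer per axis.

    Coarse grid points are preserved; new points are filled by linear
    interpolation, first along longitude and then along latitude.
    """
    coarse = np.asarray(coarse, dtype=float)
    n_lat, n_lon = coarse.shape
    lat_coarse = np.arange(n_lat)
    lon_coarse = np.arange(n_lon)
    lat_fine = np.linspace(0, n_lat - 1, (n_lat - 1) * factor + 1)
    lon_fine = np.linspace(0, n_lon - 1, (n_lon - 1) * factor + 1)

    # Interpolate each coarse row along longitude.
    rows = np.array([np.interp(lon_fine, lon_coarse, row) for row in coarse])
    # Interpolate each resulting column along latitude.
    fine = np.array([np.interp(lat_fine, lat_coarse, col) for col in rows.T]).T
    return fine


# Assumed 2 x 2 coarse temperature field, refined by a factor of 2.
coarse = np.array([[10.0, 12.0],
                   [14.0, 16.0]])
fine = bilinear_downscale(coarse, 2)
print(fine)
```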

Extreme events. In meteorology, an "extreme event" refers to a rare occurrence within a statistical distribution of a particular weather variable. These events can be extreme high temperatures, heavy precipitation, severe storms, or high winds, among others. These phenomena are considered "extreme" due to their rarity and typically severe impact on ecosystems, infrastructure, and human life.
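One common way to operationalize "rare within a statistical distribution" is a percentile threshold. The sketch below flags values above the 95th percentile of a series; the function name, the choice of the 95th percentile, and the sample data are assumptions for illustration, and other definitions (fixed physical thresholds, return periods) are also used in practice.

```python
import numpy as np


def extreme_mask(series, percentile=95.0):
    """Flag values above a percentile threshold of the series itself.

    Returns a boolean mask and the threshold value.
    """
    series = np.asarray(series, dtype=float)
    threshold = np.percentile(series, percentile)
    return series > threshold, float(threshold)


# Assumed toy record: 100 daily maximum temperatures with values 1 to 100.
daily_max_temp = np.arange(1.0, 101.0)
mask, threshold = extreme_mask(daily_max_temp, 95.0)
print(threshold, int(mask.sum()))
```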

Symbol definition. Since many formulas are involved in weather and climate prediction methods, we have defined a table that summarizes all the common symbols and their definition.

Table 2. Commonly used symbols and definitions.

| Symbol | Definition |
| --- | --- |
| $\mathbf{v}$ | velocity vector |
| $t$ | time |
| $\rho$ | fluid density |
| $p$ | pressure |
| $\mu$ | dynamic viscosity |
| $\mathbf{g}$ | gravitational acceleration vector |
| $I_\nu$ | intensity of radiation at frequency $\nu$ |
| $s$ | distance along the ray path |
| $\alpha_\nu$ | absorption coefficient at frequency $\nu$ |
| $j_\nu$ | emission coefficient at frequency $\nu$ |
| $\kappa_\nu$ | absorption coefficient at frequency $\nu$ |
| $\rho$ | density of the medium |
| $B_\nu$ | Planck function at frequency $\nu$ |
| $\mathbb{E}_{q(z \mid x)}$ | expectation under the variational distribution $q(z \mid x)$ |
| $z$ | latent variable |
| $x$ | observed data |
| $p(x, z)$ | joint distribution of observed and latent variables |
| $q(z \mid x)$ | variational distribution |
| $\phi$ | variational parameters |
| $G, F$ | Generators for mappings from simulated to real domain and vice versa. |
| $D_X, D_Y$ | Discriminators for real and simulated domains. |
| $\mathcal{L}_{cyc}$, $\mathcal{L}_{GAN}$ | Cycle consistency loss and Generative Adversarial Network loss. |
| $X, Y$ | Data distributions for simulated and real domains. |
| $\lambda$ | Weighting factor for the cycle consistency loss. |

In standard meteorological models, precipitation is usually represented as a three-dimensional array indexed by latitude, longitude, and elevation. Each cell in this array contains a numerical value that represents the expected precipitation for that particular location and elevation during a given time window. This data structure allows for straightforward visualization and analysis, such as contour maps or time series plots. Unlike standard precipitation forecasts, which focus primarily on the water content of the atmosphere, extreme events may require tracking multiple variables simultaneously. For example, hurricane modeling may include variables such as wind speed, atmospheric pressure, and sea surface temperature. Given the higher uncertainty associated with extreme events, the output may not be a single deterministic forecast but rather a probabilistic one. An ensemble approach can be used to generate multiple model runs that capture a range of possible outcomes. Both types of predictions are typically evaluated using statistical metrics; for extreme events, however, more specialized measures such as event detection rates, false alarm rates, or skill scores associated with probabilistic predictions can be used.
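The event-based measures and the probabilistic output described above can be illustrated with a short sketch. The names and sample values are assumptions. Note that the code computes the false alarm ratio (false alarms divided by all forecast events), which is one common reading of "false alarm rate"; the probability of false detection is a different quantity that also needs correct negatives.

```python
import numpy as np


def contingency_scores(forecast_event, observed_event):
    """Event-based verification from yes/no forecasts and observations.

    pod : probability of detection (event detection rate)
    far : false alarm ratio
    csi : critical success index
    """
    f = np.asarray(forecast_event, dtype=bool)
    o = np.asarray(observed_event, dtype=bool)
    hits = int(np.sum(f & o))
    misses = int(np.sum(~f & o))
    false_alarms = int(np.sum(f & ~o))
    nan = float("nan")
    pod = hits / (hits + misses) if hits + misses else nan
    far = false_alarms / (hits + false_alarms) if hits + false_alarms else nan
    total = hits + misses + false_alarms
    csi = hits / total if total else nan
    return {"pod": pod, "far": far, "csi": csi}


def ensemble_probability(members, threshold):
    """Fraction of ensemble members exceeding a threshold at each grid point."""
    members = np.asarray(members, dtype=float)
    return np.mean(members > threshold, axis=0)


# Assumed yes/no series for five forecast periods.
scores = contingency_scores([1, 1, 0, 0, 1], [1, 0, 1, 0, 1])

# Assumed 4-member ensemble of precipitation (mm) at three grid points.
members = [[10.0, 60.0, 55.0],
           [20.0, 70.0, 45.0],
           [5.0, 80.0, 65.0],
           [15.0, 40.0, 52.0]]
prob_heavy_rain = ensemble_probability(members, threshold=50.0)
print(scores, prob_heavy_rain)
```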

3. Related work

This study principally centers on the use of machine learning techniques in climate prediction. However, to provide a comprehensive perspective, we also describe traditional forecasting methodologies (statistical and physical methods) in this section. Historically, predictive models in climate science have evolved through three distinct phases. Initially, statistical methods were widely deployed; however, their limited accuracy led to their gradual replacement by physical models. While statistical methods are now rarely applied on their own, they are frequently combined with other techniques to improve predictive accuracy. Subsequently, physical models became the prevailing paradigm in climate prediction. Given their current predominance, they serve as the natural benchmarks against which we evaluate the performance of emerging machine learning approaches. Finally, we focus on machine learning methods and explore their potential to mitigate the limitations of their historical predecessors.

3.1. Statistical method

Statistical or empirical forecasting methods have a rich history in meteorology, serving as the initial approach to weather prediction before the advent of computational models. Statistical prediction methodologies serve as the linchpin for data-driven approaches in meteorological forecasting, focusing on both short-term weather patterns and long-term climatic changes. These methods typically harness powerful statistical algorithms, among which Geographically Weighted Regression (GWR) and Spatio-Temporal Kriging (ST-Kriging) stand out as particularly effective [19, 20].

GWR is instrumental in adjusting for spatial heterogeneity, allowing meteorological variables to exhibit different relationships depending on their geographical context. ST-Kriging extends this spatial consideration to include the temporal domain, thereby capturing variations in weather and climate that are both location-specific and time-sensitive. Such spatio-temporal modeling is especially pertinent in a rapidly changing environment, where traditional stationary models often fail to capture the dynamism inherent in meteorological systems.

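Forecasting with inter-annual increments is a statistical method that has shown improved results. The inter-annual increment of a variable such as precipitation is calculated as:

$$\Delta Y_t = Y_t - Y_{t-1}$$

where $Y_t$ is the value of the variable in year $t$ and $Y_{t-1}$ is its value in the preceding year.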

Through meticulous analysis of variables correlating with the inter-annual growth rate of the predictive variable, five key predictive factors have been identified. A multivariate linear regression model was developed, employing these selected key predictive factors to estimate the inter-annual increment for future time units. The estimated inter-annual increment is subsequently aggregated with the actual variable value from the preceding year to generate a precise prediction of the total quantity for the current time frame.
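The procedure described above (difference the series, regress the increment on predictive factors, then add the predicted increment to the previous year's value) can be sketched as follows. Everything here is illustrative: the function names are hypothetical, the data are synthetic, and two predictive factors are used instead of five purely to keep the example short.

```python
import numpy as np


def interannual_increment(series):
    """Year-over-year difference: value in year t minus value in year t-1."""
    series = np.asarray(series, dtype=float)
    return series[1:] - series[:-1]


def fit_increment_model(factor_increments, target_increments):
    """Ordinary least squares fit; returns [intercept, coefficient_1, ...]."""
    design = np.column_stack([np.ones(len(factor_increments)), factor_increments])
    coef, *_ = np.linalg.lstsq(design, target_increments, rcond=None)
    return coef


def predict_total(coef, new_factor_increments, previous_total):
    """Predicted increment added to the observed value of the preceding year."""
    increment = coef[0] + np.dot(coef[1:], new_factor_increments)
    return previous_total + increment


# Synthetic training data (assumed): 20 years, 2 predictive factors.
rng = np.random.default_rng(1)
factor_increments = rng.normal(size=(20, 2))
true_coef = np.array([0.5, 2.0, -1.0])
target_increments = true_coef[0] + factor_increments @ true_coef[1:]

coef = fit_increment_model(factor_increments, target_increments)
prediction = predict_total(coef, np.array([1.0, 0.5]), previous_total=100.0)
print(interannual_increment([3.0, 5.0, 4.0]), coef, prediction)
```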

However, these statistical models operate on a critical assumption cited in the literature [21, 22], which posits that the governing laws influencing past meteorological events are consistent and thus applicable to future events as well. While this assumption generally holds for many meteorological phenomena, it confronts limitations when dealing with intrinsically chaotic systems. The Butterfly Effect serves as a prime example of such chaotic behavior, where minuscule perturbations in initial conditions can yield dramatically divergent outcomes. This implies that the reliability of statistical models could be compromised when predicting phenomena susceptible to such chaotic influences.
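The sensitivity to initial conditions described above is often demonstrated with the Lorenz-63 system, a three-variable toy model of convection. The sketch below is an illustration under stated assumptions: the classical parameter values, a fixed-step fourth-order Runge-Kutta integrator, and a perturbation of one part in a hundred million are all choices made for this example.

```python
import numpy as np


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz-63 equations (classical parameters)."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def integrate(state, dt=0.01, steps=4000):
    """Fixed-step fourth-order Runge-Kutta integration."""
    state = np.asarray(state, dtype=float)
    for _ in range(steps):
        k1 = lorenz_rhs(state)
        k2 = lorenz_rhs(state + 0.5 * dt * k1)
        k3 = lorenz_rhs(state + 0.5 * dt * k2)
        k4 = lorenz_rhs(state + dt * k3)
        state = state + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return state


# Two runs whose initial states differ by 1e-8 in a single component.
reference = integrate([1.0, 1.0, 1.0])
perturbed = integrate([1.0 + 1e-8, 1.0, 1.0])
separation = float(np.linalg.norm(reference - perturbed))
print(separation)
```

After 40 model time units the two trajectories are expected to differ by many orders of magnitude more than the initial perturbation, which is why a relationship fitted to past states of such a system may not carry over to future states.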

3.2. Physical Models

Physical models were the predominant method for meteorological forecasting before the advent of Artificial Intelligence (AI) and generally produce more accurate results than statistical methods. Physical models are predicated upon a foundational set of physical principles, including but not limited to Newton's laws of motion, the laws of conservation of energy and mass, and the principles of thermodynamics. These governing equations are commonly expressed in mathematical form, with the Navier-Stokes equations serving as a quintessential example for describing fluid dynamics. At the core of these models lies the objective of simulating real-world phenomena in a computational setting with high fidelity. To solve these intricate equations, high-performance computing platforms are typically employed, complemented by specialized numerical methods and techniques such as Computational Fluid Dynamics (CFD) and Finite Element Analysis (FEA).
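For reference, one common form of the Navier-Stokes momentum equation is shown here, under the simplifying assumptions of an incompressible fluid with constant viscosity. Here $\mathbf{v}$ is the velocity vector, $t$ is time, $\rho$ is the fluid density, $p$ is the pressure, $\mu$ is the dynamic viscosity, and $\mathbf{g}$ is the gravitational acceleration vector. Atmospheric models typically use more elaborate formulations that include, for example, rotation and compressibility.

$$\rho \left( \frac{\partial \mathbf{v}}{\partial t} + (\mathbf{v} \cdot \nabla)\, \mathbf{v} \right) = -\nabla p + \mu \nabla^{2} \mathbf{v} + \rho\, \mathbf{g}$$

The left-hand side is the mass times acceleration of a fluid parcel per unit volume, and the right-hand side collects the pressure-gradient, viscous, and gravitational forces acting on it.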

In the context of atmospheric science, these physical models are especially pivotal for Numerical Weather Prediction (NWP) and climate modeling. NWP primarily focuses on short-to-medium-term weather forecasting, striving for highly accurate meteorological predictions within a span of days or weeks. In contrast, climate models concentrate on long-term changes and predictions, which can span months, years, or even longer time scales. Owing to their rigorous construction based on physical laws, physical models offer a high degree of accuracy and reliability, providing researchers with valuable insights into the underlying mechanisms of weather and climate variations.

As noted above, statistical methods can analyze past weather data to make predictions, but they often fail to accurately predict future weather trends [23], while physics-based models, despite being computationally intensive [24], help us understand atmospheric, oceanic, and terrestrial processes in detail. Recently, machine learning methods have begun to be applied to meteorology [26], offering new ways to analyze and predict weather patterns and climate change [25]. Compared with physical models, they offer faster predictions, and compared with statistical methods, they provide more accurate results [28]. Additionally, machine learning can be employed for error correction and downscaling, further enhancing its applicability in weather and climate prediction.

In the critical fields of weather forecasting and climate prediction, achieving accuracy and efficiency is of paramount importance. Traditional methods, while foundational, inevitably present limitations, creating a compelling need for innovative approaches. Machine learning has emerged as a promising solution, demonstrating significant potential in enhancing prediction outcomes.

4. Taxonomy of climate prediction applications

In this section, we primarily explore the historical trajectory of machine learning applications within the field of meteorology. We categorize the surveyed methods according to distinct criteria, facilitating a more lucid understanding for the reader.

4.1. Climate prediction milestones based on machine learning

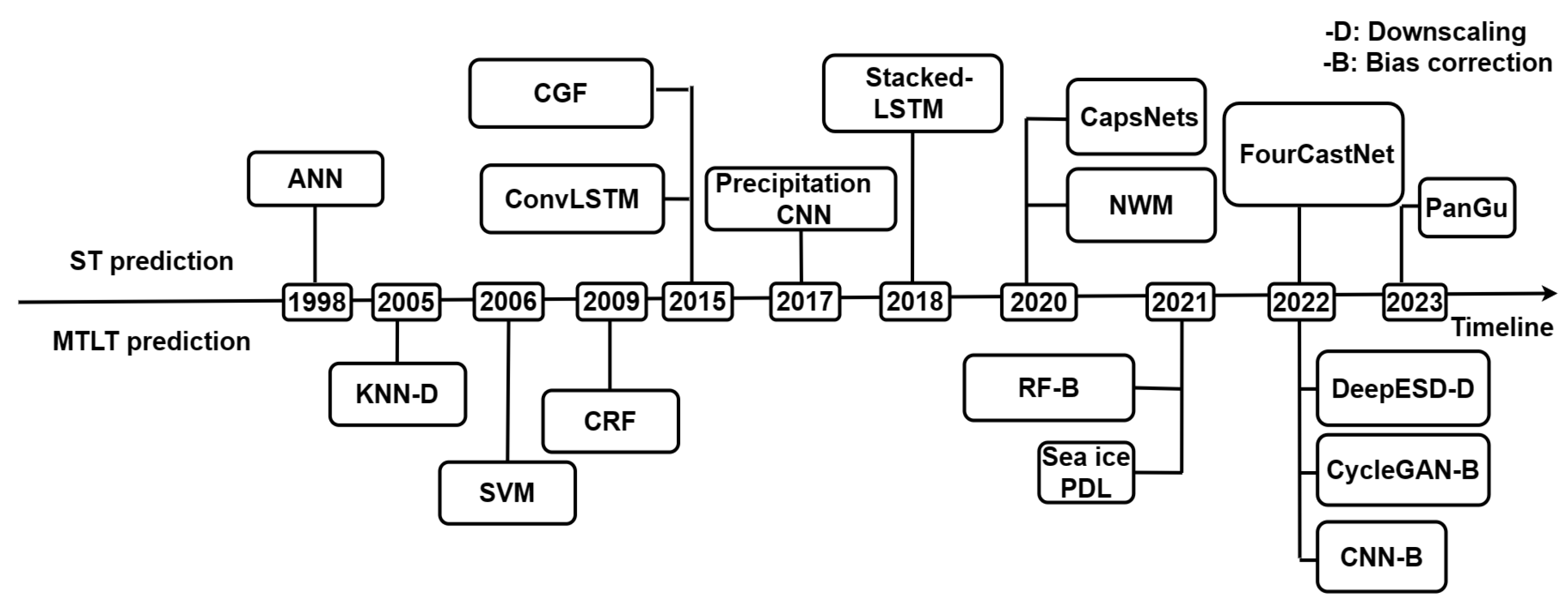

In this subsection, we survey almost 20 representative and widely used machine learning methods for weather and climate prediction. They are arranged as a timeline in Figure 1. Machine learning applications in climate and weather prediction have undergone significant transformations since their inception.

Figure 1. Milestones in the application of machine learning to climate prediction.

Climate prediction methods before 2010. The earliest model in this context is the Precipitation Neural Network prediction model published in 1998. This model serves as an archetype of Basic DNN Models, leveraging Artificial Neural Networks to offer short-term forecasts specifically for precipitation in the Middle Atlantic Region. Advancing to the mid-2000s, the realm of medium-to-long-term predictions saw the introduction of ML-Enhanced Non-Deep-Learning Models, exemplified by KNN-Downscaling in 2005 and SVM-Downscaling in 2006. These models employed machine learning techniques like K-Nearest Neighbors and Support Vector Machines, targeting localized precipitation forecasts in the United States and India, respectively. In 2009, the field welcomed another medium-to-long-term model, CRF-Downscaling, which used Conditional Random Fields to predict precipitation in the Mahanadi Basin.

Climate prediction methods from 2010 to 2019. During the period from 2010 to 2019, the field of weather prediction witnessed significant technological advancements and diversification in modeling approaches. Around 2015, a notable shift back to short-term predictions was observed with the introduction of Hybrid DNN Models, exemplified by ConvLSTM. This model integrated Long Short-Term Memory networks with Convolutional Neural Networks to provide precipitation forecasts specifically for Hong Kong. As the decade progressed, models became increasingly specialized. For instance, the 2017 Precipitation Convolution prediction model leveraged Convolutional Neural Networks to focus on localized precipitation forecasts in Guangdong, China. The following year saw the emergence of the Stacked-LSTM-Model, which utilized Long Short-Term Memory networks for temperature predictions in Amsterdam and Eindhoven.

Climate prediction methods from 2020. In 2020, the CapsNet model, a Specific Model, leveraged a novel architecture known as Capsule Networks to predict extreme weather events in North America. By 2021, the scope extended to models like RF-bias-correction and the Sea-ice prediction model, focusing on medium-to-long-term predictions. The former employed Random Forests for precipitation forecasts in Iran, while the latter utilized probabilistic deep learning techniques for forecasts in the Arctic region. Advancements in 2022 and 2023 incorporate more complex architectures. CycleGAN, a 2022 model, utilized Generative Adversarial Networks for global precipitation prediction. PanGu, a 2023 release, employed 3D Neural Networks for predicting extreme weather events globally. Another recent model, FourCastNet, leveraged the Adaptive Fourier Neural Operator (AFNO) to predict extreme global events. The year 2022 also witnessed the introduction of the DeepESD-Downscaling and CNN-Bias-correction models, both utilizing Convolutional Neural Networks, to predict local temperature scales and perform global bias correction, respectively.

4.2. Classification of climate prediction methods

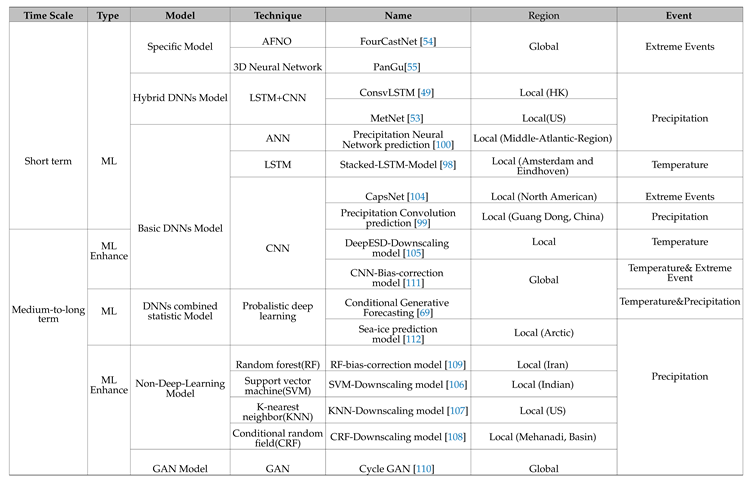

To provide a deeper level of understanding regarding the various weather prediction methods discussed, we have organized them into classifications in Table 3. These classifications are made according to multiple criteria that encompass Time Scale, Type, Model, Technique, Name, Region, and Event. This structured approach aims to offer readers an easy way to compare and contrast different methods, as well as to gain insights into the specific contexts where each method is most applicable.

Time Scale. Models in weather and climate prediction are initially divided based on their temporal range into ’Short-term’ and ’Medium-to-long-term’. Short-term models primarily aim to provide immediate or near-future weather forecasts. In contrast, medium-to-long-term models are geared toward long-range forecasting, often spanning weeks, months, or even years.

ML and ML-Enhanced Types. We categorize models into ML and ML-Enhanced types. In the ML type, algorithms are directly applied to climate data for pattern recognition or predictive tasks. These algorithms typically operate independently of traditional physical models, relying instead on data-driven insights garnered from extensive climate datasets. In contrast, the ML-Enhanced type integrates machine learning techniques into conventional physical models to optimize or enhance their performance. Fundamentally, these approaches still rely on physical models for prediction. However, machine learning algorithms serve as auxiliary tools for parameter tuning, feature engineering, or addressing specific limitations in the physical models, thereby improving their overall predictive accuracy and reliability.

Model. Within each time scale, models are further categorized by their type. These models include:

Specific Models: Unique or specialized neural network architectures developed for particular applications.

Hybrid DNN Models: These models use a combination of different neural network architectures, such as LSTM+CNN.

Basic DNN Models: These models employ foundational Deep Neural Network architectures like ANNs (Artificial Neural Networks), CNNs (Convolutional Neural Networks), and LSTMs (Long Short-Term Memory networks).

DNN combined with statistical model: These models merge deep learning’s feature extraction capabilities with statistical methods for handling uncertainty. This hybrid approach aims to leverage the strengths of both DNNs and traditional statistics, offering both robust data representation and enhanced interpretability. It is particularly useful in applications requiring both high accuracy and uncertainty quantification.

Non-Deep-Learning Models: These models incorporate machine learning techniques that do not rely on deep learning, such as Random Forests and Support Vector Machines.

Technique. This category specifies the underlying machine learning or deep learning technique used in a particular model, for example, CNN, LSTM, Random Forest, probabilistic deep learning, and GAN.

CNN. A specific type of ANN is the Convolutional Neural Network (CNN), designed to automatically and adaptively learn spatial hierarchies from data [

34]. CNNs comprise three main types of layers: convolutional, pooling, and fully connected [

41]. The convolutional layer applies various filters to the input data to create feature maps, identifying spatial hierarchies and patterns. Pooling layers reduce dimensionality, summarizing features in the previous layer [

36]. Fully connected layers then perform classification based on the high-level features identified [

31]. CNNs are particularly relevant in meteorology for tasks like satellite image analysis, with their ability to recognize and extract spatial patterns [

37]. Their unique structure allows them to capture local dependencies in the data, making them robust against shifts and distortions [

38].
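The convolution and pooling stages described above can be illustrated with a minimal sketch in plain Python. The 4x4 input field and the 2x2 gradient filter are made-up values chosen for illustration, and `conv2d_valid` and `max_pool2d` are hypothetical helper names, not functions from any of the surveyed models.

```python
# Minimal sketch of one convolution + pooling stage (pure Python, no framework).
# The input field and the filter are illustrative values, not real data.

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = len(feature_map) // size
    w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(w)
        ]
        for i in range(h)
    ]

# A field with a sharp left-to-right transition (e.g. a front in a gridded variable).
field = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Horizontal-gradient filter: responds where values increase from left to right.
edge_filter = [[-1, 1], [-1, 1]]

feature_map = conv2d_valid(field, edge_filter)  # strong response at the transition
pooled = max_pool2d(feature_map, size=2)        # summarizes the response, smaller grid
print(feature_map, pooled)
```

The feature map is non-zero only in the column where the transition occurs, which is the sense in which a convolutional filter detects a local spatial pattern; pooling then keeps that response while reducing dimensionality.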

LSTM. Long Short-Term Memory (LSTM) units are a specialized form of recurrent neural network architecture [

49]. Purposefully designed to mitigate the vanishing gradient problem inherent in traditional RNNs, LSTM units manage the information flow through a series of gates, namely the input, forget, and output gates. These gates govern the retention, forgetting, and output of information, allowing LSTMs to effectively capture long-range dependencies and temporal dynamics in sequential data [

49]. In the context of meteorological forecasting, the utilization of LSTM contributes to a nuanced understanding of weather patterns, as it retains relevant historical information and discards irrelevant details over various time scales [

49]. The pioneering design of LSTMs and their ability to deal with nonlinear time dependencies have led to their outstanding robustness, adaptability, and efficiency, making them an essential part of modern predictive models [

49].
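To make the gating mechanism concrete, the following is a minimal sketch of a single scalar LSTM step. The function `lstm_step`, the parameter layout, and all numeric weights are assumptions chosen for illustration; real models use vector states and learned weight matrices.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell.

    `params` maps a gate name to (w_x, w_h, b); this layout is an
    illustrative assumption, not a library convention.
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much old cell state to keep
    i = sigmoid(pre_activation("input"))        # how much new information to write
    o = sigmoid(pre_activation("output"))       # how much of the cell state to expose
    g = math.tanh(pre_activation("candidate"))  # proposed new content
    c = f * c_prev + i * g                      # updated cell state
    h = o * math.tanh(c)                        # updated hidden state
    return h, c

# Hand-picked biases: forget gate fully open, input gate closed -> memory is retained.
retain = {"forget": (0.0, 0.0, 20.0), "input": (0.0, 0.0, -20.0),
          "output": (0.0, 0.0, 20.0), "candidate": (1.0, 0.0, 0.0)}
# Forget gate closed, input gate open -> memory is overwritten by the new input.
overwrite = {"forget": (0.0, 0.0, -20.0), "input": (0.0, 0.0, 20.0),
             "output": (0.0, 0.0, 20.0), "candidate": (1.0, 0.0, 0.0)}

_, c_retained = lstm_step(0.5, 0.0, 0.7, retain)
_, c_overwritten = lstm_step(0.5, 0.0, 0.7, overwrite)
print(c_retained, c_overwritten)
```

With the forget gate saturated open the previous cell state passes through almost unchanged, which is the additive path that lets gradients survive over long sequences.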

Random forest. Random Forest (RF) is an ensemble machine learning algorithm, built from many decision trees, that is used for classification and regression tasks. In weather forecasting and climate modeling it is often applied to bias correction: the Random Forest is trained to identify and correct systematic errors or biases in the predictions made by a primary forecasting model.

Probabilistic deep learning. Probabilistic deep learning models in weather forecasting aim to provide not just point estimates of meteorological variables but also a measure of uncertainty associated with the predictions. By leveraging complex neural networks, these models capture intricate relationships between various features like temperature, humidity, and wind speed. The probabilistic aspect helps in quantifying the confidence in predictions, which is crucial for risk assessment and decision-making in weather-sensitive industries.

Generative adversarial networks. Generative Adversarial Networks (GANs) are a class of deep learning models composed of two neural networks: a Generator and a Discriminator. The Generator aims to produce data that closely resembles a genuine data distribution, while the Discriminator’s role is to distinguish between real and generated data. During training, these networks engage in a kind of "cat-and-mouse" game, continually adapting and improving—ultimately with the goal of creating generated data so convincing that the Discriminator can no longer tell it apart from real data.

Name. Some models are commonly cited or recognized under a specific name, such as ’PanGu’ or ’FourCastNet’. Some models are named after their technical features.

Region. This criterion indicates whether a model is globally applicable or designed for specific geographical locales like the United States, China, or the Arctic.

Event. The type of weather or climatic events that the model aims to forecast is specified under this category. This could range from generalized weather conditions like temperature and precipitation to more extreme weather events.

Selection Rationale. The following sections analyze a subset of these methods in detail. For short-term forecasting, we choose the two Specific Models (PanGu and FourCastNet) according to model type, and we also analyze MetNet, a Hybrid DNN Model. The other Hybrid DNN Model (ConvLSTM) is a component of MetNet. For medium-to-long-term prediction, we choose the probabilistic deep learning model Conditional Generative Forecasting, which has broader applicability than the other model in the probabilistic deep learning category. Probabilistic deep learning is also one of the few machine learning approaches that can be used for medium-to-long-term prediction. In addition, we select two machine learning methods for downscaling and bias correction.

5. Short-term weather forecast

Weather forecasting aims to predict atmospheric phenomena within a short time-frame, generally ranging from one to three days. This information is crucial for a multitude of sectors, including agriculture, transportation, and emergency management. Factors such as precipitation, temperature, and extreme weather events are of particular interest. Forecasting methods have evolved over the years, transitioning from traditional numerical methods to more advanced hybrid and machine-learning models. This section elucidates the working principles, methodologies, and merits and demerits of traditional numerical weather prediction models, MetNet, FourCastNet, and PanGu.

5.1. Model Design

Numerical Weather Prediction. Numerical Weather Prediction (NWP) stands as a cornerstone methodology in the realm of meteorological forecasting, fundamentally rooted in the simulation of atmospheric dynamics through intricate physical models. At the core of NWP lies a set of governing physical equations that encapsulate the holistic behaviour of the atmosphere:

The Navier-Stokes Equations [

45]: Serving as the quintessential descriptors of fluid motion, these equations delineate the fundamental mechanics underlying atmospheric flow.

The Thermodynamic Equations [

46]: These equations intricately interrelate the temperature, pressure, and humidity within the atmospheric matrix, offering insights into the state and transitions of atmospheric energy.

The Radiative Transfer Equations [

61]: These equations provide a comprehensive framework for understanding energy exchanges between the Earth and the Sun, shedding light on the intricacies of terrestrial and solar radiative dynamics.

Microphysical Processes [

48]: Delving into the nuances of cloud physics, these processes elucidate the genesis, evolution, and dissipation of clouds, serving as critical components in the atmospheric system.

Collectively, these equations form the primitive equations of the model. Being time-dependent partial differential equations, they demand sophisticated numerical techniques for their solution. The resolution of these equations permits the simulation of the evolving dynamism inherent in the atmosphere, paving the way for accurate and predictive meteorological insights.

In Numerical Weather Prediction (NWP), a critical tool for atmospheric dynamics forecasting, the process begins with data assimilation, where observational data is integrated into the model to reflect current conditions. This is followed by numerical integration, where governing equations are meticulously solved to simulate atmospheric changes over time. However, certain phenomena, like microphysics of clouds, cannot be directly resolved and are accounted for through parameterization to approximate their aggregate effects. Finally, post-processing methods are used to reconcile potential discrepancies between model predictions and real-world observations, ensuring accurate and reliable forecasts. This comprehensive process captures the complexity of weather systems and serves as a robust method for weather prediction [

44].
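The numerical integration step can be illustrated on a toy problem. The sketch below advances a one-dimensional linear advection equation on a periodic grid with a first-order upwind scheme. This is a deliberately simplified stand-in for the full governing equations, and the function names, grid size, and Courant numbers are assumptions chosen for illustration.

```python
# Toy stand-in for the "numerical integration" stage of NWP:
# 1D linear advection du/dt + a * du/dx = 0 with a > 0 on a periodic grid,
# discretized with a first-order upwind scheme.

def upwind_step(field, courant):
    """Advance the field by one time step.

    `courant` = a * dt / dx must satisfy 0 < courant <= 1 for stability.
    field[i - 1] wraps around at i == 0, which gives periodic boundaries.
    """
    return [field[i] - courant * (field[i] - field[i - 1])
            for i in range(len(field))]

def integrate(initial_state, courant, n_steps):
    """Repeatedly apply the time-stepping scheme, starting from the analysed state."""
    state = list(initial_state)
    for _ in range(n_steps):
        state = upwind_step(state, courant)
    return state

# "Analysis": a single unit anomaly on a six-point grid.
analysis = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]

# With courant == 1 the anomaly moves exactly one cell per step.
shifted = integrate(analysis, courant=1.0, n_steps=3)

# With courant < 1 the scheme diffuses the anomaly but still conserves the total.
diffused = integrate(analysis, courant=0.5, n_steps=10)
print(shifted, sum(diffused))
```

Operational models solve coupled three-dimensional equations with far more elaborate schemes, but the structure is the same: an analysed initial state is stepped forward in time under a stability constraint, which is where most of the computational cost arises.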

While the sophistication of NWP allows for detailed simulations of global atmospheric states, one cannot overlook the intensive computational requirements of such models. Even with the formidable processing capabilities of contemporary supercomputers, a ten-day forecast simulation can necessitate several hours of computational engagement.

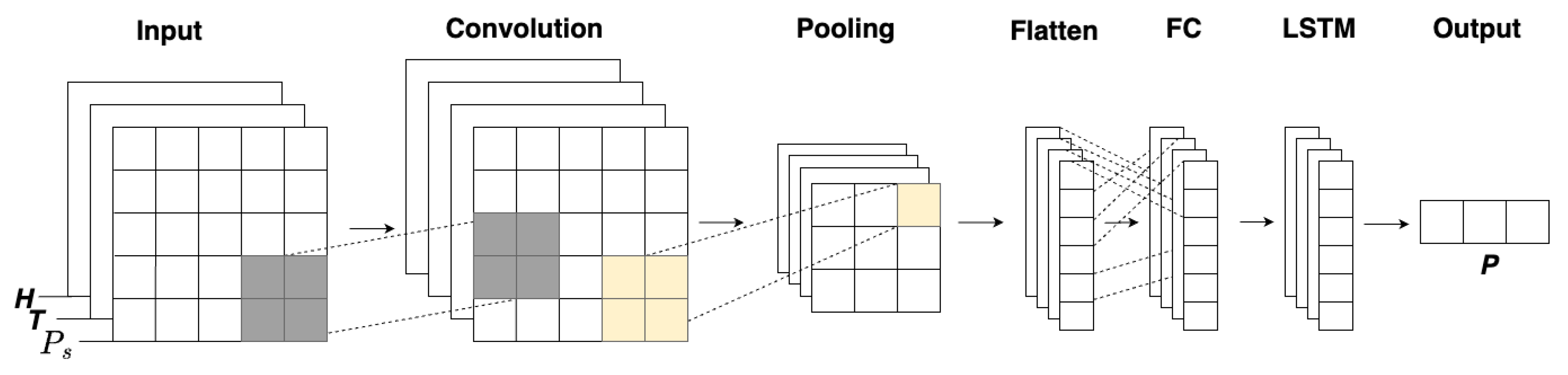

MetNet. MetNet [

53] is a state-of-the-art weather forecasting model that integrates the functionality of CNN, LSTM, and auto-encoder units. The CNN component conducts a multi-scale spatial analysis, extracting and abstracting meteorological patterns across various spatial resolutions. In parallel, the LSTM component captures temporal dependencies within the meteorological data, providing an in-depth understanding of weather transitions over time [

49]. Autoencoders are mainly used in weather prediction for data preprocessing, feature engineering and dimensionality reduction to assist more complex prediction models in making more accurate and efficient predictions. This combined architecture permits a dynamic and robust framework that can adaptively focus on key features in both spatial and temporal dimensions, guided by an embedded attention mechanism [

50,

51].

MetNet consists of three core components: the Spatial Downsampler, the Temporal Encoder (ConvLSTM), and the Spatial Aggregator. In this architecture, the Spatial Downsampler acts as an efficient encoder that specializes in transforming complex, high-dimensional raw data into a more compact, low-dimensional, information-intensive form. This process helps in feature extraction and data compression. The Temporal Encoder, using the ConvLSTM (Convolutional Long Short-Term Memory) model, is responsible for processing this dimensionality-reduced data in the temporal dimension. ConvLSTM combines the advantages of CNNs and LSTMs: it accounts for spatial locality within time series analysis, increasing the model’s ability to perceive complex temporal and spatial dependencies. The Spatial Aggregator plays the role of an optimized, high-level decoder. Rather than simply recovering the raw data from its compressed form, it performs deeper aggregation and interpretation of global and local information through a series of axial self-attention blocks, thus enabling the model to make more accurate weather predictions. These three components work in concert with each other to form a powerful and flexible forecasting model that is particularly well suited to handle meteorological data with a high degree of spatio-temporal complexity.

The operational workflow of MetNet begins with the preprocessing of atmospheric input data, such as satellite imagery and radar information [

52]. Spatial features are then discerned through the CNN layers, while temporal correlations are decoded via the LSTM units. This information is synthesized, with the attention mechanism strategically emphasizing critical regions and timeframes, leading to short-term weather forecasts ranging from 2 to 12 hours [

51]. MetNet’s strength lies in its precise and adaptive meteorological predictions, blending spatial and temporal intricacies, and thus offers an indispensable tool for refined weather analysis [

53].

Figure 2.

MetNet Structure


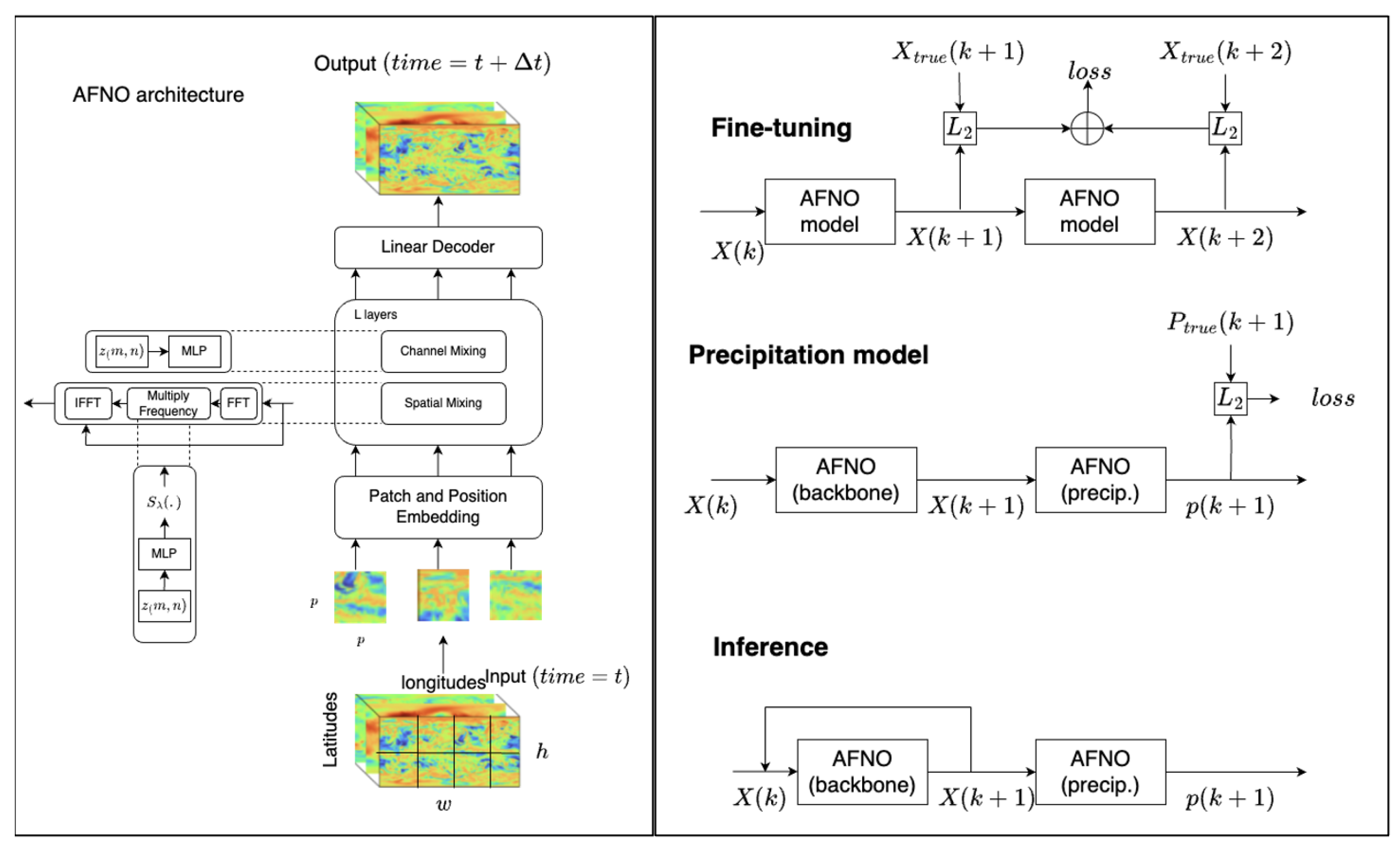

FourCastNet. In response to the escalating challenges posed by global climate changes and the increasing frequency of extreme weather phenomena, the demand for precise and prompt weather forecasting has surged. High-resolution weather models serve as pivotal instruments in addressing this exigency, offering the ability to capture finer meteorological features, thereby rendering more accurate predictions [

56,

57]. Against this backdrop, FourCastNet [

54] has been conceived, employing ERA5, an atmospheric reanalysis dataset. This dataset is the outcome of a Bayesian estimation process known as data assimilation, fusing observational results with numerical models’ output [

61]. FourCastNet leverages the Adaptive Fourier Neural Operator (AFNO), uniquely crafted for high-resolution inputs, incorporating several significant strides within the domain of deep learning.

The essence of AFNO resides in its symbiotic fusion of the Fourier Neural Operator (FNO) learning strategy with the self-attention mechanism intrinsic to Vision Transformers (ViT) [

60]. While FNO, through Fourier transforms, adeptly processes periodic data and has proven efficacy in modeling complex systems of partial differential equations, the computational complexity for high-resolution inputs is prohibitive. Consequently, AFNO deploys the Fast Fourier Transform (FFT) in the Fourier domain, facilitating continuous global convolution. This innovation reduces the complexity of spatial mixing to $O(N \log N)$, where $N$ is the number of tokens, thus rendering it suitable for high-resolution data [

58]. The workflow of AFNO encompasses data preprocessing, feature extraction with FNO, feature processing with ViT, spatial mixing for feature fusion, culminating in prediction output, representing future meteorological conditions such as temperature, pressure, and humidity.
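The idea of performing global convolution in the Fourier domain can be sketched in a few lines. The example below mixes a one-dimensional sequence of tokens by multiplying spectra element-wise and checks the result against a direct circular convolution. It is only an illustration of the convolution theorem: the actual AFNO applies learned weights to the Fourier modes rather than a fixed kernel, and the names `spectral_mix` and `circular_conv` as well as the sample values are assumptions.

```python
import cmath

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def ifft(spectrum):
    """Inverse FFT via the conjugation identity."""
    n = len(spectrum)
    conj = fft([v.conjugate() for v in spectrum])
    return [v.conjugate() / n for v in conj]

def spectral_mix(tokens, kernel):
    """Global (circular) convolution computed in the Fourier domain, O(N log N)."""
    product = [a * b for a, b in zip(fft(tokens), fft(kernel))]
    return [v.real for v in ifft(product)]

def circular_conv(tokens, kernel):
    """Direct O(N^2) reference implementation of the same operation."""
    n = len(tokens)
    return [sum(tokens[j] * kernel[(i - j) % n] for j in range(n))
            for i in range(n)]

# Illustrative values: 8 tokens and a fixed smoothing kernel that wraps around.
tokens = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 0.0]
kernel = [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]

mixed_fast = spectral_mix(tokens, kernel)
mixed_slow = circular_conv(tokens, kernel)
print(mixed_fast)
```

Every output token depends on every input token, yet the cost grows as N log N rather than N squared, which is what makes this kind of mixing attractive for high-resolution grids.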

Tailoring AFNO for weather prediction, FourCastNet introduces specific adaptations. Given its distinct application scenario—predicting atmospheric variables utilizing the ERA5 dataset—a dedicated precipitation model is integrated into FourCastNet, predicting six-hour accumulated total precipitation [

61]. Moreover, the training paradigm of FourCastNet includes both pre-training and fine-tuning stages. The former learns the mapping from weather state at one time point to the next, while the latter forecasts two consecutive time steps. The advantages of FourCastNet are manifested in its unparalleled speed—approximately 45,000 times swifter than conventional NWP models—and remarkable energy efficiency—consuming about 12,000 times less energy compared to the IFS model [

60]. The model’s architectural innovations and its efficient utilization of computational resources position it at the forefront of high-resolution weather modeling.
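The two training stages and the autoregressive use of the trained model can be sketched as follows. The one-step `toy_model` is a hypothetical stand-in for the trained network, the state vectors are invented numbers, and the loss is a plain mean squared error chosen for illustration rather than the loss reported for FourCastNet.

```python
def mse(pred, target):
    """Mean squared error between two equally sized state vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def one_step_loss(model, x0, x1_true):
    """Pre-training objective: map the state at one time point to the next."""
    return mse(model(x0), x1_true)

def two_step_loss(model, x0, x1_true, x2_true):
    """Fine-tuning objective: feed the model its own output once and
    penalise the error at both consecutive time steps."""
    x1_pred = model(x0)
    x2_pred = model(x1_pred)
    return mse(x1_pred, x1_true) + mse(x2_pred, x2_true)

def rollout(model, state, n_steps):
    """Free-running autoregressive inference: each prediction becomes the next input."""
    trajectory = []
    for _ in range(n_steps):
        state = model(state)
        trajectory.append(state)
    return trajectory

# Hypothetical one-step model: halves every variable (illustration only).
def toy_model(state):
    return [0.5 * v for v in state]

x0 = [2.0, 4.0]
perfect = two_step_loss(toy_model, x0, [1.0, 2.0], [0.5, 1.0])
imperfect = two_step_loss(toy_model, x0, [1.0, 2.0], [0.5, 2.0])
trajectory = rollout(toy_model, x0, n_steps=3)
print(perfect, imperfect, trajectory)
```

Training on two consecutive steps exposes the model to its own errors, which matters because inference repeatedly feeds predictions back in as inputs.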

Figure 3.

(a) The multi-layer transformer architecture (b) two-step fine-tuning (c) backbone model (d) forecast model in free-running autoregressive inference mode.


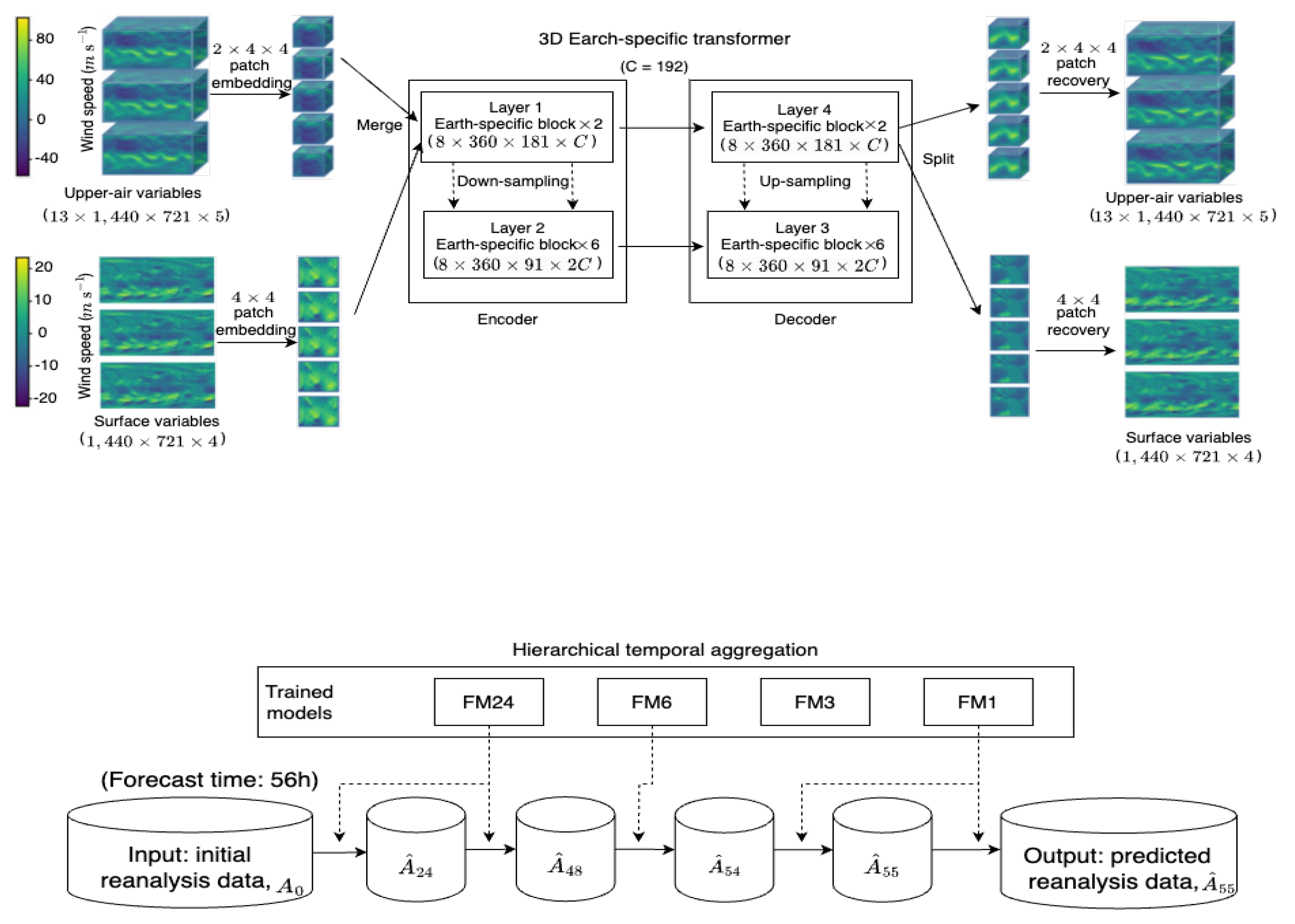

PanGu. In the rapidly evolving field of meteorological forecasting, PanGu emerges as a pioneering model, predicated on a three-dimensional neural network that transcends traditional boundaries of latitude and longitude. Recognizing the intrinsic relationship between meteorological data and atmospheric pressure, PanGu incorporates a neural network structure that accounts for altitude in addition to latitude and longitude. The initiation of the PanGu model’s process involves Block Embedding, where the dataset is parsed into smaller subsets or blocks. This operation not only reduces spatial resolution and complexity but also facilitates the subsequent data management within the network.

Following block embedding, the PanGu model integrates the data blocks into a 3D cube through a process known as 3D Cube Fusion, thereby enabling data processing within a tri-dimensional space. Swin Encoding [

62], a specialized Transformer encoder utilized in the deep learning spectrum, applies a self-attention mechanism for data comprehension and processing. This encoder, akin to the Autoencoder, excels in extracting and encoding essential information from the dataset. The ensuing phases include Decoding, which strives to unearth salient information, and Output Splitting, which partitions data into atmospheric and surface variables. Finally, Resolution Restoration reinstates the data to its original spatial resolution, making it amenable for further scrutiny and interpretation.

The innovative 3D neural network architecture [

63] of PanGu [

55] offers a groundbreaking perspective for integrating meteorological data, and its suitability for three-dimensional data is distinctly pronounced. Moreover, PanGu introduces a hierarchical time-aggregation strategy in which the network with the largest applicable lead time is always invoked, reducing the number of iterations and thereby curtailing accumulated errors. In juxtaposition with running a model like FourCastNet [

54] multiple times, which may accrue errors, this approach exhibits superiority in both speed and precision. Collectively, these novel attributes and methodological advancements position PanGu as a cutting-edge tool in the domain of high-resolution weather modeling, promising transformative potential in weather analysis and forecasting.
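The hierarchical time-aggregation strategy amounts to a greedy decomposition of the target lead time. The sketch below assumes the four forecast models with lead times of 1 h, 3 h, 6 h, and 24 h named in the Figure 4 caption; the function name `plan_forecast` is hypothetical.

```python
def plan_forecast(lead_time_hours, model_lead_times=(24, 6, 3, 1)):
    """Greedy schedule: always invoke the model with the largest lead time
    that still fits into the remaining forecast horizon."""
    plan = []
    remaining = lead_time_hours
    for lead in sorted(model_lead_times, reverse=True):
        count, remaining = divmod(remaining, lead)
        plan.extend([lead] * count)
    if remaining:
        raise ValueError("lead time cannot be composed from the available models")
    return plan

# A 56-hour forecast needs 5 model calls instead of 56 calls of a 1-hour model.
plan_56h = plan_forecast(56)
print(plan_56h, len(plan_56h))
```

Fewer autoregressive iterations mean fewer opportunities for errors to compound, which is the advantage over repeatedly applying a single fixed-step model.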

Figure 4.

Network training and inference strategies. a. 3DEST architecture. b. Hierarchical temporal aggregation. We use FM1, FM3, FM6 and FM24 to indicate the forecast models with lead times being 1 h, 3 h, 6 h or 24 h, respectively.


FourCastNet, MetNet, and PanGu are state-of-the-art methods in the field of weather prediction, and they share some architectural similarities that can indicate converging trends in this field. All three models initiate the process by embedding or downsampling the input data. FourCastNet uses AFNO, MetNet employs a Spatial Downsampler, and PanGu uses Block Embedding to manage the spatial resolution and complexity of the datasets. Spatio-temporal coding is an integral part of all networks; FourCastNet uses pre-training and fine-tuning phases to deal with temporal dependencies, MetNet uses ConvLSTM, and PanGu introduces a hierarchical temporal aggregation strategy to manage temporal correlations in the data. Each model employs a specialized approach to understand the spatial relationships within the data. FourCastNet uses AFNO along with Vision Transformers, MetNet utilizes Spatial Aggregator blocks, and PanGu integrates data into a 3D cube via 3D Cube Fusion. Both FourCastNet and PanGu employ self-attention mechanisms, derived from the Transformer architecture, for better capturing long-range dependencies in the data. FourCastNet combines FNO with ViT, and PanGu uses Swin Encoding.

5.2. Result Analysis

MetNet: According to the MetNet experiments, at a precipitation-rate threshold of 1 millimeter/hour, both MetNet and NWP predictions show high similarity to ground conditions. Evidently, MetNet exhibits a forecasting capability commensurate with NWP while generally surpassing NWP’s processing speed.

FourCastNet: According to the FourCastNet experiments, FourCastNet can predict wind speed 96 hours in advance with high fidelity and accurate fine-scale features. In the experiments, the FourCastNet forecast accurately captured the formation of Super Typhoon Mangkhut (Shanzhu), as well as its intensity and trajectory over four days. It also has a high resolution and demonstrates excellent skill in capturing small-scale features. Particularly noteworthy is that, within a 48-hour horizon, FourCastNet’s forecasts exceed the predictive accuracy of conventional numerical weather prediction. This constitutes a significant stride in enhancing the accuracy and responsiveness of short-term meteorological forecasts.

PanGu: According to the PanGu experiments, PanGu can almost accurately predict typhoon trajectories when tracking the strong tropical cyclones Kong-rey (Kong Lei) and Yutu (Yu Tu), and does so 48 hours earlier than NWP. The advent of the 3D network further heralds a momentous advancement in weather prediction technology. This cutting-edge model outperforms numerical weather prediction models by a substantial margin and replicates observed conditions with high fidelity. It is not merely a forecasting tool but a near-precise reflection of meteorological dynamics, allowing for a nearly flawless reconstruction of real-world weather scenarios.

MetNet and FourCastNet offer comparable performance to NWP but with heightened computational efficiencies, with FourCastNet notably excelling in fine-scale feature capture. PanGu brings the innovation of 3D neural network structures to the table, allowing for almost flawless typhoon trajectory predictions and a 48-hour lead in forecasting compared to NWP. Therefore, while all three models improve upon traditional NWP in various dimensions—speed, fidelity, and feature capture—PanGu stands out for its three-dimensional data analysis and faster forecasting capabilities.

6. Medium-to-long-term climate prediction

Medium to long-term climate predictions are usually measured in decadal quarters. In the domain of medium to long-term climate forecasting, the focal point extends beyond immediate meteorological events to embrace broader, macroscopic elements such as long-term climate change trends, average temperature fluctuations, and mean precipitation levels. This orientation is critical for a wide array of sectors, spanning from environmental policy planning to infrastructure development and agricultural projections. Over time, the forecasting methodologies have experienced significant advancements, evolving from conventional climate models to cutting-edge computational methods such as Probabilistic Deep Learning for Climate Forecasting (CGF), Machine Learning for Model Downscaling (DeepESD), and Machine Learning for Result Bias Correction (CycleGAN).

6.1. Model Design

Climate Model. Climate models, consisting of fundamental atmospheric dynamics and thermodynamics equations, focus on simulating Earth’s long-term climate system [

64]. Unlike NWP which targets short-term weather patterns, climate models address broader climatic trends. These models encompass Global Climate Models (GCMs), which provide a global perspective but often at a lower resolution, and Regional Climate Models (RCMs), designed for detailed regional analysis [

65]. The main emphasis is on the average state and variations rather than transient weather events. The workflow of climate modelling begins with initialization by setting boundary conditions, possibly involving centuries of historical data. Numerical integration follows, using the basic equations to model the long-term evolution of the climate system [

66]. Parameterization techniques are employed to represent sub-grid scale processes like cloud formation and vegetation feedback. The model’s performance and uncertainties are then analyzed and validated by comparing them with observational data or other model results [

67]. The advantages of climate models lie in their ability to simulate complex climate systems, providing forecasts and insights into future climate changes, thereby informing policy and adaptation strategies. However, they also present challenges such as high computational demands, sensitivity to boundary conditions, and potential uncertainties introduced through parameterization schemes. The distinction between GCMs and RCMs, and their integration in understanding both global and regional climate phenomena, underscores the sophistication and indispensable role of these models in advancing meteorological studies [

68].

Conditional Generative Forecasting [69]. In the intricate arena of medium to long-term seasonal climate prediction, the scarcity of substantial datasets since 1979 poses a significant constraint on the rigorous training of complex models like CNNs, thus limiting their predictive efficacy. To navigate this challenge, a pioneering approach of transfer learning has been embraced, leveraging the simulated climate data drawn from CMIP5 (Coupled Model Intercomparison Project Phase 5) [

71] to enhance modeling efficiency and accuracy. The process begins with a pre-training phase, where the CNN is enriched with CMIP5 data to comprehend essential climatic patterns and relationships. This foundational insight then transfers seamlessly to observational data without resetting the model parameters, ensuring a continuous learning trajectory that marries simulated wisdom with empirical climate dynamics. The methodology culminates in a fine-tuning phase, during which the model undergoes subtle refinements to align more closely with the real-world intricacies of medium to long-term ENSO forecasting [

70]. This innovative strategy demonstrates the transformative power of transfer learning in addressing the formidable challenges associated with limited sample sizes in medium to long-term climate science.
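The pre-train then fine-tune procedure can be illustrated with a deliberately tiny example. A one-parameter linear model stands in for the CNN, the "simulated" and "observed" data sets are invented numbers, and `gradient_descent` is a hypothetical helper. The only point demonstrated is that fine-tuning continues from the pre-trained parameter instead of resetting it.

```python
def gradient_descent(w, data, lr, epochs):
    """Full-batch gradient descent for y ~ w * x under squared error,
    starting from the parameter value that is passed in."""
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

# Abundant simulated samples (stand-in for climate-model output): y = 2.0 * x.
simulated = [(k / 10.0, 2.0 * k / 10.0) for k in range(1, 101)]
# Scarce observations with a slightly different relationship: y = 2.2 * x.
observed = [(1.0, 2.2), (2.0, 4.4), (3.0, 6.6)]

# Stage 1: pre-train on simulated data.
w_pretrained = gradient_descent(0.0, simulated, lr=0.01, epochs=50)
# Stage 2: fine-tune on observations without resetting the parameter.
w_finetuned = gradient_descent(w_pretrained, observed, lr=0.01, epochs=10)
# Baseline: the same short training budget on observations, from scratch.
w_scratch = gradient_descent(0.0, observed, lr=0.01, epochs=10)

print(w_pretrained, w_finetuned, w_scratch)
```

With the same small budget on the observations, the pre-trained parameter ends closer to the observed relationship than the one trained from scratch, which is the benefit the transfer learning approach relies on when observational records are short.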

Leveraging 52,201 years of climate simulation data from CMIP5/CMIP6, which serves to increase the sample size, the method for medium-term forecasting employs CNNs and Temporal Convolutional Neural Networks (TCNNs) to extract essential features from high-dimensional geospatial data. This feature extraction lays the foundation for probabilistic deep learning, which determines an approximate distribution of the target variables, capturing the data’s structure and uncertainty [

76]. The model’s parameters are optimized through maximizing the Evidence Lower Bound (ELBO) within the variational inference framework. The integration of deep learning techniques with probabilistic modeling ensures accuracy, robustness to sparse data, and flexibility in assumptions, enhancing the precision of forecasts and offering valuable insights into confidence levels and expert knowledge integration.

Using variational inference and neural networks, the method seeks to approximate the complex conditional distribution $p(Y \mid X, M)$, where Y is the target variable, and X and M are predictor and GCM index information, respectively. The process is outlined as follows:

Problem Definition: The goal is to approximate $p(Y \mid X, M)$, a task challenged by high-dimensional geospatial data, data inhomogeneity, and a large dataset.

Model Specification:

Random Variable z: A latent variable with a fixed standard Gaussian distribution.

Parametric Functions: Neural networks for transforming z and approximating the target and posterior distributions.

Objective Function: Maximization of the Evidence Lower Bound (ELBO).

Training Procedure:

Initialize: Define the random variable z [73, 74, 75] and the parametric functions.

Training Objective (Maximize ELBO) [72]: The ELBO combines terms for reconstruction, regularization, and residual error.

Optimization: Utilize variational inference, Monte Carlo reparameterization, and Gaussian assumptions.

Forecasting: Generate forecasts by sampling the latent variable z, evaluating the likelihood of Y, and using the mean of the sampled forecasts as an average estimate.

This method embodies a rigorous approach to approximating complex distributions, bridging deep learning and probabilistic modeling to enhance forecasting accuracy and insights.
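The training objective and forecasting step outlined above can be sketched in a few lines. This is a generic conditional-VAE-style ELBO under Gaussian assumptions, not the exact objective of the cited method: it includes only a reconstruction term and a KL regularization term (the residual error term mentioned above is omitted), and the linear `decoder`, the matrix `W`, and the offset `b` (standing in for the effect of X and M) are hypothetical.

```python
import numpy as np

def gaussian_log_pdf(y, mean, std):
    """Log density of y under an isotropic Gaussian with the given mean and std."""
    return -0.5 * np.sum(((y - mean) / std) ** 2 + 2 * np.log(std) + np.log(2 * np.pi))

def kl_to_standard_normal(mu, sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ): the regularization term."""
    return 0.5 * np.sum(sigma**2 + mu**2 - 1.0 - 2.0 * np.log(sigma))

def elbo_estimate(y, mu, sigma, decoder, rng, n_samples=64, obs_std=1.0):
    """Monte Carlo ELBO: E_q[log p(y | z)] - KL(q(z) || p(z))."""
    eps = rng.standard_normal((n_samples, mu.size))
    z = mu + sigma * eps  # reparameterization: z is a deterministic function of eps
    recon = np.mean([gaussian_log_pdf(y, decoder(zi), obs_std) for zi in z])
    return recon - kl_to_standard_normal(mu, sigma)

def forecast(decoder, latent_dim, rng, n_samples=1000):
    """Sample z from the standard Gaussian prior and summarize the decoded forecasts."""
    z = rng.standard_normal((n_samples, latent_dim))
    samples = np.array([decoder(zi) for zi in z])
    return samples.mean(axis=0), samples.std(axis=0)

# Hypothetical linear decoder; b plays the role of the conditioning on X and M.
W = np.array([[0.5, -0.3], [0.2, 0.8]])
b = np.array([1.0, -1.0])
decoder = lambda z: W @ z + b

rng = np.random.default_rng(0)
y = np.array([1.2, -0.7])                                    # one observed target
mu, sigma = np.array([0.1, 0.2]), np.array([0.9, 0.8])       # approximate posterior
elbo = elbo_estimate(y, mu, sigma, decoder, rng)
mean_forecast, spread = forecast(decoder, latent_dim=2, rng=rng)
print(elbo, mean_forecast, spread)
```

The spread returned by `forecast` is what gives the probabilistic approach its confidence information: the mean is the average estimate, and the standard deviation across samples indicates uncertainty.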

In summary, the combination of deep learning and probabilistic modeling offers a powerful method for spatial predictive analytics. The approach is scalable, flexible, and able to learn complex spatial features, although challenges persist, such as the intrinsic complexity of the computational modeling and the need for a strong background in statistics and computer science. Its ability to handle large datasets and adapt to varying scenarios makes it a promising tool for modern spatial predictive analytics and for seasonal climate prediction in particular.

Cycle-Consistent Generative Adversarial Networks. Cycle-Consistent Generative Adversarial Networks (CycleGANs) have been applied to the bias correction of high-resolution Earth System Model (ESM) precipitation fields, such as those of GFDL-ESM4 [77]. The model includes two generators responsible for translating between the simulated and real domains, and two discriminators that distinguish generated samples from real observations. A key component of this approach is the cycle consistency loss, which ensures a reliable translation between domains, coupled with a constraint that maintains global precipitation values for physical consistency. By framing bias correction as an image-to-image translation task, CycleGANs have significantly improved spatial patterns and distributions in climate projections. The use of spatial spectral densities and fractal dimension measurements further emphasizes the spatial context-awareness of the approach. The components of the CycleGAN model are as follows:

Two Generators: The CycleGAN model includes two generators. Generator G learns the mapping from the simulated domain to the real domain, and generator F learns the mapping from the real domain to the simulated domain [78].

Two Discriminators: There are two discriminators, one for the real domain and one for the simulated domain. The real-domain discriminator encourages generator G to generate samples that look similar to samples in the real domain, and the simulated-domain discriminator encourages generator F to generate samples that look similar to samples in the simulated domain.

Cycle Consistency Loss: To ensure that the mappings are consistent, the model enforces the following condition through a cycle consistency loss: if a sample is mapped from the simulated domain to the real domain and then mapped back to the simulated domain, it should get a sample similar to the original simulated sample. Similarly, if a sample is mapped from the real domain to the simulated domain and then mapped back to the real domain, it should get a sample similar to the original real sample.

Training Process: The model is trained to learn the mapping between the two domains by optimizing the adversarial losses, in which each generator competes against its discriminator, together with the cycle consistency loss.

Application to Prediction: Once trained, these mappings can be used for various tasks, such as transforming simulated precipitation data into forecasts that resemble observed data.
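The cycle consistency condition and the global precipitation constraint described above can be made concrete with a small sketch. The assumptions are considerable: the generators are toy analytic functions rather than neural networks, the L1 norm is chosen as the distance, the constraint is implemented as a simple rescaling of the field total, and the names `cycle_consistency_loss` and `preserve_global_total` are hypothetical rather than taken from the cited work.

```python
import numpy as np

def cycle_consistency_loss(G, F, x_sim, y_real):
    """L1 cycle loss: simulated -> real -> simulated, plus real -> simulated -> real."""
    forward = np.mean(np.abs(F(G(x_sim)) - x_sim))
    backward = np.mean(np.abs(G(F(y_real)) - y_real))
    return forward + backward

def preserve_global_total(y_generated, x_sim):
    """Rescale the generated field so its total matches the simulated input total."""
    return y_generated * (x_sim.sum() / y_generated.sum())

# Toy generators: G maps simulated -> real, F maps real -> simulated.
G = lambda x: 2.0 * x + 1.0
F = lambda y: (y - 1.0) / 2.0   # exact inverse of G, so the cycle is consistent
F_bad = lambda y: y / 2.0       # not an inverse of G, so the cycle is violated

rng = np.random.default_rng(0)
x_sim = rng.gamma(shape=2.0, scale=1.0, size=(16, 16))   # non-negative "precipitation"
y_real = rng.gamma(shape=2.0, scale=1.5, size=(16, 16))  # unpaired with x_sim

print("consistent pair:  ", cycle_consistency_loss(G, F, x_sim, y_real))
print("inconsistent pair:", cycle_consistency_loss(G, F_bad, x_sim, y_real))

corrected = preserve_global_total(G(x_sim), x_sim)
print("totals match:", np.isclose(corrected.sum(), x_sim.sum()))
```

Note that `x_sim` and `y_real` are drawn independently: the cycle loss never compares a simulated field with a specific observed field, which is why no paired samples are required.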

The bidirectional mapping strategy of CycleGANs permits the learning of complex transformations between two domains without reliance on paired training samples. This attribute is especially valuable when only unpaired data are available for training. In climate science, it allows the model to capture the subtle relationships between real and simulated precipitation data even though individual simulated fields have no one-to-one observed counterpart. Through this bidirectional mapping, the model both improves the understanding of climatic phenomena and increases the accuracy of projected precipitation trends, providing a data-driven methodology for climate prediction and analysis.

DeepESD. Traditional GCMs, while proficient in simulating large-scale global climatic dynamics [79, 80], exhibit intrinsic limitations in representing finer spatial scales and specific regional characteristics. This inadequacy manifests as a pronounced resolution gap at localized scales, restricting the applicability of GCMs in detailed regional climate studies [81, 82].

In contrast, CNNs represent a significant advance [83]. Built from hierarchical convolutional layers, CNNs can represent complex spatial features across multiple scales, starting from coarse-grained global characteristics and progressively refining them to capture intricate regional details. An exemplary implementation was demonstrated by Baño-Medina et al. [84], in which the CNN comprised three convolutional layers with 50, 25, and 10 spatial kernels, respectively. The input was ERA-Interim reanalysis data regridded to a 2° regular grid, which the network downscaled to 0.5° [85, 86, 87]. This configuration allowed the CNN to translate global atmospheric patterns into high-resolution regional detail [88, 89].
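A forward pass through a network of this shape can be sketched as follows. Only the three convolutional layers with 50, 25, and 10 kernels and the 2° to 0.5° resolution change come from the description above. Everything else is an assumption made for illustration: the 3×3 kernel size, the ReLU activations, the dense readout onto the fine grid, the number of input variables, the grid sizes, and the random (untrained) weights.

```python
import numpy as np

def conv2d_same(x, w):
    """Zero-padded 'same' convolution. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    # windows has shape (C_in, H, W, k, k): one k-by-k patch per grid point.
    windows = np.lib.stride_tricks.sliding_window_view(xp, (k, k), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w)

def relu(x):
    return np.maximum(x, 0.0)

def init_params(rng, n_inputs, coarse_shape, fine_shape, filters=(50, 25, 10), k=3):
    """Random weights for the three conv layers and an assumed dense readout."""
    conv_weights, c_in = [], n_inputs
    for c_out in filters:
        scale = np.sqrt(2.0 / (c_in * k * k))
        conv_weights.append(rng.normal(scale=scale, size=(c_out, c_in, k, k)))
        c_in = c_out
    n_flat = c_in * coarse_shape[0] * coarse_shape[1]
    n_out = fine_shape[0] * fine_shape[1]
    dense = rng.normal(scale=np.sqrt(1.0 / n_flat), size=(n_out, n_flat))
    return conv_weights, dense

def downscale(x, conv_weights, dense, fine_shape):
    """Coarse predictors -> three conv layers -> dense readout on the fine grid."""
    h = x
    for w in conv_weights:
        h = relu(conv2d_same(h, w))
    return (dense @ h.ravel()).reshape(fine_shape)

rng = np.random.default_rng(0)
coarse_shape = (8, 10)    # hypothetical 2-degree domain
fine_shape = (32, 40)     # same domain at 0.5 degrees (factor 4 per dimension)
x = rng.normal(size=(5, *coarse_shape))  # 5 hypothetical predictor variables
conv_weights, dense = init_params(rng, 5, coarse_shape, fine_shape)
y = downscale(x, conv_weights, dense, fine_shape)
print(y.shape)
```

Because the weights are random, the output carries no climatological meaning; the sketch only shows how coarse predictor fields pass through progressively narrower feature maps before being mapped onto the high-resolution grid.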

The nuanced translation from global to regional scales, achieved through sequential convolutional layers, not only amplifies the spatial resolution but also retains the contextual relevance of climatic variables [90, 91]. The first convolutional layer captured global coarse-grained features, with subsequent layers incrementally refining these into nuanced regional characteristics. By the terminal layer, the CNN had effectively distilled complex atmospheric dynamics into a precise high-resolution grid [92, 93].

This enhancement fosters a more robust understanding of regional climatic processes and brings greater precision and flexibility to climate modeling. It opens new possibilities for a more granular, precise, and comprehensive examination of climatic processes and future scenarios [94, 95, 96]. The introduction of CNNs thus offers a way to bridge the resolution gap inherent in traditional GCMs, with substantial implications for future climate analysis and scenario planning.

CGF (Conditional Generative Forecasting), DeepESD (Deep Empirical Statistical Downscaling), and CycleGAN (Cycle-Consistent Generative Adversarial Networks) differ considerably in their uses and implementations, but they also share some similarities. All three approaches focus on mapping from one data distribution to another, and they place more emphasis on the mechanisms of climate change than earlier weather forecasting models do. CycleGAN specifically emphasizes not only the mapping from distribution A to B but also the inverse mapping from B to A, which is to some extent also a concern of CGF and DeepESD.

6.2. Result Analysis

CGF: Using deep probabilistic machine learning techniques, the figure compares the performance of the CGF model, trained on both simulated samples and actual data, against the traditional climate model CanCM4. The results show that the CGF model is more accurate than the conventional climate modeling approach, regardless of whether simulated or real data are used. This highlights the enhanced predictive capability of the method and its potential for handling complex meteorological phenomena.

CycleGANs: In long-term climate estimation, applying deep learning to model correction has yielded promising results. The accompanying figure shows the mean absolute errors of different models relative to the W5E5v2 reference data. Among these, the error correction technique that combines Generative Adversarial Networks (GANs) with the ISIMIP3BASD bias-adjustment method shows the lowest error. This evidence underscores the efficacy of deep learning methods in improving the precision of long-term climate estimations and reinforces their potential utility in climatological research and forecasting applications.
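The comparison metric used here, the mean absolute error against a reference field, is simple to compute. The sketch below is illustrative only: the arrays are synthetic stand-ins, the model labels are hypothetical, and no area weighting is applied, which a real evaluation on a latitude-longitude grid would likely need.

```python
import numpy as np

def mean_absolute_error(model_field, reference_field):
    """Average of |model - reference| over all grid points."""
    return float(np.mean(np.abs(model_field - reference_field)))

rng = np.random.default_rng(0)
reference = rng.gamma(shape=2.0, scale=1.0, size=(20, 30))  # stand-in for reference data

# Hypothetical model outputs with different amounts of bias and noise.
candidates = {
    "raw_model": reference + 0.8 + 0.3 * rng.normal(size=reference.shape),
    "bias_corrected": reference + 0.1 + 0.3 * rng.normal(size=reference.shape),
}

scores = {name: mean_absolute_error(field, reference) for name, field in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```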

DeepESD: In this study, deep learning is employed to enhance resolution, resulting in a model referred to as DeepESD. The following figure shows the Probability Density Functions (PDFs) of precipitation and temperature for the historical period from 1979 to 2005, as given by the General Circulation Model (GCM) in red, the Regional Climate Model (RCM) in blue, and DeepESD in green. These are shown for regions such as the Alps, the Iberian Peninsula, and Eastern Europe, as defined by the PRUDENCE areas. In the diagram, solid lines represent the overall mean, while the shaded region covers two standard deviations. Dashed lines depict the distribution mean of each PDF. The graph shows that DeepESD is more consistent with observed data than the other models.

The results show that, although machine learning is used far less often in medium-to-long-term climate forecasting, judiciously addressing the challenge of scarce samples and employing appropriate machine learning techniques can still yield better results than physical models. This underscores the potential of machine learning to improve prediction accuracy in climate science, even when data are limited. In climate estimation, the use of neural networks to predict climate variations directly has become less prevalent among meteorologists, whereas the use of machine learning to aid and optimize climate modelling has emerged as a complementary strategy. As the two preceding figures show, climate models enhanced with machine learning demonstrate superior predictive capabilities compared with conventional models.

7. Discussion

Our research purpose, the examination of machine learning in meteorological forecasting, is situated within a rich historical context that charts the evolution of weather prediction methodologies. The field has progressed from simple statistical methods to complex deterministic modelling, and the advent of machine learning techniques has brought a further paradigm shift.

7.1. Overall Comparison

In this section of our survey, we delineate key differences between our study and existing surveys, thereby underscoring the unique contribution of our work. We contrast various time scales—short-term versus medium-to-long-term climate predictions—to substantiate our rationale for focusing on these particular temporal dimensions. Additionally, we draw a comparative analysis between machine learning approaches and traditional models in climate prediction. This serves to highlight our reason for centering our survey on machine learning techniques for climate forecasting. Overall, this section not only amplifies the distinctiveness and relevance of our survey but also frames it within the larger scientific discourse.

Comparison to existing surveys. Compared to existing literature, our survey takes a unique approach by cohesively integrating both short-term weather forecasting and medium-to-long-term climate predictions—a dimension often underrepresented. While other surveys may concentrate on a limited range of machine learning methods, ours extends to examine nearly 20 different techniques. However, we recognize our limitations, particularly the challenge of providing an exhaustive analysis due to the complexity of machine learning algorithms and their multifaceted applications in meteorology. This signals an opportunity for future research to delve deeper into specialized machine-learning techniques or specific climatic variables. In contrast to many generalized surveys, our study ventures into the technical nuances of scalability, interpretability, and applicability for each method. We also make a conscious effort to incorporate the most recent advances in the field, although we acknowledge that the pace of technological change inevitably leaves room for further updates. In sum, while our survey provides a more comprehensive and technically detailed roadmap than many existing reviews, it also highlights gaps and opportunities for future work in this rapidly evolving interdisciplinary domain.