Submitted: 19 September 2023

Posted: 21 September 2023


Abstract

Keywords:

1. Introduction

2. Related work

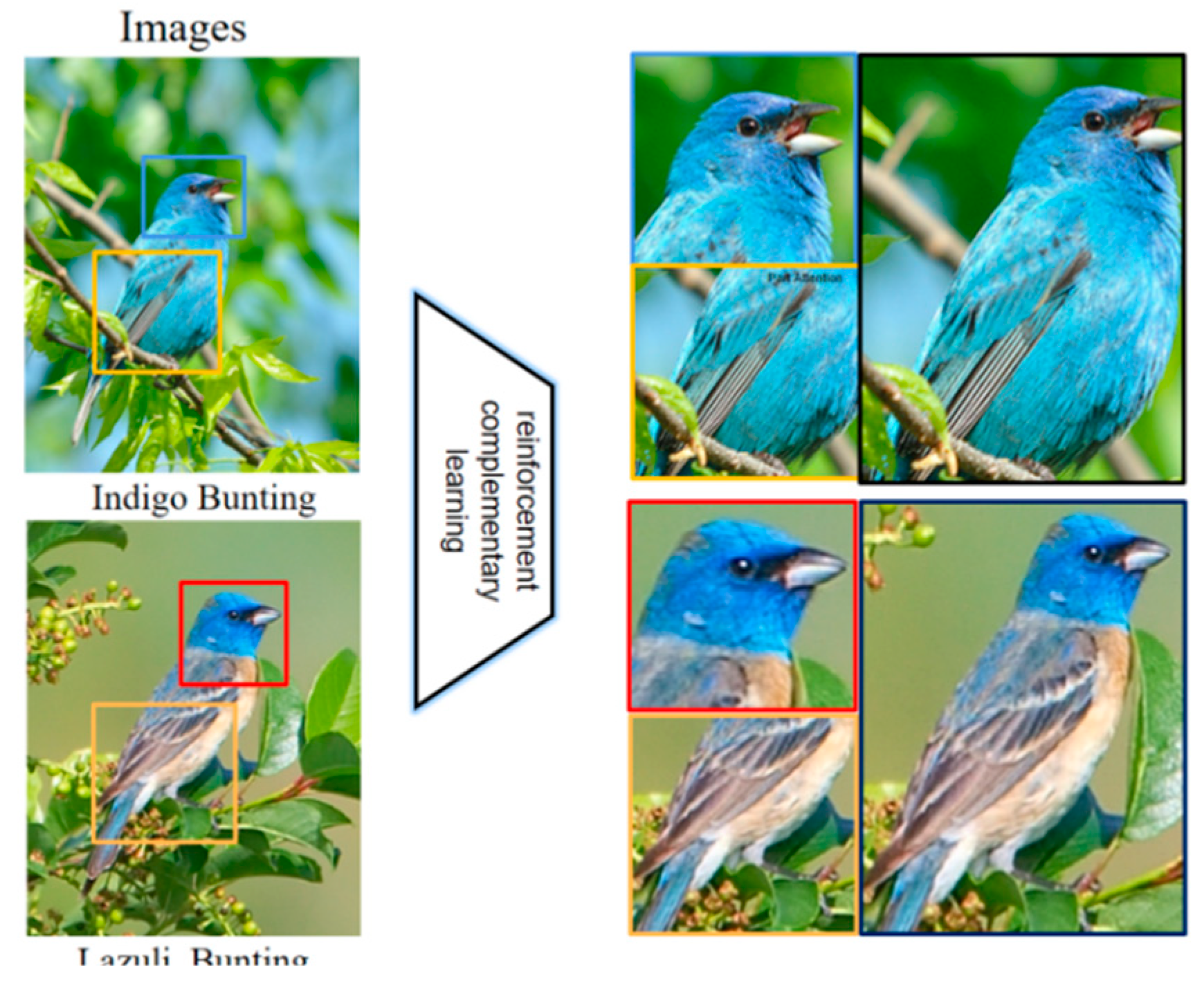

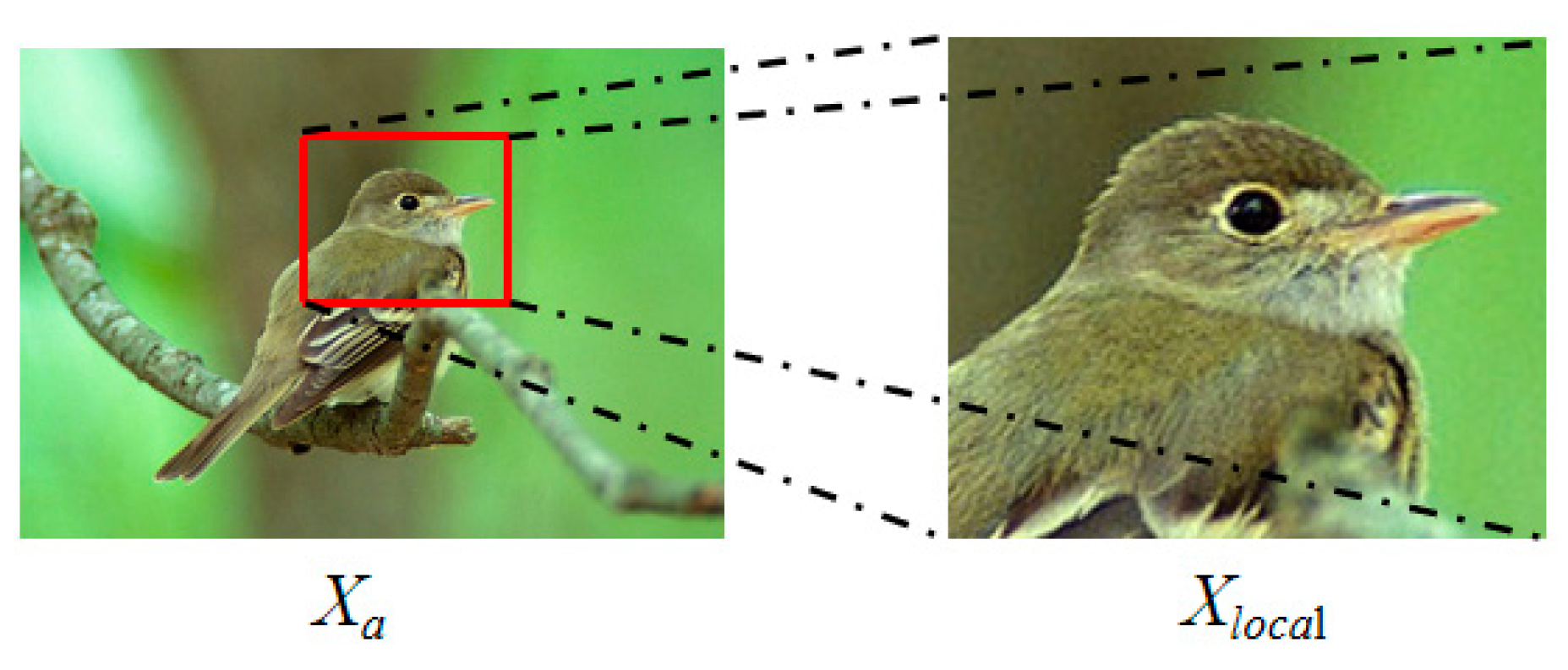

3. RACL-Net (Reinforcement And Complementary Learning Network)

3.1. The network structure

3.2. Drive model

3.3. Loss function

4. Experiments and Discussions

4.1. Datasets

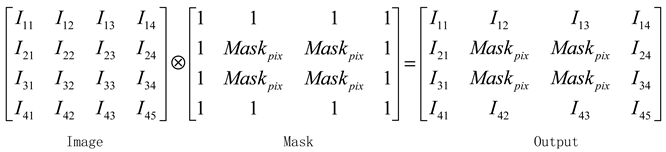

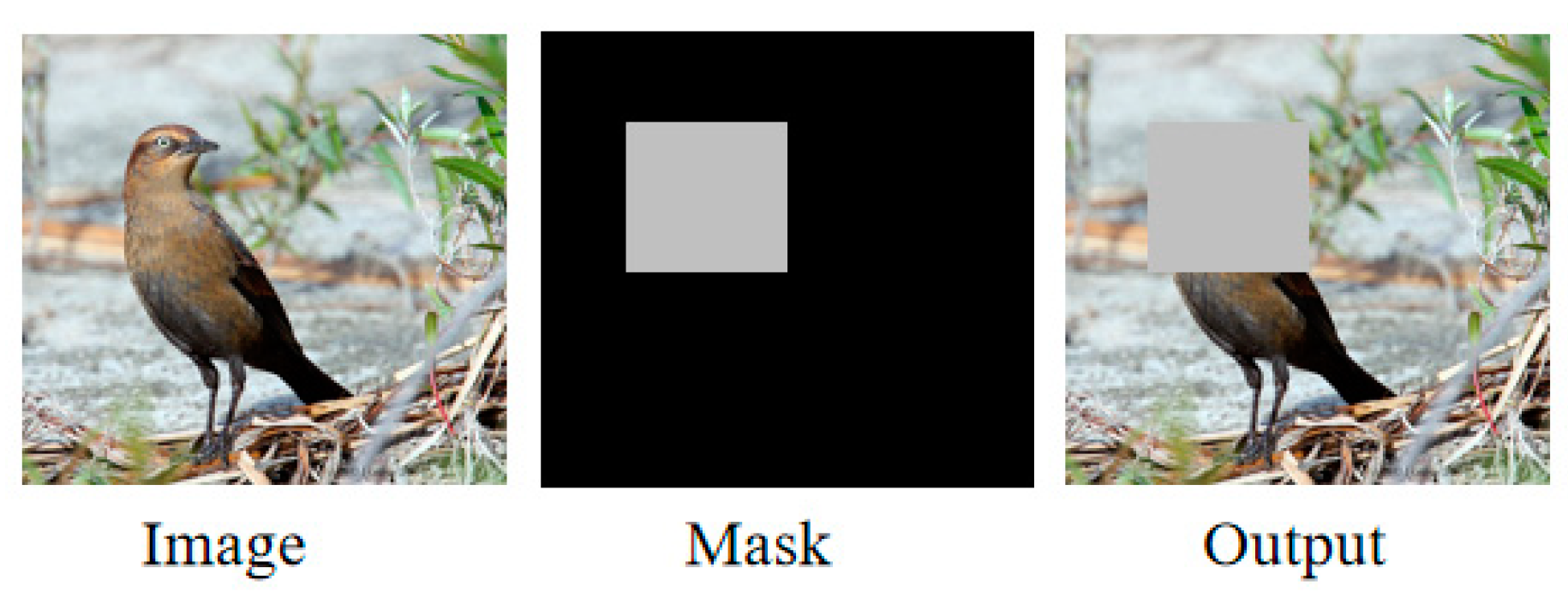

4.2. Erase experiment

4.3. Experimental steps

4.4. Parameter setting

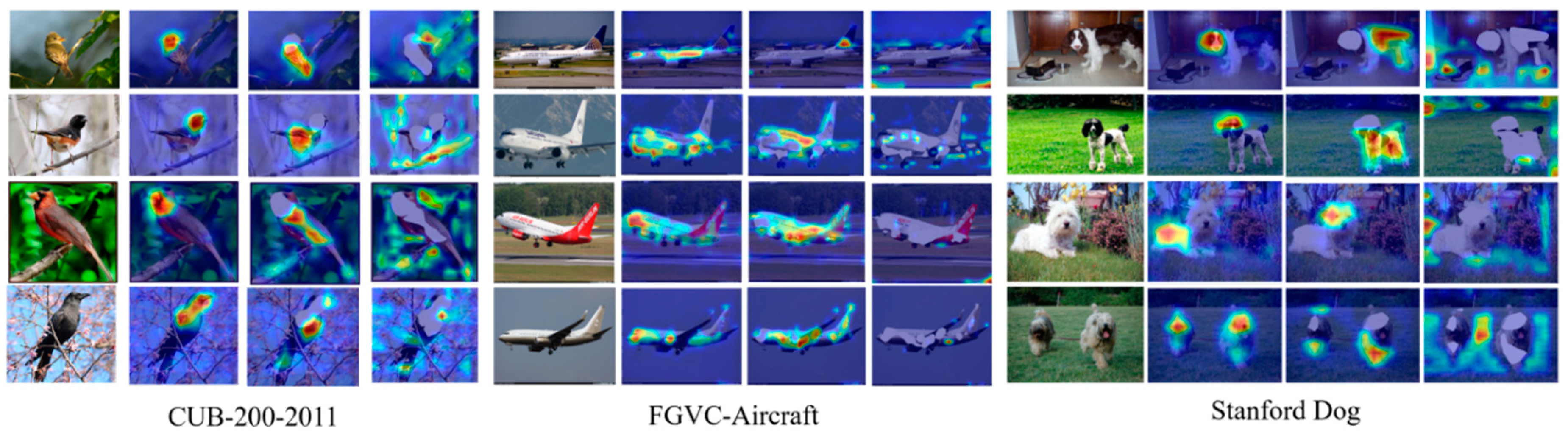

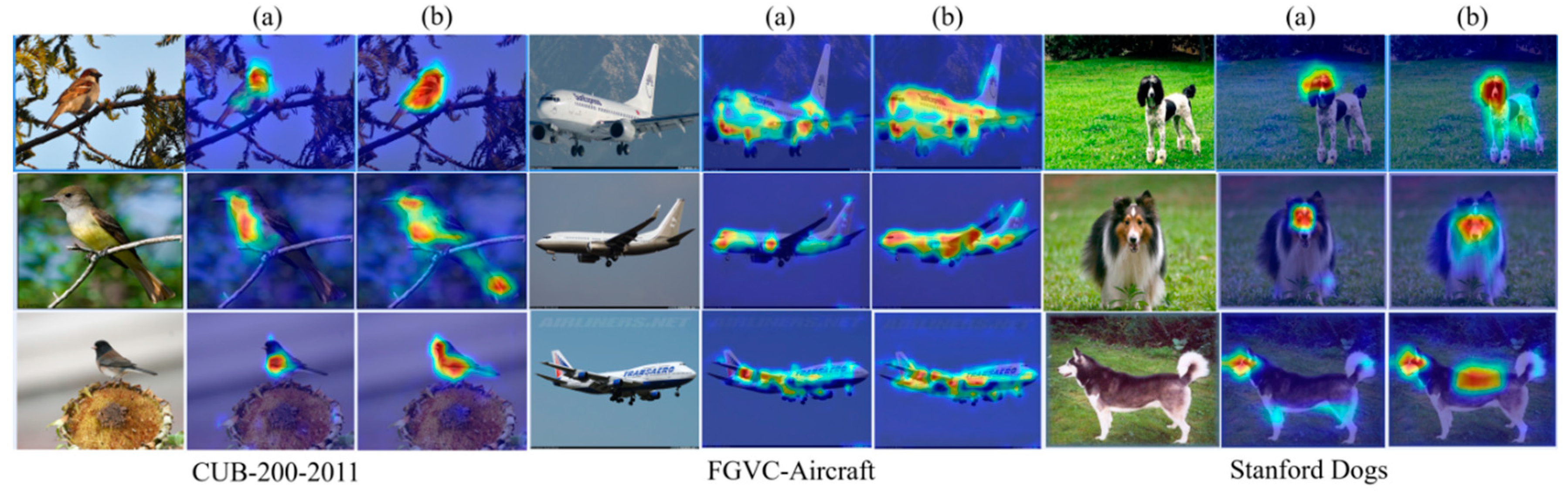

4.5. Visualization of experimental results

4.6. Experimental verification and analysis

4.6.1. Ablation experiment

4.6.2. Comparison test

| Method | Top-1 Acc (%) |
|---|---|
| B-CNN[11] | 84.1 |
| MA-CNN[6] | 86.5 |
| DFL-CNN[24] | 87.4 |
| DCL[25] | 87.8 |
| SPS[32] | 88.7 |
| DB[26] | 88.6 |
| DCAL[33] | 88.7 |
| FDL[27] | 89.0 |
| RACL-Net | 89.5 |
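As a reading aid for the comparison above, the following minimal Python sketch computes the Top-1 margin of RACL-Net over each listed method. The dictionary only restates the reported figures; the names `REPORTED_TOP1` and `margins_over_baselines` are illustrative assumptions and do not come from the original work.

```python
# Top-1 accuracy (%) exactly as reported in the comparison table.
REPORTED_TOP1 = {
    "B-CNN": 84.1,
    "MA-CNN": 86.5,
    "DFL-CNN": 87.4,
    "DCL": 87.8,
    "SPS": 88.7,
    "DB": 88.6,
    "DCAL": 88.7,
    "FDL": 89.0,
    "RACL-Net": 89.5,
}


def margins_over_baselines(results, ours="RACL-Net"):
    """Return {method: ours - method} in percentage points, rounded to 0.1."""
    ours_acc = results[ours]
    return {
        name: round(ours_acc - acc, 1)
        for name, acc in results.items()
        if name != ours
    }


if __name__ == "__main__":
    # Print one line per baseline, e.g. the gap between RACL-Net and B-CNN.
    for name, gain in margins_over_baselines(REPORTED_TOP1).items():
        print(f"{name:8s} +{gain:.1f} points")
```

On the reported figures, the smallest margin is 0.5 points (over FDL) and the largest is 5.4 points (over B-CNN).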

5. Conclusions

References

- ZHANG N, DONAHUE J, GIRSHICK R, et al. Part-based R-CNNs for fine-grained category detection[M]//European Conference on Computer Vision (ECCV). 2014: 834-849.

- BRANSON S, VAN HORN G, BELONGIE S, et al. Bird species categorization using pose normalized deep convolutional nets[EB/OL]. (2014-06-11)[2021-09-15]. https://arxiv.org/abs/1406.2952.

- LIN T Y, ROYCHOWDHURY A, MAJI S. Bilinear CNN Models for Fine-Grained Visual Recognition[C]//Proceedings of the 15th IEEE International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015: 1449-1457.

- DONAHUE J, JIA Y Q, VINYALS O, et al. DeCAF: A deep convolutional activation feature for generic visual recognition[C]//Proceedings of the 31st International Conference on Machine Learning. New York: JMLR.org, 2014: 647-655.

- FU J, ZHENG H, MEI T. Look Closer to See Better: Recurrent Attention Convolutional Neural Network for Fine-grained Image Recognition[C]//IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017: 4438-4446.

- ZHENG H, FU J, MEI T, et al. Learning Multi-attention Convolutional Neural Network for Fine-Grained Image Recognition[C]//International Conference on Computer Vision (ICCV). 2017: 5209-5217.

- SUN M, YUAN Y, ZHOU F, et al. Multi-attention multi-class constraint for fine-grained image recognition[C]//Proceedings of the European Conference on Computer Vision (ECCV). 2018: 805-821.

- WANG Y, MORARIU V I, DAVIS L S. Learning a discriminative filter bank within a CNN for fine-grained recognition[C]//IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2018: 4148-4157.

- HUANG S, XU Z, TAO D, ZHANG Y. Part-Stacked CNN for Fine-Grained Visual Categorization[C]//IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016: 1173-1182.

- GE W, LIN X, YU Y. Weakly Supervised Complementary Parts Models for Fine-Grained Image Classification From the Bottom Up[C]//IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2019: 3029-3038.

- LIN T Y, ROYCHOWDHURY A, MAJI S. Bilinear CNN Models for Fine-Grained Visual Recognition[C]//IEEE International Conference on Computer Vision (ICCV). 2015: 1449-1457.

- KONG S, FOWLKES C. Low-Rank Bilinear Pooling for Fine-Grained Classification[C]//IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017: 365-374.

- GAO Y, BEIJBOM O, ZHANG N, et al. Compact bilinear pooling[C]//IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016: 317-326.

- DUBEY A, GUPTA O, RASKAR R, et al. Maximum entropy fine-grained classification[J]. arXiv preprint arXiv:1809.05934, 2018.

- GAO Y, BEIJBOM O, ZHANG N, et al. Compact bilinear pooling[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016: 317-326.

- ZHENG H, FU J, ZHA Z, et al. Looking for the Devil in the Details: Learning Trilinear Attention Sampling Network for Fine-Grained Image Recognition[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2019: 5007-5016.

- LIU X, XIA T, WANG J, et al. Fully convolutional attention networks for fine-grained recognition[EB/OL]. (2017-03-21)[2021-11-11]. https://arxiv.org/pdf/1603.06765.pdf.

- ZHENG H L, FU J L, MEI T, et al. Learning multi-attention convolutional neural network for fine-grained image recognition[C]//Proceedings of the 2017 IEEE International Conference on Computer Vision. Piscataway: IEEE, 2017: 5219-5227.

- ZHANG L, HUANG S, LIU W, et al. Learning a Mixture of Granularity-Specific Experts for Fine-Grained Categorization[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). 2019: 8330-8339.

- Zhu Q, Kuang W, Li Z. A collaborative gated attention network for fine-grained visual classification[J]. Displays, 2023: 102468.

- Ning E, Zhang C, Wang C, et al. Pedestrian Re-ID based on feature consistency and contrast enhancement[J]. Displays, 2023: 102467.

- Wei Y, Feng J, Liang X, et al. Object region mining with adversarial erasing: A simple classification to semantic segmentation approach[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2017: 1568-1576.

- Zhou X, Li Y, Cao G, et al. Master-CAM: Multi-scale fusion guided by Master map for high-quality class activation maps[J]. Displays, 2023, 76: 102339.

- YANG Z, LUO T G, WANG D, et al. Learning to navigate for fine-grained classification[C]//Proceedings of the 15th European Conference on Computer Vision. Cham: Springer, 2018: 420-435.

- CHEN Y, BAI Y, ZHANG W, et al. Destruction and Construction Learning for Fine-Grained Image Recognition[C]//IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2019: 5152-5161.

- SUN G, CHOLAKKAL H, KHAN S, et al. Fine-Grained Recognition: Accounting for Subtle Differences between Similar Classes[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(1): 12047-12054.

- LIU C, XIE H, ZHA Z J, et al. Filtration and Distillation: Enhancing Region Attention for Fine-Grained Visual Categorization[C]//AAAI Conference on Artificial Intelligence. 2020: 11555-11562.

- LUO W, ZHANG H, LI J, et al. Learning Semantically Enhanced Feature for Fine-Grained Image Classification[J]. IEEE Signal Processing Letters, 2020, 27: 1545-1549.

- ZHAO B, WU X, FENG J, et al. Diversified Visual Attention Networks for Fine-Grained Object Classification[J]. IEEE Transactions on Multimedia, 2017, 19(6): 1245-1256.

- DUBEY A, GUPTA O, GUO P, et al. Pairwise Confusion for Fine-Grained Visual Classification[C]//European Conference on Computer Vision (ECCV). 2018: 71-88.

- CHEN Y, BAI Y, ZHANG W, et al. Destruction and Construction Learning for Fine-Grained Image Recognition[C]//IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2019: 5152-5161.

- HUANG S, WANG X, TAO D. Stochastic partial swap: Enhanced model generalization and interpretability for fine-grained recognition[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021: 620-629.

- Zhu H, Ke W, Li D, et al. Dual cross-attention learning for fine-grained visual categorization and object re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 4692-4702.

| CUB-200-2011 | Experiment 1 | Experiment 2 | Experiment 3 |
|---|---|---|---|
| Inception-V3 | √ | √ | √ |
| Strengthen network | √ | √ | |
| Complementary network | √ | | |
| Top-1 Acc (%) | 83.5 | 85.6 | 89.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).