Submitted: 03 October 2023

Posted: 09 October 2023


Abstract

Keywords:

1. Introduction

1.1. Contributions and Research Questions

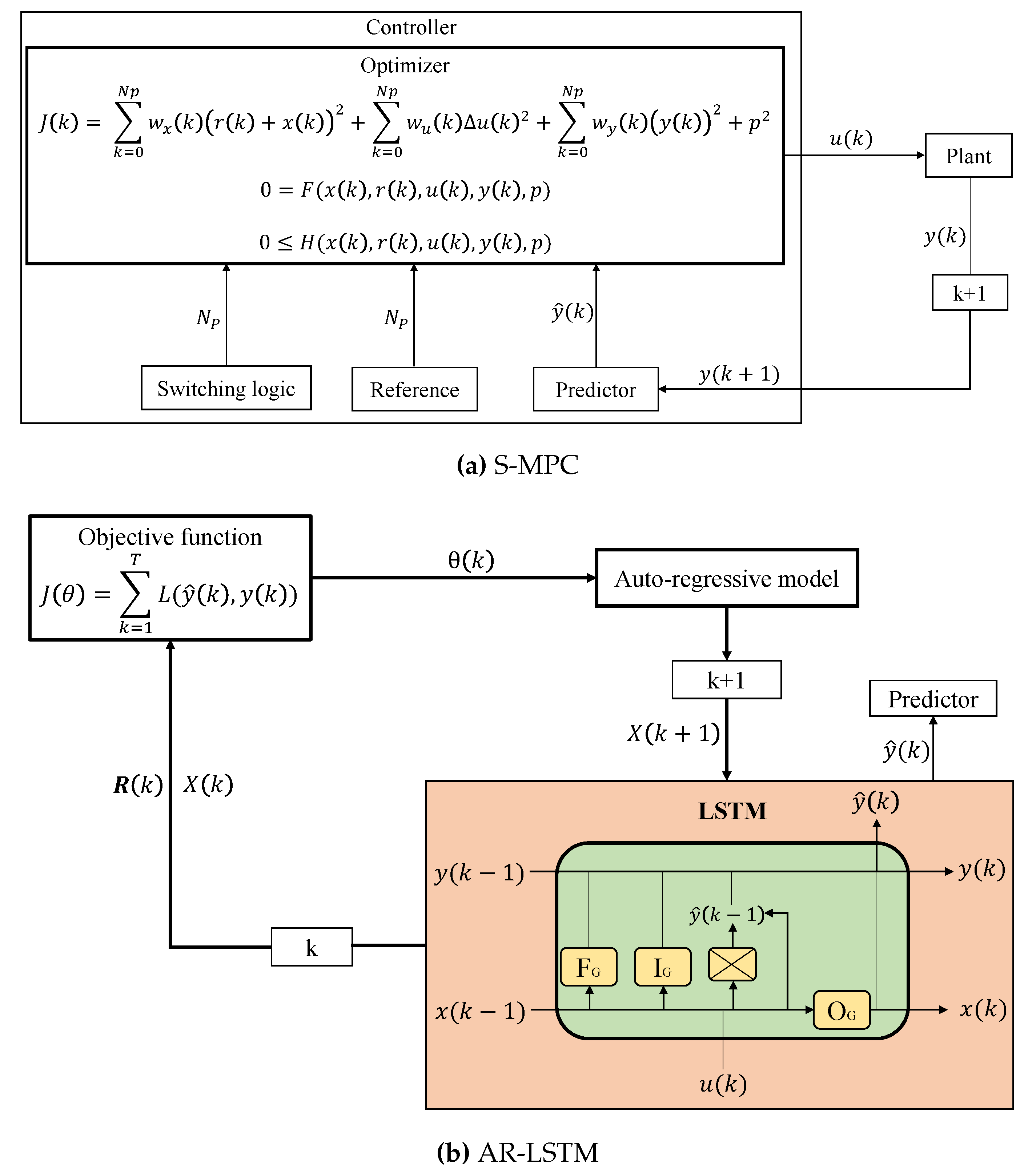

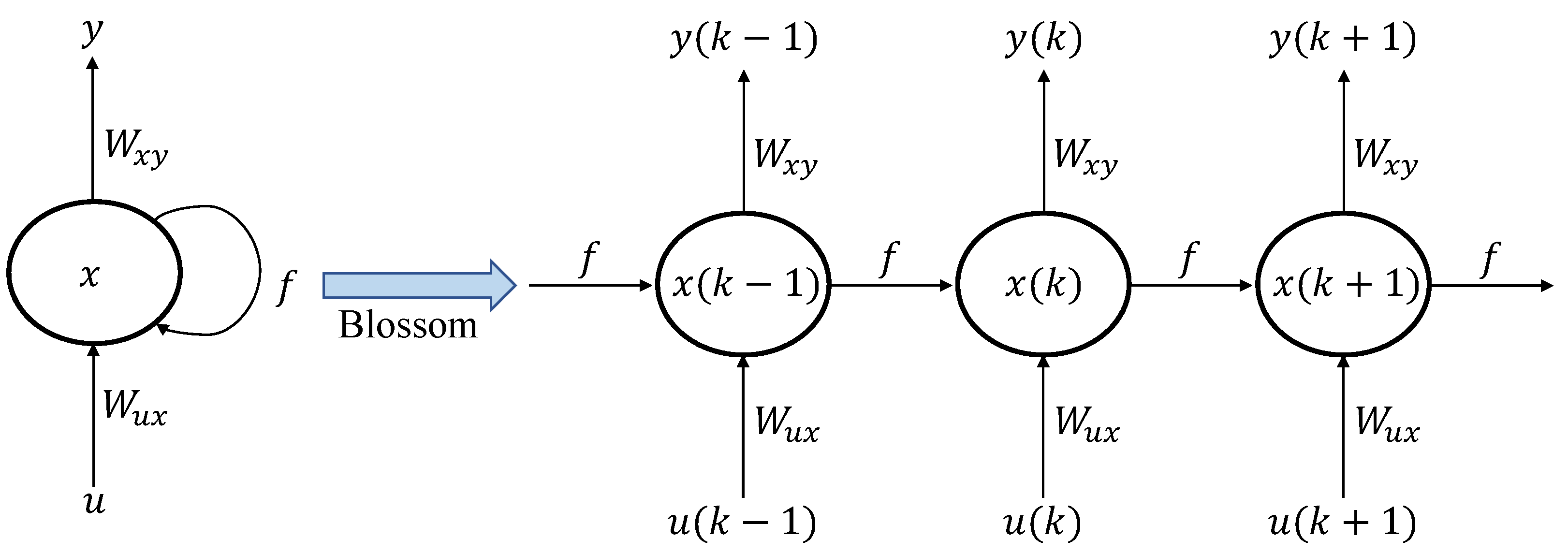

2. Identifying the distinctions between S-MPC and AR-RNN-LSTM

2.1. Strategy

2.2. Problem-solving method

2.3. Peak Performance

2.4. Computational effort

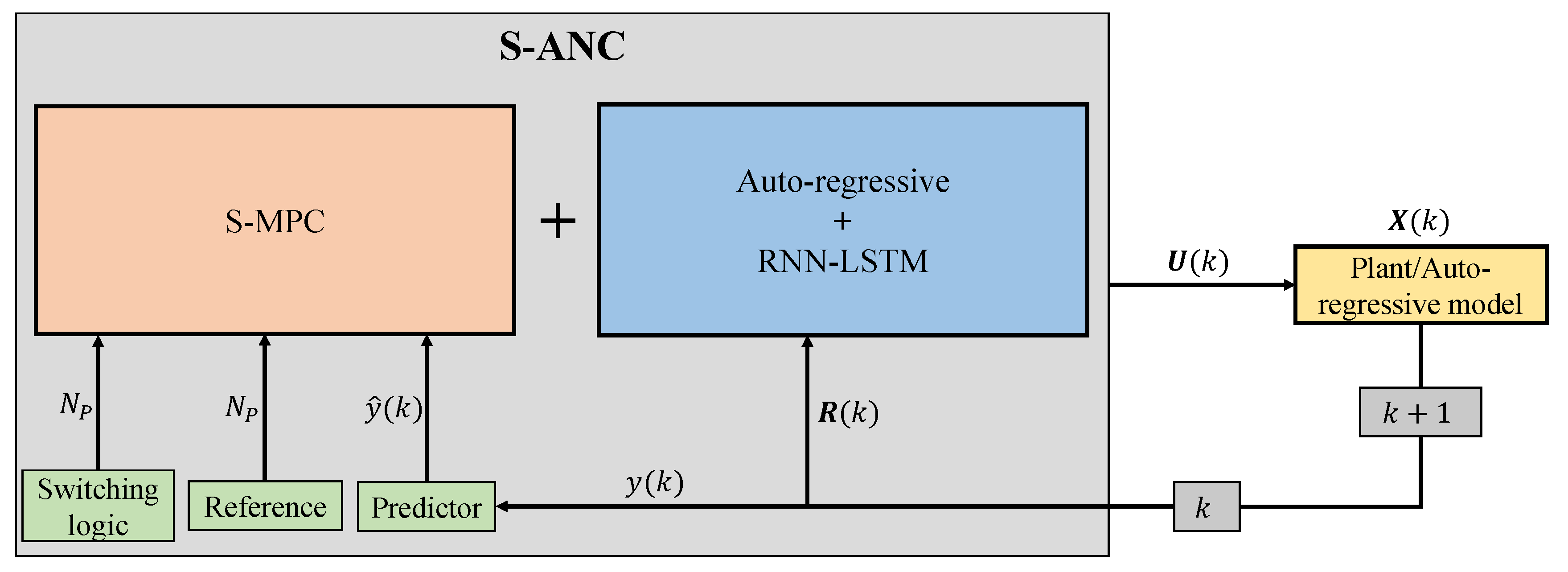

3. Switched Auto-regressive Neural Control (S-ANC)

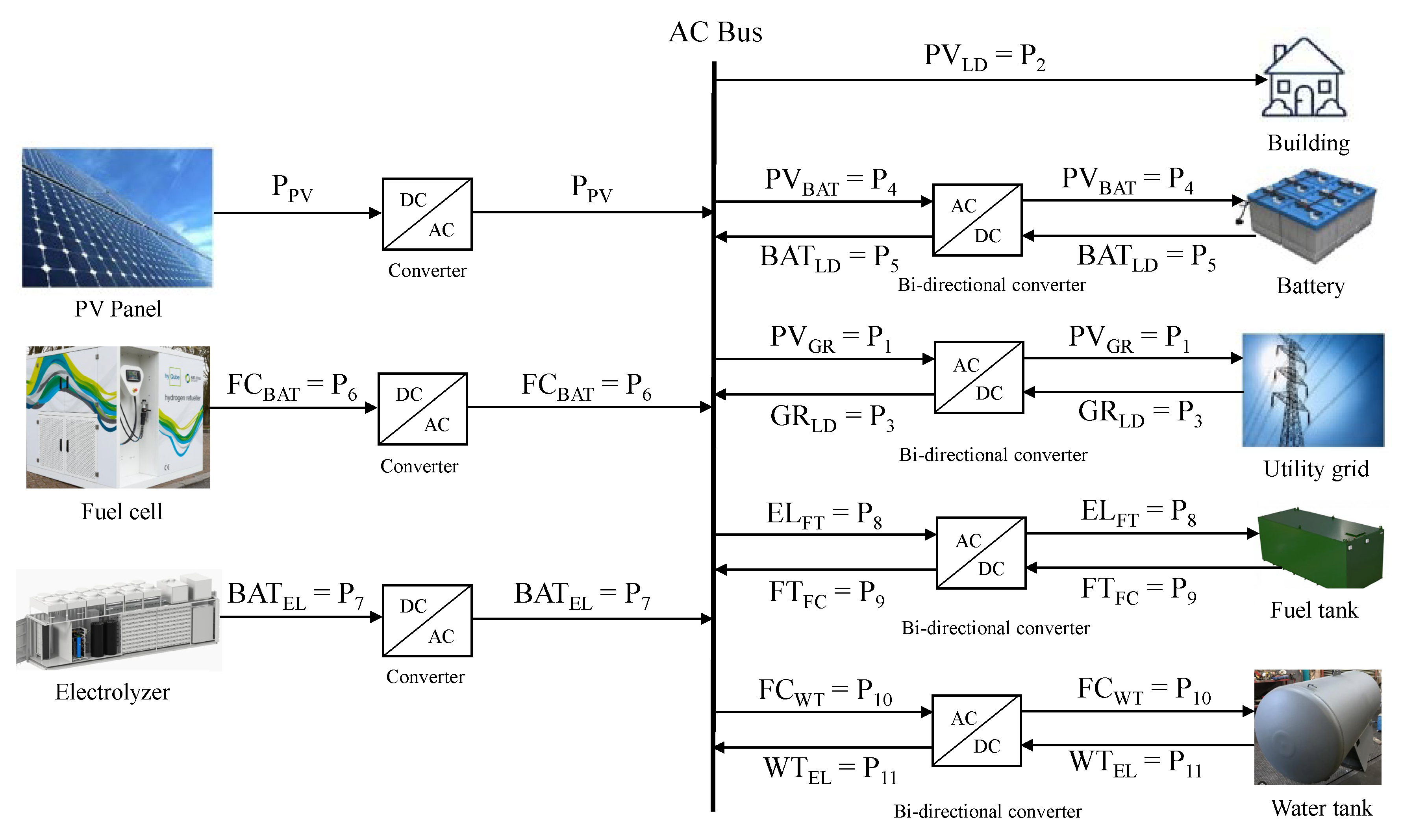

3.1. Hybrid MG description
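The nomenclature at the end of the paper lists the following for each accumulator l (battery, fuel tank, water tank): a state, a capacity in kWh, charging and discharging efficiencies, and minimum and maximum state values. The sketch below shows one common way such a state is propagated over a single time step. It assumes a simple energy balance with charging losses on inflow and discharging losses on outflow. The function names, argument names and the 1 h step are illustrative assumptions, not the paper's model.

```python
def update_accumulator_state(state, p_in, p_out, eta_c, eta_d, capacity, dt=1.0):
    """Return the next normalized state of an accumulator (hypothetical model).

    Assumed energy balance (not the paper's equation): inflow p_in is reduced
    by the charging efficiency eta_c, and outflow p_out is increased by 1/eta_d
    to cover discharging losses. Powers in kW, capacity in kWh, dt in hours.
    """
    if not (0 < eta_c <= 1 and 0 < eta_d <= 1):
        raise ValueError("efficiencies must lie in (0, 1]")
    delta_energy = (eta_c * p_in - p_out / eta_d) * dt  # kWh stored (+) or drawn (-)
    return state + delta_energy / capacity


def within_bounds(state, state_min, state_max):
    """Check the accumulator state constraint state_min <= state <= state_max."""
    return state_min <= state <= state_max


# Example: a 10 kWh battery at 50 % charged with 2 kW for one hour at 90 % efficiency.
next_state = update_accumulator_state(0.5, p_in=2.0, p_out=0.0, eta_c=0.9, eta_d=0.9, capacity=10.0)
print(round(next_state, 3))  # 0.68
print(within_bounds(next_state, 0.2, 0.9))  # True
```

In an MPC setting, `within_bounds` corresponds to the minimum and maximum state constraints, which the optimizer enforces rather than checks after the fact.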

3.2. Simple definition of the proposed method

- Model development: The S-MPC requires multiple models that represent the system's behavior in different operating modes. This requires an efficient description of the system's architecture and behavior.

- Mode detection: The S-MPC controller must be able to detect the system's current operating mode, which can be difficult in some circumstances.

- Switching logic: The S-MPC controller must select the appropriate model and control strategy based on the current operating mode and the desired performance objectives. This requires switching logic that maps the system's current state to the appropriate model and control strategy, because the objective function may differ from one operating mode to another.
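The mode detection and switching logic described above can be sketched as two steps. First, a function maps the measured operating point to a mode. Second, that mode selects its own model and objective weights. The mode names, the thresholds, the `ModeConfig` fields and the weight values are all hypothetical placeholders chosen for illustration. The paper's actual modes and objective functions are not reproduced here.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    """Hypothetical MG operating modes (illustrative only)."""
    PV_SURPLUS = "pv_surplus"      # PV generation covers the load
    PV_DEFICIT = "pv_deficit"      # load exceeds PV, storage can cover the gap
    GRID_SUPPORT = "grid_support"  # load exceeds PV, storage near its minimum


@dataclass(frozen=True)
class ModeConfig:
    """Per-mode settings: which model to use and the objective weights."""
    model_name: str
    grid_weight: float     # penalty on grid import in this mode's objective
    storage_weight: float  # penalty on storage use in this mode's objective


# Hypothetical mode-to-model/objective table: each mode may use a different objective.
MODE_TABLE = {
    Mode.PV_SURPLUS: ModeConfig("charge_model", grid_weight=1.0, storage_weight=0.1),
    Mode.PV_DEFICIT: ModeConfig("discharge_model", grid_weight=1.0, storage_weight=0.5),
    Mode.GRID_SUPPORT: ModeConfig("grid_model", grid_weight=0.2, storage_weight=2.0),
}


def detect_mode(pv_kw: float, load_kw: float, soc: float, soc_min: float = 0.2) -> Mode:
    """Mode detection: classify the current operating point with assumed rules."""
    if pv_kw >= load_kw:
        return Mode.PV_SURPLUS
    if soc > soc_min:
        return Mode.PV_DEFICIT
    return Mode.GRID_SUPPORT


def switching_logic(pv_kw: float, load_kw: float, soc: float) -> tuple[Mode, ModeConfig]:
    """Map the current state to a mode and to that mode's model and objective."""
    mode = detect_mode(pv_kw, load_kw, soc)
    return mode, MODE_TABLE[mode]


mode, config = switching_logic(pv_kw=1.5, load_kw=3.0, soc=0.15)
print(mode.name, config.model_name)  # GRID_SUPPORT grid_model
```

A lookup table keeps the mode-to-model mapping explicit. This makes it easy to add a mode without changing the detection code.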

3.3. Formal definition

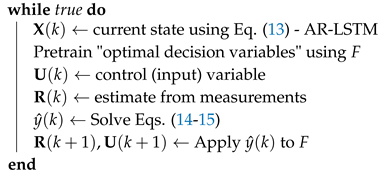

Algorithm 1: Switched Auto-regressive Neural Control (S-ANC)

Identify:

Imply:

Switching logic: Conversion of MPC into S-MPC

Solve: Objective function for S-MPC using Eq. (1)

Obtain: Optimal decision variables

Configure:
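Algorithm 1 solves the S-MPC objective given by Eq. (1). As a generic illustration only, and not a reproduction of Eq. (1), a switched MPC problem of this kind can be written with the nomenclature symbols J (objective function), F (controller model) and H (constraint) over the prediction horizon N (24 h).

Several elements are assumptions introduced for this sketch:

- the mode index $\sigma_k$;
- the switching map $\mathcal{S}$;
- the number of modes $M$;
- the quadratic weights $Q$ and $R$.

For simplicity, the active mode is held fixed over the horizon.

$$
\begin{aligned}
\min_{u_k,\,\dots,\,u_{k+N-1}} \quad & J_{\sigma_k} = \sum_{i=0}^{N-1} \left( x_{k+i}^{\top} Q_{\sigma_k}\, x_{k+i} + u_{k+i}^{\top} R_{\sigma_k}\, u_{k+i} \right) \\
\text{s.t.} \quad & x_{k+i+1} = F_{\sigma_k}\!\left(x_{k+i},\, u_{k+i}\right), \qquad i = 0, \dots, N-1, \\
& H_{\sigma_k}\!\left(x_{k+i},\, u_{k+i}\right) \le 0, \qquad i = 0, \dots, N-1, \\
& \sigma_k = \mathcal{S}(x_k) \in \{1, \dots, M\}.
\end{aligned}
$$

When $F$ is linear and $H$ is affine, each fixed mode yields a QP. This is consistent with solving the MPC with QP in the procedure of this section.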

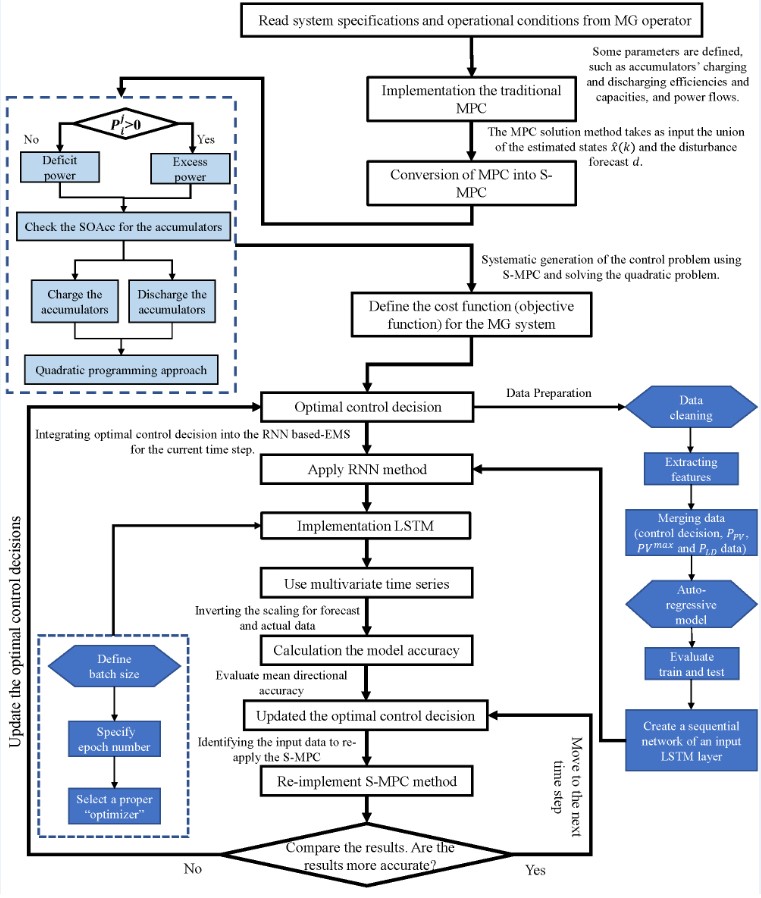

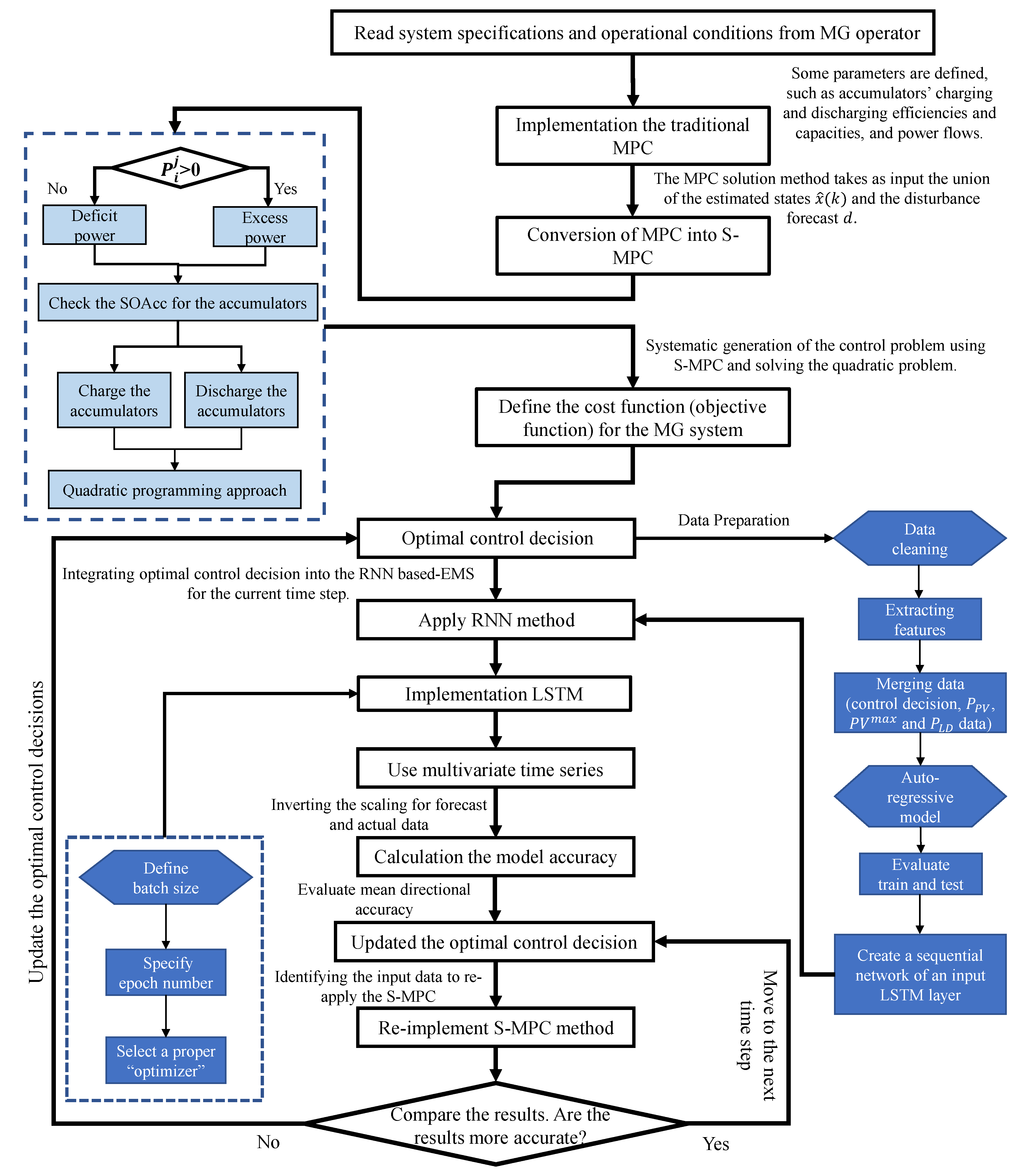

- Initialize the system specifications and operating conditions provided by the MG operator.

- Formulate the control problem systematically and solve it with the MPC using QP.

- Using switching logic, automatically convert the MPC into the S-MPC.

- The optimal control decisions are obtained.

- The optimal control decisions are employed as input data for the AR method.

- Data preparation is initiated. This step comprises several tasks, such as data cleaning, feature extraction, and merging the input data with the PV constraints.

- The AR model is implemented to increase the accuracy of the proposed method.

- The multivariate time series is then employed.

- The training and test data are then selected and evaluated.

- To place the LSTM layer after the RNN, a sequential network with an input LSTM layer is built.

- In this step (implementation of the LSTM), several parameters are defined, including the batch size, the number of epochs, and the type of optimizer.

- Before the model accuracy is calculated, the scaling of the forecast and actual data is inverted.

- The model accuracy is calculated using several metrics, such as mean directional accuracy.

- Integrate the S-MPC and AR-LSTM controllers into a closed-loop control system by connecting the RNN output to the MPC controller’s input and the MPC controller’s output to the MG system’s input.

- Then, the optimal control decisions and references are updated. In other words, , , and are re-evaluated depending on the model accuracy.

- If the accuracy is not satisfactory, the S-MPC is re-applied with the updated control decisions.
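The AR and train/test steps of this procedure can be sketched in plain Python. An AR(p) model, y_k = c + a_1 y_{k-1} + ... + a_p y_{k-p} + e_k, is fitted by ordinary least squares on a chronological training split. The fitted model then produces one-step-ahead forecasts on the test split.

Several details are assumptions for illustration:

- the least-squares estimator;
- the 80/20 split;
- all function names.

The paper's own AR configuration and its multivariate LSTM stage are not reproduced.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ar(series, p):
    """Fit y_k = c + sum_j a_j * y_{k-j} by least squares; return [c, a_1, ..., a_p]."""
    rows = [[1.0] + [series[k - j] for j in range(1, p + 1)] for k in range(p, len(series))]
    targets = [series[k] for k in range(p, len(series))]
    n = p + 1
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(n)]
    return solve_linear_system(xtx, xty)


def predict_next(history, coeffs):
    """One-step-ahead AR forecast from the most recent p values of history."""
    c, a = coeffs[0], coeffs[1:]
    return c + sum(a_j * history[-j] for j, a_j in enumerate(a, start=1))


def train_test_split(series, train_fraction=0.8):
    """Chronological split without shuffling, to respect time order."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


# Synthetic noise-free AR(1) series: y_k = 1 + 0.5 * y_{k-1}.
series = [0.0]
for _ in range(49):
    series.append(1.0 + 0.5 * series[-1])

train, test = train_test_split(series)
coeffs = fit_ar(train, p=1)
history = list(train)
forecasts = []
for actual in test:
    forecasts.append(predict_next(history, coeffs))
    history.append(actual)  # walk forward using the observed value
print([round(v, 3) for v in coeffs])  # [1.0, 0.5]
```

Gaussian elimination on the normal equations is used here only to keep the sketch dependency-free. A library least-squares routine would normally be preferred because it is better conditioned.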

4. Results and Discussions

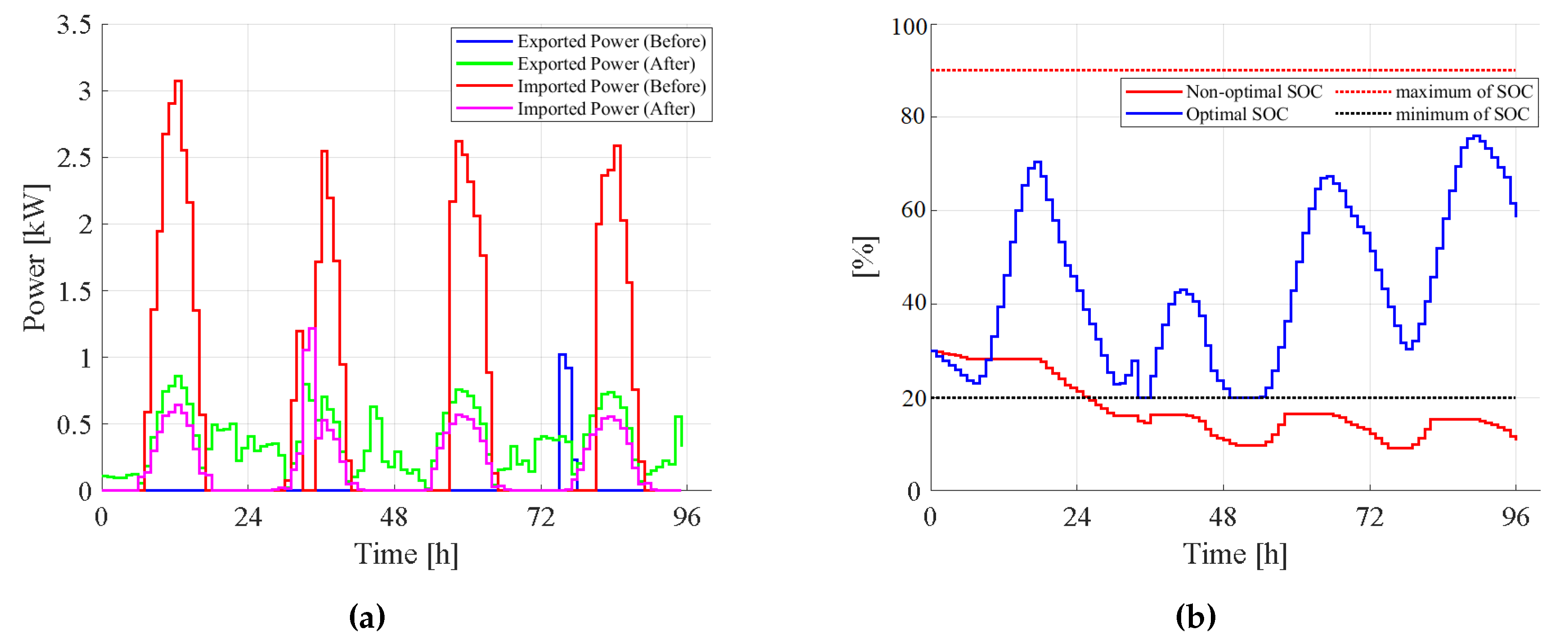

4.1. Case 1: The implementation of S-MPC

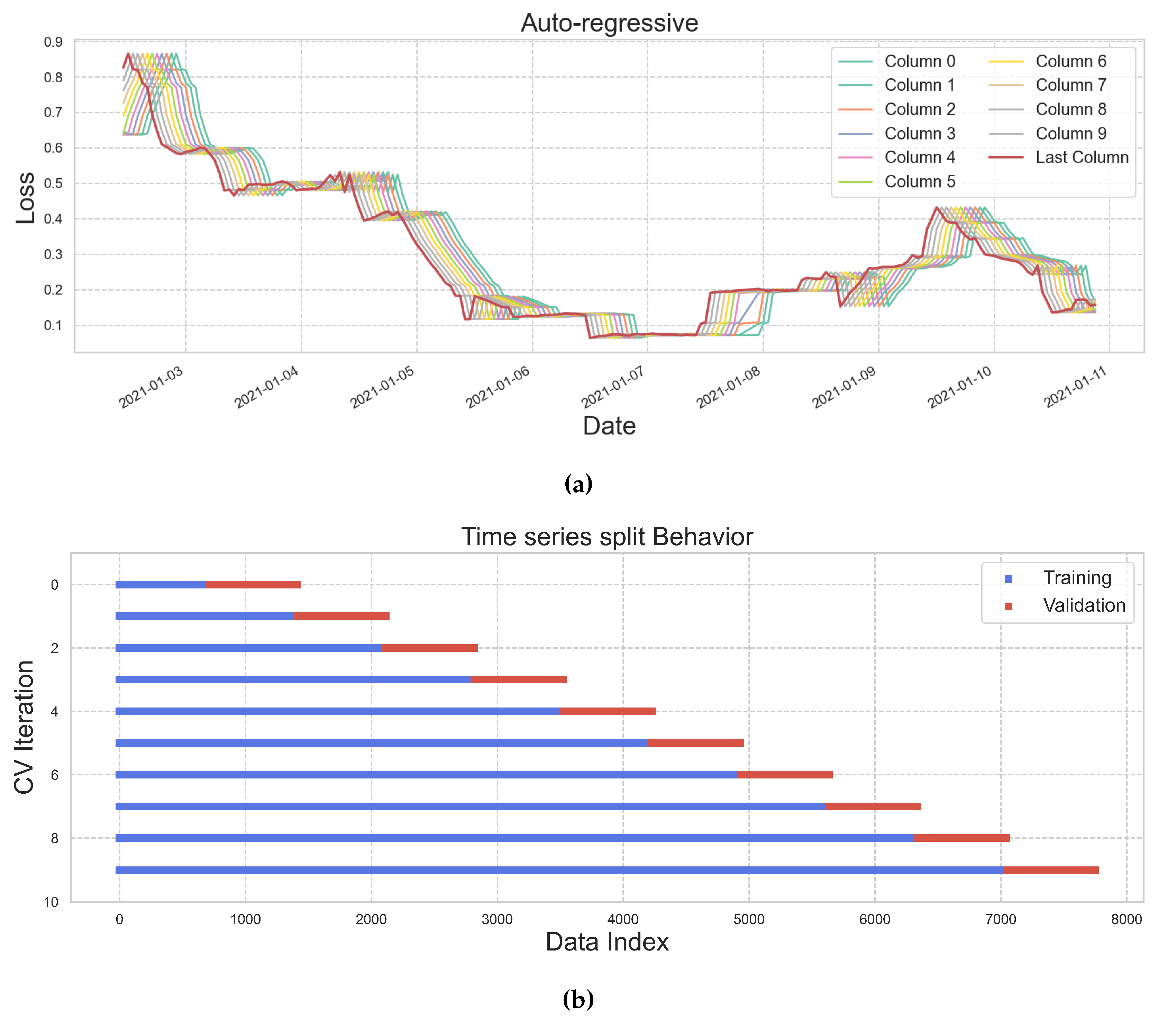

4.2. Case 2: The implementation of the merged S-MPC and AR

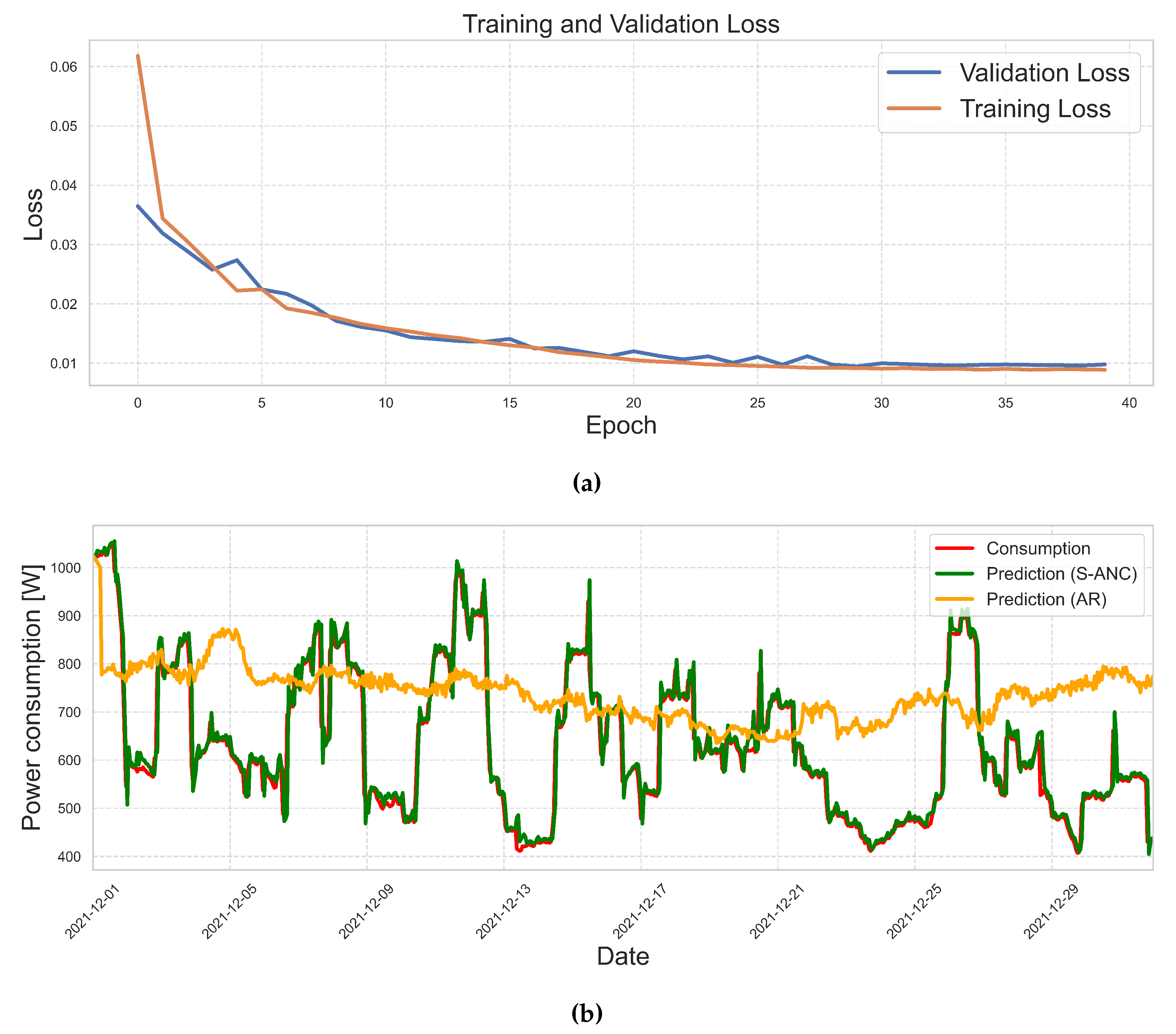

4.3. Case 3: The implementation of the S-ANC

4.4. Calculation of model accuracy
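The procedure in Section 3 inverts the scaling of the forecasts and actual values before computing accuracy metrics such as mean directional accuracy (MDA). MAE and MSE also appear in the abbreviations.

The sketch below makes two assumptions:

- min-max scaling to [0, 1];
- the common MDA definition, i.e. the share of steps where the forecast moves from the previous actual value in the same direction as the actual value does.

The function names and example values are hypothetical.

```python
def minmax_fit(values):
    """Return (min, max) of the training data, used as the scaling bounds."""
    return min(values), max(values)


def minmax_scale(values, lo, hi):
    """Map values to [0, 1] with the fitted bounds (assumes hi > lo)."""
    return [(v - lo) / (hi - lo) for v in values]


def minmax_inverse(scaled, lo, hi):
    """Undo min-max scaling so that metrics are computed in physical units."""
    return [s * (hi - lo) + lo for s in scaled]


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def mse(actual, forecast):
    """Mean squared error."""
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def _sign(x):
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def mda(actual, forecast):
    """Mean directional accuracy: share of steps t >= 1 where the forecast
    moves from actual[t-1] in the same direction as actual[t] does."""
    hits = sum(
        _sign(actual[t] - actual[t - 1]) == _sign(forecast[t] - actual[t - 1])
        for t in range(1, len(actual))
    )
    return hits / (len(actual) - 1)


# Hypothetical example: bounds fitted on training data, forecasts produced in
# scaled units, then inverted before the metrics are computed.
lo, hi = minmax_fit([0.0, 8.0, 2.0])
actual = [2.0, 4.0, 3.0, 5.0]
forecast = minmax_inverse([0.3125, 0.4375, 0.5, 0.5625], lo, hi)
print(forecast)  # [2.5, 3.5, 4.0, 4.5]
print(mae(actual, forecast), mse(actual, forecast), round(mda(actual, forecast), 3))  # 0.625 0.4375 0.667
```

The scaling bounds are fitted on training data only, so that test data do not leak into preprocessing.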

5. Conclusions

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| AR | Auto-regressive |
| AR-LSTM | Auto-regressive Long Short-Term Memory |
| ARIMA | Auto-regressive Integrated Moving Average |
| ARMA | Auto-regressive Moving Average |
| BAT | Battery |
| BPTT | Back-Propagation Through Time |
| CNN | Convolutional Neural Network |
| CV | Cross-validation |
| DLC | Direct Load Control |
| EL | Electrolyzer |
| EM | Energy Management |
| ESS | Energy Storage System |
| FC | Fuel Cell |
| FT | Fuel Tank |
| GHI | Global Horizontal Irradiance |
| GR | Grid |
| GRU | Gated Recurrent Unit |
| IoT | Internet of Things |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MG | Microgrid |
| MSE | Mean Squared Error |
| MILP | Mixed Integer Linear Programming |
| ML | Machine Learning |
| MPC | Model Predictive Control |
| NARX | Nonlinear Auto-regressive with exogenous input |
| NMG | Networked Microgrid |
| PAR | Peak-to-average Ratio |
| RNN | Recurrent Neural Network |
| RES | Renewable Energy Source |
| S-ANC | Switched Auto-regressive Neural Control |
| S-MPC | Switched Model Predictive Control |
| SVM | Support Vector Machine |
| QP | Quadratic Programming |
| WT | Water Tank |

| Symbol | Description |
|---|---|
| | Charging efficiency of accumulator l |
| | Discharging efficiency of accumulator l |
| F | Controller model |
| H | Constraint |
| J | Objective function |
| | Maximum values of power flows, 5 kW |
| | Photovoltaic |
| | Power flow from PV to grid |
| | Power flow from PV to load |
| | Power flow from grid to load |
| | Power flow from PV to battery |
| | Power flow from battery to load |
| | Power flow from fuel cell to battery |
| | Power flow from battery to electrolyzer |
| | Hydrogen flow from electrolyzer to fuel tank |
| | Hydrogen flow from fuel tank to fuel cell |
| | Water flow from fuel cell to water tank |
| | Water flow from water tank to electrolyzer |
| | Flow of j from node a to node b |
| | Capacities of accumulator l [kWh] |
| | Power of j from node a to node b |
| | Auto-regressive model coefficient |
| | Prediction horizon, 24 h |
| | State of accumulator l |
| | Maximum value of the state of accumulator l |
| | Minimum value of the state of accumulator l |
| | Error term (random noise) at time k |

References

1. Kumar, A.S.; Ahmad, Z. Model predictive control (MPC) and its current issues in chemical engineering. Chemical Engineering Communications 2012, 199, 472–511.
2. Garcia, C.E.; Prett, D.M.; Morari, M. Model predictive control: Theory and practice—A survey. Automatica 1989, 25, 335–348.
3. Pamulapati, T.; Cavus, M.; Odigwe, I.; Allahham, A.; Walker, S.; Giaouris, D. A Review of Microgrid Energy Management Strategies from the Energy Trilemma Perspective. Energies 2022, 16, 289.
4. Parisio, A.; Rikos, E.; Glielmo, L. A model predictive control approach to microgrid operation optimization. IEEE Transactions on Control Systems Technology 2014, 22, 1813–1827.
5. Ulutas, A.; Altas, I.H.; Onen, A.; Ustun, T.S. Neuro-fuzzy-based model predictive energy management for grid connected microgrids. Electronics 2020, 9, 900.
6. Silvente, J.; Kopanos, G.M.; Dua, V.; Papageorgiou, L.G. A rolling horizon approach for optimal management of microgrids under stochastic uncertainty. Chemical Engineering Research and Design 2018, 131, 293–317.
7. Parisio, A.; Rikos, E.; Glielmo, L. Stochastic model predictive control for economic/environmental operation management of microgrids: An experimental case study. Journal of Process Control 2016, 43, 24–37.
8. Garcia-Torres, F.; Bordons, C. Optimal economical schedule of hydrogen-based microgrids with hybrid storage using model predictive control. IEEE Transactions on Industrial Electronics 2015, 62, 5195–5207.
9. Jayachandran, M.; Ravi, G. Decentralized model predictive hierarchical control strategy for islanded AC microgrids. Electric Power Systems Research 2019, 170, 92–100.
10. Cavus, M.; Allahham, A.; Adhikari, K.; Zangiabadi, M.; Giaouris, D. Control of microgrids using an enhanced Model Predictive Controller. PEMD 2022.
11. Cavus, M.; Allahham, A.; Adhikari, K.; Zangiabadi, M.; Giaouris, D. Energy Management of Grid-Connected Microgrids using an Optimal Systems Approach. IEEE Access 2023.
12. Zhu, B.; Tazvinga, H.; Xia, X. Switched model predictive control for energy dispatching of a photovoltaic-diesel-battery hybrid power system. IEEE Transactions on Control Systems Technology 2014, 23, 1229–1236.
13. Maślak, G.; Orłowski, P. Microgrid operation optimization using hybrid system modeling and switched model predictive control. Energies 2022, 15, 833.
14. Moness, M.; Moustafa, A.M. Real-time switched model predictive control for a cyber-physical wind turbine emulator. IEEE Transactions on Industrial Informatics 2019, 16, 3807–3817.
15. Pervez, M.; Kamal, T.; Fernández-Ramírez, L.M. A novel switched model predictive control of wind turbines using artificial neural network-Markov chains prediction with load mitigation. Ain Shams Engineering Journal 2022, 13, 101577.
16. Magni, L.; Scattolini, R.; Tanelli, M. Switched model predictive control for performance enhancement. International Journal of Control 2008, 81, 1859–1869.
17. Aguilera, R.P.; Lezana, P.; Quevedo, D.E. Switched model predictive control for improved transient and steady-state performance. IEEE Transactions on Industrial Informatics 2015, 11, 968–977.
18. Kwadzogah, R.; Zhou, M.; Li, S. Model predictive control for HVAC systems—A review. 2013 IEEE International Conference on Automation Science and Engineering (CASE). IEEE, 2013, pp. 442–447.
19. Forbes, M.G.; Patwardhan, R.S.; Hamadah, H.; Gopaluni, R.B. Model predictive control in industry: Challenges and opportunities. IFAC-PapersOnLine 2015, 48, 531–538.
20. Teimourzadeh, S.; Tor, O.B.; Cebeci, M.E.; Bara, A.; Oprea, S.V. A three-stage approach for resilience-constrained scheduling of networked microgrids. Journal of Modern Power Systems and Clean Energy 2019, 7, 705–715.
21. Oprea, S.V.; Bâra, A. Edge and fog computing using IoT for direct load optimization and control with flexibility services for citizen energy communities. Knowledge-Based Systems 2021, 228, 107293.
22. Bodong, S.; Wiseong, J.; Chengmeng, L.; Khakichi, A. Economic management and planning based on a probabilistic model in a multi-energy market in the presence of renewable energy sources with a demand-side management program. Energy 2023, 126549.
23. Wynn, S.L.L.; Boonraksa, T.; Boonraksa, P.; Pinthurat, W.; Marungsri, B. Decentralized Energy Management System in Microgrid Considering Uncertainty and Demand Response. Electronics 2023, 12, 237.
24. Sansa, I.; Boussaada, Z.; Bellaaj, N.M. Solar Radiation Prediction Using a Novel Hybrid Model of ARMA and NARX. Energies 2021, 14, 6920.
25. Brahma, B.; Wadhvani, R. Solar irradiance forecasting based on deep learning methodologies and multi-site data. Symmetry 2020, 12, 1830.
26. Jeon, B.K.; Kim, E.J. Next-day prediction of hourly solar irradiance using local weather forecasts and LSTM trained with non-local data. Energies 2020, 13, 5258.
27. Husein, M.; Chung, I.Y. Day-ahead solar irradiance forecasting for microgrids using a long short-term memory recurrent neural network: A deep learning approach. Energies 2019, 12, 1856.
28. Zafar, R.; Vu, B.H.; Husein, M.; Chung, I.Y. Day-Ahead Solar Irradiance Forecasting Using Hybrid Recurrent Neural Network with Weather Classification for Power System Scheduling. Applied Sciences 2021, 11, 6738.
29. Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.
30. Srivastava, S.; Lessmann, S. A comparative study of LSTM neural networks in forecasting day-ahead global horizontal irradiance with satellite data. Solar Energy 2018, 162, 232–247.
31. Wang, F.; Yu, Y.; Zhang, Z.; Li, J.; Zhen, Z.; Li, K. Wavelet decomposition and convolutional LSTM networks based improved deep learning model for solar irradiance forecasting. Applied Sciences 2018, 8, 1286.
32. Ghimire, S.; Deo, R.C.; Raj, N.; Mi, J. Deep solar radiation forecasting with convolutional neural network and long short-term memory network algorithms. Applied Energy 2019, 253, 113541.
33. Huang, J.; Korolkiewicz, M.; Agrawal, M.; Boland, J. Forecasting solar radiation on an hourly time scale using a Coupled AutoRegressive and Dynamical System (CARDS) model. Solar Energy 2013, 87, 136–149.
34. Jiang, Y.; Long, H.; Zhang, Z.; Song, Z. Day-ahead prediction of bihourly solar radiance with a Markov switch approach. IEEE Transactions on Sustainable Energy 2017, 8, 1536–1547.
35. Ekici, B.B. A least squares support vector machine model for prediction of the next day solar insolation for effective use of PV systems. Measurement 2014, 50, 255–262.
36. Yu, Y.; Cao, J.; Zhu, J. An LSTM short-term solar irradiance forecasting under complicated weather conditions. IEEE Access 2019, 7, 145651–145666.
37. Wojtkiewicz, J.; Hosseini, M.; Gottumukkala, R.; Chambers, T.L. Hour-ahead solar irradiance forecasting using multivariate gated recurrent units. Energies 2019, 12, 4055.
38. Zang, H.; Liu, L.; Sun, L.; Cheng, L.; Wei, Z.; Sun, G. Short-term global horizontal irradiance forecasting based on a hybrid CNN-LSTM model with spatiotemporal correlations. Renewable Energy 2020, 160, 26–41.
39. Gao, B.; Huang, X.; Shi, J.; Tai, Y.; Zhang, J. Hourly forecasting of solar irradiance based on CEEMDAN and multi-strategy CNN-LSTM neural networks. Renewable Energy 2020, 162, 1665–1683.
40. Connor, J.; Atlas, L. Recurrent neural networks and time series prediction. IJCNN-91-Seattle International Joint Conference on Neural Networks. IEEE, 1991, Vol. 1, pp. 301–306.
41. Brownlee, J. Time series prediction with LSTM recurrent neural networks in Python with Keras. Machine Learning Mastery 2016, 18.
42. Moreno, J.J.M. Artificial neural networks applied to forecasting time series. Psicothema 2011, 23, 322–329.
43. Shen, Z.; Zhang, Y.; Lu, J.; Xu, J.; Xiao, G. A novel time series forecasting model with deep learning. Neurocomputing 2020, 396, 302–313.
44. Bianchi, F.M.; Maiorino, E.; Kampffmeyer, M.C.; Rizzi, A.; Jenssen, R. Recurrent neural networks for short-term load forecasting: an overview and comparative analysis; Springer, 2017.
45. Kumar, D.; Mathur, H.; Bhanot, S.; Bansal, R.C. Forecasting of solar and wind power using LSTM RNN for load frequency control in isolated microgrid. International Journal of Modelling and Simulation 2021, 41, 311–323.
46. Li, D.; Tan, Y.; Zhang, Y.; Miao, S.; He, S. Probabilistic forecasting method for mid-term hourly load time series based on an improved temporal fusion transformer model. International Journal of Electrical Power & Energy Systems 2023, 146, 108743.
47. DiPietro, R.; Hager, G.D. Deep learning: RNNs and LSTM. In Handbook of Medical Image Computing and Computer Assisted Intervention; Elsevier, 2020; pp. 503–519.
48. Gupta, A.; Gurrala, G.; Sastry, P.S. Instability Prediction in Power Systems using Recurrent Neural Networks. IJCAI, 2017, pp. 1795–1801.
49. Huo, Y.; Chen, Z.; Bu, J.; Yin, M. Learning assisted column generation for model predictive control based energy management in microgrids. Energy Reports 2023, 9, 88–97.
50. Cabrera-Tobar, A.; Massi Pavan, A.; Petrone, G.; Spagnuolo, G. A Review of the Optimization and Control Techniques in the Presence of Uncertainties for the Energy Management of Microgrids. Energies 2022, 15, 9114.
51. Zhou, Y. Advances of machine learning in multi-energy district communities–mechanisms, applications and perspectives. Energy AI 2022, 10, 100187.
52. Li, B.; Roche, R. Optimal scheduling of multiple multi-energy supply microgrids considering future prediction impacts based on model predictive control. Energy 2020, 197, 117180.
53. Nyong-Bassey, B.E.; Giaouris, D.; Patsios, C.; Papadopoulou, S.; Papadopoulos, A.I.; Walker, S.; Voutetakis, S.; Seferlis, P.; Gadoue, S. Reinforcement learning based adaptive power pinch analysis for energy management of stand-alone hybrid energy storage systems considering uncertainty. Energy 2020, 193, 116622.
54. Tang, W.; Zhang, Y.J. A model predictive control approach for low-complexity electric vehicle charging scheduling: Optimality and scalability. IEEE Transactions on Power Systems 2016, 32, 1050–1063.
55. Karamanakos, P.; Geyer, T.; Kennel, R. A computationally efficient model predictive control strategy for linear systems with integer inputs. IEEE Transactions on Control Systems Technology 2015, 24, 1463–1471.
56. Zhang, L.; Zhuang, S.; Braatz, R.D. Switched model predictive control of switched linear systems: Feasibility, stability and robustness. Automatica 2016, 67, 8–21.
57. Ayumi, V.; Rere, L.R.; Fanany, M.I.; Arymurthy, A.M. Optimization of convolutional neural network using microcanonical annealing algorithm. 2016 International Conference on Advanced Computer Science and Information Systems (ICACSIS). IEEE, 2016, pp. 506–511.
58. Liu, C.T.; Wu, Y.H.; Lin, Y.S.; Chien, S.Y. Computation-performance optimization of convolutional neural networks with redundant kernel removal. 2018 IEEE International Symposium on Circuits and Systems (ISCAS). IEEE, 2018, pp. 1–5.
59. Huang, R.; Wei, C.; Wang, B.; Yang, J.; Xu, X.; Wu, S.; Huang, S. Well performance prediction based on Long Short-Term Memory (LSTM) neural network. Journal of Petroleum Science and Engineering 2022, 208, 109686.
60. Zhang, Y.; Hao, X.; Liu, Y. Simplifying long short-term memory for fast training and time series prediction. Journal of Physics: Conference Series. IOP Publishing, 2019, Vol. 1213, p. 042039.
61. Schmidt, R.M. Recurrent neural networks (RNNs): A gentle introduction and overview. arXiv preprint arXiv:1912.05911, 2019.
62. Amidi, A.; Amidi, S. VIP cheatsheet: Recurrent neural networks, 2018.
63. Ruder, S. An overview of gradient descent optimization algorithms. arXiv preprint arXiv:1609.04747, 2016.
64. Görges, D. Relations between model predictive control and reinforcement learning. IFAC-PapersOnLine 2017, 50, 4920–4928.
65. Ying, X. An overview of overfitting and its solutions. Journal of Physics: Conference Series. IOP Publishing, 2019, Vol. 1168, p. 022022.
66. Giaouris, D.; Papadopoulos, A.I.; Patsios, C.; Walker, S.; Ziogou, C.; Taylor, P.; Voutetakis, S.; Papadopoulou, S.; Seferlis, P. A systems approach for management of microgrids considering multiple energy carriers, stochastic loads, forecasting and demand side response. Applied Energy 2018, 226, 546–559.
67. Cavus, M.; Allahham, A.; Adhikari, K.; Giaouris, D. A Hybrid Method Based on Logic Control and Model Predictive Control for Synthesizing Controller for Flexible Hybrid Microgrid with Plug-and-Play Capabilities. Available at SSRN 447 3008.
68. Chicco, D.; Warrens, M.J.; Jurman, G. The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation. PeerJ Computer Science 2021, 7, e623.
69. Kong, X.; Du, X.; Xu, Z.; Xue, G. Predicting solar radiation for space heating with thermal storage system based on temporal convolutional network-attention model. Applied Thermal Engineering 2023, 219, 119574.

| Control Method | Optimality | Computational Time | Multiple Models | Adaptability | Constraints |
|---|---|---|---|---|---|
| MPC [54,55] | ✓ | High | × | Good | ✓ |
| S-MPC [10,11,15,56] | ✓ | Moderate | ✓ | Outstanding | ✓ |
| DLC [21] | ✓ | Moderate | × | Good | ✓ |
| AR [24] | × | Moderate | × | Poor | × |
| CNN [57,58] | × | Low | × | Poor | × |
| RNN-LSTM [59,60] | ✓ | Low | × | Poor | × |
| S-ANC | ✓ | Low | ✓ | Outstanding | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).