1. Introduction

Incorporating spatial and temporal information into real-time video quality assessment is computationally demanding, yet such information is useful for a wide range of applications when it correlates well with human perception. The visibility of impairments depends on the spatial and temporal properties of the visual content; moreover, estimating spatial and temporal complexity while accounting for the characteristics of the human visual system is expensive and time-consuming.

2. Reference works of ITU Recommendations

Our data-screening methodology is limited to visual content and excludes audio, because the test video sequences were analyzed at frame or macroblock level, where the complexity of the motion content is essential and the audio component is negligible. The results of subjective assessment depend largely on factors such as the selection of test video sequences and a well-defined evaluation procedure. In our research work, we carefully followed the specifications recommended in ITU-R BT.500-10 [1] and the VQEG Hybrid test plan, which are explained briefly in the following sections.

a) Test Video Sequences: Shahid et al. [2] selected six different video sequences at CIF and QCIF spatial resolutions in raw progressive format, chosen for their different motion content and covering various levels of spatial-temporal complexity, as recommended by ITU-T P.910.

b) Specifications of Data Screening Methodology: Human observers assessed the sequences in a laboratory viewing environment as specified by ITU-R BT.500-12, using the Single Stimulus Continuous Quality Evaluation (SSCQE) process, selected from among the single-stimulus and stimulus-comparison quality evaluation methods described in that Recommendation.

3. Estimation of Motion Dynamics while considering User Experience (UX)

We investigated unidentified errors at the decoder side caused by missing motion vectors in the reconstructed frames. These errors increase the computational complexity associated with the motion vectors, and they are caused neither by poor coding or compression nor by delay. Moreover, under our assumptions, if the size of a B frame is below a predetermined threshold, the motion-intensity feature traced by the user-experience analysis should not be considered. A decision-making tree therefore decides, based on this hypothesis, whether or not to include the motion-intensity feature among the extracted features.
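The B-frame rule described above can be sketched as a single decision node. This is a minimal illustration under stated assumptions: the `FrameFeatures` container, the `select_features` function, and the threshold value used in the usage note are hypothetical, and motion intensity is assumed to be precomputed per frame.

```python
from dataclasses import dataclass


@dataclass
class FrameFeatures:
    """Hypothetical per-frame feature container."""
    frame_type: str          # 'I', 'P' or 'B'
    size_bytes: int          # coded size of the frame in bytes
    motion_intensity: float  # precomputed motion intensity of the frame


def select_features(frame: FrameFeatures, b_size_threshold: int) -> dict:
    """Decision node: omit motion intensity for B frames below the size threshold."""
    features = {"frame_type": frame.frame_type, "size_bytes": frame.size_bytes}
    if frame.frame_type == "B" and frame.size_bytes < b_size_threshold:
        # Rule stated in the text: do not consider motion intensity
        # for B frames smaller than the predetermined threshold.
        return features
    features["motion_intensity"] = frame.motion_intensity
    return features
```

With a hypothetical threshold of 500 bytes, `select_features(FrameFeatures("B", 180, 2.4), 500)` returns only the frame type and size, while a 950-byte B frame or any P frame keeps its motion-intensity value.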

4. Translations of Recurrent Neural Networks

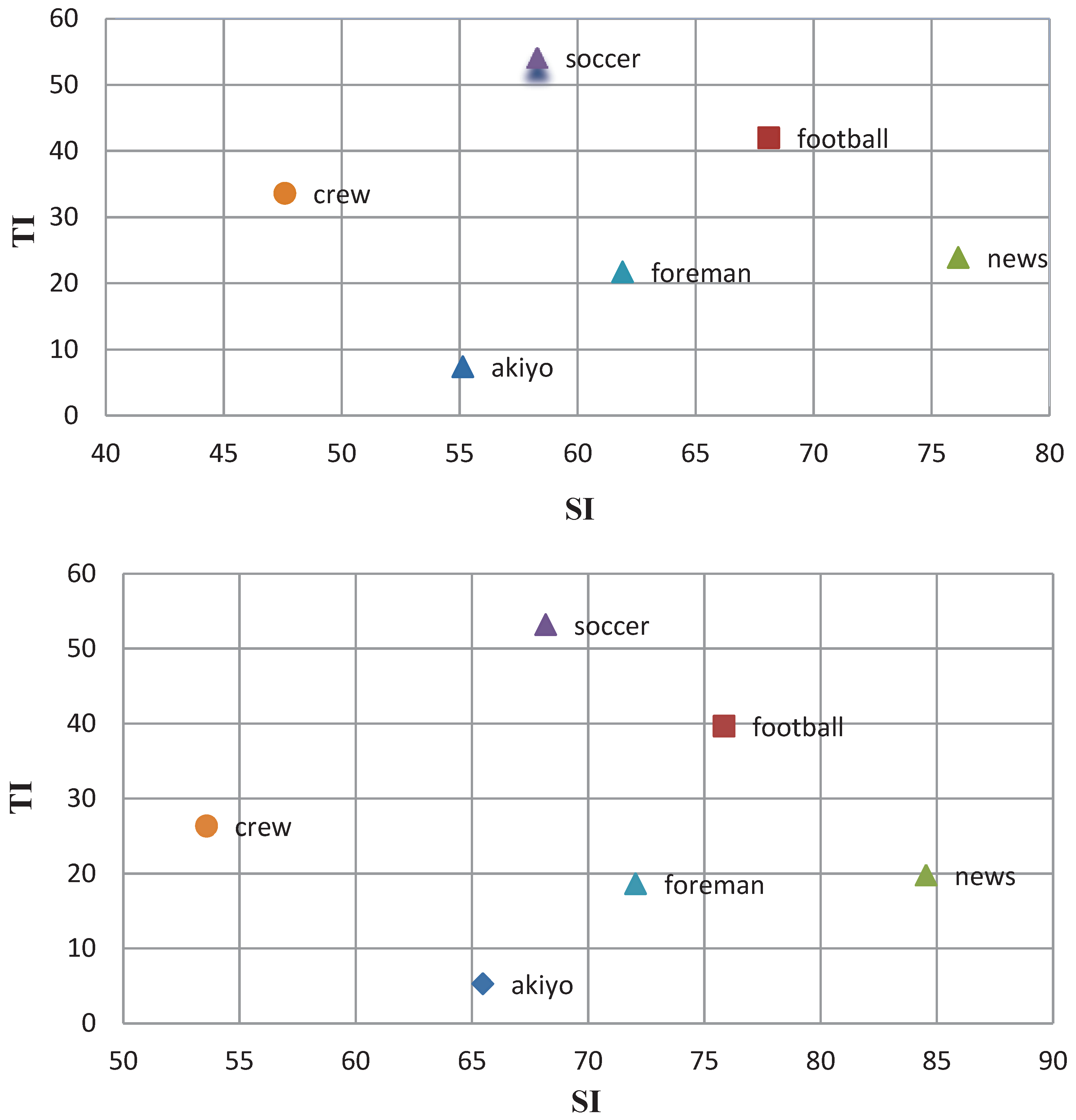

Measuring spatial-temporal information is essential because the quality of a transmitted video sequence depends heavily on it. The spatial and temporal perceptual information of the test sequences is quantified as follows.
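For reference, the standard ITU-T P.910 definitions of these two measures, which we assume are the formulations intended here, are given below for the luminance plane $F_n$ of frame $n$. $\operatorname{Sobel}(F_n)$ is the Sobel-filtered frame, $\operatorname{std}_{\text{space}}$ is the standard deviation over all pixels of a frame, and the maximum is taken over all frames of the sequence.

$$
\mathrm{SI} = \max_{\text{time}}\left\{ \operatorname{std}_{\text{space}}\left[\operatorname{Sobel}(F_n)\right] \right\}
$$

$$
M_n(i,j) = F_n(i,j) - F_{n-1}(i,j), \qquad \mathrm{TI} = \max_{\text{time}}\left\{ \operatorname{std}_{\text{space}}\left[M_n(i,j)\right] \right\}
$$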

a) Spatial Information: SI is calculated for each frame of the video sequence on the luminance plane, which yields spatial information for all n frames.

b) Temporal Information: TI measures the amount of temporal change in a video sequence on the luminance plane and thus quantifies its temporal perceptual information.
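The two measures above can be computed directly from decoded luminance frames. The following is a minimal sketch assuming the standard ITU-T P.910 definitions (Sobel gradient magnitude for SI, pixel-wise frame difference for TI, spatial standard deviation per frame, maximum over time); the function names are hypothetical, and the use of the population standard deviation is an assumption.

```python
import numpy as np


def sobel_magnitude(frame: np.ndarray) -> np.ndarray:
    """Sobel gradient magnitude of a 2-D luminance array (interior pixels only)."""
    f = frame.astype(np.float64)
    # Horizontal kernel [[-1,0,1],[-2,0,2],[-1,0,1]] applied to interior pixels.
    gx = (f[:-2, 2:] + 2 * f[1:-1, 2:] + f[2:, 2:]
          - f[:-2, :-2] - 2 * f[1:-1, :-2] - f[2:, :-2])
    # Vertical kernel [[-1,-2,-1],[0,0,0],[1,2,1]] applied to interior pixels.
    gy = (f[2:, :-2] + 2 * f[2:, 1:-1] + f[2:, 2:]
          - f[:-2, :-2] - 2 * f[:-2, 1:-1] - f[:-2, 2:])
    return np.hypot(gx, gy)


def spatial_information(frames) -> float:
    """SI: maximum over frames of the spatial std-dev of the Sobel-filtered frame."""
    return max(float(np.std(sobel_magnitude(f))) for f in frames)


def temporal_information(frames) -> float:
    """TI: maximum over frames of the spatial std-dev of successive frame differences."""
    diffs = (frames[n].astype(np.float64) - frames[n - 1].astype(np.float64)
             for n in range(1, len(frames)))
    return max(float(np.std(d)) for d in diffs)
```

Frames are cast to floating point before filtering and differencing so that 8-bit luminance samples do not wrap around on subtraction; TI requires at least two frames.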

4.1. Principles based on Translations

Structural Information and Motion Content: Among the features available in the structural information of the bitstream, the motion vector plays an essential role in quantifying dedicated features such as motion intensity; moreover, motion-vector complexity is high at the macroblock layer, as noted in [3].
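Motion-vector features such as motion intensity can be derived from the macroblock-level motion-vector field of each frame. The sketch below assumes a decoded field holding one (dx, dy) vector per 16×16 macroblock; the function name and the definitions of intensity (mean vector magnitude) and complexity (standard deviation of magnitudes) are illustrative assumptions, not the definitions used in [3].

```python
import numpy as np


def motion_vector_features(mv_field) -> tuple:
    """
    mv_field: array-like of shape (mb_rows, mb_cols, 2) with one (dx, dy)
    motion vector per macroblock, in the units reported by the decoder
    (quarter-pel in H.264/AVC).
    Returns (motion_intensity, motion_complexity) under hypothetical definitions.
    """
    mv = np.asarray(mv_field, dtype=np.float64)
    magnitudes = np.hypot(mv[..., 0], mv[..., 1])
    motion_intensity = float(magnitudes.mean())   # average amount of motion
    motion_complexity = float(magnitudes.std())   # spread of motion across macroblocks
    return motion_intensity, motion_complexity
```

For CIF (352×288) frames the field has shape (18, 22, 2), and for QCIF (176×144) frames it has shape (9, 11, 2).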

Coding Distortion: Identifying errors in transmitted data caused by signal interruptions in the channel relies entirely on coding theory; Singam [4] explains rate-distortion control and the related information theory in detail.
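Rate-distortion control in H.264/AVC is commonly expressed as a Lagrangian cost J = D + λR that the encoder minimizes over candidate coding modes. The sketch below illustrates only this general idea and is not the method of [4]. The expression λ = 0.85·2^((QP−12)/3) is the mode-decision multiplier widely used in the JM reference encoder for I and P slices (other slice types and settings may scale it); the function names, candidate modes, and their distortion and rate values are hypothetical.

```python
def jm_lambda_mode(qp: int) -> float:
    """Lagrange multiplier commonly used for H.264 mode decision (I/P slices)."""
    return 0.85 * 2 ** ((qp - 12) / 3)


def choose_mode(candidates: dict, qp: int) -> str:
    """
    candidates: mapping mode_name -> (distortion, rate_bits).
    Returns the mode that minimizes J = D + lambda * R.
    """
    lam = jm_lambda_mode(qp)
    return min(candidates, key=lambda m: candidates[m][0] + lam * candidates[m][1])
```

With `candidates = {"skip": (1000, 1), "intra16x16": (200, 900)}`, `choose_mode(candidates, 12)` returns `"intra16x16"` because λ is small, while at QP 36 the much larger λ makes the low-rate `"skip"` mode cheaper.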

Figure 1. Spatial and temporal information computed for the luminance component of the selected CIF and QCIF videos.


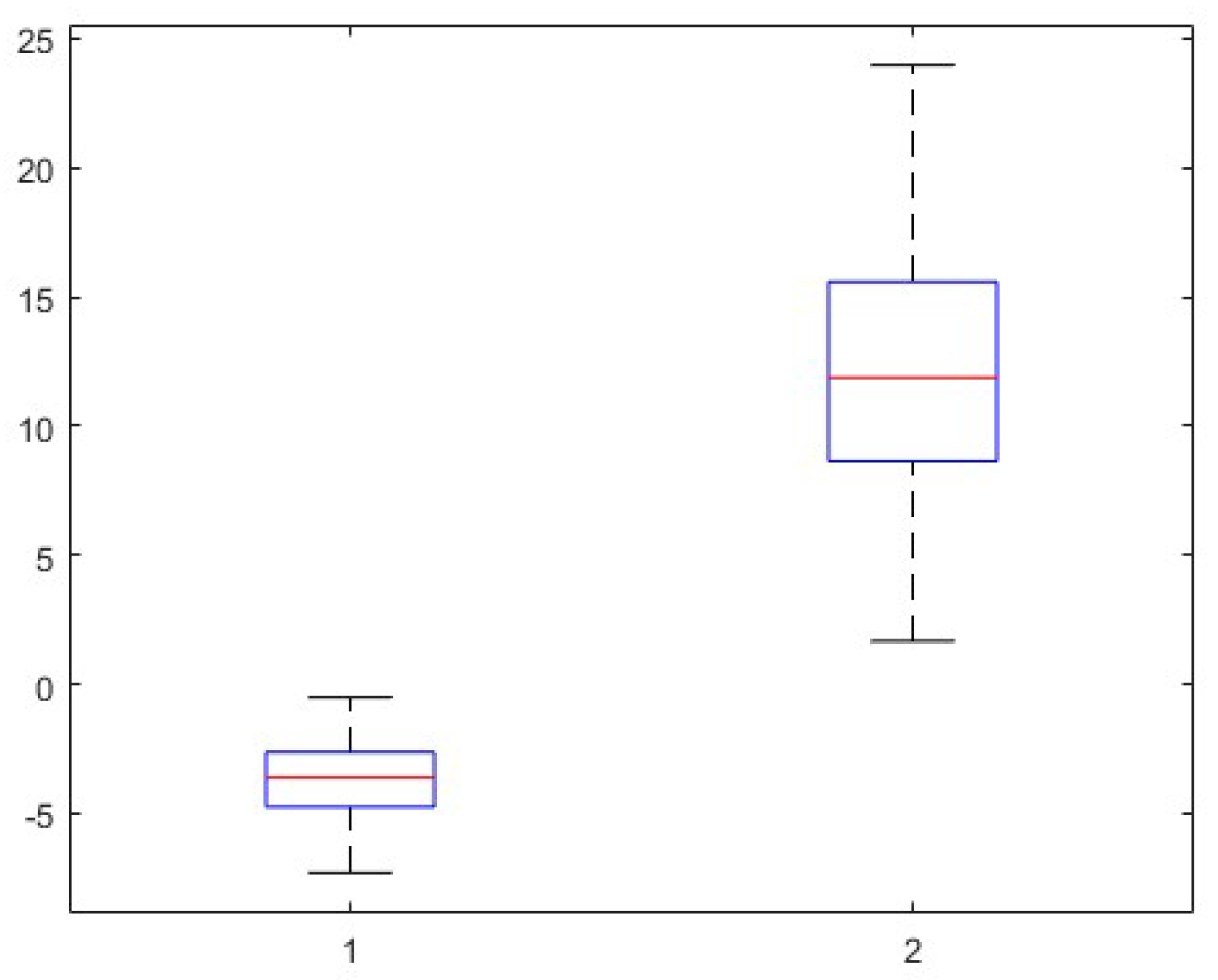

5. Confidence Interval of observations and consistency based on Decision Making Tree

The box plot in Figure 2 illustrates the inconsistency between the outcomes of the two possibilities of the decision-making tree. One of the two decisions captured the 99% confidence interval between observations and consistency, which indicates a large difference in prediction between the two decisions; in addition, the consistency depends on the underlying assumptions.
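A confidence interval of this kind is usually computed from the subjective scores as mean ± δ with δ = z·S/√N, where S is the sample standard deviation and N the number of scores; ITU-R BT.500 uses z = 1.96 for 95%, and z ≈ 2.576 gives 99%. The sketch below assumes this normal approximation; the helper names and the overlap check used to compare the two decisions are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def confidence_interval(scores, level=0.99):
    """Return (mean, half_width) of the CI for a list of scores (normal approximation)."""
    if len(scores) < 2:
        raise ValueError("need at least two scores")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 2.576 for a 99 % interval
    return mean(scores), z * stdev(scores) / sqrt(len(scores))


def intervals_overlap(ci_a, ci_b):
    """True if two (mean, half_width) intervals overlap."""
    (m_a, d_a), (m_b, d_b) = ci_a, ci_b
    return abs(m_a - m_b) <= d_a + d_b
```

For example, the scores `[3, 4, 5, 4, 3, 4, 5, 4]` give a mean of 4 with a 99% half-width of roughly 0.69; non-overlapping intervals for the two decisions would indicate a clear difference between them.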

6. Conclusion

We conclude that our approach is based on the principles of recurrent neural networks (RNNs) and that our RNN-based assumptions come within reach of human perception, because subjective scores are regarded as the true (reference) scores of video quality. All 120 video sequences generated for this work were encoded with the H.264/AVC JM reference software, version 16.1, which uses a rate-distortion optimization algorithm to improve video quality during compression; the default distortion measure in the JM encoder is the mean squared error [5].
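Because the JM encoder measures distortion as mean squared error, the following minimal sketch computes MSE, and the PSNR derived from it, between an original and a reconstructed luminance frame. It assumes 8-bit samples (peak value 255) stored as NumPy arrays; the helpers are hypothetical and are not taken from the JM software.

```python
import numpy as np


def mse(original: np.ndarray, reconstructed: np.ndarray) -> float:
    """Mean squared error between two frames of equal shape."""
    # Cast to float first so that uint8 subtraction cannot wrap around.
    diff = original.astype(np.float64) - reconstructed.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(original: np.ndarray, reconstructed: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical frames."""
    err = mse(original, reconstructed)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))
```

A uniform error of 10 on an 8-bit frame gives an MSE of 100 and a PSNR of about 28.13 dB.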

Figure 2. 99% confidence interval between observations and consistency for the two decisions.


References

- ITU-R (Radiocommunication Sector of ITU). Recommendation ITU-R BT.500-12, 2009. http://www.itu.int/.

- Shahid, M.; Singam, A.K.; Rossholm, A.; Lövström, B. Subjective quality assessment of H.264/AVC encoded low resolution videos. 2012 5th International Congress on Image and Signal Processing, 2012; pp. 63–67.

- Singam, A.K.; Wlodek, K. Revised One, a Full Reference Video Quality Assessment Based on Statistical Based Transform Coefficient. SSRN Electronic Journal 2023.

- Singam, A.K. Coding Estimation based on Rate Distortion Control of H.264 Encoded Videos for Low Latency Applications. arXiv 2023, arXiv:2306.16366 [cs.IT].

- Singam, A.K.; Wlodek, K.; Lövström, B. Classification Review of Raw Subjective Scores towards Statistical Analysis. SSRN 2023.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).