Submitted: 02 July 2023

Posted: 04 July 2023


Abstract

Keywords:

1. Introduction

Main Contribution

1.1. Notation and Background Knowledge

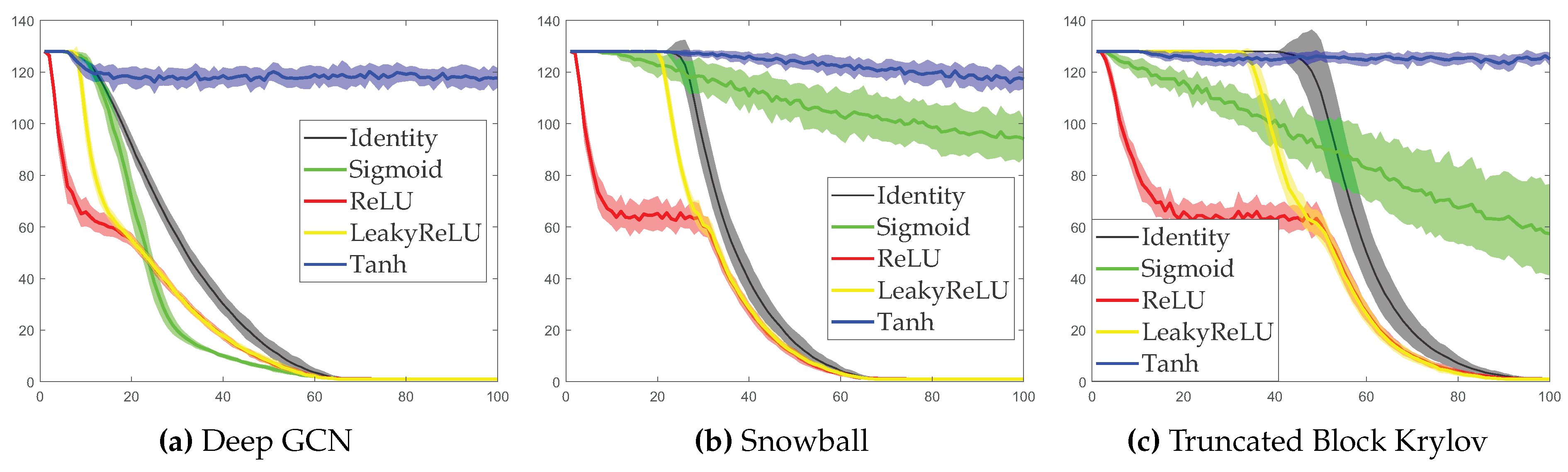

2. Loss of Expressive Power of Deep Graph Neural Networks

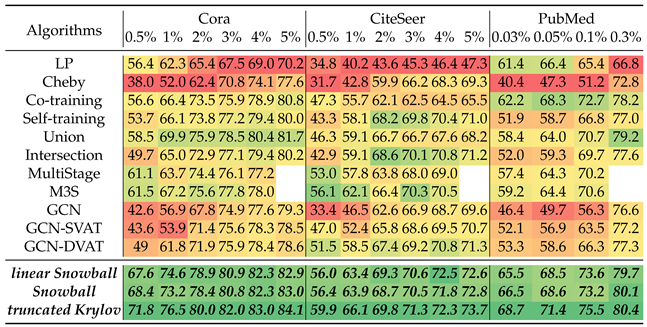

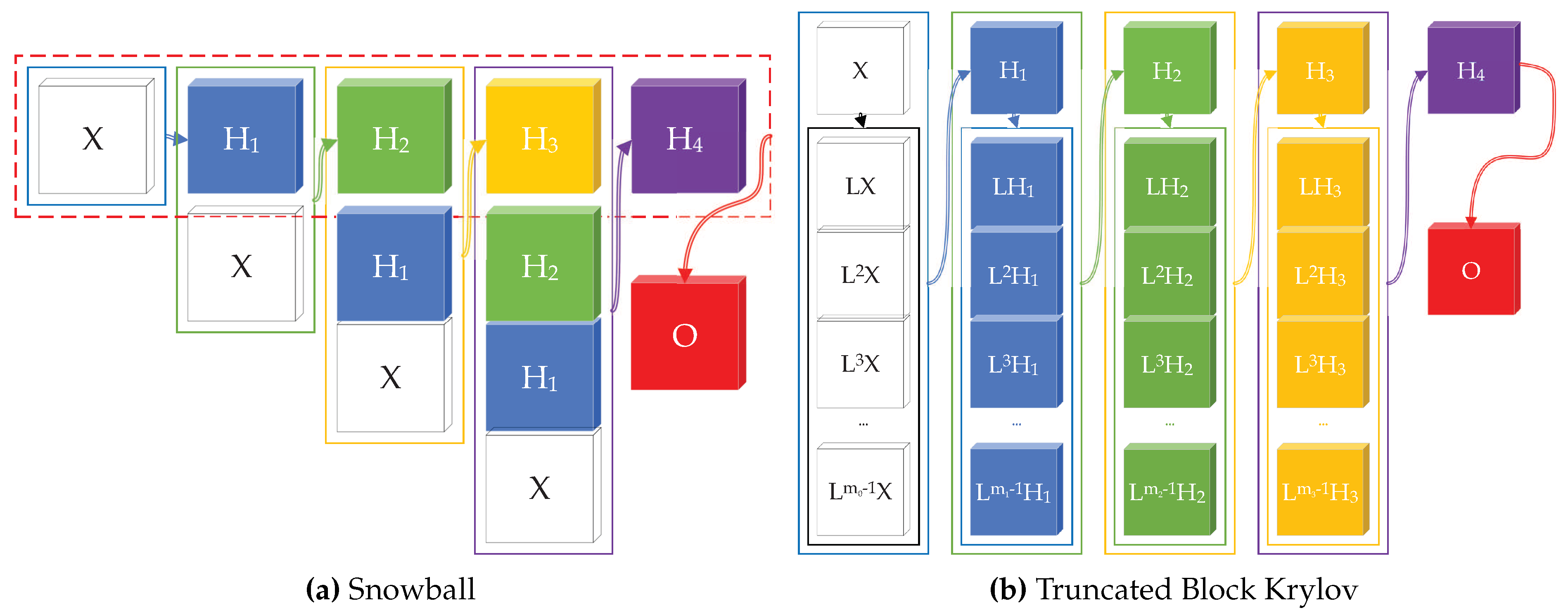

2.1. A Stronger Multi-Scale Deep GNN with Truncated Krylov Architecture


2.2. Future Works on Over-Smoothing

Weight Initialization for GNNs

Variance Analysis: Forward View

Variance Analysis: Backward View

Adaptive ReLU (AdaReLU) Activation Function

3. GNNs on Heterophily Graphs

3.1. Metrics of Homophily

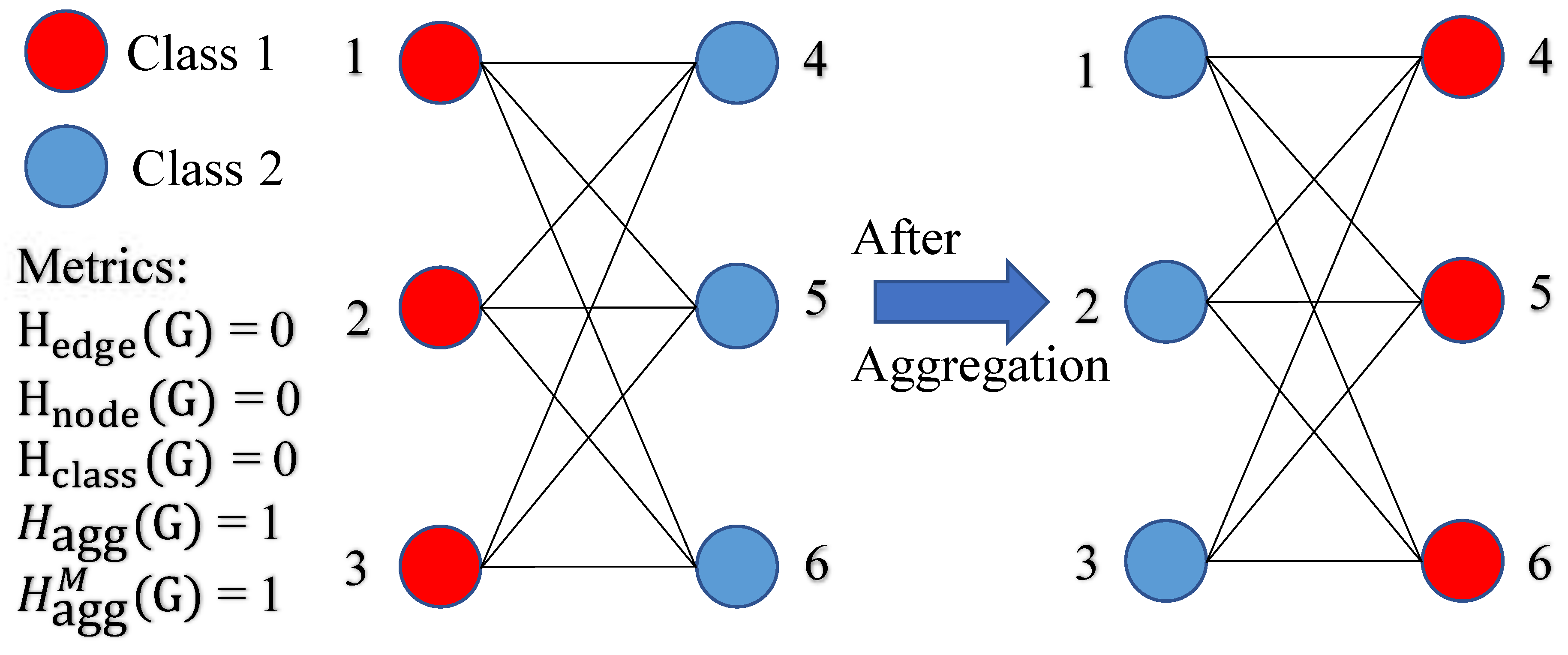

3.2. Analysis of Heterophily and Aggregation Homophily Metric

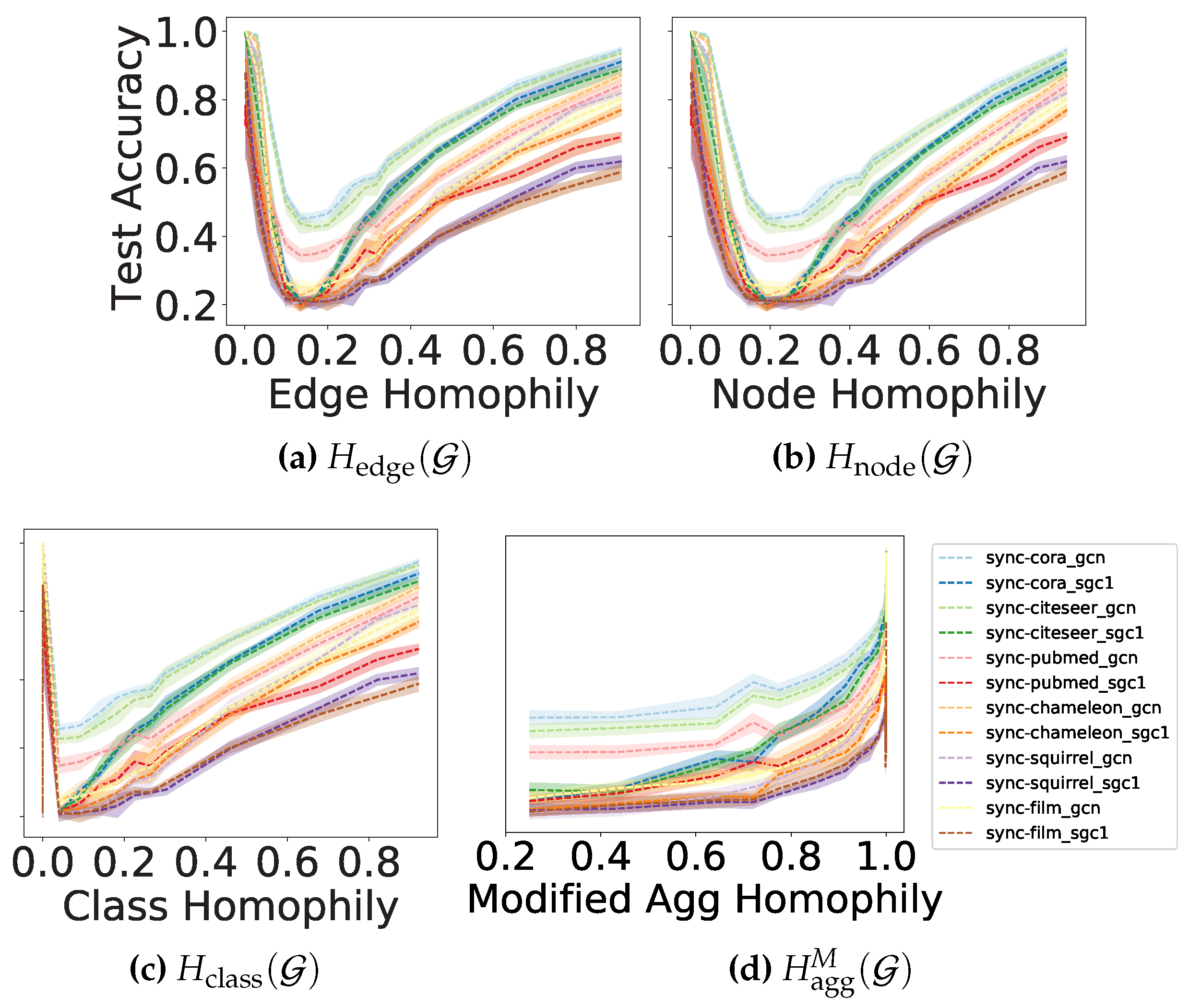

Comparison of Homophily Metrics on Synthetic Graphs

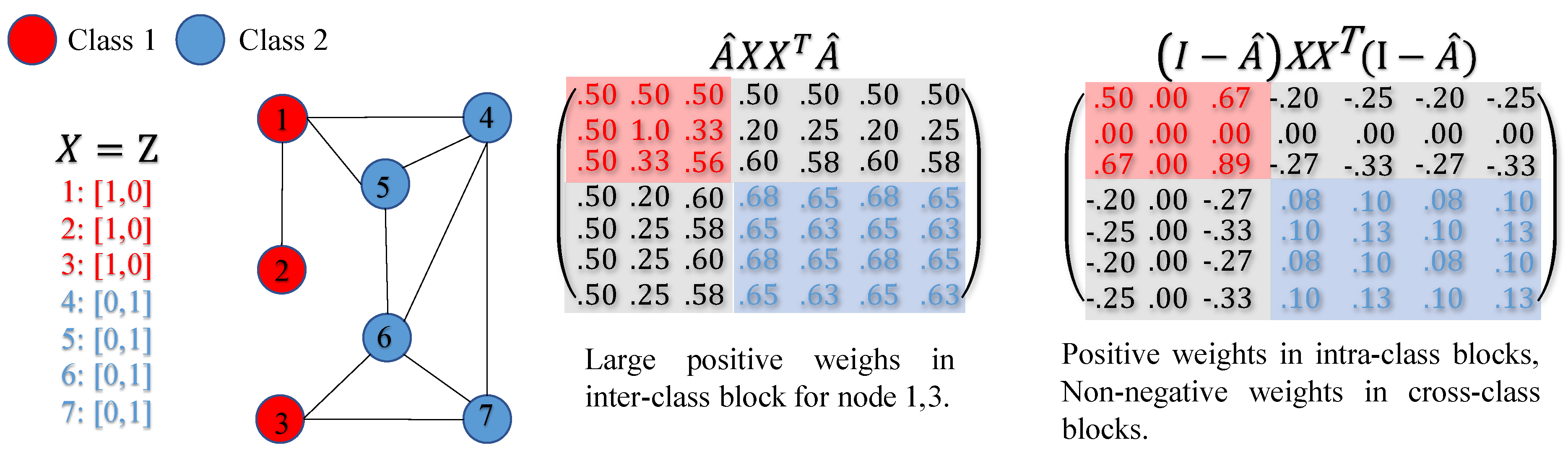

3.3. How Diversification Operation Helps with Harmful Heterophily

3.4. Filterbank and Adaptive Channel Mixing (ACM) GNN Framework

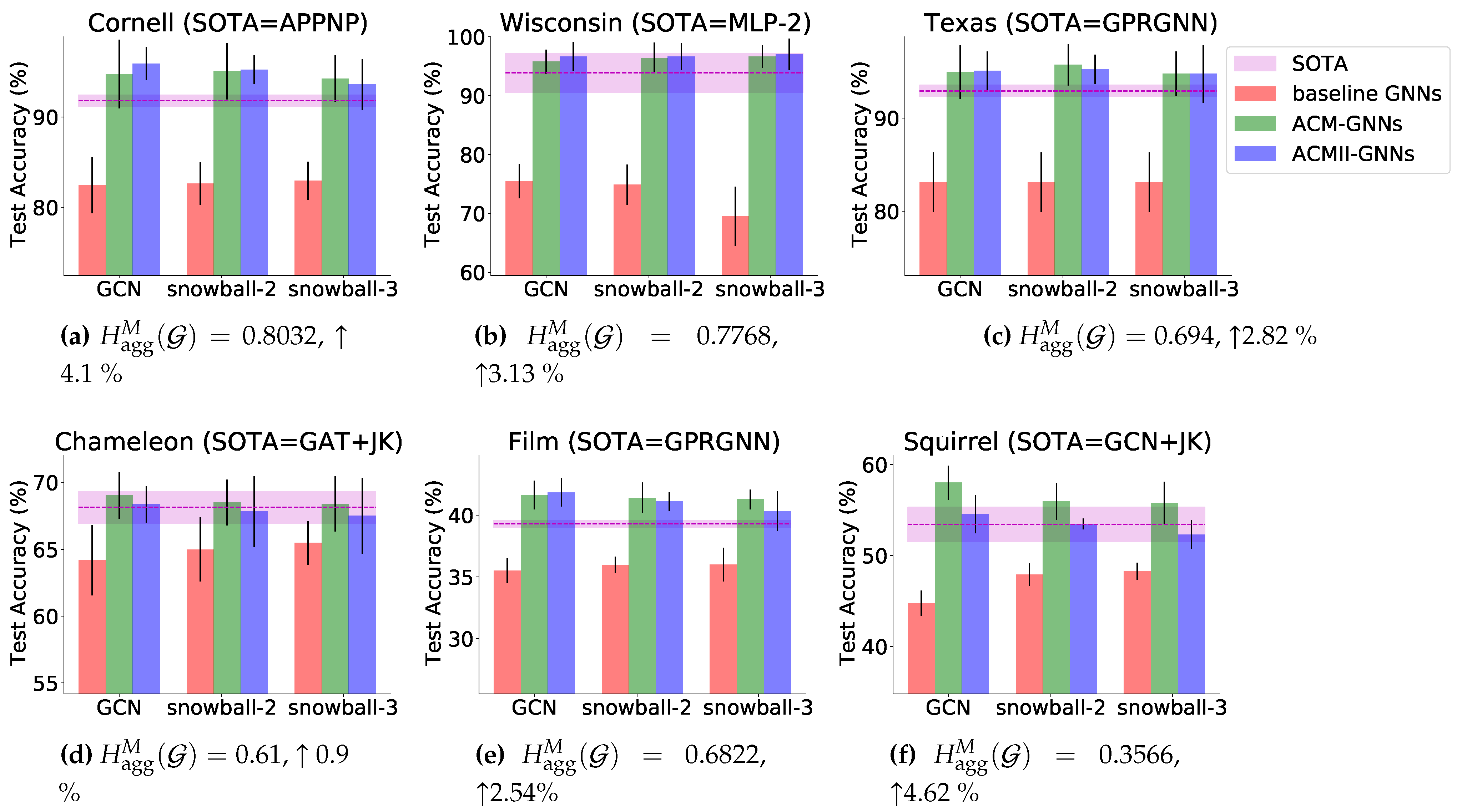

Filterbank

Filterbank in Spatial Form

Complexity

Performance Comparison

3.5. Prior Work

3.6. Future Work

Limitation of Diversification Operation

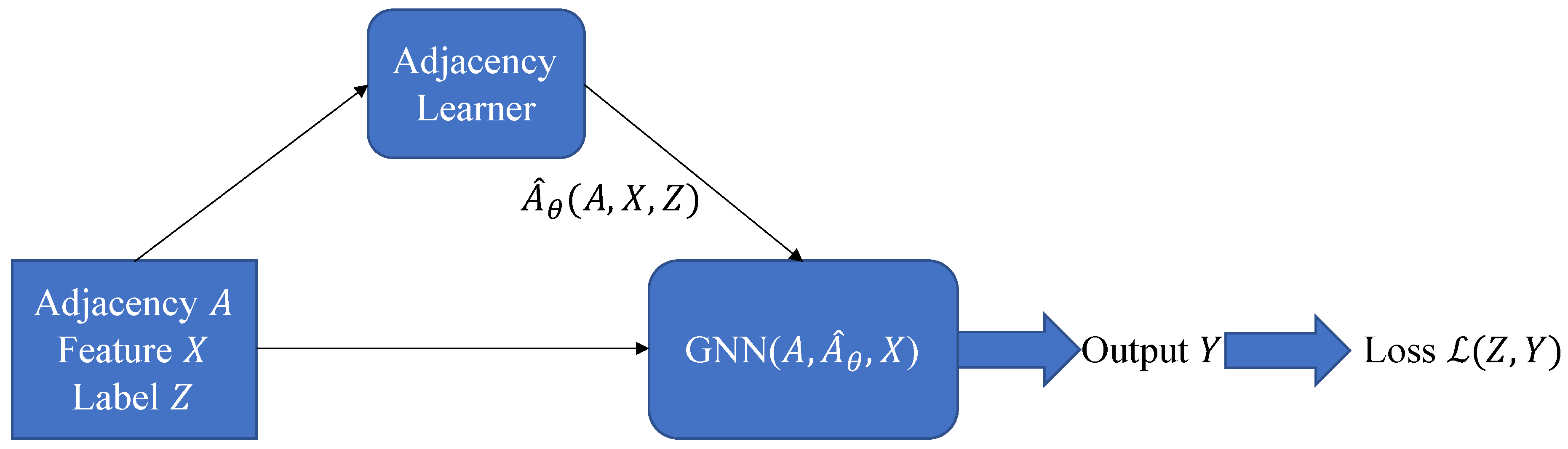

Exploring Different Ways for Adjacency Candidate Selection

- Sample or select (top-$k$) nodes from the complementary graph and combine them with the pre-defined neighborhood set to form an adjacency candidate set; then sample or select (top-$k$) adjacency candidates for training. The whole pipeline could be trained end-to-end.

- Model the candidate selection process as a multi-armed bandit problem, and find an efficient way to learn to select good candidates from the complementary graph. Pseudo-counts can be used to avoid selecting the same nodes repeatedly.
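A minimal sketch of the bandit view of candidate selection described above follows. It rests on assumptions that are not in the text: every node of the complementary graph is treated as an arm, the reward for selecting a node is a hypothetical scalar signal (here a made-up rule), and the pseudo-count enters as a count-based exploration bonus $\beta/\sqrt{1+N(v)}$, in the spirit of UCB. With a finite set of arms the pseudo-count reduces to a plain selection count. The names `complementary_nodes`, `CountBonusSelector`, `candidate_set`, `beta` and `k` are illustrative only.

```python
# Sketch: count-based bandit selection of adjacency candidates.
# The reward signal, beta and k are hypothetical; they are not specified in the text.
import math


def complementary_nodes(node, neighbors, num_nodes):
    """Neighbors of `node` in the complementary graph: all nodes other than
    `node` itself that are not in its pre-defined neighborhood."""
    return [v for v in range(num_nodes) if v != node and v not in neighbors]


class CountBonusSelector:
    """Each complementary node is an arm. Arms are ranked by empirical mean
    reward plus a bonus beta / sqrt(1 + N(v)) that decays with the count N(v),
    so frequently chosen nodes gradually lose their exploration advantage."""

    def __init__(self, arms, beta=1.0):
        self.beta = beta
        self.counts = {v: 0 for v in arms}
        self.means = {v: 0.0 for v in arms}

    def score(self, v):
        return self.means[v] + self.beta / math.sqrt(1 + self.counts[v])

    def select_top_k(self, k):
        # sorted() is stable, so ties keep the original arm order.
        return sorted(self.counts, key=self.score, reverse=True)[:k]

    def update(self, v, reward):
        self.counts[v] += 1
        self.means[v] += (reward - self.means[v]) / self.counts[v]  # running mean


def candidate_set(neighbors, selector, k):
    """Adjacency candidate set: pre-defined neighborhood plus top-k complementary nodes."""
    return set(neighbors) | set(selector.select_top_k(k))


if __name__ == "__main__":
    arms = complementary_nodes(0, {1, 2}, 10)
    selector = CountBonusSelector(arms, beta=0.5)
    for _ in range(100):
        for v in selector.select_top_k(2):
            # Hypothetical reward: pretend even-numbered nodes are useful candidates.
            selector.update(v, 1.0 if v % 2 == 0 else 0.0)
    print(sorted(candidate_set({1, 2}, selector, 2)))
```

In an end-to-end setting the hypothetical reward would have to come from the training signal itself (for example, a change in validation loss after adding a candidate); how to obtain such a reward efficiently is exactly the open question raised in the bullets above.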

4. Graph Representation Learning for Reinforcement Learning

4.1. Markov Decision Process (MDP)

4.2. Graph Representation Learning for MDP

4.3. Reinforcement Learning with Graph Representation Learning

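- Denote the environment transition matrix as $P \in \mathbb{R}^{|\mathcal{S}||\mathcal{A}| \times |\mathcal{S}|}$, where $P_{(s,a),s'} = \Pr(s' \mid s, a)$, $P_{(s,a),s'} \ge 0$ and $\sum_{s'} P_{(s,a),s'} = 1$ for all $(s,a)$. Note that $P$ is not a square matrix.

- We rewrite the policy as an $|\mathcal{S}| \times |\mathcal{S}||\mathcal{A}|$ matrix $\Pi$, where $\Pi_{s,(s,a)} = \pi(a \mid s)$ and all other entries are 0: $\Pi = \mathrm{diag}\big(\pi(\cdot \mid s_1), \ldots, \pi(\cdot \mid s_{|\mathcal{S}|})\big)$, where $\pi(\cdot \mid s)$ is an $|\mathcal{A}|$-dimensional row vector. From this definition, one can quickly verify that the matrix product $\Pi P$ gives the (asymmetric) state-to-state transition matrix induced by policy $\pi$ in environment $P$, and the matrix product $P \Pi$ gives the (asymmetric) state-action-to-state-action transition matrix induced by policy $\pi$ in environment $P$.

- We denote the reward vector as $r \in \mathbb{R}^{|\mathcal{S}||\mathcal{A}|}$, whose $(s,a)$ entry specifies the reward obtained when taking action $a$ in state $s$, i.e., $r_{(s,a)} = \mathbb{E}[R_{t+1} \mid S_t = s, A_t = a]$.

- The state value function $v_\pi \in \mathbb{R}^{|\mathcal{S}|}$ and the state-action value function $q_\pi \in \mathbb{R}^{|\mathcal{S}||\mathcal{A}|}$ can be represented by $v_\pi = \Pi r + \gamma \Pi P v_\pi = (I - \gamma \Pi P)^{-1} \Pi r$ and $q_\pi = r + \gamma P \Pi q_\pi = (I - \gamma P \Pi)^{-1} r$, where $\gamma \in [0, 1)$ is the discount factor.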
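The matrix-form identities above can be checked numerically. The sketch below is an illustration under stated assumptions, not the author's code: it builds a small random MDP (the sizes, the seed and $\gamma = 0.9$ are arbitrary choices), indexes the pair $(s,a)$ as `s * n_actions + a`, and uses only the Python standard library, so a hand-written Gaussian elimination stands in for a matrix inverse. The helper names are hypothetical.

```python
# Sketch: numerical check of the matrix-form MDP identities with plain Python lists.
# Sizes, the random MDP and gamma are illustrative assumptions.
import random


def matmul(A, B):
    """Dense matrix product of A (n x k) and B (k x m), stored as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def matvec(A, x):
    """Matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(M[i][col]))
        M[col], M[piv] = M[piv], M[col]
        for i in range(col + 1, n):
            f = M[i][col] / M[col][col]
            for j in range(col, n + 1):
                M[i][j] -= f * M[col][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def identity_minus(gamma, M):
    """Return I - gamma * M for a square matrix M."""
    return [[(1.0 if i == j else 0.0) - gamma * M[i][j] for j in range(len(M))]
            for i in range(len(M))]


def random_distribution(n, rng):
    w = [rng.random() + 1e-3 for _ in range(n)]
    total = sum(w)
    return [x / total for x in w]


def build_mdp(n_states, n_actions, seed=0):
    """Random P (|S||A| x |S|), Pi (|S| x |S||A|) and r (length |S||A|)."""
    rng = random.Random(seed)
    P = [random_distribution(n_states, rng) for _ in range(n_states * n_actions)]
    Pi = [[0.0] * (n_states * n_actions) for _ in range(n_states)]
    for s in range(n_states):
        for a, p in enumerate(random_distribution(n_actions, rng)):
            Pi[s][s * n_actions + a] = p  # Pi[s, (s, a)] = pi(a | s), zero elsewhere
    r = [rng.uniform(-1.0, 1.0) for _ in range(n_states * n_actions)]
    return P, Pi, r


def value_functions(P, Pi, r, gamma):
    PiP = matmul(Pi, P)  # |S| x |S| state-to-state transitions under pi
    PPi = matmul(P, Pi)  # |S||A| x |S||A| state-action transitions under pi
    v = solve(identity_minus(gamma, PiP), matvec(Pi, r))  # (I - gamma Pi P)^{-1} Pi r
    q = solve(identity_minus(gamma, PPi), r)              # (I - gamma P Pi)^{-1} r
    return PiP, PPi, v, q
```

For example, `P, Pi, r = build_mdp(3, 2)` followed by `value_functions(P, Pi, r, 0.9)` returns a 3×3 matrix $\Pi P$ and a 6×6 matrix $P \Pi$, both row-stochastic, and the computed $v_\pi$ agrees with $\Pi q_\pi$ up to round-off, as the two closed forms imply.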

4.3.1. Learn Reward Propagation as Label Propagation

4.3.2. Graph Embedding as Auxiliary Task for Representation Learning

Appendix A. Calculation of Variances

Appendix A.1. Background

Appendix A.2. Variance Analysis

Appendix A.2.1. Forward View

- The adjacency matrix with self-loop can be considered as a prior covariance matrix and thus a reasonable assumption is .

- Consider the symmetric normalized adjacency matrix as a prior covariance matrix; we then have

Appendix A.2.2. Backward View

Appendix A.3. Energy Analysis

References

- S. Abu-El-Haija, B. Perozzi, A. Kapoor, N. Alipourfard, K. Lerman, H. Harutyunyan, G. Ver Steeg, and A. Galstyan. Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In international conference on machine learning, pages 21–29. PMLR, 2019.

- D. Bo, X. Wang, C. Shi, and H. Shen. Beyond low-frequency information in graph convolutional networks. arXiv preprint, arXiv:2101.00797, 2021.

- A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, and O. Yakhnenko. Translating embeddings for modeling multi-relational data. In Advances in neural information processing systems, pages 2787–2795, 2013.

- M. M. Bronstein, J. Bruna, Y. LeCun, A. Szlam, and P. Vandergheynst. Geometric deep learning: Going beyond euclidean data. arXiv, abs/1611.08097, 2016.

- M. Chen, Z. Wei, Z. Huang, B. Ding, and Y. Li. Simple and deep graph convolutional networks. arXiv preprint, arXiv:2007.02133, 2020.

- E. Chien, J. Peng, P. Li, and O. Milenkovic. Adaptive universal generalized pagerank graph neural network. In International Conference on Learning Representations, https://openreview.net/forum, 2021.

- F. R. Chung. Spectral graph theory. Number 92. American Mathematical Soc., 1997.

- P. Dayan. Improving generalization for temporal difference learning: The successor representation. Neural Computation, 5(4):613–624, 1993.

- M. Defferrard, X. Bresson, and P. Vandergheynst. Convolutional neural networks on graphs with fast localized spectral filtering. arXiv, abs/1606.09375, 2016.

- V. N. Ekambaram. Graph structured data viewed through a fourier lens. University of California, Berkeley, 2014.

- A. Frommer, K. Lund, and D. B. Szyld. Block Krylov subspace methods for functions of matrices. Electronic Transactions on Numerical Analysis, 47:100–126, 2017.

- J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl. Neural message passing for quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 1263–1272. JMLR.org, 2017.

- X. Glorot and Y. Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, pages 249–256, 2010.

- A. Grover and J. Leskovec. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, pages 855–864. ACM, 2016.

- M. H. Gutknecht and T. Schmelzer. The block grade of a block krylov space. Linear Algebra and its Applications, 430(1):174–185, 2009.

- W. L. Hamilton. Graph representation learning. Synthesis Lectures on Artificial Intelligence and Machine Learning, 14(3):1–159, 2020.

- W. L. Hamilton, R. Ying, and J. Leskovec. Inductive representation learning on large graphs. arXiv, abs/1706.02216, 2017.

- K. He, X. Zhang, S. Ren, and J. Sun. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision, pages 1026–1034, 2015.

- G. E. Hinton, S. Osindero, and Y.-W. Teh. A fast learning algorithm for deep belief nets. Neural computation, 18(7):1527–1554, 2006.

- Y. Hou, J. Zhang, J. Cheng, K. Ma, R. T. Ma, H. Chen, and M.-C. Yang. Measuring and improving the use of graph information in graph neural networks. In International Conference on Learning Representations, 2019.

- C. Hua, S. Luan, Q. Zhang, and J. Fu. Graph neural networks intersect probabilistic graphical models: A survey. arXiv preprint, arXiv:2206.06089, 2022.

- M. Jaderberg, V. Mnih, W. M. Czarnecki, T. Schaul, J. Z. Leibo, D. Silver, and K. Kavukcuoglu. Reinforcement learning with unsupervised auxiliary tasks. arXiv preprint, arXiv:1611.05397, 2016.

- D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint, arXiv:1412.6980, 2014.

- T. N. Kipf and M. Welling. Semi-supervised classification with graph convolutional networks. arXiv, abs/1609.02907, 2016.

- J. Klicpera, A. Bojchevski, and S. Günnemann. Predict then propagate: Graph neural networks meet personalized pagerank. arXiv preprint, arXiv:1810.05997, 2018.

- M. Klissarov and D. Precup. Diffusion-based approximate value functions. In the 35th international conference on Machine learning ECA Workshop, 2018.

- M. Klissarov and D. Precup. Graph convolutional networks as reward shaping functions. In ICLR 2019, Representation Learning on Graphs and Manifolds Workshop, 2019.

- M. Klissarov and D. Precup. Reward propagation using graph convolutional networks. arXiv preprint, arXiv:2010.02474, 2020.

- T. D. Kulkarni, A. Saeedi, S. Gautam, and S. J. Gershman. Deep successor reinforcement learning. arXiv preprint, arXiv:1606.02396, 2016.

- Y. LeCun, Y. Bengio, and G. Hinton. Deep learning. Nature, 521(7553):436, 2015.

- Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, et al. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Q. Li, Z. Han, and X. Wu. Deeper insights into graph convolutional networks for semi-supervised learning. arXiv, abs/1801.07606, 2018.

- R. Li, S. Wang, F. Zhu, and J. Huang. Adaptive graph convolutional neural networks. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- R. Liao, Z. Zhao, R. Urtasun, and R. S. Zemel. Lanczosnet: Multi-scale deep graph convolutional networks. arXiv, abs/1901.01484, 2019.

- D. Lim, X. Li, F. Hohne, and S.-N. Lim. New benchmarks for learning on non-homophilous graphs. arXiv preprint, arXiv:2104.01404, 2021.

- M. Liu, Z. Wang, and S. Ji. Non-local graph neural networks. arXiv preprint, arXiv:2005.14612, 2020.

- I. Loshchilov and F. Hutter. Decoupled weight decay regularization. arXiv preprint, arXiv:1711.05101, 2017.

- S. Luan, X.-W. Chang, and D. Precup. Revisit policy optimization in matrix form. arXiv preprint, arXiv:1909.09186, 2019.

- S. Luan, C. Hua, Q. Lu, J. Zhu, X.-W. Chang, and D. Precup. When do we need gnn for node classification? arXiv preprint, arXiv:2210.16979, 2022.

- S. Luan, C. Hua, Q. Lu, J. Zhu, M. Zhao, S. Zhang, X.-W. Chang, and D. Precup. Is heterophily a real nightmare for graph neural networks on performing node classification?

- S. Luan, C. Hua, Q. Lu, J. Zhu, M. Zhao, S. Zhang, X.-W. Chang, and D. Precup. Is heterophily a real nightmare for graph neural networks to do node classification? arXiv preprint, arXiv:2109.05641, 2021.

- S. Luan, C. Hua, Q. Lu, J. Zhu, M. Zhao, S. Zhang, X.-W. Chang, and D. Precup. Revisiting heterophily for graph neural networks. Advances in neural information processing systems, 35:1362–1375, 2022.

- S. Luan, C. Hua, M. Xu, Q. Lu, J. Zhu, X.-W. Chang, J. Fu, J. Leskovec, and D. Precup. When do graph neural networks help with node classification: Investigating the homophily principle on node distinguishability. arXiv preprint, arXiv:2304.14274, 2023.

- S. Luan, M. Zhao, X.-W. Chang, and D. Precup. Break the ceiling: Stronger multi-scale deep graph convolutional networks. Advances in neural information processing systems, 32, 2019.

- S. Luan, M. Zhao, X.-W. Chang, and D. Precup. Training matters: Unlocking potentials of deeper graph convolutional neural networks. arXiv preprint, arXiv:2008.08838, 2020.

- S. Luan, M. Zhao, C. Hua, X.-W. Chang, and D. Precup. Complete the missing half: Augmenting aggregation filtering with diversification for graph convolutional networks. arXiv preprint, arXiv:2008.08844, 2020.

- S. Luan, M. Zhao, C. Hua, X.-W. Chang, and D. Precup. Complete the missing half: Augmenting aggregation filtering with diversification for graph convolutional neural networks. arXiv preprint, arXiv:2212.10822, 2022.

- T. Maehara. Revisiting graph neural networks: All we have is low-pass filters. arXiv preprint, arXiv:1905.09550, 2019.

- S. Mahadevan. Proto-value functions: Developmental reinforcement learning. In Proceedings of the 22nd international conference on Machine learning, pages 553–560. ACM, 2005.

- F. Monti, D. Boscaini, J. Masci, E. Rodola, J. Svoboda, and M. M. Bronstein. Geometric deep learning on graphs and manifolds using mixture model cnns. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5115–5124, 2017.

- H. Pei, B. Wei, K. C.-C. Chang, Y. Lei, and B. Yang. Geom-gcn: Geometric graph convolutional networks. arXiv preprint, arXiv:2002.05287, 2020.

- B. Perozzi, R. Al-Rfou, and S. Skiena. Deepwalk: Online learning of social representations. In Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 701–710. ACM, 2014.

- U. N. Raghavan, R. Albert, and S. Kumara. Near linear time algorithm to detect community structures in large-scale networks. Physical review E, 76(3):036106, 2007.

- L. F. Ribeiro, P. H. Saverese, and D. R. Figueiredo. struc2vec: Learning node representations from structural identity. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining, pages 385–394, 2017.

- Z. Sun, Z.-H. Deng, J.-Y. Nie, and J. Tang. Rotate: Knowledge graph embedding by relational rotation in complex space. arXiv preprint, arXiv:1902.10197, 2019.

- R. S. Sutton and A. G. Barto. Reinforcement learning: An introduction. MIT Press, 2018.

- J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei. Line: Large-scale information network embedding. In Proceedings of the 24th international conference on world wide web, pages 1067–1077. International World Wide Web Conferences Steering Committee, 2015.

- P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Lio, and Y. Bengio. Graph attention networks. arXiv, abs/1710.10903, 2017.

- T. Wang, M. Bowling, and D. Schuurmans. Dual representations for dynamic programming and reinforcement learning. In 2007 IEEE International Symposium on Approximate Dynamic Programming and Reinforcement Learning, pages 44–51. IEEE, 2007.

- F. Wu, T. Zhang, A. H. d. Souza Jr, C. Fifty, T. Yu, and K. Q. Weinberger. Simplifying graph convolutional networks. arXiv preprint, arXiv:1902.07153, 2019.

- Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and P. S. Yu. A comprehensive survey on graph neural networks. arXiv, abs/1901.00596, 2019.

- C. Xu and R. Li. Relation embedding with dihedral group in knowledge graph. arXiv preprint, arXiv:1906.00687, 2019.

- K. Xu, C. Li, Y. Tian, T. Sonobe, K.-i. Kawarabayashi, and S. Jegelka. Representation learning on graphs with jumping knowledge networks. In J. Dy and A. Krause, editors, Proceedings of the 35th International Conference on Machine Learning, volume 80 of Proceedings of Machine Learning Research, pages 5453–5462. PMLR, 10–15 Jul 2018.

- Y. Yan, M. Hashemi, K. Swersky, Y. Yang, and D. Koutra. Two sides of the same coin: Heterophily and oversmoothing in graph convolutional neural networks. arXiv preprint, arXiv:2102.06462, 2021.

- S. Zhang, Y. Tay, L. Yao, and Q. Liu. Quaternion knowledge graph embedding. arXiv preprint, arXiv:1904.10281, 2019.

- S. Zhang, H. Tong, J. Xu, and R. Maciejewski. Graph convolutional networks: Algorithms, applications and open challenges. In International Conference on Computational Social Networks, pages 79–91. Springer, 2018.

- M. Zhao, Z. Liu, S. Luan, S. Zhang, D. Precup, and Y. Bengio. A consciousness-inspired planning agent for model-based reinforcement learning. Advances in neural information processing systems, 34:1569–1581, 2021.

- M. Zhao, S. Luan, I. Porada, X.-W. Chang, and D. Precup. Meta-learning state-based eligibility traces for more sample-efficient policy evaluation. arXiv preprint, arXiv:1904.11439, 2019.

- J. Zhu, R. A. Rossi, A. Rao, T. Mai, N. Lipka, N. K. Ahmed, and D. Koutra. Graph neural networks with heterophily. arXiv preprint, arXiv:2009.13566, 2020.

- J. Zhu, Y. Yan, L. Zhao, M. Heimann, L. Akoglu, and D. Koutra. Beyond homophily in graph neural networks: Current limitations and effective designs. Advances in Neural Information Processing Systems, 33, 2020.

- J. Zhu, Y. Yan, L. Zhao, M. Heimann, L. Akoglu, and D. Koutra. Generalizing graph neural networks beyond homophily. arXiv preprint, arXiv:2006.11468, 2020.

| 1 | |

| 2 | For simplicity, the independence assumption is directly borrowed from [13], but theoretically it is too strong for GNNs. We will try to relax this assumption in the future. |

| 3 | The terms "channel-shared" and "channel-wise" are borrowed from [18]; they indicate whether or not the same learnable parameter is shared across all feature dimensions. |

| 4 | The authors in [35] did not name this homophily metric. We name it class homophily based on its definition. |

| 5 | A similar J-shaped curve is reported in [70], although with a different data generation process; that work does not discuss the insufficiency of edge homophily. |

| 6 | In graph signal processing, an additional synthesis filter [10] is required to form the 2-channel filterbank. However, a synthesis filter is not needed in our framework, so we do not introduce it in this paper. |

| 7 | From this perspective, we should not treat a trajectory as sequential data, because states on a graph do not necessarily have an ordering, even for a directed graph. Although the observations appear to be ordered, what we actually have is only a transition relation. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).