1. Introduction

In clinical practice,

Radiographs are a common diagnostic tool and a standard imaging system

frequently employed in treating tooth loss due to their cost-effectiveness. Another reason to use panoramic X-rays is that they capture a wide range of the maxillomandibular region, resulting in richer contextual information. In addition, it has a lower radiation rate than other methods [

2]. With the help of panoramic radiographs, dentists can discover various dental problems like bone abnormalities, cavities, hidden dental structures, and post-accidental fractures that are hard or nearly impossible to detect with visual scrutiny [

3]. Thus, dentists can leverage such tools to establish an appropriate treatment plan for each patient. The

analysis of radiographs may be contracted to dentists in certain circumstances due to the nature of the analysis done on the panoramic X-rays being done manually. Such practice is time-consuming and requires a certain level of expertise to segregate relevant dental features from irrelevant ones like jaw bones, nasal bones, and spine bones [

4]. Contradictions may occur due to the variation present in the level of expertise from one dentist to another. The

agreement rate (an alternative for dentists'

diagnostic performance) of professionals' analysis of radiographs

seems to vary in part due to personal knowledge, biases, and skills [

5,

6]. The irregularity of professional dentists' abilities to interpret radiographs could cause misdiagnosis and mistreatment in some cases [

7,

8].

Many automated systems have been developed to overcome such complications; some systems utilized classical machine learning techniques such as active contour [

9], Bayesian methods [

10], and support vector machines [

11] with hand-crafted features. The design of hand-crafted features has a huge negative impact on the performance of the abovementioned algorithms. On the other hand, deep learning approaches made a breakthrough in creating automated systems and, as a result, had a superior performance compared to the classical machine learning algorithms. Convolutional Neural Networks are the mainly used network in interpreting imagery data because it possesses many advantages like connectivity, weight sharing, and down-sampling, effectively reducing the number of parameters and speeding up convergence [

12].

Architectures like U-Net [

13] and DeepMedic [

14], which are categorized as Fully Convolutional Networks [

1], are specially built to handle medical images of various medical domains. One popular task in computer-aided diagnosis is medical image segmentation, which involves making anatomical or pathological structure changes more protruding in embodiments [

15]. Popular medical image segmentation tasks include liver-tumour segmentation [

16], brain segmentation [

17], and cardiac image segmentation [

18]. Many of these tasks utilize the U-Net architecture, which has shown an ideal performance in the segmentation domain. Other improvements were made to this architecture, like redesigning it to be a nested-like architecture [

19] or to be self-adaptable [

20] U-Net is a framework rather than an architecture due to its ample room for customization, which leaves it a good option to combine it with newly arising mechanisms [

14].

The main purpose of this study is to utilize the attention module in U-Net architecture to help optimize the teeth segmentation quality on the TUFTS benchmark dataset and later be assigned as an axillary tool to aid experienced general dentists as well as novice practitioners in learning to analyze panoramic x-rays without the problem of bias or misdiagnosis that is resulted from the diversity of practitioners' domain knowledge. The rest of the paper goes as follows: 2) discusses related work of previous methods proposed in teeth segmentation, 3) material and methods, 4) experiment results, 5) discussion of the conducted study and further improvements.

2. Related Work

2.1. U-Net Approaches

Since the creation of U-Net [

13] in 2015. Various studies have been conducted on it, proving its effectiveness in detecting and segmenting visual medical data. U-Net has had a lot of variations until now, with some authors using a batch normalization layer in its encoder part to get better stability or other ingenuine strategies that helped to enhance U-Net's performance in the task in question.

L. F. Machado et al. [

21] solved the problem of mandible bone segmentation on panoramic X-ray images. Where in their study, they considered two datasets. The first dataset contained 393 images of radiographs and their respective segmentation mask; the second one was a publicly available third-party image dataset comprising 116 images and segmentation mask pairs. They trained four networks using U-Net and HRNet architectures without data augmentation (DA) and then used morphology refinement (MR) to enhance the model's prediction. The ensemble model comprises U-Net + MR, U-Net+DA+MR, HRNet+MR, and HRNet+DA+MR. In their individual deep learning models section, they mentioned that U-Net and U-Net+DA models achieved the highest performances after morphological refinement on both training and validation sets. The effectiveness of the morphological refinement strategy has been demonstrated in their study, and it shows that it is a good strategy to consider when training.

C. Rohrer et al. [

22] also used U-Net with a pre-trained encoder to investigate the problem of dental restoration segmentation. The authors' study demonstrated how models trained on small, equally cropped rectangular images (tiles) of panoramic radiographs would outperform models trained on the full image. Their dataset had a total of 1781 panoramic radiographs. They used different numbers of tiles for their experiments, concluding that the model's performance improved with a higher number of tiles. The study's proposed approach is that the tiling strategy effectively enhances the detection performance when tailored to other applications, as it can detect less frequent features that might go missing from the bigger image.

I.-S. Song et al. [

23] aimed to evaluate the performance of deep learning models based on CNNs (Convolutional Neural Networks) for apical lesion segmentation from panoramic radiographs. They trained a U-Net on a dataset consisting of 1000 radiographs in total. Their study showed the potential of utilizing deep learning models to segment apical lesions and how U-Net's performance demonstrated such an incredible performance in this specific task. Rini

Widyaningrum et al. [

24] Used two approaches for segmenting periodontitis staging by using Multi-Label Unet and Mask R-CNN, which were previously trained to perform other tasks (I.e., transfer learning). The authors' dataset contained 100 panoramic radiographs. The Multi-Label Unet performed better than the Mask R-CNN. Later, the authors concluded that Multi-Label U-Net gave off superior segmentation performance to that of Mask R-CNN, and they further recommended using Multi-Label Unet with other techniques to develop more powerful hybrid models for automatic periodontitis detection.

2.2. Attention-Based and Transformer Based

Attention is commonly used in machine translation [

25], learning on graphs [

26], and visual question answering [

27]. Numerous types of attention mechanisms .for example, hard attention which determines a part of the input should be considered by assigning binary weights of either 0 or 1. A weight of 1 indicates that the input element is deemed important, while a weight of 0 means it is considered unimportant. By assigning binary weights, the parameter update process becomes non-differentiable as slight weight changes do not result in smooth transitions or gradients that can be used for gradient-based optimization (e.g., Stochastic gradient descent or adaptive moment estimation). Hard attention was incorporated to focus on meaningful areas in forensic dental identification [

28]. On the other hand, soft attention follows the same process with the difference of using SoftMax functions to calculate weights resulting in a differentiable and deterministic attention process.

Another type of attention, called "self-attention", allows the inputs to interact with each other "self" and determine what they should pay attention to more. Compared to other attention mechanisms, the main advantage of self-attention is the parallel computing ability for a long input, which results in much more expensive computations and more training data. Self-attention was one of the main reasons for the emergence of transformers in the Natural Language (NLP) domain which had a huge impact on benchmarking language model due to it being a cutting-edge technology that is now state-of-the-art in the NLP domain. The transformer model was primarily developed for neural machine translation by

A. Vaswani et al. [

25] and then later modified by

A. Dosovitskiy et al. [

29] to work on visual data making the idea of multi-headed self-attention possible for images without the use of convolutions. Since then, there have been numerous variations of transformer model used for

computer vision applications like

image classification, object detection, and image segmentation.

A. Almalki and L. J. Latecki [

30] utilized newly emerging self-supervised deep learning algorithms like Sim-MIM and UM-MAE to increase their model's efficiency and understating of a limited number of dental radiographs. The model they used in their study was the Swin Transformer, one of the most impactful variations of the transformer model.

Moreover, they used data augmentation and corrected the existing panoramic radiograph dataset, which contains 543 annotated examples they are using for their study. They further discuss the problems with their dataset and how they will try to overcome them by modifying and correcting the teeth instance segmentation and overlapping in all images. They also contributed to further expanding the dataset under the supervision of a dentist by developing ground truth segmentation for dental restorations, including direct and indirect restorations and root canal therapy. Their results showed that parameter tuning, including mask ratio and pre-training epochs, is useful when applying SimMIM pre-training to the dental domain. Furthermore, their correction of the dataset's annotation significantly improved their results.

Having explored the principles and some applications of self-attention and hard attention, we now turn our attention back to the concept of soft attention, as it forms the primary focus of our study.

W. Li et al. [

31] aimed to combine U-Net architecture's convolutional block with a channel-based attention module that uses soft attention for weight calculating. In addition, the authors designed an attention U-Net model for segmenting caries and teeth in a bite-wing radiograph. The Attention U-Net model was trained on a total of 652 images and then compared with several architectures (e.g., PSPNet, DeepLabv3+, and U-NET models), and the experimental results showed that the proposed model outperformed them all. Later they discussed the feasibility of deep learning models as an axillary tool for dentists to aid them in detecting caries.

P. Harsh et al. [

32] also used channel-based Attention U-Net that uses an attention block called Squeeze and excitation (SE) which is known to enhance a fully convolutional network's performance by filtering the relevant and impactful channels only, which is a similar mechanism to the one used in [

31]. The proposed model was trained and tested on the UFBA-UESC dental image dataset containing 1500 dental X-ray images. The performance of the model was superior when compared to other methods.

Channel-wise attention in U-Net is extendable to other applications and is sometimes mixed with spatial attention [

33]. However, it collects features on a global scale for the input. Different types of attention mechanisms adapted for the U-Net architecture, such as grid-based attention gate [

1], which calculates attention coefficients on a local scale allowing more fine-grained output, and it has shown great performance in tasks like pancreas segmentation [

1], deforestation detection[

34], and ischemic lesion segmentation in the brain [

35] but has not yet been applied to any dental segmentation task as to our knowledge.

2.3. Other Approaches

In some cases, authors refer to other types of networks to achieve better segmentation results; some authors may create a novel network that best suits the discussed task. Keep in mind that the choice has a lot of key factors, ranging from the chosen framework used to develop the network to the data quality and computational resources. Some segmentation tasks in the dental domain require more complex networks with additional layers or a different training setup to achieve promising results.

Dayi et al. [

36] evaluated the diagnostic performance of deep learning models for the segmentation of occlusal, proximal, and cervical caries lesions on panoramic radiographs. Their data consisted of 504 anonymous panoramic radiographs. The authors proposed a custom network for dental caries segmentation called Dental Caries Detection Network (DCDNet). As mentioned in the study, the architecture is an encoder-decoder that uses pre-trained backbone networks. The main difference between their proposed network and other segmentation networks is that the last part of the network contains a Multi-Predicted Output (MPO) structure which splits the final feature map into three different paths for detecting occlusal, proximal, and cervical caries.

On the other hand,

Nafi'iyah et al. [

37]. Alleviated the problem of mandibular segmentation using an ensemble of three MobileNetV2 networks trained on 106 panoramic radiographs while addressing the main drawbacks of previously existing mandibular segmentation methods (e.g., they cannot completely represent the mandible.). The authors conducted several experiments with different networks, including U-Net, MobileNetV2, ResNet18, ResNet50, Xception, InceptionResNet V2, and MobileNetV2 turned out to be better than all networks. However, its results still needed to be clarified on the coronoid and mandibular condyles, hence the use of the ensemble, which achieved an excellent result in their research. Similarly,

Arora et al. [

38] used a model based on an encoder-decoder architecture. Its encoder part contained several types of CNN-based models to exploit each network and combine their output to generate a fine-grained contextual feature for teeth segmentation. The authors trained and tested the model on a dataset of 1500 radiographs. Their proposed method uses fewer parameters when compared to state-of-the-art models mentioned in their study while also outperforming them.

2.3. Comparison of Different Attention Modules

There exists numerous attention modules used in fully convolutional networks and have been incorporated by previously mentioned literature [

31,

32,

33] . one of which is "Squeeze-and-Excitation" [

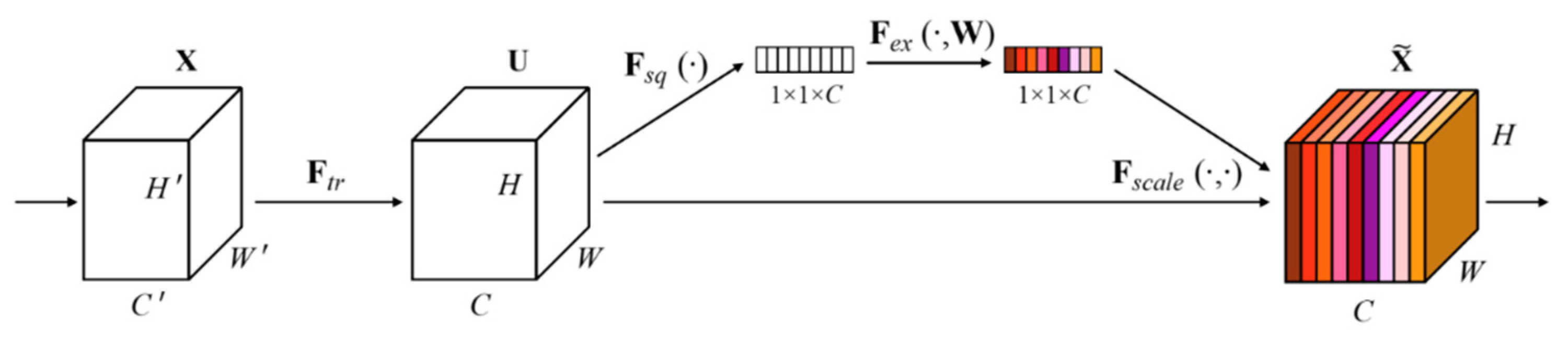

39], which is a Channel-based attention module that involves squeezing the spatial dimension of the input feature map as it produces an attention map by exploiting the inter-relationship of features.

As shown in

Figure 1, the Average-pooling operation is first used to aggregate spatial information of a feature map resulting in a spatial context descriptor for average-pooled features denoted by

.The descriptor is passed through a dense layer, then through a non-linearity activation function (ReLU) and then through another dense layer, followed by a sigmoid activation function that gives each channel a smoothing gating function. Finally, each feature map is weighted based on the side network. The name "Squeeze-and-Excitation" comes from the squeezing that happens by the max-pooling operation and the excitation that happens by weighting the original feature maps with the side network.

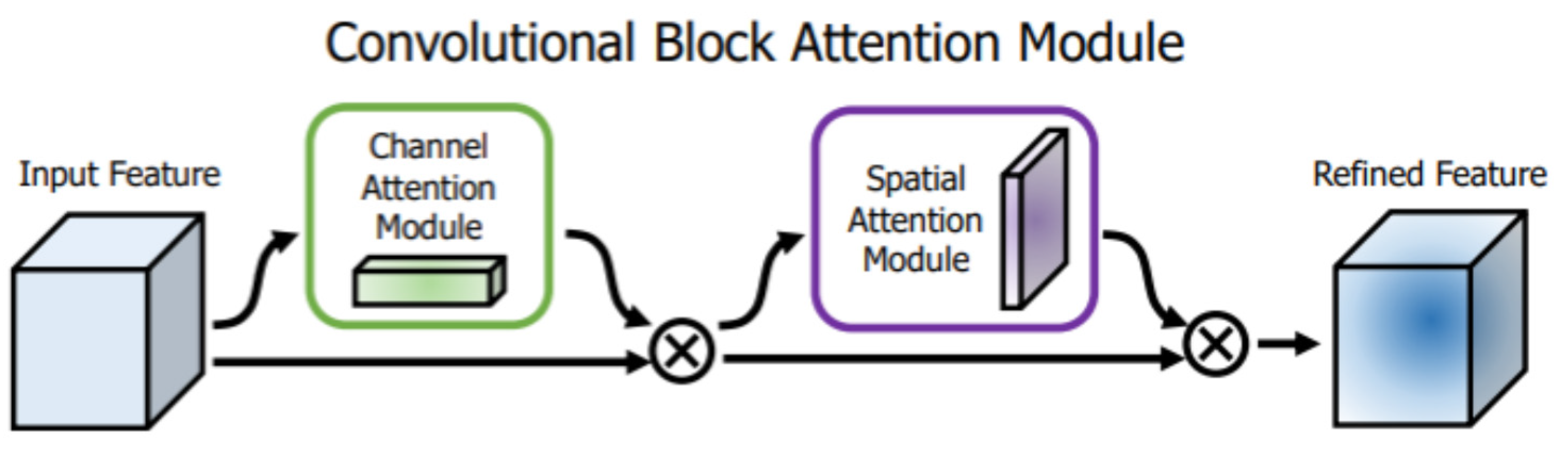

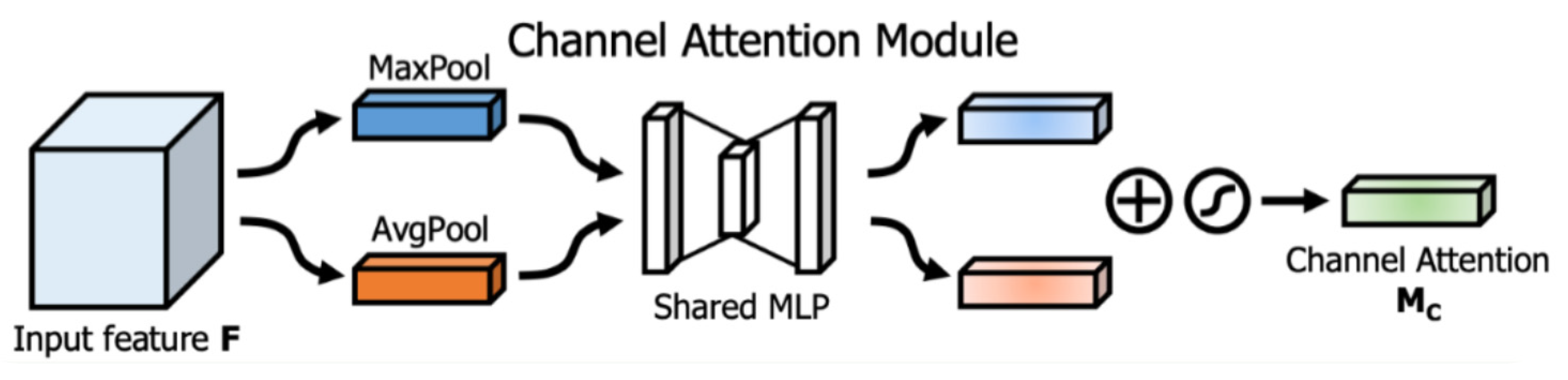

Another type of attention mechanism used in fully convolutional networks is the CBAM attention module [

40] which consists of two submodules. The first submodule is a channel attention module shown in

Figure 2 (the green box), which has the same purpose as the Squeeze-and-Excitation attention module and somehow follows the same structure. It consists of Average and Max-pooling operations which are first used to aggregate spatial information of a feature map resulting in two different spatial context descriptors, one for average-pooled features denoted by

and one for max-pooled features denoted by

.

Both descriptors are passed through a shared multi-layer perceptron (MLP) network comprised of one hidden layer to produce the attention map

, where

is the number of channels. the hidden layer's activation is set to

, where

is the reduction ratio. After passing both descriptors through the MLP network, the intermediate output of the network for both descriptors is passed through a non-linearity activation function (ReLU). Then the final output is merged using element-wise summation and scaled with a sigmoid activation function. The whole process is shown in

Figure 3.

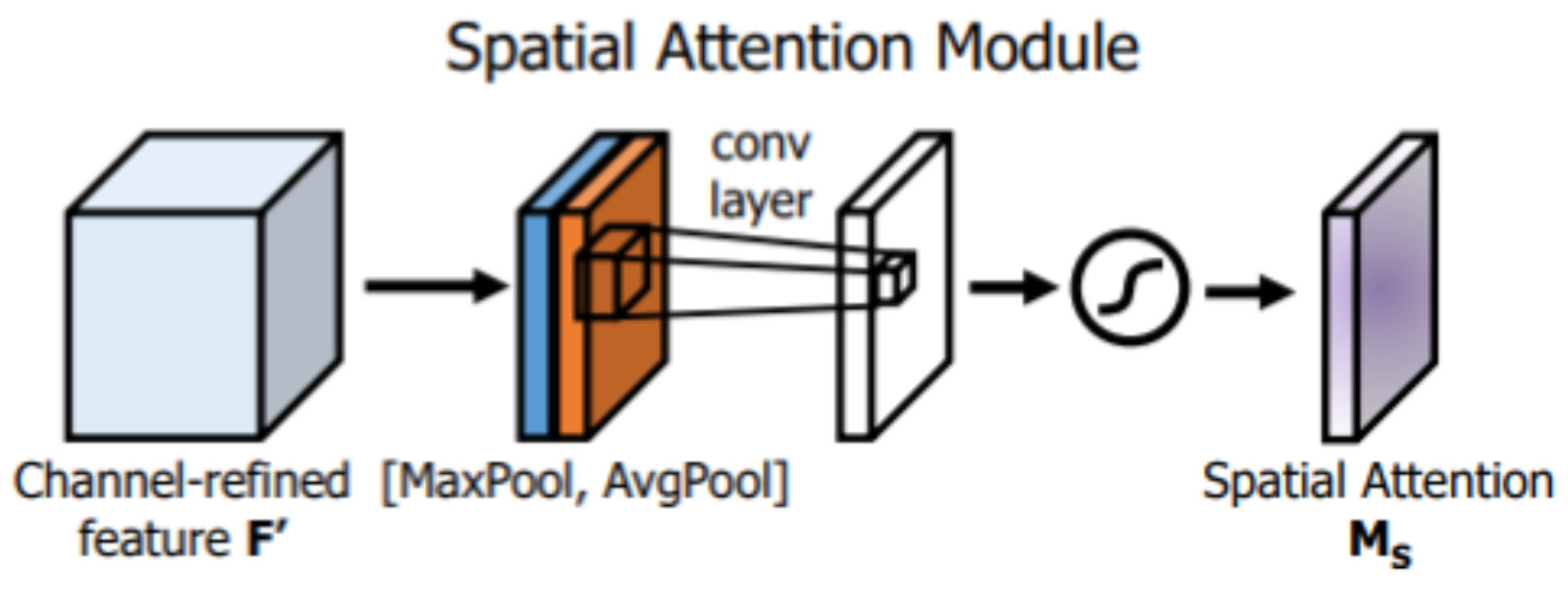

The second attention block is the spatial attention module shown in

Figure 2 (the blue box), which also utilizes max and average-pooling operations to extract refined features denoted by

They are scaled with a sigmoid activation function, as shown in

Figure 4.

Both attention channels

and

Are utilized to refine the input convolutional channels successively. The input is first multiplied element-wise with

Then the output tensor of that operation is then multiplied element-wise with

Last but not least, the output of that sequential process is multiplied element-wise with the original input channel, resulting in fine-grained feature maps. The whole process of the CBAM is shown in

Figure 2.

Both Attention modules enhance the quality of the feature refinement, but both modules suffer from limited modelling of long-rage dependencies that span across the entire input.

2.4. Motivation

Much work has adopted the U-Net architecture for dental segmentation tasks due to its superiority in the segmentation task. However, teeth segmentation can be difficult due to the spatial features of the panoramic radiographs. Some works have used morphological refinement and other attention-based techniques to address this problem. While previous works in the literature of attention-based methods have only employed channel-based attention, no previous work has explored using a grid-based attention mechanism [

1]. Furthermore, the variation in datasets used in previous works is due to the lack of a publicly available benchmark dataset collected with proper data collection protocols. This paper tests the attention U-Net architecture based on [

1] and shows its superiority in teeth segmentation on a publicly available benchmark dataset from the TUFTS School of Dentistry. The contributions of this work can be summarized as follows:

Improving over the baselines trained and tested on the dataset [

41].

Demonstrating the superiority of attention U-Net [

1].

Exploring the application of attention U-Net [

1] in teeth segmentation using a publicly available benchmark dataset.

3. Material and Methods

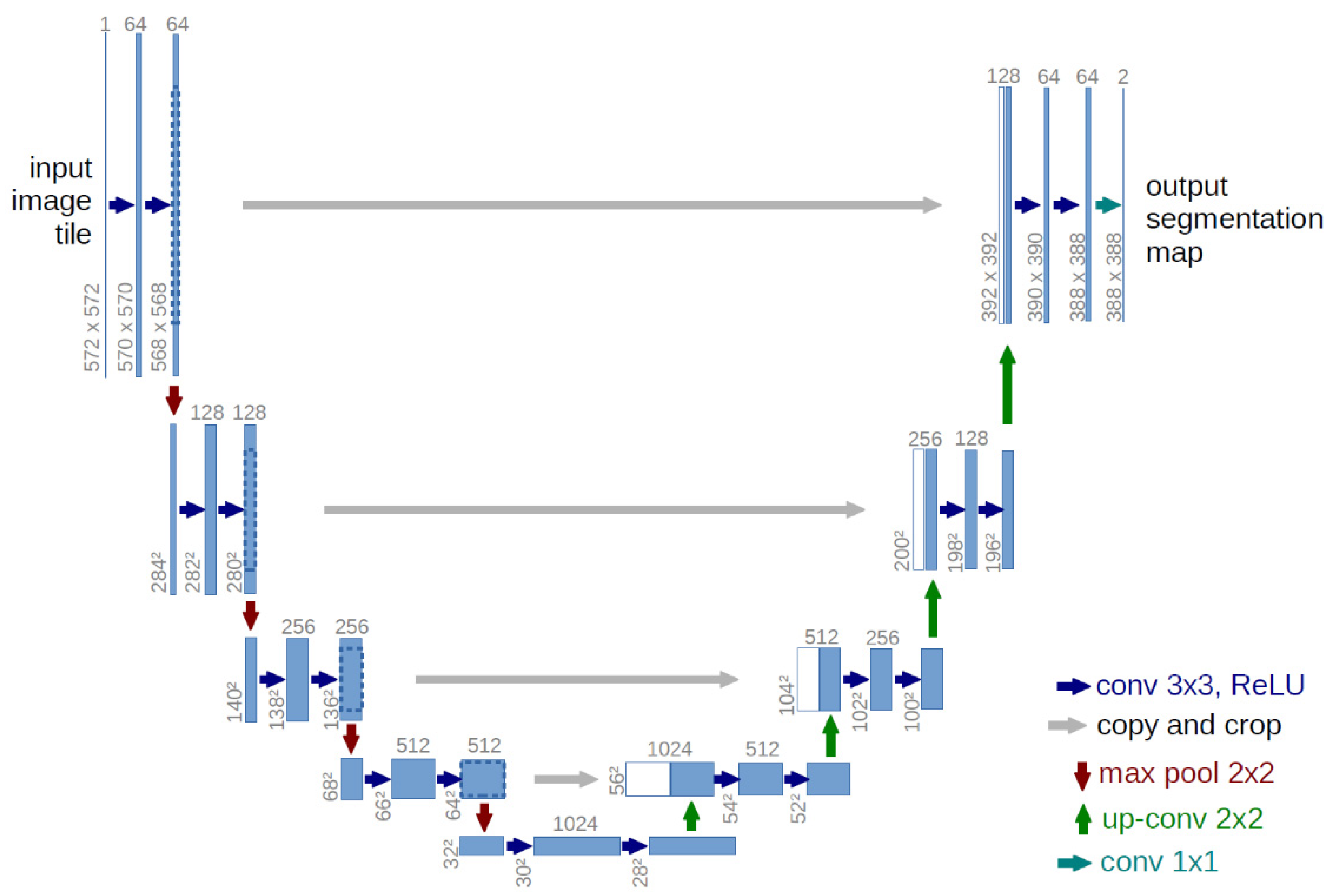

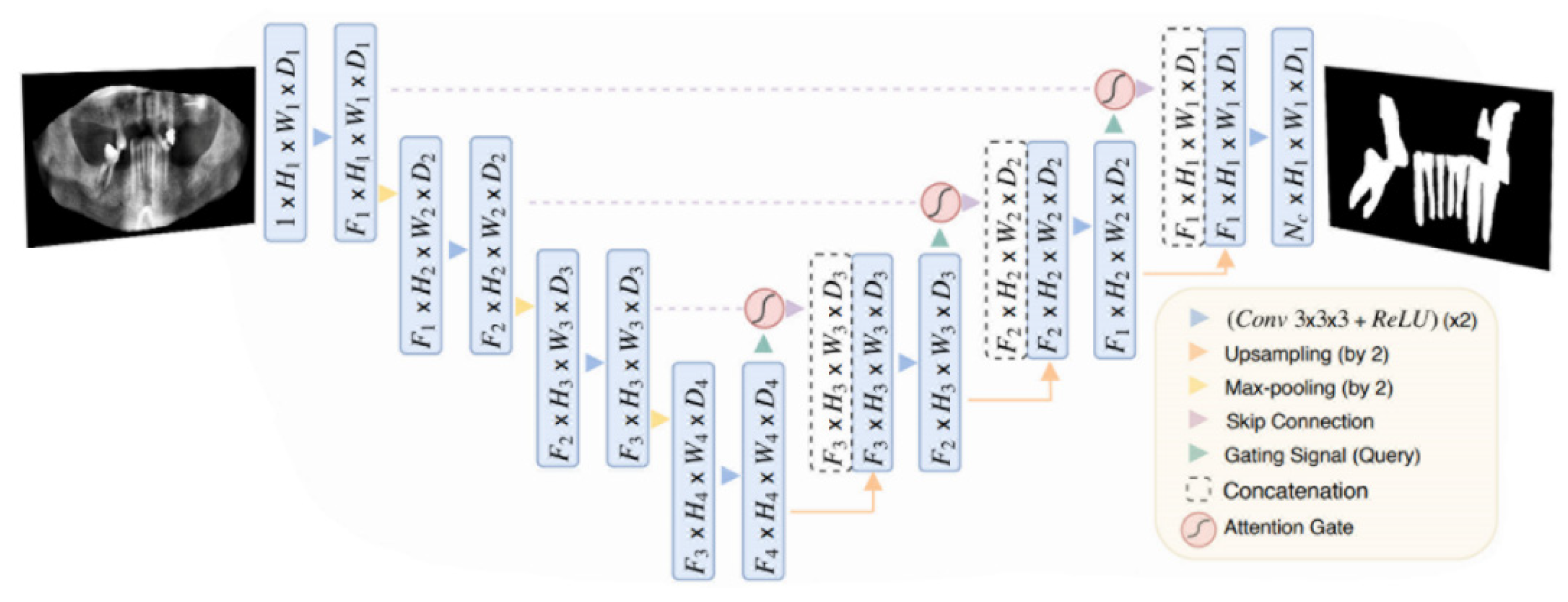

3.1. U-Net Architecture

U-Net is a famous architecture that was developed for biomedical image segmentation. U-Net gets its name from its U-like architecture, as shown in

Figure 5. It is an encoder-decoder architecture consisting of four encoder blocks and four decoder blocks connected via a 1x1 convolution bridge (bottleneck). The encoder part is designed to extract features from the input image and learn high-level features. This process is done through

A sequence of convolutional blocks. Each encoder block comprises 3x3 convolutions followed by a Rectified Linear Unit (ReLU) activation function. After each convolution and ReLU activation, a maximum pooling layer of 2x2 is applied to the output channels in which the dimensions of the channels are reduced by half. The maximum pooling process reduces the computational cost required by the network by reducing the number of trainable parameters. Each decoder block performs a 2x2 transposed convolution (up-sampling) on the channels that came from the encoder through the bottleneck and then concatenates each of the outputted channels from the encoder blocks with the up-sampled channels that come from each block of the up-sampling convolutions. This process helps restore lost information because of the network's depth. Finally, at the last up-sampling convolution, the model applies a sigmoid activation function to the output logits to yield a binary segmentation or a SoftMax in the case of multi-class segmentation.

3.2. Attention U-Net

Attention U-Net [

1] was introduced in 2018. Attention U-Net is nothing but a regular U-Net model, but with the so-called Attention Gate (AG) shown in

Figure 3, between each skip connection and up-sampling layer in the U-Net model shown in

Figure 6. The initial task for the proposed model was the segmentation of CT images of the pancreas, which was considered difficult due to low tissue contrast and large variance in the organ's shape and size. The pape's main contribution was a modification of the attention proposed in [

42], which involved changing the calculation for the attention coefficients from a global feature vector to a grid-based vector. The grid-based attention module computes attention maps that capture the importance of different spatial locations. This change made the attention coefficients target more local regions and better enhance the performance of any Fully Convolutional Network that implements this type of attention. The authors then showcased their proposed Attention Module on the U-Net architecture, which resulted in this novel architecture which was an impactful change in the U-Net architecture. We adopt the same model but with a slightly different modification on the hyperparameters and training setup (e.g. data split and training criterion).

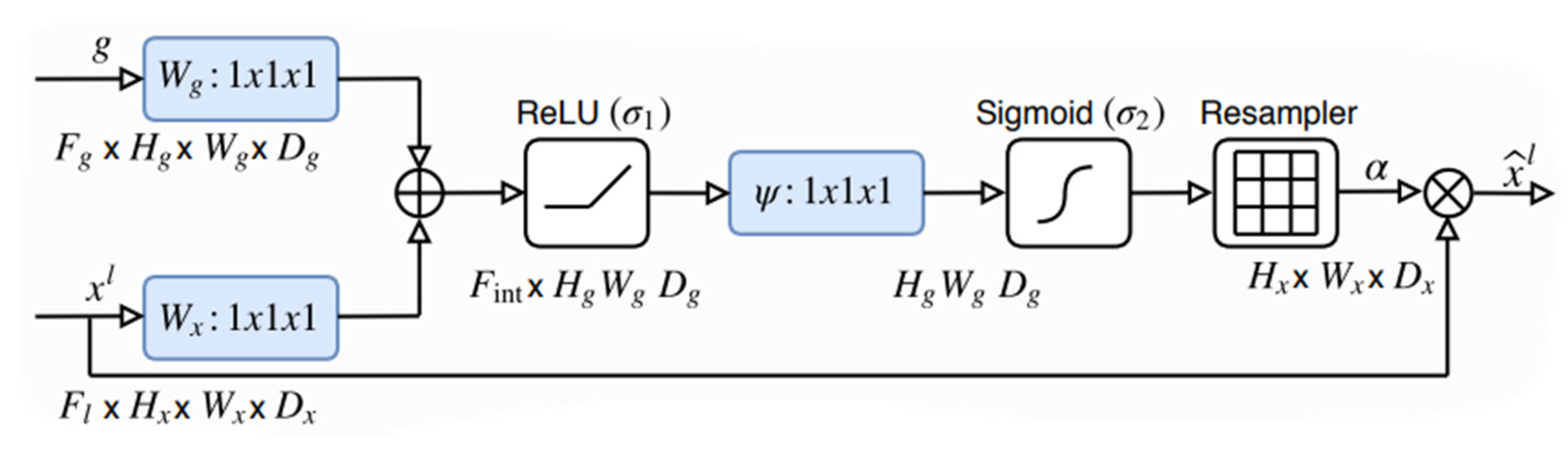

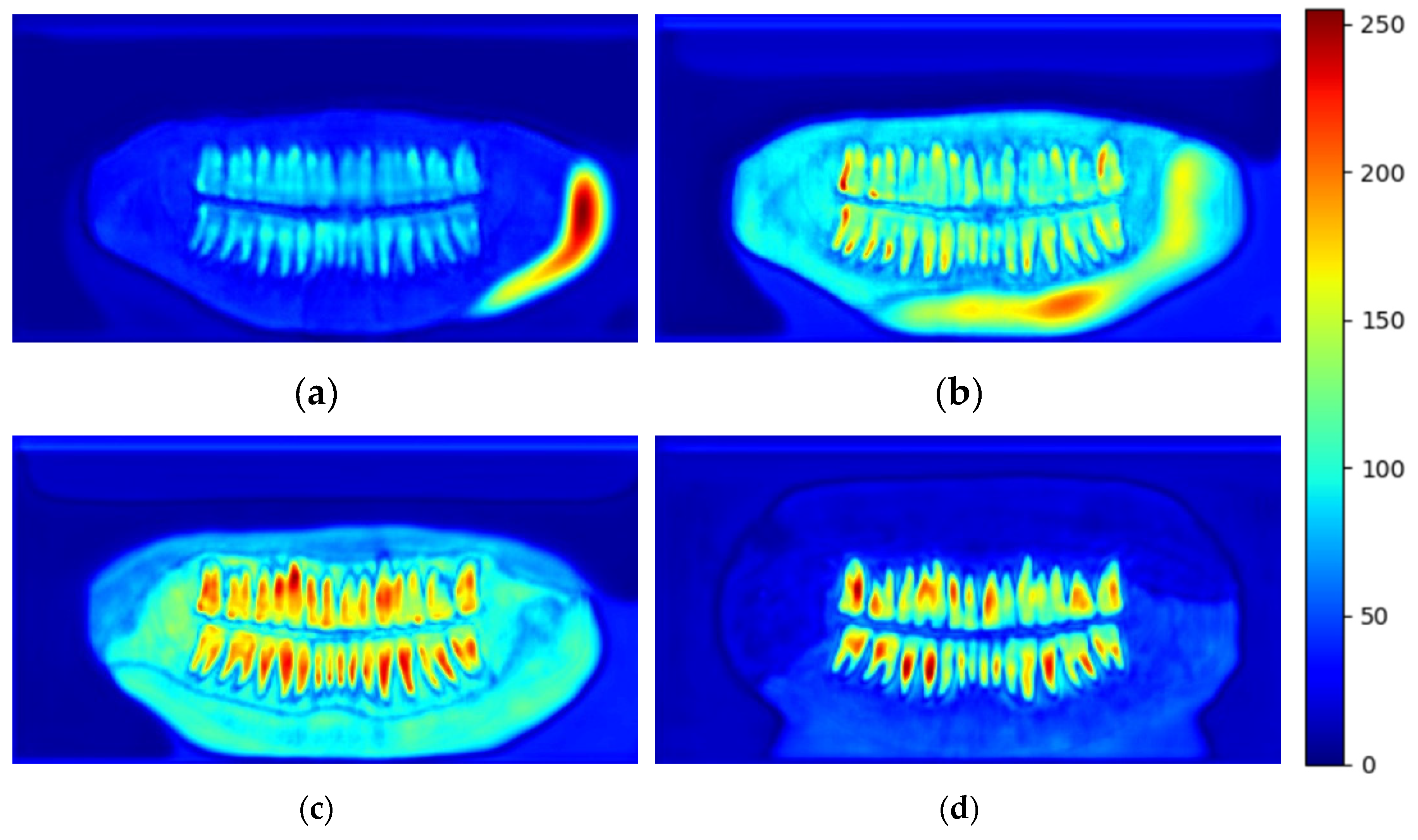

3.2.1. Attention Modules Analysis

Attention helps identify crucial regions in the image and reduce the feature responses to only preserve the important features relevant to the segmentation task (see

Figure 8d).

Figure 7 shows the whole process of the attention gate proposed in [

1], but for a 2D input, the process involves a linear transformation

which is a

convolution on the gating signal coming from the up-sample layer

which is used to determine the focus region and contains more representative contextual information; this process is done in conjunction with linear transformation

which is

convolution on the feature maps coming from the skip-connection layer

from the encoder, which has a better spatial feature representation, the output of this operation will leave us with two intermediate vectors

from the linear transformation

and

from the linear transformation

.

The preceding step, which involves the element-wise addition of the two vectors

and

Namely additive attention. The result of the addition

is then passed through a non-linearity activation function (ReLU)

and another

convolution

is done on the output of the non-linearity to obtain the attention coefficients, but its values are bounded between

and

so it is passed through a sigmoid activation function

which scales the values between 0 and 1. The reason for using sigmoid instead of SoftMax, which is normally used in soft attention, is that SoftMax yields sparse activations at the output. The scaled values of

are the final attention coefficients α. A bilinear then upsamples the coefficients to match the original size of

and multiplied elementwise with

to obtain

Which represents the incoming skip connection that is concatenated with the up-sampling layer in the context of a standard U-Net. The attention coefficients are calculated channel-wise and updated each time the network backpropagates learning which features to suppress and which features to pay attention to.

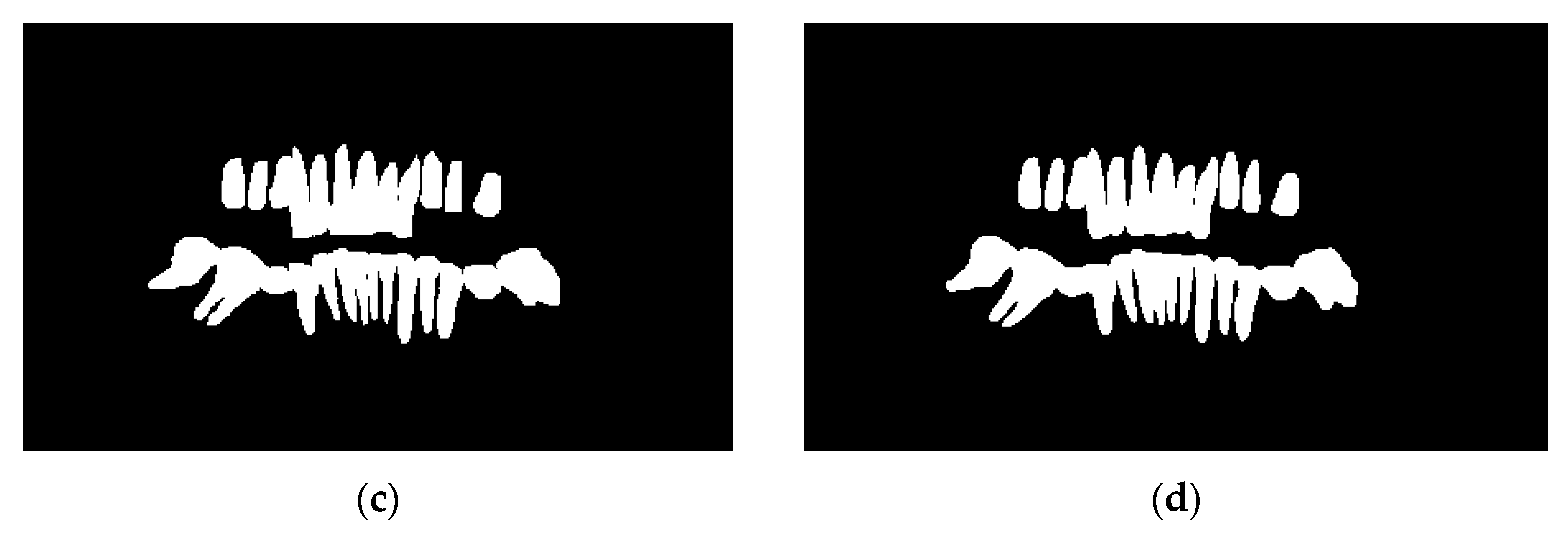

Figure 8 visualizes the attention layer preceding the last attention layer within the network. As can be seen, the attention gate filters out irrelevant information when trained for enough epochs.

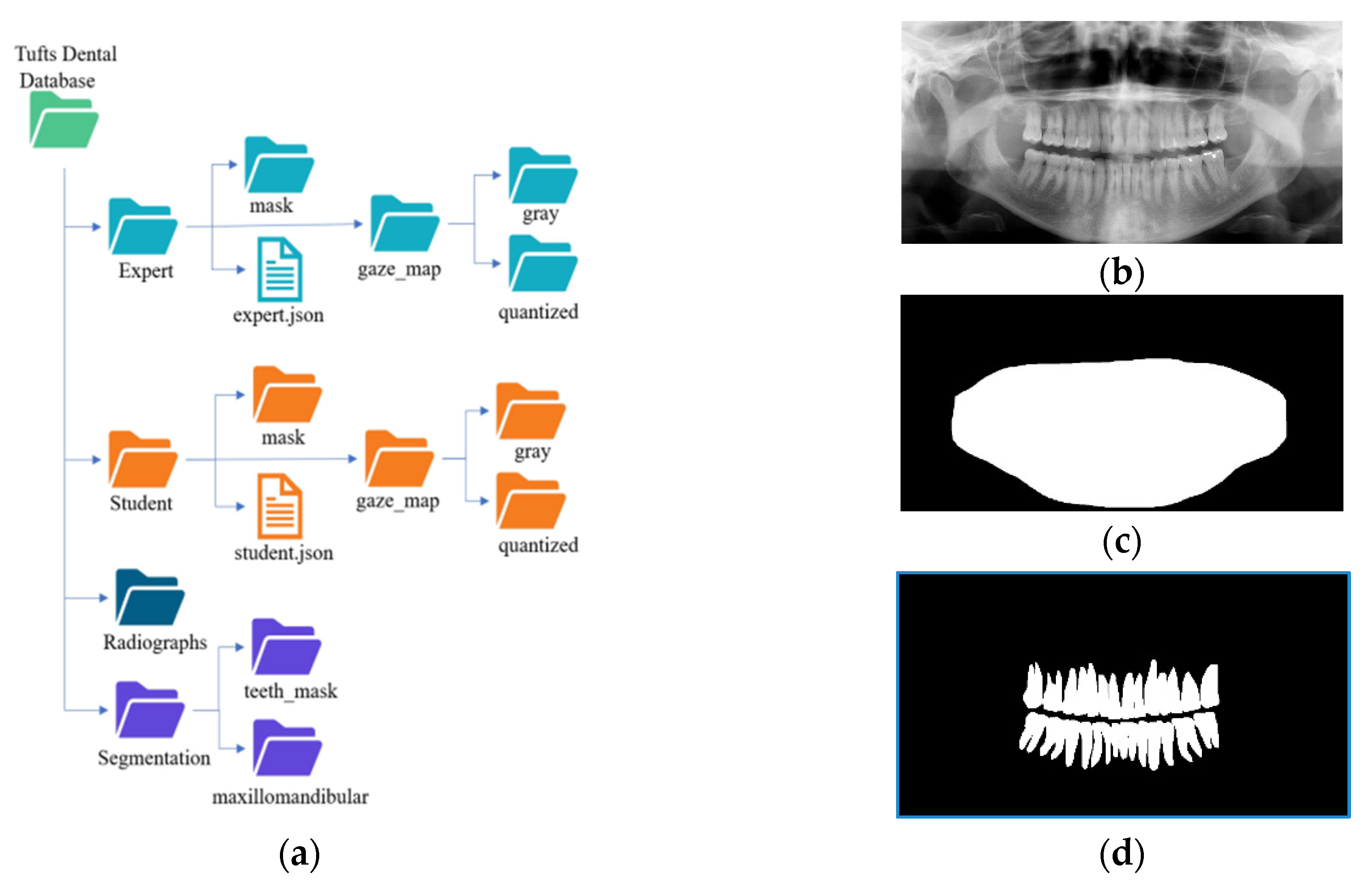

3.3. Dataset Collection and Description

The TUFTS university dataset [

41] is a multimodal dataset consisting of 1000 de-identified images of panoramic radiographs shown in

Figure 9b and five other major components such as 1) teeth masks in

Figure 9d. ,2) maxillomandibular masks shown in

Figure 9c, 3) eye tracker generated maps (grey and quantized), 4) text information containing the description of each radiograph, and 5) masks outlining the abnormalities. Each abnormality segmentation mask and the radiograph description are further split into expert and student-level annotations. The classification of the radiographs is based on five categories: peripheral characteristics, anatomical location, radiodensity, effects on the surrounding structure, and the abnormality category, making it a multimodal dataset. The folder structure of the dataset is shown in

Figure 9a.

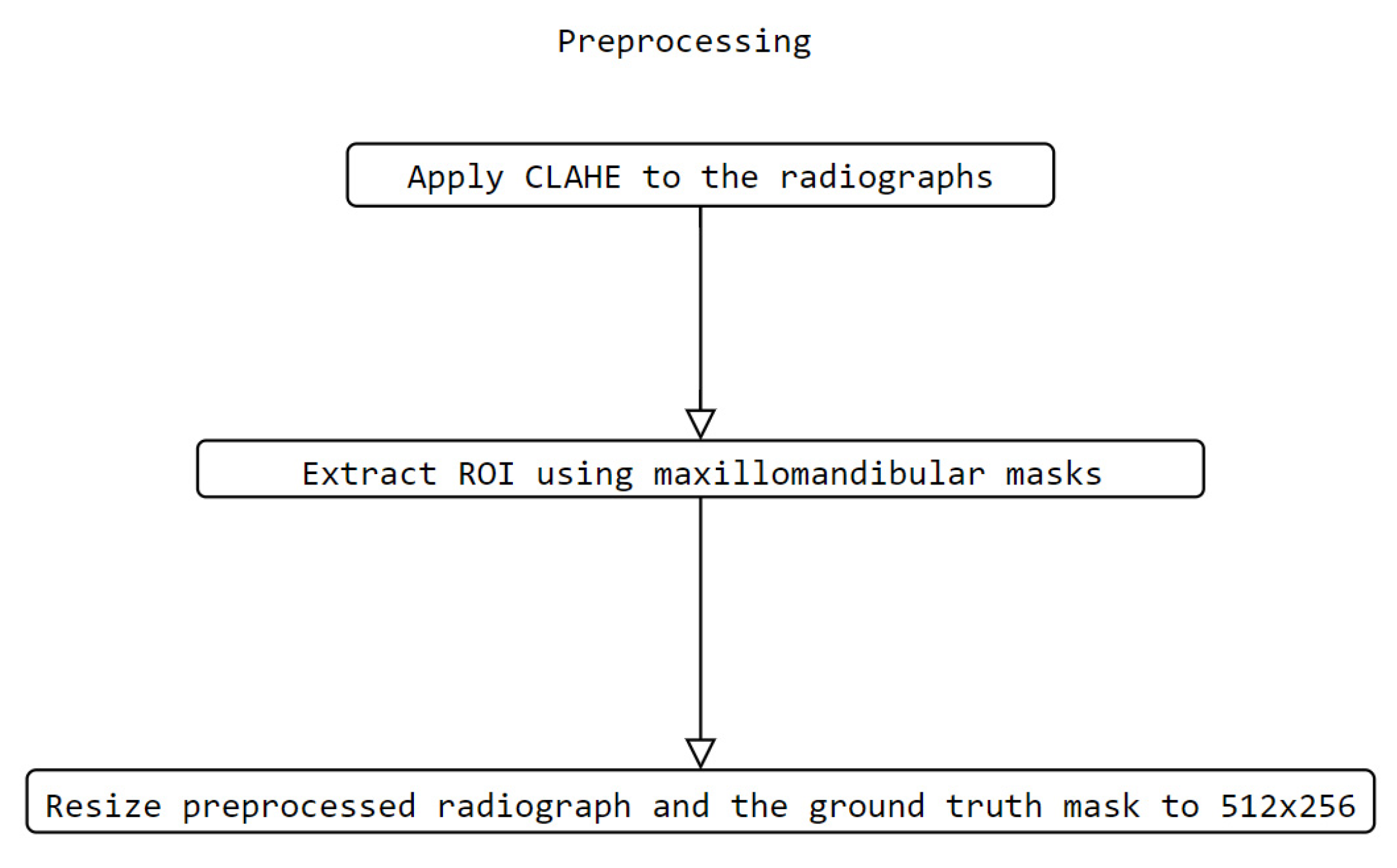

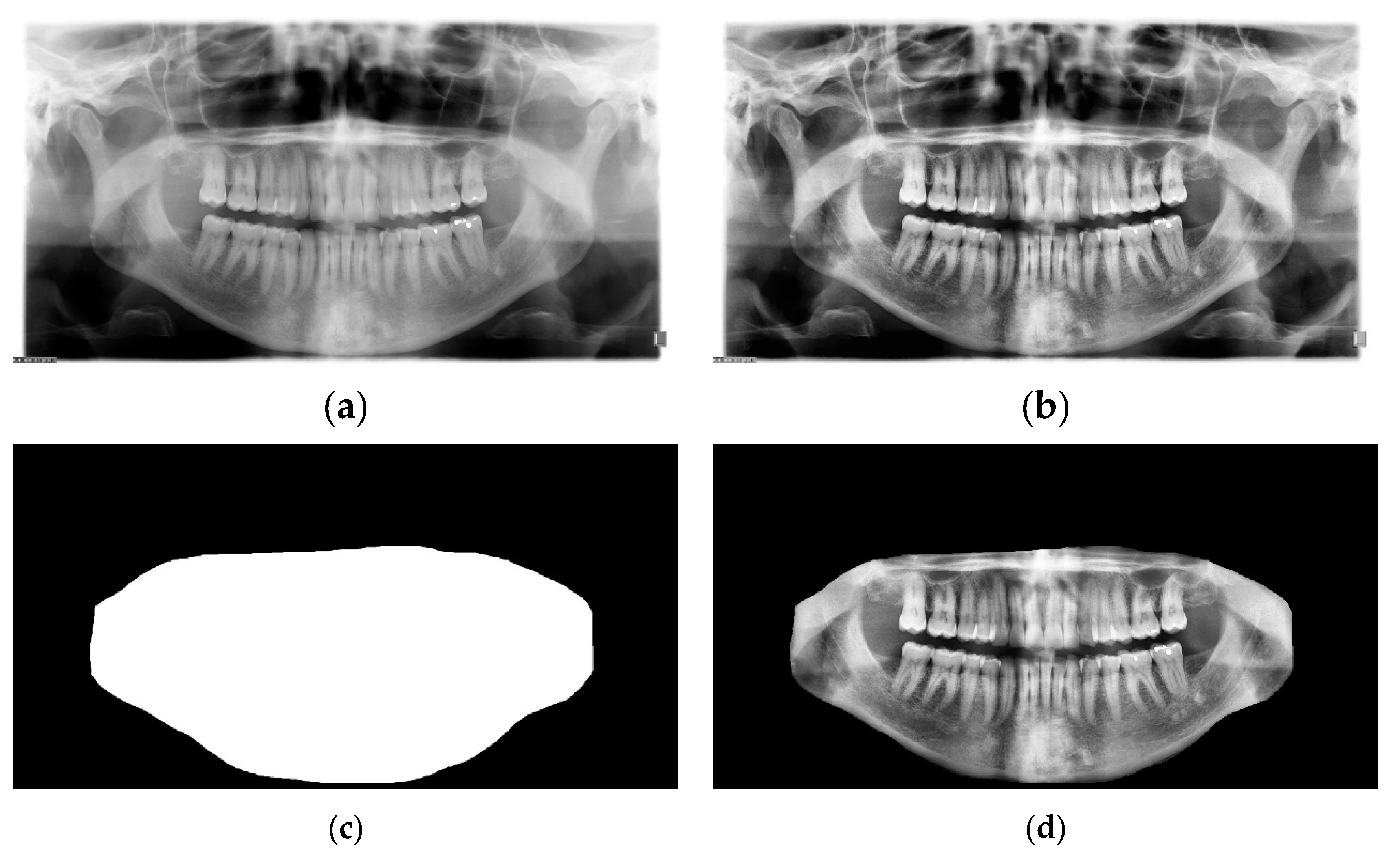

3.4. Data Preprocessing

The preprocessing process is as follows: Contrast Limited Adaptive Histogram Equalization (CLAHE) [

43] was applied to the images to enhance the image features. The contrast enhancement process had a major impact on the visibility of the images.

Figure 11b and

Figure 11a show the original and enhanced image, respectively. To further yield better performance from the model, the maxillomandibular mask shown in

Figure 11c was used by applying an AND operation to the maxillomandibular mask with the preprocessed image to get only the ROI that includes the teeth shown in

Figure 11d. Finally, the processed radiograph shown in Figure (11d) and its corresponding ground truth mask shown in

Figure 9d was resized to 512

256. The preprocessing was applied to all examples in the dataset. Figure 10 is a diagram showing the preprocessing pipeline.

3.5. Training Setup

The model was implemented in Pytorch framework and trained on an NVIDIA RTX 3050 GPU with 4GB RAM. Adam optimizer was used with a learning rate of

and. The model was trained on 100 epochs, ten epochs for each fold. The number of learnable kernels was reduced for computational resource limitations, the batch size was set to 8, and mixed precision strategy was used for training; this allows for less RAM usage as it scales the loss from a number that has 64-bit precision to 16-bit precision, making it viable to train on a low RAM. The preprocessed radiographs and teeth mask pairs were split randomly into ten folds for training and validation.

3.5.1. Loss Function

The segmentation of teeth can be considered a pixel-wise classification problem where the model tries to classify whether the given pixel belongs to the background or teeth class. When dealing with medical image segmentation, some approaches use the standard Cross Entropy (CE) [44, 38]. In contrast, others use metric-sensitive, minority-class penalizing losses, or a mixture between metric-sensitive and Cross Entropy losses [1, 45,19,20] which have shown significant performance in dealing with hard-to-segment regions. For this reason, dice loss is the most appropriate loss function for this specific task, as its main goal is to make the segmented region overlap with the ground truth as much as possible. The negative logarithm value of the dice loss is taken to further alleviate the problem of small misclassifications by penalizing the small errors with the logarithmic operator. This specific loss function was chosen due to the major presence of black pixels (background), which is not interesting in the segmentation task and could hinder performance.

3.5.2. Evaluation Metrics

Pixel Accuracy (PA), Intersection over Union (IoU), and Dice Coefficient (Dice) are employed as the evaluation metric for this study. While pixel accuracy is not a reliable metric to measure the real performance of a segmentation model, it is still used as a generic metric. The pixel accuracy is calculated as the number of correctly classified pixels over the total number of pixels as shown in formula (2); the resultant value is

with 1 indicating a high number of correctly classified pixels and 0 indicating no correctly classified pixels. On the other hand, dice coefficient (also known as dice score) and IoU are two of the most popular metrics used to evaluate the performance of segmentation models. The dice coefficient shown in formula (3) measures the similarity between predicted and ground truth segmentation masks, and it is calculated as twice the intersection of the predicted and ground truth masks divided by the sum of their areas; the resultant value is

with 1 indicating a perfect match between the ground truth and the predicted mask and 0 indicating no overlap. Similarly, intersection over union (IoU) shown in formula (4) measures the extent to which the segmentation aligns with the ground truth. It is calculated as the ratio of the intersection of the predicted and ground truth masks to their union, and the resultant value is

with 1 indicating a perfect match between the ground truth and the predicted mask and 0 indicating no overlap.

4. Experimental Results

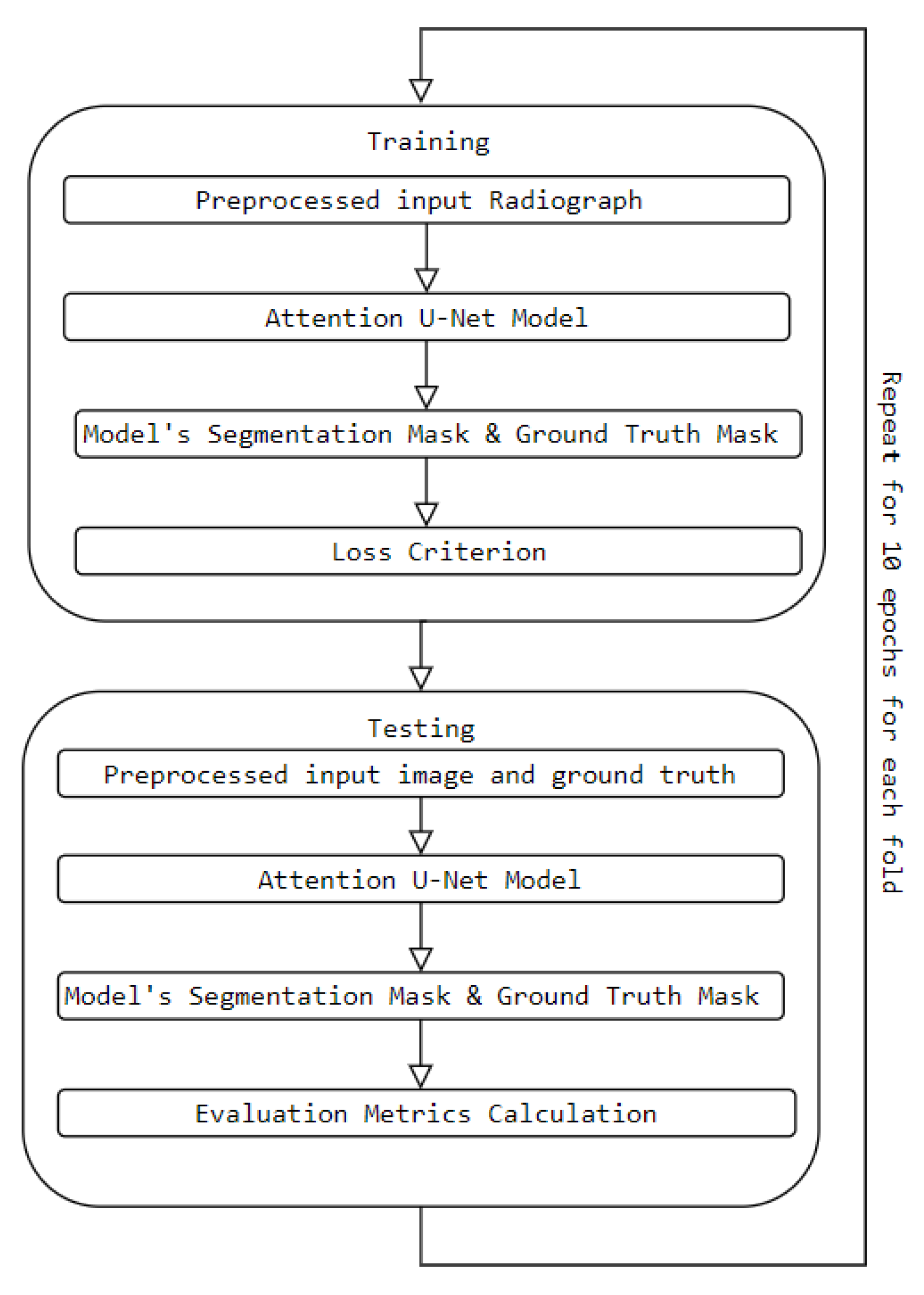

Figure 13 demonstrates the training and testing procedure and how we obtained the results.

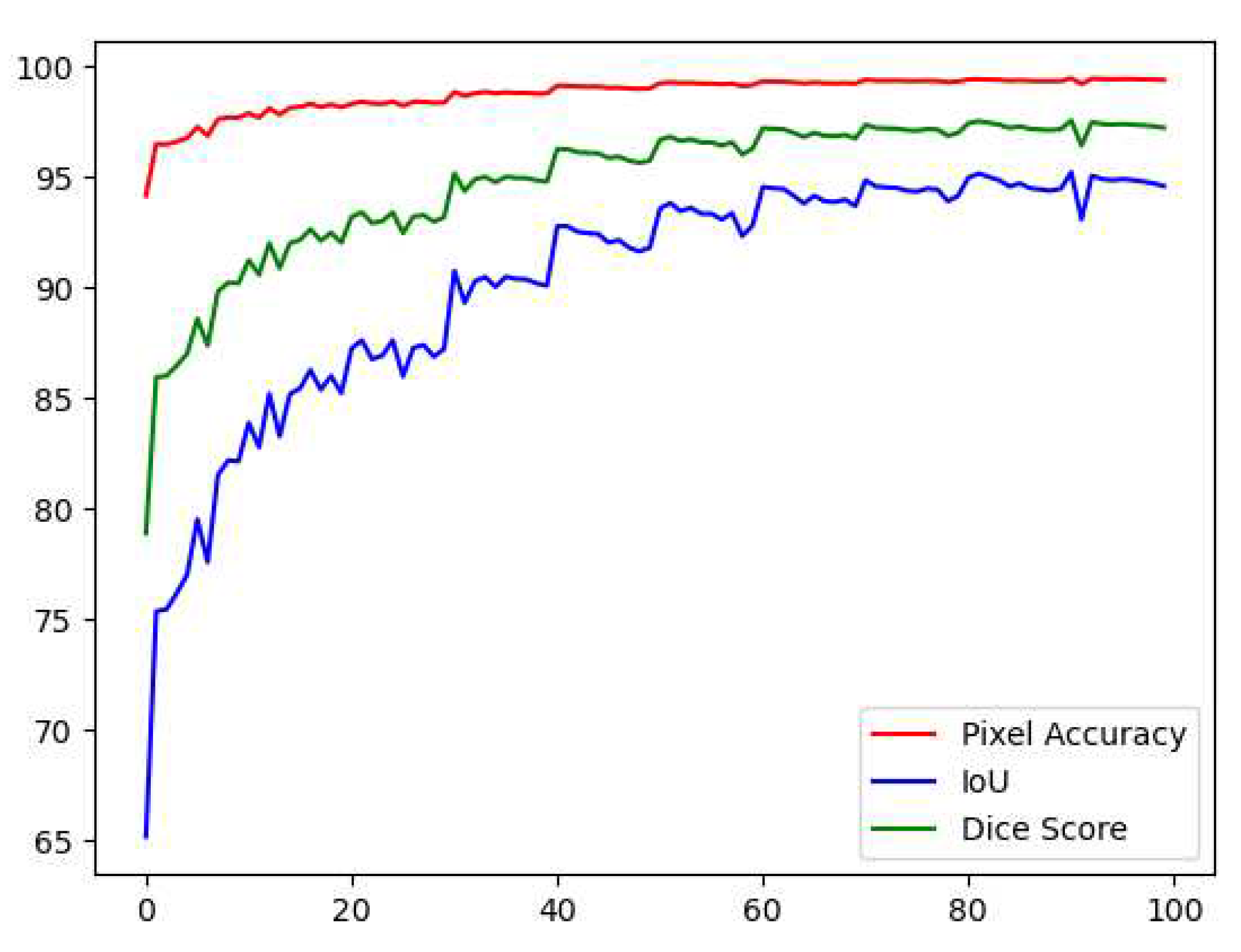

Table 1 demonstrates the results obtained after training the model on the 10-fold cross-validation sets. Fold one had a moderately good result with 90.18%,82.12%, and 97.66% for the dice score, IoU, and PA, respectively. The next fold improves by 2%, 3%, and 1%; the next fold improves by 1%,2%, and 0.20%. The model performance keeps improving throughout the training phase, yielding an average score of 95%,90.6%, and 98% for dice score, IoU, and PA, respectively.

Figure 12 shows the testing curve for each pixel accuracy, dice score, and IoU. The model was tested after each training epoch. It could be noticed that the evaluation was turbulent for each metric because some examples in the dataset had no teeth, which means that the masks are just black pixels. Such examples in the batches have resulted in an unstable convergence, but despite the instability, the model could accurately segment teeth. To further validate our results and visualize the model's performance, two random samples were taken from the test set and visualized w.r.t the predicted images (refer to

Figure 14); the model had a superior performance (refer to

Figure 14b and 14d for the model's predictions) in segmenting the two samples with minor imperfections yet great details quite like the ground truth masks (refer to

Figure 14a and 14c for the ground truths). The trained model was also compared to other baselines tested on the dataset [

41], including the same model trained with the same hyperparameter setup but with the difference in the data split. Attention U-Net has a better segmentation performance than different baselines trained for much longer with an already pre-trained backbone, as shown in

Table 2.

5. Conclusions

In this study, we explored and improved an attention-based network called "Attention U-Net" [

1] with reduced parameters for teeth segmentation on panoramic radiographs. The model demonstrated exceptional performance through training and testing on the TUFTS benchmark dataset using 10-fold cross-validation. The results of our evaluation showed that our proposed improvement achieved remarkable accuracy, with an average dice coefficient of 95.01%, intersection over union of 90.6%, and pixel accuracy of 98.82%. These scores surpass those obtained by other networks evaluated in the original paper for the dataset, underscoring the effectiveness of our approach. By leveraging Artificial Intelligence systems in dentistry, our research aims to contribute to the field and encourage dental practitioners at all levels of expertise to incorporate these systems as auxiliary tools in their diagnostic and post-operative screening phases. The utilization of such systems has the potential to enhance the accuracy and efficiency of dental diagnoses, leading to improved patient care and outcomes. Our findings highlight the promise of attention-based networks and their potential to revolutionize the field of dentistry. We hope our work will inspire further exploration and adoption of Artificial Intelligence technologies in dental practices, benefiting practitioners and patients alike.

Author Contributions

Conceptualization, A.M., and WH; methodology, A.M., SS, and WH; software, A.M.; validation, SS and WH; formal analysis, SS; investigation, A.M., SS, and WH; resources, SS; data curation, SS; writing—original draft preparation, A.M. and WH; writing—review and editing, A.M. and WH; visualization, WH; supervision, WH and SS; project administration, WH and SS All authors have read and agreed to the published version of the manuscript."

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Oktay, O.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv.org, 2018. https://arxiv.org/abs/1804. 0399. [Google Scholar]

- Kong, Z.; Xiong, F.; Zhang, C.; Fu, Z.; Zhang, M.; Weng, J.; Fan, M. Automated Maxillofacial Segmentation in Panoramic Dental X-Ray Images Using an Efficient Encoder-Decoder Network. IEEE Access 2020, 8, 207822–207833. [Google Scholar] [CrossRef]

- Wang, C.-W.; Huang, C.-T.; Lee, J.-H.; Li, C.-H.; Chang, S.-W.; Siao, M.-J.; Lai, T.-M.; Ibragimov, B.; Vrtovec, T.; Ronneberger, O.; et al. A benchmark for comparison of dental radiography analysis algorithms. Med Image Anal. 2016, 31, 63–76. [Google Scholar] [CrossRef]

- Wirtz A, Mirashi SG, Wesarg S. Automatic Teeth Segmentation in Panoramic X-Ray Images Using a Coupled Shape Model in Combination with a Neural Network. In: Frangi AF, Schnabel JA, Davatzikos C, Alberola-López C, Fichtinger G, editors. Medical Image Computing and Computer Assisted Intervention – MICCAI 2018 [Internet]. Cham: Springer International Publishing; 2018 [cited 2022 Aug 13]. p. 712–9. (Lecture Notes in Computer Science; vol. 11073). Available from: http://link.springer.com/10. 1007.

- Sabarudin, A.; Tiau, Y.J. Image quality assessment in panoramic dental radiography: a comparative study between conventional and digital systems. . 2013, 3, 43–48. [Google Scholar] [CrossRef]

- Kantor, M.L.; Reiskin, A.B.; Lurie, A.G. A clinical comparison of X-ray films for detection of proximal surface caries. J. Am. Dent. Assoc. 1985, 111, 967–969. [Google Scholar] [CrossRef]

- Fitzgerald, R. Error in Radiology. Clin. Radiol. 2001, 56, 938–946. [Google Scholar] [CrossRef]

- A. Brady, R. A. Brady, R. Ó. Laoide, P. McCarthy, and R. McDermott, "Discrepancy and error in radiology: concepts, causes and consequences," The Ulster medical journal, vol. 81, no. 1, pp. 3–9, 2012, Available: https://www.ncbi.nlm.nih. 3609. [Google Scholar]

- Lin, P.; Lai, Y.; Huang, P. An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recognit. 2010, 43, 1380–1392. [Google Scholar] [CrossRef]

- Mahoor, M.H.; Abdel-Mottaleb, M. Classification and numbering of teeth in dental bitewing images. Pattern Recognit. 2005, 38, 577–586. [Google Scholar] [CrossRef]

- Yuniarti, A.; Nugroho, A.S.; Amaliah, B.; Arifin, A.Z. Classification and Numbering of Dental Radiographs for an Automated Human Identification System. TELKOMNIKA (Telecommunication, Comput. Electron. Control. 2012, 10, 137–146. [Google Scholar] [CrossRef]

- Z. Li, W. Z. Li, W. Yang, S. Peng, and F. A: Liu, "A Survey of Convolutional Neural Networks, 0280; arXiv:2004.02806 [cs, eess], Apr. 2020, Available: https://arxiv.org/abs/2004. [Google Scholar]

- Ronneberger, P. Fischer, and T. Brox, "U-Net: Convolutional Networks for Biomedical Image Segmentation," arXiv.org, , 2015. https://arxiv.org/abs/1505. 18 May 0459. [Google Scholar]

- Kamnitsas, K.; Ledig, C.; Newcombe, V.F.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal. 2017, 36, 61–78. [Google Scholar] [CrossRef] [PubMed]

- Wang, R.; Lei, T.; Cui, R.; Zhang, B.; Meng, H.; Nandi, A.K. Medical image segmentation using deep learning: A survey. IET Image Process. 2022, 16, 1243–1267. [Google Scholar] [CrossRef]

- Li, W.; Jia, F.; Hu, Q. ; Wen Li$Research Lab for Medical Imaging and Digital Surgery; Shenzhen Institutes of Advanced Technology; Chinese Academy of Sciences; Shenzhen; China╃Fucang Jia$Research Lab for Medical Imaging and Digital Surgery; China╃Qingmao Hu$Research Lab for Medical Imaging and Digital Surgery; China Automatic Segmentation of Liver Tumor in CT Images with Deep Convolutional Neural Networks. J. Comput. Commun. 2015, 03, 146–151. [Google Scholar] [CrossRef]

- V. Cherukuri, P. V. Cherukuri, P. Ssenyonga, B. Warf, A. Kulkarni, V. Monga, and S. Schiff, "Learning Based Segmentation of CT Brain Images: Application to Post-operative Hydrocephalic Scans," IEEE Transactions on Biomedical Engineering, 2017. https://www.semanticscholar.org/paper/Learning-Based-Segmentation-of-CT-Brain-Images%3A-to-Cherukuri-Ssenyonga/f4c38fca0d2df65278e222f535b08e66830b03df (accessed , 2023). 29 May.

- Chen, J.; Chen, S.; Wee, L.; Dekker, A.; Bermejo, I. Deep learning based unpaired image-to-image translation applications for medical physics: a systematic review. Phys. Med. Biol. 2023, 68, 05TR01. [Google Scholar] [CrossRef] [PubMed]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Stoyanov, D., et al., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11045. [Google Scholar] [CrossRef]

- F. Isensee et al. S: "nnU-Net, 1048; arXiv:1809.10486 [cs], Sep. 2018, Available: https://arxiv.org/abs/1809.

- Machado, L.F.; Watanabe, P.C.A.; Rodrigues, G.A.; Jr, L.O.M. Deep learning for automatic mandible segmentation on dental panoramic X-Ray images. Biomed. Phys. Eng. Express 2023, 9. [Google Scholar] [CrossRef] [PubMed]

- Rohrer, C.; Krois, J.; Patel, J.; Meyer-Lueckel, H.; Rodrigues, J.A.; Schwendicke, F. Segmentation of Dental Restorations on Panoramic Radiographs Using Deep Learning. Diagnostics 2022, 12, 1316. [Google Scholar] [CrossRef]

- Song, I.-S.; Shin, H.-K.; Kang, J.-H.; Kim, J.-E.; Huh, K.-H.; Yi, W.-J.; Lee, S.-S.; Heo, M.-S. Deep learning-based apical lesion segmentation from panoramic radiographs. Imaging Sci. Dent. 2022, 52, 351–357. [Google Scholar] [CrossRef] [PubMed]

- Widyaningrum, R.; Candradewi, I.; Aji, N.R.A.S.; Aulianisa, R. Comparison of Multi-Label U-Net and Mask R-CNN for panoramic radiograph segmentation to detect periodontitis. Imaging Sci. Dent. 2022, 52, 383–391. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Zhang, Y.; Wang, X.; Shi, C.; Jiang, X.; Ye, Y.F. Hyperbolic Graph Attention Network. IEEE Trans. Big Data 2021, PP, 1–1. [Google Scholar] [CrossRef]

- Nguyen, D.-K.; Okatani, T. Improved Fusion of Visual and Language Representations by Dense Symmetric Co-attention for Visual Question Answering. 2018, 6087–6096. [CrossRef]

- Y. Liang et al., "Exploring Forensic Dental Identification with Deep Learning," 2021. Accessed: Jun. 02, 2023. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2021/file/1a423f7c07a179ec243e82b0c017a034-Paper.

- Li, W.; Zhu, X.; Wang, X.; Wang, F.; Liu, J.; Chen, M.; Wang, Y.; Yue, H. Segmentation and accurate identification of large carious lesions on high quality x-ray images based on Attentional U-Net model. A proof of concept study. J. Appl. Phys. 2022, 132, 033103. [Google Scholar] [CrossRef]

- A. Dosovitskiy et al. T: "An Image is Worth 16x16 Words, 1192; arXiv:2010.11929 [cs], Oct. 2020, Available: https://arxiv.org/abs/2010.

- Almalki and L., J. Latecki, "Self-Supervised Learning with Masked Image Modeling for Teeth Numbering, Detection of Dental Restorations, and Instance Segmentation in Dental Panoramic Radiographs," arXiv.org, Oct. 20, 2022. https://arxiv.org/abs/2210.11404 (accessed , 2023). 23 May.

- Harsh, P.; Chakraborty, R.; Tripathi, S.; Sharma, K. Attention U-Net Architecture for Dental Image Segmentation. 2021, 1–5. [CrossRef]

- Biswas, M.; Pramanik, R.; Sen, S.; Sinitca, A.; Kaplun, D.; Sarkar, R. Microstructural segmentation using a union of attention guided U-Net models with different color transformed images. Sci. Rep. 2023, 13, 1–14. [Google Scholar] [CrossRef]

- John, D.; Zhang, C. An attention-based U-Net for detecting deforestation within satellite sensor imagery. Int. J. Appl. Earth Obs. Geoinformation 2022, 107, 102685. [Google Scholar] [CrossRef]

- Karthik, R.; Radhakrishnan, M.; Rajalakshmi, R.; Raymann, J. Delineation of ischemic lesion from brain MRI using attention gated fully convolutional network. Biomed. Eng. Lett. 2020, 11, 3–13. [Google Scholar] [CrossRef]

- Dayı, B.; Üzen, H.; Çiçek. B.; Duman,.B. A Novel Deep Learning-Based Approach for Segmentation of Different Type Caries Lesions on Panoramic Radiographs. Diagnostics 2023, 13, 202. [Google Scholar] [CrossRef] [PubMed]

- MobileNetV2 Ensemble Segmentation for Mandibular on Panoramic Radiography. Int. J. Intell. Eng. Syst. 2023, 16, 546–560. [CrossRef]

- Arora, S.; Tripathy, S.K.; Gupta, R.; Srivastava, R. Exploiting multimodal CNN architecture for automated teeth segmentation on dental panoramic X-ray images. 2023, 237, 395–405. [CrossRef]

- J. Hu, L. J. Hu, L. Shen, S. Albanie, G. Sun, and E. Wu, "Squeeze-and-excitation networks," arXiv.org. https://arxiv.org/abs/1709.01507v4 (accessed Jul. 2, 2023).

- S. Woo, J. S. Woo, J. Park, J.-Y. Lee, and I. S. Kweon, "CBAM: Convolutional Block Attention Module," arXiv.org. https://arxiv.org/abs/1807.06521 (accessed Jul. 2, 2023).

- Panetta, K.; Rajendran, R.; Ramesh, A.; Rao, S.P.; Agaian, S. Tufts Dental Database: A Multimodal Panoramic X-Ray Dataset for Benchmarking Diagnostic Systems. IEEE J. Biomed. Heal. Informatics 2021, 26, 1650–1659. [Google Scholar] [CrossRef] [PubMed]

- Saumya Jetley, N. A. Lord, N. Lee, and Philip, "Learn to Pay Attention," OpenReview, , 2023. https://openreview.net/forum?id=HyzbhfWRW (accessed May 27, 2023). 21 May.

- Yoshimi, Y.; Mine, Y.; Ito, S.; Takeda, S.; Okazaki, S.; Nakamoto, T.; Nagasaki, T.; Kakimoto, N.; Murayama, T.; Tanimoto, K. Image preprocessing with contrast-limited adaptive histogram equalization improves the segmentation performance of deep learning for the articular disk of the temporomandibular joint on magnetic resonance images. Oral Surgery, Oral Med. Oral Pathol. Oral Radiol. [CrossRef]

- Ni, Z.-L.; Bian, G.-B.; Zhou, X.-H.; Hou, Z.-G.; Xie, X.-L.; Wang, C.; Zhou, Y.-J.; Li, R.-Q.; Li, Z. RAUNet: Residual Attention U-Net for Semantic Segmentation of Cataract Surgical Instruments. In Proceedings of the International Conference on Neural Information Processing, Sydney, NSW, Australia, 12–15 December 2019; pp. 139–149. [Google Scholar] [CrossRef]

- Oztekin, F.; Katar, O.; Sadak, F.; Aydogan, M.; Yildirim, T.T.; Plawiak, P.; Yildirim, O.; Talo, M.; Karabatak, M. Automatic semantic segmentation for dental restorations in panoramic radiography images using U-Net model. Int. J. Imaging Syst. Technol. 2022, 32, 1990–2001. [Google Scholar] [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

is the pixel accuracy,

is the pixel accuracy,  is the IoU, and

is the IoU, and  is the dice score.

is the dice score.

is the pixel accuracy,

is the pixel accuracy,  is the IoU, and

is the IoU, and  is the dice score.

is the dice score.