Submitted: 08 June 2023

Posted: 09 June 2023


Abstract

Keywords:

1. Introduction

2. Related Work

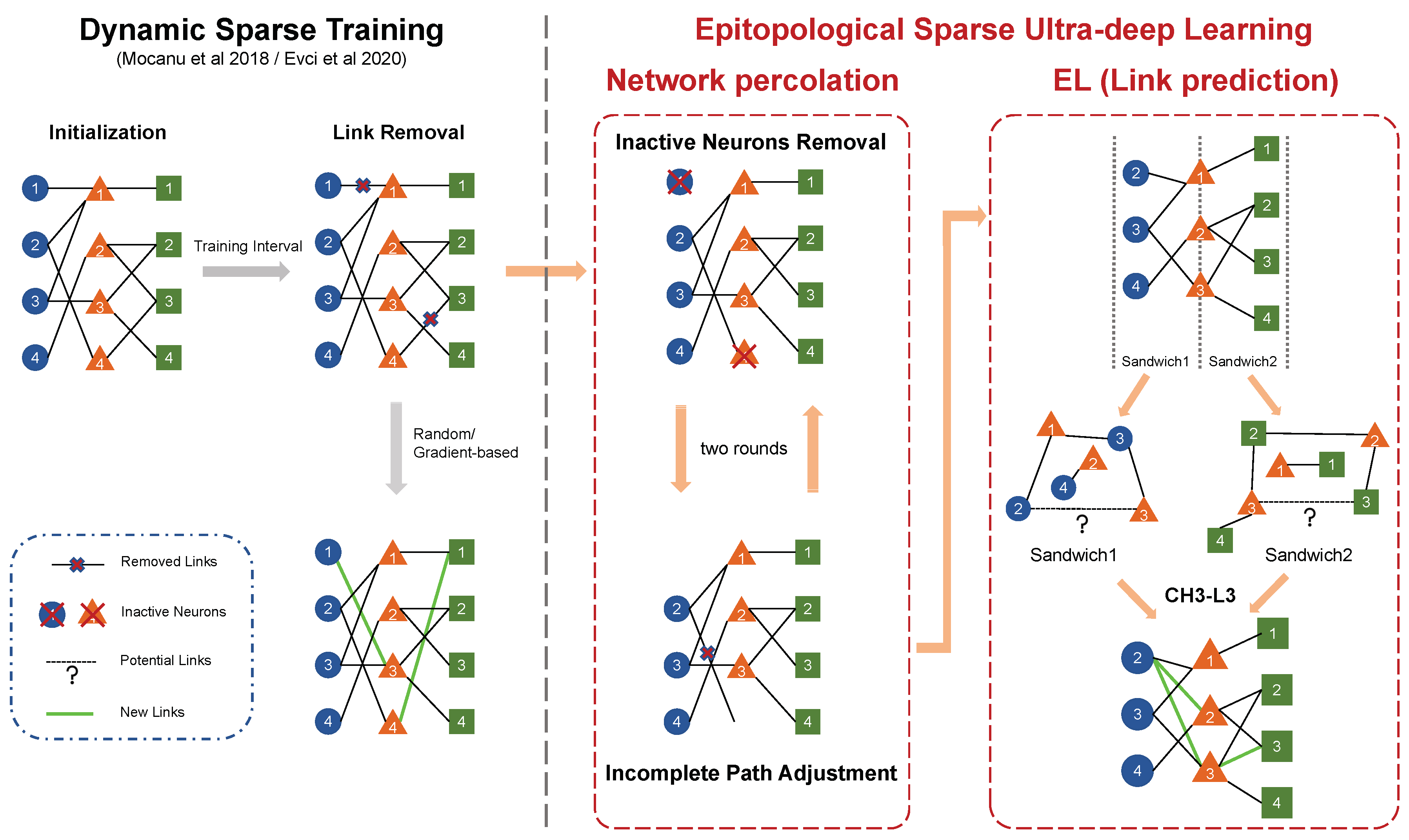

2.1. Dynamic Sparse Training

2.2. Epitopological Learning and Cannistraci-Hebb Network Automata Theory for Link Prediction

3. Epitopological Sparse Ultra-Deep Learning and Cannistraci-Hebb Training

3.1. Epitopological Sparse Ultra-Deep Learning

3.2. Cannistraci-Hebb Training

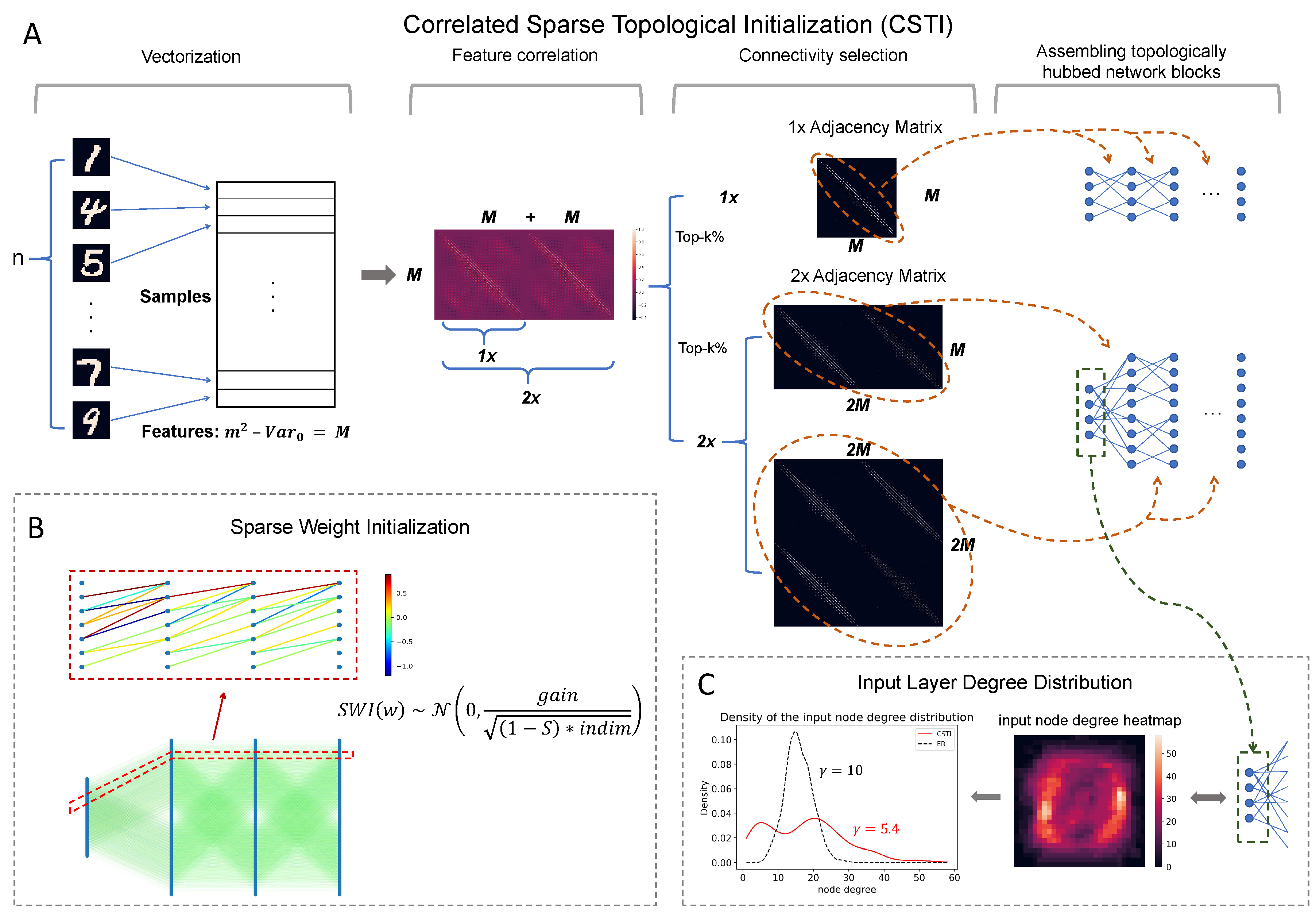

- Correlated sparse topological initialization (CSTI): as shown in Figure 2, CSTI consists of 4 steps. In the vectorization step, we construct an n × M matrix, where n is the number of samples selected from the training set and M is the number of valid features, i.e., the features that remain after excluding those with zero variance across the selected samples. In the feature-selection step, we compute the Pearson correlation between each pair of valid features, which yields a correlation matrix. We then construct a sparse adjacency matrix in which the positions marked with "1" (shown in white in the heatmap of Figure 2A) correspond to the top-k% values of the correlation matrix; the value of k depends on the desired sparsity level. This adjacency matrix defines the topology of each sandwich layer. The dimension of the hidden layer is set by a scaling factor denoted 'x': a scaling factor of 1x means that the hidden layer has the same dimension as the input, while 2x means that it is twice the input dimension, so the hidden dimension is variable. Moreover, since ESUL can efficiently reduce the dimension of each layer, the hidden dimension can automatically shrink to the inferred size. In this study we focus only on the sandwich layers of each model. Where sandwich layers cannot receive direct information from the input features, as in CNNs and Transformers, we directly run the untrained network and use CSTI to initialize the adjacency matrix of each layer. The detailed implementation for VGG16 and the Transformer can be found in the supplementary material.

- Sparse Weight Initialization (SWI): in addition to the topological initialization, we also recognize the importance of weight initialization in the sparse network. Standard initialization methods such as Kaiming [24] or Xavier [25] are designed to keep the variance of the values consistent across layers. However, they are not suitable for initializing sparse networks: each neuron of a sparse layer receives only a fraction of the inputs, so the variance is no longer consistent with that of the previous layer. To address this issue, we propose a method that can assign initial weights at any sparsity level. SWI can also be applied to a fully connected network, in which case it becomes equivalent to Kaiming initialization. Here we provide the mathematical formula for SWI; the rationale behind its definition is given in the supplementary material:

  $$w \sim \mathcal{N}(0, \sigma^2), \qquad \sigma = \frac{\mathrm{gain}}{\sqrt{(1-S)\,\mathrm{indim}}}$$

  where $\mathcal{N}(0, \sigma^2)$ denotes the normal distribution with a mean of 0 and a variance of $\sigma^2$. The value of $\mathrm{gain}$ varies for different activation functions; in this article, all models except the Transformer use ReLU, for which the gain is $\sqrt{2}$. $S$ denotes the desired sparsity and $\mathrm{indim}$ the input dimension of each sandwich layer.

- Epitopological prediction: this step corresponds to ESUL (introduced in Section 3.1), but evolves the sparse topological structure starting from the CSTI + SWI initialization rather than from a random one.

- Early stop and weight refinement: during epitopological prediction, the links that are removed and those that are added commonly overlap, as shown in the last plot of Suppl. Figure S2D. After several rounds of topological evolution, the overlap rate can become high, indicating that the network has reached a relatively stable topological structure. At that point, ESUL may keep removing and re-adding mostly the same links, which slows down training. To solve this problem, we introduce an early-stop mechanism for each sandwich layer: when the overlap rate between the removed and added links reaches a threshold (we use 90%), we stop the epitopological prediction for that layer. Once all the sandwich layers have met the early-stopping condition, the model focuses on learning and refining the weights on the obtained network structure.
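The four CSTI steps can be illustrated with a minimal NumPy sketch for a single sandwich layer at 1x scaling. It rests on stated assumptions rather than the authors' code: links are ranked by absolute Pearson correlation (the text only says "top-k% values"), `density` stands for 1 − sparsity, and the function name `csti_adjacency` is hypothetical.

```python
import numpy as np


def csti_adjacency(X, density):
    """Sketch of correlated sparse topological initialization for one layer.

    X:       (n, D) array with n samples drawn from the training set.
    density: fraction of links to keep, i.e. 1 - sparsity (e.g. 0.01 for 99%).
    Returns (mask, valid_idx): an (M, M) boolean adjacency matrix for a 1x
    hidden layer, and the indices of the M valid (non-constant) features.
    """
    # Step 1, vectorization: drop zero-variance features -> n x M matrix.
    valid_idx = np.flatnonzero(X.var(axis=0) > 0)
    Xv = X[:, valid_idx]

    # Steps 2-3: Pearson correlation between every pair of valid features
    # (columns are variables, hence rowvar=False) -> M x M correlation matrix.
    corr = np.corrcoef(Xv, rowvar=False)

    # Step 4: mark the top-k% entries with 1. Ranking by absolute correlation
    # is an assumption of this sketch. The diagonal (self-correlation = 1)
    # always ranks highest, so very sparse masks start close to the identity.
    scores = np.abs(corr).ravel()
    k = max(1, int(round(density * scores.size)))
    top = np.argpartition(scores, -k)[-k:]
    mask = np.zeros(scores.size, dtype=bool)
    mask[top] = True
    return mask.reshape(corr.shape), valid_idx
```

`np.argpartition` selects exactly k entries even when scores tie, which keeps the realized sparsity equal to the requested one.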
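The SWI rule can be sketched as follows, assuming σ = gain / √((1 − S) · indim) and a boolean mask of shape (indim, outdim). `swi_init` and its parameters are illustrative names, not the authors' API.

```python
import numpy as np


def swi_init(mask, sparsity, gain=np.sqrt(2.0), rng=None):
    """Sketch of sparse weight initialization for one sandwich layer.

    mask:     (indim, outdim) boolean adjacency; True marks an existing link.
    sparsity: desired sparsity S in [0, 1).
    gain:     activation-dependent gain; sqrt(2) corresponds to ReLU.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    rng = np.random.default_rng() if rng is None else rng
    indim = mask.shape[0]
    # Each output neuron keeps roughly (1 - S) * indim incoming links, so that
    # effective fan-in replaces indim in the Kaiming standard deviation.
    sigma = gain / np.sqrt((1.0 - sparsity) * indim)
    weights = rng.normal(0.0, sigma, size=mask.shape)
    # Non-existing links are set to exactly zero.
    return np.where(mask, weights, 0.0)
```

With a dense mask and `sparsity=0.0`, σ reduces to √(2 / indim), the Kaiming normal (fan-in) standard deviation for ReLU.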
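The per-layer early-stop rule can be sketched as below. The overlap rate is not formally defined in this section, so the sketch assumes it is the fraction of removed links that are re-added in the same round; links are represented as (input, output) index pairs, and the class and function names are hypothetical.

```python
def overlap_rate(removed, added):
    """Fraction of removed links re-added in the same round (assumed definition)."""
    removed, added = set(removed), set(added)
    if not removed:
        # Nothing was rewired, so the topology is treated as already stable.
        return 1.0
    return len(removed & added) / len(removed)


class LayerEarlyStopper:
    """Tracks whether epitopological prediction should stop for one sandwich layer."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # 90%, as in the text
        self.stopped = False

    def update(self, removed, added):
        # Once a layer reaches the threshold, its topology stays frozen.
        if not self.stopped and overlap_rate(removed, added) >= self.threshold:
            self.stopped = True
        return self.stopped


def all_layers_stopped(stoppers):
    """True when every sandwich layer has met the early-stop condition,
    i.e. training can switch to refining weights on the fixed topology."""
    return all(s.stopped for s in stoppers)
```

Keeping one stopper per layer mirrors the text: layers freeze independently, and weight refinement starts only after the last one stops.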
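As a worked check of the stated equivalence with Kaiming initialization, assume SWI uses σ = gain / √((1 − S) · indim) with the ReLU gain √2. Setting S = 0 recovers Kaiming, and for S > 0 the standard deviation grows by a factor 1/√(1 − S) to compensate for the smaller effective fan-in. The numbers below are illustrative, for an input dimension of 784 (the MNIST pixel count) at 99% sparsity:

$$\sigma_{\mathrm{SWI}}\big|_{S=0} = \frac{\sqrt{2}}{\sqrt{\mathrm{indim}}} = \sigma_{\mathrm{Kaiming}}$$

$$\sigma_{\mathrm{SWI}}\big|_{S=0.99,\ \mathrm{indim}=784} = \frac{\sqrt{2}}{\sqrt{0.01 \times 784}} = \frac{\sqrt{2}}{2.8} \approx 0.505, \qquad \sigma_{\mathrm{Kaiming}} = \frac{\sqrt{2}}{28} \approx 0.0505$$

The tenfold ratio equals $1/\sqrt{1-S} = 1/\sqrt{0.01}$.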

4. Results

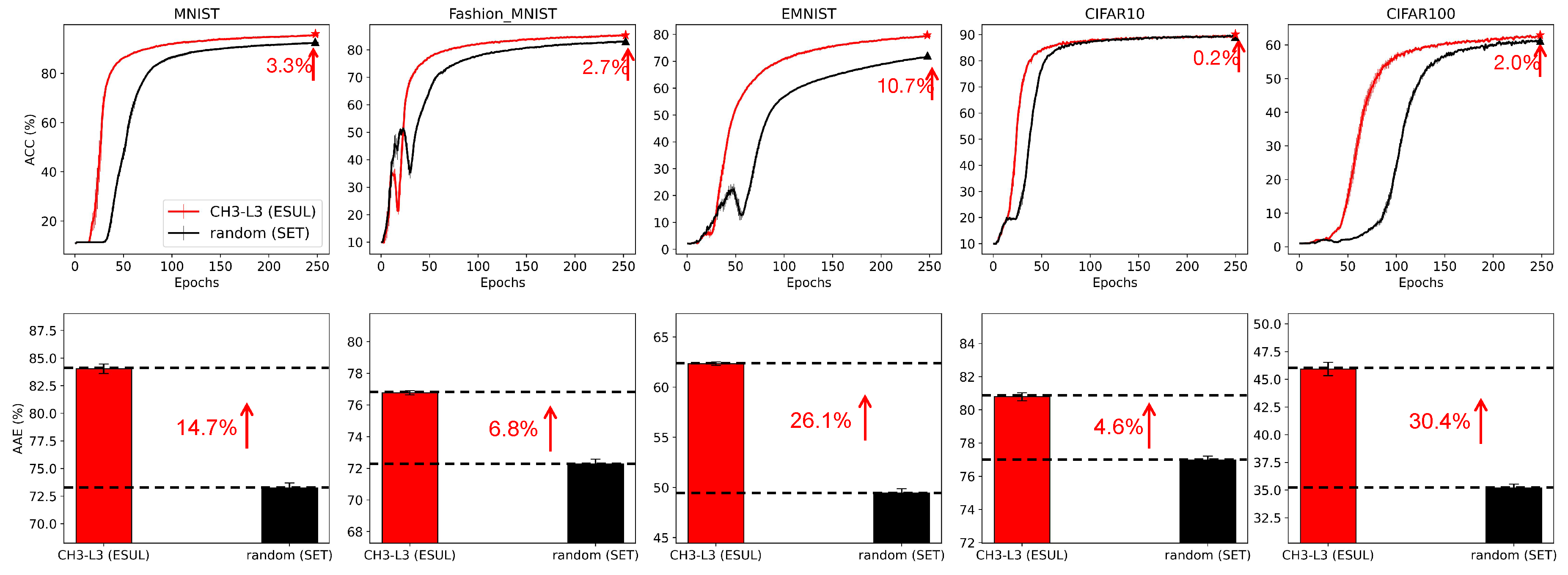

4.1. Results of ESUL

4.2. Results of CHT

| Method | MNIST (MLP) ACC | MNIST (MLP) AAE | Fashion_MNIST (MLP) ACC | Fashion_MNIST (MLP) AAE | EMNIST (MLP) ACC | EMNIST (MLP) AAE |
|---|---|---|---|---|---|---|
| FC | 98.69±0.02 | 96.33±0.16 | 90.43±0.09 | 87.40±0.02 | 85.58±0.06 | 81.75±0.10 |
| RigL | 97.40±0.07 | 93.69±0.10 | 88.02±0.11 | 84.49±0.12 | 82.96±0.04 | 78.01±0.06 |
| CHT | 98.05±0.04 | 95.45±0.05 | 88.07±0.11 | 85.20±0.06 | 83.82±0.04 | 80.25±0.20 |
| FC | 98.73±0.03 | 96.27±0.13 | 90.74±0.13 | 87.58±0.04 | 85.85±0.05 | 82.19±0.12 |
| RigL | 97.91±0.09 | 94.25±0.03 | 88.66±0.07 | 85.23±0.08 | 83.44±0.09 | 79.10±0.25 |
| CHT | 98.34±0.08 | 95.60±0.05 | 88.34±0.07 | 85.53±0.25 | 85.43±0.10 | 81.18±0.15 |

| Method | CIFAR10 (VGG16) ACC | CIFAR10 (VGG16) AAE | CIFAR100 (VGG16) ACC | CIFAR100 (VGG16) AAE | Multi30K (Transformer) BLEU | Multi30K (Transformer) AAE |
|---|---|---|---|---|---|---|
| FC | 91.52±0.04 | 87.74±0.03 | 66.73±0.06 | 57.21±0.11 | 24.0±0.20 | 20.9±0.13 |
| RigL | 91.60±0.10* | 86.54±0.14 | 67.87±0.17* | 53.80±0.49 | 21.1±0.08 | 18.1±0.08 |
| CHT | 91.68±0.15* | 86.57±0.08 | 67.58±0.30* | 57.30±0.20* | 21.3±0.29 | 18.3±0.13 |
| FC | 91.75±0.07 | 87.86±0.02 | 66.34±0.06 | 57.02±0.04 | - | - |
| RigL | 91.75±0.03 | 87.07±0.09 | 67.88±0.35* | 54.08±0.43 | - | - |
| CHT | 91.98±0.03* | 88.29±0.10* | 67.70±0.16* | 57.79±0.08* | - | - |

| Method | MNIST | Fashion_MNIST | EMNIST | CIFAR10 | CIFAR100 | Multi30K |
|---|---|---|---|---|---|---|
| CHT | 31.15% | 30.45% | 42.22% | 32.52% | 32.32% | 33.86% |
| RigL | 97.45% | 98.69% | 89.19% | 94.14% | 93.50% | 88.7% |
| CHT | 33.27% | 29.25% | 39.78% | 34.24% | 35.71% | - |
| RigL | 100% | 100% | 99.82% | 99.83% | 99.80% | - |

References

1. Belkin, M.; Hsu, D.; Ma, S.; Mandal, S. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proc. Natl. Acad. Sci. 2019, 116, 15849–15854.

2. Hinton, G.E.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. CoRR, 1503.

3. Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Network with Pruning, Trained Quantization and Huffman Coding. In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016, Conference Track Proceedings; Bengio, Y., LeCun, Y., Eds.; 2016.

4. Chen, W.; Wilson, J.T.; Tyree, S.; Weinberger, K.Q.; Chen, Y. Compressing Neural Networks with the Hashing Trick. In Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6–11 July 2015; Bach, F.R., Blei, D.M., Eds.; JMLR.org, 2015; Vol. 37, JMLR Workshop and Conference Proceedings, pp. 2285–2294.

5. LeCun, Y.; Denker, J.S.; Solla, S.A. Optimal Brain Damage. In Advances in Neural Information Processing Systems 2 (NIPS Conference, Denver, Colorado, USA, 27–30 November 1989); Touretzky, D.S., Ed.; Morgan Kaufmann, 1989; pp. 598–605.

6. Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning both Weights and Connections for Efficient Neural Network. In Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, 7–12 December 2015, Montreal, Quebec, Canada; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; 2015; pp. 1135–1143.

7. Lee, N.; Ajanthan, T.; Torr, P.H.S. SNIP: Single-Shot Network Pruning based on Connection Sensitivity. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019; OpenReview.net, 2019.

8. Frankle, J.; Carbin, M. The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019; OpenReview.net, 2019.

9. Mocanu, D.C.; Mocanu, E.; Stone, P.; Nguyen, P.H.; Gibescu, M.; Liotta, A. Scalable training of artificial neural networks with adaptive sparse connectivity inspired by network science. Nat. Commun. 2018, 9, 1–12.

10. Evci, U.; Gale, T.; Menick, J.; Castro, P.S.; Elsen, E. Rigging the Lottery: Making All Tickets Winners. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13–18 July 2020, Virtual Event; PMLR, 2020; Vol. 119, Proceedings of Machine Learning Research, pp. 2943–2952.

11. Liu, S.; Yin, L.; Mocanu, D.C.; Pechenizkiy, M. Do We Actually Need Dense Over-Parameterization? In-Time Over-Parameterization in Sparse Training. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18–24 July 2021, Virtual Event; Meila, M., Zhang, T., Eds.; PMLR, 2021; Vol. 139, Proceedings of Machine Learning Research, pp. 6989–7000.

12. Yuan, G.; Ma, X.; Niu, W.; Li, Z.; Kong, Z.; Liu, N.; Gong, Y.; Zhan, Z.; He, C.; Jin, Q.; et al. MEST: Accurate and fast memory-economic sparse training framework on the edge. Adv. Neural Inf. Process. Syst. 2021, 34, 20838–20850.

13. Liu, S.; Chen, T.; Chen, X.; Atashgahi, Z.; Yin, L.; Kou, H.; Shen, L.; Pechenizkiy, M.; Wang, Z.; Mocanu, D.C. Sparse Training via Boosting Pruning Plasticity with Neuroregeneration. 2021, pp. 9908–9922.

14. Daminelli, S.; Thomas, J.M.; Durán, C.; Cannistraci, C.V. Common neighbours and the local-community-paradigm for topological link prediction in bipartite networks. New J. Phys. 2015, 17, 113037.

15. Durán, C.; Daminelli, S.; Thomas, J.M.; Haupt, V.J.; Schroeder, M.; Cannistraci, C.V. Pioneering topological methods for network-based drug–target prediction by exploiting a brain-network self-organization theory. Briefings Bioinform. 2017, 19, 1183–1202.

16. Cannistraci, C.V. Modelling Self-Organization in Complex Networks Via a Brain-Inspired Network Automata Theory Improves Link Reliability in Protein Interactomes. Sci. Rep. 2018, 8, 2045–2322.

17. Muscoloni, A.; Michieli, U.; Cannistraci, C. Adaptive Network Automata Modelling of Complex Networks. Preprints 2020.

18. Yuan, G.; Li, Y.; Li, S.; Kong, Z.; Tulyakov, S.; Tang, X.; Wang, Y.; Ren, J. Layer Freezing & Data Sieving: Missing Pieces of a Generic Framework for Sparse Training. arXiv preprint arXiv:2209.11204, 2022.

19. Hebb, D. The Organization of Behavior; New York, 1949.

20. Cannistraci, C.V.; Alanis-Lobato, G.; Ravasi, T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Sci. Rep. 2013, 3, 1613.

21. Narula, V.; et al. Can local-community-paradigm and epitopological learning enhance our understanding of how local brain connectivity is able to process, learn and memorize chronic pain? Appl. Netw. Sci. 2017, 2.

22. Li, M.; Liu, R.R.; Lü, L.; Hu, M.B.; Xu, S.; Zhang, Y.C. Percolation on complex networks: Theory and application. Phys. Rep. 2021, 907, 1–68.

23. Cannistraci, C.V.; Muscoloni, A. Geometrical congruence, greedy navigability and myopic transfer in complex networks and brain connectomes. Nat. Commun. 2022, 13, 7308.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, 2015; pp. 1026–1034.

25. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, AISTATS 2010, Chia Laguna Resort, Sardinia, Italy, 13–15 May 2010; Teh, Y.W., Titterington, D.M., Eds.; JMLR.org, 2010; Vol. 9, JMLR Proceedings, pp. 249–256.

26. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

27. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

28. Cohen, G.; Afshar, S.; Tapson, J.; Van Schaik, A. EMNIST: Extending MNIST to handwritten letters. In 2017 International Joint Conference on Neural Networks (IJCNN); IEEE, 2017; pp. 2921–2926.

29. Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. 2009; pp. 32–33.

30. Elliott, D.; Frank, S.; Sima’an, K.; Specia, L. Multi30K: Multilingual English-German Image Descriptions. In Proceedings of the 5th Workshop on Vision and Language; Association for Computational Linguistics, 2016; pp. 74.

31. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

32. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

33. Muscoloni, A.; Thomas, J.M.; Ciucci, S.; Bianconi, G.; Cannistraci, C.V. Machine learning meets complex networks via coalescent embedding in the hyperbolic space. Nat. Commun. 2017, 8, 1615.

34. Muscoloni, A.; Cannistraci, C.V. Angular separability of data clusters or network communities in geometrical space and its relevance to hyperbolic embedding. arXiv preprint arXiv:1907.00025, 2019.

35. Muscoloni, A.; Cannistraci, C.V. A nonuniform popularity-similarity optimization (nPSO) model to efficiently generate realistic complex networks with communities. New J. Phys. 2018, 20, 052002.

36. Durán, C.; Muscoloni, A.; Cannistraci, C.V. Geometrical inspired pre-weighting enhances Markov clustering community detection in complex networks. Appl. Netw. Sci. 2021, 6, 1–16.

37. Cacciola, A.; Muscoloni, A.; Narula, V.; Calamuneri, A.; Nigro, S.; Mayer, E.A.; Labus, J.S.; Anastasi, G.; Quattrone, A.; Quartarone, A.; et al. Coalescent embedding in the hyperbolic space unsupervisedly discloses the hidden geometry of the brain. arXiv preprint arXiv:1705.04192, 2017.

38. Xu, M.; Pan, Q.; Muscoloni, A.; Xia, H.; Cannistraci, C.V. Modular gateway-ness connectivity and structural core organization in maritime network science. Nat. Commun. 2020, 11, 2849.

39. Lü, L.; Pan, L.; Zhou, T.; Zhang, Y.C.; Stanley, H.E. Toward link predictability of complex networks. Proc. Natl. Acad. Sci. 2015, 112, 2325–2330.

40. Gauch Jr, H.G. Scientific Method in Practice; Cambridge University Press, 2003.

41. Einstein, A. Does the inertia of a body depend upon its energy-content? Ann. Der Phys. 1905, 18, 639–641.

42. de Maupertuis, P.L.M. Mémoires de l’Académie Royale. 1744, p. 423.

43. Hoffmann, R.; Minkin, V.I.; Carpenter, B.K. Ockham’s razor and chemistry. Bull. De La Société Chim. De Fr. 1996, 2, 117–130.

44. de Broglie, L. Annales de Physique. pp. 22–128.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).