Submitted: 24 May 2024

Posted: 27 May 2024

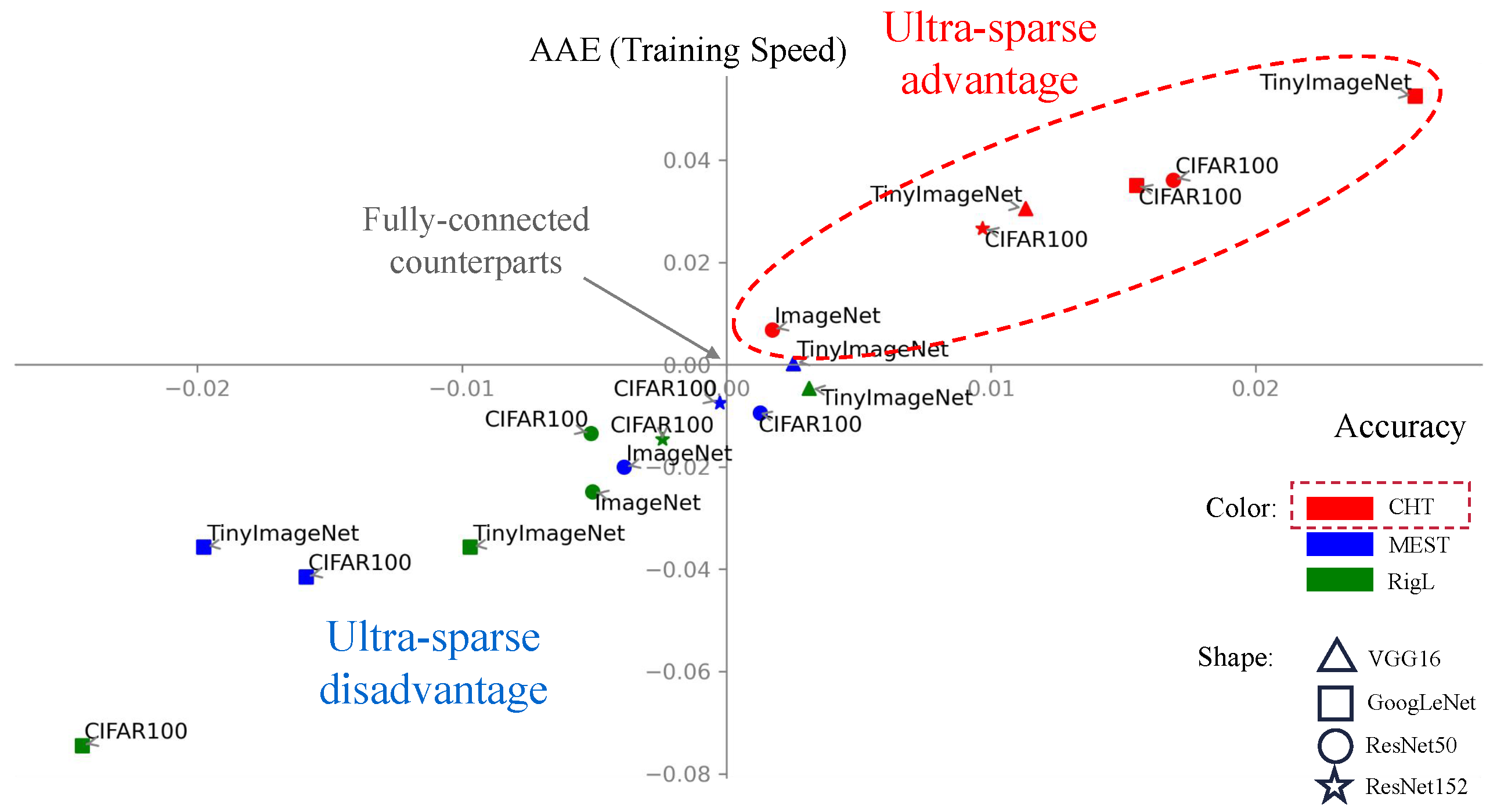

Abstract

Keywords:

1. Introduction

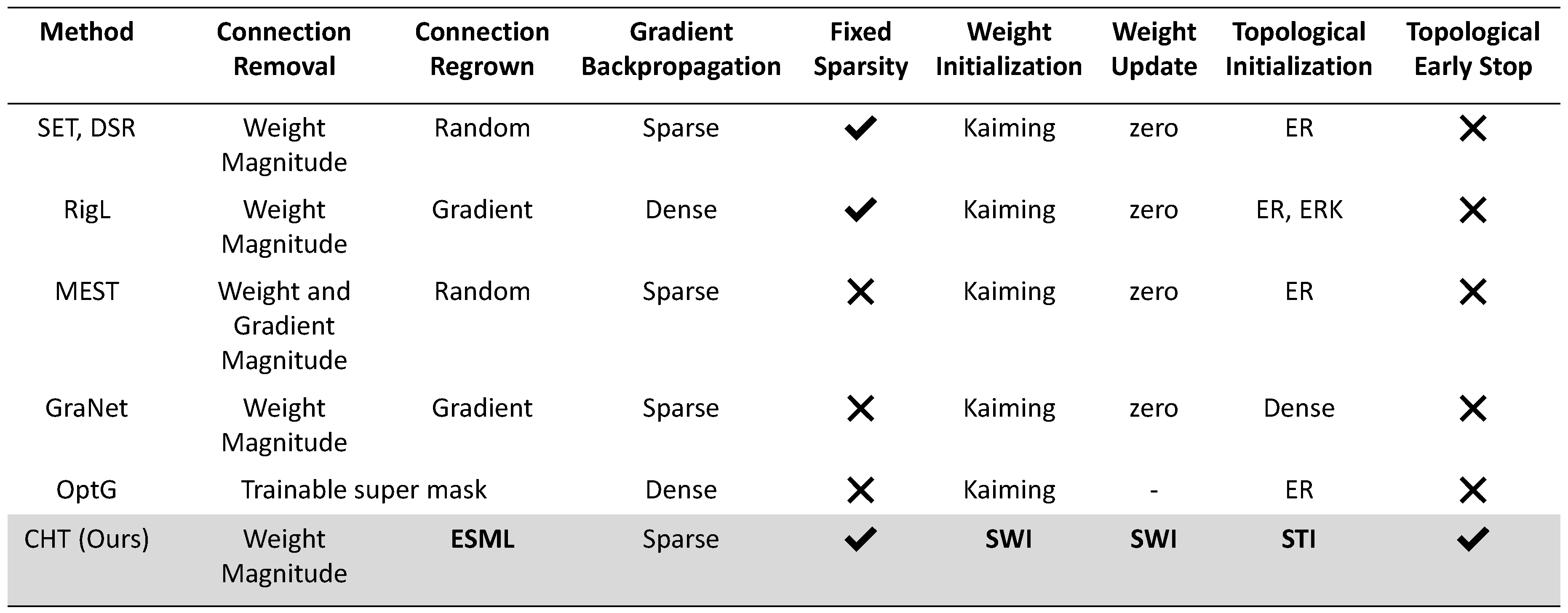

2. Related Work

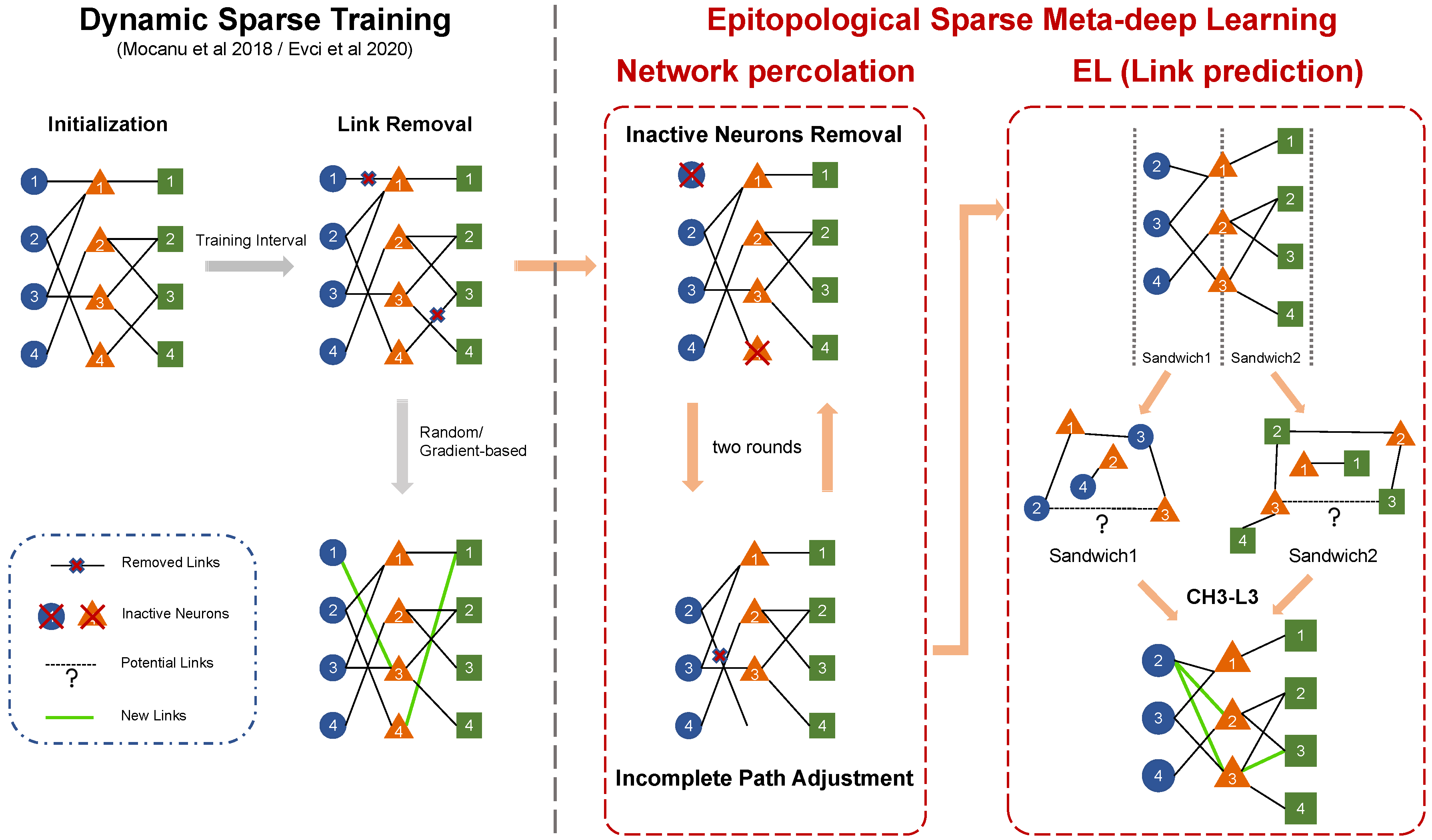

2.1. Dynamic Sparse Training

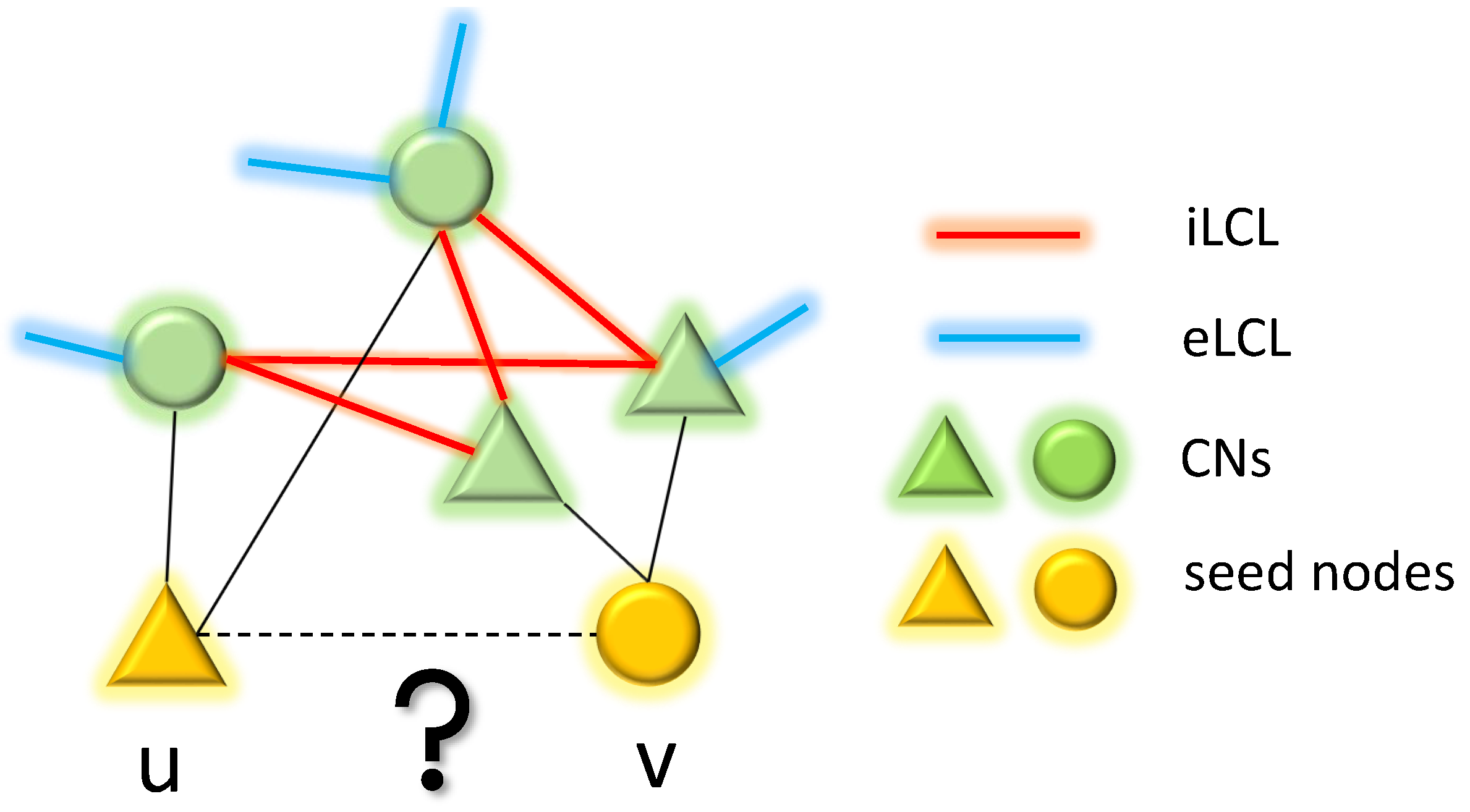

2.2. Epitopological Learning and Cannistraci-Hebb Network Automata Theory for Link Prediction

3. Epitopological Sparse Meta-deep Learning and Cannistraci-Hebb Training

3.1. Epitopological Sparse Meta-deep Learning (ESML)

3.2. Cannistraci-Hebb Training

4. Results

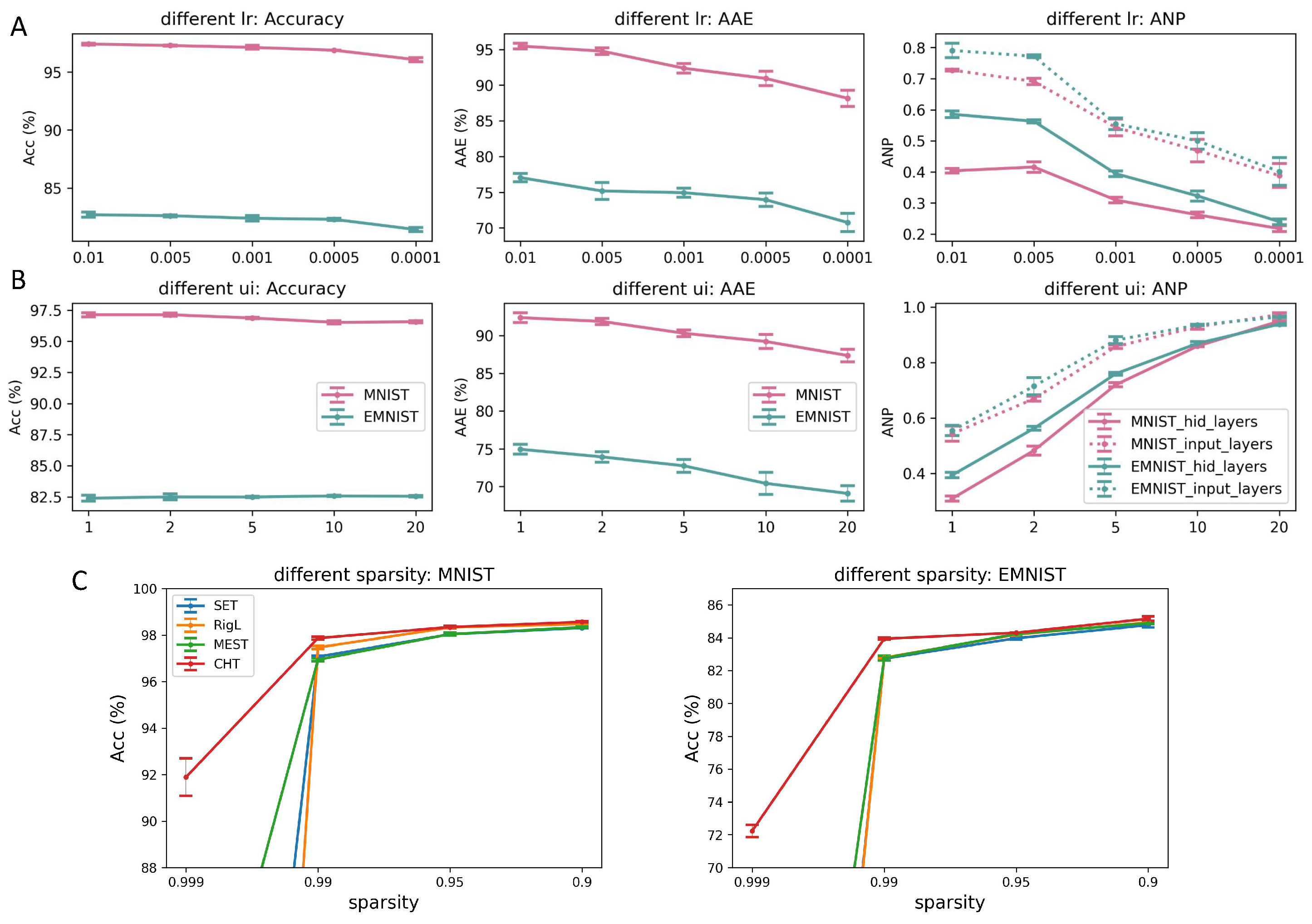

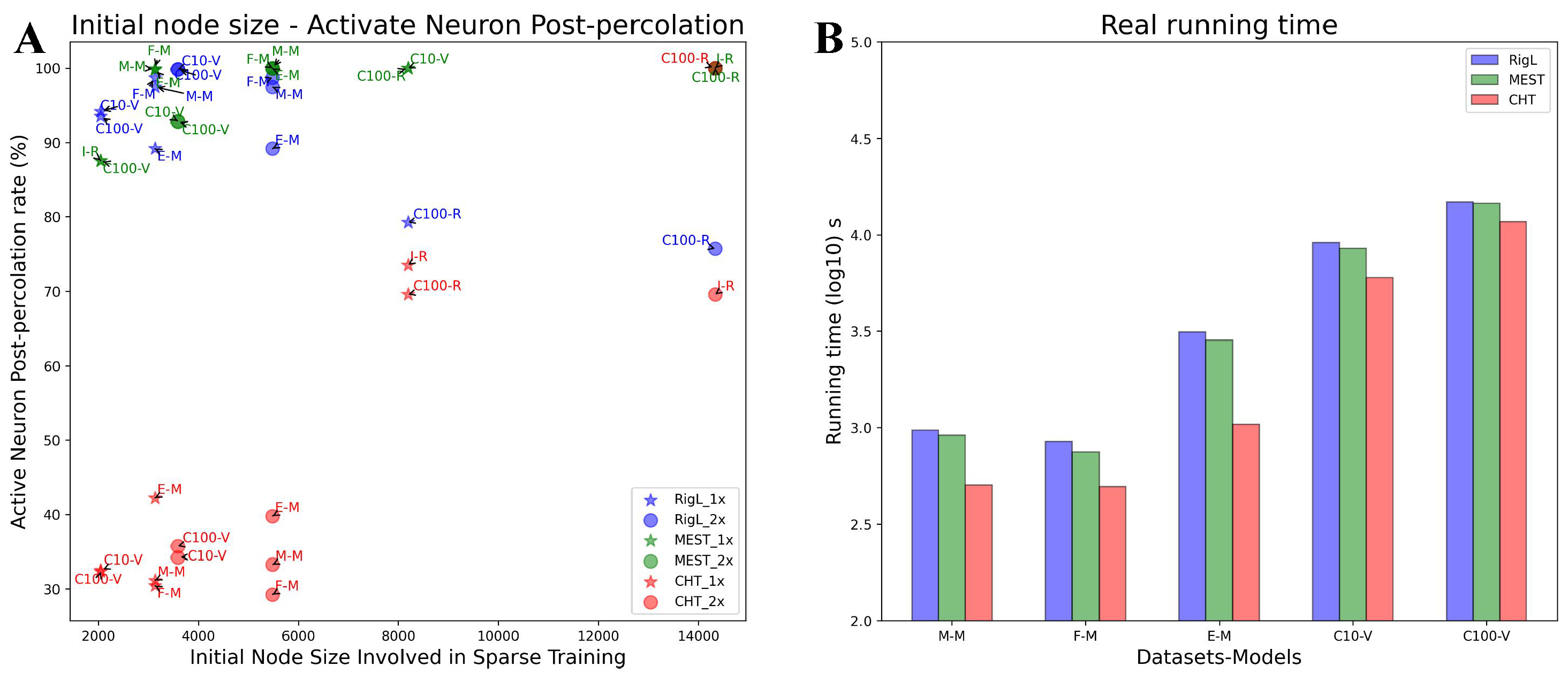

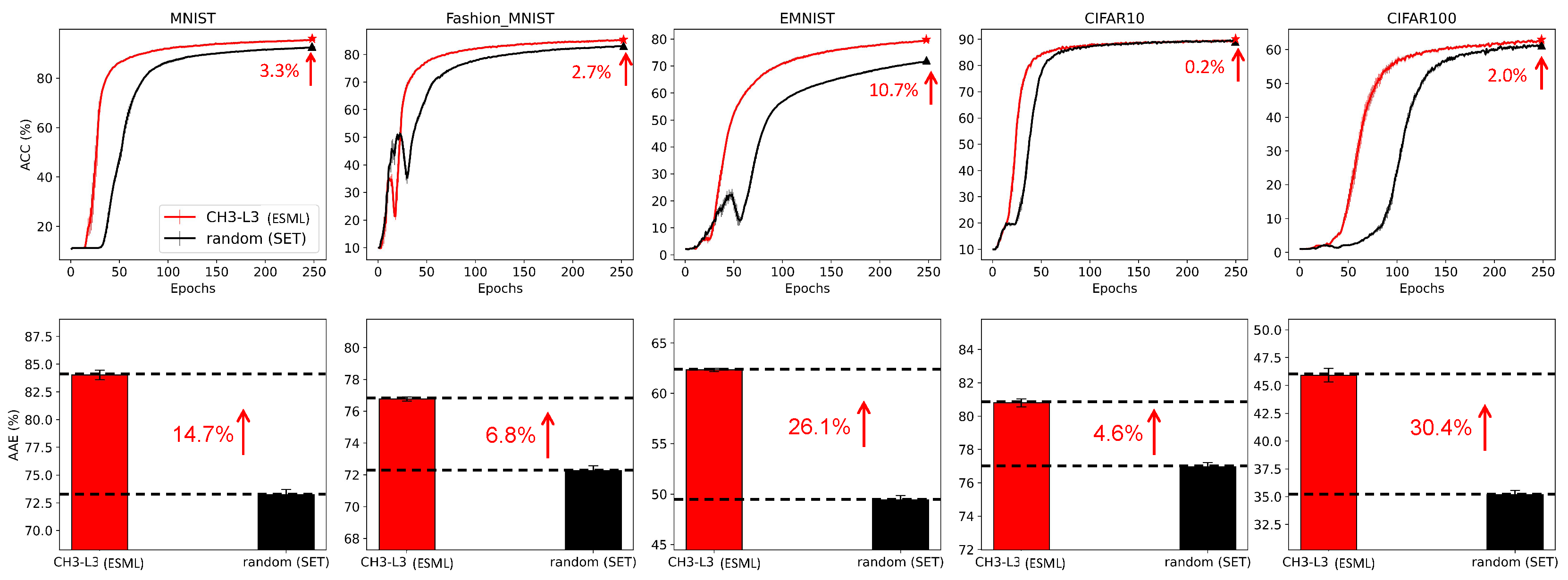

4.1. Results for ESML and Network Analysis of Epitopological-based Link Regrown

4.1.1. ESML Prediction Performance

4.1.2. Network Analysis

4.2. Results of CHT

5. Conclusion

Acknowledgments

Appendix A. Mathematical Formula and Explanation of CH3-L3

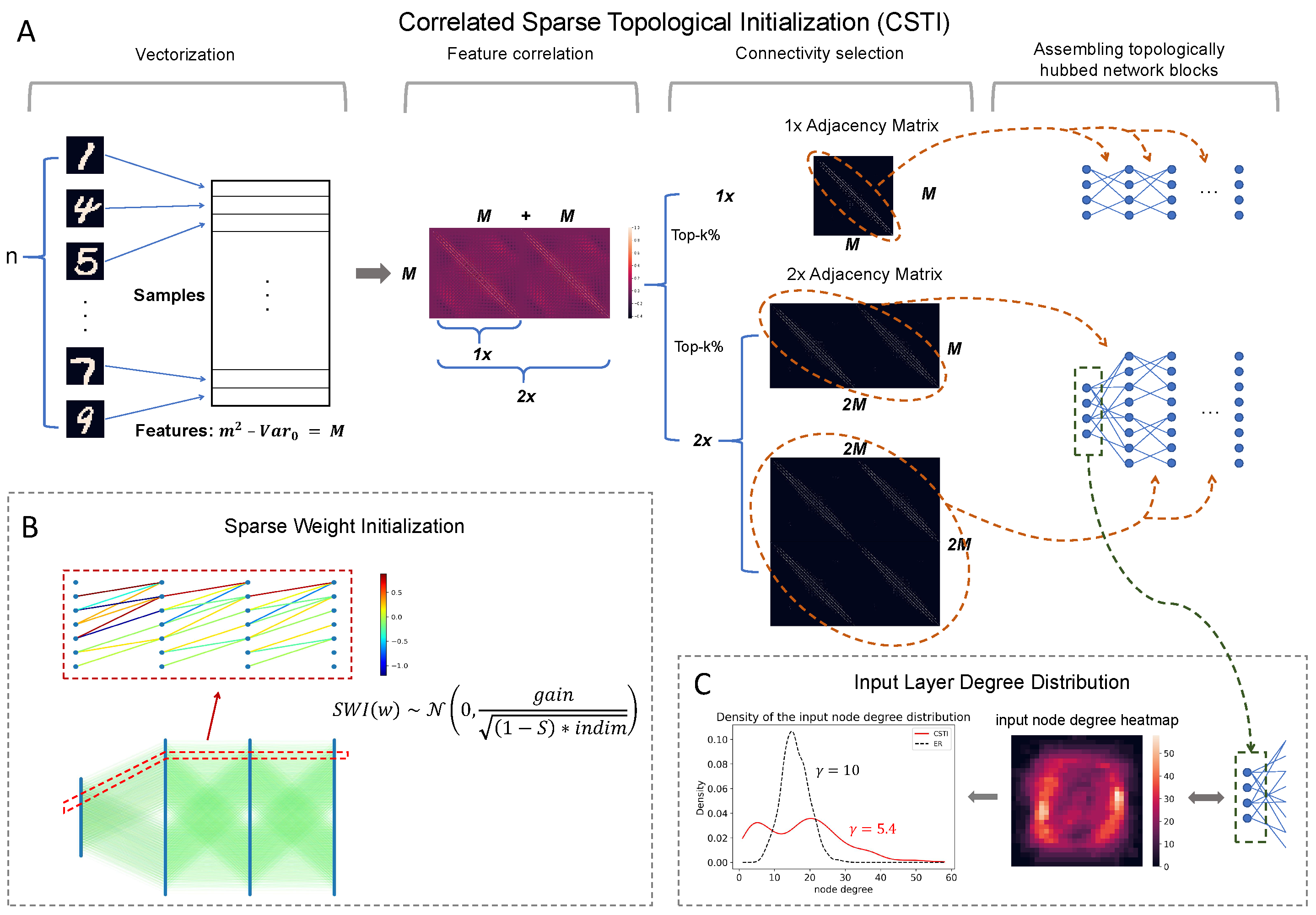

Appendix B. Correlated Sparse Topological Initialization (CSTI)

Appendix C. Glossary of Network Science

Appendix D. Dynamic Sparse Training

Appendix E. Sparse Weight Initialization

Appendix F. Limitations and Future Challenges

Appendix G. Link Removal and Network Percolation in ESML

Appendix H. Experimental Setup

Appendix I. Sensitivity Test of ESML and CHT

| | ResNet152-CIFAR100 | |
|---|---|---|
| | S:50K C:100 | |
| | ACC | AAE |
| Original | 79.76±0.19 | 68.26±0.11 |
| FC1× | 79.28±0.16 | 67.33±0.21 |
| RigL1× | 79.81±0.09* | 68.07±0.09 |
| MEST1× | 79.97±0.11* | 68.42±0.05* |
| CHT1×(WS, ) | 80.01±0.02* | 68.91±0.09* |
| FC2× | 79.22±0.12 | 67.69±0.14 |
| RigL2× | 79.59±0.03 | 67.95±0.1 |
| MEST2× | 79.86±0.08* | 68.28±0.07* |
| CHT2×(WS, ) | 80.15±0.15* | 68.9±0.13* |

Appendix J. CHT Results on the MLP Tasks

| | MNIST | | Fashion_MNIST | | EMNIST | |
|---|---|---|---|---|---|---|
| | S:60K C:10 | | S:60K C:10 | | S:131K C:47 | |
| | ACC | AAE | ACC | AAE | ACC | AAE |
| FC1× | 98.69±0.02 | 97.01±0.12 | 90.43±0.09 | 88.54±0.02 | 85.58±0.06 | 83.16±0.09 |
| RigL1× | 97.40±0.07 | 94.71±0.07 | 88.02±0.10 | 86.13±0.12 | 82.96±0.04 | 79.88±0.06 |
| MEST1× | 97.31±0.05 | 94.41±0.05 | 88.13±0.10 | 86.02±0.07 | 83.05±0.04 | 80.02±0.07 |
| CHT1×(CSTI) | 98.05±0.04 | 96.9±0.06 | 88.07±0.11 | 86.12±0.05 | 83.82±0.04 | 81.05±0.17 |
| CHT1×(BA) | 96.36±0.04 | 95.79±0.03 | 87.11±0.06 | 86.28±0.11 | 80.5±0.11 | 79.14±0.07 |
| CHT1×(WS, ) | 96.08±0.05 | 95.56±0.06 | 88.27±0.14 | 87.29±0.11 | 78.8±0.16 | 77.74±0.07 |
| CHT1×(WS, ) | 97.18±0.03 | 96.59±0.05 | 88.5±0.02 | 87.38±0.06 | 81.12±0.04 | 80.09±0.04 |
| CHT1×(WS, ) | 97.25±0.02 | 96.6±0.03 | 88.19±0.04 | 87.14±0.06 | 81.33±0.06 | 80.15±0.05 |
| CHT1×(WS, ) | 97.06±0.05 | 96.49±0.05 | 87.84±0.13 | 86.95±0.06 | 80.97±0.07 | 79.77±0.05 |
| CHT1×(WS, ) | 96.92±0.02 | 96.37±0.03 | 87.72±0.12 | 86.83±0.12 | 81.03±0.13 | 79.71±0.15 |
| BA1× static | 96.19±0.09 | 94.3±0.16 | 87.04±0.07 | 85.01±0.12 | 80.93±0.08 | 75.75±0.01 |
| BA1× dynamic | 97.11±0.08 | 95.47±0.09 | 87.98±0.04 | 85.78±0.09 | 82.6±0.08 | 78.14±0.02 |
| WS1× static() | 95.41±0.01 | 92.01±0.10 | 86.74±0.03 | 81.71±0.11 | 77.91±0.07 | 71.06±0.21 |
| WS1× static() | 96.4±0.03 | 92.6±0.10 | 87.27±0.12 | 83±0.06 | 81.3±0.07 | 73.18±0.13 |
| WS1× static() | 96.33±0.04 | 92.13±0.09 | 87.18±0.07 | 82.49±0.01 | 81.37±0.02 | 72.45±0.03 |
| WS1× static() | 96.07±0.08 | 91.55±0.07 | 86.78±0.10 | 82.4±0.06 | 81.39±0.05 | 72.00±0.15 |
| WS1× static() | 96.18±0.04 | 91.43±0.08 | 86.59±0.02 | 82.53±0.07 | 81.1±0.11 | 71.75±0.12 |
| WS1× dynamic() | 97.04±0.04 | 94.15±0.14 | 88.09±0.05 | 84.3±0.10 | 82.79±0.09 | 75.89±0.11 |
| WS1× dynamic() | 96.95±0.05 | 93.87±0.06 | 87.85±0.14 | 84.4±0.04 | 82.42±0.08 | 75.1±0.15 |
| WS1× dynamic() | 96.88±0.05 | 93.02±0.08 | 87.78±0.09 | 83.87±0.04 | 82.45±0.03 | 74.28±0.12 |
| WS1× dynamic() | 96.94±0.06 | 92.74±0.12 | 87.57±0.07 | 83.72±0.12 | 82.55±0.06 | 74.11±0.03 |
| WS1× dynamic() | 96.81±0.04 | 92.56±0.07 | 87.46±0.17 | 83.91±0.08 | 82.52±0.07 | 73.92±0.08 |
| FC2× | 98.73±0.02 | 97.14±0.03 | 90.74±0.13 | 88.37±0.05 | 85.85±0.05 | 84.17±0.11 |
| RigL2× | 97.91±0.09 | 95.17±0.03 | 88.66±0.07 | 86.14±0.07 | 83.44±0.09 | 81.41±0.25 |
| MEST2× | 97.66±0.03 | 95.63±0.09 | 88.33±0.10 | 85.01±0.07 | 83.50±0.09 | 80.77±0.03 |
| CHT2×(CSTI) | 98.34±0.08 | 97.51±0.07 | 88.66±0.07 | 87.63±0.19 | 85.43±0.10 | 83.91±0.11 |
| CHT2×(BA) | 97.08±0.05 | 96.67±0.03 | 87.48±0.05 | 86.8±0.05 | 80.38±0.04 | 79.46±0.03 |
| CHT2×(WS, ) | 96.31±0.06 | 95.89±0.01 | 87.48±0.16 | 87.83±0.07 | 79.15±0.03 | 78.28±0.03 |
| CHT2×(WS, ) | 97.64±0.02 | 97.29±0.06 | 88.57±0.08 | 88.21±0.13 | 81.91±0.01 | 81.02±0.01 |
| CHT2×(WS, ) | 97.75±0.04 | 97.36±0.04 | 88.98±0.07 | 87.73±0.08 | 81.77±0.09 | 80.77±0.09 |
| CHT2×(WS, ) | 97.47±0.04 | 97.01±0.05 | 88.61±0.09 | 87.42±0.04 | 81.13±0.07 | 80.37±0.10 |
| CHT2×(WS, ) | 97.39±0.05 | 97.03±0.03 | 88.17±0.11 | 87.4±0.06 | 81±0.18 | 80.22±0.03 |
| BA2× static | 96.79±0.05 | 95.67±0.04 | 87.69±0.03 | 86.19±0.05 | 82.02±0.20 | 79.18±0.25 |
| BA2× dynamic | 97.49±0.02 | 96.67±0.06 | 88.3±0.13 | 86.7±0.14 | 83.18±0.04 | 80.5±0.26 |
| WS2× static() | 96.03±0.10 | 93.41±0.14 | 87.57±0.01 | 83.72±0.28 | 78.72±0.15 | 73.09±0.20 |
| WS2× static() | 96.95±0.10 | 93.98±0.06 | 88.26±0.12 | 84.15±0.22 | 82.42±0.09 | 76.03±0.08 |
| WS2× static() | 97.27±0.07 | 93.48±0.09 | 88.26±0.08 | 83.87±0.06 | 82.66±0.08 | 75.38±0.08 |
| WS2× static() | 96.77±0.07 | 92.85±0.15 | 88.13±0.07 | 83.47±0.10 | 82.39±0.07 | 74.64±0.22 |
| WS2× static() | 96.67±0.06 | 92.52±0.09 | 87.68±0.04 | 83.46±0.07 | 82.29±0.18 | 74.41±0.16 |
| WS2× dynamic() | 97.57±0.06 | 95.46±0.19 | 88.42±0.19 | 85.39±0.25 | 83.41±0.06 | 78.6±0.20 |
| WS2× dynamic() | 97.36±0.02 | 94.72±0.06 | 88.49±0.17 | 85.35±0.14 | 83.41±0.05 | 77.46±0.08 |
| WS2× dynamic() | 97.15±0.03 | 93.82±0.11 | 88.49±0.08 | 84.71±0.05 | 83.09±0.01 | 76.26±0.08 |
| WS2× dynamic() | 97.17±0.06 | 93.77±0.19 | 88.14±0.06 | 84.56±0.09 | 82.94±0.04 | 75.94±0.17 |
| WS2× dynamic() | 97.14±0.01 | 93.54±0.05 | 88.11±0.07 | 84.46±0.11 | 83.14±0.07 | 75.55±0.06 |

Appendix K. Ablation Test of Each Component in CHT

| | VGG16-TinyImageNet | | GoogLeNet-CIFAR100 | | GoogLeNet-TinyImageNet | | ResNet50-CIFAR100 | | ResNet50-ImageNet | | ResNet152-CIFAR100 | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | S:100K C:200 | | S:50K C:100 | | S:100K C:200 | | S:50K C:100 | | S:1.2M C:1000 | | S:50K C:100 | |
| | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE |
| FC1× | 51.34±0.12 | 43.55±0.04 | 76.64±0.1 | 62.9±0.07 | 52.63±0.08 | 45.14±0.02 | 78.13±0.13 | 65.39±0.08 | 75.04±0.05 | 63.27±0.03 | 78.3±0.03 | 65.53±0.09 |
| RigL1× | 51.32±0.25 | 43.0±0.12 | 74.12±0.34 | 56.96±0.68 | 51.23±0.12 | 42.59±0.2 | 77.34±0.04 | 64.07±0.07 | 74.5±0.02 | 60.8±0.13 | 78.08±0.08 | 64.54±0.05 |
| MEST1× | 51.47±0.1 | 43.56±0.04 | 75.22±0.57 | 58.85±1.08 | 51.58±0.15 | 43.28±0.15 | 78.23±0.05 | 64.77±0.02 | 74.65±0.1 | 61.83±0.17 | 78.47±0.14 | 65.27±0.05 |
| CHT1×(BA) | 51.17±0.05 | 43.35±0.01 | 77.89±0.05 | 65.32±0.08* | 52.63±0.24 | 45.23±0.44 | 79.33±0.12 | 67.55±0.11 | 74.74±0.12 | 62.44±0.26 | 79.03±0.13 | 67.05±0.06 |
| CHT1×(WS, ) | 51.32±0.09 | 43.44±0.02 | 77.67±0.09 | 65.08±0.10 | 52.25±0.53 | 44.97±0.89 | 79.45±0.03 | 68.11±0.06 | 75.08±0.05 | 63.70±0.01* | 79.61±0.05 | 68.15±0.15 |
| CHT1×(WS, ) | 51.26±0.04 | 43.57±0.04 | 77.78±0.11 | 65.04±0.07 | 51.52±0.48 | 43.22±0.89 | 79.76±0.09* | 68.14±0.06* | 75.12±0.2 | 63.61±0.02 | 79.21±0.06 | 67.94±0.08 |
| CHT1×(WS, ) | 51.32±0.05 | 43.43±0.04 | 77.95±0.03 | 65.18±0.06 | 52.46±0.44 | 44.92±0.89 | 79.14±0.04 | 67.67±0.03 | 75.08±0.10 | 63.43±0.08 | 79.74±0.02* | 68.24±0.12* |
| CHT1×(WS, ) | 51.75±0.05 | 44.94±0.01* | 77.89±0.06 | 65.05±0.05 | 53.41±0.10* | 46.88±0.06* | 79.29±0.04 | 67.50±0.04 | 75.01±0.02 | 63.48±0.07 | 79.35±0.07 | 67.55±0.03 |
| CHT1×(WS, ) | 51.92±0.08* | 44.88±0.03 | 77.52±0.10 | 64.70±0.04 | 53.28±0.03 | 46.82±0.01 | 79.45±0.02 | 67.75±0.02 | 75.17±0.02* | 63.70±0.07 | 79.2±0.16 | 67.51±0.1 |
| BA1× static | 51.10±0.02 | 43.36±0.03 | 76.31±0.07 | 61.46±0.11 | 51.43±0.09 | 43.02±0.08 | 78.40±0.08 | 64.61±0.10 | 74.75±0.05 | 62.07±0.04 | 78.32±0.14 | 65.07±0.11 |
| WS1× static () | 51.27±0.04 | 43.56±0.08 | 75.62±0.14 | 59.99±0.30 | 49.77±0.10 | 40.65±0.06 | 78.38±0.10 | 64.41±0.12 | 73.96±0.07 | 60.46±0.05 | 78.51±0.00 | 64.77±0.15 |
| WS1× static () | 51.45±0.04 | 43.74±0.02 | 75.78±0.13 | 60.15±0.19 | 50.33±0.09 | 41.31±0.02 | 77.94±0.11 | 64.40±0.09 | 74.78±0.02 | 61.52±0.08 | 78.88±0.15 | 64.74±0.05 |
| WS1× static () | 51.42±0.03 | 43.48±0.05 | 75.58±0.15 | 60.11±0.08 | 50.00±0.05 | 40.91±0.13 | 78.45±0.03 | 64.34±0.06 | 74.33±0.04 | 61.11±0.02 | 78.62±0.05 | 64.74±0.20 |
| WS1× static () | 51.26±0.14 | 43.27±0.04 | 74.48±0.00 | 57.23±0.00 | 49.83±0.08 | 40.62±0.13 | 78.06±0.11 | 63.54±0.20 | 74.26±0.08 | 61.10±0.09 | 78.23±0.05 | 64.86±0.05 |
| WS1× static () | 51.19±0.07 | 43.14±0.06 | 75.05±0.12 | 57.62±0.18 | 49.53±0.10 | 40.63±0.03 | 77.70±0.15 | 63.60±0.10 | 74.26±0.03 | 60.84±0.02 | 78.25±0.01 | 64.69±0.14 |
| BA1× dynamic | 51.21±0.05 | 43.25±0.03 | 76.41±0.15 | 61.49±0.12 | 51.73±0.11 | 42.97±0.05 | 78.25±0.05 | 64.72±0.07 | 74.42±0.04 | 61.25±0.05 | 78.86±0.10 | 65.22±0.15 |
| WS1× dynamic () | 51.26±0.08 | 43.43±0.05 | 74.88±0.28 | 58.30±0.50 | 50.19±0.12 | 40.93±0.18 | 78.28±0.07 | 64.64±0.12 | 74.51±0.06 | 61.56±0.03 | 78.42±0.03 | 65.31±0.12 |
| WS1× dynamic () | 51.30±0.04 | 43.37±0.03 | 74.52±0.16 | 57.38±0.36 | 50.58±0.06 | 41.54±0.04 | 78.11±0.06 | 64.59±0.08 | 74.68±0.05 | 61.52±0.04 | 78.17±0.10 | 64.66±0.10 |
| WS1× dynamic () | 51.20±0.03 | 43.09±0.03 | 74.30±0.25 | 57.04±0.59 | 50.64±0.06 | 41.23±0.06 | 78.20±0.13 | 64.43±0.08 | 74.56±0.08 | 61.71±0.02 | 78.53±0.05 | 64.62±0.06 |
| WS1× dynamic () | 51.15±0.07 | 43.42±0.03 | 74.82±1.26 | 57.06±2.72 | 51.19±0.08 | 42.50±0.08 | 77.62±0.15 | 63.25±0.17 | 74.51±0.04 | 61.49±0.01 | 78.44±0.08 | 64.28±0.11 |
| WS1× dynamic () | 51.10±0.11 | 43.37±0.04 | 74.67±0.25 | 57.01±0.36 | 51.09±0.09 | 42.52±0.12 | 77.93±0.16 | 63.88±0.12 | 74.39±0.03 | 61.43±0.05 | 78.51±0.15 | 64.19±0.17 |
| FC2× | 50.82±0.05 | 43.24±0.03 | 76.76±0.21 | 63.11±0.09 | 51.46±0.13 | 44.43±0.1 | 77.59±0.03 | 65.29±0.03 | 74.91±0.02 | 63.52±0.04 | 78.49±0.07 | 65.76±0.02 |
| RigL2× | 51.5±0.11 | 43.35±0.05 | 74.89±0.44 | 58.41±0.44 | 52.12±0.09 | 43.53±0.01 | 77.73±0.10 | 64.51±0.10 | 74.66±0.07 | 61.7±0.06 | 78.3±0.14 | 64.8±0.03 |
| MEST2× | 51.36±0.08 | 43.67±0.05 | 75.54±0.01 | 60.49±0.02 | 51.59±0.07 | 43.25±0.03 | 78.08±0.07 | 64.67±0.11 | 74.76±0.01 | 62.0±0.03 | 78.45±0.13 | 64.98±0.16 |
| CHT2×(BA) | 50.56±0.04 | 41.48±0.14 | 77.84±0.04 | 65.28±0.01 | 51.50±0.01 | 44.64±0.12 | 78.54±0.12 | 66.88±0.10 | 74.39±0.04 | 61.65±0.09 | 79.47±0.06 | 68.17±0.05 |
| CHT2×(WS, ) | 51.93±0.01* | 43.92±0.01 | 77.50±0.06 | 65.15±0.05 | 52.63±0.56 | 45.37±0.93 | 79.10±0.08 | 67.81±0.05 | 74.86±0.03 | 63.17±0.03 | 79.52±0.10 | 68.10±0.08* |
| CHT2×(WS, ) | 51.80±0.01 | 44.00±0.13 | 77.67±0.05 | 64.86±0.03 | 53.91±0.11 | 46.33±0.44 | 79.88±0.02* | 68.28±0.03* | 75.12±0.05* | 63.55±0.09* | 79.55±0.07* | 67.97±0.05 |
| CHT2×(WS, ) | 51.64±0.05 | 44.14±0.10 | 77.59±0.03 | 65.09±0.03 | 53.24±0.50 | 45.60±0.93 | 79.07±0.02 | 67.61±0.03 | 75.04±0.03 | 63.19±0.05 | 79.44±0.05 | 67.75±0.06 |
| CHT2×(WS, ) | 51.74±0.09 | 44.07±0.07 | 77.53±0.04 | 64.38±0.07 | 53.85±0.10 | 47.32±0.08 | 79.10±0.03 | 67.22±0.02 | 74.83±0.02 | 62.86±0.04 | 79.39±0.04 | 67.67±0.05 |
| CHT2×(WS, ) | 51.78±0.06 | 44.19±0.12* | 77.95±0.08* | 65.32±0.07* | 54.00±0.05* | 47.51±0.08* | 79.16±0.21 | 67.36±0.14 | 75.1±0.09 | 63.43±0.18 | 79.25±0.1 | 67.37±0.03 |
| BA2× static | 51.31±0.09 | 43.52±0.00 | 76.11±0.04 | 61.33±0.10 | 51.96±0.04 | 43.58±0.03 | 78.32±0.12 | 65.07±0.09 | 74.58±0.03 | 62.08±0.01 | 78.32±0.12 | 65.07±0.09 |
| WS2× static () | 51.34±0.08 | 43.92±0.03 | 76.05±0.05 | 60.89±0.03 | 50.32±0.13 | 41.47±0.10 | 78.17±0.16 | 64.57±0.17 | 74.65±0.03 | 61.67±0.08 | 78.39±0.02 | 65.11±0.08 |
| WS2× static () | 51.32±0.05 | 43.97±0.05 | 76.01±0.05 | 60.27±0.03 | 50.95±0.12 | 42.06±0.10 | 78.02±0.08 | 64.76±0.11 | 74.62±0.03 | 61.7±0.02 | 78.65±0.07 | 65.20±0.04 |
| WS2× static () | 51.34±0.08 | 43.69±0.03 | 75.86±0.14 | 60.26±0.15 | 50.94±0.12 | 41.92±0.10 | 77.91±0.02 | 64.57±0.06 | 74.55±0.03 | 61.57±0.03 | 78.58±0.06 | 65.02±0.05 |
| WS2× static () | 51.47±0.07 | 43.62±0.07 | 75.26±0.18 | 59.05±0.09 | 50.62±0.07 | 41.76±0.05 | 77.81±0.05 | 64.18±0.05 | 74.58±0.02 | 61.62±0.05 | 78.57±0.04 | 64.98±0.04 |
| WS2× static () | 51.31±0.05 | 43.52±0.06 | 75.28±0.12 | 58.71±0.00 | 50.57±0.16 | 41.65±0.23 | 78.07±0.09 | 64.21±0.17 | 74.49±0.02 | 61.59±0.08 | 78.56±0.03 | 64.92±0.03 |
| BA2× dynamic | 51.42±0.04 | 43.36±0.04 | 76.71±0.01 | 61.70±0.03 | 51.93±0.08 | 43.18±0.06 | 78.21±0.20 | 65.02±0.12 | 74.62±0.11 | 62.02±0.04 | 78.71±0.22 | 65.02±0.15 |
| WS2× dynamic () | 51.63±0.01 | 43.75±0.04 | 75.43±0.10 | 59.29±0.04 | 51.19±0.10 | 42.11±0.10 | 78.43±0.02 | 64.99±0.10 | 74.75±0.07 | 61.82±0.03 | 78.91±0.04 | 65.45±0.12 |
| WS2× dynamic () | 51.34±0.06 | 43.57±0.05 | 74.98±0.13 | 59.28±0.17 | 51.24±0.07 | 41.94±0.09 | 77.95±0.08 | 64.66±0.08 | 74.52±0.04 | 62.48±0.09 | 78.74±0.10 | 65.62±0.10 |
| WS2× dynamic () | 51.47±0.04 | 43.48±0.01 | 75.33±0.10 | 58.58±0.22 | 50.86±0.15 | 41.80±0.16 | 78.03±0.03 | 64.62±0.04 | 74.49±0.03 | 61.37±0.13 | 78.62±0.05 | 65.22±0.06 |
| WS2× dynamic () | 51.22±0.05 | 43.55±0.02 | 74.43±0.25 | 57.30±0.80 | 51.26±0.06 | 42.66±0.08 | 78.19±0.06 | 64.28±0.09 | 74.51±0.03 | 61.47±0.04 | 78.45±0.08 | 64.98±0.11 |
| WS2× dynamic () | 51.24±0.05 | 43.48±0.03 | 74.60±0.06 | 58.16±0.11 | 50.98±0.03 | 42.40±0.13 | 78.04±0.13 | 64.19±0.14 | 74.55±0.03 | 61.64±0.02 | 78.42±0.15 | 64.69±0.17 |

Appendix L. Area Across the Epochs

Appendix M. Selecting Network Initialization Methods: A Network Science Perspective

Appendix N. Subranking Strategy of CH3-L3

- Assign a weight to each link in the network.

- Compute the shortest paths (SP) between all pairs of nodes in the weighted network.

- For each node pair (i, j), compute the Spearman’s rank correlation between the vectors of all shortest paths from node i and from node j.

- Generate a final ranking in which node pairs are first ranked by their CH score, and ties are sub-ranked by this Spearman’s rank correlation. If scores are still tied, the tied node pairs are ranked by random selection.
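The sub-ranking steps listed above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the link-weight definition is not recoverable from this text, so the weights are taken as a caller-supplied input; the function names (`subrank`, `spearman`, `shortest_paths_from`) are hypothetical; ties are broken in favour of the higher correlation, which is an assumption; and every candidate node is assumed to have at least one existing link.

```python
import heapq
import random


def shortest_paths_from(source, adjacency):
    """Dijkstra distances from `source`; adjacency maps node -> {neighbour: weight}."""
    dist = {node: float("inf") for node in adjacency}
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in adjacency[u].items():
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in order[start:end + 1]:
            ranks[k] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumption: treat an undefined correlation as 0
    return cov / (var_x * var_y) ** 0.5


def subrank(link_weights, ch_scores, seed=0):
    """Rank candidate node pairs, best first.

    link_weights: {(u, v): weight} for existing undirected links (step 1, given).
    ch_scores:    {(i, j): CH score} for candidate node pairs.
    """
    adjacency = {}
    for (u, v), w in link_weights.items():
        adjacency.setdefault(u, {})[v] = w
        adjacency.setdefault(v, {})[u] = w
    nodes = sorted(adjacency)
    # Step 2: shortest paths between all pairs of nodes.
    sp = {n: shortest_paths_from(n, adjacency) for n in nodes}
    rng = random.Random(seed)
    keyed = []
    for (i, j), score in ch_scores.items():
        # Step 3: correlate the shortest-path vectors of node i and node j.
        corr = spearman([sp[i][n] for n in nodes], [sp[j][n] for n in nodes])
        # Step 4: CH score first, correlation second, random draw last.
        keyed.append((-score, -corr, rng.random(), (i, j)))
    keyed.sort()
    return [pair for *_, pair in keyed]
```

For example, on a four-node path graph with unit weights, two candidate pairs that share the same CH score are separated by the correlation of their shortest-path vectors, and a pair with a higher CH score still ranks first regardless of its correlation.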

Appendix O. Credit Assigned Path

Appendix P. Hyperparameter Setting and Implementation Details

Appendix P.1. Hyperparameter Setting

| Model | Dataset | Epochs | Starting Learning-rate | Milestones | Gamma | Batch-size | Warm-up | Warm-up Epoch |
|---|---|---|---|---|---|---|---|---|
| VGG16 | Tiny-ImageNet | 200 | 0.1 | 61, 121, 161 | 0.2 | 256 | No | - |
| GoogLeNet | CIFAR100 | 200 | 0.1 | 60, 120, 160 | 0.2 | 128 | Yes | 1 |
| GoogLeNet | Tiny-ImageNet | 200 | 0.1 | 61, 121, 161 | 0.2 | 256 | No | - |
| ResNet50 | CIFAR100 | 200 | 0.1 | 60, 120, 160 | 0.2 | 128 | Yes | 1 |
| ResNet152 | CIFAR100 | 200 | 0.1 | 60, 120, 160 | 0.2 | 128 | Yes | 1 |
| ResNet50 | ImageNet | 90 | 0.1 | 32, 62 | 0.1 | 256 | No | - |
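To make the Milestones and Gamma columns concrete, the following sketch computes the learning rate at a given epoch under step decay. It is an illustration under assumptions rather than the authors' training code: the helper name `step_lr` is hypothetical, epochs are taken to be 0-indexed, the rate is assumed to be multiplied by gamma once the epoch counter reaches each milestone, and warm-up is not modelled because its exact form is not given here.

```python
from bisect import bisect_right


def step_lr(epoch, base_lr, milestones, gamma):
    """Learning rate at `epoch` (0-indexed) under step decay.

    Assumption: the rate is multiplied by `gamma` once for every
    milestone that the epoch counter has reached.
    """
    return base_lr * gamma ** bisect_right(milestones, epoch)


# Two rows copied from the hyperparameter table (warm-up not modelled).
SCHEDULES = {
    ("VGG16", "Tiny-ImageNet"): dict(base_lr=0.1, milestones=[61, 121, 161], gamma=0.2),
    ("ResNet50", "ImageNet"): dict(base_lr=0.1, milestones=[32, 62], gamma=0.1),
}

if __name__ == "__main__":
    for (model, dataset), cfg in SCHEDULES.items():
        for epoch in (0, cfg["milestones"][0], cfg["milestones"][-1]):
            print(model, dataset, epoch, step_lr(epoch, **cfg))
```

Under these assumptions the VGG16 run on Tiny-ImageNet would train at 0.1 up to epoch 60, then at 0.02, 0.004 and 0.0008 after the three milestones.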

Appendix P.2. Algorithm Tables of CHT

Algorithm 1: Algorithm of CHT.


1. A commented video that shows how ESML shapes and percolates the network structure across the epochs is provided at https://www.youtube.com/watch?v=b5lLpOhb3BI

| | VGG16-TinyImageNet | | GoogLeNet-CIFAR100 | | GoogLeNet-TinyImageNet | | ResNet50-CIFAR100 | | ResNet50-ImageNet | | ResNet152-CIFAR100 | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | S:100K C:200 | | S:50K C:100 | | S:100K C:200 | | S:50K C:100 | | S:1.2M C:1000 | | S:50K C:100 | |
| | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE | ACC | AAE |
| FC1× | 51.34±0.12 | 43.55±0.04 | 76.64±0.1 | 62.9±0.07 | 52.63±0.08 | 45.14±0.02 | 78.13±0.13 | 65.39±0.08 | 75.04±0.05 | 63.27±0.03 | 78.3±0.03 | 65.53±0.09 |
| RigL1× | 51.32±0.25 | 43.0±0.12 | 74.12±0.34 | 56.96±0.68 | 51.23±0.12 | 42.59±0.2 | 77.34±0.04 | 64.07±0.07 | 74.5±0.02 | 60.8±0.13 | 78.08±0.08 | 64.54±0.05 |
| MEST1× | 51.47±0.1* | 43.56±0.04* | 75.22±0.57 | 58.85±1.08 | 51.58±0.15 | 43.28±0.15 | 78.23±0.05* | 64.77±0.02 | 74.65±0.1 | 61.83±0.17 | 78.47±0.14* | 65.27±0.05 |
| CHT1× | 51.92±0.08* | 44.88±0.03* | 77.52±0.10* | 64.70±0.04* | 53.28±0.03* | 46.82±0.01* | 79.45±0.02* | 67.75±0.02* | 75.17±0.02* | 63.70±0.07* | 79.2±0.16* | 67.51±0.1* |
| FC2× | 50.82±0.05 | 43.24±0.03 | 76.76±0.21 | 63.11±0.09 | 51.46±0.13 | 44.43±0.1 | 77.59±0.03 | 65.29±0.03 | 74.91±0.02 | 63.52±0.04 | 78.49±0.07 | 65.76±0.02 |
| RigL2× | 51.5±0.11* | 43.35±0.05* | 74.89±0.44 | 58.41±0.44 | 52.12±0.09* | 43.53±0.01 | 77.73±0.10* | 64.51±0.10 | 74.66±0.07 | 61.7±0.06 | 78.3±0.14 | 64.8±0.03 |
| MEST2× | 51.36±0.08* | 43.67±0.05* | 75.54±0.01 | 60.49±0.02 | 51.59±0.07* | 43.25±0.03 | 78.08±0.07* | 64.67±0.11 | 74.76±0.01 | 62.0±0.03 | 78.45±0.13 | 64.98±0.16 |
| CHT2× | 51.78±0.06* | 44.19±0.12* | 77.95±0.08* | 65.32±0.07* | 54.00±0.05* | 47.51±0.08* | 79.16±0.21* | 67.36±0.14* | 75.1±0.09* | 63.43±0.18 | 79.25±0.1* | 67.37±0.03* |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).