Submitted: 05 January 2026

Posted: 07 January 2026


Abstract

This paper introduces GISMOL (General Intelligent Systems Modelling Language), a Python library under active development for modeling and prototyping general intelligent systems based on the Constrained Object Hierarchies (COH) theoretical framework. COH provides a neuroscience-inspired 9-tuple model that integrates symbolic constraints with neural computation. It addresses a limitation of current AI paradigms, which often separate statistical learning from symbolic reasoning. GISMOL aims to operationalize COH through modular components that support hierarchical object composition, constraint-aware neural networks, multi-domain reasoning engines, and natural language understanding with constraint validation. To illustrate its potential, we present six conceptual case studies spanning healthcare, smart manufacturing, autonomous drone delivery, finance, governance, and education. These examples show how GISMOL can translate COH theory into executable prototypes that prioritize safety, compliance, and adaptability when solving complex real-world problems. A preliminary comparative analysis suggests that GISMOL may offer advantages over existing frameworks in explainability, modularity, and cross-domain applicability. This work contributes both a theoretical foundation for neuro-symbolic integration and an evolving practical toolkit intended to bridge the gap between AGI theory and deployable intelligent systems.

Keywords:

1. Introduction

This paper makes the following contributions:

- A formal presentation of the COH theoretical framework and its neuroscience grounding.

- A description of the design goals and initial API of GISMOL, a prototype Python library for COH.

- Six conceptual case studies and code snippets illustrating intended usage patterns and cross-domain applicability.

- A preliminary comparison with existing approaches to indicate potential advantages and gaps.

- A development roadmap, implementation strategies, and evaluation plan suitable for future empirical validation.

2. Literature Review

2.1. Symbolic AI and Knowledge Representation

2.2. Neural Networks and Deep Learning

2.3. Neuro-Symbolic Integration

2.4. Cognitive Architectures and AGI

2.5. Constraint Satisfaction and Optimization

3. Introducing Constrained Object Hierarchies (COH)

3.1. Formal Definition

3.2. Neuroscience Grounding

3.3. Theoretical Significance

- Unified Representation: Integrates symbolic, neural, and constraint-based representations.

- Hierarchical Composition: Supports arbitrary decomposition while maintaining constraint propagation.

- Multi-domain Constraints: Accommodates biological, physical, temporal, and other domain-specific constraints.

- Real-time Monitoring: Constraint daemons provide continuous validation.

- Adaptive Optimization: Goal constraints guide learning toward multiple objectives.
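Hierarchical composition and constraint propagation can be illustrated with a minimal sketch. The `Node` class, its `constraints` list, and the `violations()` method are hypothetical names chosen for illustration; they are not part of a published GISMOL API. Constraints are modelled here as plain predicates over a node's attributes. Under that assumption, a parent counts as consistent only if it and every one of its descendants are consistent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical representation: a constraint is a (name, predicate) pair over attributes.
Constraint = Tuple[str, Callable[[Dict[str, float]], bool]]


@dataclass
class Node:
    """Illustrative COH-style object: attributes, local constraints, children."""
    name: str
    attributes: Dict[str, float] = field(default_factory=dict)
    constraints: List[Constraint] = field(default_factory=list)
    children: List["Node"] = field(default_factory=list)

    def violations(self, path: str = "") -> List[str]:
        """Return 'path:constraint' labels for every violated constraint in the subtree."""
        here = f"{path}/{self.name}"
        found = [f"{here}:{cname}" for cname, pred in self.constraints
                 if not pred(self.attributes)]
        # Propagate downwards: a parent is only consistent if every child is.
        for child in self.children:
            found.extend(child.violations(here))
        return found

    def is_consistent(self) -> bool:
        return not self.violations()


fleet = Node("fleet", {"active": 2}, [("max_active", lambda a: a["active"] <= 3)])
drone_a = Node("drone_a", {"battery": 0.8}, [("min_battery", lambda a: a["battery"] >= 0.2)])
drone_b = Node("drone_b", {"battery": 0.1}, [("min_battery", lambda a: a["battery"] >= 0.2)])
fleet.children.extend([drone_a, drone_b])

print(fleet.violations())  # ['/fleet/drone_b:min_battery']
```

Reporting the path of each violation, rather than a bare boolean, keeps the propagation traceable. This matches the explainability goal stated elsewhere in the paper.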

4. Structure of GISMOL

4.1. Core Module (gismol.core)

- COHObject: Base class for intelligent system components.

- ConstraintSystem: Manages constraint evaluation and resolution.

- ConstraintDaemon: Autonomous agents for real-time constraint monitoring.

- COHRepository: Manages object collections and relationships.

4.2. Neural Module (gismol.neural)

- NeuralComponent: Base class combining PyTorch's nn.Module with COHObject.

- EmbeddingModel: Generates semantic representations of objects and text.

- ConstraintAwareOptimizer: Optimizers that respect constraints.

- Specialized models for classification, regression, and generation (in progress).
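One common way to make an optimizer "respect constraints" is projected gradient descent. It takes an ordinary gradient step and then projects the parameters back onto the feasible set. The sketch below assumes simple box (lower/upper bound) constraints and uses plain Python rather than any GISMOL or PyTorch class. `projected_gradient_descent` and `project_to_box` are hypothetical helpers, and the actual ConstraintAwareOptimizer may use a different technique.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def project_to_box(x: Vector, bounds: List[Tuple[float, float]]) -> Vector:
    """Clip each coordinate into its [low, high] interval (Euclidean projection onto a box)."""
    return [min(max(xi, low), high) for xi, (low, high) in zip(x, bounds)]


def projected_gradient_descent(grad: Callable[[Vector], Vector], x0: Vector,
                               bounds: List[Tuple[float, float]],
                               lr: float = 0.1, steps: int = 200) -> Vector:
    x = project_to_box(x0, bounds)
    for _ in range(steps):
        g = grad(x)
        # Unconstrained gradient step followed by projection keeps every iterate feasible.
        x = project_to_box([xi - lr * gi for xi, gi in zip(x, g)], bounds)
    return x


# Minimise (x - 3)^2 + (y + 1)^2 subject to 0 <= x <= 2 and 0 <= y <= 5.
grad_f = lambda v: [2 * (v[0] - 3), 2 * (v[1] + 1)]
solution = projected_gradient_descent(grad_f, [1.0, 1.0], [(0.0, 2.0), (0.0, 5.0)])
print(solution)  # [2.0, 0.0]
```

The unconstrained minimum (3, -1) lies outside the box, so the iterates settle on the nearest feasible corner. Hard constraints are never violated along the way, which is the property a constraint-aware optimizer is meant to provide.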

4.3. Reasoners Module (gismol.reasoners)

- BaseReasoner: Fallback implementation with robust error handling.

- Domain-specific reasoners (Biological, Physical, Geometric, etc.) (in progress).

- Advanced reasoning systems (Causal, Probabilistic, Temporal, etc.) (beta version).

- Registry pattern for dynamic reasoner discovery.
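The registry pattern for reasoner discovery can be sketched as follows. The sketch assumes that reasoners are keyed by a domain string and that unknown domains fall back to a base reasoner, in the spirit of the BaseReasoner fallback described above. The decorator `register_reasoner`, the `REASONERS` dictionary, `get_reasoner`, and the `reason()` method are illustrative names only.

```python
from typing import Callable, Dict, Type


class BaseReasoner:
    """Fallback reasoner: never raises, reports that no domain logic applied."""
    domain = "base"

    def reason(self, query: dict) -> dict:
        return {"domain": self.domain, "handled": False, "query": query}


# Hypothetical global registry mapping domain names to reasoner classes.
REASONERS: Dict[str, Type[BaseReasoner]] = {}


def register_reasoner(domain: str) -> Callable[[Type[BaseReasoner]], Type[BaseReasoner]]:
    """Class decorator that records a reasoner class under a domain key."""
    def decorator(cls: Type[BaseReasoner]) -> Type[BaseReasoner]:
        cls.domain = domain
        REASONERS[domain] = cls
        return cls
    return decorator


@register_reasoner("temporal")
class TemporalReasoner(BaseReasoner):
    def reason(self, query: dict) -> dict:
        # Toy rule: an interval is valid only if it ends after it starts.
        ok = query.get("end", 0) > query.get("start", 0)
        return {"domain": self.domain, "handled": True, "valid": ok}


def get_reasoner(domain: str) -> BaseReasoner:
    """Look up a reasoner by domain, falling back to BaseReasoner when unknown."""
    return REASONERS.get(domain, BaseReasoner)()


print(get_reasoner("temporal").reason({"start": 1, "end": 5}))
print(get_reasoner("quantum").reason({}))
```

New domain reasoners register themselves at import time. Callers therefore never need to change when a reasoner is added, and an unregistered domain degrades gracefully instead of raising.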

4.4. NLP Module (gismol.nlp)

- COHDialogueManager: Manages conversations with constraint validation (prototype).

- EntityRelationExtractor: Extracts knowledge from text into COHObjects (prototype).

- Text2COH: Converts documents into hierarchical object structures (planned).

- ResponseValidator: Ensures language generation respects constraints (planned).

4.5. COH-to-GISMOL Mapping

5. Explanation of GISMOL Modules

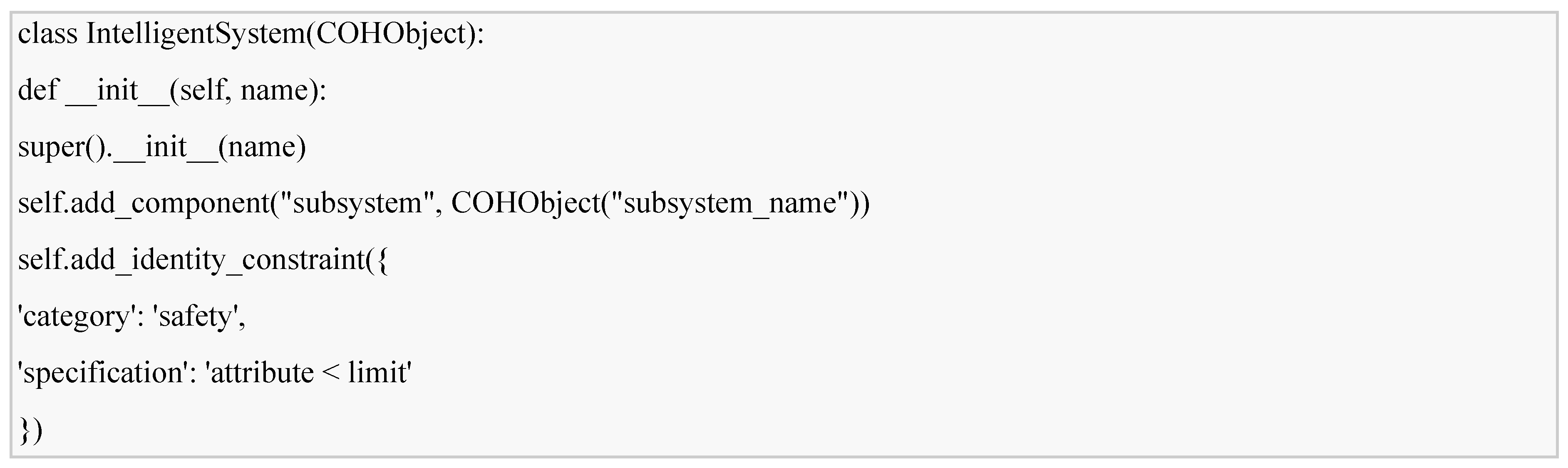

5.1. Object Instantiation

- Hierarchical parent-child relationships.

- Attribute dictionaries for state representation.

- Method dictionaries for executable behaviors.

- Constraint sets with associated reasoners.

- Neural component registries.
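The five features listed above map naturally onto a small data structure. The following sketch is an illustration resting on several assumptions, not GISMOL's actual COHObject. The class name `SketchCOHObject`, the `add_child`, `call`, and `check_identity` methods, and the representation of neural components as arbitrary callables are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass(eq=False)
class SketchCOHObject:
    """Hypothetical stand-in for a COH object (not the GISMOL API)."""
    name: str
    parent: Optional["SketchCOHObject"] = field(default=None, repr=False)
    children: List["SketchCOHObject"] = field(default_factory=list)
    attributes: Dict[str, Any] = field(default_factory=dict)               # state
    methods: Dict[str, Callable[..., Any]] = field(default_factory=dict)   # behaviours
    identity_constraints: Dict[str, Callable[[Dict[str, Any]], bool]] = field(default_factory=dict)
    neural_components: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def add_child(self, child: "SketchCOHObject") -> "SketchCOHObject":
        # Maintain the parent-child link in both directions.
        child.parent = self
        self.children.append(child)
        return child

    def call(self, method: str, *args: Any) -> Any:
        # Methods receive the object itself so they can read and update attributes.
        return self.methods[method](self, *args)

    def check_identity(self) -> List[str]:
        """Names of identity constraints that the current attributes violate."""
        return [n for n, pred in self.identity_constraints.items() if not pred(self.attributes)]


def dispense(obj: SketchCOHObject, amount: float) -> None:
    obj.attributes["dose_mg"] += amount


ward = SketchCOHObject("ward")
pump = ward.add_child(SketchCOHObject(
    "infusion_pump",
    attributes={"dose_mg": 0.0},
    methods={"dispense": dispense},
    identity_constraints={"max_dose": lambda a: a["dose_mg"] <= 10.0},
))
pump.call("dispense", 12.0)
print(pump.parent.name, pump.check_identity())  # ward ['max_dose']
```

`repr=False` on `parent` and `eq=False` on the class avoid infinite recursion when printing or comparing objects that reference each other through the hierarchy.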

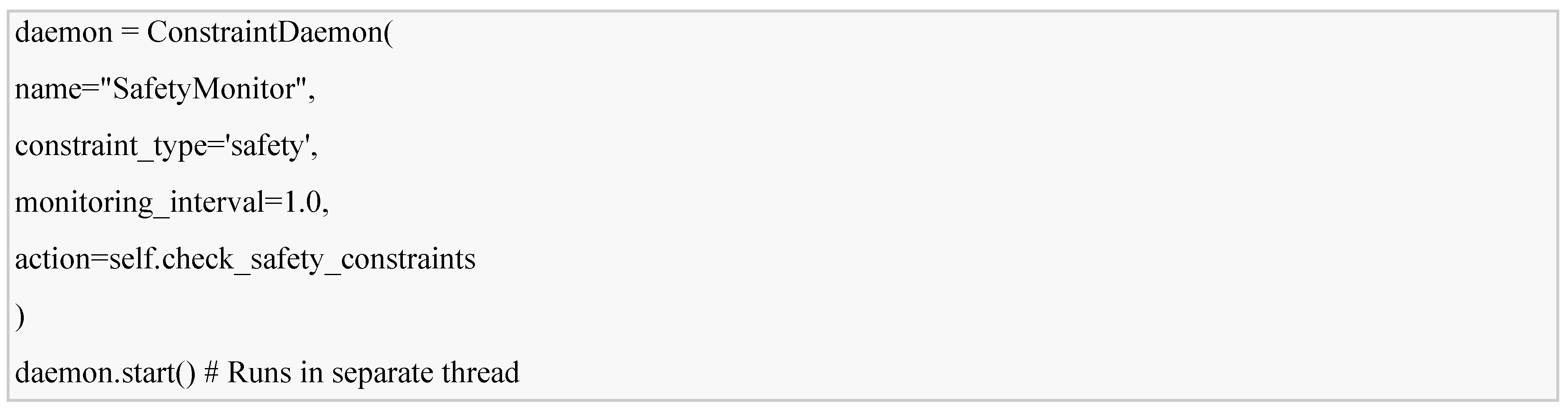

5.2. Constraint Daemons

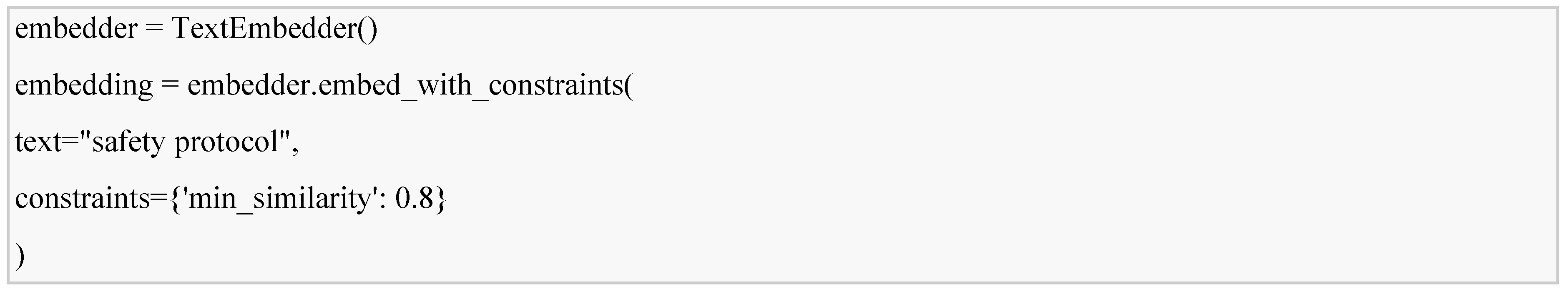
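The constraint-daemon concept can be illustrated with a background thread that periodically evaluates a predicate and reports violations. This minimal sketch is built on Python's standard `threading` module. The `ConstraintDaemonSketch` name, the polling `interval`, and the `on_violation` callback are assumptions, not GISMOL's documented interface.

```python
import threading
import time
from typing import Callable, List


class ConstraintDaemonSketch:
    """Hypothetical daemon: polls one constraint in a background thread."""

    def __init__(self, name: str, check: Callable[[], bool],
                 on_violation: Callable[[str], None], interval: float = 0.05) -> None:
        self.name = name
        self.check = check
        self.on_violation = on_violation
        self.interval = interval
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def check_once(self) -> bool:
        ok = self.check()
        if not ok:
            self.on_violation(self.name)
        return ok

    def _run(self) -> None:
        # Event.wait doubles as an interruptible sleep between checks.
        while not self._stop.is_set():
            self.check_once()
            self._stop.wait(self.interval)

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()


state = {"battery": 0.9}
alerts: List[str] = []
daemon = ConstraintDaemonSketch("BatteryMonitor", lambda: state["battery"] >= 0.2, alerts.append)
daemon.start()
time.sleep(0.1)
state["battery"] = 0.1   # a subsequent poll should detect the violation
time.sleep(0.2)
daemon.stop()
print(alerts[:1])
```

Polling is the simplest design. An event-driven variant, in which attribute setters notify the daemon, would reduce latency and overhead at the cost of tighter coupling.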

5.3. Neural Embedding

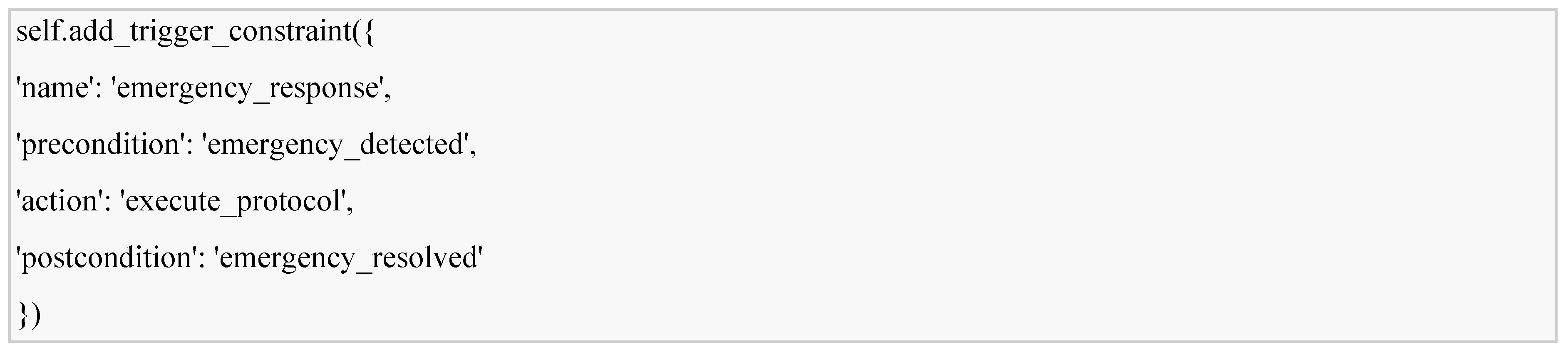

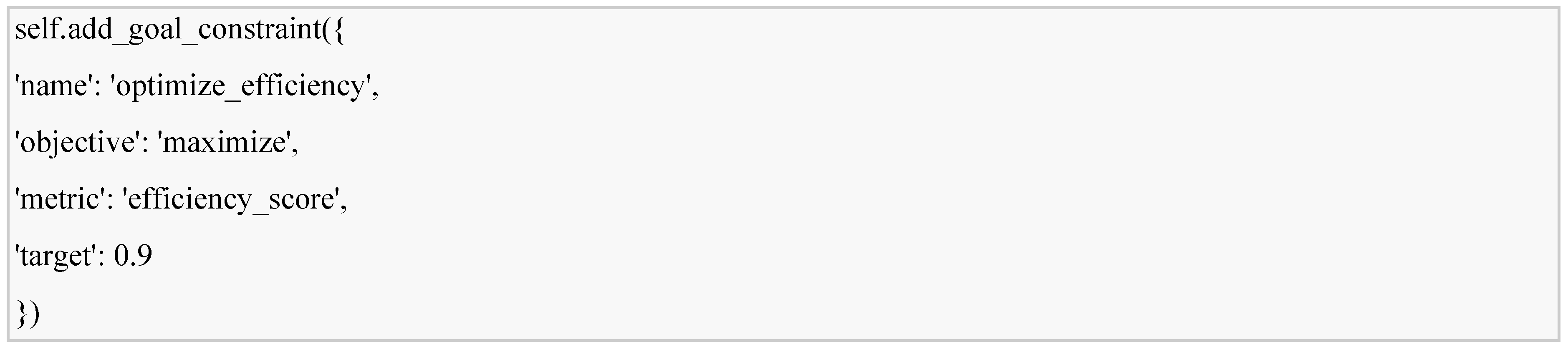
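The sketch below stands in for a learned EmbeddingModel with a bag-of-words vector over a shared vocabulary, compared by cosine similarity. That is enough to show how objects and text can be compared in one vector space, for example when checking that a child's description is semantically coherent with its parent's. The helpers `tokenize`, `build_vocab`, `embed`, and `cosine` are illustrative assumptions; a real system would use a trained neural encoder.

```python
import math
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Assign each distinct token a fixed vector index (sorted for determinism)."""
    tokens = sorted({t for text in texts for t in tokenize(text)})
    return {tok: i for i, tok in enumerate(tokens)}


def embed(text: str, vocab: Dict[str, int]) -> List[float]:
    """L2-normalised bag-of-words vector; a placeholder for a learned encoder."""
    vec = [0.0] * len(vocab)
    for tok in tokenize(text):
        if tok in vocab:
            vec[vocab[tok]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: List[float], b: List[float]) -> float:
    # Both vectors are unit length (or all zeros), so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


texts = {
    "parent": "drone delivery route battery weather",
    "child_ok": "battery level for delivery drone",
    "child_off": "quarterly tax audit spreadsheet",
}
vocab = build_vocab(list(texts.values()))
vecs = {k: embed(v, vocab) for k, v in texts.items()}
print(round(cosine(vecs["parent"], vecs["child_ok"]), 3))   # 0.6
print(round(cosine(vecs["parent"], vecs["child_off"]), 3))  # 0.0
```

A coherence check could then flag any child whose similarity to its parent falls below a chosen threshold. The threshold itself would need tuning per domain.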

5.4. Trigger and Goal Resolution

6. Constraint Propagation in COH

6.1. Hierarchical Enforcement

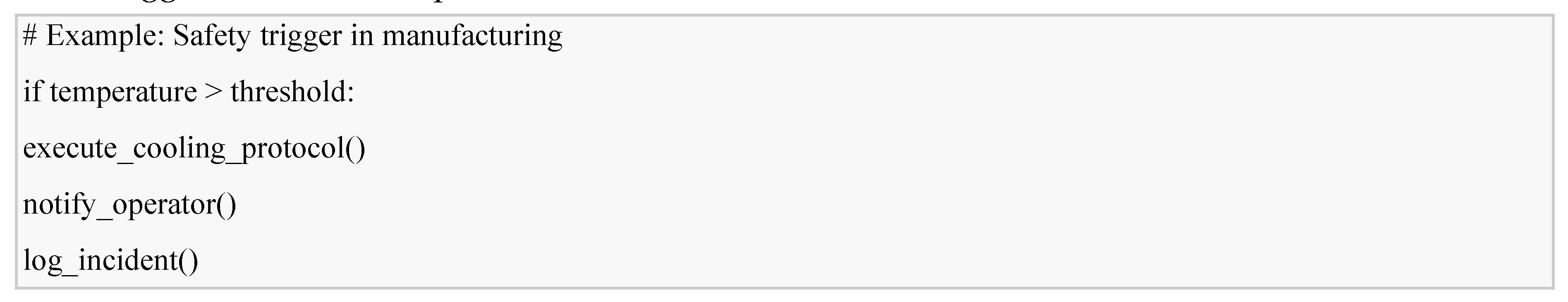

6.2. Real-time Monitoring

6.3. Semantic Coherence

6.4. Cross-domain Constraint Resolution

7. Resolution of Identity, Trigger, and Goal Constraints

7.1. Identity Constraint Resolution

- Prevention: Design ensures constraints cannot be violated.

- Detection: Daemons monitor for violations.

- Correction: Automatic remediation when violations occur.

- Escalation: Human intervention when automated correction fails.
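The four-stage strategy above (prevention, detection, correction, escalation) can be sketched as a guarded setter plus one resolution routine. The function names `resolve_identity` and `set_altitude`, the clamping correction, the human escalation queue, and the 120 m altitude ceiling are all hypothetical. In practice, correction policies would be domain-specific.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, float]


def resolve_identity(state: State, check: Callable[[State], bool],
                     correct: Optional[Callable[[State], State]],
                     escalations: List[str], name: str) -> str:
    """Detection, then automatic correction, then escalation to a human queue."""
    if check(state):
        return "satisfied"                      # detection: no violation found
    if correct is not None:
        candidate = correct(dict(state))        # correction is tried on a copy first
        if check(candidate):
            state.update(candidate)
            return "corrected"
    escalations.append(name)                    # escalation: automated fix failed
    return "escalated"


def set_altitude(state: State, metres: float) -> None:
    """Prevention: reject physically invalid updates before they reach the state."""
    if metres < 0:
        raise ValueError("altitude cannot be negative")
    state["altitude_m"] = metres


human_queue: List[str] = []
under_ceiling = lambda s: s["altitude_m"] <= 120.0     # assumed 120 m ceiling
descend = lambda s: {**s, "altitude_m": min(s["altitude_m"], 120.0)}

drone = {"altitude_m": 0.0}
set_altitude(drone, 150.0)
first = resolve_identity(drone, under_ceiling, descend, human_queue, "airspace_compliance")
print(first, drone["altitude_m"])   # corrected 120.0
set_altitude(drone, 140.0)
second = resolve_identity(drone, under_ceiling, None, human_queue, "airspace_compliance")
print(second, human_queue)          # escalated ['airspace_compliance']
```

The correction is applied to a copy and committed only if it satisfies the constraint. A failed repair therefore never leaves the object in a half-modified state.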

7.2. Trigger Constraint Resolution

7.3. Goal Constraint Resolution

- Multi-objective optimization: Balancing competing goals.

- Adaptive learning: Neural components adjust based on goal achievement.

- Constraint-aware optimization: Optimization algorithms respect hard constraints.
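A simple way to combine these ideas is to filter candidate actions by hard constraints first, then rank the feasible ones by a weighted sum of goal scores. Weighted-sum scalarisation is one standard multi-objective technique among several (Pareto ranking is another). The candidate schedules, weights, overtime rule, and the `choose` function below are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Plan = Dict[str, float]


def choose(candidates: List[Plan], hard: List[Callable[[Plan], bool]],
           goals: Dict[str, Callable[[Plan], float]],
           weights: Dict[str, float]) -> Optional[Plan]:
    """Filter by hard constraints, then maximise a weighted sum of goal scores."""
    feasible = [c for c in candidates if all(h(c) for h in hard)]
    if not feasible:
        return None  # nothing satisfies the hard constraints; the caller must escalate

    def score(c: Plan) -> float:
        return sum(weights[g] * f(c) for g, f in goals.items())

    return max(feasible, key=score)


schedules = [
    {"id": 1, "throughput": 100, "energy_kwh": 200, "overtime_h": 0},
    {"id": 2, "throughput": 140, "energy_kwh": 230, "overtime_h": 2},
    {"id": 3, "throughput": 160, "energy_kwh": 240, "overtime_h": 6},
]
hard = [lambda p: p["overtime_h"] <= 4]                 # assumed labour rule
goals = {
    "throughput": lambda p: p["throughput"] / 100,      # maximise output
    "energy": lambda p: -p["energy_kwh"] / 100,         # minimise energy use
}
weights = {"throughput": 0.6, "energy": 0.4}
best = choose(schedules, hard, goals, weights)
print(best["id"])  # 2: schedule 3 scores higher but violates the overtime limit
```

Hard constraints act as a filter, so no weighting can trade them away. Goals, by contrast, are soft and compete through their weights.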

7.4. Symbolic-Neural Interaction

- Constraint embeddings: Constraints are embedded in neural representations.

- Loss functions: Constraint violations contribute to loss terms.

- Curriculum learning: Simpler constraints are learned before complex ones.
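The loss-function and curriculum ideas above can be sketched without a deep-learning framework. Each constraint is written as g(y) <= 0 and contributes a hinge penalty max(0, g(y)), scaled by a weight lambda and added to the task loss. A simple curriculum then activates constraints in order of an assumed difficulty score. The names `penalised_loss` and `curriculum`, the difficulty values, and lambda = 10 are hypothetical.

```python
from typing import Callable, List, Tuple

# (name, g, difficulty), with the convention that g(y) <= 0 means "satisfied".
Constraint = Tuple[str, Callable[[float], float], int]


def penalised_loss(task_loss: float, y_pred: float,
                   constraints: List[Constraint], lam: float = 10.0) -> float:
    """Task loss plus lam * sum of hinge penalties max(0, g(y_pred))."""
    penalty = sum(max(0.0, g(y_pred)) for _, g, _ in constraints)
    return task_loss + lam * penalty


def curriculum(constraints: List[Constraint], stage: int) -> List[Constraint]:
    """Activate only constraints whose difficulty is at most the current stage."""
    return [c for c in constraints if c[2] <= stage]


constraints: List[Constraint] = [
    ("non_negative", lambda y: -y, 1),                   # y >= 0
    ("below_cap", lambda y: y - 100.0, 1),               # y <= 100
    ("near_target", lambda y: abs(y - 50.0) - 5.0, 2),   # |y - 50| <= 5
]

y_hat, mse = 70.0, 4.0
for stage in (1, 2):
    active = curriculum(constraints, stage)
    print(stage, [c[0] for c in active], penalised_loss(mse, y_hat, active))
```

In stage 1 only the simple bounds are active and the prediction incurs no penalty. In stage 2 the stricter target constraint adds 10 × 15 to the loss, for a total of 154.0.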

8. Case Studies

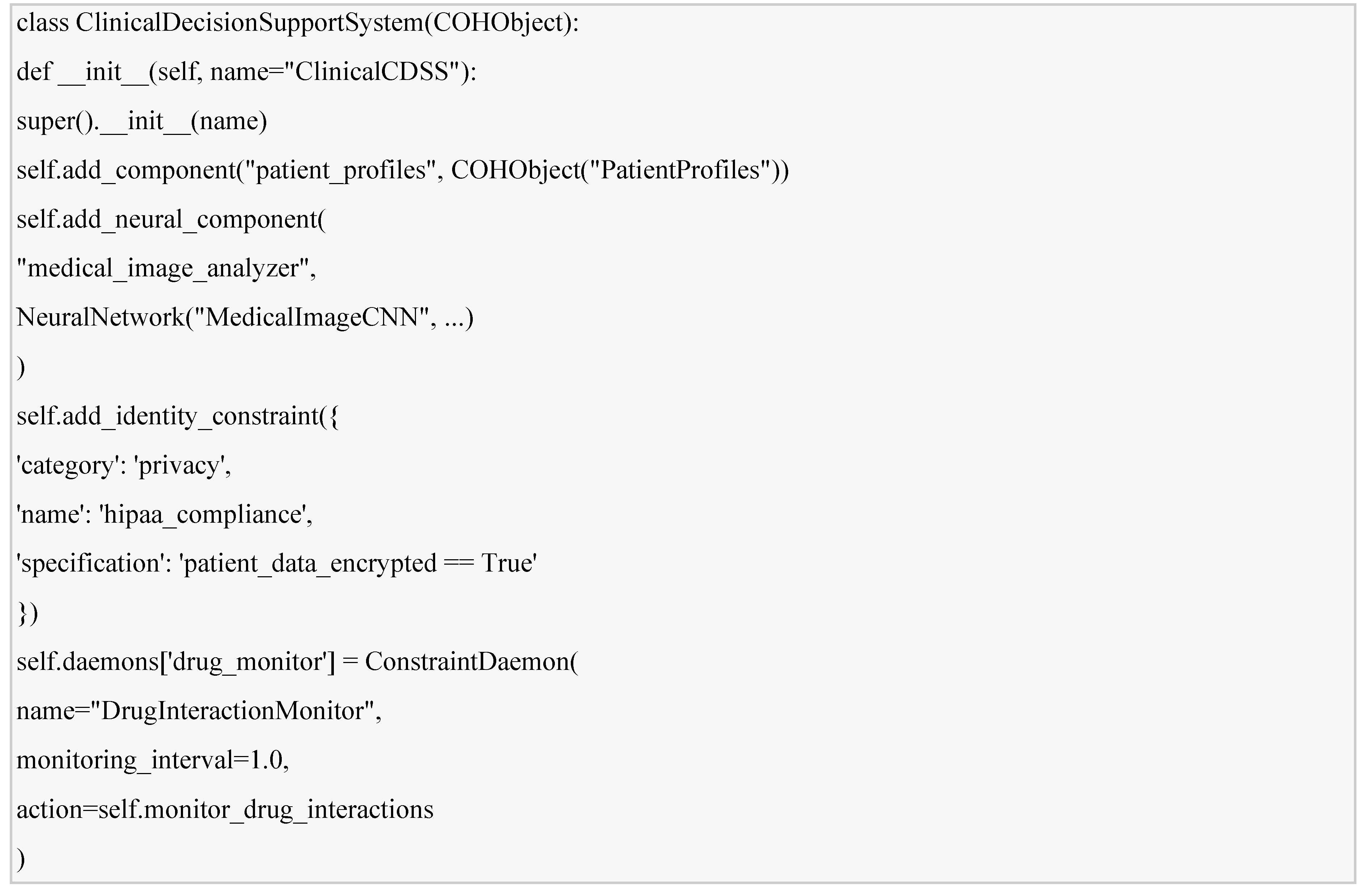

8.1. Healthcare: AI-Enhanced Clinical Decision Support System

- HIPAA compliance and patient privacy.

- Evidence-based diagnosis and treatment.

- Integration with existing healthcare systems.

- Explainable recommendations for clinical acceptance.

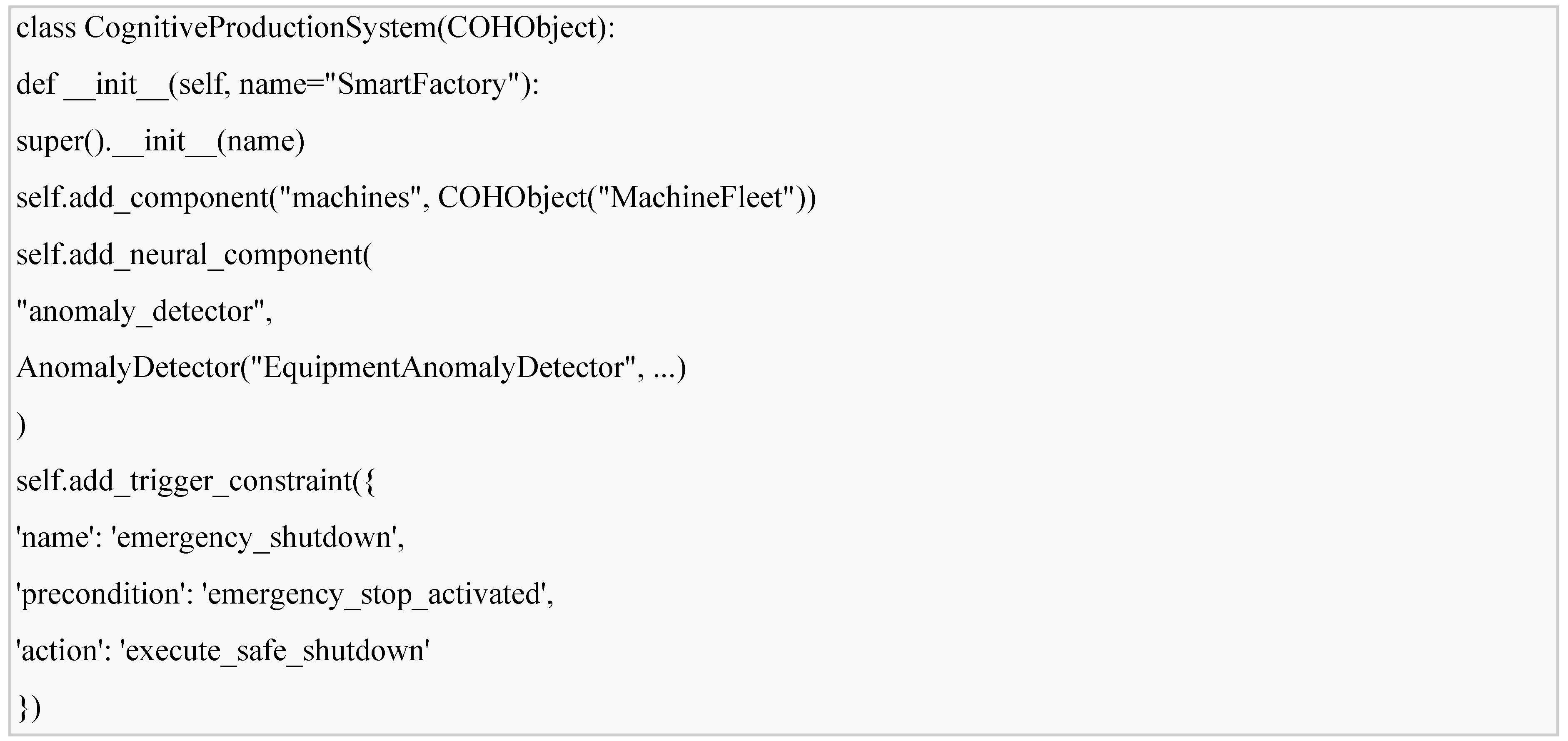

8.2. Smart Manufacturing: Cognitive Production Optimization System

- Real-time production scheduling.

- Predictive maintenance.

- Quality control with defect detection.

- Energy efficiency optimization.

8.3. Autonomous Drone Delivery: Urban Air Mobility Management System

- Collision avoidance with geometric constraints.

- Battery management with predictive optimization.

- Weather response with safety triggers.

- Regulatory compliance monitoring.

- N: {ObstacleDetectionCNN, RouteOptimizerGNN, BatteryPredictor}

- I: {drone_certification, airspace_compliance, weight_constraints}

- T: {low_battery_redirect, weather_contingency, airspace_congestion_avoidance}

- D: {AirspaceMonitor, BatteryMonitor, WeatherMonitor}
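The trigger set T above can be read as condition-action rules evaluated against the drone's current state. The following sketch assumes that representation. The thresholds (25% battery, 15 m/s wind, 0.8 congestion), the action strings, the priorities, and the `Trigger` and `fired_actions` names are invented for illustration and do not come from the case study.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]


@dataclass(frozen=True)
class Trigger:
    """Hypothetical trigger constraint: fire `action` when `condition` holds."""
    name: str
    condition: Callable[[State], bool]
    action: str
    priority: int  # lower number = more urgent


TRIGGERS = [
    Trigger("low_battery_redirect", lambda s: s["battery"] < 0.25, "divert_to_nearest_pad", 0),
    Trigger("weather_contingency", lambda s: s["wind_mps"] > 15.0, "hold_and_land", 1),
    Trigger("airspace_congestion_avoidance", lambda s: s["congestion"] > 0.8, "reroute", 2),
]


def fired_actions(state: State, triggers: List[Trigger] = TRIGGERS) -> List[str]:
    """Actions of all triggers whose conditions hold, most urgent first."""
    active = [t for t in triggers if t.condition(state)]
    return [t.action for t in sorted(active, key=lambda t: t.priority)]


print(fired_actions({"battery": 0.2, "wind_mps": 18.0, "congestion": 0.3}))
# ['divert_to_nearest_pad', 'hold_and_land']
```

Ordering fired triggers by priority gives the controller a deterministic way to resolve simultaneous triggers. Conflicting actions would still need an arbitration policy.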

8.4. Finance: Algorithmic Trading and Risk Management System

- Regulatory compliance (MiFID II, Dodd-Frank) (conceptual modeling only).

- Risk management with Value at Risk constraints.

- Market manipulation prevention.

- Neural market prediction with sentiment analysis.

- N: {MarketPredictorLSTM, MarketAnomalyDetector, NewsSentimentBERT}

- I: {position_limits, var_limit, market_manipulation_prevention}

- T: {risk_limit_breach, volatility_spike, circuit_breaker}

- D: {RiskMonitor, ComplianceMonitor, MarketAbuseMonitor}
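The var_limit identity constraint can be made concrete with historical-simulation Value at Risk. This is the loss level exceeded on at most a fraction (1 - alpha) of past days. The sketch uses one simple empirical-quantile convention, taking the ceil(alpha·n)-th smallest loss; other conventions interpolate differently. The return series, the 95% level, the position size, and the limits are illustrative assumptions, not data from the case study.

```python
import math
from typing import List


def historical_var(returns: List[float], position: float, alpha: float = 0.95) -> float:
    """Empirical alpha-quantile of losses, where loss = -return * position."""
    losses = sorted(-r * position for r in returns)
    index = max(0, math.ceil(alpha * len(losses)) - 1)
    return losses[index]


def var_limit_satisfied(returns: List[float], position: float, limit: float,
                        alpha: float = 0.95) -> bool:
    """Identity constraint: the estimated VaR must not exceed the risk limit."""
    return historical_var(returns, position, alpha) <= limit


daily_returns = [0.01, -0.02, 0.005, -0.01, 0.003, -0.035, 0.012, -0.004, 0.007, -0.015,
                 0.002, 0.009, -0.025, 0.004, -0.001, 0.006, -0.008, 0.011, -0.003, 0.001]
print(historical_var(daily_returns, 1_000_000))
print(var_limit_satisfied(daily_returns, 1_000_000, limit=20_000))
```

With 20 observations at the 95% level, the estimate is the second-largest loss, here about 25,000. This breaches a hypothetical 20,000 limit, which a RiskMonitor daemon could report.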

8.5. Governance: Smart City Public Service Optimization System

- Equity-aware resource allocation.

- Citizen feedback processing with sentiment analysis.

- Policy impact simulation.

- Multi-stakeholder optimization.

- N: {ServiceDemandPredictor, ResourceOptimizer, CitizenSentimentAnalyzer}

- I: {equal_access, budget_authority, gdpr_compliance}

- T: {demand_spike_response, emergency_activation, budget_alert}

- D: {BudgetMonitor, ServiceMonitor, PrivacyMonitor}

8.6. Education: Personalized Adaptive Learning System

- Knowledge tracing with Bayesian models.

- Learning style adaptation.

- Content recommendation with constraint validation.

- Collaborative learning facilitation.

- N: {BayesianKnowledgeTracer, ContentRecommenderNN, EssayGradingBERT}

- I: {student_privacy, curriculum_alignment, accessibility_requirements}

- T: {struggling_learner, mastery_advancement, engagement_drop}

- D: {ProgressMonitor, AccessibilityMonitor, IntegrityMonitor}
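The BayesianKnowledgeTracer component can be illustrated with the standard Bayesian Knowledge Tracing (BKT) update. Each step applies a Bayesian posterior on the observed response, followed by a learning transition. The parameter values below are illustrative, and the mapping from mastery probability to the struggling_learner and mastery_advancement triggers uses hypothetical thresholds.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BKTParams:
    p_init: float = 0.2    # P(L0): prior probability the skill is already known
    p_learn: float = 0.15  # P(T): probability of learning after each attempt
    p_guess: float = 0.2   # P(G): correct answer despite not knowing the skill
    p_slip: float = 0.1    # P(S): wrong answer despite knowing the skill


def bkt_update(p_known: float, correct: bool, prm: BKTParams) -> float:
    """One BKT step: posterior given the observation, then the learning transition."""
    if correct:
        num = p_known * (1 - prm.p_slip)
        den = num + (1 - p_known) * prm.p_guess
    else:
        num = p_known * prm.p_slip
        den = num + (1 - p_known) * (1 - prm.p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * prm.p_learn


def trace(responses: List[bool], prm: BKTParams) -> List[float]:
    p = prm.p_init
    history: List[float] = []
    for r in responses:
        p = bkt_update(p, r, prm)
        history.append(p)
    return history


def trigger_for(p_known: float, mastery: float = 0.95, struggle: float = 0.3) -> str:
    # Hypothetical mapping onto the T constraints of this case study.
    if p_known >= mastery:
        return "mastery_advancement"
    if p_known < struggle:
        return "struggling_learner"
    return "none"


params = BKTParams()
history = trace([True, True, False, True, True, True], params)
print([round(p, 3) for p in history], trigger_for(history[-1]))
```

After the first correct answer the mastery estimate rises from 0.2 to 0.6. The single incorrect answer pulls it down, and the final run of correct answers pushes it past the mastery threshold.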

9. Prototyping and Evaluation Plan

9.1. Implementation Pipeline

- Modeling: Define COH 9-tuple for the target system.

- Implementation: Create GISMOL objects with appropriate constraints and neural components.

- Integration & Simulation: Connect components; validate constraint propagation using test harnesses and simulated data.

- Experimental Evaluation: Measure performance on benchmarks; prepare for future pilot deployments.

9.2. Intended Runtime Behavior

- Autonomous constraint monitoring: Daemons continuously validate constraints.

- Adaptive learning: Neural components adjust based on experience and goal achievement.

- Hierarchical coordination: Parent objects coordinate child object behavior.

- Multi-domain reasoning: Appropriate reasoners handle different constraint types.

9.3. Evaluation Metrics

- Constraint Satisfaction Rate: Percentage of constraints satisfied over time.

- Goal Achievement: Progress toward optimization objectives.

- Adaptation Speed: How quickly systems adapt to changing conditions.

- Explainability Quality: Comprehensibility of system decisions.

- Resource Efficiency: Computational and memory requirements.
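Of these metrics, the Constraint Satisfaction Rate is the most direct to compute from daemon logs. The sketch below assumes each log entry is a (timestamp, constraint name, satisfied) record. The record format, the per-constraint breakdown, and the choice to report 100% for an empty log are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[float, str, bool]  # (timestamp, constraint name, satisfied?)


def satisfaction_rate(records: Iterable[Record]) -> Tuple[float, Dict[str, float]]:
    """Overall and per-constraint percentage of checks that were satisfied."""
    totals: Dict[str, int] = defaultdict(int)
    passed: Dict[str, int] = defaultdict(int)
    for _, name, ok in records:
        totals[name] += 1
        passed[name] += int(ok)
    n = sum(totals.values())
    # An empty log is treated as vacuously satisfied.
    overall = 100.0 * sum(passed.values()) / n if n else 100.0
    per_constraint = {k: 100.0 * passed[k] / totals[k] for k in totals}
    return overall, per_constraint


log = [
    (0.0, "var_limit", True), (1.0, "var_limit", True),
    (2.0, "var_limit", False), (3.0, "var_limit", True),
    (0.0, "position_limits", True), (1.0, "position_limits", True),
]
overall, by_constraint = satisfaction_rate(log)
print(round(overall, 1), by_constraint)
```

The per-constraint breakdown matters because a high overall rate can hide a single constraint that is violated persistently.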

9.4. Performance Considerations (Design)

- Cached evaluation: Frequent constraint evaluations are cached.

- Lazy evaluation: Complex constraints are evaluated only when needed.

- Parallel monitoring: Constraint daemons operate in parallel threads.

- Incremental updating: Only affected constraints are re-evaluated after changes.
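Cached and incremental evaluation can be combined. Record which attributes each constraint reads, then invalidate only the affected cache entries when an attribute changes. Lazy evaluation follows naturally, because a constraint is recomputed only when its result is requested after invalidation. The `IncrementalChecker` class and its explicit `reads` declarations are hypothetical; automatic dependency tracking would be an alternative design.

```python
from typing import Callable, Dict, List, Set


class IncrementalChecker:
    """Caches constraint results and re-evaluates only those touched by an update."""

    def __init__(self, attributes: Dict[str, float]) -> None:
        self.attributes = dict(attributes)
        self._constraints: Dict[str, Callable[[Dict[str, float]], bool]] = {}
        self._readers: Dict[str, Set[str]] = {}   # attribute -> constraints that read it
        self._cache: Dict[str, bool] = {}
        self.evaluations = 0                      # counts actual predicate calls

    def add(self, name: str, reads: List[str],
            predicate: Callable[[Dict[str, float]], bool]) -> None:
        self._constraints[name] = predicate
        for attr in reads:
            self._readers.setdefault(attr, set()).add(name)

    def update_attribute(self, attr: str, value: float) -> None:
        self.attributes[attr] = value
        # Incremental updating: drop only cached results that depend on `attr`.
        for name in self._readers.get(attr, ()):
            self._cache.pop(name, None)

    def is_satisfied(self, name: str) -> bool:
        # Lazy evaluation: compute on demand, then serve from the cache.
        if name not in self._cache:
            self.evaluations += 1
            self._cache[name] = self._constraints[name](self.attributes)
        return self._cache[name]


checker = IncrementalChecker({"budget": 100.0, "spent": 40.0, "staff": 5.0})
checker.add("within_budget", ["budget", "spent"], lambda a: a["spent"] <= a["budget"])
checker.add("min_staff", ["staff"], lambda a: a["staff"] >= 3)
checker.is_satisfied("within_budget")
checker.is_satisfied("min_staff")
checker.update_attribute("spent", 120.0)   # invalidates within_budget only
print(checker.is_satisfied("within_budget"), checker.is_satisfied("min_staff"),
      checker.evaluations)  # False True 3
```

Only three predicate calls are made: two initial evaluations and one re-evaluation after the update. The unaffected min_staff result is served from the cache.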

10. Summary of COH/GISMOL Advantages

10.1. Comparison with Existing Frameworks

10.2. Unique Contributions of COH/GISMOL

- Integrated Constraint System: Combines identity, trigger, and goal constraints with neural components.

- Hierarchical Organization: Natural decomposition of complex systems while maintaining coherence.

- Multi-domain Reasoning: Unified handling of biological, physical, temporal, and other constraints.

- Real-time Monitoring: Constraint daemons provide continuous runtime validation in support of safety.

- Practical Implementation (prototype): Python library enables rapid prototyping of intelligent systems.

- Cross-domain Applicability: Single framework applicable to healthcare, manufacturing, finance, etc.

10.3. Limitations

- Learning Curve: Requires understanding of both symbolic and neural approaches.

- Computational Overhead: Constraint monitoring adds runtime overhead.

- Domain Knowledge Requirement: System designers must specify appropriate constraints.

- Early Development Stage: Limited real-world deployment compared to mature frameworks; API subject to change.

11. Summary of Contributions

11.1. Theoretical Contributions

- COH Formalization: A comprehensive 9-tuple model for intelligent systems.

- Neuro-symbolic Integration Framework: Unifies neural learning with symbolic constraints.

- Hierarchical Constraint Propagation: Mechanism for maintaining coherence in complex systems.

- Real-time Constraint Monitoring: Daemon-based approach to continuous validation.

11.2. Practical Contributions (Development Stage)

- GISMOL Library: Initial Python implementation of COH theory.

- Modular Architecture: Separable components for objects, neural networks, reasoning, and NLP.

- Domain Case Studies: Six conceptual examples across different application areas.

- Implementation Guidelines: Pipeline for translating COH models to executable prototypes.

11.3. Impact on AGI Development

- Bridging Theory and Practice: Translating theoretical models into executable prototypes.

- Enabling Safer AI: Constraint system provides safety guarantees (conceptually and in simulation).

- Supporting Explainability: Hierarchical organization and constraint tracing aid interpretation.

- Facilitating Cross-domain Transfer: Common framework applicable to multiple domains.

12. Conclusion and Future Research Directions

12.1. Summary

12.2. Future Research Directions

- Scalability Optimization: Improving performance for large constraint systems.

- Automated Constraint Learning: Learning constraints from data rather than manual specification.

- Formal Verification: Mathematical proofs of constraint satisfaction under certain conditions.

- Cognitive Science Validation: Testing COH models against human cognitive performance.

- Distributed Implementation: Scaling GISMOL systems across multiple computing nodes.

- Quantum Integration: Exploring quantum computing for constraint satisfaction problems.

- Ethical Constraint Formalization: Developing frameworks for encoding ethical principles.

- Cross-modal Learning: Integrating vision, language, and other modalities more seamlessly.

- Lifelong Learning: Systems that accumulate knowledge over extended periods.

- Human-AI Collaboration: Improved interfaces for human guidance of GISMOL systems.

12.3. Concluding Remarks

References

- J. R. Anderson, C. Lebiere, et al., "The Atomic Components of Thought," Psychology Press, 1998.

- B. Goertzel, "Artificial general intelligence: Concept, state of the art, and future prospects," Journal of Artificial General Intelligence, vol. 5, no. 1, pp. 1-46, 2014.

- G. Marcus, "The next decade in AI: Four steps towards robust artificial intelligence," arXiv preprint arXiv:2002.06177, 2020.

- C. Rudin, "Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead," Nature Machine Intelligence, vol. 1, no. 5, pp. 206-215, 2019.

- H. J. Levesque, "Common Sense, the Turing Test, and the Quest for Real AI," MIT Press, 2017.

- A. d'Avila Garcez and L. C. Lamb, "Neurosymbolic AI: The 3rd wave," Artificial Intelligence Review, vol. 53, pp. 1-20, 2020.

- M. Garnelo and M. Shanahan, "Reconciling deep learning with symbolic artificial intelligence: representing objects and relations," Current Opinion in Behavioral Sciences, vol. 29, pp. 17-23, 2019.

- T. R. Besold et al., "Neural-symbolic learning and reasoning: A survey and interpretation," arXiv preprint arXiv:1711.03902, 2017.

- J. E. Laird, "The Soar Cognitive Architecture," MIT Press, 2012.

- P. Langley, "An integrative framework for artificial intelligence," Journal of Artificial General Intelligence, vol. 10, no. 1, pp. 1-8, 2019.

- P. Langley, "The cognitive systems paradigm," Advances in Cognitive Systems, vol. 1, pp. 3-13, 2012.

- L. De Raedt et al., "From statistical relational to neuro-symbolic artificial intelligence," Artificial Intelligence, vol. 287, 2020.

- Y. LeCun, Y. Bengio, and G. Hinton, "Deep learning," Nature, vol. 521, no. 7553, pp. 436-444, 2015.

- V. Mnih et al., "Human-level control through deep reinforcement learning," Nature, vol. 518, no. 7540, pp. 529-533, 2015.

- C. Molnar, "Interpretable Machine Learning: A Guide for Making Black Box Models Explainable," 2020.

- R. Manhaeve et al., "DeepProbLog: Neural probabilistic logic programming," Advances in Neural Information Processing Systems, vol. 31, pp. 3749-3759, 2018.

- T. Rocktäschel and S. Riedel, "End-to-end differentiable proving," Advances in Neural Information Processing Systems, vol. 30, pp. 3788-3800, 2017.

- K. Yi et al., "Neural-symbolic VQA: Disentangling reasoning from vision and language understanding," Advances in Neural Information Processing Systems, vol. 31, pp. 1031-1042, 2018.

- G. H. Chen, "A survey on hierarchical deep learning," IEEE Access, vol. 8, pp. 68712-68722, 2020.

- P. S. Rosenbloom, "The Sigma cognitive architecture and system," International Journal of Machine Learning and Cybernetics, vol. 10, pp. 147-169, 2019.

- P. Langley, J. E. Laird, and S. Rogers, "Cognitive architectures: Research issues and challenges," Cognitive Systems Research, vol. 10, no. 2, pp. 141-160, 2009.

- F. Rossi, P. van Beek, and T. Walsh, "Handbook of Constraint Programming," Elsevier, 2006.

- R. Dechter, "Constraint Processing," Morgan Kaufmann, 2003.

- D. Hassabis et al., "Neuroscience-inspired artificial intelligence," Neuron, vol. 95, no. 2, pp. 245-258, 2017.

- Y. Bengio, "The consciousness prior," arXiv preprint arXiv:1709.08568, 2017.

- J. Hawkins et al., "A framework for intelligence and cortical function based on grid cells in the neocortex," Frontiers in Neural Circuits, vol. 13, 2019.

- M. Leucker and C. Schallhart, "A brief account of runtime verification," Journal of Logic and Algebraic Programming, vol. 78, no. 5, pp. 293-303, 2009.

- M. A. Makary and M. Daniel, "Medical error—the third leading cause of death in the US," BMJ, vol. 353, 2016.

- A. Rajkomar et al., "Scalable and accurate deep learning with electronic health records," NPJ Digital Medicine, vol. 1, art. 18, 2018.

- J. Wang et al., "Deep learning for smart manufacturing: Methods and applications," Journal of Manufacturing Systems, vol. 48, pp. 144-156, 2018.

- P. Linardatos, V. Papastefanopoulos, and S. Kotsiantis, "Explainable AI: A review of machine learning interpretability methods," Entropy, vol. 23, no. 1, 2021.

| COH Element | GISMOL Implementation | Primary Module |
| --- | --- | --- |
| C (Components) | COHObject hierarchy, COHRepository | gismol.core |
| A (Attributes) | COHObject.attributes dictionary | gismol.core |
| M (Methods) | COHObject.methods dictionary | gismol.core |
| N (Neural Components) | NeuralComponent subclasses | gismol.neural |
| E (Embedding) | EmbeddingModel classes | gismol.neural |
| I (Identity Constraints) | Constraint objects with identity category | gismol.core |
| T (Trigger Constraints) | Constraint objects with trigger type | gismol.core |
| G (Goal Constraints) | Constraint objects with goal type | gismol.core |
| D (Constraint Daemons) | ConstraintDaemon instances | gismol.core |

| Framework | Type | Strengths | Limitations | Anticipated GISMOL Advantages |
| --- | --- | --- | --- | --- |
| SOAR [9] | Cognitive architecture | Symbolic reasoning, goal-driven | Limited learning, no neural integration | Neuro-symbolic integration, constraint system |
| ACT-R [10] | Cognitive architecture | Cognitive modeling, production rules | Complex implementation, limited scalability | Python implementation, hierarchical organization |
| TensorLog [12] | Neuro-symbolic | Differentiable inference, probabilistic | Limited constraint types, no real-time monitoring | Comprehensive constraint system, daemon monitoring |
| DeepProbLog [16] | Neuro-symbolic | Probabilistic logic, neural networks | No hierarchical constraints, limited domains | Multi-domain constraints, hierarchical organization |
| PyTorch/TensorFlow | Deep learning | Neural network flexibility, GPU acceleration | No symbolic reasoning, black-box nature | Symbolic constraint integration, explainability |
| CLIPS [31] | Expert system | Rule-based reasoning, pattern matching | No learning capabilities, static knowledge | Adaptive neural components, continuous learning |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).