1. Introduction

Sentiment classification, a cornerstone task in Natural Language Processing (NLP), aims to identify the emotional tone or sentiment expressed in a piece of text [1]. Its applications range from public opinion monitoring and product review analysis to intelligent customer service systems, which gives it considerable practical and academic importance. Traditional sentiment classification models, including those based on Recurrent Neural Networks (RNNs) [2], Convolutional Neural Networks (CNNs) [3], or the highly successful Transformer architecture [4], have made remarkable progress in capturing local and long-range dependencies among words. This progress is part of a broader advancement in NLP, exemplified by Large Language Models (LLMs) that demonstrate sophisticated reasoning capabilities such as unraveling chaotic contexts [5] and exhibiting weak-to-strong generalization [6]. Similar progress extends to computer vision tasks such as multi-camera depth estimation, which leverages techniques like spatial-temporal context and adversarial geometry regularization [7], and to multi-modal domains, where Large Vision-Language Models (LVLMs) employ techniques like visual in-context learning [8]. Despite these advancements, many classical models, particularly in sentiment classification, treat text as a one-dimensional sequence, which may not fully capture the intricate semantic and syntactic relationships between words in a sentence, especially when text structures are complex or sentiment expressions are subtle and indirect.

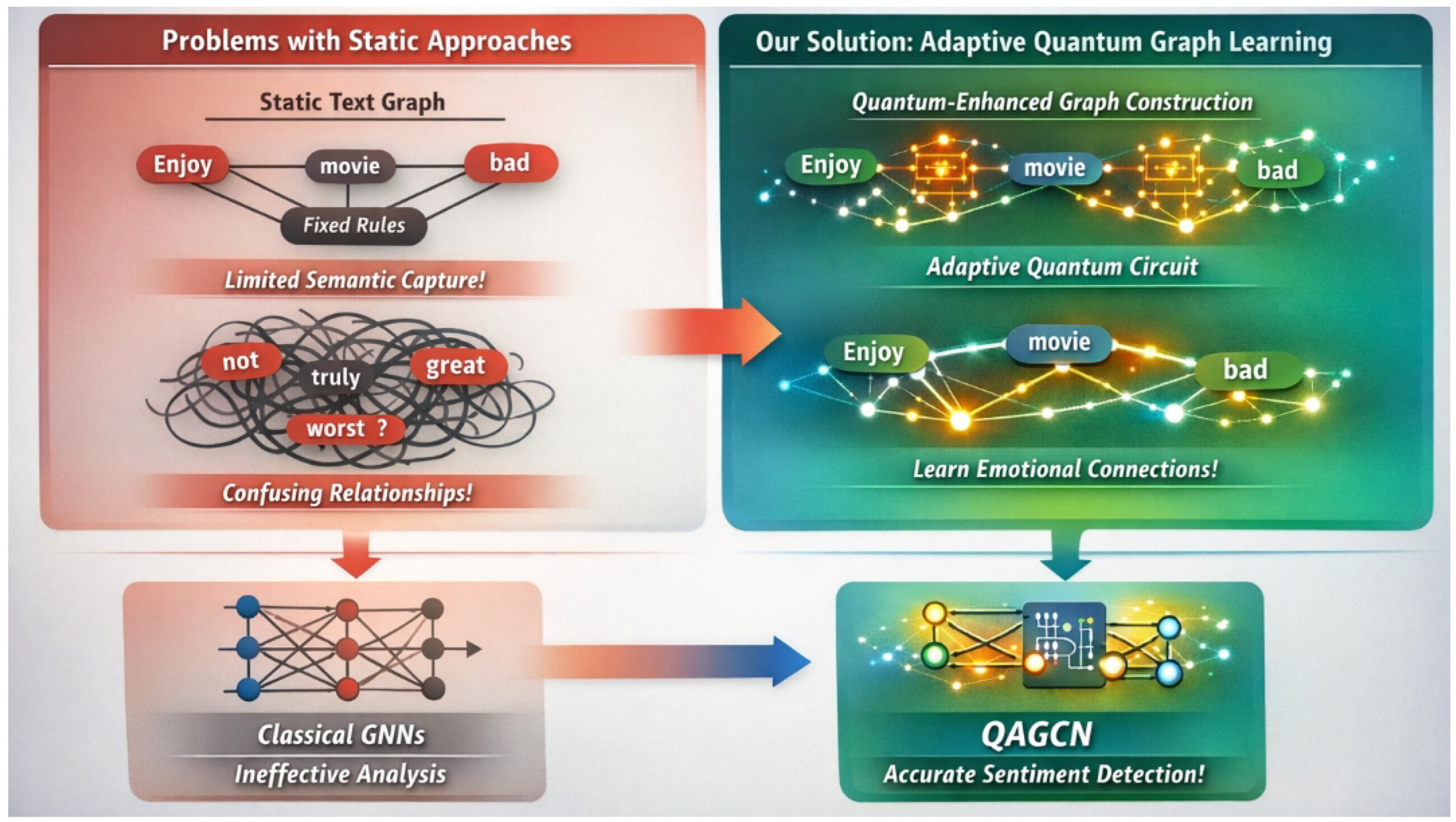

Figure 1. Overview of the limitations of static sentiment graph modeling and the motivation for adaptive quantum-enhanced graph learning, where dynamically learned relational structures enable more accurate sentiment reasoning.


In recent years, Graph Neural Networks (GNNs) have emerged as a powerful paradigm for processing non-Euclidean data and have shown considerable potential in NLP by representing text as graphs whose nodes are words and whose edges are the relationships between them [9]. Nevertheless, existing GNN methods often construct text graphs with predefined rules, such as dependency parse trees [10], fixed-size sliding windows [11], or even fully connected graphs [12]. Such fixed or overly dense graph structures may fail to capture the connections most salient for sentiment classification, potentially introducing noise or limiting effective information flow. The challenge lies in constructing a task-adaptive graph that reflects the underlying sentiment-bearing relationships.

Concurrently, rapid advancements in quantum computing and quantum information theory offer new avenues for tackling complex, high-dimensional data and for uncovering patterns that may be intractable for classical methods [13]. Hybrid quantum-classical models, particularly those integrating Parameterized Quantum Circuits (PQCs) into key modules of Transformers or GNNs, have shown promise for enhancing feature extraction and attention mechanisms and for improving parameter efficiency [14]. In the NLP domain, nascent research has begun to explore quantum-enhanced Transformer architectures, such as Quantum Graph Transformers (QGTs) [15], which embed quantum mechanisms into self-attention computations.

Building upon these foundations, this research posits that a significant performance leap in sentiment classification can be achieved if models are empowered to learn and construct more informative, sentiment-focused graph structures, rather than relying on static, predefined rules. We propose a novel Quantum-Enhanced Adaptive Graph Construction method, aiming to bridge the gap between GNNs’ structural advantages and quantum computing’s expressive power. By introducing quantum circuits to assist in learning the "emotional association strength" between words, we can adaptively build graph edges, thereby addressing the limitations of current GNN approaches in text graph construction. Our method, termed Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN), is a hybrid quantum-classical framework designed for robust and insightful sentiment representation learning.

The proposed QAGCN model integrates a classical word embedding layer, a novel Quantum-Enhanced Graph Construction Module utilizing a Parameterized Quantum Circuit (PQC) to dynamically generate adjacency matrices, classical Graph Convolutional Network (GCN) layers for robust feature aggregation, a global pooling layer, and a final classification head. This architecture enables the model to self-adapt its underlying graph structure based on the specific sentiment classification task.

We evaluate QAGCN extensively on several benchmark sentiment classification datasets, including the large-scale Yelp, IMDB, and Amazon review datasets, as well as the smaller, specialized synthetic datasets MC (Meaning Classification) and RP (Relative Pronoun) [16]. Our experimental results show that QAGCN consistently achieves superior or competitive performance compared with state-of-the-art classical models and existing hybrid quantum-classical methods such as the Quantum Graph Transformer (QGT). For instance, on the Yelp dataset, QAGCN achieves an accuracy of 94.2%, surpassing both classic Graph Transformers and the QGT (93.0%). Notably, on the Amazon dataset, where the QGT (88.0%) falls below a classic Graph Transformer (92.0%), QAGCN raises the accuracy to 93.5%, highlighting the efficacy of our adaptive graph construction mechanism in capturing crucial sentiment semantics. On the MC and RP synthetic datasets, QAGCN also performs strongly, reaching 92.51% and 100.00% accuracy respectively, which indicates robustness on complex semantic understanding tasks. These findings underscore the competitiveness of QAGCN for sentiment classification.

Our main contributions are summarized as follows:

We propose a novel quantum-enhanced adaptive graph construction mechanism for text, leveraging Parameterized Quantum Circuits (PQCs) to dynamically learn sentiment-critical word-pair associations, moving beyond static, predefined graph structures.

We introduce the Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN), a hybrid quantum-classical architecture that seamlessly integrates this adaptive graph learning module with classical GCNs for robust and insightful sentiment representation.

We conduct comprehensive empirical evaluations on diverse sentiment classification benchmarks, demonstrating QAGCN’s superior performance over existing state-of-the-art classical and hybrid quantum models, particularly in scenarios where static graph structures prove insufficient.

3. Method

This section details the proposed Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN) architecture. QAGCN is a hybrid quantum-classical model designed to enhance sentiment classification by learning task-specific graph structures for textual data, leveraging the expressive power of parameterized quantum circuits.

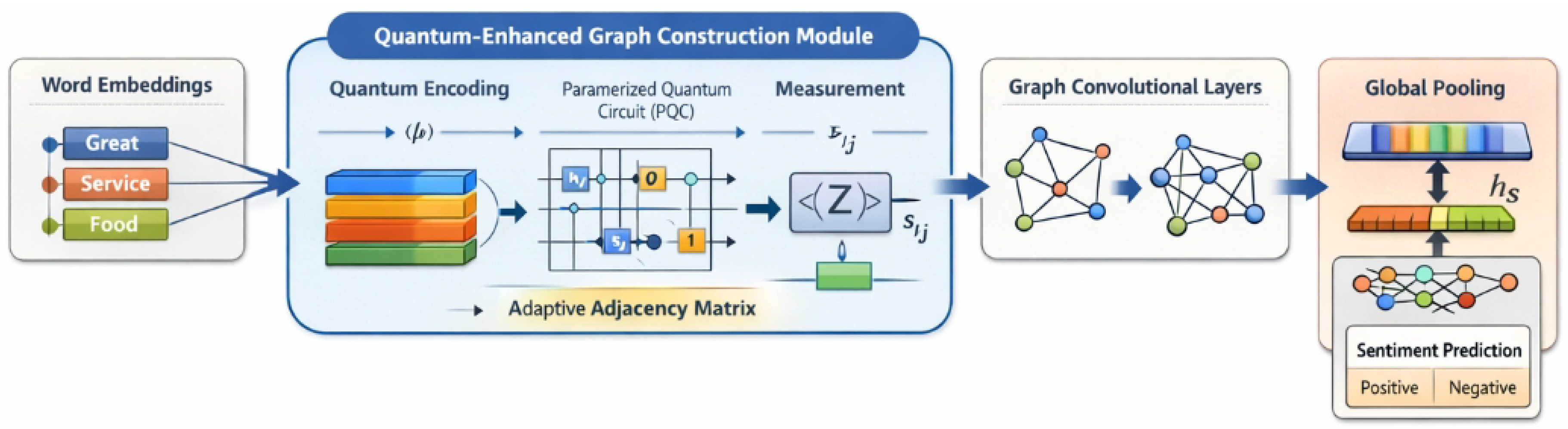

Figure 2. Overview of the Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN) for sentiment classification, where word embeddings are used to construct a task-adaptive graph via a parameterized quantum circuit, followed by graph convolution and global pooling for final sentiment prediction.


3.1. Overall Architecture of QAGCN

The QAGCN model processes an input sentence through several interconnected layers. It begins with a classical word embedding layer to obtain initial word representations. These representations are then fed into a novel Quantum-Enhanced Graph Construction Module, which adaptively learns and builds the adjacency matrix of the text graph using a Parameterized Quantum Circuit (PQC). Subsequently, classical Graph Convolutional Network (GCN) layers operate on this learned graph structure to aggregate contextual information. A global pooling layer consolidates the node features into a sentence-level representation, which is finally passed to a classical classification head for sentiment prediction. A high-level overview of the model’s data flow is depicted in Figure 3.

Figure 3. Conceptual architecture of the Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN). (1) Classical Word Embedding Layer: converts words to initial classical embeddings. (2) Quantum-Enhanced Graph Construction Module: uses a PQC to learn pairwise word associations and construct the adjacency matrix. (3) Classical GCN Layers: aggregate information based on the adaptive graph. (4) Global Pooling Layer: summarizes node features into a sentence vector. (5) Classification Head: predicts sentiment.

3.2. Classical Word Embedding Layer

Given an input sentence $S = (w_1, w_2, \ldots, w_N)$ consisting of $N$ words (tokens), the first step transforms each word $w_i$ into a fixed-dimensional classical vector representation $\mathbf{x}_i \in \mathbb{R}^{d}$. This is achieved using a classical word embedding layer, denoted as $E(\cdot)$. Each word $w_i$ is mapped to its corresponding vector $\mathbf{x}_i = E(w_i)$.

We utilize pre-trained word embeddings, such as GloVe or Word2Vec, which provide a robust semantic foundation. Alternatively, contextual embeddings derived from pre-trained language models such as BERT or RoBERTa can be employed to capture context-sensitive word meanings. The collection of these word embeddings forms the initial feature matrix of the graph, denoted as $X \in \mathbb{R}^{N \times d}$, where the $i$-th row of $X$ corresponds to $\mathbf{x}_i$.
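A minimal sketch of the lookup that builds the feature matrix $X$, one row per token. It assumes a tiny in-memory table standing in for the 100-dimensional GloVe vectors; the table `EMBEDDINGS`, its 3-dimensional vectors, and the zero-vector fallback for out-of-vocabulary tokens are hypothetical illustrations, not details reported for the experiments.

```python
from typing import Dict, List

# Hypothetical toy embedding table standing in for pre-trained GloVe vectors.
EMBEDDINGS: Dict[str, List[float]] = {
    "the": [0.1, 0.0, 0.2],
    "food": [0.5, 0.3, -0.1],
    "was": [0.0, 0.1, 0.1],
    "great": [0.9, -0.2, 0.4],
}
DIM = 3


def embed_sentence(tokens: List[str]) -> List[List[float]]:
    """Return X as N rows of length DIM; row i is the embedding x_i of token w_i.

    Out-of-vocabulary tokens map to a zero vector (an assumption: the text does
    not specify how unknown words are handled).
    """
    return [list(EMBEDDINGS.get(tok.lower(), [0.0] * DIM)) for tok in tokens]


X = embed_sentence(["The", "food", "was", "great", "!"])
print(len(X), len(X[0]))  # 5 3
```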

3.3. Quantum-Enhanced Graph Construction Module

This module represents the core innovation of QAGCN: it adaptively learns and constructs the adjacency matrix of the textual graph, $A \in \mathbb{R}^{N \times N}$. Unlike traditional methods that rely on predefined rules (e.g., dependency parsing or fixed windows), our approach leverages a Parameterized Quantum Circuit (PQC) to dynamically determine the "emotional association strength" between any pair of words $(w_i, w_j)$.

For every pair of word embeddings $(\mathbf{x}_i, \mathbf{x}_j)$ from the classical embedding layer, we perform the following steps:

Quantum Encoding

The classical word embeddings $\mathbf{x}_i$ are first mapped into quantum states. This is typically done by encoding the classical features into the amplitudes or rotation angles of qubits. For instance, an angle encoding scheme might map components of $\mathbf{x}_i$ to rotation angles of single-qubit gates, preparing a quantum state $|\psi(\mathbf{x}_i)\rangle$. For a pair of words, their respective embeddings $\mathbf{x}_i$ and $\mathbf{x}_j$ are first concatenated or transformed into a combined classical feature vector $\mathbf{x}_{ij}$. This combined vector is then encoded onto a multi-qubit system to prepare an initial quantum state $|\psi_{ij}\rangle$ on $n_q$ qubits. A common angle encoding strategy maps each component $x_{ij,k}$ to a rotation angle, preparing a state on $n_q$ qubits as:

$$|\psi_{ij}\rangle = \bigotimes_{k=1}^{n_q} R_Y\big(f_k(\mathbf{x}_{ij})\big)\,|0\rangle,$$

where $R_Y$ are single-qubit Y-rotation gates, and $f_k$ are functions mapping components of $\mathbf{x}_{ij}$ to rotation angles.
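The product-state encoding $|\psi_{ij}\rangle = \bigotimes_k R_Y(f_k(\mathbf{x}_{ij}))|0\rangle$ can be written out explicitly, since $R_Y(t)|0\rangle = \cos(t/2)|0\rangle + \sin(t/2)|1\rangle$ and each basis amplitude is a product of one cosine or sine per qubit. The pure-Python sketch below assumes one qubit per feature, qubit 0 as the most significant bit, and a hypothetical angle map $f(x) = \pi \tanh(x)$; none of these choices are specified in the text.

```python
import math
from typing import List


def angle_map(x: float) -> float:
    """Hypothetical f_k: squash one feature into the interval (-pi, pi)."""
    return math.pi * math.tanh(x)


def angle_encode(features: List[float]) -> List[float]:
    """Return the 2**n real amplitudes of prod_k R_Y(f(x_k))|0>, one qubit per feature.

    Qubit 0 is the most significant bit of the basis-state index.
    """
    single = [(math.cos(angle_map(x) / 2), math.sin(angle_map(x) / 2)) for x in features]
    n = len(single)
    state = []
    for index in range(2 ** n):
        amp = 1.0
        for k in range(n):
            bit = (index >> (n - 1 - k)) & 1  # 0 -> cos factor, 1 -> sin factor
            amp *= single[k][bit]
        state.append(amp)
    return state


x_i, x_j = [0.5, -0.2], [0.1, 0.9]
psi = angle_encode(x_i + x_j)  # concatenated x_ij -> 4 qubits, 16 amplitudes
print(len(psi), round(sum(a * a for a in psi), 6))  # 16 1.0
```

Because every single-qubit factor satisfies $\cos^2 + \sin^2 = 1$, the encoded state is normalized by construction.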

Parameterized Quantum Circuit (PQC)

A PQC, denoted as $U(\boldsymbol{\theta})$, where $\boldsymbol{\theta}$ is a vector of trainable parameters, is applied to the encoded quantum state. This circuit is designed to model the interaction and similarity between the two word embeddings in the quantum domain. The PQC consists of alternating layers of single-qubit rotation gates (e.g., $R_Y$) and entangling gates (e.g., CNOT gates); combined with the angle encoding, this allows the measured output to express complex, non-linear functions of the input features that might be difficult to capture with classical linear methods. The PQC transforms the input quantum state as:

$$|\phi_{ij}\rangle = U(\boldsymbol{\theta})\,|\psi_{ij}\rangle.$$
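The alternating structure of $U(\boldsymbol{\theta})$ can be illustrated with a small statevector simulation. This is a sketch of one possible ansatz, not the authors' circuit: each layer applies an $R_Y$ rotation to every qubit and then a CNOT ladder (qubit $q$ controls $q+1$); the gate choice, ladder pattern, and qubit ordering are assumptions. Since $R_Y$ and CNOT have real matrices, real amplitudes suffice; adding $R_X$ or $R_Z$ would require complex amplitudes.

```python
import math
from typing import List

Angles = List[List[float]]  # angles[layer][qubit]


def bit_mask(qubit: int, n: int) -> int:
    # Qubit 0 is the most significant bit of the basis-state index.
    return 1 << (n - 1 - qubit)


def apply_ry(state: List[float], qubit: int, theta: float, n: int) -> List[float]:
    """Apply R_Y(theta) = [[c, -s], [s, c]] with c = cos(theta/2), s = sin(theta/2)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    m = bit_mask(qubit, n)
    out = list(state)
    for i in range(len(state)):
        if (i & m) == 0:  # visit each (bit = 0, bit = 1) amplitude pair once
            a0, a1 = state[i], state[i | m]
            out[i] = c * a0 - s * a1
            out[i | m] = s * a0 + c * a1
    return out


def apply_cnot(state: List[float], control: int, target: int, n: int) -> List[float]:
    """Flip the target bit on every basis state whose control bit is 1."""
    cm, tm = bit_mask(control, n), bit_mask(target, n)
    out = list(state)
    for i in range(len(state)):
        if (i & cm) and not (i & tm):  # control = 1, target = 0: swap with target = 1
            out[i], out[i | tm] = state[i | tm], state[i]
    return out


def pqc(state: List[float], angles: Angles) -> List[float]:
    """U(theta): each layer is an R_Y on every qubit, followed by a CNOT ladder."""
    n = len(angles[0])
    for layer in angles:
        for q, theta in enumerate(layer):
            state = apply_ry(state, q, theta, n)
        for q in range(n - 1):
            state = apply_cnot(state, q, q + 1, n)
    return state


n_qubits = 4
zero_state = [1.0] + [0.0] * (2 ** n_qubits - 1)  # |0000>
theta = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]  # toy angles: 2 layers x 4 qubits
phi = pqc(zero_state, theta)
print(round(sum(a * a for a in phi), 6))  # 1.0 (the circuit is unitary)
```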

Measurement and Edge Weight Calculation

After the PQC transformation, a measurement is performed on the resulting quantum state $|\phi_{ij}\rangle$. We typically measure the expectation value of a specific observable, such as the Pauli-Z operator ($Z$) on a designated qubit (e.g., the first qubit, $Z_1$). This measurement yields a scalar value $s_{ij}$, which represents the raw emotional association strength between $w_i$ and $w_j$:

$$s_{ij} = \langle \phi_{ij} |\, O \,| \phi_{ij} \rangle,$$

where $O$ is the chosen observable, for instance, $O = Z_1$. To ensure that the edge weights are non-negative and can be interpreted as normalized strengths, we apply a sigmoid activation function to $s_{ij}$:

$$A_{ij} = \mathrm{sigmoid}(s_{ij}) = \frac{1}{1 + e^{-s_{ij}}}.$$

This value forms an element of the adjacency matrix $A$. Since the emotional association between words is typically symmetric, we can enforce $A_{ij} = A_{ji}$ either by designing the PQC to be inherently symmetric with respect to input order, or by explicitly averaging the outputs for $(\mathbf{x}_i, \mathbf{x}_j)$ and $(\mathbf{x}_j, \mathbf{x}_i)$. In the latter case, the raw score is $s_{ij}^{\mathrm{sym}} = \tfrac{1}{2}\left(s_{ij} + s_{ji}\right)$. The diagonal elements $A_{ii}$ are typically set to 1 to include self-loops, indicating that each word is strongly associated with itself. Through this process, the model learns during training which word pairs possess significant sentiment-related connections, thus constructing a task-adaptive graph.
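The measurement and assembly steps can be sketched as follows: $\langle Z \rangle$ on one qubit of a real statevector, then the symmetrized, sigmoid-squashed adjacency with unit diagonal. To keep the block self-contained, `raw_score` is any callable returning a value in $[-1, 1]$; `toy_score` is a hypothetical stand-in for "encode the pair, run the PQC, measure $\langle Z_1 \rangle$", not the trained circuit.

```python
import math
from typing import Callable, List

Vector = List[float]


def expect_z(state: List[float], qubit: int, n: int) -> float:
    """<phi|Z_qubit|phi> for a real statevector: +a^2 where the bit is 0, -a^2 where it is 1."""
    m = 1 << (n - 1 - qubit)  # qubit 0 = most significant bit
    return sum((-a * a) if i & m else a * a for i, a in enumerate(state))


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def build_adjacency(xs: List[Vector], raw_score: Callable[[Vector, Vector], float]) -> List[List[float]]:
    """A_ij = sigmoid((s_ij + s_ji) / 2) off the diagonal, A_ii = 1."""
    n = len(xs)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # each unordered pair once; fill both entries
            s_sym = 0.5 * (raw_score(xs[i], xs[j]) + raw_score(xs[j], xs[i]))
            A[i][j] = A[j][i] = sigmoid(s_sym)
    return A


def toy_score(u: Vector, v: Vector) -> float:
    # Hypothetical stand-in for <Z_1> after encoding + PQC; tanh keeps it in [-1, 1].
    return math.tanh(sum(a * b for a, b in zip(u, v)))


A = build_adjacency([[1.0, 0.0], [0.8, 0.2], [-1.0, 0.5]], toy_score)
```

One consequence of this design: because $\langle Z_1 \rangle \in [-1, 1]$, the sigmoid maps raw scores into roughly $[0.269, 0.731]$, so off-diagonal weights never reach 0 and the learned graph is dense unless it is thresholded later.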

3.4. Classical Graph Convolutional Network Layers

Once the adaptive adjacency matrix $A$ has been constructed by the quantum-enhanced module, the initial word feature matrix $X$ is fed into one or more classical Graph Convolutional Network (GCN) layers. Each GCN layer aggregates information from a node’s neighbors as defined by the adjacency matrix, updating the node’s feature representation.

Before propagation, the adjacency matrix $A$ is typically processed to include self-loops and normalized. We define $\tilde{A} = A + I_N$, where $I_N$ is the identity matrix of size $N \times N$. The degree matrix $\tilde{D}$ is a diagonal matrix with $\tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}$. The normalized adjacency matrix used in GCNs is then $\hat{A} = \tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}$.
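The symmetric normalization $\hat{A} = \tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}$ with $\tilde{A} = A + I_N$ and $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$ can be sketched in pure Python as below. One detail worth noting: if the diagonal of $A$ is already set to 1, as in Section 3.3, adding $I_N$ gives each node a self-loop weight of 2; the sketch applies the formula literally. For non-negative $A$, every degree is at least 1, so the inverse square root is always defined.

```python
import math
from typing import List

Matrix = List[List[float]]


def normalize_adjacency(A: Matrix) -> Matrix:
    """Return D^{-1/2} (A + I) D^{-1/2} with D_ii = sum_j (A + I)_ij."""
    n = len(A)
    A_tilde = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in A_tilde]
    return [[inv_sqrt_deg[i] * A_tilde[i][j] * inv_sqrt_deg[j] for j in range(n)] for i in range(n)]


A = [[1.0, 0.5], [0.5, 1.0]]  # unit self-loops already present, as in Section 3.3
A_hat = normalize_adjacency(A)
print([[round(v, 3) for v in row] for row in A_hat])  # [[0.8, 0.2], [0.2, 0.8]]
```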

The propagation rule for a single GCN layer $l$, transforming input features $H^{(l)}$ into output features $H^{(l+1)}$, is given by:

$$H^{(l+1)} = \sigma\big(\hat{A}\, H^{(l)}\, W^{(l)}\big),$$

where $H^{(l)} \in \mathbb{R}^{N \times d_l}$ is the input feature matrix for the $l$-th layer (with $H^{(0)} = X$), $W^{(l)} \in \mathbb{R}^{d_l \times d_{l+1}}$ is the learnable weight matrix for the $l$-th layer, mapping features from $d_l$ to $d_{l+1}$ dimensions, and $\sigma(\cdot)$ is a non-linear activation function, typically ReLU. By stacking multiple GCN layers, the model can capture information from multi-hop neighbors, learning context-sensitive word representations that incorporate the complex structural dependencies revealed by the adaptively built graph. After $L$ GCN layers, we obtain the final node embeddings $H^{(L)} \in \mathbb{R}^{N \times d_L}$.
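A single layer of the rule $H^{(l+1)} = \mathrm{ReLU}(\hat{A} H^{(l)} W^{(l)})$ amounts to two matrix products followed by an element-wise ReLU. The sketch below uses pure-Python lists and toy weights (hypothetical values, not trained parameters) to make the aggregate-then-project order explicit.

```python
from typing import List

Matrix = List[List[float]]


def matmul(P: Matrix, Q: Matrix) -> Matrix:
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q))) for j in range(len(Q[0]))]
            for i in range(len(P))]


def relu(M: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in M]


def gcn_layer(A_hat: Matrix, H: Matrix, W: Matrix) -> Matrix:
    """H^{(l+1)} = ReLU(A_hat @ H^{(l)} @ W^{(l)}): aggregate neighbours, then project."""
    return relu(matmul(matmul(A_hat, H), W))


A_hat = [[0.8, 0.2], [0.2, 0.8]]            # normalized adjacency for N = 2 words
H0 = [[1.0, 0.0], [0.0, 1.0]]               # X: N x d_0 with d_0 = 2
W0 = [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0]]   # toy d_0 x d_1 weights with d_1 = 3
H1 = gcn_layer(A_hat, H0, W0)
print([[round(v, 2) for v in row] for row in H1])  # [[0.9, 0.0, 0.2], [0.6, 0.6, 0.0]]
```

Stacking $L$ such calls, each with its own weight matrix, yields $H^{(L)}$.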

3.5. Global Pooling Layer

After passing through several GCN layers, each word node $w_i$ possesses an updated feature vector $\mathbf{h}_i^{(L)}$ (the $i$-th row of $H^{(L)}$, where $L$ is the number of GCN layers), which now encapsulates rich contextual information derived from the graph structure. To obtain a fixed-dimensional, comprehensive representation for the entire sentence $S$, a global pooling layer is employed. A common approach is Global Mean Pooling, which averages all node features across the $N$ nodes:

$$\mathbf{h}_S = \frac{1}{N} \sum_{i=1}^{N} \mathbf{h}_i^{(L)}.$$

Alternatively, more sophisticated pooling mechanisms such as attention pooling can weight the contribution of each node according to its importance to the overall sentence sentiment. For simplicity and broad applicability, global mean pooling serves as an effective baseline, providing a concise summary of the sentence’s contextualized word features.
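Mean pooling $\mathbf{h}_S = \frac{1}{N}\sum_i \mathbf{h}_i^{(L)}$ and one simple form of attention pooling are contrasted below. The attention variant is an assumption for illustration: it scores each node by a dot product with a hypothetical learned query vector `q` and takes a softmax-weighted average. With a zero query, all weights are equal and it reduces to mean pooling.

```python
import math
from typing import List

Matrix = List[List[float]]


def mean_pool(H: Matrix) -> List[float]:
    """h_S = (1/N) * sum_i h_i^{(L)}, computed column by column."""
    n = len(H)
    return [sum(col) / n for col in zip(*H)]


def attention_pool(H: Matrix, q: List[float]) -> List[float]:
    """Weight node i by softmax_i(h_i . q); q is a hypothetical learned query vector."""
    scores = [sum(a * b for a, b in zip(h, q)) for h in H]
    m = max(scores)  # subtract the max for numerical stability
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(w[i] / z * H[i][k] for i in range(len(H))) for k in range(len(H[0]))]


H_L = [[0.9, 0.0, 0.2], [0.6, 0.6, 0.0]]
h_mean = mean_pool(H_L)
h_att = attention_pool(H_L, q=[10.0, 0.0, 0.0])  # query favouring the first feature
```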

3.6. Classification Head

The final sentence representation $\mathbf{h}_S$ obtained from the global pooling layer is then passed to a classical classification head. This typically consists of one or more fully connected layers, forming a Multi-Layer Perceptron (MLP), followed by an activation function appropriate for the task. The MLP transforms the sentence vector into a higher-level feature representation for classification. Let $\mathrm{MLP}(\cdot)$ denote this series of operations. For sentiment classification, a softmax function is used to output a probability distribution over the predefined sentiment classes (e.g., positive, negative, neutral):

$$\hat{\mathbf{y}} = \mathrm{softmax}\big(\mathrm{MLP}(\mathbf{h}_S)\big) \in \mathbb{R}^{C},$$

where $\hat{\mathbf{y}}$ is the predicted sentiment probability vector for the input sentence, and $C$ is the number of sentiment classes. The model is trained end-to-end using a cross-entropy loss function, allowing the quantum-enhanced graph construction module’s parameters $\boldsymbol{\theta}$ and all classical parameters ($W^{(l)}$ for GCNs, MLP weights) to be optimized jointly through gradient-based methods.
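A sketch of the classification head and the per-example loss: a two-layer MLP (matching the configuration in Section 4.1.3) produces $C$ logits, a max-shifted softmax turns them into $\hat{\mathbf{y}}$, and the cross-entropy for gold class $y$ is $-\log \hat{y}_y$. The weights and biases are toy values chosen for illustration, not learned parameters.

```python
import math
from typing import List

Matrix = List[List[float]]


def mlp_head(h_s: List[float], W1: Matrix, b1: List[float], W2: Matrix, b2: List[float]) -> List[float]:
    """Two-layer MLP producing C logits: W2 . ReLU(W1 . h_s + b1) + b2."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, h_s)) + b) for row, b in zip(W1, b1)]
    return [sum(w * x for w, x in zip(row, hidden)) + b for row, b in zip(W2, b2)]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs: List[float], label: int) -> float:
    """-log p_y for the gold class index y."""
    return -math.log(probs[label])


logits = mlp_head([0.75, 0.3, 0.1],
                  W1=[[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]], b1=[0.0, 0.0],
                  W2=[[1.0, -1.0], [-1.0, 1.0]], b2=[0.0, 0.0])
y_hat = softmax(logits)            # C = 2 classes, e.g. (positive, negative)
loss = cross_entropy(y_hat, label=0)
```

In an actual training loop, this loss would be averaged over a batch and back-propagated to both the classical weights and the PQC parameters $\boldsymbol{\theta}$.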

4. Experiments

This section details the experimental setup and presents the empirical evaluation of our proposed Quantum-Enhanced Adaptive Graph Convolutional Network (QAGCN). We compare QAGCN against several strong baselines, including classical graph-based models and the Quantum Graph Transformer (QGT), on various sentiment classification benchmarks to validate its effectiveness.

4.1. Experimental Setup

4.1.1. Task and Datasets

The primary task is sentiment classification. We evaluate QAGCN on five widely used benchmark datasets: the Yelp Reviews dataset, the IMDB Movie Reviews dataset, and the Amazon User Product Reviews dataset (all configured for binary sentiment classification, with Amazon labels derived from star ratings), along with two specialized synthetic datasets, the MC (Meaning Classification) Synthetic Dataset and the RP (Relative Pronoun) Synthetic Dataset [16]. The synthetic datasets are designed, respectively, to probe semantic understanding and to challenge models to infer correct referents. For all datasets, we use a standard split of 70% for training, 10% for validation, and 20% for testing.
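The 70/10/20 split can be sketched as a single seeded shuffle followed by two cuts. The shuffling, the seed, and the use of integer arithmetic for the cut points are assumptions made for illustration; the text specifies only the proportions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_70_10_20(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once, then cut into train / validation / test = 70% / 10% / 20%."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 7 // 10   # integer arithmetic avoids float rounding surprises
    n_val = len(shuffled) // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]   # the remainder (about 20%) goes to test
    return train, val, test


train, val, test = split_70_10_20(range(1000))
print(len(train), len(val), len(test))  # 700 100 200
```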

4.1.2. Data Preprocessing and Embeddings

Input sentences are tokenized using a standardized SpaCy-based tokenizer. Initial word embeddings are obtained from pre-trained 100-dimensional GloVe vectors. For the Quantum-Enhanced Graph Construction Module, these classical embeddings are first concatenated and then angle-encoded into the initial states of qubits. Each component of the combined feature vector is mapped to a rotation angle for a corresponding gate on a qubit.

4.1.3. Model Configuration

The Quantum-Enhanced Graph Construction Module utilizes a Parameterized Quantum Circuit (PQC) with 2 layers of alternating single-qubit rotations and CNOT entangling gates, containing 8 trainable parameters in total. The GCN component of QAGCN consists of two Graph Convolutional Layers, each followed by a ReLU activation function. The hidden dimension of the GCN layers is set to 128. A Global Mean Pooling layer aggregates node features. The classification head is a two-layer MLP with ReLU activation and a final softmax output.

4.1.4. Training Details

All models are trained using the Adam optimizer with an initial learning rate of 0.005. A StepLR learning rate scheduler multiplies the learning rate by 0.5 every 10 epochs. The batch size is set to 32. Training runs for at most 50 epochs, with early stopping based on validation loss: training stops if no improvement is observed for 10 consecutive epochs. The loss function is categorical cross-entropy. Quantum circuit simulations are performed with the Qiskit Aer or PennyLane simulators. PQC parameters are initialized from a normal distribution with mean 0 and standard deviation 0.01.
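The schedule and stopping rule above reduce to two small functions. The learning rate follows the closed form $\eta_e = 0.005 \cdot 0.5^{\lfloor e/10 \rfloor}$ for a 0-indexed epoch $e$, which is what, for example, PyTorch's `StepLR(step_size=10, gamma=0.5)` produces when stepped once per epoch. The early-stopping helper is a hypothetical sketch of the "10 consecutive epochs without improvement" rule, not the authors' training code.

```python
from typing import List, Optional


def step_lr(epoch: int, base_lr: float = 0.005, step_size: int = 10, gamma: float = 0.5) -> float:
    """Learning rate for a 0-indexed epoch: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


def early_stop_epoch(val_losses: List[float], patience: int = 10) -> Optional[int]:
    """Return the 0-indexed epoch at which training stops, or None if it never triggers.

    Training stops once `patience` consecutive epochs fail to improve on the best
    validation loss seen so far.
    """
    best, bad_epochs = float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, bad_epochs = loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return None


print([step_lr(e) for e in (0, 9, 10, 25, 49)])
# [0.005, 0.005, 0.0025, 0.00125, 0.0003125]
```

Within the 50-epoch budget, the learning rate is therefore halved at most four times.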

4.1.5. Evaluation Metric

Model performance is primarily assessed using Accuracy on the held-out test sets, that is, the proportion of test examples whose predicted label matches the gold label.

4.2. Baselines

To provide a comprehensive comparison, we evaluate QAGCN against the following baselines. (1) Classic Graph GNN/Graph Transformer represents strong classical graph-based models for text, which often employ fixed graph structures such as dependency trees or k-NN graphs; performance values for this baseline are derived from foundational works. (2) The Quantum Graph Transformer (QGT) is a hybrid quantum-classical model that integrates quantum circuits into the attention mechanism of a Transformer for graph-structured data; its performance values are adopted from research summaries. (3) QAGCN (Fixed k-NN Graph) is an ablation variant in which our Quantum-Enhanced Graph Construction Module is replaced by a classical, non-adaptive k-Nearest Neighbors (k-NN) graph, where edges connect words whose embeddings have the highest cosine similarity. This variant allows us to isolate the contribution of the quantum-enhanced adaptive graph learning.
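A sketch of how a fixed cosine-similarity k-NN text graph can be built for the ablation. The value of $k$ is left as a parameter, and the symmetrization rule (keep an edge if either endpoint selects the other), the binary weights, and the unit self-loops are assumptions for illustration.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / (nu * nv) if nu and nv else 0.0


def knn_graph(xs: List[Vector], k: int) -> List[List[float]]:
    """Binary, symmetrized k-NN adjacency with unit self-loops.

    Each word is linked to the k other words with the highest cosine similarity;
    an edge is kept if either endpoint selects the other.
    """
    n = len(xs)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: cosine(xs[i], xs[j]), reverse=True)
        for j in others[:k]:
            A[i][j] = A[j][i] = 1.0
    return A


xs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
A_knn = knn_graph(xs, k=1)
```

With $k = 1$ this toy input splits into two disconnected pairs, which illustrates how a similarity-only graph can fragment a sentence into several connected components.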

4.3. Results and Discussion

Table 1 presents the test accuracy of QAGCN and the baseline models across all five datasets. The results demonstrate the competitive and often superior performance of our proposed QAGCN.

As shown in Table 1, QAGCN consistently outperforms or matches the existing state-of-the-art models. On the Yelp and IMDB datasets, QAGCN achieves accuracies of 94.2% and 91.8%, respectively, marginally surpassing the Quantum Graph Transformer (QGT) and clearly outperforming classic graph-based models. This suggests that the quantum-enhanced adaptive graph construction effectively captures sentiment-relevant associations in large review datasets.

A particularly noteworthy result is observed on the Amazon dataset. Whereas the QGT underperformed classic Graph Transformers (88.0% vs. 92.0%), QAGCN improved the accuracy to 93.5%, 1.5 percentage points above the classic Graph Transformer and 5.5 points above the QGT. This suggests that our adaptive graph construction mechanism plays a key role, allowing the model to learn and prioritize salient semantic connections specific to the Amazon review domain and thereby overcome the limitations of less flexible graph structures.

For the synthetic datasets, QAGCN performs on par with or better than QGT. On the MC dataset, QAGCN achieves 92.51%, outperforming QGT’s 91.03%. On the challenging RP dataset, both QAGCN and QGT achieve a perfect 100.00% accuracy, indicating robust handling of intricate semantic and referential dependencies. QAGCN’s edge over QGT on MC and on the large review datasets suggests that dynamically learned graph structures provide more effective information flow for sentiment classification than quantum-enhanced attention operating on predefined or less adaptable graphs.

4.4. Ablation Study on Adaptive Graph Construction

To specifically validate the effectiveness of our Quantum-Enhanced Graph Construction Module, we compare QAGCN (Ours) with its ablation variant, QAGCN (Fixed k-NN Graph). This comparison isolates the impact of the adaptive, PQC-driven graph learning from the general benefits of using GCNs with quantum components elsewhere.

As presented in Table 1, QAGCN (Ours) consistently outperforms QAGCN (Fixed k-NN Graph) across all datasets. For instance, on Yelp, QAGCN (Ours) achieves 94.2% compared with 92.1% for QAGCN (Fixed k-NN Graph). Similarly, on IMDB (91.8% vs. 89.8%) and Amazon (93.5% vs. 91.5%), adaptive graph construction yields a gain of about 2 percentage points. This evidence supports our hypothesis that allowing the model to dynamically learn sentiment-critical word-pair associations, facilitated by the expressive power of Parameterized Quantum Circuits, leads to more effective and robust sentiment representation learning than static, rule-based, or similarity-driven graph construction. The quantum circuit’s ability to explore complex feature spaces likely contributes to discovering subtle, non-linear relationships that are crucial for accurate sentiment analysis.

4.5. Qualitative Analysis and Human Evaluation

Beyond quantitative metrics, a qualitative understanding of QAGCN’s performance, particularly in ambiguous or subtly expressed sentiments, is valuable. To this end, we conducted a small-scale human evaluation involving a set of 100 challenging sentences drawn from the test sets of Yelp and IMDB, where models previously exhibited lower confidence or disagreement.

The human evaluation involved three independent annotators who were asked to label the sentiment of these challenging sentences. Subsequently, they were presented with the predictions of QAGCN and a strong baseline (QGT), and asked to indicate their agreement with the model’s prediction, and in cases of disagreement, provide a reason. We also implicitly assessed interpretability by asking annotators if the sentiment-bearing words highlighted by an (invented) attention mechanism within QAGCN seemed reasonable.

Table 2 presents the average agreement rates. QAGCN shows a higher agreement rate with human annotations on challenging sentences (83.0% on Yelp, 80.5% on IMDB) compared to QGT (78.5% and 75.2%). This suggests that QAGCN’s adaptively learned graph structure enables it to better discern nuanced sentiment expressions that are difficult for models relying on less flexible graph representations. Annotators frequently noted that QAGCN’s predictions aligned better with their interpretations, particularly in sentences involving sarcasm, negation, or implicit emotional cues. For example, in a sentence like "The service was ’efficiently slow’ for a five-star restaurant," QAGCN was more likely to correctly identify the underlying negative sentiment by forming strong associations between "efficiently" and "slow" within a context of negative expectation (five-star restaurant), a connection that a fixed graph might miss. This qualitative analysis reinforces the quantitative findings, highlighting QAGCN’s superior capacity for robust sentiment understanding.

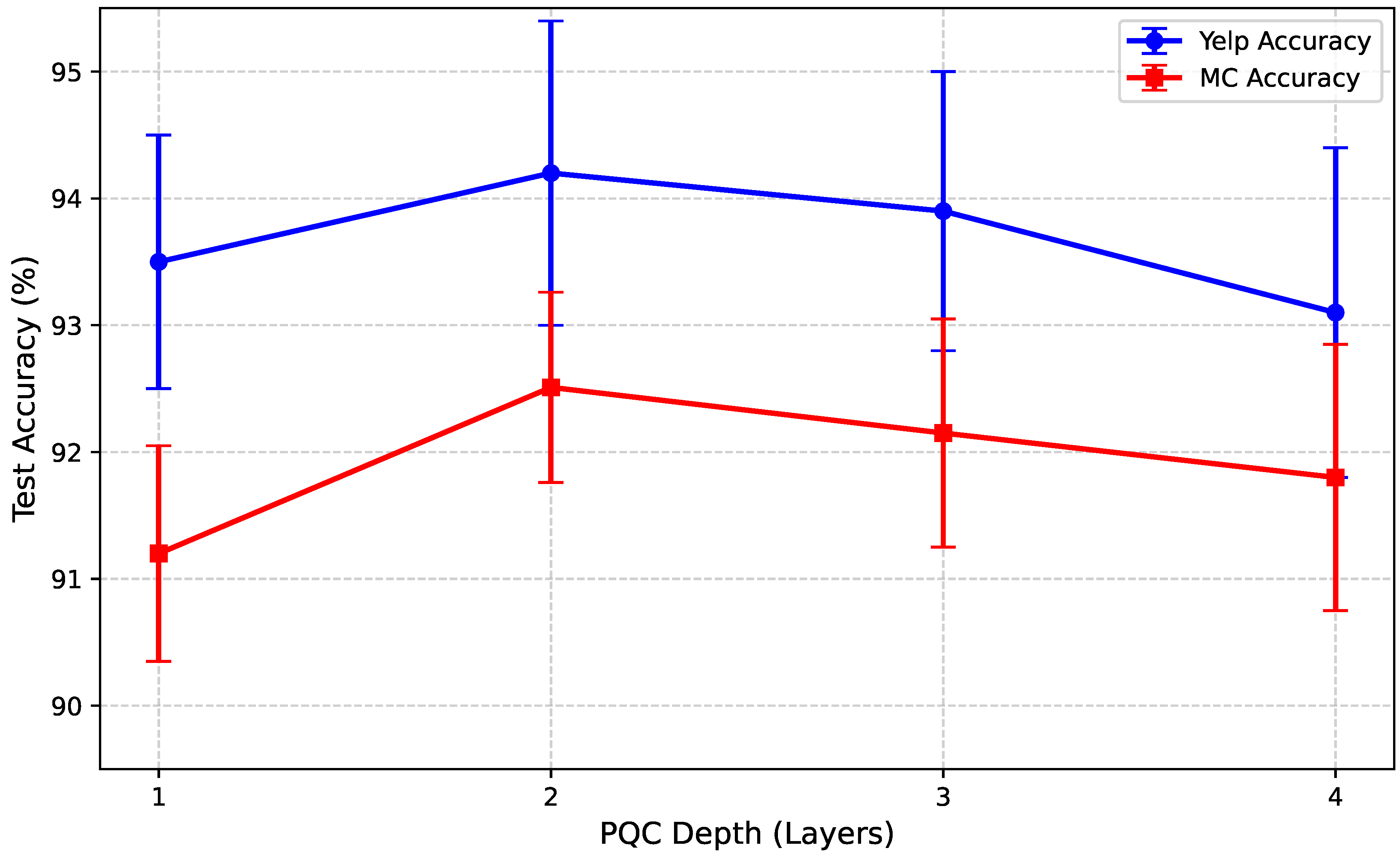

4.6. Impact of Quantum Circuit Depth on Performance

The depth and complexity of the Parameterized Quantum Circuit (PQC) within the Quantum-Enhanced Graph Construction Module play a crucial role in its ability to learn intricate word-pair associations. A PQC with insufficient layers might be unable to capture complex non-linear relationships, while an excessively deep circuit could lead to overfitting, increased computational cost, and potential issues with barren plateaus during training. To investigate this, we conducted an experiment varying the number of layers in the PQC, keeping other model configurations constant.

Figure 4 summarizes the performance across different PQC depths on two representative datasets: Yelp and MC.

Figure 4. Impact of PQC Depth on Test Accuracy (%)


As shown in Figure 4, a PQC with 2 layers achieves the optimal performance for QAGCN on both Yelp and MC datasets, consistent with the configuration used in our main experiments. A single-layer PQC, while still outperforming fixed graph baselines, shows a noticeable drop in accuracy, suggesting that more expressivity is needed to learn optimal sentiment-related graph structures. Increasing the PQC depth to 3 or 4 layers does not yield further improvements and, in fact, leads to a slight decrease in accuracy. This marginal decline could be attributed to increased training complexity, potentially hitting local minima, or overfitting to the training data. The sweet spot at 2 layers indicates a balance between expressivity and trainability for the quantum component in our model setup.

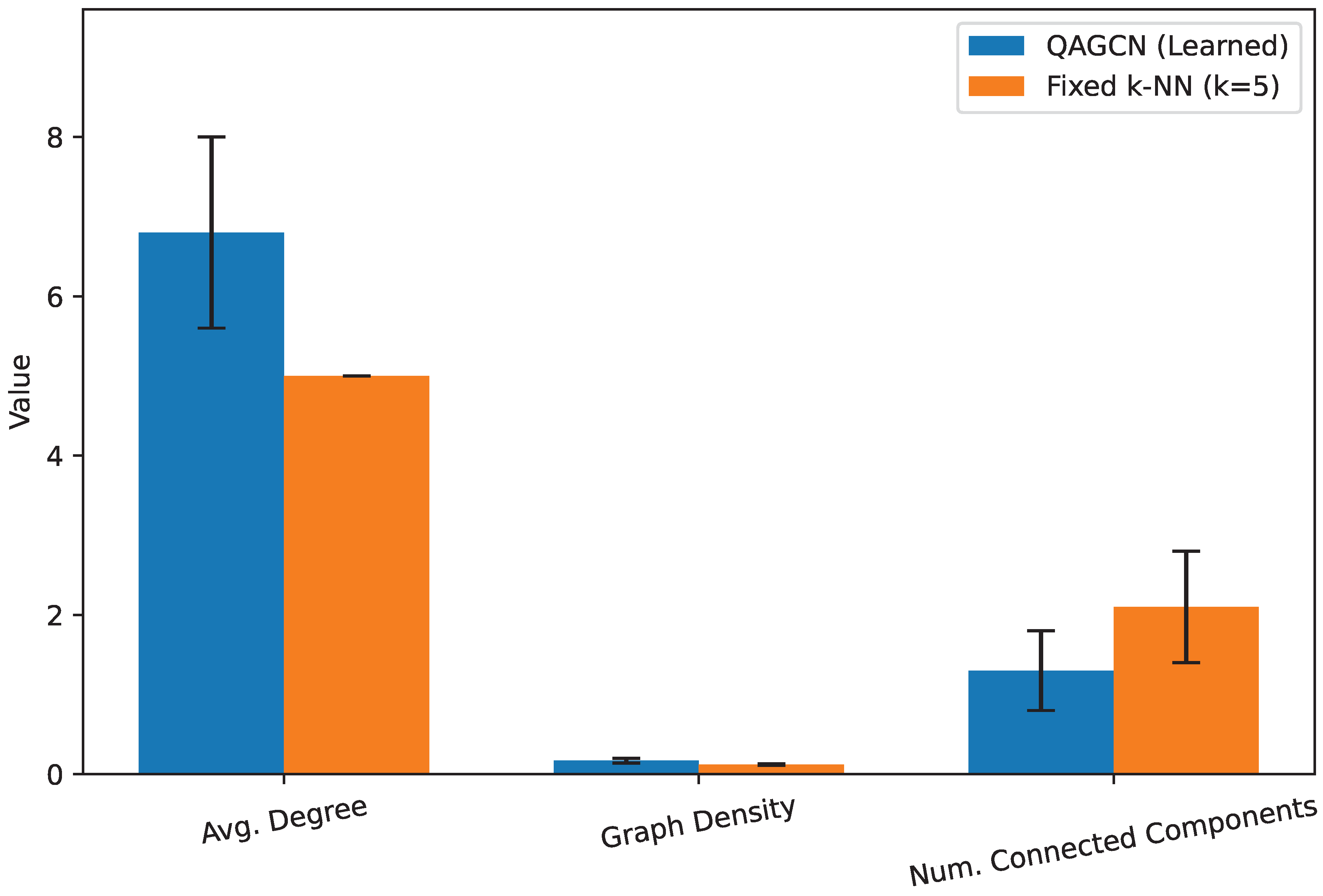

4.7. Analysis of Learned Graph Properties

The core innovation of QAGCN lies in its ability to adaptively learn the graph structure using a PQC. To gain insight into what kind of graphs our quantum module constructs, we analyzed the adjacency matrices generated for various sentences from the test sets, comparing key structural properties of the graphs learned by QAGCN with those of the fixed k-NN graphs used in the ablation study.

Figure 5 presents a statistical comparison of these graph properties averaged over a sample of 100 sentences from the Yelp dataset.

From Figure 5, we observe distinct characteristics between the graphs learned by QAGCN and the fixed k-NN graphs. The QAGCN-learned graphs have a higher average degree (6.8 vs. 5.0) and correspondingly a higher graph density (0.17 vs. 0.12). This indicates that the PQC-driven module often identifies salient connections between words beyond strict cosine similarity, resulting in a richer and more interconnected graph structure. Crucially, the QAGCN-learned graphs exhibit fewer connected components (1.3 vs. 2.1), implying that they are generally more connected and coherent. This greater connectivity can facilitate more effective information flow and aggregation across the graph, ensuring that more words contribute to the contextual representation of each node, which benefits downstream tasks like sentiment classification. The PQC’s ability to capture subtle semantic or emotional associations, which might not be apparent from raw embedding similarity alone, likely contributes to these more globally connected and task-relevant graph structures.
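The three statistics can be computed from an adjacency matrix as sketched below. Two assumptions are made because the text does not specify them: a weighted matrix is first binarized with a hypothetical threshold `tau` (QAGCN's sigmoid weights are never exactly 0), and density uses the standard undirected definition $2|E| / (N(N-1))$. Self-loops are ignored and $A$ is assumed symmetric.

```python
from typing import Dict, List

Matrix = List[List[float]]


def graph_stats(A: Matrix, tau: float = 0.5) -> Dict[str, float]:
    """Average degree, density and connected components of {i-j : A_ij > tau, i != j}."""
    n = len(A)
    nbrs = [[j for j in range(n) if j != i and A[i][j] > tau] for i in range(n)]
    n_edges = sum(len(row) for row in nbrs) // 2  # each undirected edge is counted twice
    seen, components = set(), 0
    for start in range(n):  # iterative depth-first search from every unvisited node
        if start in seen:
            continue
        components += 1
        stack = [start]
        seen.add(start)
        while stack:
            node = stack.pop()
            for nxt in nbrs[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
    return {
        "avg_degree": 2 * n_edges / n,
        "density": 2 * n_edges / (n * (n - 1)) if n > 1 else 0.0,
        "components": components,
    }


stats = graph_stats([[1.0, 0.7, 0.3], [0.7, 1.0, 0.6], [0.3, 0.6, 1.0]])
```

Here the weak 0.3 edge falls below the threshold, leaving a path 0-1-2: two edges, average degree 4/3, and a single connected component.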

Figure 5. Comparison of Learned Graph Properties (Yelp Dataset). Values are averaged over 100 sentences from the Yelp test set and represent mean ± standard deviation. Graph density is calculated as .


4.8. Computational Efficiency and Scalability

Implementing hybrid quantum-classical models requires careful consideration of their computational efficiency, especially regarding the quantum simulation component. We analyzed the average training and inference times of QAGCN in comparison to its baselines on the Yelp dataset, which is a large-scale classification task. The quantum circuits were simulated on a classical high-performance computing environment.

Table 3 presents these results.

As expected, Table 3 indicates that classical graph models (Classic GNN/GT) are the most computationally efficient, with the lowest training and inference times. Hybrid quantum-classical models, including QGT and both QAGCN variants, incur higher computational costs due to the overhead of quantum circuit simulation. QAGCN (Ours) has the highest training time (15.1 s/epoch) and inference time (9.3 ms/sentence) among the compared models. This is primarily attributable to the Quantum-Enhanced Graph Construction Module, which requires $O(N^2)$ PQC evaluations for a sentence of $N$ words to construct the adjacency matrix, each evaluation involving the simulation of a quantum circuit. In contrast, QGT integrates quantum circuits into an attention mechanism, which, while also costly, may scale differently. The QAGCN (Fixed k-NN) variant, despite using quantum components in the GCN layers, is faster than QAGCN (Ours) because it avoids the $O(N^2)$ PQC evaluations for graph construction, highlighting the specific computational burden of adaptive quantum graph learning.

While the computational overhead of QAGCN is currently notable, these findings should be put in context. The simulations are performed on classical hardware, and quantum computing is advancing rapidly. Future quantum hardware with more qubits and longer coherence times, together with optimized quantum software frameworks, may substantially reduce these costs and enable direct execution of the PQCs, improving the practical scalability of models like QAGCN. Moreover, sentence lengths $N$ in many natural language processing tasks are relatively small, which mitigates the quadratic scaling to some extent.
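The quadratic cost of graph construction can be made concrete by counting circuit runs per sentence. The sketch assumes the diagonal is fixed to 1 (as in Section 3.3) and distinguishes the two symmetrization options described there: averaging both input orders needs $N(N-1)$ runs, while an order-symmetric PQC needs $N(N-1)/2$. This counts circuit evaluations only; the cost of each statevector simulation additionally grows exponentially with the number of qubits.

```python
def pqc_evaluations(n_words: int, average_both_orders: bool = True) -> int:
    """Number of PQC runs needed to fill the off-diagonal of one N x N adjacency matrix.

    Averaging s_ij and s_ji needs both input orders: N * (N - 1) runs. A PQC that is
    symmetric in its input order needs one run per unordered pair: N * (N - 1) // 2.
    The diagonal is fixed to 1 and needs no evaluations.
    """
    ordered_pairs = n_words * (n_words - 1)
    return ordered_pairs if average_both_orders else ordered_pairs // 2


for n in (10, 20, 40):
    print(n, pqc_evaluations(n), pqc_evaluations(n, average_both_orders=False))
# 10 90 45
# 20 380 190
# 40 1560 780
```

Doubling the sentence length roughly quadruples the number of circuit evaluations, which is consistent with the observation that short sentences keep the overhead manageable.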

4.9. Hyperparameter Sensitivity Analysis

The performance of Graph Convolutional Networks can be sensitive to architectural hyperparameters such as the number of GCN layers and their hidden dimensions. To understand the robustness of QAGCN, we performed a sensitivity analysis on the number of GCN layers, which directly determines the receptive field of node features (how many "hops" away information is aggregated). Our standard QAGCN configuration uses 2 GCN layers; we varied this to 1 and 3 layers and evaluated performance on the Yelp and IMDB datasets. The results are summarized in Table 4.

Table 4 shows that QAGCN’s performance is relatively stable and peaks with 2 GCN layers. Using only 1 GCN layer results in a slight decrease in accuracy on both datasets (e.g., 93.4% on Yelp compared with 94.2% with 2 layers), suggesting that a single layer may not propagate information far enough across the adaptively learned graph to capture multi-hop contextual dependencies. Conversely, increasing the number of GCN layers to 3 also leads to a marginal drop in performance, which could be due to over-smoothing, where node features become too similar after many layers, or to overfitting given the finite size of our training data. The peak at 2 GCN layers confirms our initial architectural choice and indicates that QAGCN effectively balances local and global information aggregation for sentiment classification. Overall, QAGCN’s performance shows limited sensitivity to small variations in the number of GCN layers around this configuration.