Submitted: 17 December 2025

Posted: 18 December 2025

Abstract

Keywords:

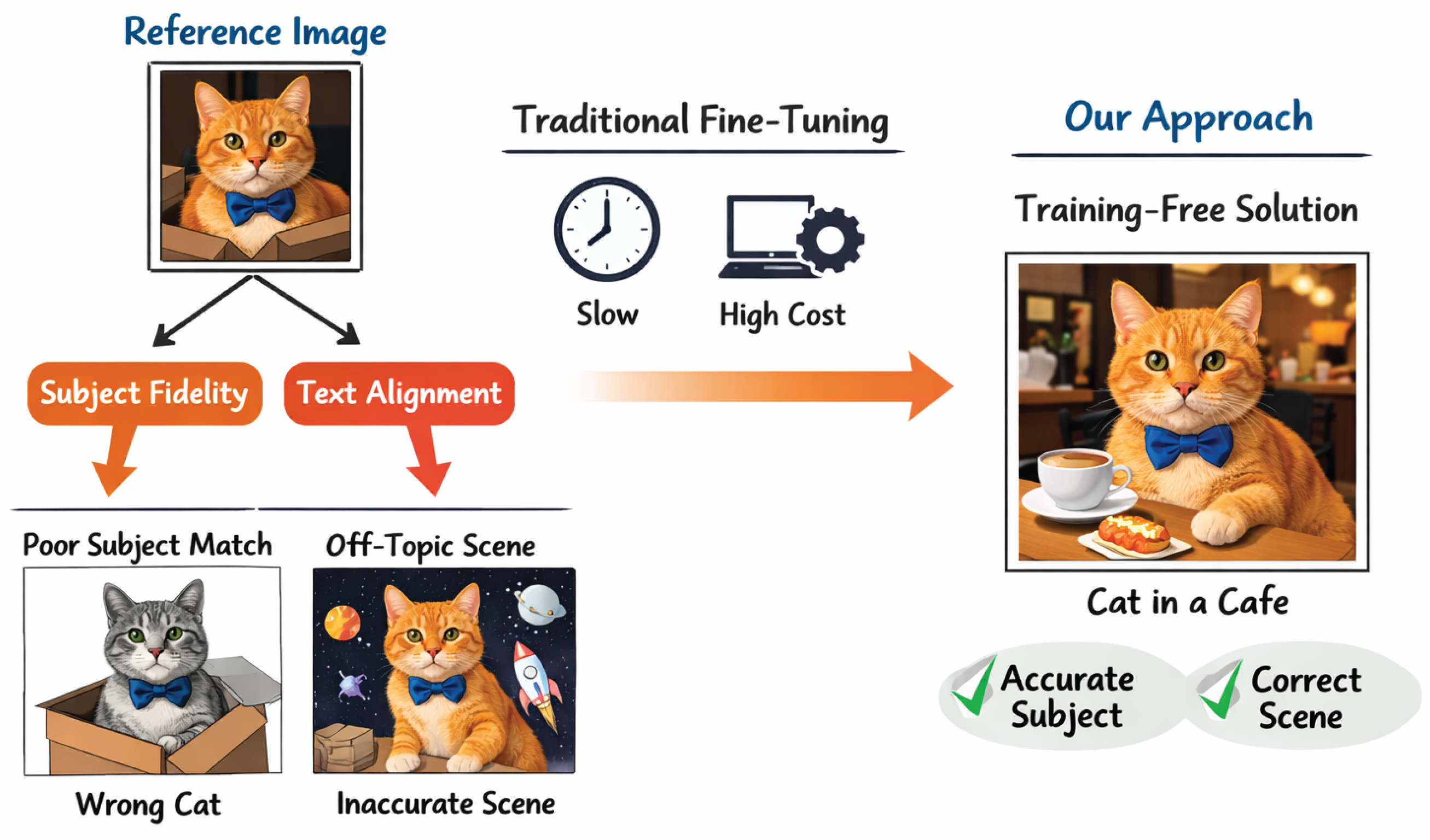

1. Introduction

- We introduce Adaptive Contextual Feature Grafting (ACFG), a mechanism that uses context-aware attention fusion to integrate subject features dynamically, improving the naturalness and contextual consistency of grafted subjects in new scenes.

- We propose Hierarchical Structure-Aware Initialization (HSAI), a multi-stage initialization strategy that combines multi-scale structural alignment during latent inversion with an adaptive dropout schedule during diffusion, preserving both global and fine-grained subject structure. HSAI builds on insights from prior image editing and generation techniques [12,28].

- We demonstrate that ContextualGraftor sets a new state of the art for training-free subject-driven text-to-image generation, achieving superior subject fidelity and text alignment across diverse benchmarks while remaining computationally competitive.
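
The contributions above name two ingredients, context-weighted fusion of subject features and an adaptive dropout schedule for reference injection, without giving formulas in this section. The sketch below is a hypothetical illustration only and should not be read as the authors' formulation: `dropout_rate`, `inject_reference`, the cosine ramp, its direction (more dropout at later steps), and the per-feature `context_weights` are all assumptions introduced for the example.

```python
import math
import random


def dropout_rate(step: int, num_steps: int,
                 p_start: float = 0.0, p_end: float = 0.5) -> float:
    """Hypothetical schedule: cosine ramp from p_start to p_end over the steps.

    The shape and endpoints are assumptions, not values from the paper.
    """
    if num_steps <= 1:
        return p_end
    t = step / (num_steps - 1)  # normalized progress in [0, 1]
    return p_start + (p_end - p_start) * 0.5 * (1.0 - math.cos(math.pi * t))


def inject_reference(scene, reference, context_weights,
                     step: int, num_steps: int, rng: random.Random):
    """Blend reference features into scene features, one scalar at a time.

    Each reference feature is dropped with the scheduled probability;
    surviving ones are mixed in with a context weight w in [0, 1].
    """
    p = dropout_rate(step, num_steps)
    fused = []
    for s, r, w in zip(scene, reference, context_weights):
        if rng.random() < p:
            fused.append(s)  # reference feature dropped: keep scene feature
        else:
            fused.append((1.0 - w) * s + w * r)  # convex blend
    return fused


if __name__ == "__main__":
    rng = random.Random(0)
    scene = [0.0, 0.0, 0.0, 0.0]
    reference = [1.0, 1.0, 1.0, 1.0]
    weights = [1.0, 0.5, 0.25, 0.0]
    for step in (0, 5, 9):
        print(step, round(dropout_rate(step, 10), 3),
              inject_reference(scene, reference, weights, step, 10, rng))
```

In an actual attention-based model the weights would presumably come from attention scores rather than being passed in by hand; here they are plain inputs so that the blending and the schedule can be inspected separately.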

2. Related Work

2.1. Subject-Driven Text-to-Image Generation

2.2. Diffusion Model Architectures and Image Manipulation Techniques

3. Method

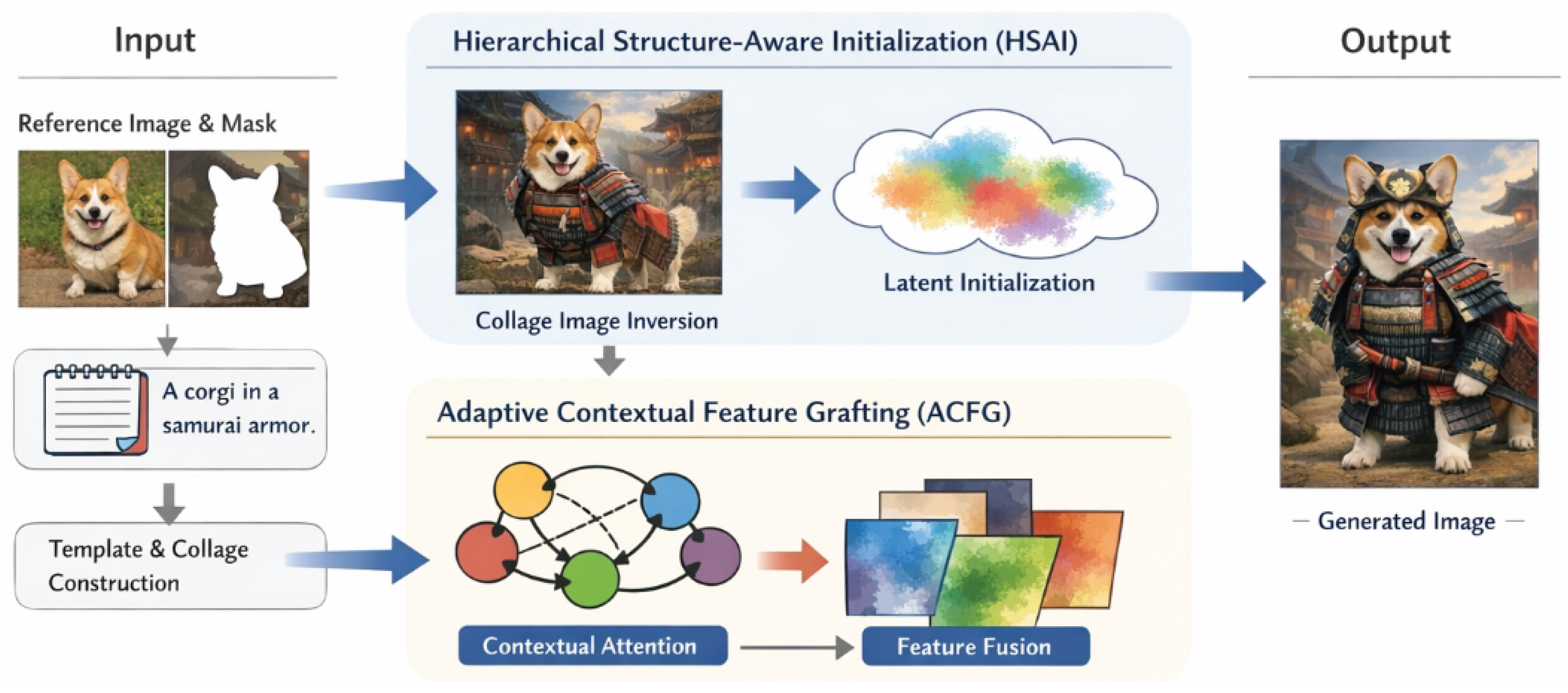

3.1. Overall Framework Architecture

3.1.1. Template Generation and Collage Construction

3.1.2. Hierarchical Structure-Aware Initialization (HSAI)

3.1.3. Adaptive Contextual Feature Grafting (ACFG)

3.1.4. Image Generation

3.2. Adaptive Contextual Feature Grafting (ACFG)

3.3. Hierarchical Structure-Aware Initialization (HSAI)

3.3.1. Multi-Scale Structural Alignment during Latent Inversion

3.3.2. Adaptive Dropout for Reference Feature Injection

3.4. Training-Free Nature

3.5. Data Preprocessing for Reference Subjects

4. Experiments

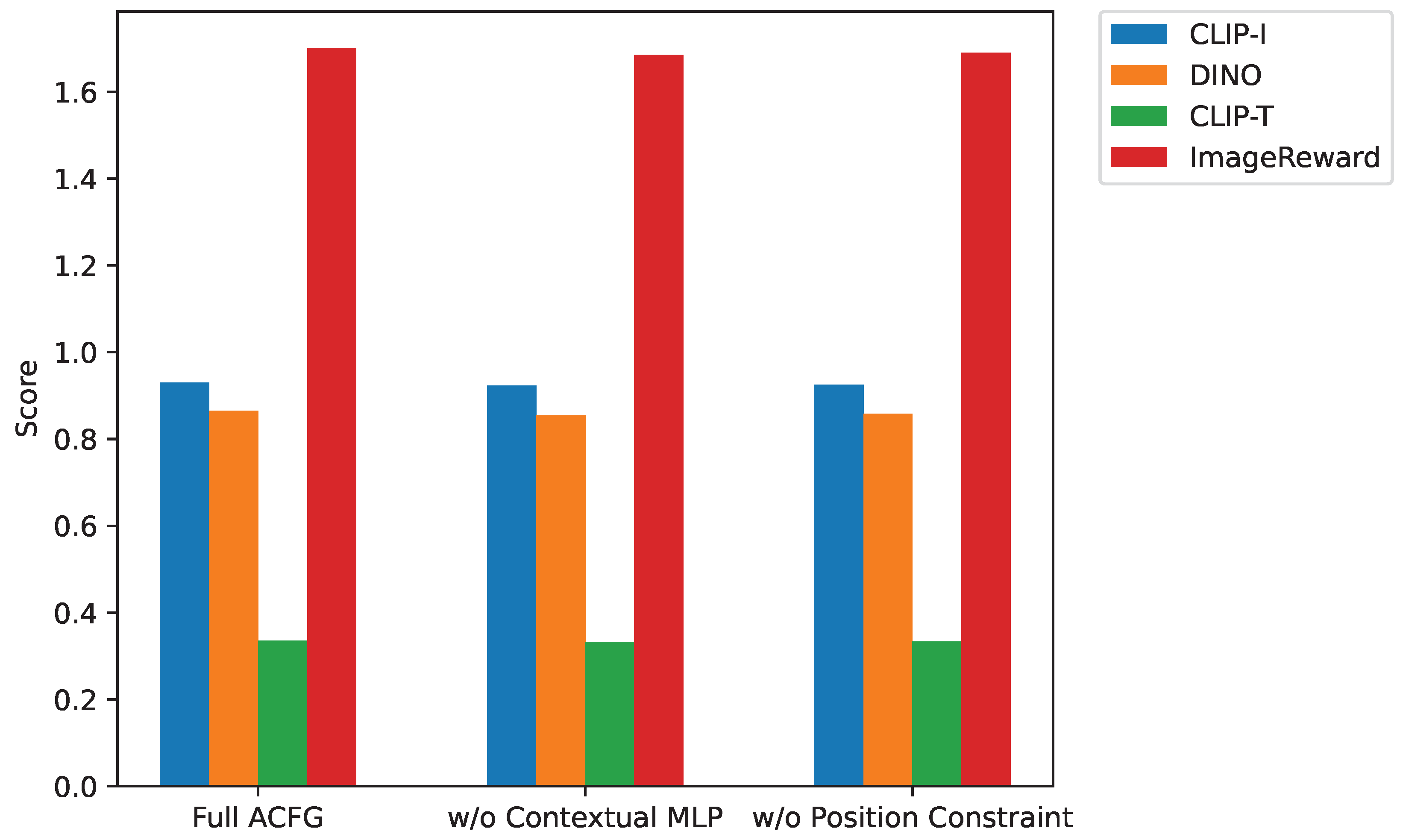

4.1. Analysis of Adaptive Contextual Feature Grafting (ACFG)

4.2. Analysis of Hierarchical Structure-Aware Initialization (HSAI)

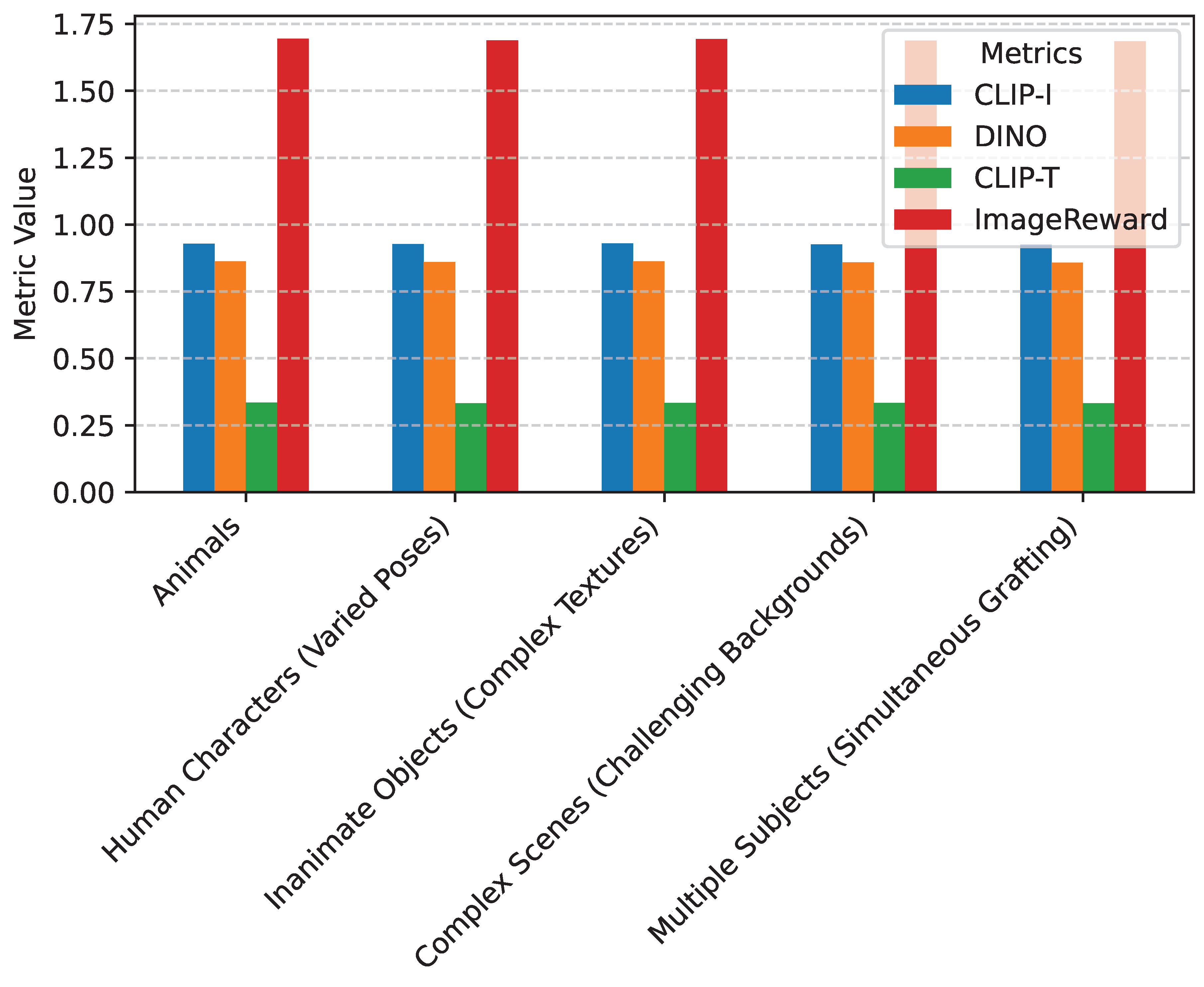

4.3. Robustness and Generalization to Diverse Scenarios

5. Conclusion

References

- Yang Xu, Yiheng Xu, Tengchao Lv, Lei Cui, Furu Wei, Guoxin Wang, Yijuan Lu, Dinei Florencio, Cha Zhang, Wanxiang Che, Min Zhang, and Lidong Zhou. LayoutLMv2: Multi-modal pre-training for visually-rich document understanding. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 2579–2591. Association for Computational Linguistics, 2021.

- Yucheng Zhou, Xiubo Geng, Tao Shen, Chongyang Tao, Guodong Long, Jian-Guang Lou, and Jianbing Shen. Thread of thought unraveling chaotic contexts. arXiv preprint arXiv:2311.08734, 2023.

- Yucheng Zhou, Jianbing Shen, and Yu Cheng. Weak to strong generalization for large language models with multi-capabilities. In The Thirteenth International Conference on Learning Representations, 2025.

- Mingxuan Du, Yutian Zeng, Ruichen He, and Zihan Dong. Multimodal AI and agent systems for biology: Perception, reasoning, generation, and automation. 2025.

- Liancheng Zheng, Zhen Tian, Yangfan He, Shuo Liu, Huilin Chen, Fujiang Yuan, and Yanhong Peng. Enhanced mean field game for interactive decision-making with varied stylish multi-vehicles. arXiv preprint arXiv:2509.00981, 2025.

- Zhihao Lin, Zhen Tian, Jianglin Lan, Dezong Zhao, and Chongfeng Wei. Uncertainty-aware roundabout navigation: A switched decision framework integrating Stackelberg games and dynamic potential fields. IEEE Transactions on Vehicular Technology, pages 1–13, 2025.

- Sichong Huang et al. AI-driven early warning systems for supply chain risk detection: A machine learning approach. Academic Journal of Computing & Information Science, 8(9):92–107, 2025.

- Sichong Huang et al. Real-time adaptive dispatch algorithm for dynamic vehicle routing with time-varying demand. Academic Journal of Computing & Information Science, 8(9):108–118, 2025.

- Huijun Zhou, Jingzhi Wang, and Xuehao Cui. Causal effect of immune cells, metabolites, cathepsins, and vitamin therapy in diabetic retinopathy: a Mendelian randomization and cross-sectional study. Frontiers in Immunology, 15:1443236, 2024.

- Chen Zhou, Bing Wang, Zihan Zhou, Tong Wang, Xuehao Cui, and Yuanyin Teng. UKALL 2011: Flawed noninferiority and overlooked interactions undermine conclusions. Journal of Clinical Oncology, 43(28):3135–3136, 2025.

- Ruidan He, Linlin Liu, Hai Ye, Qingyu Tan, Bosheng Ding, Liying Cheng, Jiawei Low, Lidong Bing, and Luo Si. On the effectiveness of adapter-based tuning for pretrained language model adaptation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 2208–2222. Association for Computational Linguistics, 2021.

- Jiancheng Huang, Yi Huang, Jianzhuang Liu, Donghao Zhou, Yifan Liu, and Shifeng Chen. Dual-schedule inversion: Training- and tuning-free inversion for real image editing. In 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pages 660–669. IEEE, 2025.

- Sichong Huang. LSTM-based deep learning models for long-term inventory forecasting in retail operations. Journal of Computer Technology and Applied Mathematics, 2(6):21–25, 2025.

- Cui Xuehao, Wen Dejia, and Li Xiaorong. Integration of immunometabolic composite indices and machine learning for diabetic retinopathy risk stratification: Insights from NHANES 2011–2020. Ophthalmology Science, page 100854, 2025.

- Zebin Yao, Lei Ren, Huixing Jiang, Chen Wei, Xiaojie Wang, Ruifan Li, and Fangxiang Feng. FreeGraftor: Training-free cross-image feature grafting for subject-driven text-to-image generation. CoRR, 2025.

- Yucheng Zhou, Xiang Li, Qianning Wang, and Jianbing Shen. Visual in-context learning for large vision-language models. In Findings of the Association for Computational Linguistics, ACL 2024, Bangkok, Thailand and virtual meeting, August 11-16, 2024, pages 15890–15902. Association for Computational Linguistics, 2024.

- Fan Zhang, Zebang Cheng, Chong Deng, Haoxuan Li, Zheng Lian, Qian Chen, Huadai Liu, Wen Wang, Yi-Fan Zhang, Renrui Zhang, et al. MME-Emotion: A holistic evaluation benchmark for emotional intelligence in multimodal large language models. arXiv preprint arXiv:2508.09210, 2025.

- Fan Zhang, Haoxuan Li, Shengju Qian, Xin Wang, Zheng Lian, Hao Wu, Zhihong Zhu, Yuan Gao, Qiankun Li, Yefeng Zheng, et al. Rethinking facial expression recognition in the era of multimodal large language models: Benchmark, datasets, and beyond. arXiv preprint arXiv:2511.00389, 2025.

- Kun Qian, Tianyu Sun, Wenhong Wang, and Wenhan Luo. Deep learning in pathology: Advances in perception, diagnosis, prognosis, and workflow automation. 2025.

- Shuo Xu, Yexin Tian, Yuchen Cao, Zhongyan Wang, and Zijing Wei. Benchmarking machine learning and deep learning models for fake news detection using news headlines. Preprints, June 2025.

- Fangxiaoyu Feng, Yinfei Yang, Daniel Cer, Naveen Arivazhagan, and Wei Wang. Language-agnostic BERT sentence embedding. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 878–891. Association for Computational Linguistics, 2022.

- Jiancheng Huang, Gengwei Zhang, Zequn Jie, Siyu Jiao, Yinlong Qian, Ling Chen, Yunchao Wei, and Lin Ma. M4V: Multi-modal mamba for text-to-video generation. arXiv preprint arXiv:2506.10915, 2025.

- Jialin Gao, Xin Sun, Mengmeng Xu, Xi Zhou, and Bernard Ghanem. Relation-aware video reading comprehension for temporal language grounding. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 3978–3988. Association for Computational Linguistics, 2021.

- Meng Cao, Long Chen, Mike Zheng Shou, Can Zhang, and Yuexian Zou. On pursuit of designing multi-modal transformer for video grounding. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 9810–9823. Association for Computational Linguistics, 2021.

- Xiang Deng, Ahmed Hassan Awadallah, Christopher Meek, Oleksandr Polozov, Huan Sun, and Matthew Richardson. Structure-grounded pretraining for text-to-SQL. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 1337–1350. Association for Computational Linguistics, 2021.

- Arian Hosseini, Siva Reddy, Dzmitry Bahdanau, R Devon Hjelm, Alessandro Sordoni, and Aaron Courville. Understanding by understanding not: Modeling negation in language models. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 1301–1312. Association for Computational Linguistics, 2021.

- Jiazheng Xu, Xiao Liu, Yuchen Wu, Yuxuan Tong, Qinkai Li, Ming Ding, Jie Tang, and Yuxiao Dong. ImageReward: Learning and evaluating human preferences for text-to-image generation. In Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023, 2023.

- Yi Huang, Jiancheng Huang, Yifan Liu, Mingfu Yan, Jiaxi Lv, Jianzhuang Liu, Wei Xiong, He Zhang, Liangliang Cao, and Shifeng Chen. Diffusion model-based image editing: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025.

- Haiyang Xu, Ming Yan, Chenliang Li, Bin Bi, Songfang Huang, Wenming Xiao, and Fei Huang. E2E-VLP: End-to-end vision-language pre-training enhanced by visual learning. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 503–513. Association for Computational Linguistics, 2021.

- Chenliang Li, Haiyang Xu, Junfeng Tian, Wei Wang, Ming Yan, Bin Bi, Jiabo Ye, He Chen, Guohai Xu, Zheng Cao, Ji Zhang, Songfang Huang, Fei Huang, Jingren Zhou, and Luo Si. mPLUG: Effective and efficient vision-language learning by cross-modal skip-connections. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, pages 7241–7259. Association for Computational Linguistics, 2022.

- Zewen Chi, Li Dong, Shuming Ma, Shaohan Huang, Saksham Singhal, Xian-Ling Mao, Heyan Huang, Xia Song, and Furu Wei. mT6: Multilingual pretrained text-to-text transformer with translation pairs. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 1671–1683. Association for Computational Linguistics, 2021.

- Shrimai Prabhumoye, Kazuma Hashimoto, Yingbo Zhou, Alan W Black, and Ruslan Salakhutdinov. Focused attention improves document-grounded generation. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 4274–4287. Association for Computational Linguistics, 2021.

- Xinyu Wang, Min Gui, Yong Jiang, Zixia Jia, Nguyen Bach, Tao Wang, Zhongqiang Huang, and Kewei Tu. ITA: Image-text alignments for multi-modal named entity recognition. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 3176–3189. Association for Computational Linguistics, 2022.

- Ximing Lu, Peter West, Rowan Zellers, Ronan Le Bras, Chandra Bhagavatula, and Yejin Choi. NeuroLogic decoding: (un)supervised neural text generation with predicate logic constraints. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 4288–4299. Association for Computational Linguistics, 2021.

- Prakhar Gupta, Jeffrey Bigham, Yulia Tsvetkov, and Amy Pavel. Controlling dialogue generation with semantic exemplars. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 3018–3029. Association for Computational Linguistics, 2021.

- Brian Lester, Rami Al-Rfou, and Noah Constant. The power of scale for parameter-efficient prompt tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 3045–3059. Association for Computational Linguistics, 2021.

- Sashuai Zhou, Hai Huang, and Yan Xia. Enhancing multi-modal models with heterogeneous MoE adapters for fine-tuning. arXiv preprint arXiv:2503.20633, 2025.

- Zhen Tian, Zhihao Lin, Dezong Zhao, Wenjing Zhao, David Flynn, Shuja Ansari, and Chongfeng Wei. Evaluating scenario-based decision-making for interactive autonomous driving using rational criteria: A survey. arXiv preprint arXiv:2501.01886, 2025.

- Junqiao Wang, Zeng Zhang, Yangfan He, Yuyang Song, Tianyu Shi, Yuchen Li, Hengyuan Xu, Kunyu Wu, Guangwu Qian, Qiuwu Chen, et al. Enhancing code LLMs with reinforcement learning in code generation. arXiv preprint arXiv:2412.20367, 2024.

- Qiang Yi, Yangfan He, Jianhui Wang, Xinyuan Song, Shiyao Qian, Xinhang Yuan, Miao Zhang, Li Sun, Keqin Li, Kuan Lu, et al. SCORE: Story coherence and retrieval enhancement for AI narratives. arXiv preprint arXiv:2503.23512, 2025.

- Zhengfu He, Tianxiang Sun, Qiong Tang, Kuanning Wang, Xuanjing Huang, and Xipeng Qiu. DiffusionBERT: Improving generative masked language models with diffusion models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 4521–4534. Association for Computational Linguistics, 2023.

- Farhad Nooralahzadeh, Nicolas Perez Gonzalez, Thomas Frauenfelder, Koji Fujimoto, and Michael Krauthammer. Progressive transformer-based generation of radiology reports. In Findings of the Association for Computational Linguistics: EMNLP 2021, pages 2824–2832. Association for Computational Linguistics, 2021.

- Chi Han, Mingxuan Wang, Heng Ji, and Lei Li. Learning shared semantic space for speech-to-text translation. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 2214–2225. Association for Computational Linguistics, 2021.

- Yong Liu, Ran Yu, Fei Yin, Xinyuan Zhao, Wei Zhao, Weihao Xia, and Yujiu Yang. Learning quality-aware dynamic memory for video object segmentation. In European Conference on Computer Vision, pages 468–486. Springer, 2022.

- Yong Liu, Sule Bai, Guanbin Li, Yitong Wang, and Yansong Tang. Open-vocabulary segmentation with semantic-assisted calibration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3491–3500, 2024.

- Kunyang Han, Yong Liu, Jun Hao Liew, Henghui Ding, Jiajun Liu, Yitong Wang, Yansong Tang, Yujiu Yang, Jiashi Feng, Yao Zhao, et al. Global knowledge calibration for fast open-vocabulary segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 797–807, 2023.

- Zhitao Wang, Jiangtao Wen, and Yuxing Han. EP-SAM: An edge-detection prompt SAM based efficient framework for ultra-low light video segmentation. In ICASSP 2025-2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1–5. IEEE, 2025.

- Fan Zhang, Zhi-Qi Cheng, Jian Zhao, Xiaojiang Peng, and Xuelong Li. LEAF: unveiling two sides of the same coin in semi-supervised facial expression recognition. Computer Vision and Image Understanding, page 104451, 2025.

- Lisa Anne Hendricks and Aida Nematzadeh. Probing image-language transformers for verb understanding. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 3635–3644. Association for Computational Linguistics, 2021.

- Zhitao Wang, Yirong Xiong, Roberto Horowitz, Yanke Wang, and Yuxing Han. Hybrid perception and equivariant diffusion for robust multi-node rebar tying. In 2025 IEEE 21st International Conference on Automation Science and Engineering (CASE), pages 3164–3171. IEEE, 2025.

| HSAI Variant | CLIP-I (↑) | DINO (↑) | CLIP-T (↑) | ImageReward (↑) |
|---|---|---|---|---|
| ContextualGraftor (Full HSAI) | 0.9300 | 0.8650 | 0.3350 | 1.7000 |
| w/o Multi-Scale Loss | 0.9240 | 0.8550 | 0.3325 | 1.6880 |
| w/o Adaptive Dropout | 0.9265 | 0.8590 | 0.3330 | 1.6920 |
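
To make the ablation easier to read, the short script below computes how much each metric drops when one HSAI component is removed. The scores are copied from the table above; the helper name `drops` and the dictionary layout are assumptions made for this example.

```python
# Scores copied from the HSAI ablation table (higher is better for all metrics).
full = {"CLIP-I": 0.9300, "DINO": 0.8650, "CLIP-T": 0.3350, "ImageReward": 1.7000}
ablations = {
    "w/o Multi-Scale Loss": {
        "CLIP-I": 0.9240, "DINO": 0.8550, "CLIP-T": 0.3325, "ImageReward": 1.6880,
    },
    "w/o Adaptive Dropout": {
        "CLIP-I": 0.9265, "DINO": 0.8590, "CLIP-T": 0.3330, "ImageReward": 1.6920,
    },
}


def drops(reference: dict, variant: dict) -> dict:
    """Absolute drop per metric (reference minus variant), rounded to 4 decimals."""
    return {metric: round(reference[metric] - variant[metric], 4)
            for metric in reference}


if __name__ == "__main__":
    for name, scores in ablations.items():
        print(name, drops(full, scores))
```

On these numbers, removing the multi-scale loss lowers every metric more than removing adaptive dropout does (for example, DINO falls by 0.0100 versus 0.0060, and ImageReward by 0.0120 versus 0.0080).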

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).