Submitted: 23 November 2025

Posted: 24 November 2025

Abstract

Keywords:

1. Introduction

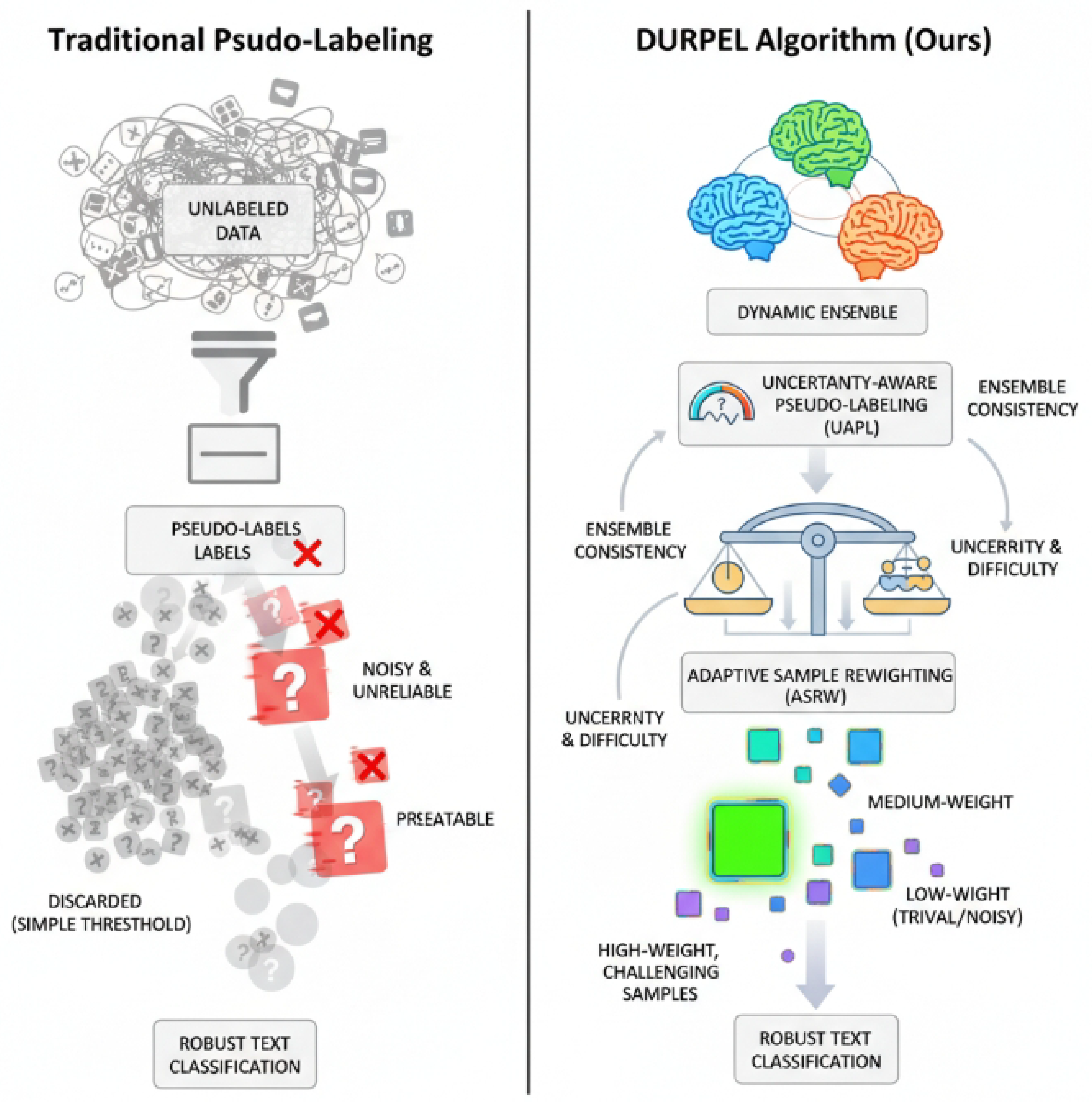

- We propose Dynamic Uncertainty-aware Pseudo-Labeling with Ensemble Reweighting (DURPEL), a novel semi-supervised text classification (SSTC) algorithm that integrates dynamic ensemble learning with pseudo-labeling and sample reweighting.

- We introduce an Uncertainty-aware Pseudo-Labeling (UAPL) module that generates more reliable pseudo-labels through dynamic ensemble voting, entropy-based uncertainty estimation, and adaptive class-level confidence thresholds.

- We develop an Adaptive Sample Reweighting (ASRW) mechanism that assigns learning weights to unlabeled samples based on ensemble consistency, predicted uncertainty, and historical sample difficulty, thereby enhancing learning from challenging examples.

2. Related Work

2.1. Semi-Supervised Text Classification with Consistency Regularization

2.2. Ensemble and Uncertainty-Aware Strategies in Semi-Supervised Learning

3. Method

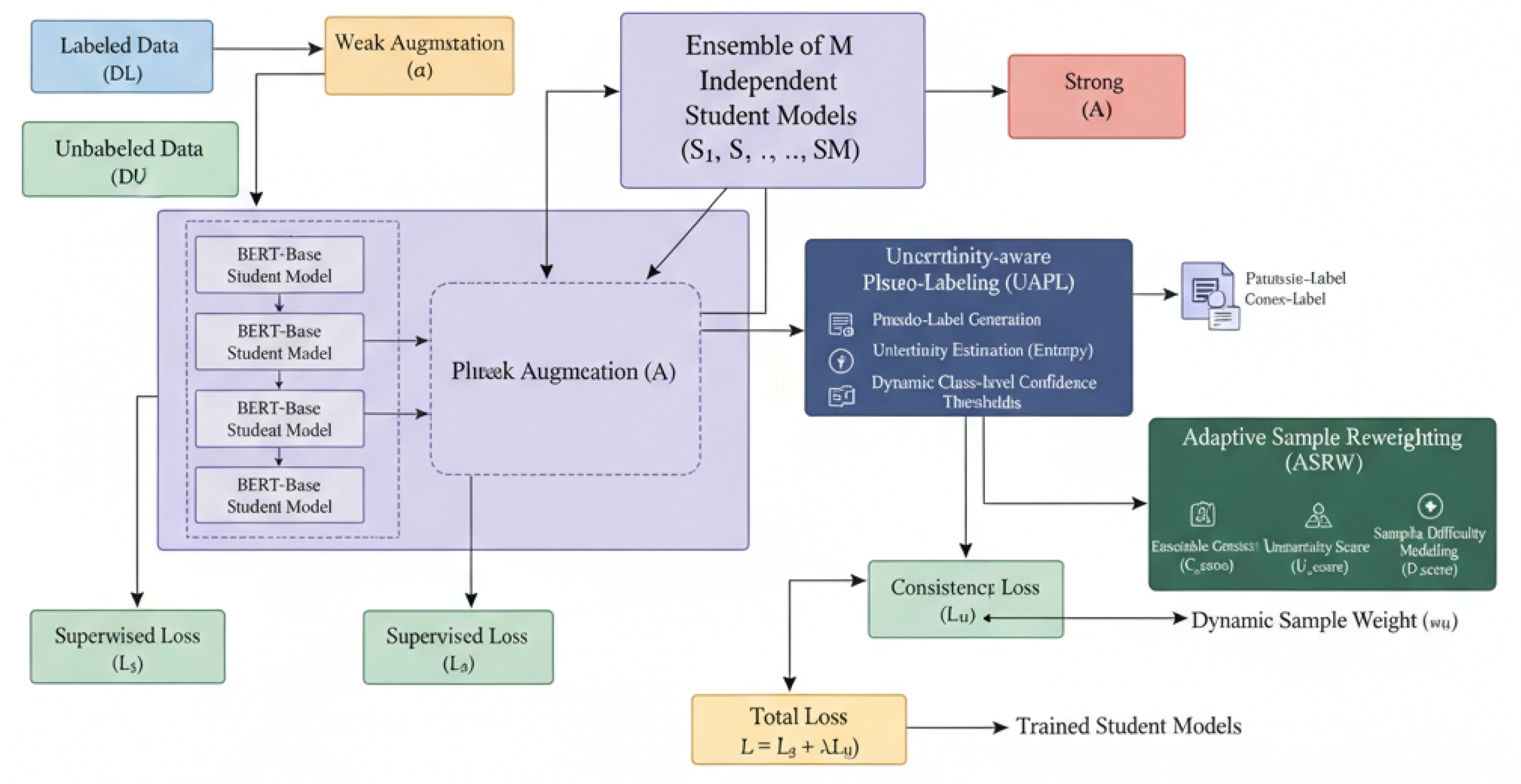

3.1. Overall Architecture of DURPEL

3.2. Uncertainty-Aware Pseudo-Labeling (UAPL)

3.2.1. Weak and Strong Augmentations

3.2.2. Ensemble Prediction and Pseudo-Label Generation

3.2.3. Dynamic Class-Level Confidence Thresholds

3.3. Adaptive Sample Reweighting (ASRW)

3.3.1. Ensemble Consistency

3.3.2. Uncertainty Estimation

3.3.3. Sample Difficulty Modeling

3.3.4. Weight Computation

3.4. Training Objective

3.4.1. Supervised Loss

3.4.2. Consistency Loss

3.4.3. Total Loss

4. Experiments

4.1. Experimental Setup

4.1.1. Model Architecture

4.1.2. Datasets

- Standard Semi-Supervised Benchmarks: We use five core datasets from the USB benchmark: IMDB, AG News, Amazon Review, Yahoo! Answers, and Yelp Review. These datasets are used to assess performance under various proportions of labeled data.

- Highly Class-Imbalanced Settings: To test DURPEL’s robustness, we further construct long-tailed, imbalanced versions of these datasets by exponentially decaying the number of samples per class according to an imbalance factor, mimicking real-world imbalanced data distributions.
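As a rough illustration of the long-tail construction, one common recipe (an assumption here, since the exact formula and the value of the imbalance factor are not given in this text) sets the count of class i to n_max · γ^(−i/(C−1)), so that the largest class has γ times as many samples as the smallest. The names `long_tail_counts`, `n_max`, and `gamma`, as well as the example values, are illustrative only.

```python
def long_tail_counts(n_max: int, num_classes: int, gamma: float) -> list[int]:
    """Per-class sample counts decaying exponentially from n_max down to n_max / gamma.

    Assumed recipe (not confirmed by the source): n_i = n_max * gamma ** (-i / (C - 1)).
    """
    if num_classes < 1 or gamma < 1.0:
        raise ValueError("need num_classes >= 1 and gamma >= 1")
    if num_classes == 1:
        return [n_max]
    return [
        max(1, round(n_max * gamma ** (-i / (num_classes - 1))))
        for i in range(num_classes)
    ]


# Illustrative values only: 4 classes, head class of 1000 samples, factor 10.
counts = long_tail_counts(n_max=1000, num_classes=4, gamma=10.0)
print(counts)
```

With these illustrative settings the head class keeps all 1000 samples and the tail class keeps a tenth of that; the intermediate classes fall on a geometric curve between the two.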

4.1.3. Data Preprocessing and Augmentation

- Text Preprocessing: All text inputs are uniformly truncated or padded to a maximum length of 512 tokens, consistent with the input requirements of BERT-Base.

- Weak Augmentation: For unlabeled samples, the weak augmentation is the identity function, i.e., the input text is passed through unchanged. This follows common practice in consistency regularization methods and the USB benchmark settings.

- Strong Augmentation: We employ back-translation as the strong augmentation strategy. Specifically, we translate each English input into German or Russian and back into English, generating perturbations that force the models to learn more invariant and discriminative features.
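The three preprocessing steps above can be sketched as follows. This is a minimal sketch under stated assumptions: it operates on already-tokenized id lists, uses a hypothetical padding id of 0, and represents the translation systems as caller-supplied functions (`to_pivot`, `to_english`), since the source does not name the translation models. All function names are illustrative.

```python
from typing import Callable


def pad_or_truncate(token_ids: list[int], max_len: int = 512, pad_id: int = 0) -> list[int]:
    """Return exactly max_len ids: cut long inputs, right-pad short ones."""
    clipped = token_ids[:max_len]
    return clipped + [pad_id] * (max_len - len(clipped))


def weak_augment(text: str) -> str:
    """Weak augmentation is the identity function."""
    return text


def strong_augment(
    text: str,
    to_pivot: Callable[[str], str],    # hypothetical: English -> German or Russian
    to_english: Callable[[str], str],  # hypothetical: pivot language -> English
) -> str:
    """Back-translation: translate to a pivot language and back again."""
    return to_english(to_pivot(text))


# Toy stand-ins for real translation systems, used only to make the sketch runnable.
demo = strong_augment("a fine film", to_pivot=str.upper, to_english=str.lower)
padded = pad_or_truncate([101, 2023, 102], max_len=6)
```

A real tokenizer would normally also preserve special tokens such as the final separator when truncating; that detail is omitted here.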

4.1.4. Training Details

- Semi-Supervised Training Batches: Each training batch consists of B labeled samples together with a batch of unlabeled samples.

- Pseudo-Labeling and Consistency Learning: For each unlabeled sample u, its weakly augmented version is fed into the ensemble of M student models. The Uncertainty-aware Pseudo-Labeling (UAPL) module generates an integrated pseudo-label and estimates its uncertainty. Subsequently, the Adaptive Sample Reweighting (ASRW) mechanism calculates a dynamic learning weight for this pseudo-label. The strongly augmented version is then passed through the student models, and its predictions are used to compute the consistency loss against the weighted pseudo-label.

- Loss Function: The total loss function comprises a supervised cross-entropy loss applied to the labeled data and a weighted consistency loss (using ASRW weights) for the unlabeled data.

- Optimizer and Learning Rate Schedule: We use the AdamW optimizer with weight decay. The learning rate follows a cosine schedule with a warm-up phase of 5,120 steps.

- Total Training Steps: The models are trained for a total of 102,400 steps.
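The pseudo-labeling and consistency step described in the list above can be sketched in plain Python. This is a simplified sketch, not the authors' implementation: it assumes soft voting (averaging member probabilities), a hard pseudo-label, a single placeholder threshold of 0.9 in place of the class-level thresholds, and ASRW weights supplied by the caller. All function names are illustrative.

```python
import math


def ensemble_mean(member_probs: list[list[float]]) -> list[float]:
    """Average class probabilities over the M ensemble members (soft voting)."""
    num_classes = len(member_probs[0])
    return [sum(p[c] for p in member_probs) / len(member_probs) for c in range(num_classes)]


def entropy(probs: list[float]) -> float:
    """Shannon entropy of a distribution, used here as an uncertainty estimate."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def weighted_consistency_loss(weak, strong, weights, threshold=0.9):
    """weak[i][m][c] and strong[i][m][c] are member probabilities; weights[i] is the sample weight."""
    total = 0.0
    for weak_members, strong_members, weight in zip(weak, strong, weights):
        avg = ensemble_mean(weak_members)
        label = max(range(len(avg)), key=avg.__getitem__)
        if avg[label] < threshold:
            continue  # pseudo-label rejected: contributes nothing to the loss
        # Cross-entropy of each student's strong-view prediction against the pseudo-label.
        ce = sum(-math.log(max(p[label], 1e-12)) for p in strong_members) / len(strong_members)
        total += weight * ce
    return total / len(weights)


# Two unlabeled samples, two students, two classes (toy numbers).
weak = [[[0.95, 0.05], [0.97, 0.03]], [[0.60, 0.40], [0.50, 0.50]]]
strong = [[[0.5, 0.5], [0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]]
loss = weighted_consistency_loss(weak, strong, weights=[0.5, 1.0])
uncertainty = entropy(ensemble_mean(weak[1]))
```

In this toy batch only the first sample passes the threshold, so the loss comes from that sample alone, scaled by its weight and averaged over the batch.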

4.1.5. Hyperparameters

4.2. Main Results

4.3. Results on Class-Imbalanced Datasets

4.4. Ablation Study of DURPEL Components

- DURPEL w/o Ensemble: When DURPEL is reduced to a single BERT-Base model (i.e., M = 1), the error rate increases on every dataset. This highlights the role of ensemble diversity and robustness in generating more reliable pseudo-labels and predictions. The average error rate increases by approximately 2.09 percentage points compared to the full DURPEL.

- DURPEL w/o UAPL: Removing the Uncertainty-aware Pseudo-Labeling module, i.e., accepting pseudo-labels with a fixed global confidence threshold instead of dynamic class-level thresholds and explicit uncertainty estimation, leads to a noticeable degradation. This indicates that filtering pseudo-labels by quality and uncertainty, rather than by a naive confidence score, is important for preventing error accumulation. The average error rate increases by approximately 1.31 percentage points compared to the full DURPEL.

- DURPEL w/o ASRW: When the Adaptive Sample Reweighting mechanism is removed and all accepted pseudo-labels are weighted equally (weight of 1), performance also drops. This suggests that weighting samples by consistency, uncertainty, and estimated difficulty helps prioritize informative samples, especially challenging ones. The average error rate increases by approximately 0.60 percentage points compared to the full DURPEL.
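The difference between the two acceptance rules contrasted in the UAPL ablation can be shown with a short sketch. The class-level values reuse the mid-training row of the threshold table (World, Sports, Business, Sci/Tech); the global threshold of 0.95 and the function names are assumptions chosen for illustration.

```python
# Class-level thresholds from the mid-training row of the threshold table.
CLASS_THRESHOLDS = {"World": 0.85, "Sports": 0.82, "Business": 0.87, "Sci/Tech": 0.84}

# Hypothetical fixed global threshold (not stated in the source).
GLOBAL_THRESHOLD = 0.95


def accept_global(confidence: float, threshold: float = GLOBAL_THRESHOLD) -> bool:
    """Ablated rule: one threshold for every class."""
    return confidence >= threshold


def accept_class_level(confidence: float, predicted_class: str) -> bool:
    """UAPL-style rule: the threshold depends on the predicted class."""
    return confidence >= CLASS_THRESHOLDS[predicted_class]


# A pseudo-label predicted as "Sports" with ensemble confidence 0.86.
kept_by_global = accept_global(0.86)
kept_by_class_level = accept_class_level(0.86, "Sports")
```

Under these numbers the fixed global rule discards the sample while the class-level rule keeps it, which is the kind of case where the two variants diverge.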

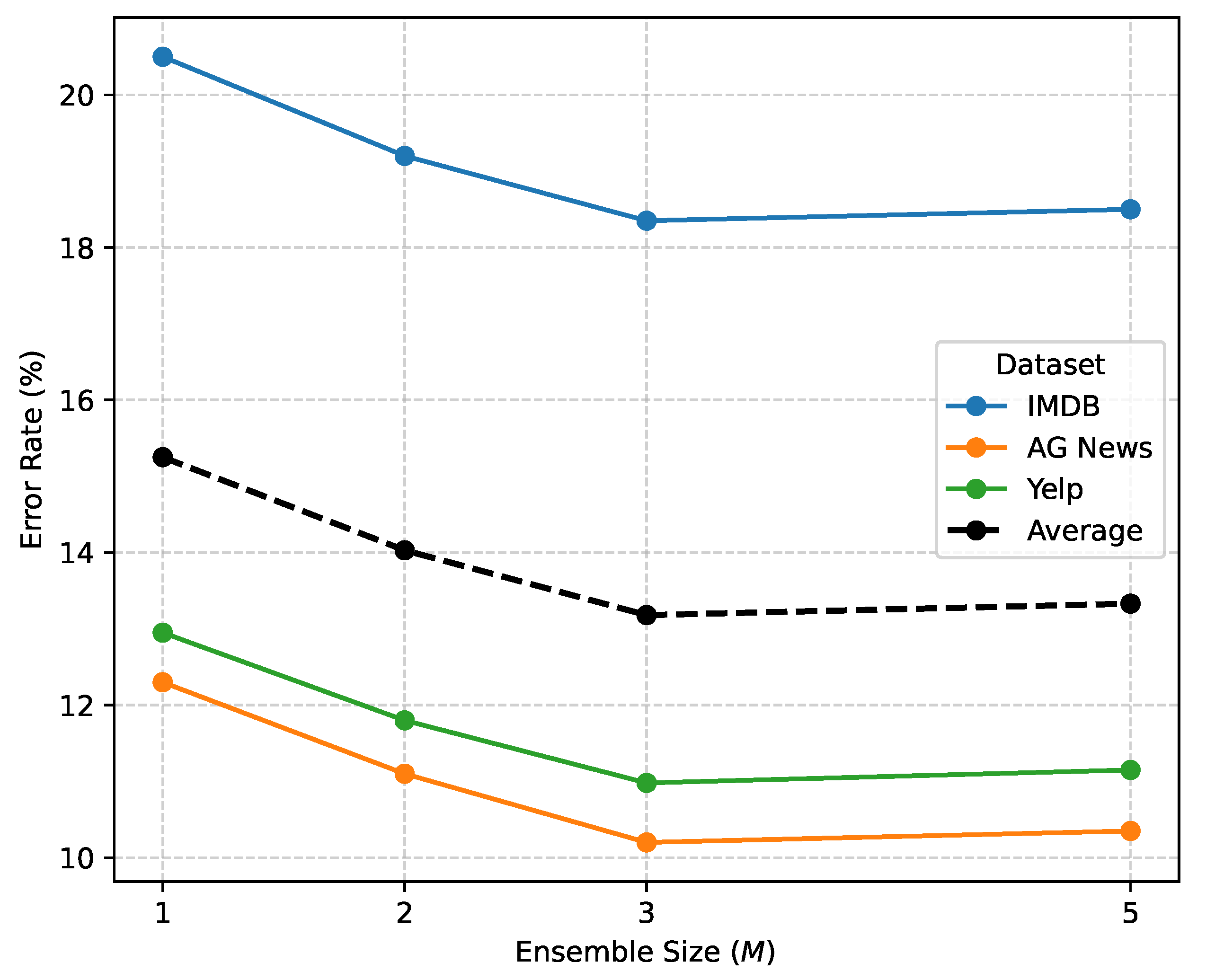

4.5. Impact of Ensemble Size

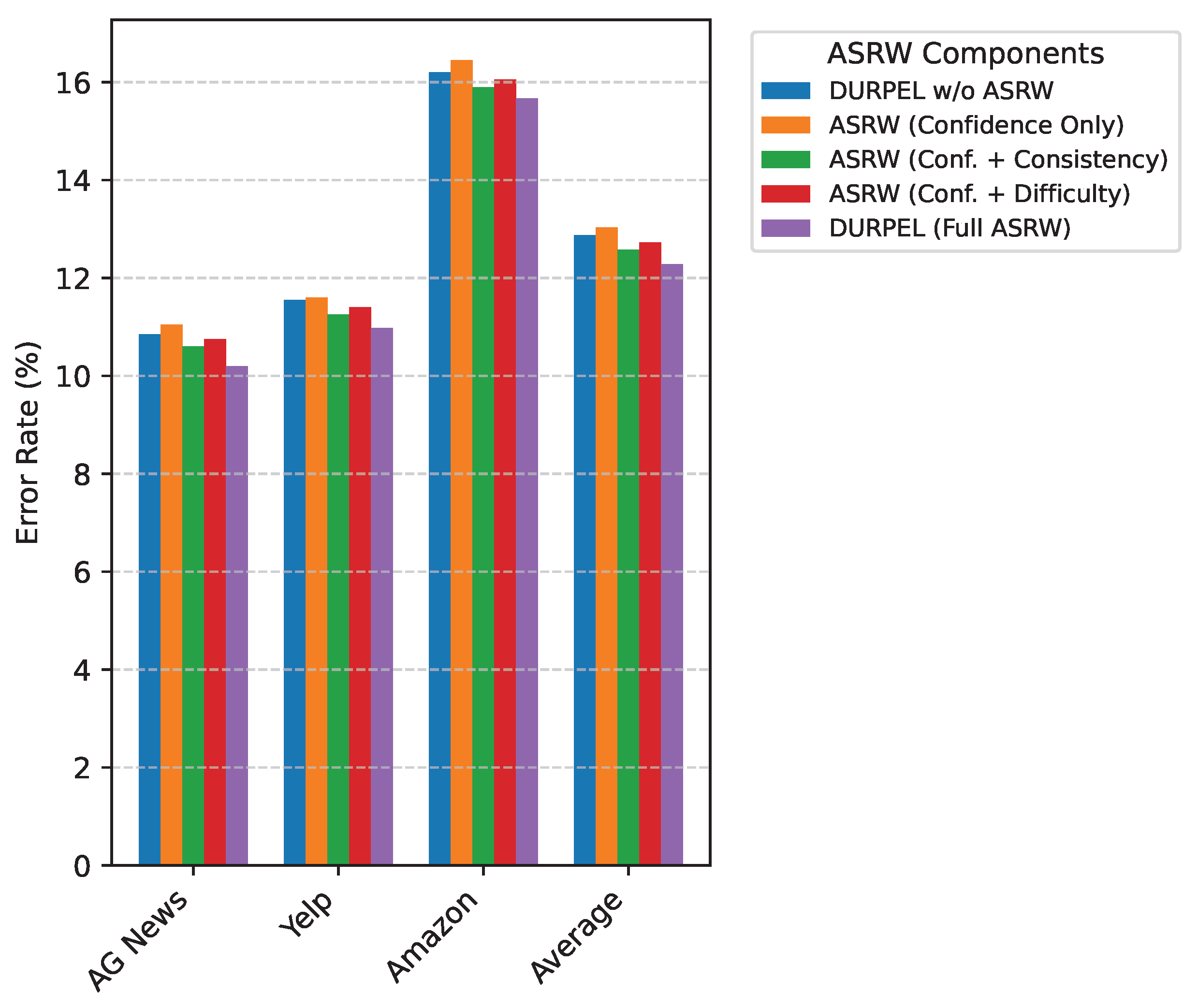

4.6. Analysis of Adaptive Sample Reweighting (ASRW)

- ASRW (Confidence Only): Using only the integrated ensemble confidence as the reweighting factor yields an improvement over no ASRW, but it is not optimal. This indicates that while confidence is a good starting point, it lacks the nuance needed for robust weighting.

- ASRW (Confidence + Consistency): Incorporating ensemble consistency alongside confidence further improves performance, indicating that agreement among ensemble members is a strong signal of pseudo-label reliability. This variant reduces the error rate by an average of 0.45% compared to confidence-only weighting.

- ASRW (Confidence + Difficulty): Adding the sample difficulty score to confidence also shows improvement. This validates our hypothesis that prioritizing moderately challenging samples, which are neither trivial nor highly ambiguous, can enhance learning. This variant reduces the error rate by an average of 0.30% compared to confidence-only weighting.

- DURPEL (Full ASRW): The full ASRW mechanism, which combines confidence, consistency, and difficulty, achieves the best performance. This synergistic effect demonstrates that a comprehensive weighting strategy, considering multiple dimensions of pseudo-label quality and sample utility, is superior to relying on single or partial factors. The full ASRW reduces the average error rate by 0.59% compared to the best partial ASRW variant (Confidence + Consistency).
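The variants compared above can be sketched as one weighting function with switchable factors. The functional forms are assumptions, since the weight formula is not reproduced in this text: consistency is taken as the fraction of ensemble members that agree with the pseudo-label, the difficulty preference is a bell-shaped curve that peaks for moderately difficult samples, and the factors are multiplied together.

```python
def consistency(member_labels: list[int], pseudo_label: int) -> float:
    """Fraction of ensemble members whose prediction matches the pseudo-label."""
    return sum(label == pseudo_label for label in member_labels) / len(member_labels)


def difficulty_preference(difficulty: float) -> float:
    """Assumed shape: 1.0 at difficulty 0.5, falling to 0.0 at difficulty 0 and 1."""
    return 4.0 * difficulty * (1.0 - difficulty)


def asrw_weight(
    confidence: float,
    member_labels: list[int],
    pseudo_label: int,
    difficulty: float,
    use_consistency: bool = True,
    use_difficulty: bool = True,
) -> float:
    """Multiplicative combination (an assumption); flags reproduce the partial variants."""
    weight = confidence
    if use_consistency:
        weight *= consistency(member_labels, pseudo_label)
    if use_difficulty:
        weight *= difficulty_preference(difficulty)
    return weight


# Three students, two of which agree with pseudo-label 1; moderate difficulty.
full = asrw_weight(0.9, [1, 1, 0], pseudo_label=1, difficulty=0.5)
confidence_only = asrw_weight(0.9, [1, 1, 0], 1, 0.5, use_consistency=False, use_difficulty=False)
```

Setting both flags to `False` corresponds to the confidence-only variant, and enabling one flag at a time corresponds to the two intermediate variants.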

4.7. Evolution of Dynamic Class-Level Confidence Thresholds

5. Conclusion


| Dataset | Label Count | Supervised Only | FixMatch | FreeMatch | MultiMatch | Ours (DURPEL) |
| --- | --- | --- | --- | --- | --- | --- |
| IMDB | 20 | 35.12 | 29.58 | 28.91 | 28.71 | 28.05 |
| IMDB | 100 | 24.33 | 19.87 | 19.12 | 18.99 | 18.35 |
| AG News | 40 | 25.45 | 19.33 | 18.95 | 18.89 | 18.12 |
| AG News | 200 | 16.78 | 11.21 | 10.88 | 10.75 | 10.20 |
| Yelp | 50 | 32.11 | 26.50 | 25.99 | 25.77 | 25.08 |
| Yelp | 200 | 18.76 | 12.11 | 11.75 | 11.55 | 10.98 |
| Amazon | 100 | 22.31 | 16.80 | 16.45 | 16.32 | 15.67 |
| Yahoo! | 50 | 38.99 | 31.75 | 31.20 | 30.98 | 30.01 |
| Average Error Rate |  | 29.80 | 23.52 | 22.91 | 22.68 | 21.81 |

| Dataset | Label Count | DURPEL (Full) | DURPEL w/o ASRW | DURPEL w/o UAPL (Fixed Global Threshold) | DURPEL w/o Ensemble (Single Model) |
| --- | --- | --- | --- | --- | --- |
| IMDB | 100 | 18.35 | 19.00 | 19.80 | 20.50 |
| AG News | 200 | 10.20 | 10.85 | 11.50 | 12.30 |
| Yelp | 200 | 10.98 | 11.55 | 12.10 | 12.95 |
| Amazon | 100 | 15.67 | 16.20 | 17.05 | 17.80 |
| Average |  | 13.80 | 14.40 | 15.11 | 15.89 |
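
The averages in the ablation table, and the increases quoted in the ablation study (0.60, 1.31, and 2.09 percentage points), can be re-derived from the four dataset rows. The short check below uses only the numbers in the table; the variable names are illustrative, and the differences are taken between the two-decimal averages, which appears to be how the quoted figures were obtained.

```python
# Error rates (%) copied from the ablation table, one tuple per dataset:
# (Full, w/o ASRW, w/o UAPL, w/o Ensemble)
ablation = {
    "IMDB (100)": (18.35, 19.00, 19.80, 20.50),
    "AG News (200)": (10.20, 10.85, 11.50, 12.30),
    "Yelp (200)": (10.98, 11.55, 12.10, 12.95),
    "Amazon (100)": (15.67, 16.20, 17.05, 17.80),
}
variants = ("Full", "w/o ASRW", "w/o UAPL", "w/o Ensemble")

# Column-wise mean, rounded to two decimals as in the table.
averages = [
    round(sum(row[i] for row in ablation.values()) / len(ablation), 2)
    for i in range(len(variants))
]

# Increase of each ablated variant over the full model, in percentage points.
deltas = [round(avg - averages[0], 2) for avg in averages[1:]]

for name, avg in zip(variants, averages):
    print(f"{name}: {avg:.2f}")
```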

| Training Stage | Class 1 (World) | Class 2 (Sports) | Class 3 (Business) | Class 4 (Sci/Tech) |
| --- | --- | --- | --- | --- |
| Early (25% steps) | 0.70 | 0.68 | 0.72 | 0.69 |
| Mid (50% steps) | 0.85 | 0.82 | 0.87 | 0.84 |
| Late (75% steps) | 0.92 | 0.90 | 0.94 | 0.91 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).