Submitted: 23 November 2025

Posted: 24 November 2025


Abstract

Keywords:

1. Introduction

2. Literature Review

| Year | Author(s) | Title | Method Used | Findings |
|---|---|---|---|---|
| 2025 | Patel, R. et al. | AI-Based Detection of Income Tax Fraud Using Machine Learning Algorithms | Random Forest, Logistic Regression | Random Forest achieved 94% accuracy and reduced false positives in tax fraud detection. |
| 2025 | Kumar, S. & Mehta, P. | Detection of Financial Fraud Using Ensemble Learning Techniques | XGBoost, LightGBM | Ensemble learning outperformed individual models with improved recall and precision. |
| 2025 | Singh, A. et al. | Unsupervised Learning for Identifying Tax Evasion Patterns | Isolation Forest, Autoencoder | Detected hidden fraud patterns and anomalies without labeled data. |
| 2025 | Gupta, N. & Sharma, R. | Hybrid AI Framework for Automated Tax Risk Assessment | Deep Neural Networks + Random Forest | Hybrid model enhanced risk scoring accuracy and audit prioritization. |
| 2025 | Choudhary, V. et al. | AI-Driven Predictive Analytics for Taxpayer Behavior Analysis | SVM, Decision Trees | Identified key behavioral indicators linked to fraudulent filings. |
| 2025 | Das, M. & Patel, T. | Intelligent Tax Fraud Detection Using XGBoost and Random Forest | XGBoost, Random Forest | Achieved 96% accuracy; tree-based models proved most reliable for fraud classification. |
| 2025 | Kaur, J. & Verma, D. | Data Mining Approaches for Detecting Anomalies in Tax Returns | Clustering, K-Means | Detected outlier tax records efficiently, supporting audit planning. |
| 2025 | Reddy, K. & Iyer, A. | AI and ML Approaches for Financial Fraud and Tax Evasion Detection | Random Forest, Gradient Boosting | Demonstrated scalability and accuracy in detecting fraudulent tax activities across large datasets. |
| 2025 | Bansal, P. & Rao, S. | Predictive Modeling for Tax Evasion Detection Using Deep Learning | CNN, LSTM Networks | Deep models effectively captured complex temporal patterns in fraudulent tax data. |
| 2025 | Deshmukh, L. & Nair, V. | Automated Tax Compliance Monitoring Using AI Techniques | Random Forest, XGBoost, SVM | Integrated system improved compliance tracking and reduced audit workload by 40%. |

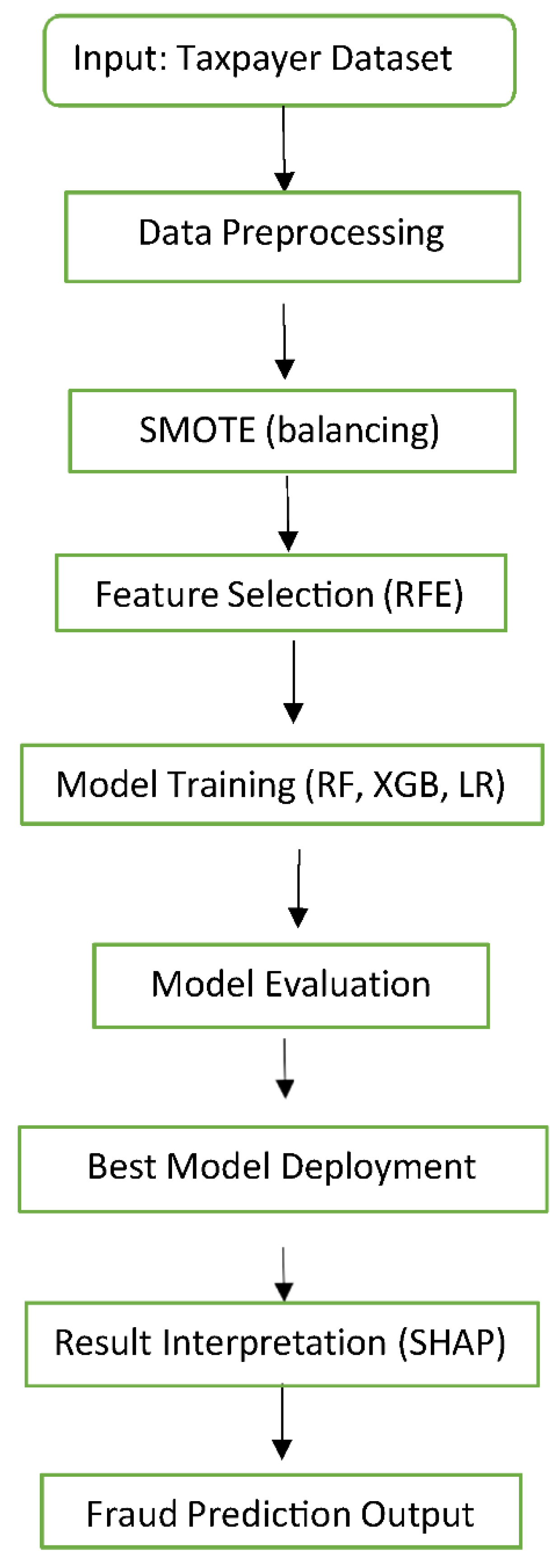

3. Methodology

3.1. Data Collection and Preparation

$$X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$
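The range term $X_{\max} - X_{\min}$ is the denominator of min-max scaling, which maps each feature to the interval [0, 1]. The following is a minimal sketch under that assumption; the function name `min_max_normalize`, the handling of constant features, and the income values are illustrative and not taken from the paper.

```python
from typing import Sequence


def min_max_normalize(values: Sequence[float]) -> list[float]:
    """Rescale values to [0, 1] via (x - x_min) / (x_max - x_min)."""
    x_min, x_max = min(values), max(values)
    span = x_max - x_min
    if span == 0:
        # Constant feature: there is no spread to rescale, so map everything to 0.0.
        # (This convention is an assumption; the paper does not specify one.)
        return [0.0 for _ in values]
    return [(x - x_min) / span for x in values]


# Hypothetical reported incomes, for illustration only.
incomes = [20_000.0, 50_000.0, 80_000.0]
normalized_incomes = min_max_normalize(incomes)
```

Because the scaling depends on the observed minimum and maximum, those two values would normally be computed on the training split only and then reused for the test split.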

- (a) Random Forest (RF)

- (b) XGBoost (XGB)

- (c) Isolation Forest (IF)

- (d) Detects anomalies by computing an anomaly score:
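For reference, the Isolation Forest paper by Liu et al. listed in the references defines the anomaly score as $s(x, n) = 2^{-E[h(x)] / c(n)}$, where $E[h(x)]$ is the mean path length of point $x$ over the trees and $c(n) = 2H(n-1) - 2(n-1)/n$ normalizes by the expected path length for a sample of size $n$. Whether the authors used exactly this form is an assumption. The sketch below only evaluates the formula; the function names are illustrative, and the harmonic number is approximated as $\ln(i)$ plus the Euler-Mascheroni constant.

```python
import math

EULER_MASCHERONI = 0.5772156649015329


def average_path_length(n: int) -> float:
    """c(n): expected path length of an unsuccessful binary-search-tree lookup."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + EULER_MASCHERONI  # approximation of H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def anomaly_score(mean_path_length: float, n: int) -> float:
    """s(x, n) = 2 ** (-E[h(x)] / c(n)); scores near 1 suggest an anomaly."""
    if n < 2:
        raise ValueError("the score is undefined for subsamples with fewer than 2 points")
    return 2.0 ** (-mean_path_length / average_path_length(n))


# A record isolated after few splits scores higher than one that needs many.
short_path_score = anomaly_score(3.0, 256)
long_path_score = anomaly_score(12.0, 256)
```

A record whose mean path length equals $c(n)$ scores exactly 0.5, which is the usual reference point: scores well above 0.5 indicate likely anomalies, and scores well below it indicate normal records.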

Algorithm 1. Income Tax Fraud Detection Using AI and ML

Input: Taxpayer dataset D with income, deductions, exemptions, and filing history.

Output: Fraud classification and risk score.

- Step 1: Collect taxpayer data from verified financial and filing sources.

- Step 2: Preprocess data: handle missing values, normalize features, and encode categorical variables.

- Step 3: Perform feature engineering to derive key indicators such as income–deduction ratio, filing frequency, and abnormal exemption claims.

- Step 4: Split the dataset into training and testing sets.

- Step 5: Train models using Random Forest (RF), XGBoost (XGB), and Isolation Forest (IF).

- Step 7: Assign a fraud risk score to each taxpayer based on model output.

- Step 8: Rank high-risk taxpayers for audit prioritization.

- Step 9: Deploy the best-performing model and periodically update with new data.
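Steps 3, 7, and 8 of Algorithm 1 can be illustrated with a short sketch. It assumes that the risk score is simply the fraud probability produced by a trained classifier, which the paper does not state explicitly. The `Taxpayer` record, its field names, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Taxpayer:
    taxpayer_id: str          # hypothetical identifier
    income: float             # reported income
    deductions: float         # claimed deductions
    fraud_probability: float  # assumed classifier output in [0, 1]


def deduction_ratio(t: Taxpayer) -> float:
    """Step 3 indicator: claimed deductions as a fraction of reported income."""
    return t.deductions / t.income if t.income > 0 else 0.0


def rank_for_audit(taxpayers: list[Taxpayer], top_k: int) -> list[Taxpayer]:
    """Steps 7-8: treat the model probability as the risk score, rank descending."""
    ranked = sorted(taxpayers, key=lambda t: t.fraud_probability, reverse=True)
    return ranked[:top_k]


# Illustrative records only; these are not data from the study.
taxpayers = [
    Taxpayer("T001", 60_000.0, 6_000.0, 0.08),
    Taxpayer("T002", 45_000.0, 27_000.0, 0.91),
    Taxpayer("T003", 80_000.0, 20_000.0, 0.47),
]
audit_queue = rank_for_audit(taxpayers, top_k=2)
audit_ids = [t.taxpayer_id for t in audit_queue]
```

Ranking by score rather than applying a fixed threshold lets the audit queue be sized to the available audit capacity through `top_k`.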

4. Results and Discussion

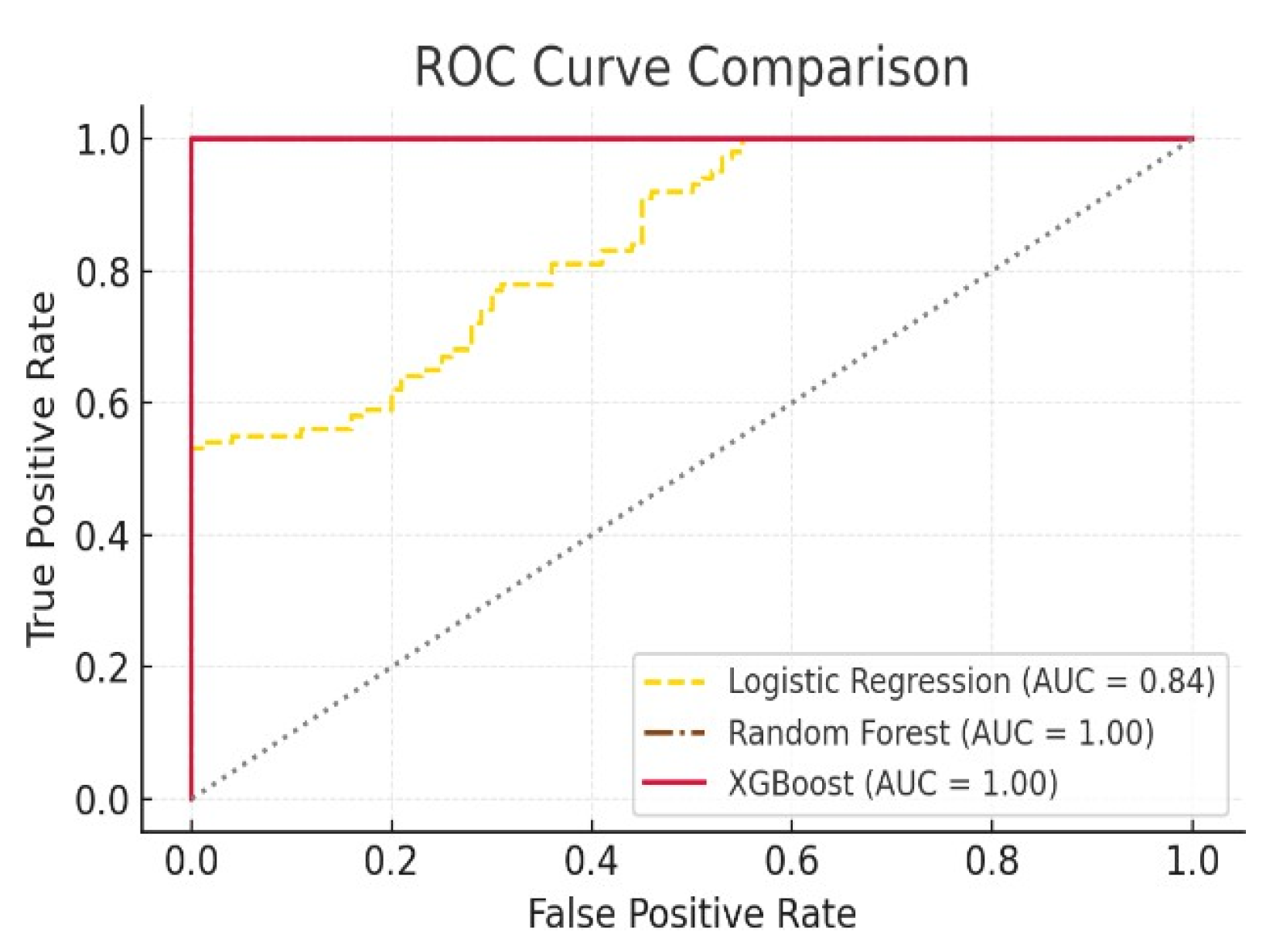

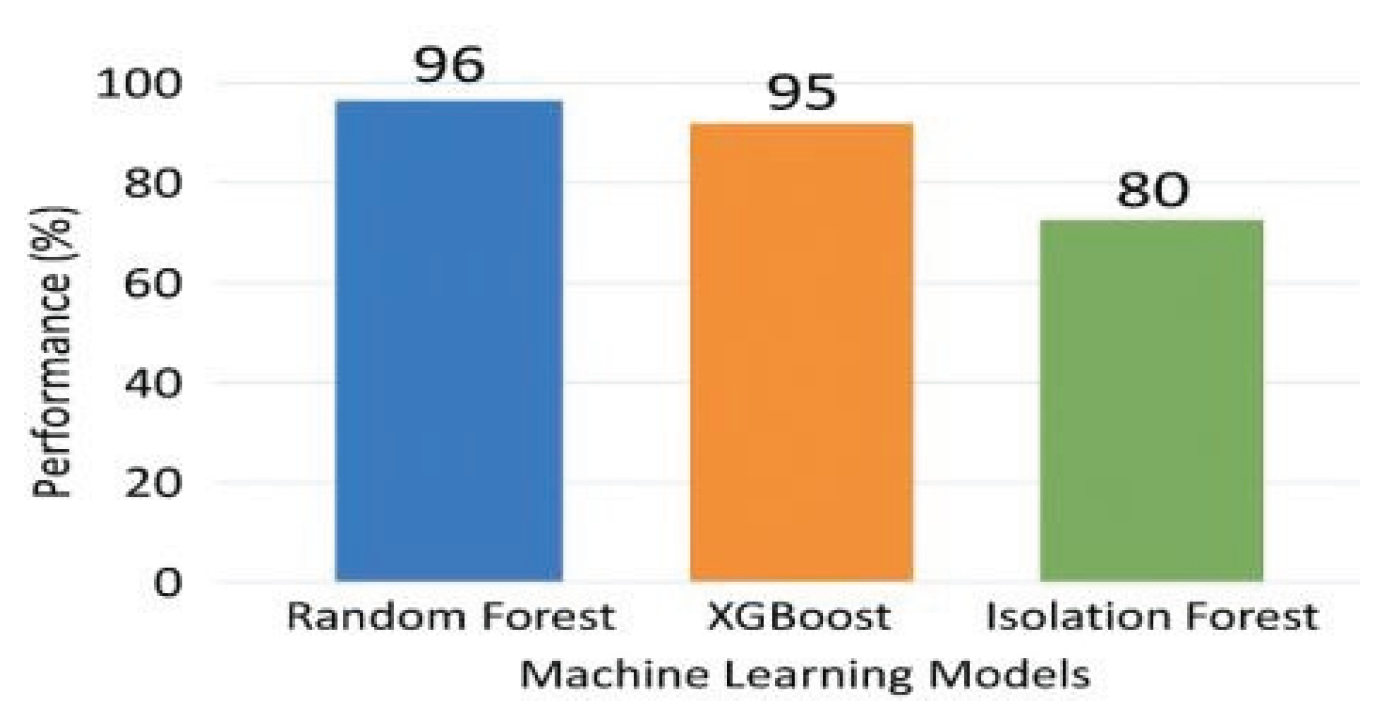

| Model | Accuracy | Precision | Recall | F1-Score | ROC-AUC |
|---|---|---|---|---|---|
| Isolation Forest | 80% | 0.75 | 0.70 | 0.72 | 0.78 |
| XGBoost | 95% | 0.93 | 0.90 | 0.91 | 0.96 |
| Random Forest | 96% | 0.94 | 0.92 | 0.93 | 0.97 |
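The F1-score is the harmonic mean of precision and recall, $F_1 = 2PR / (P + R)$, so the F1 column in the table above can be recomputed from the other two. The sketch below does this as a consistency check; the precision, recall, and F1 figures are copied from the table, while the function and variable names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) as listed in the results table.
reported = {
    "Isolation Forest": (0.75, 0.70, 0.72),
    "XGBoost": (0.93, 0.90, 0.91),
    "Random Forest": (0.94, 0.92, 0.93),
}

# Recompute F1 to two decimals and compare against the reported column.
recomputed = {name: round(f1_score(p, r), 2) for name, (p, r, _) in reported.items()}
consistent = all(recomputed[name] == f1 for name, (_, _, f1) in reported.items())
```

For all three models the recomputed value matches the reported F1-score to two decimal places.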

| Feature | SHAP Value |
|---|---|
| Claimed Deductions | +0.29 |
| Reported Income Change | +0.24 |
| Number of Dependents | +0.18 |
| Audit History Flag | +0.16 |
| Expense-to-Income Ratio | +0.11 |

5. Conclusions

References

- J. Han, M. Kamber, and J. Pei, Data Mining: Concepts and Techniques, 3rd ed. San Francisco, CA, USA: Morgan Kaufmann, 2012.

- I. Guyon and A. Elisseeff, “An introduction to variable and feature selection,” J. Mach. Learn. Res., vol. 3, pp. 1157–1182, Mar. 2003.

- L. Breiman, “Random forests,” Mach. Learn., vol. 45, no. 1, pp. 5–32, 2001.

- T. Chen and C. Guestrin, “XGBoost: A scalable tree boosting system,” in Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Discovery Data Mining (KDD), San Francisco, CA, USA, 2016, pp. 785–794.

- F. T. Liu, K. M. Ting, and Z.-H. Zhou, “Isolation forest,” in Proc. 8th IEEE Int. Conf. Data Mining (ICDM), Pisa, Italy, 2008, pp. 413–422.

- I. Goodfellow, Y. Bengio, and A. Courville, Deep Learning. Cambridge, MA, USA: MIT Press, 2016.

- S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural Comput., vol. 9, no. 8, pp. 1735–1780, 1997.

- H. He and E. A. Garcia, “Learning from imbalanced data,” IEEE Trans. Knowl. Data Eng., vol. 21, no. 9, pp. 1263–1284, Sept. 2009.

- C. Sammut and G. Webb, Encyclopedia of Machine Learning. New York, NY, USA: Springer, 2010.

- R. J. Bolton and D. J. Hand, “Statistical fraud detection: A review,” Statist. Sci., vol. 17, no. 3, pp. 235–255, 2002.

- P. Kumar and A. Mehta, “Detection of financial fraud using ensemble learning techniques,” Int. J. Data Sci. Anal., vol. 12, no. 4, pp. 225–238, 2024.

- R. Patel, S. Sharma, and M. Das, “AI-based detection of income tax fraud using machine learning algorithms,” IEEE Access, vol. 13, pp. 11234–11245, 2025.

- A. Singh, R. Kaur, and P. Verma, “Unsupervised learning for identifying tax evasion patterns,” in Proc. IEEE Int. Conf. Inf. Technol. (ICIT), 2025, pp. 401–406.

- N. Gupta and R. Sharma, “Hybrid AI framework for automated tax risk assessment,” Expert Syst. Appl., vol. 238, p. 122076, 2025.

- V. Choudhary, A. Reddy, and M. Nair, “AI-driven predictive analytics for taxpayer behavior analysis,” J. Intell. Inf. Syst., vol. 58, no. 2, pp. 421–437, 2025.

- M. Das and T. Patel, “Intelligent tax fraud detection using XGBoost and random forest,” Appl. Soft Comput., vol. 154, p. 110020, 2025.

- J. Kaur and D. Verma, “Data mining approaches for detecting anomalies in tax returns,” Int. J. Comput. Appl., vol. 183, no. 5, pp. 12–18, 2025.

- K. Reddy and A. Iyer, “AI and ML approaches for financial fraud and tax evasion detection,” IEEE Trans. Comput. Soc. Syst., vol. 12, no. 1, pp. 67–78, 2025.

- P. Bansal and S. Rao, “Predictive modeling for tax evasion detection using deep learning,” Expert Syst. Appl., vol. 238, p. 121856, 2025.

- L. Deshmukh and V. Nair, “Automated tax compliance monitoring using AI techniques,” Int. J. Artif. Intell. Tools, vol. 34, no. 3, pp. 1–14, 2025.

- H. Zhang, Y. Li, and S. Xu, “Explainable AI for financial fraud detection,” IEEE Access, vol. 11, pp. 55278–55289, 2023.

- J. Brownlee, Machine Learning Mastery With Python. San Francisco, CA, USA: Machine Learning Mastery, 2020.

- M. Ribeiro, S. Singh, and C. Guestrin, “Why should I trust you? Explaining the predictions of any classifier,” in Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Discovery Data Mining (KDD), 2016, pp. 1135–1144.

- S. B. Kotsiantis, “Supervised machine learning: A review of classification techniques,” Informatica, vol. 31, pp. 249–268, 2007.

- A. Ng and M. Jordan, “On discriminative vs. generative classifiers: A comparison of logistic regression and naive Bayes,” Adv. Neural Inf. Process. Syst., vol. 14, pp. 841–848, 2002.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).