1. Introduction

Artificial Intelligence (AI), in its broadest sense, refers to the field of computer science focused on creating systems capable of performing tasks that typically require human intelligence. These tasks include learning from experience, reasoning, problem-solving, understanding natural language, and perceiving and interacting with the environment [1]. As AI technologies continue to proliferate across diverse sectors, their integration into mental health services has emerged as a significant area of inquiry [2,3].

Recent research has increasingly focused on AI chatbots as innovative tools for digital mental health interventions, where they are used as complementary tools to traditional therapy [4]. These chatbots use machine learning algorithms and natural language processing to interpret user input and generate contextually appropriate responses, enabling interactions that closely resemble human conversation [5]. AI chatbots offer 24/7 access to mental health resources, providing instant responses without the need to wait for appointments. They are also useful for people in remote or underserved areas where mental health professionals are scarce. Thus, AI chatbots offer a promising way to address several barriers commonly associated with traditional psychotherapy, including financial cost, limited availability of mental health professionals, and geographic or logistical constraints. Moreover, the anonymity and privacy afforded by chatbot platforms may reduce the stigma often linked to seeking mental health support [6,7].

Empirical studies investigating the effectiveness of AI-based interventions have yielded encouraging findings. For instance, a systematic review of AI-assisted psychotherapy for adults experiencing symptoms of depression and anxiety revealed significant improvements in depressive outcomes [8]. Specific applications such as Tess (Text-based Emotional Supporter by X2AI), which employs natural language processing to respond to emotional distress, have demonstrated reductions in both depression and anxiety symptoms [9]. Similarly, Greer et al. reported notable decreases in anxiety among users of “Vivibot”, an AI chatbot designed to provide mental health support [10]. Further evidence from Drouin et al. showed that participants who interacted with the AI chatbot “Replika” experienced lower levels of conversational anxiety compared to those who engaged with human counterparts, either face-to-face or online [11]. In another study, De Gennaro et al. found that individuals who received empathetic responses from an AI chatbot following a social exclusion task reported improved mood [12]. Additionally, usability testing and expert evaluations of an AI-based emotion chatbot indicated its particular usefulness for individuals who struggle with emotional expression in face-to-face interactions [13]. Moreover, users who regularly interacted with the “Wysa” chatbot reported significantly lower levels of depressive symptoms compared to those with minimal engagement [14]. Similarly, individuals using “Woebot” experienced notable reductions in depressive symptoms over a two-week period, whereas participants in a psychoeducational control group showed no significant change [15]. AI chatbots may be particularly well-suited for young adults, a demographic that frequently uses online messaging platforms with interfaces resembling those of chatbot applications [7]. Moreover, young adults have demonstrated high levels of satisfaction with digital mental health resources, suggesting that chatbot-based interventions may be both accessible and appealing to this population [16].

Although preliminary findings support the effectiveness of AI chatbots and highlight their potential utility, empirical research investigating individuals’ attitudes toward these technologies remains limited. Attitude is commonly defined as a psychological predisposition expressed through evaluative judgments, ranging from favorable to unfavorable, toward a specific entity [17]. It may also be conceptualized as an affective orientation toward or against a psychological object. Attitudes serve adaptive functions and are instrumental in shaping behavior [18]. Given that technological innovations often elicit distinct attitudinal responses, it is imperative to understand public perceptions of AI-based applications, particularly in domains where such technologies are actively being implemented [19]. Consequently, the accurate assessment of attitudes through psychometrically sound instruments is essential for predicting behavioral responses and informing the future trajectory of technological developments, such as AI-driven mental health interventions.

Several scales have been developed to measure attitudes towards AI as a general concept, such as the Attitudes Towards Artificial Intelligence Scale (ATTARI-12) [20], the Attitudes Towards Artificial Intelligence Scale (ATAI) [21], the General Attitudes Towards Artificial Intelligence Scale (GAAIS) [22], the Concerns About Autonomous Technology questionnaire [23], the Artificial Intelligence Attitude Scale (AIAS-4) [24], and the Artificial Intelligence Attitudes Inventory (AIAI) [25]. Additionally, several other scales have been developed to assess attitudes towards specific domains of AI, such as the Attitudes Towards Artificial Intelligence at Work (AAAW) [26], the Threats of Artificial Intelligence Scale (TAI) [27], the Artificial Intelligence Anxiety Scale (AIAS) [28], the Short Trust in Automation Scale (S-TIAS) [29], and the Attitude Scale Towards the Use of Artificial Intelligence Technologies in Nursing (ASUAITIN) [30].

Evaluating individuals’ attitudes toward applications of AI in mental health has become increasingly relevant for both research and practice. Given the well-established relationship between attitudinal dispositions and help-seeking intentions [31,32,33], rigorous investigation of user perceptions toward chatbot-assisted psychotherapy is critical for evaluating its viability as an empirically supported mental health treatment modality. In this context, measuring attitudes toward the use of AI-based chatbots for mental health support is highly important, especially as these technologies become more integrated into clinical and therapeutic settings.

However, to the best of our knowledge, there is no scale that measures individuals’ attitudes toward the use of AI-based chatbots for mental health support. The development of a psychometrically robust, concise measurement scale to assess attitudes toward AI-enabled chatbots in mental health applications would significantly advance empirical research and applied practices in evaluating and addressing public perceptions of artificial intelligence. Therefore, the aim of our study was to develop and validate a scale to measure attitudes toward the use of AI-based chatbots for mental health support, i.e., the Artificial Intelligence in Mental Health Scale.

2. Materials and Methods

2.1. Development of the Scale

AI chatbots for mental health support present distinct technical advantages that enhance their applicability in clinical and research contexts. These systems employ advanced natural language processing techniques to interpret user input and generate contextually appropriate responses, facilitating a conversational interface that mimics human interaction. Through machine learning algorithms, they adapt their responses to individual user behavior and interaction history, which can improve accuracy and relevance over time. Their continuous availability provides uninterrupted support, overcoming the temporal and geographical barriers inherent in traditional mental health services. Integration with data analytics frameworks allows real-time monitoring of behavioral indicators and early detection of psychological distress, thereby supporting preventive care strategies. Finally, cloud-based infrastructure can offer scalability, secure storage, and interoperability with electronic health record systems, provided that deployments comply with data protection regulations [19,34,35,36]. We therefore considered technical advantages a candidate factor for our scale and created items referring to the technical advantages of AI chatbots, such as “Artificial intelligence chatbots can demonstrate better problem-solving skills compared to a human therapist” and “Artificial intelligence chatbots can offer up to date information on mental health issues”.

Additionally, AI chatbots for mental health support offer significant personal benefits that enhance accessibility and user engagement. They provide immediate, on-demand support, reducing barriers such as scheduling constraints and geographic limitations commonly associated with traditional therapy. Their anonymity and privacy features foster a safe environment for individuals who may experience stigma or discomfort in seeking face-to-face care, thereby encouraging disclosure and emotional expression. Moreover, chatbots deliver personalized interventions by analyzing user input and tailoring responses to individual needs, which promotes a sense of relevance and trust. The non-judgmental nature of AI interactions further alleviates anxiety associated with human evaluation, making them particularly valuable for individuals with social apprehension [37,38,39,40,41]. We therefore considered personal advantages a second candidate factor for our scale and created items referring to the personal advantages of AI chatbots, such as “Artificial intelligence chatbots can expand access to mental health care by reducing geographic barriers” and “Artificial intelligence chatbots can expand access to mental health care by reducing financial barriers”.

Overall, we therefore expected a two-factor model for our scale (i.e., technical and personal advantages).

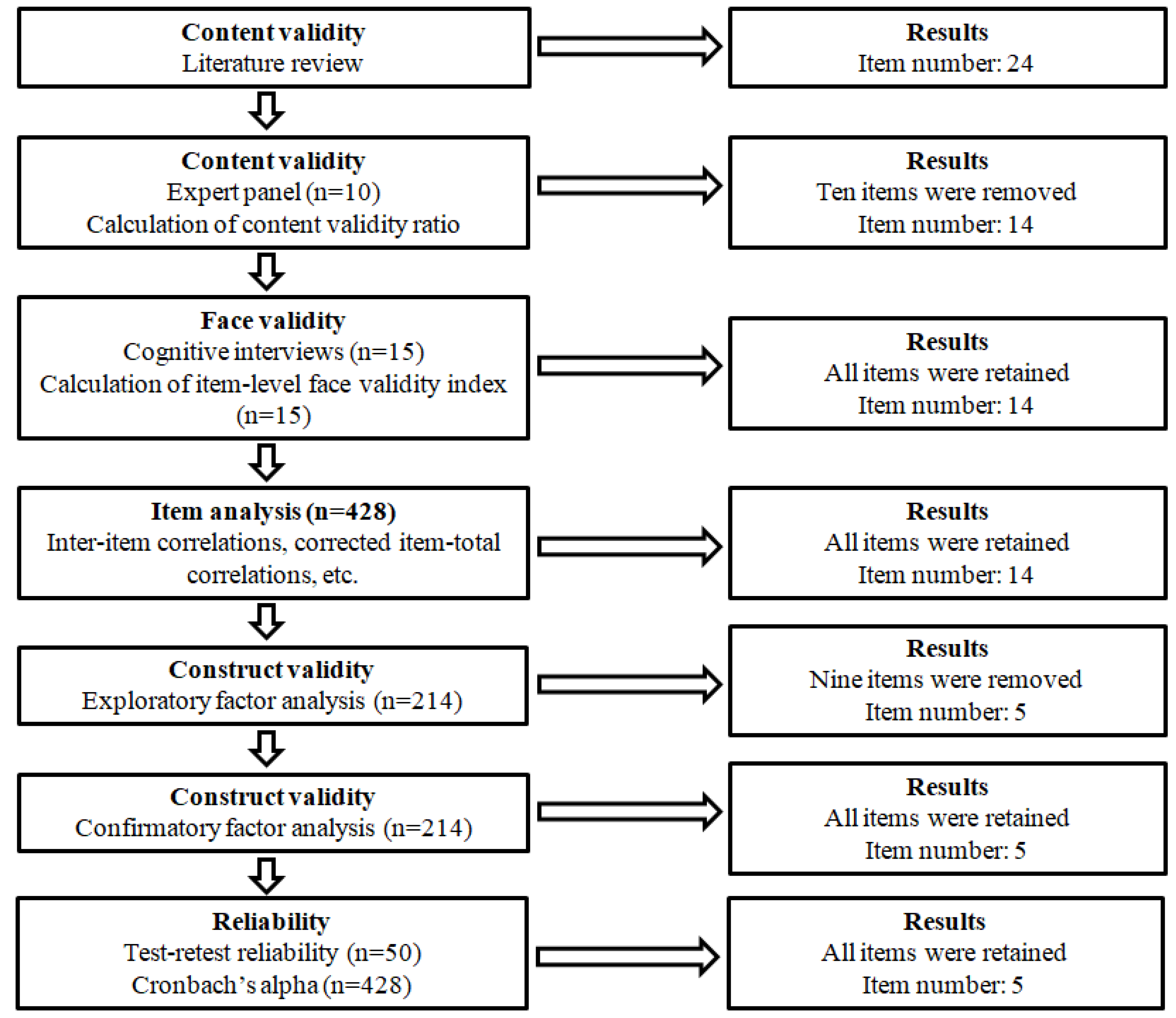

Figure 1 illustrates the process undertaken to develop and validate the Artificial Intelligence in Mental Health Scale (AIMHS). We followed established guidelines [42,43,44] to develop and validate the AIMHS. Initially, a comprehensive literature review was conducted to identify existing instruments, scales, and tools that assess attitudes towards AI [e.g., 20–22,24,26,28,29,45,46]. The relevance and applicability of items from these instruments were critically evaluated to inform the development of the AIMHS. Moreover, we examined instruments, scales, and tools that measure mental health issues [e.g., 47–54]. Based on this review, we generated an initial pool of 24 items to capture attitudes towards the use of AI chatbots for mental health support. The initial item pool was structured around the two hypothesized factors (i.e., technical and personal advantages) described above.

Subsequently, content validity was assessed by a panel of ten experts from diverse professional backgrounds, including AI experts, psychiatrists, psychologists, therapists, and digital health experts. The panel rated each of the initial 24 items as “not essential,” “useful but not essential,” or “essential.” The Content Validity Ratio (CVR) was calculated using the following formula, where N represents the total number of experts and n denotes the number who rated an item as “essential”:

$$\mathrm{CVR} = \frac{n - N/2}{N/2}$$

Following suggested guidelines [55], items with a CVR below 0.80 were excluded, resulting in the retention of 14 items.
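To make the CVR screening concrete, the following Python sketch computes CVR = (n − N/2)/(N/2) for a panel of N = 10 experts and applies the 0.80 cut-off. The item labels and "essential" counts are hypothetical; only the formula, the panel size, and the threshold come from the text.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe-style CVR: (n - N/2) / (N/2), ranging from -1 to +1."""
    half = n_experts / 2
    return (n_essential - half) / half


def retain_items(essential_counts: dict[str, int], n_experts: int,
                 cutoff: float = 0.80) -> list[str]:
    """Keep items whose CVR reaches the cut-off; items below it are excluded."""
    return [item for item, n in essential_counts.items()
            if content_validity_ratio(n, n_experts) >= cutoff]


# Hypothetical "essential" counts from a 10-member panel.
counts = {"item_a": 10, "item_b": 9, "item_c": 8, "item_d": 6}
print(retain_items(counts, n_experts=10))  # ['item_a', 'item_b']
```

With ten experts, an item needs at least nine "essential" ratings to reach a CVR of 0.80, because eight ratings give only (8 − 5)/5 = 0.60.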

To evaluate face validity, cognitive interviews [56] were conducted with 15 participants, all of whom demonstrated clear comprehension of the items. Face validity was further assessed with the item-level face validity index, based on ratings from 15 participants on a four-point Likert scale (1 = not clear to 4 = highly clear). In accordance with suggested guidelines [57], items with an item-level face validity index above 0.80 were retained. All 14 items met this criterion, with item-level face validity indices ranging from 0.933 to 1.000.
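The following is a minimal sketch of the item-level face validity index. It assumes the common convention that a rating of 3 or 4 on the four-point clarity scale counts as "clear", which the text does not state explicitly. Under this assumption, 14 clear ratings out of 15 give 0.933, the lowest value reported above.

```python
def item_face_validity_index(ratings: list[int]) -> float:
    """Proportion of raters scoring an item 3 or 4 on a 1-4 clarity scale.

    Assumption: ratings of 3 and 4 count as "clear"; 1 and 2 do not.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 4")
    return sum(r >= 3 for r in ratings) / len(ratings)


# Hypothetical ratings from 15 participants for a single item.
ratings = [4] * 10 + [3] * 4 + [2]
fvi = item_face_validity_index(ratings)
print(round(fvi, 3), fvi > 0.80)  # 0.933 True
```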

After the scale’s initial development phase, it comprised 14 items designed to assess attitudes towards the use of AI chatbots for mental health support. Responses were recorded on a five-point Likert scale as follows: 1 (strongly disagree), 2 (disagree), 3 (neither disagree nor agree), 4 (agree), 5 (strongly agree).

Supplementary Table S1 shows the 14 items retained after the assessment of the content and face validity of the AIMHS. To reduce acquiescence bias, we included both positively and negatively worded items about AI chatbot use: seven positive items and seven negative items. Examples of positive items were the following: (1) Artificial intelligence chatbots can demonstrate sufficient social and cultural sensitivity to support diverse populations, (2) Artificial intelligence chatbots can demonstrate better problem-solving skills compared to a human therapist, and (3) Artificial intelligence chatbots can expand access to mental health care by reducing geographic barriers. Examples of negative items were the following: (1) Artificial intelligence chatbots cannot understand people’s emotions, (2) Artificial intelligence chatbots cannot provide correct information on mental health issues, and (3) Artificial intelligence chatbots cannot achieve empathy levels comparable to those of a human therapist.

2.2. Participants and Procedure

The study sample consisted of adults aged 18 years or older. Inclusion criteria required participants to engage with AI chatbots, social media platforms, or websites for a minimum of 30 minutes per day, thereby ensuring a basic level of interaction with digital technologies. Data collection was conducted through an anonymous online survey, developed via Google Forms and disseminated across multiple digital platforms, including Facebook, Instagram, and LinkedIn. To enhance recruitment, a promotional video was additionally produced and shared on TikTok. Data collection took place in October 2025.

In total, 428 individuals completed the questionnaire. Of these, 77.6% (n = 332) were female and 22.4% (n = 96) were male. The mean age of participants was 41.1 years (standard deviation [SD] = 14.1), with a median of 45 years (range: 18–65). The reported mean daily use of AI chatbots, social media platforms, and websites was 3.5 hours (SD = 2.6), with a median of 3 hours (range: 30 minutes–14 hours).

2.3. Item Analysis

After the scale’s initial development phase, an item analysis was carried out on the 14 generated items. This analysis, based on the full sample (n = 428), examined inter-item correlations, corrected item-total correlations, floor and ceiling effects, skewness, kurtosis, and Cronbach’s alpha (calculated when each individual item was removed) for the 14 items [58]. Inter-item correlations were compared against the recommended range of 0.15 to 0.75 [59], while corrected item-total correlations needed to exceed 0.30 to confirm sufficient discriminatory power [60].

Each item was rated on a five-point Likert scale with anchors: strongly disagree, disagree, neither disagree nor agree, agree, strongly agree. Floor and ceiling effects were identified if over 85% of respondents chose either the lowest (“strongly disagree”) or highest (“strongly agree”) option [61]. Distribution normality was evaluated using skewness (acceptable between -2 and +2) and kurtosis (acceptable between -3 and +3) [62].
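The distributional checks above can be sketched per item as follows. The skewness and kurtosis formulas are the bias-adjusted sample estimators (G1 and excess kurtosis G2). We assume these match what SPSS reports, but this is not confirmed in the text. The thresholds are the ones stated above.

```python
from math import sqrt


def floor_ceiling(responses: list[int], low: int = 1,
                  high: int = 5) -> tuple[float, float]:
    """Share of respondents choosing the lowest and the highest option."""
    n = len(responses)
    return responses.count(low) / n, responses.count(high) / n


def skewness_kurtosis(x: list[float]) -> tuple[float, float]:
    """Bias-adjusted sample skewness (G1) and excess kurtosis (G2).

    Requires at least four observations and non-constant data.
    """
    n = len(x)
    if n < 4:
        raise ValueError("need at least four observations")
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    g1 = m3 / m2 ** 1.5
    g2 = m4 / m2 ** 2 - 3
    skew = sqrt(n * (n - 1)) / (n - 2) * g1
    kurt = ((n + 1) * g2 + 6) * (n - 1) / ((n - 2) * (n - 3))
    return skew, kurt


def item_flags(responses: list[int]) -> dict[str, bool]:
    """Apply the item-analysis thresholds to one item's responses."""
    floor, ceiling = floor_ceiling(responses)
    skew, kurt = skewness_kurtosis(responses)
    return {
        "floor_effect": floor > 0.85,
        "ceiling_effect": ceiling > 0.85,
        "skewness_ok": -2 <= skew <= 2,
        "kurtosis_ok": -3 <= kurt <= 3,
    }


# Hypothetical responses to one item.
print(item_flags([1, 2, 2, 3, 3, 3, 4, 4, 5, 5]))
```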

An introductory note, presented in Supplementary Table S1, was provided to participants to describe and clarify the role of AI chatbots in mental health support. We asked participants to assess their attitudes towards AI chatbots for mental health support by answering the AIMHS.

2.4. Construct Validity

To evaluate the construct validity of the AIMHS, we applied both exploratory factor analysis (EFA) and confirmatory factor analysis (CFA). According to the literature, EFA requires a minimum of either 50 participants or five participants per item [63], while CFA typically requires at least 200 participants [58]. Our sample size met these criteria. Participants were randomly split into two groups: 214 participants for the EFA and another 214 for the CFA. Using independent subsamples meant that the factor model was confirmed on data other than those from which it was derived, and both subsamples exceeded the recommended thresholds.
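A random split into two equal, non-overlapping subsamples takes only a few lines. In this sketch the participant IDs and the seed value are hypothetical. Fixing the seed makes the split reproducible, which helps when the EFA and CFA subsamples must be reconstructed later.

```python
import random


def split_half(ids: list[int], seed: int = 2025) -> tuple[list[int], list[int]]:
    """Randomly partition participants into two equal, non-overlapping groups."""
    shuffled = ids[:]                    # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical participant IDs 1..428.
efa_ids, cfa_ids = split_half(list(range(1, 429)))
print(len(efa_ids), len(cfa_ids))  # 214 214
```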

We began with an EFA to identify the underlying factor structure of the AIMHS, followed by a CFA to test the factor model suggested by the EFA. This stage included the 14 items that remained after the scale’s initial development and item analysis. Before conducting the EFA, data adequacy was confirmed using the Kaiser-Meyer-Olkin (KMO) index and Bartlett’s test of sphericity. Standard acceptability thresholds were applied: KMO values above 0.70 and a statistically significant Bartlett’s test (p < 0.05) [62,64]. For the EFA, we used oblique rotation (the direct oblimin method in SPSS), because this method allows the factors to correlate and we expected some correlation between them [58]. Indeed, the two factors that emerged were significantly correlated (r = 0.5, p < 0.001). Because the data were normally distributed, we used the maximum likelihood estimator [58]. We applied the following acceptability criteria: (a) eigenvalues greater than 1, (b) factor loadings above 0.60, (c) communalities exceeding 0.40, and (d) total explained variance over 65% [62]. Reliability was further tested using Cronbach’s alpha, with coefficients above 0.70 considered satisfactory [65].
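Criteria (b) and (c) amount to a simple filter over the rotated EFA output. The sketch below is hypothetical: the item labels and numbers are illustrative, and the loadings and communalities are assumed to be read from the software output rather than computed here.

```python
def items_to_drop(solution: dict[str, tuple[float, float]],
                  min_loading: float = 0.60,
                  min_communality: float = 0.40) -> list[str]:
    """Flag items that fail criterion (b) or (c).

    `solution` maps an item label to (largest absolute pattern loading,
    communality). An item is kept only if its loading is above
    `min_loading` AND its communality is above `min_communality`.
    """
    return [item for item, (loading, h2) in solution.items()
            if abs(loading) <= min_loading or h2 <= min_communality]


# Hypothetical EFA output for three items.
efa = {"q1": (0.55, 0.38), "q2": (0.81, 0.66), "q3": (0.62, 0.35)}
print(items_to_drop(efa))  # ['q1', 'q3']
```

Removing items changes the remaining loadings, so in practice the EFA is re-run after each round of removals until every retained item passes.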

Subsequently, a CFA was conducted to confirm the AIMHS factor structure. Model fit was assessed through several indices: absolute fit measures (Root Mean Square Error of Approximation [RMSEA] and Goodness of Fit Index [GFI]), relative fit measures (Normed Fit Index [NFI] and Comparative Fit Index [CFI]), and one parsimonious fit measure (the ratio of chi-square to degrees of freedom [χ²/df]). Acceptable thresholds were RMSEA < 0.10, GFI > 0.90, NFI > 0.90, CFI > 0.90, and χ²/df < 5 [66,67]. Additionally, standardized regression weights between items and factors were calculated.
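SEM software such as AMOS reports these indices directly. Still, RMSEA and χ²/df can be cross-checked from the model chi-square, its degrees of freedom, and the sample size. The sketch below assumes the common N − 1 convention for RMSEA, although some programs use N instead. GFI, NFI, and CFI require the baseline and model-implied covariance matrices, so they are passed in as reported values. The example model is hypothetical.

```python
from math import sqrt


def cfa_degrees_of_freedom(n_indicators: int, n_free_params: int) -> int:
    """df = unique variances/covariances of the indicators minus free parameters."""
    return n_indicators * (n_indicators + 1) // 2 - n_free_params


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate, using the N - 1 convention."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def fit_checks(chi2: float, df: int, n: int,
               gfi: float, nfi: float, cfi: float) -> dict[str, bool]:
    """Compare fit indices with the cut-offs stated in the Methods."""
    return {
        "RMSEA < 0.10": rmsea(chi2, df, n) < 0.10,
        "GFI > 0.90": gfi > 0.90,
        "NFI > 0.90": nfi > 0.90,
        "CFI > 0.90": cfi > 0.90,
        "chi2/df < 5": chi2 / df < 5,
    }


# Hypothetical model: 6 indicators, 13 free parameters, N = 250.
df = cfa_degrees_of_freedom(6, 13)  # 21 - 13 = 8
print(df, round(rmsea(20.0, df, 250), 3))
print(fit_checks(20.0, df, 250, gfi=0.97, nfi=0.95, cfi=0.97))
```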

Moreover, we assessed measurement invariance across demographic subgroups, specifically by gender, age, and daily use of AI chatbots, social media platforms, and websites. Participants were divided into two groups for age and usage time based on median values. Configural invariance was tested first, followed by metric invariance. For configural invariance, we evaluated RMSEA, GFI, NFI, and CFI, with acceptable thresholds defined as RMSEA < 0.10 and GFI, NFI, and CFI > 0.90. For metric invariance, the model with factor loadings constrained to be equal across groups was compared with the configural model, and a p-value greater than 0.05 for this comparison indicated invariance [68].
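Metric invariance is commonly tested with a chi-square difference test between the nested configural and metric models. We assume that is the comparison behind the p-value described above. The stdlib-only sketch below uses the closed-form chi-square tail probability for integer degrees of freedom. The fit statistics in the example are hypothetical, not the study's values.

```python
from math import erfc, exp, factorial, pi, sqrt


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        half = x / 2
        return exp(-half) * sum(half ** i / factorial(i) for i in range(df // 2))
    # Odd df: two-sided normal tail plus a finite series in sqrt(x).
    total = erfc(sqrt(x / 2))
    term = sqrt(2 * x / pi) * exp(-x / 2)
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return total


def metric_invariance_p(chi2_configural: float, df_configural: int,
                        chi2_metric: float, df_metric: int) -> float:
    """p-value of the chi-square difference between nested configural and metric models."""
    delta_chi2 = max(chi2_metric - chi2_configural, 0.0)
    delta_df = df_metric - df_configural
    return chi2_sf(delta_chi2, delta_df)


# Hypothetical two-group fit statistics.
p = metric_invariance_p(chi2_configural=12.0, df_configural=8,
                        chi2_metric=15.0, df_metric=11)
print(round(p, 3), "invariant" if p > 0.05 else "not invariant")  # p is about 0.39
```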

2.5. Concurrent Validity

Following the construct validity assessment, a two-factor, five-item model was established for the AIMHS. Each item was rated on a five-point Likert scale ranging from “strongly disagree” to “strongly agree”, and scores were assigned so that higher values reflected more positive attitudes towards AI chatbots. Positive items were scored as follows: 1 for “strongly disagree”, 2 for “disagree”, 3 for “neither disagree nor agree”, 4 for “agree”, and 5 for “strongly agree”. Negative items were reverse-scored: 5 for “strongly disagree”, 4 for “disagree”, 3 for “neither disagree nor agree”, 2 for “agree”, and 1 for “strongly agree”. The total AIMHS score is the sum of the item scores divided by the number of items, so it ranges from 1 to 5. Each factor score is calculated in the same way from the items of that factor and also ranges from 1 to 5. Higher scores indicate more positive attitudes towards AI mental health chatbots.
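The scoring rule can be sketched as follows. The item keys are hypothetical, because the text lists the wording of the five retained items but not their questionnaire codes. Judging from that wording, we assume only the empathy item ("cannot achieve empathy levels ...") is negatively worded and therefore reverse-scored as 6 minus the response.

```python
# Hypothetical item keys; the grouping follows the two factors named in the text.
FACTORS = {
    "technical_advantages": ["empathy", "problem_solving"],
    "personal_advantages": ["geographic", "availability_24_7", "financial"],
}
NEGATIVE_ITEMS = {"empathy"}  # assumed to be the only negatively worded item


def item_score(item: str, response: int) -> int:
    """Map a 1-5 response so that higher always means a more positive attitude."""
    if not 1 <= response <= 5:
        raise ValueError("responses must be on the 1-5 scale")
    return 6 - response if item in NEGATIVE_ITEMS else response


def score_aimhs(responses: dict[str, int]) -> dict[str, float]:
    """Factor scores and total score, each the mean of its item scores (range 1-5)."""
    scores = {}
    for factor, items in FACTORS.items():
        scores[factor] = sum(item_score(i, responses[i]) for i in items) / len(items)
    all_items = [i for items in FACTORS.values() for i in items]
    scores["total"] = sum(item_score(i, responses[i]) for i in all_items) / len(all_items)
    return scores


# A respondent who answers "strongly agree" (5) to every item.
print(score_aimhs({k: 5 for items in FACTORS.values() for k in items}))
# {'technical_advantages': 3.0, 'personal_advantages': 5.0, 'total': 4.2}
```

Averaging instead of summing keeps the factor and total scores on the same 1 to 5 metric as the response options, even though the factors contain different numbers of items.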

Because no other scale measures attitudes towards the use of AI chatbots for mental health support, we assessed the concurrent validity of the AIMHS against three related scales: the Artificial Intelligence Attitude Scale (AIAS-4) [24], the Attitudes Towards Artificial Intelligence Scale (ATAI) [21], and the Short Trust in Automation Scale (S-TIAS) [29].

We used the AIAS-4 [24] to measure general attitude toward AI. The AIAS-4 includes four items, such as “I believe that artificial intelligence will improve my life” and “I think artificial intelligence technology is positive for humanity”. Answers are on a 10-point Likert scale from 1 (not at all) to 10 (completely agree). The total score is the average of all item scores and ranges from 1 to 10. Higher scores indicate more positive attitudes towards AI. We used the valid Greek version of the AIAS-4 [69]. In our study, Cronbach’s alpha for the AIAS-4 was 0.917. We expected a positive correlation between the AIAS-4 and the AIMHS.

We used the ATAI [21] to measure acceptance and fear of AI. The ATAI includes five items: two items measure acceptance of AI, and three items measure fear of AI. The two items for the acceptance of AI are the following: “I trust artificial intelligence” and “Artificial intelligence will benefit humankind”. The three items for the fear of AI are the following: “I fear artificial intelligence”, “Artificial intelligence will destroy humankind” and “Artificial intelligence will cause many job losses”. Answers are on an 11-point Likert scale from 0 (strongly disagree) to 10 (strongly agree). Each subscale score is the average of its item scores and ranges from 0 to 10. Higher scores in the acceptance subscale indicate higher levels of acceptance of AI, and, thus, more positive attitudes towards AI. Higher scores in the fear subscale indicate higher levels of fear of AI, and, thus, more negative attitudes towards AI. We used the valid Greek version of the ATAI [70]. In our study, Cronbach’s alpha was 0.738 for the acceptance subscale and 0.721 for the fear subscale. We expected a positive correlation between the acceptance subscale and the AIMHS, and a negative correlation between the fear subscale and the AIMHS.

We used the S-TIAS [29] to measure trust in AI. The S-TIAS includes three items: “I am confident in the artificial intelligence assistant”, “The artificial intelligence assistant is reliable”, and “I can trust the AI assistant”. Answers are on a 7-point Likert scale from 1 (not at all) to 7 (extremely). The total score is the average of all item scores and ranges from 1 to 7. Higher scores indicate higher levels of trust in AI, and, thus, more positive attitudes towards AI. We used the valid Greek version of the S-TIAS [71]. In our study, Cronbach’s alpha for the S-TIAS was 0.912. We expected a positive correlation between the S-TIAS and the AIMHS.

2.6. Reliability

To assess the internal consistency of the AIMHS, we computed Cronbach’s alpha using the full sample (n = 428). Values above 0.70 for Cronbach’s alpha were regarded as acceptable [65]. We also examined corrected item-total correlations and Cronbach’s alpha if an item was deleted for the final five-item version of the AIMHS, with corrected item-total correlations above 0.30 considered satisfactory [60].
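For reference, Cronbach's alpha is k/(k − 1) × (1 − Σ item variances / variance of total scores). Alpha-if-item-deleted simply recomputes it with each item left out in turn. This sketch uses sample variances and assumes respondent rows by item columns; it is a cross-check, not the SPSS routine used in the study.

```python
from statistics import variance


def cronbach_alpha(data: list[list[float]]) -> float:
    """Cronbach's alpha for respondent rows x item columns (sample variances)."""
    k = len(data[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance(col) for col in zip(*data)]
    total_var = variance([sum(row) for row in data])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_deleted(data: list[list[float]]) -> list[float]:
    """Alpha recomputed with each item removed in turn."""
    k = len(data[0])
    return [cronbach_alpha([row[:i] + row[i + 1:] for row in data]) for i in range(k)]


# Hypothetical responses: 5 respondents x 3 items.
responses = [[4, 5, 4], [2, 3, 2], [3, 3, 4], [5, 4, 5], [1, 2, 2]]
print(round(cronbach_alpha(responses), 3))
print([round(a, 3) for a in alpha_if_deleted(responses)])
```

An item whose removal raises alpha above the full-scale value would be a candidate for review.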

In addition, a test-retest reliability study was carried out with 50 participants who completed the AIMHS twice within a five-day interval. For the five items, Cohen’s kappa was calculated due to the five-point ordinal response format, while a two-way mixed intraclass correlation coefficient (absolute agreement) was used to evaluate the total AIMHS score.
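The two test-retest statistics can be sketched as follows. Cohen's kappa here is the unweighted version. The ICC is the single-measure, two-way, absolute-agreement form, ICC(A,1) in McGraw and Wong's notation, with k = 2 administrations. The text does not say whether single or average measures were reported; the average-measures variant would be (MSR − MSE)/(MSR + (MSC − MSE)/n). Data shapes and names are illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(first: list[int], second: list[int]) -> float:
    """Unweighted Cohen's kappa for test and retest answers to one item."""
    if len(first) != len(second) or not first:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(first)
    observed = sum(a == b for a, b in zip(first, second)) / n
    c1, c2 = Counter(first), Counter(second)
    expected = sum(c1[cat] * c2[cat] for cat in c1) / n ** 2
    return (observed - expected) / (1 - expected)  # undefined if expected == 1


def icc_absolute_agreement(pairs: list[tuple[float, float]]) -> float:
    """Single-measure, two-way, absolute-agreement ICC(A,1) with k = 2 occasions."""
    n, k = len(pairs), 2
    grand = sum(sum(p) for p in pairs) / (n * k)
    row_means = [sum(p) / k for p in pairs]
    col_means = [sum(p[j] for p in pairs) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for p in pairs for x in p)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


print(cohens_kappa([1, 1, 2, 2], [1, 2, 2, 2]))              # 0.5
print(icc_absolute_agreement([(1, 2), (2, 3), (3, 4)]))       # systematic shift lowers agreement
```

The second example shows why absolute agreement matters: scores that shift by a constant between administrations are perfectly consistent, yet they do not agree perfectly.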

2.7. Ethical Issues

The study used an anonymous and voluntary data collection process. Before taking part, participants were fully informed about the research aims and procedures and subsequently provided informed consent. Ethical approval was granted by the Institutional Review Board of the Faculty of Nursing, National and Kapodistrian University of Athens (Protocol Approval #01, September 14, 2025). All procedures adhered to the ethical standards of the Declaration of Helsinki [72].

2.8. Statistical Analysis

Categorical variables are described using absolute frequencies (n) and percentages, while continuous variables are summarized with measures of central tendency (mean, median) and dispersion (standard deviation, and range from minimum to maximum). Normality of scale distributions was evaluated using both a statistical test (Kolmogorov-Smirnov) and graphical methods (Q-Q plots). As all scales showed a normal distribution, correlations between them were assessed with Pearson’s correlation coefficients. P-values less than 0.05 were considered statistically significant. We performed CFA with AMOS version 21 (Amos Development Corporation, 2018). All other analyses were conducted with IBM SPSS 28.0 (IBM Corp. Released 2021. IBM SPSS Statistics for Windows, Version 28.0. Armonk, NY: IBM Corp.).

3. Results

3.1. Item Analysis

Item analysis included the 14 items developed during the initial phase of the AIMHS. All items demonstrated acceptable psychometric properties, including corrected item–total correlations, inter-item correlations, floor and ceiling effects, skewness, and kurtosis (Table 1). The internal consistency of the scale was high, with a Cronbach’s alpha of 0.888, which decreased upon removal of any single item.

Supplementary Table S2 provides the inter-item correlation matrix for the 14 items.

3.2. Exploratory Factor Analysis

The Kaiser-Meyer-Olkin index was 0.861, and Bartlett’s test of sphericity was significant (p < 0.001), confirming sample adequacy for EFA. An oblique rotation (direct oblimin in SPSS) was applied to the 14 items (Table 1). Results of the initial EFA are presented in Table 2. This analysis yielded three factors explaining 59.758% of the variance, and seven items (#1, #2, #5, #10, #11, #13, and #14) exhibited inadequate factor loadings (< 0.60) and/or communalities (< 0.40). A second EFA was conducted after removing these items. The KMO index was 0.766, and Bartlett’s test remained significant (p < 0.001).

Table 3 summarizes these results: two items (#3 and #12) showed insufficient loadings and/or communalities, and the second EFA identified two factors accounting for 66.199% of the variance. A third EFA was then performed after excluding items #3 and #12. The KMO index was 0.708, and Bartlett’s test was significant (p < 0.001). In this third model, all remaining items (#4, #6, #7, #8, and #9) had factor loadings above 0.649 and communalities above 0.415, and the two factors explained 81.280% of the variance. The third model (Table 4) was therefore the optimal structure derived from the EFA.

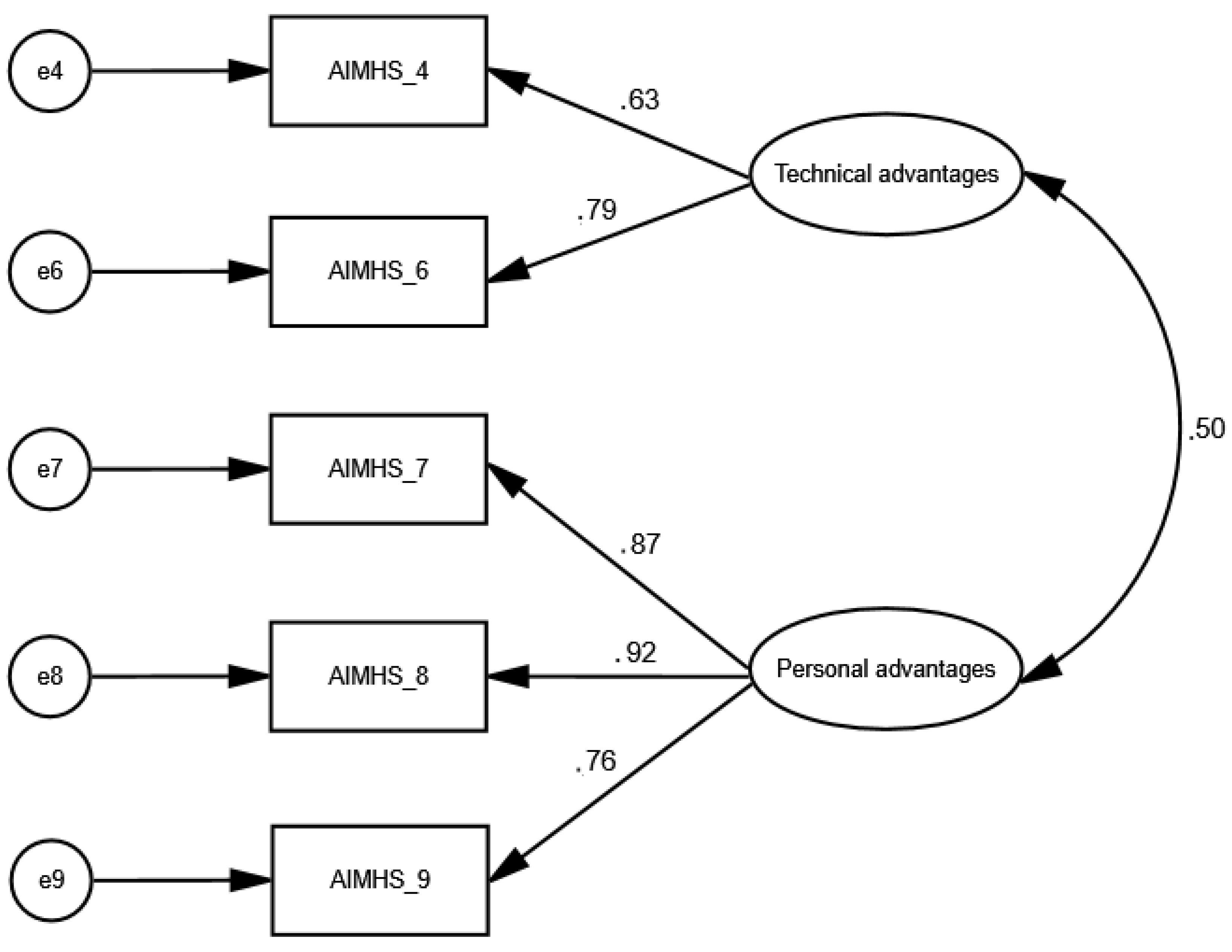

Overall, the EFA identified a two-factor, five-item model for the AIMHS. Based on the items they contained, the factors were named “technical advantages” and “personal advantages”. In particular, the factor “technical advantages” included the items “Artificial intelligence chatbots cannot achieve empathy levels comparable to those of a human therapist” and “Artificial intelligence chatbots can demonstrate better problem-solving skills compared to a human therapist”. The factor “personal advantages” included the items “Artificial intelligence chatbots can expand access to mental health care by reducing geographic barriers”, “Artificial intelligence chatbots can expand access to mental health care by providing continuous access (24/7 availability)”, and “Artificial intelligence chatbots can expand access to mental health care by reducing financial barriers”.

Cronbach’s alpha for the AIMHS (third model, Table 4) was 0.798, while it was 0.728 for the factor “technical advantages” and 0.881 for the factor “personal advantages”.

3.3. Confirmatory Factor Analysis

Confirmatory factor analysis was conducted to validate the factor structure of the AIMHS identified through EFA (Figure 2). The goodness-of-fit indices indicated that the two-factor, five-item model demonstrated an excellent fit to the data: χ²/df = 1.485, RMSEA = 0.048 (90% confidence interval = 0.0001 to 0.122), GFI = 0.989, NFI = 0.987, and CFI = 0.996. Additionally, standardized regression weights between items and factors ranged from 0.63 to 0.92 (p < 0.001 in all cases). The two factors were significantly correlated (r = 0.5, p < 0.001).
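As a consistency check, the reported χ²/df and RMSEA can be related through the model's degrees of freedom. Neither the χ² value nor the df is reported, so the following worked calculation rests on assumptions. We assume the default identification of a two-factor, five-indicator model (p = 5 indicators; q = 11 free parameters: 3 free loadings with one loading per factor fixed to 1, 5 error variances, 2 factor variances, and 1 factor covariance) and the CFA subsample size N = 214. Under these assumptions, the reported values are mutually consistent:

```latex
\begin{aligned}
df &= \frac{p(p+1)}{2} - q = \frac{5 \times 6}{2} - 11 = 4,\\
\chi^2 &\approx 1.485 \times 4 \approx 5.94,\\
\mathrm{RMSEA} &= \sqrt{\frac{\max(\chi^2 - df,\ 0)}{df\,(N-1)}}
  = \sqrt{\frac{5.94 - 4}{4 \times 213}} \approx 0.048.
\end{aligned}
```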

In conclusion, EFA and CFA identified a two-factor five-item model for the AIMHS. As we described above in detail, the two factors were named “technical advantages” and “personal advantages”.

3.4. Measurement Invariance

Table 5 presents the results of the configural measurement invariance analysis for the two-factor five-item CFA model across gender, age, and daily use of AI chatbots, social media platforms, and websites. The RMSEA, GFI, NFI, and CFI indices for these demographic groups indicated that the two-factor CFA model demonstrated very good fit for all comparisons, including females versus males, younger versus older participants, and those with lower versus higher daily use of AI chatbots, social media platforms, and websites. Furthermore, metric invariance was supported, as the p-values for gender (0.597), age (0.204), and daily use of AI chatbots, social media platforms, and websites (0.261) exceeded the 0.05 threshold.

3.5. Concurrent Validity

We found a positive correlation between the AIAS-4 and the AIMHS (r = 0.405, p-value < 0.001), suggesting that participants with more positive attitudes towards AI have more positive attitudes towards AI mental health chatbots.

Moreover, we found a positive correlation between the acceptance subscale of the ATAI and the AIMHS (r = 0.401, p-value < 0.001), suggesting that participants with higher levels of acceptance of AI have more positive attitudes towards AI mental health chatbots. On the other hand, we found a negative correlation between the fear subscale of the ATAI and the AIMHS (r = -0.151, p-value = 0.002), suggesting that participants with higher levels of fear of AI have more negative attitudes towards AI mental health chatbots.

Additionally, we found a positive correlation between the S-TIAS and the AIMHS (r = 0.450, p-value < 0.001), suggesting that participants with higher levels of trust in AI have more positive attitudes towards AI mental health chatbots.

Therefore, these findings support the concurrent validity of the AIMHS.

Table 6 shows the correlations between the AIMHS and the AIAS-4, the ATAI, and the S-TIAS.

3.6. Reliability

Cronbach’s alpha for the AIMHS was 0.798, for the factor “technical advantages” was 0.728, and for the factor “personal advantages” was 0.881.

Corrected item-total correlations for the five items ranged between 0.451 and 0.734, while removal of any single item did not increase Cronbach’s alpha for the AIMHS (Supplementary Table S4).

Cohen’s kappa for the five items ranged from 0.760 to 0.848 (p < 0.001 in all cases; Supplementary Table S5).

The intraclass correlation coefficient for the AIMHS was 0.938 (95% confidence interval: 0.866 to 0.968; p < 0.001).

Overall, the AIMHS demonstrated good to excellent reliability.

4. Discussion

According to the World Health Organization (WHO), the global prevalence of mental disorders continues to rise, posing a significant public health challenge. Recent estimates indicate that approximately one in five individuals worldwide (nearly 1.7 billion people) are currently living with a mental disorder, with depressive and anxiety disorders being the most common [73]. Depression affects about 5% of adults globally and is a leading cause of disability, while anxiety disorders affect roughly 4% of adults [74]. The burden of these conditions is particularly pronounced in low- and middle-income countries, where access to mental health care remains limited and comorbidity between depression and anxiety disorders is frequent [73]. These data underscore the urgent need for scalable, evidence-based interventions and systemic reforms to strengthen mental health services worldwide.

In this context, AI chatbots appear to be valuable as a first line of support and as a self-help tool [8,9,10,11,12,13]. Usage of mental health chatbots and virtual therapists increased by 320% between 2020 and 2022. By 2023, around 84% of mental health professionals had either adopted or considered integrating AI tools into their work, and by 2032 nearly all of them (99%) are expected to use AI technologies in their everyday practice [75]. This surge reflects a broader trend toward digital mental health solutions, driven by accessibility, affordability, and the demand for immediate support. Market projections indicate continued expansion, with the mental health chatbot sector expected to grow from USD 380 million in 2025 to USD 1.65 billion by 2033, a compound annual growth rate of approximately 20% [76].
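The growth rate quoted above follows from the compound annual growth rate formula, CAGR = (end value / start value)^(1/years) − 1. The short check below reproduces it. It assumes eight compounding years between 2025 and 2033, as stated in the text, and the helper name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted in the text (USD); 2025 -> 2033 is 8 compounding years.
rate = cagr(380e6, 1.65e9, 2033 - 2025)
print(f"{rate:.1%}")  # prints 20.1%
```

The result, about 20.1% per year, is consistent with the "approximately 20%" reported.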

Since AI-based chatbots are increasingly being used in mental health support as complementary tools to traditional therapy, there is an urgent need for valid instruments that measure attitudes toward their use. To the best of our knowledge, no existing scale measures individuals’ attitudes toward the use of AI chatbots for mental health support. Therefore, we developed and validated the Artificial Intelligence in Mental Health Scale (AIMHS) for this purpose. Our comprehensive assessment of validity and reliability indicates that the AIMHS has strong psychometric properties as a measure of attitudes toward the use of AI-based chatbots for mental health support. Comprising five items and requiring only a few minutes to complete, the AIMHS is concise and user-friendly while maintaining robust measurement properties.

We adhered to the recommended guidelines [42,43,44] for the development and validation of the AIMHS. Following an extensive review of the literature on instruments, scales, and tools that assess attitudes towards AI and mental health issues, we initially generated 24 items intended to measure attitudes towards AI mental health chatbots. The content validity of these items was evaluated using the content validity ratio, resulting in the removal of 10 items and leaving 14 items for further analysis. Face validity was assessed through cognitive interviews and the calculation of the item-level face validity index, after which all 14 items advanced to the next stage of evaluation. Item analysis then showed adequate indices for all 14 items, which were therefore retained for construct validity testing. In the exploratory factor analysis (EFA), nine items exhibited unacceptable factor loadings and communalities and were removed. The remaining five items showed satisfactory values and, as expected, loaded on two factors: technical advantages and personal advantages. Confirmatory factor analysis further supported this two-factor, five-item structure. To assess concurrent validity, we used three validated scales: the AIAS-4, the ATAI, and the S-TIAS. The AIMHS correlated significantly with all three scales, supporting its concurrent validity.
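The content- and face-validity screening described above rests on two simple ratios. The minimal sketch below assumes Lawshe’s formula for the content validity ratio, CVR = (nₑ − N/2)/(N/2), where nₑ is the number of experts rating an item "essential" and N is the panel size. For the item-level face validity index, it assumes the convention of counting ratings of 3 or 4 on a 4-point clarity scale as acceptable. The helper names, item labels, panel size, and critical value are hypothetical. The critical CVR for a real panel should be taken from a published table such as Ayre and Scally’s.

```python
def content_validity_ratio(n_essential, n_panel):
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1 to +1."""
    half = n_panel / 2
    return (n_essential - half) / half


def item_face_validity_index(ratings, acceptable=(3, 4)):
    """I-FVI: share of respondents rating an item 3 or 4 on a 4-point
    clarity/comprehension scale (assumed convention)."""
    return sum(r in acceptable for r in ratings) / len(ratings)


def retain_items(essential_counts, n_panel, cvr_critical):
    """Keep items whose CVR reaches the critical value for this panel size."""
    return [
        item
        for item, n_e in essential_counts.items()
        if content_validity_ratio(n_e, n_panel) >= cvr_critical
    ]


# Hypothetical panel of 10 experts: number of "essential" ratings per item.
essential = {"item_01": 10, "item_02": 7, "item_03": 9}
CVR_CRITICAL = 0.8  # hypothetical; look up the critical CVR for your panel size
print(retain_items(essential, n_panel=10, cvr_critical=CVR_CRITICAL))

# Hypothetical clarity ratings of one item by five interviewees.
print(item_face_validity_index([4, 3, 4, 2, 4]))
```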

4.1. Limitations

Our study had several limitations. First, the study was conducted in a single country using convenience sampling. For instance, the proportion of male participants was notably lower than in the general population. Consequently, the results may not be widely generalizable, and future research should include more representative and diverse samples to further validate the AIMHS. Nevertheless, the sample size met all required standards for the psychometric analyses, and our findings supported configural and metric measurement invariance across several demographic variables. Still, future studies should explore the psychometric properties of the AIMHS across various populations and cultural contexts. Second, we employed a cross-sectional design to evaluate the validity of the AIMHS. Since individuals’ attitudes may shift over time, longitudinal studies are needed to better understand attitudes toward the use of AI chatbots for mental health support. Third, although we conducted a comprehensive psychometric analysis of the AIMHS, future researchers may apply additional methods, such as assessing divergent, criterion, and known-groups validity, to further examine its validity and reliability. Fourth, as this was the initial validation of the AIMHS, we did not attempt to establish a cut-off score; future investigations could explore threshold values that differentiate between participant groups. Fifth, self-report instruments are inherently susceptible to social desirability bias. However, since our study was anonymous and participation was entirely voluntary, we believe that the likelihood of such bias influencing responses was minimal.
Sixth, we used three scales to examine the concurrent validity of the AIMHS: (1) the Artificial Intelligence Attitude Scale (AIAS-4), (2) the Attitudes Towards Artificial Intelligence Scale (ATAI), and (3) the Short Trust in Automation Scale (S-TIAS). The concurrent validity of the AIMHS can be further explored using other scales, such as the Attitudes Towards Artificial Intelligence Scale (ATTARI-12) [20], the General Attitudes Towards Artificial Intelligence Scale (GAAIS) [22], and the Artificial Intelligence Attitudes Inventory (AIAI) [25]. Finally, the AIMHS and the other scales used in our study are self-report instruments; thus, information bias due to participant subjectivity is possible.

5. Conclusions

Given the rapid integration of AI into mental health care, assessing attitudes toward AI-based chatbots is essential for understanding their acceptance and potential impact. User perceptions regarding trust, privacy, perceived usefulness, and emotional safety significantly influence adoption and sustained engagement. If individuals perceive chatbots as helpful, trustworthy, and easy to use, they are more likely to integrate them into their mental health routines.

However, there is currently no widely validated scale designed specifically to measure attitudes toward AI mental health chatbots. To address this gap, we developed and validated the Artificial Intelligence in Mental Health Scale. Measuring attitudes toward these technologies can inform the design of user-centered interventions, guide policy development, and ensure that digital mental health solutions are implemented in a manner that is both effective and ethically responsible. Even the most advanced chatbot will fail to deliver benefits if users are skeptical or resistant. Measuring attitudes helps identify barriers such as privacy concerns, a perceived lack of empathy, or fear of misdiagnosis. Understanding public attitudes informs regulatory frameworks, ethical guidelines, and implementation strategies for digital mental health interventions. Developers can use attitude data to improve user experience, cultural sensitivity, and trust-building features in AI systems.

After thoroughly evaluating its reliability and validity, we conclude that the AIMHS is a concise and accessible instrument with strong psychometric properties. The results indicate that the AIMHS is a two-factor, five-item scale that measures individuals’ attitudes toward AI-based mental health chatbots. Considering the study’s limitations, we suggest translating and validating the AIMHS in other languages and demographic groups to further examine its reliability and validity. Overall, the AIMHS shows potential as a valuable resource for assessing attitudes toward AI mental health chatbots and could assist policymakers, educators, healthcare providers, and other stakeholders in enhancing mental health services. For example, researchers and practitioners can use the AIMHS to evaluate mental health interventions by measuring attitudes before and after chatbot interaction. Furthermore, researchers in all disciplines can now use the AIMHS to explore associations between attitudes and variables such as usage intention, mental health outcomes, or demographics. For instance, the AIMHS may be used to predict intention to use AI chatbots for mental health support, examine factors influencing acceptance, and evaluate interventions aimed at improving acceptance.
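The pre/post use suggested above can be illustrated with a minimal sketch. It assumes that total AIMHS scores are collected from the same respondents before and after chatbot use. The data are hypothetical, and the paired t statistic and the standardized mean change d_z (mean change divided by the standard deviation of the changes) are common choices for such a comparison, not a procedure prescribed by the authors.

```python
import math
from statistics import mean, stdev


def paired_change(pre, post):
    """Return (mean change, paired t statistic with df = n - 1, d_z) for
    scores of the same respondents before (pre) and after (post)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need paired samples with n >= 2")
    diffs = [after - before for before, after in zip(pre, post)]
    m, sd = mean(diffs), stdev(diffs)
    if sd == 0:
        raise ValueError("all changes are identical; t and d_z are undefined")
    t = m / (sd / math.sqrt(len(diffs)))
    return m, t, m / sd


# Hypothetical total AIMHS scores of six respondents before and after use.
before = [14, 17, 12, 19, 15, 16]
after = [16, 18, 15, 19, 17, 18]
change, t_stat, d_z = paired_change(before, after)
print(f"mean change = {change:.2f}, t = {t_stat:.2f}, d_z = {d_z:.2f}")
```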

Assessing individuals’ acceptance of AI-based mental health chatbots is critical for understanding their potential integration into mental healthcare services. Acceptance influences both initial adoption and sustained engagement, which are essential for achieving therapeutic benefits. Measuring acceptance through validated scales enables researchers to identify key determinants such as perceived usefulness, ease of use, trust, and privacy concerns. These insights can inform the design of user-centered interventions, guide ethical implementation, and support policy development aimed at promoting equitable access to digital mental health solutions.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on Preprints.org: Table S1: The 14 items that were produced after the assessment of the content and face validity of the Artificial Intelligence in Mental Health Scale; Table S2: Inter-item correlations between the 14 items that were produced after the assessment of the content and face validity of the Artificial Intelligence in Mental Health Scale (n = 428); Table S3: Final version of the Artificial Intelligence in Mental Health Scale; Table S4: Corrected item-total correlations, and Cronbach’s alpha when a single item was deleted, for the five items of the final structure model of the Artificial Intelligence in Mental Health Scale; Table S5: Cohen’s kappa for the two-factor five-item model of the Artificial Intelligence in Mental Health Scale.

Author Contributions

Conceptualization, A.K. and P.G. (Petros Galanis); methodology, A.K., O.K., I.M. and P.G. (Petros Galanis); software, P.G. (Parisis Gallos), O.G., P.L. and P.G. (Petros Galanis); validation, O.G., P.L. and M.T.; formal analysis, O.K., P.G. (Parisis Gallos), O.G., P.L. and P.G. (Petros Galanis); investigation, O.G., P.L. and M.T.; resources, O.G., P.L. and M.T.; data curation, O.K., P.G. (Parisis Gallos), O.G., P.L. and P.G. (Petros Galanis); writing (original draft preparation), A.K., I.M., O.K., P.G. (Parisis Gallos), O.G., P.L., M.T. and P.G. (Petros Galanis); writing (review and editing), A.K., I.M., O.K., P.G. (Parisis Gallos), O.G., P.L., M.T. and P.G. (Petros Galanis); visualization, A.K. and P.G. (Petros Galanis); supervision, P.G. (Petros Galanis); project administration, A.K. and P.G. (Petros Galanis). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the Faculty of Nursing, National and Kapodistrian University of Athens (Protocol Approval #01, September 14, 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AAAW | Attitudes Towards Artificial Intelligence at Work |
| AI | Artificial Intelligence |
| AIAS | Artificial Intelligence Anxiety Scale |
| AIAS-4 | Artificial Intelligence Attitude Scale |
| AIMHS | Artificial Intelligence in Mental Health Scale |
| ASUAITIN | Attitude Scale Towards the Use of Artificial Intelligence Technologies in Nursing |
| ATAI | Attitudes Towards Artificial Intelligence Scale |
| ATTARI-12 | Attitudes Towards Artificial Intelligence Scale |
| CFA | Confirmatory Factor Analysis |
| CFI | Comparative Fit Index |
| CVR | Content Validity Ratio |
| EFA | Exploratory Factor Analysis |
| GAAIS | General Attitudes Towards Artificial Intelligence Scale |
| GFI | Goodness of Fit Index |
| KMO | Kaiser-Meyer-Olkin |
| NFI | Normed Fit Index |
| RMSEA | Root Mean Square Error of Approximation |
| SPSS | Statistical Package for the Social Sciences |
| S-TIAS | Short Trust in Automation Scale |
| TAI | Threats of Artificial Intelligence Scale |
| WHO | World Health Organization |

References

1. Sheikh, H.; Prins, C.; Schrijvers, E. Artificial Intelligence: Definition and Background. In Mission AI; Research for Policy; Springer International Publishing: Cham, 2023; ISBN 978-3-031-21447-9.

2. Kamel Rahimi, A.; Canfell, O.J.; Chan, W.; Sly, B.; Pole, J.D.; Sullivan, C.; Shrapnel, S. Machine Learning Models for Diabetes Management in Acute Care Using Electronic Medical Records: A Systematic Review. International Journal of Medical Informatics 2022, 162, 104758.

3. Martinez-Millana, A.; Saez-Saez, A.; Tornero-Costa, R.; Azzopardi-Muscat, N.; Traver, V.; Novillo-Ortiz, D. Artificial Intelligence and Its Impact on the Domains of Universal Health Coverage, Health Emergencies and Health Promotion: An Overview of Systematic Reviews. International Journal of Medical Informatics 2022, 166, 104855.

4. D’Alfonso, S. AI in Mental Health. Current Opinion in Psychology 2020, 36, 112–117.

5. Milne-Ives, M.; De Cock, C.; Lim, E.; Shehadeh, M.H.; De Pennington, N.; Mole, G.; Normando, E.; Meinert, E. The Effectiveness of Artificial Intelligence Conversational Agents in Health Care: Systematic Review. J Med Internet Res 2020, 22, e20346.

6. Boucher, E.M.; Harake, N.R.; Ward, H.E.; Stoeckl, S.E.; Vargas, J.; Minkel, J.; Parks, A.C.; Zilca, R. Artificially Intelligent Chatbots in Digital Mental Health Interventions: A Review. Expert Review of Medical Devices 2021, 18, 37–49.

7. Koulouri, T.; Macredie, R.D.; Olakitan, D. Chatbots to Support Young Adults’ Mental Health: An Exploratory Study of Acceptability. ACM Trans. Interact. Intell. Syst. 2022, 12, 1–39.

8. Lim, S.M.; Shiau, C.W.C.; Cheng, L.J.; Lau, Y. Chatbot-Delivered Psychotherapy for Adults With Depressive and Anxiety Symptoms: A Systematic Review and Meta-Regression. Behavior Therapy 2022, 53, 334–347.

9. Sachan, D. Self-Help Robots Drive Blues Away. The Lancet Psychiatry 2018, 5, 547.

10. Greer, S.; Ramo, D.; Chang, Y.-J.; Fu, M.; Moskowitz, J.; Haritatos, J. Use of the Chatbot “Vivibot” to Deliver Positive Psychology Skills and Promote Well-Being Among Young People After Cancer Treatment: Randomized Controlled Feasibility Trial. JMIR Mhealth Uhealth 2019, 7, e15018.

11. Drouin, M.; Sprecher, S.; Nicola, R.; Perkins, T. Is Chatting with a Sophisticated Chatbot as Good as Chatting Online or FTF with a Stranger? Computers in Human Behavior 2022, 128, 107100.

12. De Gennaro, M.; Krumhuber, E.G.; Lucas, G. Effectiveness of an Empathic Chatbot in Combating Adverse Effects of Social Exclusion on Mood. Front. Psychol. 2020, 10, 3061.

13. Denecke, K.; Vaaheesan, S.; Arulnathan, A. A Mental Health Chatbot for Regulating Emotions (SERMO) - Concept and Usability Test. IEEE Trans. Emerg. Topics Comput. 2021, 9, 1170–1182.

14. Inkster, B.; Sarda, S.; Subramanian, V. An Empathy-Driven, Conversational Artificial Intelligence Agent (Wysa) for Digital Mental Well-Being: Real-World Data Evaluation Mixed-Methods Study. JMIR Mhealth Uhealth 2018, 6, e12106.

15. Fitzpatrick, K.K.; Darcy, A.; Vierhile, M. Delivering Cognitive Behavior Therapy to Young Adults With Symptoms of Depression and Anxiety Using a Fully Automated Conversational Agent (Woebot): A Randomized Controlled Trial. JMIR Ment Health 2017, 4, e19.

16. Burns, J.M.; Davenport, T.A.; Durkin, L.A.; Luscombe, G.M.; Hickie, I.B. The Internet as a Setting for Mental Health Service Utilisation by Young People. Medical Journal of Australia 2010, 192.

17. Eagly, A.; Chaiken, S. The Psychology of Attitudes; Harcourt Brace Jovanovich College Publishers: Texas, 1993.

18. Ajzen, I. Nature and Operation of Attitudes. Annu. Rev. Psychol. 2001, 52, 27–58.

19. Dehbozorgi, R.; Zangeneh, S.; Khooshab, E.; Nia, D.H.; Hanif, H.R.; Samian, P.; Yousefi, M.; Hashemi, F.H.; Vakili, M.; Jamalimoghadam, N.; et al. The Application of Artificial Intelligence in the Field of Mental Health: A Systematic Review. BMC Psychiatry 2025, 25, 132.

20. Stein, J.-P.; Messingschlager, T.; Gnambs, T.; Hutmacher, F.; Appel, M. Attitudes towards AI: Measurement and Associations with Personality. Sci Rep 2024, 14, 2909.

21. Sindermann, C.; Sha, P.; Zhou, M.; Wernicke, J.; Schmitt, H.S.; Li, M.; Sariyska, R.; Stavrou, M.; Becker, B.; Montag, C. Assessing the Attitude Towards Artificial Intelligence: Introduction of a Short Measure in German, Chinese, and English Language. Künstl Intell 2021, 35, 109–118.

22. Schepman, A.; Rodway, P. The General Attitudes towards Artificial Intelligence Scale (GAAIS): Confirmatory Validation and Associations with Personality, Corporate Distrust, and General Trust. International Journal of Human–Computer Interaction 2023, 39, 2724–2741.

23. Stein, J.-P.; Liebold, B.; Ohler, P. Stay Back, Clever Thing! Linking Situational Control and Human Uniqueness Concerns to the Aversion against Autonomous Technology. Computers in Human Behavior 2019, 95, 73–82.

24. Grassini, S. Development and Validation of the AI Attitude Scale (AIAS-4): A Brief Measure of General Attitude toward Artificial Intelligence. Front. Psychol. 2023, 14, 1191628.

25. Krägeloh, C.U.; Melekhov, V.; Alyami, M.M.; Medvedev, O.N. Artificial Intelligence Attitudes Inventory (AIAI): Development and Validation Using Rasch Methodology. Curr Psychol 2025, 44, 12315–12327.

26. Park, J.; Woo, S.E.; Kim, J. Attitudes towards Artificial Intelligence at Work: Scale Development and Validation. J Occupat & Organ Psyc 2024, 97, 920–951.

27. Kieslich, K.; Lünich, M.; Marcinkowski, F. The Threats of Artificial Intelligence Scale (TAI): Development, Measurement and Test Over Three Application Domains. Int J of Soc Robotics 2021, 13, 1563–1577.

28. Wang, Y.-Y.; Wang, Y.-S. Development and Validation of an Artificial Intelligence Anxiety Scale: An Initial Application in Predicting Motivated Learning Behavior. Interactive Learning Environments 2022, 30, 619–634.

29. McGrath, M.J.; Lack, O.; Tisch, J.; Duenser, A. Measuring Trust in Artificial Intelligence: Validation of an Established Scale and Its Short Form. Front. Artif. Intell. 2025, 8, 1582880.

30. Yılmaz, D.; Uzelli, D.; Dikmen, Y. Psychometrics of the Attitude Scale towards the Use of Artificial Intelligence Technologies in Nursing. BMC Nurs 2025, 24, 151.

31. El-Hachem, S.S.; Lakkis, N.A.; Osman, M.H.; Issa, H.G.; Beshara, R.Y. University Students’ Intentions to Seek Psychological Counseling, Attitudes toward Seeking Psychological Help, and Stigma. Soc Psychiatry Psychiatr Epidemiol 2023, 58, 1661–1674.

32. Lannin, D.G.; Vogel, D.L.; Brenner, R.E.; Abraham, W.T.; Heath, P.J. Does Self-Stigma Reduce the Probability of Seeking Mental Health Information? Journal of Counseling Psychology 2016, 63, 351–358.

33. Vogel, D.L.; Wade, N.G.; Hackler, A.H. Perceived Public Stigma and the Willingness to Seek Counseling: The Mediating Roles of Self-Stigma and Attitudes toward Counseling. Journal of Counseling Psychology 2007, 54, 40–50.

34. Algumaei, A.; Yaacob, N.M.; Doheir, M.; Al-Andoli, M.N.; Algumaie, M. Symmetric Therapeutic Frameworks and Ethical Dimensions in AI-Based Mental Health Chatbots (2020–2025): A Systematic Review of Design Patterns, Cultural Balance, and Structural Symmetry. Symmetry 2025, 17, 1082.

35. Casu, M.; Triscari, S.; Battiato, S.; Guarnera, L.; Caponnetto, P. AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications. Applied Sciences 2024, 14, 5889.

36. Hornstein, S.; Zantvoort, K.; Lueken, U.; Funk, B.; Hilbert, K. Personalization Strategies in Digital Mental Health Interventions: A Systematic Review and Conceptual Framework for Depressive Symptoms. Front. Digit. Health 2023, 5, 1170002.

37. Chakraborty, C.; Pal, S.; Bhattacharya, M.; Dash, S.; Lee, S.-S. Overview of Chatbots with Special Emphasis on Artificial Intelligence-Enabled ChatGPT in Medical Science. Front. Artif. Intell. 2023, 6, 1237704.

38. Chin, H.; Song, H.; Baek, G.; Shin, M.; Jung, C.; Cha, M.; Choi, J.; Cha, C. The Potential of Chatbots for Emotional Support and Promoting Mental Well-Being in Different Cultures: Mixed Methods Study. J Med Internet Res 2023, 25, e51712.

39. Laymouna, M.; Ma, Y.; Lessard, D.; Schuster, T.; Engler, K.; Lebouché, B. Roles, Users, Benefits, and Limitations of Chatbots in Health Care: Rapid Review. J Med Internet Res 2024, 26, e56930.

40. Moilanen, J.; Van Berkel, N.; Visuri, A.; Gadiraju, U.; Van Der Maden, W.; Hosio, S. Supporting Mental Health Self-Care Discovery through a Chatbot. Front. Digit. Health 2023, 5, 1034724.

41. Ogilvie, L.; Prescott, J.; Carson, J. The Use of Chatbots as Supportive Agents for People Seeking Help with Substance Use Disorder: A Systematic Review. Eur Addict Res 2022, 28, 405–418.

42. DeVellis, R.F.; Thorpe, C.T. Scale Development: Theory and Applications, 5th ed.; SAGE Publications: Thousand Oaks, California, 2022; ISBN 978-1-5443-7934-0.

43. Johnson, R.L.; Morgan, G.B. Survey Scales: A Guide to Development, Analysis, and Reporting; The Guilford Press: New York, 2016; ISBN 978-1-4625-2696-3.

44. Saris, W.E. Design, Evaluation, and Analysis of Questionnaires for Survey Research, 2nd ed.; Wiley: Hoboken, New Jersey, 2014; ISBN 978-1-118-63461-5.

45. Gnambs, T.; Stein, J.-P.; Appel, M.; Griese, F.; Zinn, S. An Economical Measure of Attitudes towards Artificial Intelligence in Work, Healthcare, and Education (ATTARI-WHE). Computers in Human Behavior: Artificial Humans 2025, 3, 100106.

46. Klarin, J.; Hoff, E.; Larsson, A.; Daukantaitė, D. Adolescents’ Use and Perceived Usefulness of Generative AI for Schoolwork: Exploring Their Relationships with Executive Functioning and Academic Achievement. Front. Artif. Intell. 2024, 7, 1415782.

47. Beck, A.; Ward, C.; Mendelson, M.; Mock, J.; Erbaugh, J. An Inventory for Measuring Depression. Arch Gen Psychiatry 1961, 4, 561.

48. Beck, A.T.; Epstein, N.; Brown, G.; Steer, R.A. An Inventory for Measuring Clinical Anxiety: Psychometric Properties. Journal of Consulting and Clinical Psychology 1988, 56, 893–897.

49. Hamilton, M. The Assessment of Anxiety States by Rating. British Journal of Medical Psychology 1959, 32, 50–55.

50. Kroenke, K.; Spitzer, R.L.; Williams, J.B.W.; Löwe, B. An Ultra-Brief Screening Scale for Anxiety and Depression: The PHQ-4. Psychosomatics 2009, 50, 613–621.

51. Kroenke, K.; Spitzer, R.L.; Williams, J.B. The PHQ-9: Validity of a Brief Depression Severity Measure. J Gen Intern Med 2001, 16, 606–613.

52. Mughal, A.Y.; Devadas, J.; Ardman, E.; Levis, B.; Go, V.F.; Gaynes, B.N. A Systematic Review of Validated Screening Tools for Anxiety Disorders and PTSD in Low to Middle Income Countries. BMC Psychiatry 2020, 20, 338.

53. Spitzer, R.L.; Kroenke, K.; Williams, J.B.W.; Löwe, B. A Brief Measure for Assessing Generalized Anxiety Disorder: The GAD-7. Arch Intern Med 2006, 166, 1092–1097.

54. Zigmond, A.S.; Snaith, R.P. The Hospital Anxiety and Depression Scale. Acta Psychiatr Scand 1983, 67, 361–370.

55. Ayre, C.; Scally, A.J. Critical Values for Lawshe’s Content Validity Ratio: Revisiting the Original Methods of Calculation. Measurement and Evaluation in Counseling and Development 2014, 47, 79–86.

56. Meadows, K. Cognitive Interviewing Methodologies. Clin Nurs Res 2021, 30, 375–379.

57. Yusoff, M.S.B. ABC of Response Process Validation and Face Validity Index Calculation. EIMJ 2019, 11, 55–61.

58. Costello, A.B.; Osborne, J. Best Practices in Exploratory Factor Analysis: Four Recommendations for Getting the Most from Your Analysis. Pract Assess Res Eval 2005, 10, 1–9.

59. DeVon, H.A.; Block, M.E.; Moyle-Wright, P.; Ernst, D.M.; Hayden, S.J.; Lazzara, D.J.; Savoy, S.M.; Kostas-Polston, E. A Psychometric Toolbox for Testing Validity and Reliability. J of Nursing Scholarship 2007, 39, 155–164.

60. De Vaus, D. Surveys in Social Research, 5th ed.; Routledge: London, 2004.

61. Yusoff, M.S.B.; Arifin, W.N.; Hadie, S.N.H. ABC of Questionnaire Development and Validation for Survey Research. EIMJ 2021, 13, 97–108.

62. Hair, J.; Black, W.; Babin, B.; Anderson, R. Multivariate Data Analysis, 7th ed.; Prentice Hall: New Jersey, 2017.

63. De Winter, J.C.F.; Dodou, D.; Wieringa, P.A. Exploratory Factor Analysis With Small Sample Sizes. Multivariate Behavioral Research 2009, 44, 147–181.

64. Kaiser, H.F. An Index of Factorial Simplicity. Psychometrika 1974, 39, 31–36.

65. Bland, J.M.; Altman, D.G. Statistics Notes: Cronbach’s Alpha. BMJ 1997, 314, 572.

66. Baumgartner, H.; Homburg, C. Applications of Structural Equation Modeling in Marketing and Consumer Research: A Review. International Journal of Research in Marketing 1996, 13, 139–161.

67. Hu, L.; Bentler, P.M. Fit Indices in Covariance Structure Modeling: Sensitivity to Underparameterized Model Misspecification. Psychological Methods 1998, 3, 424–453.

68. Putnick, D.L.; Bornstein, M.H. Measurement Invariance Conventions and Reporting: The State of the Art and Future Directions for Psychological Research. Developmental Review 2016, 41, 71–90.

69. Katsiroumpa, A.; Moisoglou, I.; Gallos, P.; Galani, O.; Tsiachri, M.; Lialiou, P.; Konstantakopoulou, O.; Lamprakopoulou, K.; Galanis, P. Artificial Intelligence Attitude Scale: Translation and Validation in Greek. Arch Hell Med 2025, in press.

70. Konstantakopoulou, O.; Katsiroumpa, A.; Moisoglou, I.; Gallos, P.; Galani, O.; Tsiachri, M.; Lialiou, P.; Lamprakopoulou, K.; Galanis, P. Attitudes Towards Artificial Intelligence Scale: Translation and Validation in Greek. Arch Hell Med 2025, in press.

71. Katsiroumpa, A.; Moisoglou, I.; Gallos, P.; Galani, O.; Tsiachri, M.; Lialiou, P.; Konstantakopoulou, O.; Lamprakopoulou, K.; Galanis, P. Short Trust in Automation Scale: Translation and Validation in Greek. Arch Hell Med 2025, in press.

72. World Medical Association. World Medical Association Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects. JAMA 2013, 310, 2191.

73. World Health Organization. Mental Health: Strengthening Our Response; 2024.

74. World Health Organization. Depression and Other Common Mental Disorders: Global Health Estimates; 2024.

75. Grand View Research. AI in Mental Health Market Size, Share & Trends Analysis Report; 2025.

76. Data Bridge Market Research. Global Mental Health Chatbot Services Market Size, Share, and Trends Analysis Report – Industry Overview and Forecast to 2032; 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).