Submitted: 11 November 2025

Posted: 12 November 2025

Abstract

Keywords:

1. Introduction

2. Materials and Methods

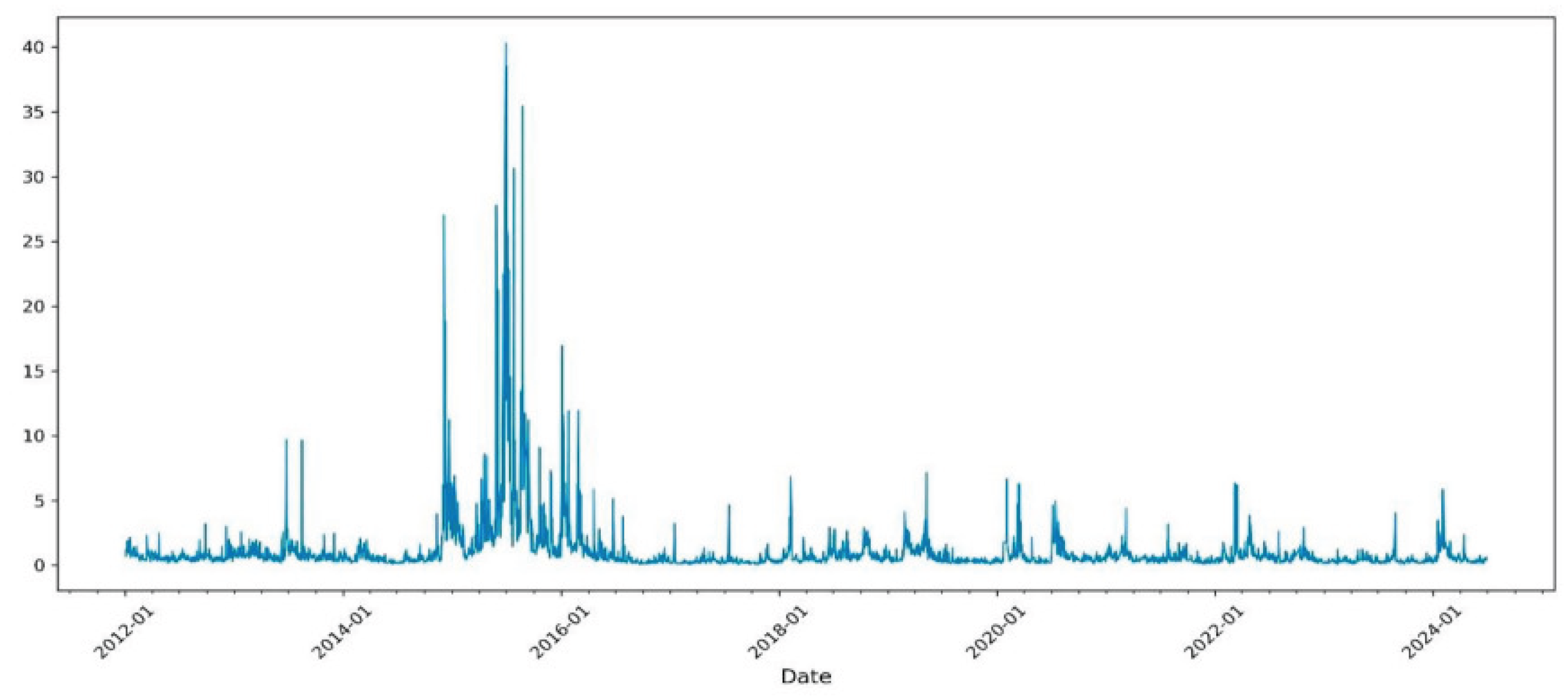

2.1. Data Sources and Sample Description

2.2. Experimental Design and Control Setup

2.3. Measurement Methods and Quality Control

2.4. Data Processing and Model Equations

2.5. Validation and Robustness Analysis

3. Results and Discussion

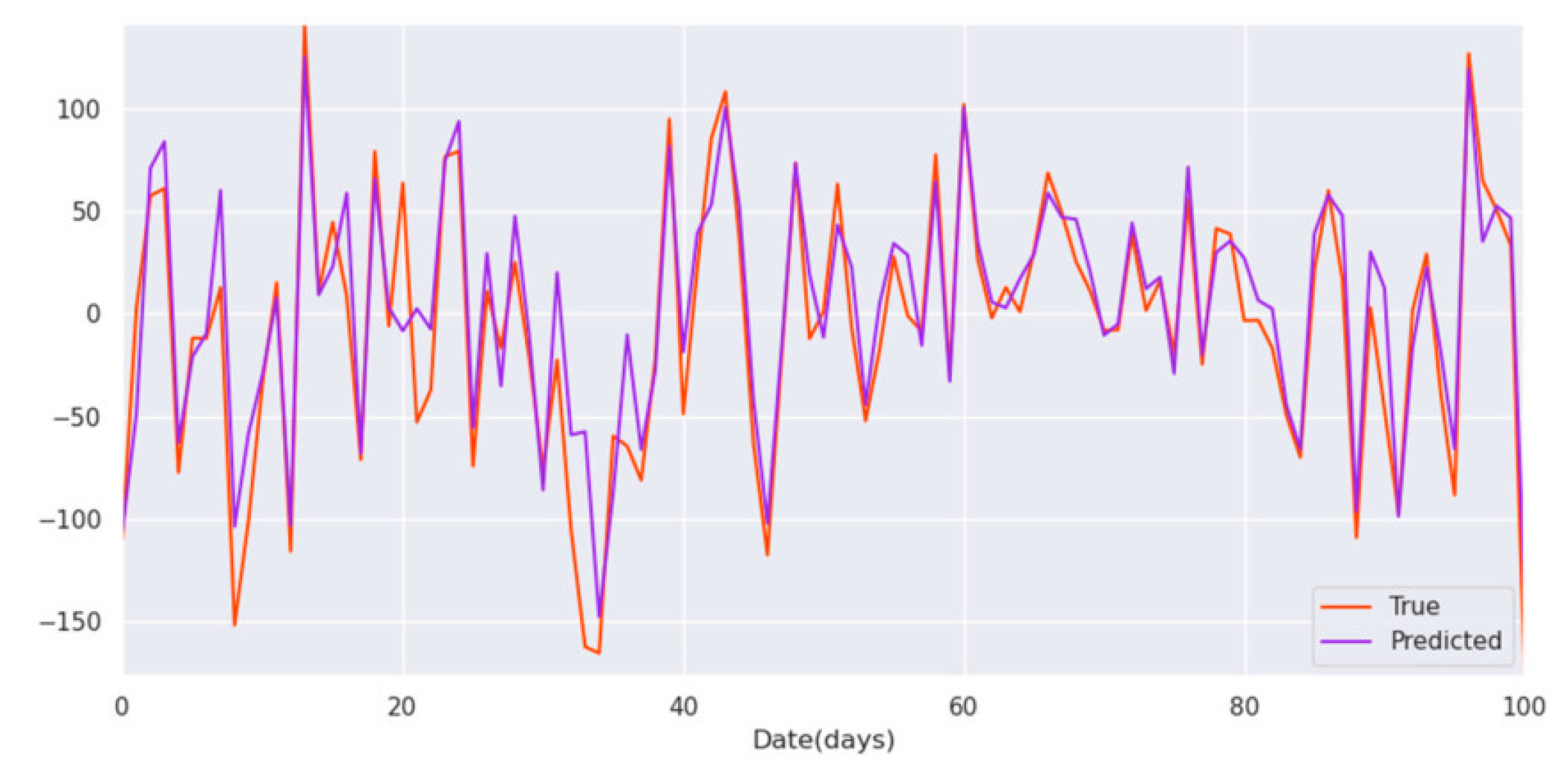

3.1. Overall Forecasting Performance

3.2. Contribution of the Multi-Scale Attention

3.3. Robustness in High-Volatility Phases

3.4. Comparison with Transformer-Based and Hybrid Models

4. Conclusion

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).