1. Introduction

Underwater environment perception, particularly optical visual perception, is crucial for the autonomous navigation and operation of underwater vehicles[1]. The timeliness and accuracy of semantic segmentation, a key technology in underwater perception, directly impact the overall performance of these systems. Therefore, efficient and precise semantic segmentation algorithms are essential for the practical application of intelligent sensing systems in underwater vehicles[2].

Deep neural networks, especially convolutional neural networks (CNNs), have recently shown great potential in the semantic segmentation of underwater images due to their excellent feature extraction and numerical regression capabilities[3,4]. However, most deep learning-based methods for underwater image segmentation require substantial amounts of labeled training data with precise pixel-wise labels for each image. The complex and unclear nature of underwater environments makes manual annotation labor-intensive and time-consuming, complicating the acquisition of large labeled datasets. To address this issue, semi-supervised semantic segmentation methods[5,6,7,8] have been proposed to reduce dependence on high-quality data by using self-/pseudo-supervision to perform segmentation without extensive manual labeling. Nonetheless, these methods often suffer from errors in pseudo labels. Previous self-training methods[9,10] typically select pseudo labels based on confidence, derived from a model trained on a labeled subset. However, confidence-based methods have limitations: 1) to maintain a low error rate in pseudo labels, many low-confidence pseudo labels that are actually correct are discarded; 2) errors in pseudo-supervision from the model itself (i.e., self-error) can significantly hinder semi-supervised learning performance.

To overcome the above challenges, we propose a novel semi-supervised method for semantic segmentation of underwater images, named Dynamic Mutual Adversarial Segmentation (DMAS). Our method employs a dual-model configuration in which each model's segmentations serve as checks on the other, enabling the identification and correction of mislabeling. The key features of the DMAS methodology are as follows:

The DMAS framework is based on two essential sub-processes. The first stage involves adversarial pre-training of two segmentation networks and their respective discriminators to develop two preliminary pseudo-label annotation models. The second stage is dynamic mutual learning, which measures the discrepancies between the segmentation models through confidence maps to mitigate the effects of potential pseudo-label errors, thereby enhancing the accuracy of the training process.

The adversarial training method is mainly used for training a segmentation model and a fully convolutional discriminator with labeled data to generate pseudo-labels. This allows the discriminator to differentiate between real label maps and their predicted counterparts by generating a confidence map. Also, it enables a quantitative assessment of segmentation accuracy in specific regions of the pseudo-labels.

Dynamic mutual learning guides different models based on their varying prior knowledge. It leverages the divergence between these models to detect inaccuracies in pseudo-label generation. By employing a dynamically reweighted loss function, it reflects the discrepancies between two models trained with each other’s pseudo-labels, thereby assigning lower weights to pixels with a higher likelihood of error.

We validate the effectiveness of our proposed method on two underwater datasets, the DUT dataset and the SUIM dataset, demonstrating that the proposed semi-supervised learning algorithm can raise the performance of models trained with limited and noisy annotations to a level comparable to that of fully supervised models trained with large amounts of labeled data.

3. Methodology

3.1. Overview

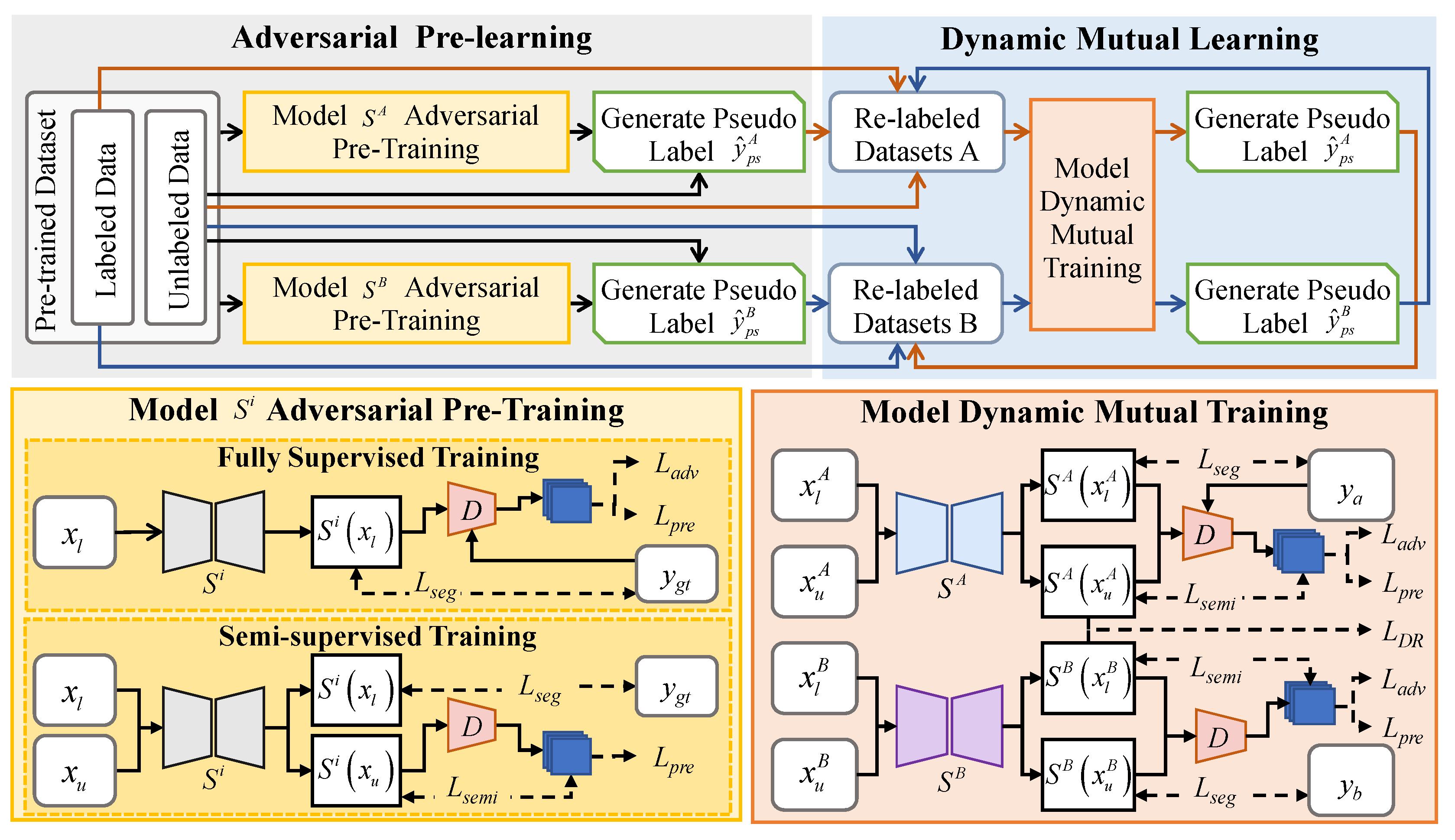

Figure 1 illustrates our DMAS method, which comprises a two-stage training process: an adversarial pre-training stage and a dynamic mutual learning stage.

The adversarial pre-training stage uses a pre-training dataset divided into limited labeled data and a larger set of unlabeled data to develop two pre-trained segmentation networks and a discriminator capable of providing a quality evaluation, and to generate initial pseudo labels. This stage includes two steps: fully supervised training and semi-supervised training. Initially, the segmentation model and the discriminator are trained in a fully supervised manner using the labeled images and their corresponding labels, within a generative adversarial training framework where the segmentation model acts as the generator. Then, using the unlabeled images, the probability maps from the trained discriminator serve as a supervision signal for semi-supervised training of the segmentation model. High-probability outputs are selected as initial pseudo labels. We differentiate the two segmentation networks by integrating prior knowledge from the ImageNet dataset into one of them.

In the dynamic mutual learning stage, the pre-trained segmentation networks provide mutual supervision through dynamic self-training on re-labeled datasets. These datasets comprise the initial labeled data, reliable pseudo-labeled data, and the remaining unlabeled data. Training uses the labeled and unlabeled images, with the labels and the discriminator's probability map as supervision signals for the segmentation network being trained. Pseudo labels are then generated to update the pseudo-labeled data and the unlabeled data of the other re-labeled dataset. A dynamic re-weighting loss is introduced, utilizing the discrepancy between the predicted confidence map and the discriminator-generated probability map to learn from the unlabeled data.

The purpose of this stage is to iteratively enhance the segmentation network models. The details of each component of our DMAS method are described next.

3.2. Adversarial Pre-training

The adversarial pre-training stage employs a Generative Adversarial Network (GAN) structure, consisting of the segmentation network and the discriminator, to enhance the quality of generated pseudo-labels. Subsequent pseudo-labels are obtained by calculating probabilities through the discriminator on the predictions generated by the segmentation network and selecting the top 10% of images. In this stage, both segmentation networks and the discriminator follow the same training process, which is divided into two steps: fully-supervised training and semi-supervised training, as detailed in Algorithm 1.
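The top-10% selection mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `mean_map_score` and `select_top_fraction` are hypothetical, and reducing each discriminator probability map to a single score by averaging is an assumption, since the text does not state how a per-image score is obtained.

```python
def mean_map_score(prob_map):
    """Average a 2-D probability map (list of rows) into one scalar.

    Assumption: an image is scored by the mean of its discriminator map.
    """
    values = [p for row in prob_map for p in row]
    return sum(values) / len(values)


def select_top_fraction(scores, fraction=0.10):
    """Return indices of the highest-scoring images, best first."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    if not scores:
        return []
    # Floor with a tiny epsilon so that e.g. 30 * 0.1 yields 3, not 2.
    k = max(1, int(len(scores) * fraction + 1e-9))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Usage: 20 unlabeled images, keep the 2 with the highest scores.
scores = [i / 20 for i in range(20)]
initial_pseudo_labeled = select_top_fraction(scores, fraction=0.10)
```

The selected indices would identify the predictions kept as initial pseudo labels; the remaining images stay in the unlabeled pool.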

Algorithm 1 Model adversarial training.

Input: Labeled data; Unlabeled data

Output: Trained segmentation network; Trained discriminator

for number of fully supervised training iterations do

  for each batch of labeled data do

    • Train the segmentation network

    • Train the discriminator

  end for

end for

for number of semi-supervised training iterations do

  for each batch of unlabeled data do

    • Train the segmentation network

  end for

end for
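The control flow of Algorithm 1 can be sketched as a framework-agnostic skeleton. The function `adversarial_pretrain` and the three callbacks `seg_step`, `disc_step`, and `semi_step` are hypothetical names introduced here; the actual parameter updates (losses, optimizers) are left to the caller, and iterating over batches is an assumption about how the inner loops are organized.

```python
def adversarial_pretrain(labeled_batches, unlabeled_batches,
                         sup_iters, semi_iters,
                         seg_step, disc_step, semi_step):
    """Run the two phases of Algorithm 1 with caller-supplied update steps."""
    # Phase 1: fully supervised, alternating updates on labeled data.
    for _ in range(sup_iters):
        for batch in labeled_batches:
            seg_step(batch)   # update segmentation network, discriminator fixed
            disc_step(batch)  # update discriminator, segmentation network fixed
    # Phase 2: semi-supervised, only the segmentation network is updated.
    for _ in range(semi_iters):
        for batch in unlabeled_batches:
            semi_step(batch)  # discriminator parameters stay fixed


# Usage: record the order of updates instead of training real networks.
calls = []
adversarial_pretrain(
    labeled_batches=["l1", "l2"], unlabeled_batches=["u1"],
    sup_iters=1, semi_iters=2,
    seg_step=lambda b: calls.append(("seg", b)),
    disc_step=lambda b: calls.append(("disc", b)),
    semi_step=lambda b: calls.append(("semi", b)),
)
```

Keeping the update steps as callbacks makes the alternation explicit: within each labeled batch the segmentation network is updated first and the discriminator second, and the discriminator is never touched in the second phase.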

3.2.1. Fully Supervised Training

In the fully-supervised training step, the objective is to train the initial segmentation networks and the evaluation-capable discriminator using only labeled data. The labeled data includes images and their corresponding labels. We use the segmentation network to predict segmentation results from the images; these results contain confidence information for each class. The discriminator evaluates the predicted results and produces the corresponding probability maps. To maintain the independence of the segmentation network and the discriminator, an alternating training strategy is employed: in each iteration, the segmentation network is trained while keeping the discriminator parameters fixed, and then the discriminator is trained while keeping the segmentation network parameters fixed. We use a multi-class segmentation loss function and a prediction loss function to optimize the segmentation network to deceive the discriminator; the adversarial loss is used to optimize the discriminator to detect subtle differences between predicted segmentation maps and true labels. After fully-supervised training, the segmentation network attains initial segmentation capability, and the discriminator acquires the ability to evaluate the reliability of predicted segmentation maps.

3.2.2. Semi-Supervised Training

In the semi-supervised training step, the model's performance is further enhanced using unlabeled data. The discriminator, which is proficient at distinguishing predictions from the segmentation network, generates probability maps that can act as a supervisory signal to identify areas consistent with the true label distribution. A threshold is applied to convert this map into a binary format, highlighting regions of high confidence. These regions, now serving as pseudo-labels, facilitate the self-training of the model, with the most effective iteration being determined through comparative evaluation against previous iterations. The semi-supervised training step minimizes the semi-supervised loss while keeping the discriminator's parameters fixed. This loss is a weighted combination of three terms: the multi-class segmentation loss and the prediction loss, which are the same as in supervised training, and the semi-supervised multi-class cross-entropy loss. Two hyperparameters balance the weights of the terms. The semi-supervised cross-entropy loss is defined using an indicator function for high-probability pixel classification and a threshold that controls the sensitivity of the semi-supervised process. By binarizing the discriminator's probability map with this threshold, we can balance the credibility of the generated pseudo-labels against the amount of data: a larger threshold increases the credibility of the pseudo-labels, but the number of usable pseudo-labels decreases accordingly. To enhance the utilization of unlabeled data, the threshold is chosen with this trade-off in mind. Since the pseudo-label employs one-hot encoding, its entry for a pixel is 1 for the class with the highest predicted probability and 0 otherwise.
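The thresholded cross-entropy described above can be illustrated with a small sketch. It assumes the common form in which a pixel contributes to the loss only if the discriminator's confidence exceeds the threshold, and in which the one-hot pseudo-label is the arg-max class of the segmentation output. The function name `semi_supervised_ce` and the threshold values used below are illustrative, not values from the paper.

```python
import math


def semi_supervised_ce(seg_probs, disc_conf, threshold):
    """Masked cross-entropy over pixels the discriminator trusts.

    seg_probs: per-pixel class probabilities (list of pixels x classes).
    disc_conf: per-pixel discriminator confidence in [0, 1].
    threshold: only pixels with confidence above it contribute.
    Returns (summed loss, number of selected pixels).
    """
    loss, selected = 0.0, 0
    for probs, conf in zip(seg_probs, disc_conf):
        if conf > threshold:  # indicator function on the probability map
            # One-hot pseudo-label: 1 for the arg-max class, 0 elsewhere.
            c_star = max(range(len(probs)), key=probs.__getitem__)
            loss -= math.log(probs[c_star])
            selected += 1
    return loss, selected


# Usage: three pixels, two classes.
seg_probs = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]]
disc_conf = [0.7, 0.1, 0.5]
loose = semi_supervised_ce(seg_probs, disc_conf, threshold=0.2)
strict = semi_supervised_ce(seg_probs, disc_conf, threshold=0.6)
```

With the lower threshold two pixels are used, with the higher one only a single pixel survives, which is the credibility-versus-quantity trade-off stated in the text.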

3.3. Dynamic Mutual Learning

As depicted in Figure 1, during the Dynamic Mutual Learning (DML) stage, the segmentation network parameters are progressively refined through iterative updates of the dynamic mutual learning algorithm applied to the re-labeled datasets A and B. Each re-labeled dataset consists of the initial labeled data, re-labeled data, data iteratively updated from the other model, and the corresponding reduced unlabeled data. By analyzing the unlabeled images, the labeled images, and the corresponding labels, the models iteratively update, leveraging their differences to detect errors in automatically generated pseudo labels. Additionally, a dynamic reweighting loss is introduced to account for the discrepancies between the two models. Each model is trained using pseudo labels generated by the other model; thus, greater pixel-level discrepancies suggest higher error likelihoods and require reduced weighting in the loss function. This minimizes the impact of pixels or regions with significant differences on the training process. The following subsections detail the dynamic mutual iterative framework and the dynamic reweighting loss.

3.3.1. Dynamic Mutual Iterative Framework

The dynamic mutual iteration framework employs an iterative learning model that progresses from simple to complex tasks. It involves repeatedly executing a "self-training" algorithm over multiple cycles, each containing numerous low-confidence pseudo labels. Effectively utilizing these pseudo labels is crucial for enhancing the use of unlabeled datasets. In this framework, training with deterministic labeled data and highly reliable pseudo labels follows the previous semi-supervised training, utilizing an adversarial generation method. The primary distinction lies in how pseudo labels with lower relative reliability are used. Within a specific loop, we hold one segmentation model fixed (say, Model A) and use it to generate pseudo labels for the unlabeled images. These pseudo labels are then passed through a discriminator to obtain a probability map. By leveraging these probability maps, we can compare pseudo labels across different iterations and refine them for training the other segmentation model (Model B) by calculating the dynamic reweighting loss. After appropriate weighting, this loss is combined with the semi-supervised training loss to derive the final loss L, which is used to train Model B, as shown in Equation 3.

Similarly, Model B can be employed to train Model A.

3.3.2. Dynamic Reweighting Loss

We introduce the dynamic reweighting loss, which uses inter-model disagreement as the reweighting weight. Assume that Model A is used to train Model B, and consider an unlabeled image from the unlabeled data. Model A provides the pseudo label of this image together with the corresponding probability map. Similarly, Model B provides its prediction during training together with its own probability map. In the loop iteration that trains Model B, the per-class predicted probabilities (for each class c) are used to define the dynamic reweighting loss weight, and this weight in turn defines the dynamic reweighting loss on unlabeled samples.

In the loop iteration that trains Model A, the loss is calculated using the same method, except that the roles of the two segmentation models are swapped.
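To make the idea of disagreement-based reweighting concrete, the sketch below uses one simple weighting rule: the weight of a pixel shrinks as the probability-map values of the two models diverge. This rule, the exponent `gamma`, and the function names `dynamic_weight` and `reweighted_loss` are assumptions chosen for illustration; they are not the paper's exact definition, which is not reproduced here.

```python
import math


def dynamic_weight(conf_a, conf_b, gamma=1.0):
    """Weight in [0, 1]; larger disagreement gives a smaller weight."""
    return (1.0 - abs(conf_a - conf_b)) ** gamma


def reweighted_loss(probs_b, pseudo_labels_a, conf_a, conf_b, gamma=1.0):
    """Mean weighted cross-entropy of Model B against Model A's pseudo labels.

    probs_b: Model B's per-pixel class probabilities (pixels x classes).
    pseudo_labels_a: class index per pixel, produced by Model A.
    conf_a, conf_b: per-pixel probability-map values for the two models.
    """
    total = 0.0
    for probs, label, ca, cb in zip(probs_b, pseudo_labels_a, conf_a, conf_b):
        w = dynamic_weight(ca, cb, gamma)
        p = max(probs[label], 1e-12)  # guard against log(0)
        total -= w * math.log(p)
    return total / len(probs_b)


# Usage: the second pixel is down-weighted because the two models disagree.
loss = reweighted_loss(
    probs_b=[[0.8, 0.2], [0.3, 0.7]],
    pseudo_labels_a=[0, 0],
    conf_a=[0.9, 0.9],
    conf_b=[0.9, 0.3],
)
```

Swapping the roles of the two models (Model B labels, Model A learns) reuses the same functions with the arguments exchanged.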

4. Experiments

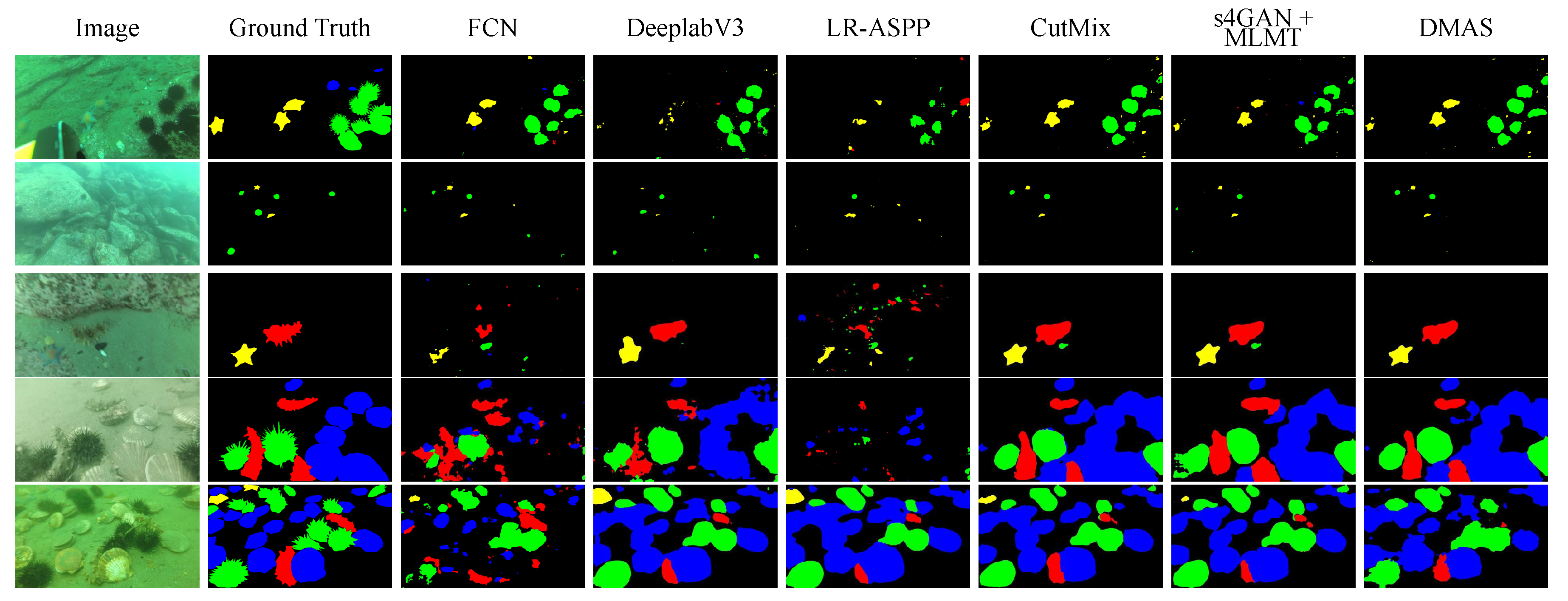

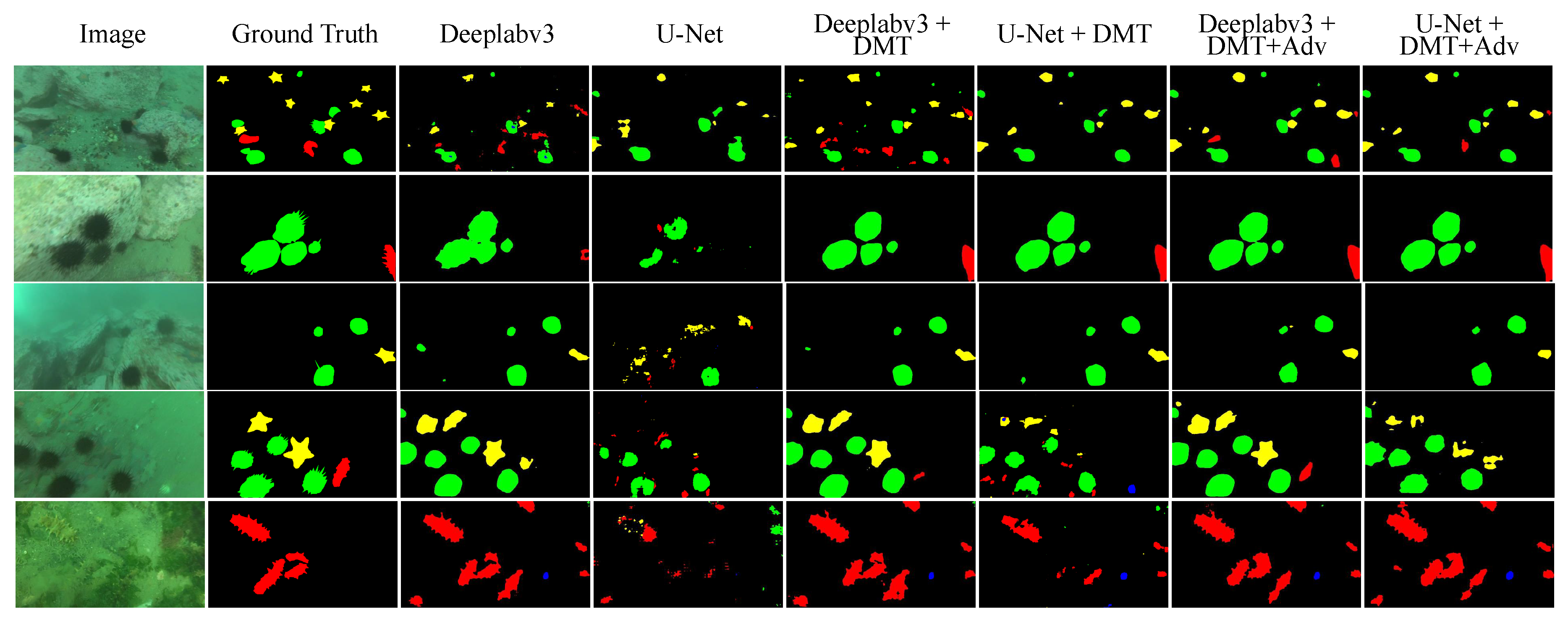

Figure 2. Visualization of comparative experimental results under 0.125 labeled ratio on UO datasets. The DMAS method demonstrates a significant advantage over the currently prevalent full-supervision semantic segmentation algorithms.


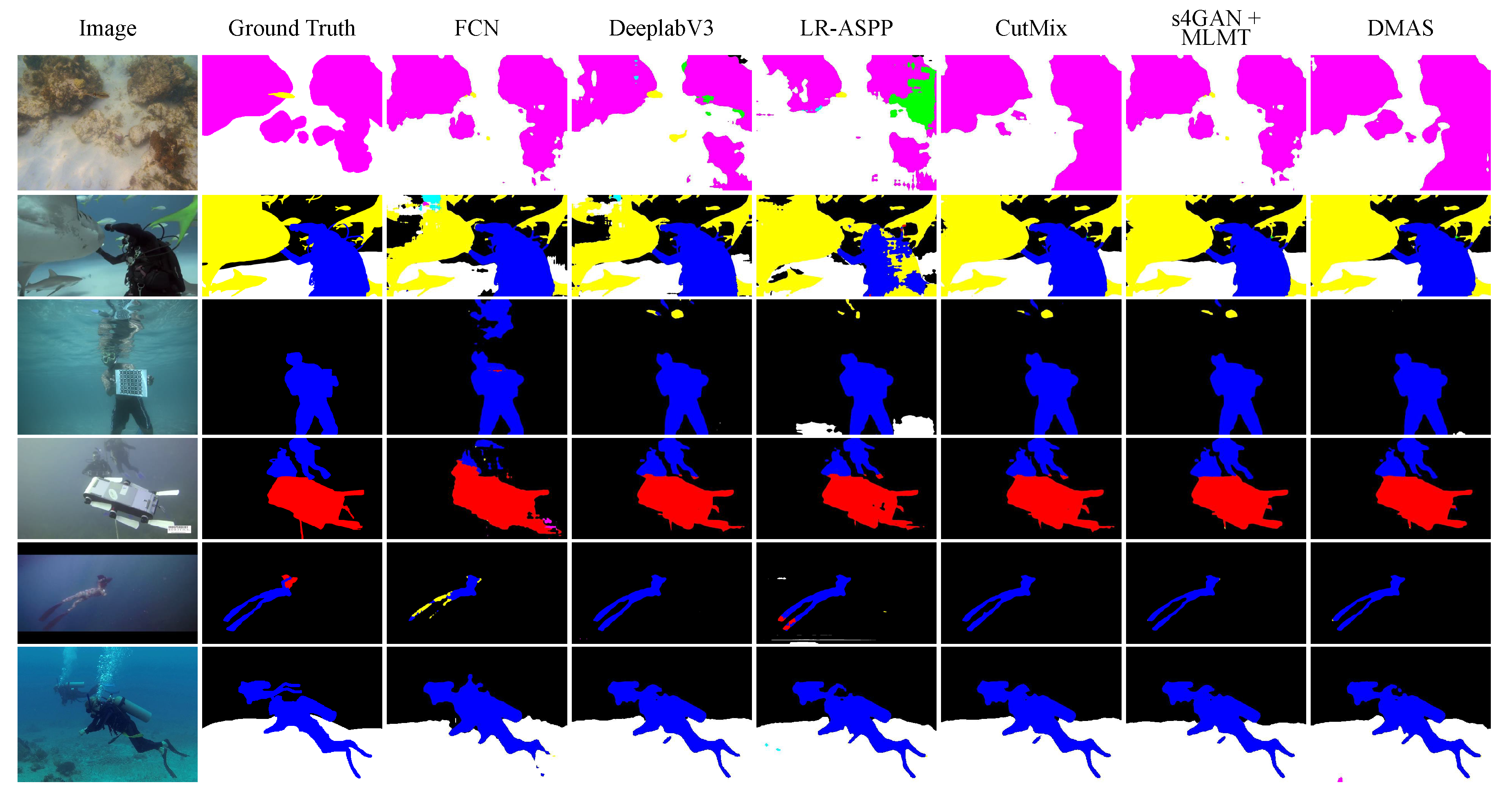

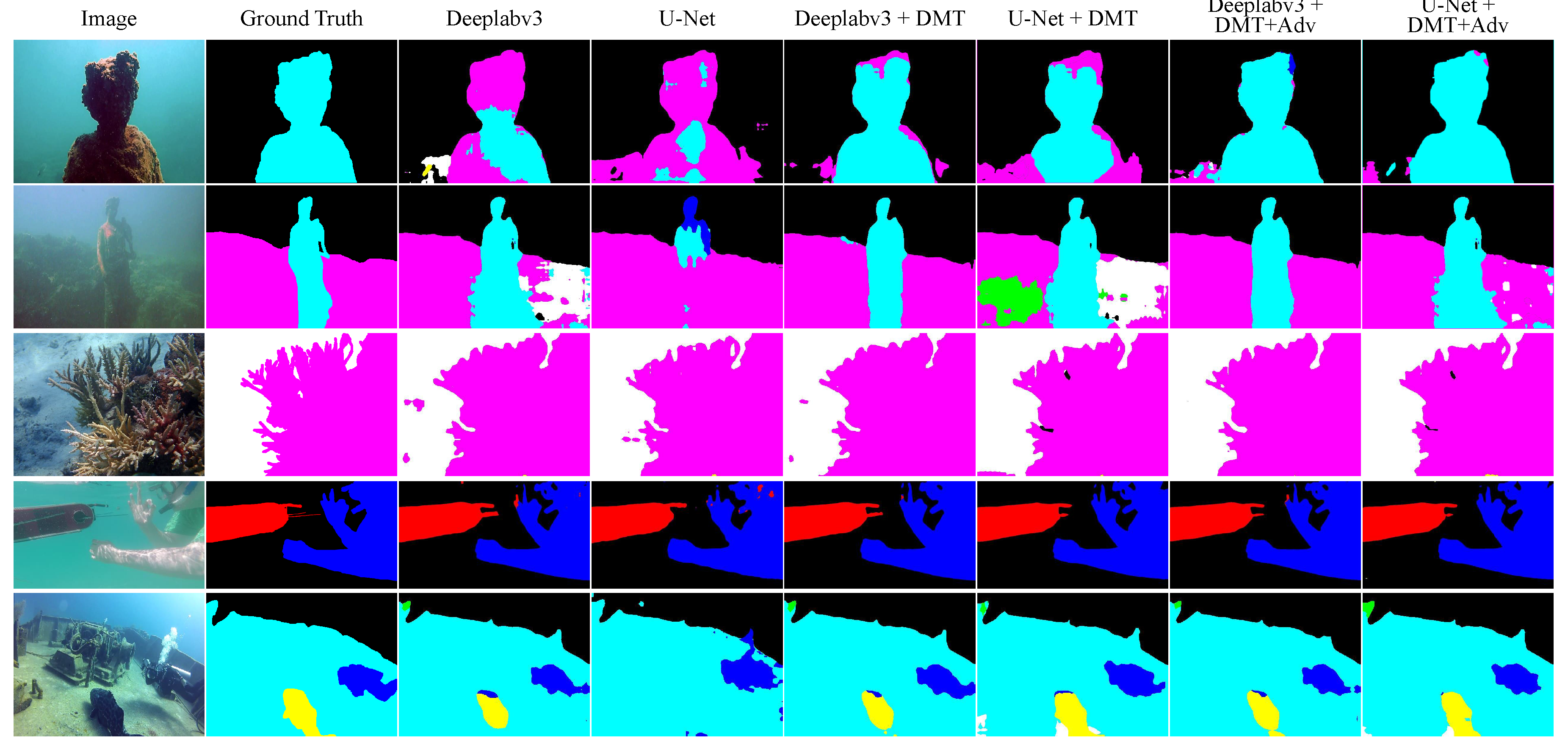

Figure 3. Visualization of comparative experimental results under 0.125 labeled ratio on SUIM datasets. DMAS excels in semi-supervised segmentation, outperforming full-supervision methods, despite minor edge delineation gaps.


4.1. Dataset

To evaluate the effectiveness of the proposed Dynamic Mutual Adversarial Segmentation (DMAS), we conducted experiments on two publicly available underwater datasets: DUT and SUIM. The DUT dataset includes 6617 images, of which 1487 have semantic segmentation and instance segmentation annotations and the remaining 5130 have object detection box annotations, categorized into four classes: starfish, holothurian, scallops, and echinus. The SUIM dataset includes 1525 images for training and validation, along with 110 test images, each with pixel-level annotations. It encompasses eight categories: fish (FS), reefs (RF), aquatic plants (PL), wrecks/ruins (WR), human divers (DR), underwater robots (RB), seafloor (SF), and the waterbody background. Both datasets are divided into training and testing sets in a 7:3 ratio.

In the training set, we partitioned the labeled and unlabeled data using varying labeled ratios (0.5, 0.25, 0.125, and 1). Specifically, after each random shuffle of the entire training set, the first 0.5, 0.25, or 0.125 fraction was used as labeled data and the remainder as unlabeled data. A labeled ratio of 1 denotes fully supervised learning, in which the entire training set is labeled.
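The partitioning described above can be sketched as follows. The function name `split_dataset`, the fixed seed, and the choice to draw the 7:3 split from the same shuffle are assumptions made for illustration; the text only specifies the ratios and that the first fraction of the shuffled training set is labeled.

```python
import random


def split_dataset(items, train_frac=0.7, labeled_ratio=0.125, seed=0):
    """Return (labeled, unlabeled, test) lists for one random shuffle."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    # The small epsilon protects against floating-point products such as
    # 0.7 * n landing just below an integer.
    n_train = int(len(shuffled) * train_frac + 1e-9)
    train, test = shuffled[:n_train], shuffled[n_train:]
    n_labeled = int(len(train) * labeled_ratio + 1e-9)
    return train[:n_labeled], train[n_labeled:], test


# Usage: 1000 image ids, 7:3 train/test split, labeled ratio 0.125.
labeled, unlabeled, test = split_dataset(range(1000), labeled_ratio=0.125)
```

For 1000 images this gives 700 training images, of which 87 are labeled and 613 unlabeled, and 300 test images. Setting `labeled_ratio=1` leaves the unlabeled list empty, which corresponds to the fully supervised setting.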

4.2. Implementation Details

The proposed method was implemented using PyTorch. The evaluation was conducted on a server equipped with an Intel Xeon(R) Silver 4214R CPU and an NVIDIA RTX A6000 48GB GPU. We utilized DeepLabv3 with ResNet-101[32] as the backbone. The entire model was trained for 40,000 iterations, saving the model every 200 iterations during the dynamic mutual learning. The optimal model was selected based on predictions on the test set.

For pre-training, the initial learning rate was set to 0.001, with weight decay applied. The discriminator was trained using the Adam optimizer. For hyperparameters in the SUISS method, we set them to 0.01 and 0.001 when using labeled and unlabeled data, respectively. Random cropping, random mirroring, and other data augmentation techniques were applied to mitigate overfitting and enhance model performance. To ensure that Model A and Model B differ, in all experiments below Model A is a network without pre-training, while Model B is a network pre-trained on the ImageNet dataset.

4.3. Evaluation Metrics

To objectively evaluate and analyze the performance of the DMAS method, we used the mean Pixel Accuracy (mPA) and mean Intersection over Union (mIoU) as comprehensive evaluation metrics for segmentation effectiveness. For a given category, True Positive (TP) is the number of samples correctly identified as positive, False Negative (FN) is the number of positive samples falsely identified as negative, False Positive (FP) is the number of samples falsely identified as positive, and True Negative (TN) is the number of samples correctly identified as negative. Pixel Accuracy (PA) is defined as:

$$\mathrm{PA} = \frac{TP + TN}{TP + TN + FP + FN}$$

mPA is the average PA across all categories. IoU indicates the intersection over the union between the predicted and the actual values for each category. mIoU is the average IoU across all $k$ categories:

$$\mathrm{mIoU} = \frac{1}{k} \sum_{i=1}^{k} \frac{TP_i}{TP_i + FP_i + FN_i}$$
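As a hedged illustration, both metrics can be computed from a confusion matrix. The sketch assumes that PA is the one-vs-rest accuracy (TP + TN) / (TP + TN + FP + FN) per category and that IoU is TP / (TP + FP + FN) per category, each averaged over categories; the function names `confusion_matrix` and `mean_pa_and_miou` are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts pixels of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def mean_pa_and_miou(cm):
    """Return (mPA, mIoU) averaged over categories with defined values."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    pas, ious = [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        if total > 0:
            pas.append((tp + tn) / total)
        if tp + fp + fn > 0:  # skip categories absent from both maps
            ious.append(tp / (tp + fp + fn))
    return sum(pas) / len(pas), sum(ious) / len(ious)


# Usage: four pixels, two categories, one misclassified pixel.
cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
mpa, miou = mean_pa_and_miou(cm)
```

In this small example one of the four pixels is misclassified, so both categories have a PA of 0.75, while the IoU values are 1/2 and 2/3.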

4.4. Performance Comparison

We compared the DMAS method on the DUT and SUIM datasets with several widely used supervised and semi-supervised semantic segmentation algorithms, including:

a) Supervised learning with fine tuning: FCN[33], DeepLabv3[23], and LR-ASPP[34].

b) Semi-supervised learning: CutMix augmentation[35] and the hybrid method s4GAN+MLMT[36].

All the above algorithms were implemented on the PyTorch platform, utilizing the same NVIDIA RTX A6000 48GB GPU, and were evaluated using the same pre-training and parameters on both the DUT and SUIM datasets.

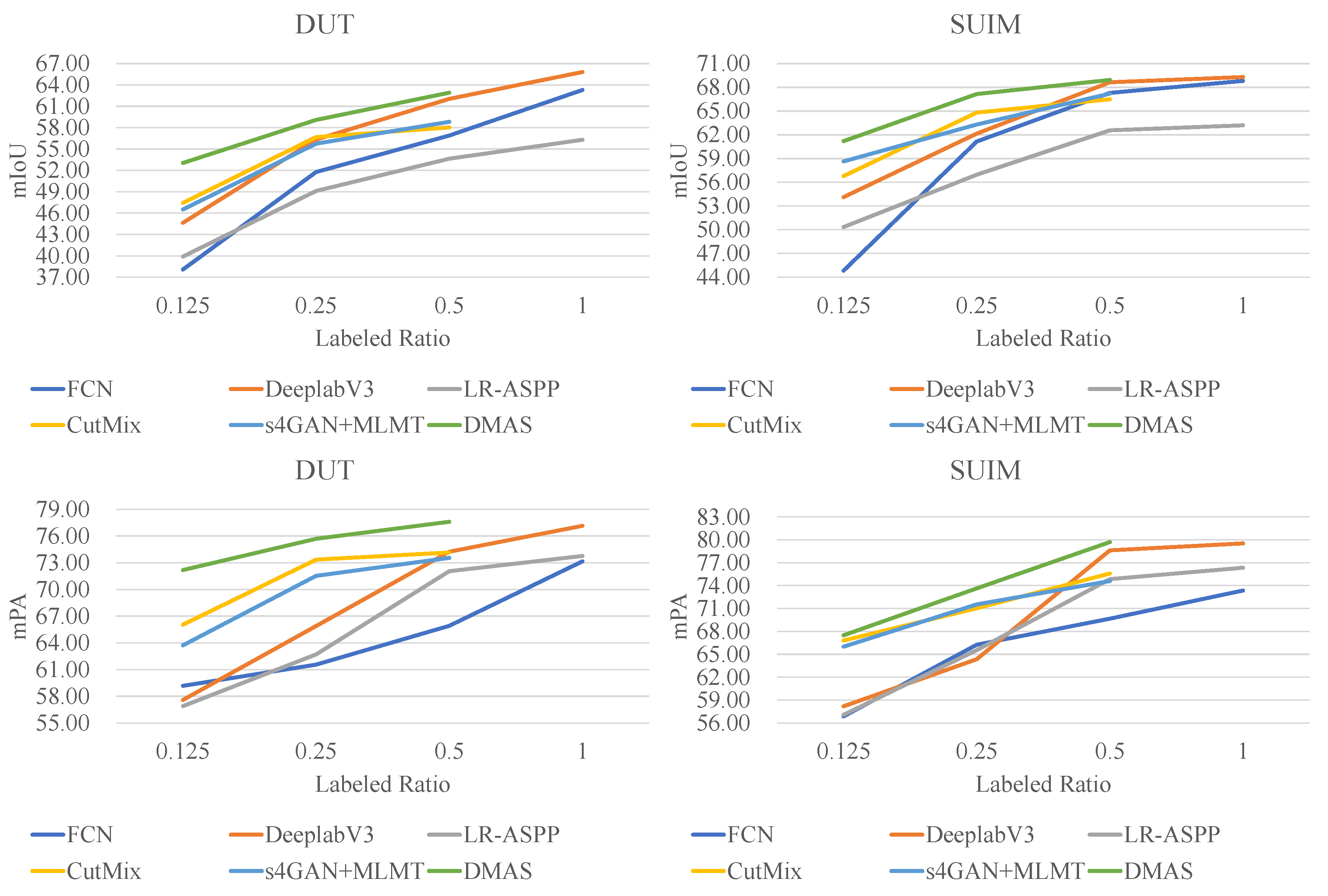

4.4.1. Quantitative Analysis

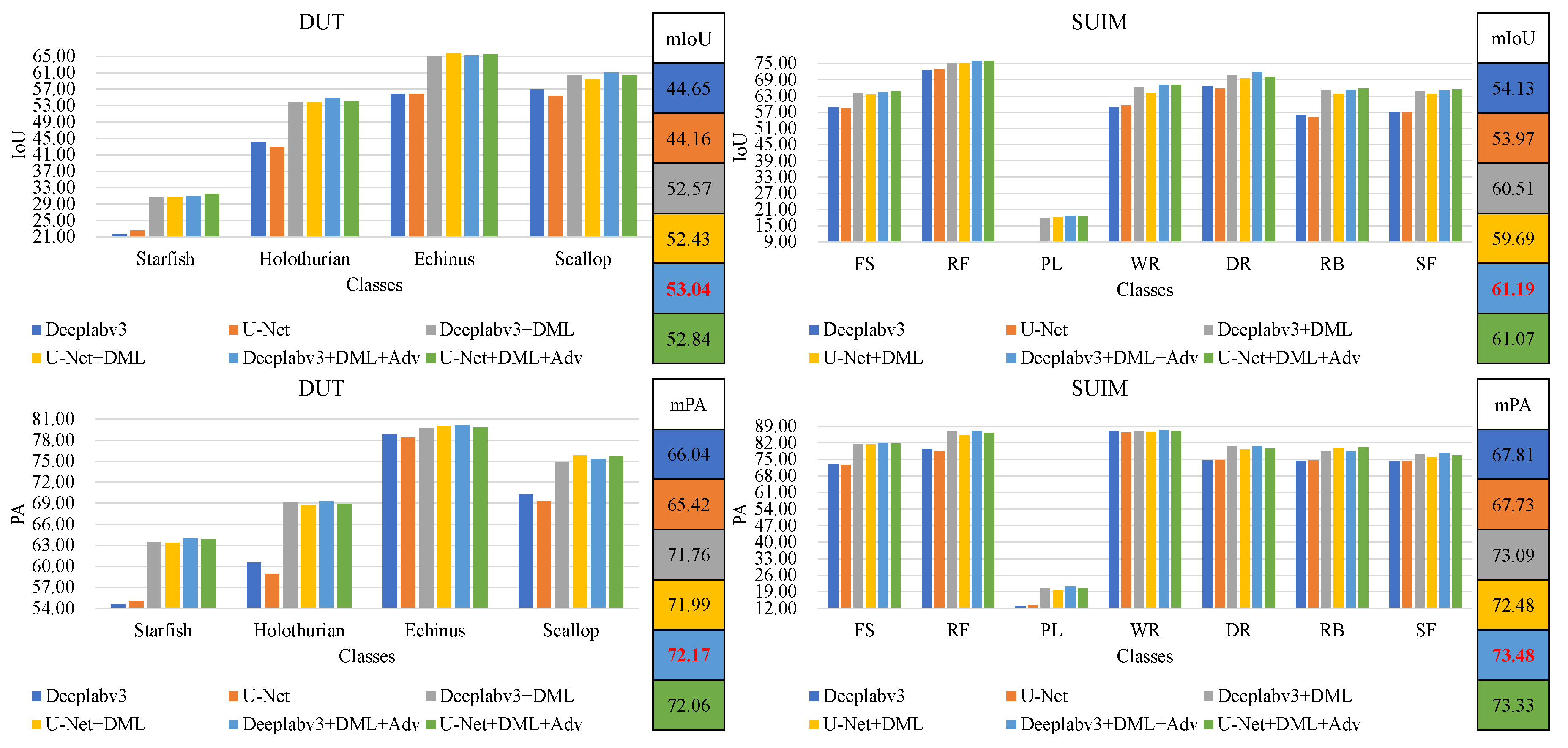

Figure 4 displays the results of semantic segmentation experiments conducted at varying labeled ratios on the DUT and SUIM datasets. These results show that our proposed method, DMAS, outperforms the existing supervised and semi-supervised learning approaches, even with limited data availability in the DUT and SUIM datasets. Notably, DMAS maintains consistently high performance as the number of labeled training samples is reduced. For instance, at a labeled ratio of 0.125, DMAS achieves mIoU and mPA values comparable to those obtained by LR-ASPP in a fully supervised training scenario. These results highlight the robustness and effectiveness of DMAS in scenarios with sparse labeled data, and they support the hypothesis that the semi-supervised DMAS algorithm provides a significant advantage in semantic segmentation tasks, particularly when labeled ratios are low.

4.4.2. Qualitative Analysis

To provide a more tangible evaluation of DMAS's performance, we conducted training on the DUT and SUIM datasets with an annotation ratio of 0.125. The comparative results are illustrated in Figure 2 and Figure 3. Even with a limited number of annotated samples, the DMAS method significantly outperforms other semantic segmentation methods based on full supervision or semi-supervision, particularly in terms of pixel classification accuracy and object boundary delineation. Specifically, as shown in the fifth row of Figure 2 and the third column of Figure 3, when various methods segment the same image, DMAS surpasses the other methods in contour edge articulation, pixel classification over larger areas, and classification accuracy for smaller objects. This reflects DMAS's efficacy in leveraging unlabeled data to enhance the model's segmentation capability, thereby achieving successful semi-supervised learning. However, there remains a noticeable gap between DMAS's predictions and the actual labels, as illustrated in the first row of Figure 2 and the thirteenth row of Figure 3. The precision of edge segmentation and regional delineation for targets is still suboptimal, which may be due to inherent performance limitations of the segmentation network or an insufficiency of training data. Overall, in terms of achieving more accurate predictions for underwater images at a reduced cost, the DMAS method demonstrates a significant advantage over the currently prevalent full-supervision semantic segmentation algorithms.

4.5. Ablation Study

To further assess the rationality of the framework design choices in the DMAS method, we conducted ablation experiments on two underwater datasets. Initially, the baseline network consists of a fully supervised trained model within the DMAS method's segmentation network, including DeepLabv3 and U-Net. Subsequently, this baseline network is enhanced with dynamic mutual learning. In contrast, the DMAS method not only incorporates the baseline network but also introduces model adversarial training and a dynamic mutual learning strategy. Under a labeling rate of 0.125, these experiments employed the same pre-trained dataset, trained on the training dataset, and subsequently evaluated on the test dataset. The quantitative analysis of the test sets for both datasets is illustrated in Figure 5. Training with the dynamic mutual learning method within the same experimental setup significantly enhanced the model's segmentation precision compared to solely using the baseline network. In addition, the inclusion of an adversarial training strategy improved the algorithm's overall performance. For discernible underwater targets, the mean Pixel Accuracy (mPA) exceeds 0.7, and for well-segmented instances, it can reach up to 0.8. This indicates that the dynamic mutual learning strategy can effectively utilize unlabeled data to extract associative information, thereby optimizing the segmentation model.

As illustrated by the visualization results in Figure 6 and Figure 7, models trained using the DMAS method can segment the majority of underwater target areas more proficiently. When faced with low-contrast and indistinct contour targets (as shown in the third row of Figure 6 and the second row of Figure 7), the models exhibit minimal classification errors and perform superior segmentation of small targets (as shown in the fourth row of Figure 6) and certain peripheral details. In the case of semantic segmentation of concealed targets (as shown in rows one and five of Figure 6), numerous errors still persist, yet there is a relative enhancement in classification accuracy compared to the other two methods.

4.6. Discussion

The increasing demand for advanced underwater detection and monitoring technologies has made underwater image semantic segmentation a prominent research area. Researchers are increasingly turning to convolutional neural networks (CNNs) and their variants, which enhance the accuracy and robustness of segmentation. These models automatically learn hierarchical features, making them well-suited for addressing the complex patterns and textures characteristic of underwater scenes.

Despite these advancements, the field of underwater image semantic segmentation continues to face numerous challenges: 1) The lack of large annotated datasets limits the training and validation of deep learning algorithms. 2) Factors such as low visibility, poor contrast, and the presence of various underwater elements often contribute to significant annotation noise, thereby obscuring the segmentation results.

To tackle the challenges posed by limited and noisy annotations, many studies have proposed self-training methods, wherein models iteratively label and retrain on their predicted results. This approach has been applied to underwater image segmentation and, especially when combined with confidence thresholds, can gradually enhance the model's performance on noisy data. However, a notable limitation of self-training methods is the lack of mechanisms to detect and correct errors during the training process. Furthermore, the choice of confidence threshold directly impacts the effective utilization of low-confidence pseudo-labels.

To address the issue of underwater image semantic segmentation with limited noisy annotations, we have developed more sophisticated solutions. By leveraging collaborative platforms and crowdsourcing techniques, we can aggregate multiple annotations, which helps reduce noise levels in the training dataset. Aggregated data validated through multiple sources can provide higher quality annotations. We enable dynamic mutual learning between two distinctly different models. Each model iteratively retrains on unannotated data, using pseudo-labels generated by the other model. This process allows the models to guide each other, enhancing their noise correction capabilities. Simultaneously, in selecting the confidence threshold for pseudo-label selection, we no longer rely on traditional confidence levels. Instead, inspired by generative adversarial concepts, we train a discriminator to focus on the performance of pseudo-labels, thereby making their selection more adaptive.

Our method significantly reduces noise levels in underwater image semantic segmentation, but there are still limitations that need to be addressed. Although dynamic mutual learning theoretically improves noise correction in models, its practical application can cause instability during training due to interdependencies among models, which affects convergence speed and overall performance. This is particularly evident in model selection, which consequently affects the efficacy of dynamic mutual learning. To tackle these challenges, we intend to integrate more advanced semantic segmentation network architectures, including multi-scale feature extraction and multi-task learning, to enhance the model’s ability to handle complex underwater environments. Furthermore, we plan to explore multimodal learning methods to improve the model’s robustness and generalization by integrating diverse sensor data, including vision and sonar.

5. Conclusions

This paper introduces a novel semi-supervised method for underwater image semantic segmentation, named Dynamic Mutual Adversarial Segmentation (DMAS). The method employs a two-stage framework: adversarial pre-training and dynamic mutual learning. In the adversarial pre-training stage, the segmentation model is initially trained using labeled data to generate pseudo-labels. Concurrently, a convolutional discriminator is trained on the segmentation maps derived from both labeled and unlabeled data. This dual training process enables the network to differentiate between actual and predicted labels by producing a confidence map, which aids in quantitatively assessing the segmentation accuracy. During the dynamic mutual learning stage, multiple models with different prior knowledge are trained. By analyzing the divergence between these models, errors in the pseudo-labels can be detected. The training process incorporates a dynamically reweighted loss function, which adjusts weights based on the differences between the models. This method reduces the impact of discrepancies that suggest potential errors, thereby improving the precision of the training process. Experimental results on the DUT and SUIM datasets indicate that DMAS significantly enhances semantic segmentation performance, even with a limited amount of labeled data.