Submitted: 28 October 2025

Posted: 29 October 2025

Abstract

Keywords:

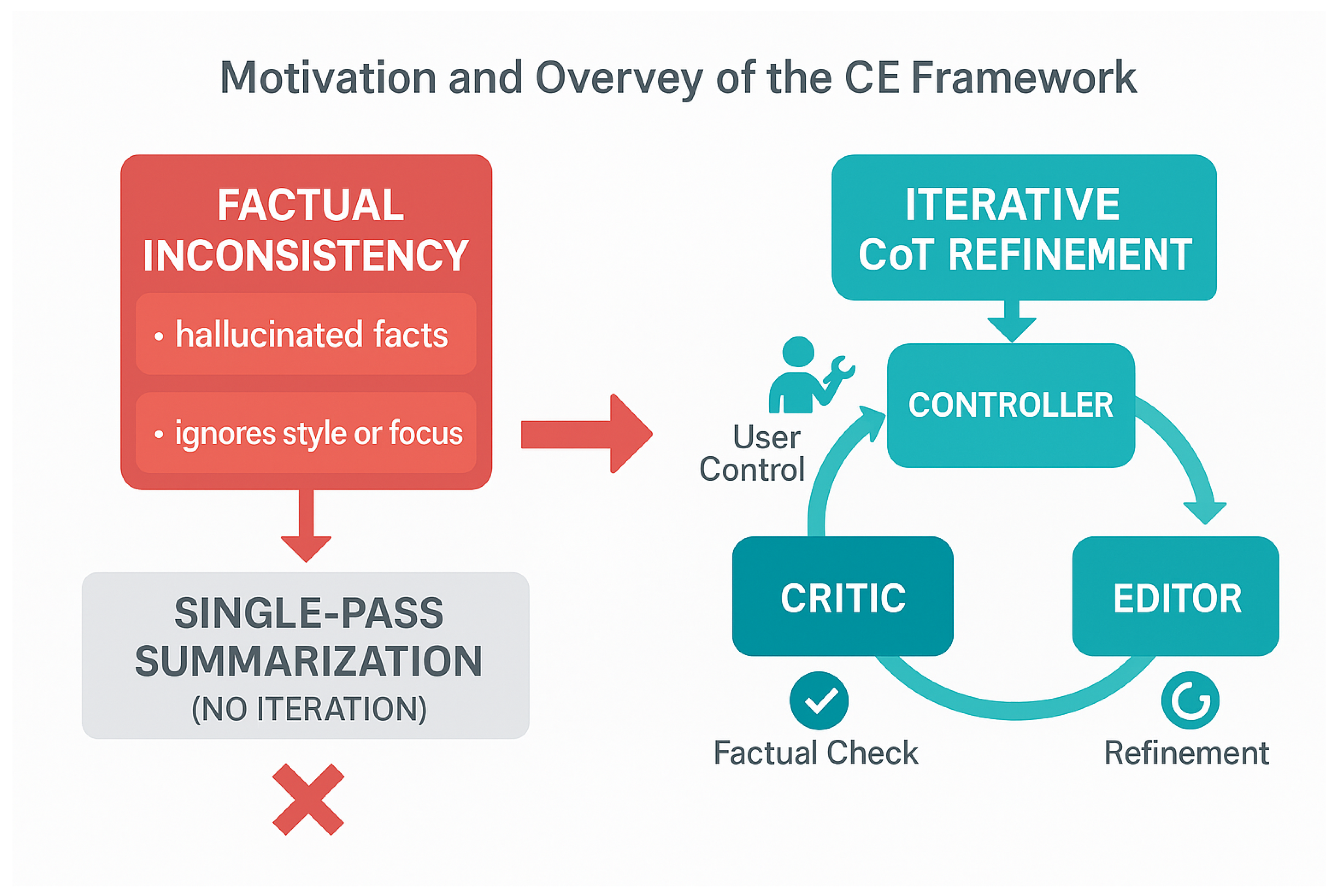

1. Introduction

- We propose C3E, a novel multi-round, controllable chain-of-thought critic-editor framework that effectively addresses both factual inconsistency and lack of user control in LLM-generated summaries.

- We introduce an explicit "Controller" module and integrate specialized zero-shot CoT prompting strategies (e.g., ControllerCoT, IntegratedCoT) to enable fine-grained user control over summary generation and post-editing.

- We extend the FRANK dataset with diverse user control instructions and develop a comprehensive evaluation methodology, including the Control Adherence Score (CAS), to robustly assess both factual consistency and control compliance.

2. Related Work

2.1. LLM-Based Summarization and Factual Consistency

2.2. Controllable Text Generation and Iterative Refinement

3. Method

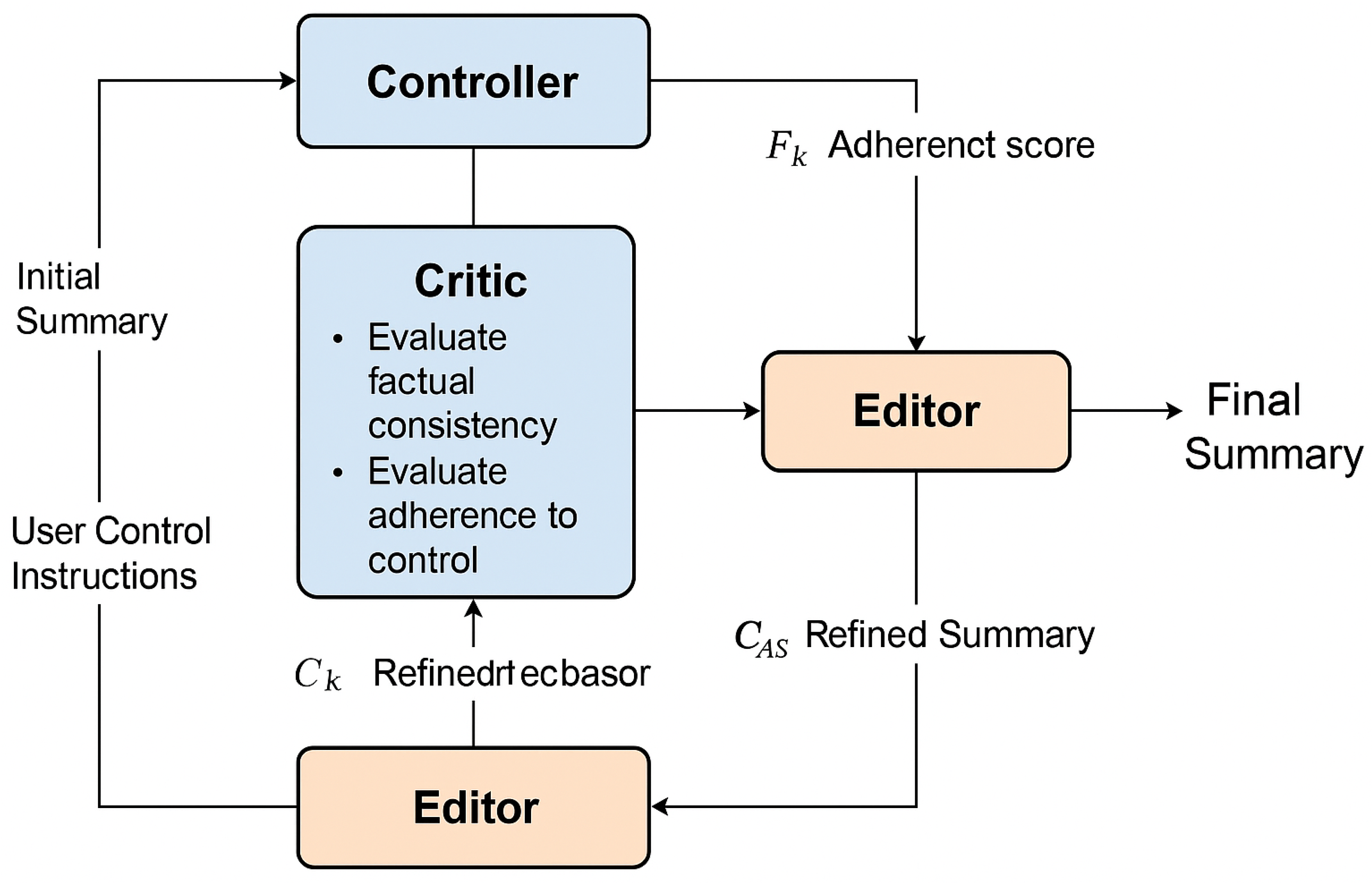

3.1. Framework Overview

3.2. Core Modules

3.2.1. Controller Module

3.2.2. Critic Module

3.2.3. Editor Module

- EditorCoT: This prompt primarily focuses on correcting factual inconsistencies identified by the Critic. It guides the LLM to analyze the problematic span and error type, then formulate a specific correction strategy (e.g., deleting a hallucinated sentence, rephrasing an inaccurate claim based on ), and finally apply the correction to produce a more accurate summary segment.

- IntegratedCoT: This more advanced prompt combines the goals of factual consistency correction and control adherence optimization into a single CoT reasoning process. The Editor can therefore weigh the identified factual errors and the user’s control instructions together during rewriting, so that fixing one does not introduce a violation of the other. For instance, the prompt might first instruct the LLM to locate an inconsistent span and identify its error type, then propose a rewrite, and finally verify that the new text both corrects the error and satisfies the length or focus requirements specified in the control instructions C.
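To make the difference between the two Editor prompts concrete, the following is a minimal Python sketch of how EditorCoT-style and IntegratedCoT-style prompts could be assembled. The template wording, the `CriticFinding` structure, and all function names are hypothetical illustrations; they are not the prompts used in C3E, whose exact text is not given here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CriticFinding:
    """One issue reported by the Critic (hypothetical structure)."""
    span: str        # problematic span in the current summary
    error_type: str  # e.g. "hallucination" or "entity error"
    feedback: str    # the Critic's natural-language suggestion


def _header(document: str, summary: str, finding: CriticFinding) -> str:
    # Context shared by both prompt variants.
    return (
        f"Source document:\n{document}\n\n"
        f"Current summary:\n{summary}\n\n"
        f'Problematic span: "{finding.span}"\n'
        f"Error type: {finding.error_type}\n"
        f"Critic feedback: {finding.feedback}\n\n"
    )


def _numbered(steps: List[str]) -> str:
    # Render the reasoning steps as an explicit, numbered chain of thought.
    body = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "Think step by step:\n" + body + "\n"


def editor_cot_prompt(document: str, summary: str, finding: CriticFinding) -> str:
    """EditorCoT-style prompt: correct the factual error only."""
    steps = [
        "Explain why the span is inconsistent with the source document.",
        "Choose a correction strategy: delete, replace, or rephrase the span.",
        "Apply the correction and output the revised summary.",
    ]
    return _header(document, summary, finding) + _numbered(steps)


def integrated_cot_prompt(
    document: str, summary: str, finding: CriticFinding, controls: List[str]
) -> str:
    """IntegratedCoT-style prompt: correct the error, then re-check every control."""
    control_block = "Control instructions:\n" + "\n".join(f"- {c}" for c in controls) + "\n\n"
    steps = [
        "Explain why the span is inconsistent with the source document.",
        "Propose a rewrite that corrects the error.",
        "Check the rewrite against each control instruction and adjust it if any is violated.",
        "Output the final revised summary.",
    ]
    return _header(document, summary, finding) + control_block + _numbered(steps)
```

The only structural difference is that the integrated variant lists the control instructions and inserts an explicit verification step before the final output, mirroring the locate, rewrite, verify order described above.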

3.3. Multi-Round Iteration and Chain-of-Thought Reasoning

- CoT in Controller: The ControllerCoT prompt guides the initial generation or modification process by requiring the LLM to explicitly consider and address each aspect of the user’s control instructions, ensuring that the starting summary is well-aligned with the user’s intent from the outset.

- CoT in Critic: The CriticCoT prompt enables a detailed and systematic analysis of factual consistency by tracing information back to the source document and methodically checking against control instructions. This structured reasoning allows for precise identification of problematic spans, explicit justification for score assignments, and the generation of highly specific and actionable feedback ().

- CoT in Editor: The Editor’s CoT prompts (EditorCoT and IntegratedCoT) facilitate guided rewriting. The CoT steps involve understanding the Critic’s feedback, formulating a precise plan for correction (e.g., deleting, replacing, rephrasing a specific span), and then executing the plan while maintaining coherence, factual accuracy, and adherence to all control instructions.
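The interplay of the three modules can be summarized as a loop. The sketch below is a minimal Python illustration under stated assumptions: each module is modeled as a plain callable that would wrap an LLM call with its CoT prompt, the Critic returns a list of feedback items (empty when it finds no issue), and the loop stops either when the Critic is satisfied or after a cap of five rounds, the maximum that appears in the iteration table. The names `run_c3e`, `controller`, `critic`, and `editor` are hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical module signatures; in practice each would prompt an LLM.
Controller = Callable[[str, List[str]], str]              # (document, controls) -> summary
Critic = Callable[[str, str, List[str]], List[str]]       # feedback items, [] if none
Editor = Callable[[str, str, List[str], List[str]], str]  # revised summary


def run_c3e(
    document: str,
    controls: List[str],
    controller: Controller,
    critic: Critic,
    editor: Editor,
    max_rounds: int = 5,
) -> Tuple[str, List[str]]:
    """Controller pass (Round 0), then up to max_rounds Critic-Editor rounds."""
    summary = controller(document, controls)  # Round 0: control-aware draft
    history = [summary]
    for _ in range(max_rounds):
        feedback = critic(document, summary, controls)
        if not feedback:  # no remaining factual or control issues: stop early
            break
        summary = editor(document, summary, feedback, controls)
        history.append(summary)
    return summary, history
```

For example, with a stand-in Critic that flags any summary longer than three words and a stand-in Editor that drops the last word, a six-word Round 0 draft is edited three times, and the loop exits on the fourth Critic call because no feedback remains.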

3.4. Implementation Details

4. Experiments

4.1. Experimental Setup

4.1.1. Large Language Models

4.1.2. Datasets

4.1.3. Evaluation Metrics

4.1.4. Implementation Details

4.2. Baselines

4.3. Main Results

4.4. Effectiveness of Multi-Round Iteration and Chain-of-Thought Reasoning

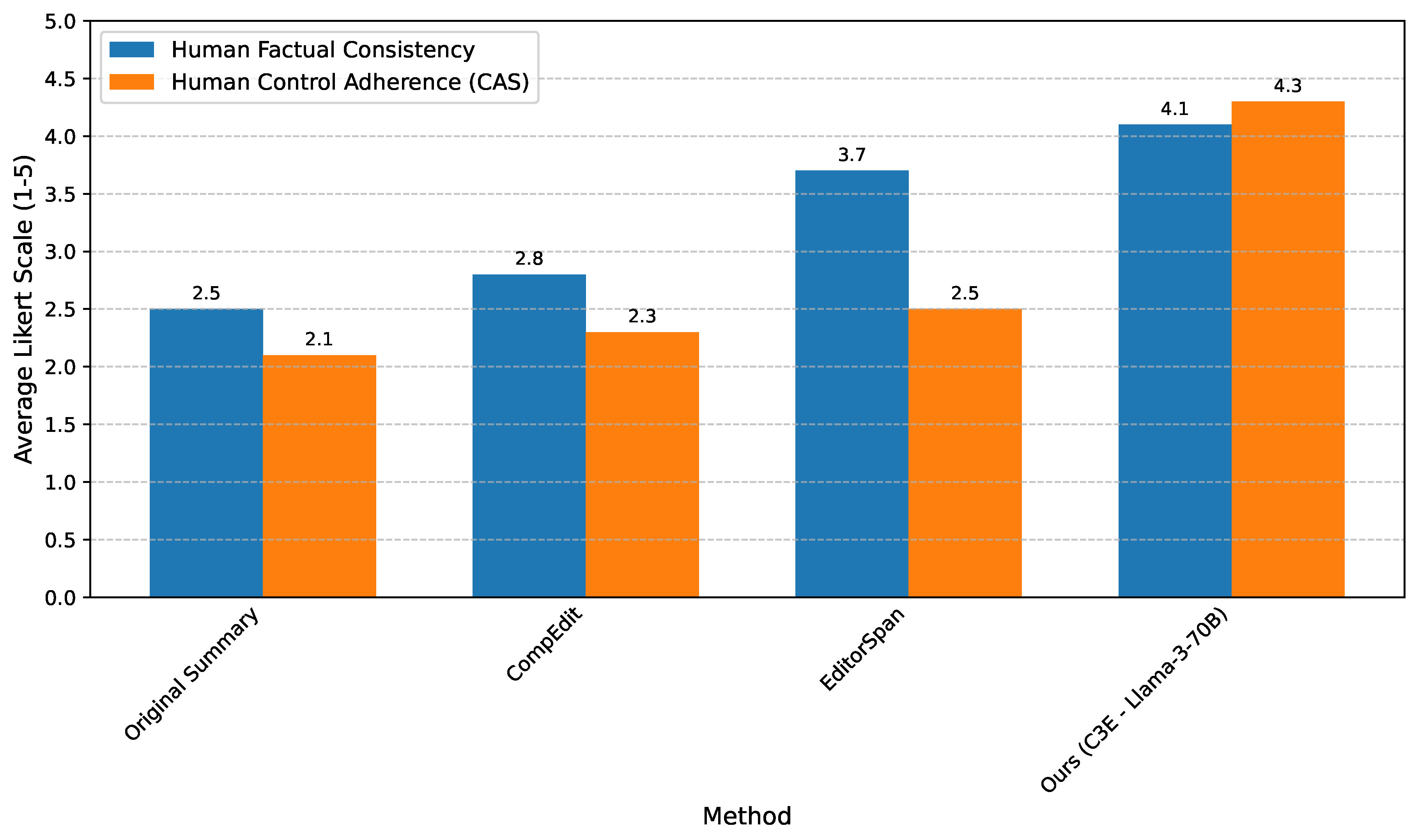

4.5. Human Evaluation

4.6. Ablation Study

- Impact of the Controller: Removing the Controller module (C3E w/o Controller) leads to a noticeable drop in factual consistency (e.g., QAFactEval decreases from 3.705 to 3.489) and an even larger drop in the Control Adherence Score (CAS falls from 4.3 to 3.5). This indicates that the Controller’s initial alignment of the summary with user controls lays a strong foundation for subsequent refinement. Without this initial pass, the Critic and Editor must correct more deviations from the instructions, and they do not fully close the gap.

- Importance of Chain-of-Thought Reasoning: When CoT prompting is removed from all modules (C3E w/o CoT), performance degrades on every factual consistency metric and on CAS, with QAFactEval falling to 3.210, DAE rising to 0.812, and CAS falling to 3.1; only ROUGE-L rises slightly (0.290 versus 0.285). This underscores the importance of structured reasoning for complex tasks such as factual verification and nuanced control adherence, indicating that CoT is not merely a stylistic choice but a key enabler of C3E’s performance.

- Value of Multi-round Iteration: Limiting the Critic and Editor to a single pass (C3E (Single-pass Critic-Editor)) lowers performance relative to the full iterative C3E, with QAFactEval at 3.551 and CAS at 3.8. This confirms that multi-round refinement is essential for progressively addressing complex issues and converging toward higher quality and compliance.

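- Necessity of the Editor: The C3E (Critic only, no Editor) variant, which evaluates summaries without correcting them, yields the lowest factual consistency and control adherence scores of all variants (QAFactEval 2.801, DAE 0.820, FactCC 0.340, CAS 2.6), although its ROUGE-L (0.301) is the highest. This emphasizes that the Critic’s feedback is only actionable when paired with an effective Editor.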
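The relative size of each ablation effect can be read directly off the ablation table. The short Python sketch below computes the percentage drop of each higher-is-better metric (QAFactEval, FactCC, CAS) relative to the full model; DAE is left out because it is lower-is-better, and ROUGE-L because it moves in the opposite direction. The dictionary layout and the function name `relative_drop` are illustrative only.

```python
# Scores copied from the ablation table (higher is better for all three metrics).
FULL = {"QAFactEval": 3.705, "FactCC": 0.405, "CAS": 4.3}
VARIANTS = {
    "w/o Controller": {"QAFactEval": 3.489, "FactCC": 0.388, "CAS": 3.5},
    "w/o CoT (all modules)": {"QAFactEval": 3.210, "FactCC": 0.365, "CAS": 3.1},
    "Single-pass Critic-Editor": {"QAFactEval": 3.551, "FactCC": 0.395, "CAS": 3.8},
    "Critic only, no Editor": {"QAFactEval": 2.801, "FactCC": 0.340, "CAS": 2.6},
}


def relative_drop(full: dict, variant: dict) -> dict:
    """Percentage decrease of each metric relative to the full model, to one decimal."""
    return {m: round(100 * (full[m] - variant[m]) / full[m], 1) for m in full}


for name, scores in VARIANTS.items():
    print(f"{name:28s}", relative_drop(FULL, scores))
```

For every variant, CAS falls proportionally more than QAFactEval (for example, 18.6% versus 5.8% without the Controller), which is consistent with the observation above that removing the Controller hurts control adherence more than factual consistency.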

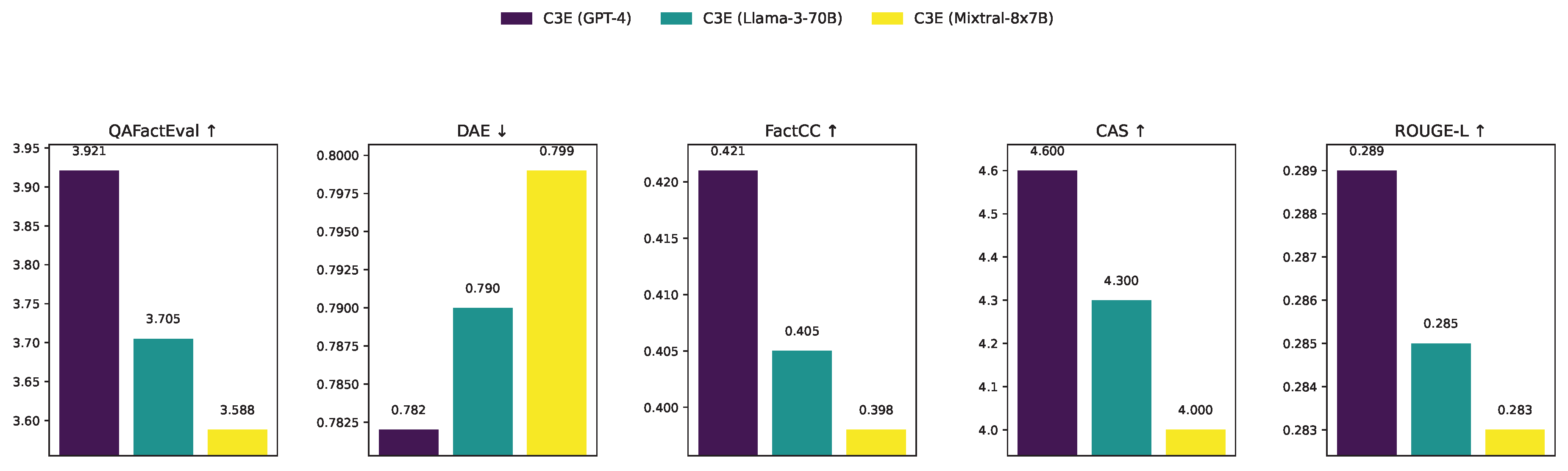

4.7. Impact of Base LLM Choice

- GPT-4’s Superiority: When powered by GPT-4, C3E achieves the highest scores across all factual consistency metrics (QAFactEval: 3.921, FactCC: 0.421) and the highest Control Adherence Score (CAS: 4.6). This suggests that GPT-4’s stronger reasoning lets it follow complex CoT prompts more effectively, yielding more accurate factual verification and more precise adherence to control instructions.

- Strong Open-Source Alternatives: Llama-3-70B, while slightly trailing GPT-4, still delivers very strong performance, maintaining high factual consistency (QAFactEval: 3.705, FactCC: 0.405) and excellent control adherence (CAS: 4.3). This highlights Llama-3’s viability as a powerful open-source backbone for C3E, offering a compelling balance between performance and accessibility.

- Mixtral-8x7B’s Efficiency: Mixtral-8x7B delivers competitive performance, particularly given its smaller size and higher inference efficiency compared to Llama-3-70B and GPT-4. It achieves respectable scores (QAFactEval: 3.588, CAS: 4.0), making it a strong candidate when computational resources are a primary constraint.

4.8. Analysis of Iterative Refinement Progress

- Consistent Improvement: Both factual consistency metrics (QAFactEval, FactCC) and the Control Adherence Score (CAS) increase steadily and substantially from Round 0 to Round 4 (QAFactEval from 3.012 to 3.705, FactCC from 0.355 to 0.405, CAS from 3.2 to 4.3). The initial Controller output (Round 0) is already a controlled summary, and the subsequent Critic-Editor loops refine it further.

- Convergence within Four Rounds: The largest gains occur in the first rounds; the step from Round 0 to Round 1 alone raises QAFactEval by 0.438 and CAS by 0.7. By Round 4 the metrics have stabilized, and Round 5 brings no further improvement (QAFactEval dips slightly from 3.705 to 3.700 and FactCC from 0.405 to 0.404, while CAS stays at 4.3). This is consistent with our preliminary observation that most quality gains are achieved within about four rounds on average, supporting the decision to cap the number of refinement rounds.

- Diminishing Edits: The "Edit Success Rate" column reports the percentage of summaries that still required edits in a given round, and it decreases steadily from 85.3% in Round 1 to 20.5% in Round 5. This indicates that the Critic identifies fewer issues in later rounds and the Editor makes fewer substantial modifications, so summaries progressively converge toward the desired quality and adherence thresholds.
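The convergence claim can be made explicit by computing round-over-round gains from the iteration table. The Python sketch below does this and reports the first round whose gain falls below a threshold `eps`; the threshold values used here are hypothetical, since the text does not specify a numeric stopping criterion, and the helper names are illustrative.

```python
from typing import List, Optional

# (round, QAFactEval, FactCC, CAS, % of summaries still edited) from the iteration table;
# Round 0 is the Controller output, listed as 100.0 (Initial).
ROUNDS = [
    (0, 3.012, 0.355, 3.2, 100.0),
    (1, 3.450, 0.380, 3.9, 85.3),
    (2, 3.615, 0.395, 4.1, 72.1),
    (3, 3.680, 0.402, 4.2, 55.8),
    (4, 3.705, 0.405, 4.3, 38.2),
    (5, 3.700, 0.404, 4.3, 20.5),
]


def gains(values: List[float]) -> List[float]:
    """Round-over-round change, rounded to three decimals to hide float noise."""
    return [round(b - a, 3) for a, b in zip(values, values[1:])]


def first_plateau(values: List[float], eps: float) -> Optional[int]:
    """First round whose gain over the previous round is below eps (None if none)."""
    for rnd, gain in enumerate(gains(values), start=1):
        if gain < eps:
            return rnd
    return None


qafe = [r[1] for r in ROUNDS]
print(gains(qafe))                # [0.438, 0.165, 0.065, 0.025, -0.005]
print(first_plateau(qafe, 0.01))  # 5
```

With `eps = 0.01` for QAFactEval, Round 5 is the first round below the threshold (a gain of -0.005), so Round 4 is the last round that still improves the score; CAS gives the same answer with `eps = 0.05`.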

4.9. Detailed Control Adherence Breakdown

- High Adherence for Concrete Controls: C3E demonstrates exceptionally high adherence to quantitative and explicit controls such as Length Constraints (CAS 4.5, Adherence Rate 92.1%) and Keyword Inclusion/Exclusion (CAS 4.4, Adherence Rate 90.7%). The precise nature of these instructions allows the Critic to provide clear feedback and the Editor to make targeted modifications, leading to high success rates.

- Robustness in Semantic Controls: The framework also performs very well on more semantic controls like Focus Areas (CAS 4.2, Adherence Rate 88.5%) and Emotional Tone (CAS 4.1, Adherence Rate 85.3%). The CoT reasoning in the Critic and Editor modules enables the LLMs to understand and apply nuanced semantic adjustments effectively.

- Challenges in Subjective/Complex Controls: While still strong, adherence is slightly lower for more subjective or complex controls such as Perspective Shift (CAS 3.9, Adherence Rate 78.9%) and Stylistic Preferences (CAS 3.8, Adherence Rate 75.2%). These types of controls often require deeper contextual understanding and more creative rewriting, presenting greater challenges even for advanced LLMs. This suggests potential areas for future improvement, perhaps through more specialized prompts or fine-tuning for these specific control types.
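Part of the reason concrete controls score higher is that they can be checked mechanically. The Python sketch below is a hypothetical rule-based checker for two of the concrete control types in the breakdown table, a word limit and keyword inclusion/exclusion; it is not the C3E Critic, which the text describes as an LLM guided by CoT prompting, and the class and function names are illustrative. Semantic controls such as tone, perspective, or style have no comparably simple test, which is consistent with their lower adherence rates.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ConcreteControls:
    """Hypothetical container for mechanically checkable controls."""
    max_words: Optional[int] = None
    include: List[str] = field(default_factory=list)  # keywords that must appear
    exclude: List[str] = field(default_factory=list)  # keywords that must not appear


def _contains(text: str, keyword: str) -> bool:
    # Case-insensitive whole-word match, so "rumor" does not match "rumored".
    pattern = r"\b" + re.escape(keyword) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def check_concrete_controls(summary: str, controls: ConcreteControls) -> List[str]:
    """Return human-readable violations; an empty list means every check passed."""
    violations = []
    n_words = len(summary.split())  # whitespace tokens as a simple word count
    if controls.max_words is not None and n_words > controls.max_words:
        violations.append(f"length {n_words} words exceeds limit of {controls.max_words}")
    for kw in controls.include:
        if not _contains(summary, kw):
            violations.append(f"missing required keyword '{kw}'")
    for kw in controls.exclude:
        if _contains(summary, kw):
            violations.append(f"contains excluded keyword '{kw}'")
    return violations
```

Violation messages of this kind are the sort of precise, actionable feedback that the text credits for the high adherence on length and keyword controls.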

5. Conclusion

| Method | QAFactEval ↑ | DAE ↓ | FactCC ↑ | ROUGE-L ↑ | BERTScore-F1 ↑ |
|---|---|---|---|---|---|
| Original Summary | 2.434 | 0.795 | 0.374 | 0.326 | 0.877 |
| CompEdit | 2.675 | 0.786 | 0.370 | 0.275 | 0.871 |
| EditorSpan (Llama-3.3-70B) | 3.642 | 0.803 | 0.392 | 0.281 | 0.873 |
| Ours (C3E - Llama-3-70B) | 3.705 | 0.790 | 0.405 | 0.285 | 0.875 |

| Method Variant | QAFactEval ↑ | DAE ↓ | FactCC ↑ | CAS ↑ | ROUGE-L ↑ |
|---|---|---|---|---|---|
| C3E (Full) | 3.705 | 0.790 | 0.405 | 4.3 | 0.285 |
| C3E w/o Controller | 3.489 | 0.798 | 0.388 | 3.5 | 0.288 |
| C3E w/o CoT (all modules) | 3.210 | 0.812 | 0.365 | 3.1 | 0.290 |
| C3E (Single-pass Critic-Editor) | 3.551 | 0.792 | 0.395 | 3.8 | 0.287 |
| C3E (Critic only, no Editor) | 2.801 | 0.820 | 0.340 | 2.6 | 0.301 |

| Iteration Round | QAFactEval ↑ | FactCC ↑ | CAS ↑ | Edit Success Rate (%) |
|---|---|---|---|---|
| Round 0 (Controller Output) | 3.012 | 0.355 | 3.2 | 100.0 (Initial) |
| Round 1 | 3.450 | 0.380 | 3.9 | 85.3 |
| Round 2 | 3.615 | 0.395 | 4.1 | 72.1 |
| Round 3 | 3.680 | 0.402 | 4.2 | 55.8 |
| Round 4 | 3.705 | 0.405 | 4.3 | 38.2 |
| Round 5 (Max) | 3.700 | 0.404 | 4.3 | 20.5 |

| Control Type | Average CAS ↑ | Adherence Rate (%) |
|---|---|---|
| Overall C3E (Llama-3-70B) | 4.3 | – |
| Length Constraints (e.g., word count) | 4.5 | 92.1 |
| Focus Areas (e.g., specific entities/themes) | 4.2 | 88.5 |
| Emotional Tone (e.g., positive, neutral) | 4.1 | 85.3 |
| Perspective Shift (e.g., first-person to third-person) | 3.9 | 78.9 |
| Keyword Inclusion/Exclusion | 4.4 | 90.7 |
| Stylistic Preferences (e.g., formal, informal) | 3.8 | 75.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).