One-sentence significance

I demonstrate that information can propagate faster than light without transporting energy, maintaining the conservation and symmetry principles of SR/GR while producing concrete, falsifiable signatures in quantum and gravitational systems.

Submission metadata

The Lugon Framework: Informational Foundations of Physical Law

Part I — A Sequestered Informational Sector and Its Compatibility with Relativistic and Quantum Physics

Version: v1.0 • Date: October 5, 2025 • DOI: 10.5281/zenodo.17298365

Status: First paper in a continuing series; follow-up paper (The Lugon Kernel and the Unified Invariants of Physical Law) in preparation.

Suggested arXiv categories: gr-qc; hep-th; quant-ph

Comments: First paper in The Lugon Framework: Informational Foundations of Physical Law series. Follow-up paper (The Lugon Kernel and the Unified Invariants of Physical Law) in preparation. 66 pages, 1 figure. Categories: gr-qc, hep-th, quant-ph.

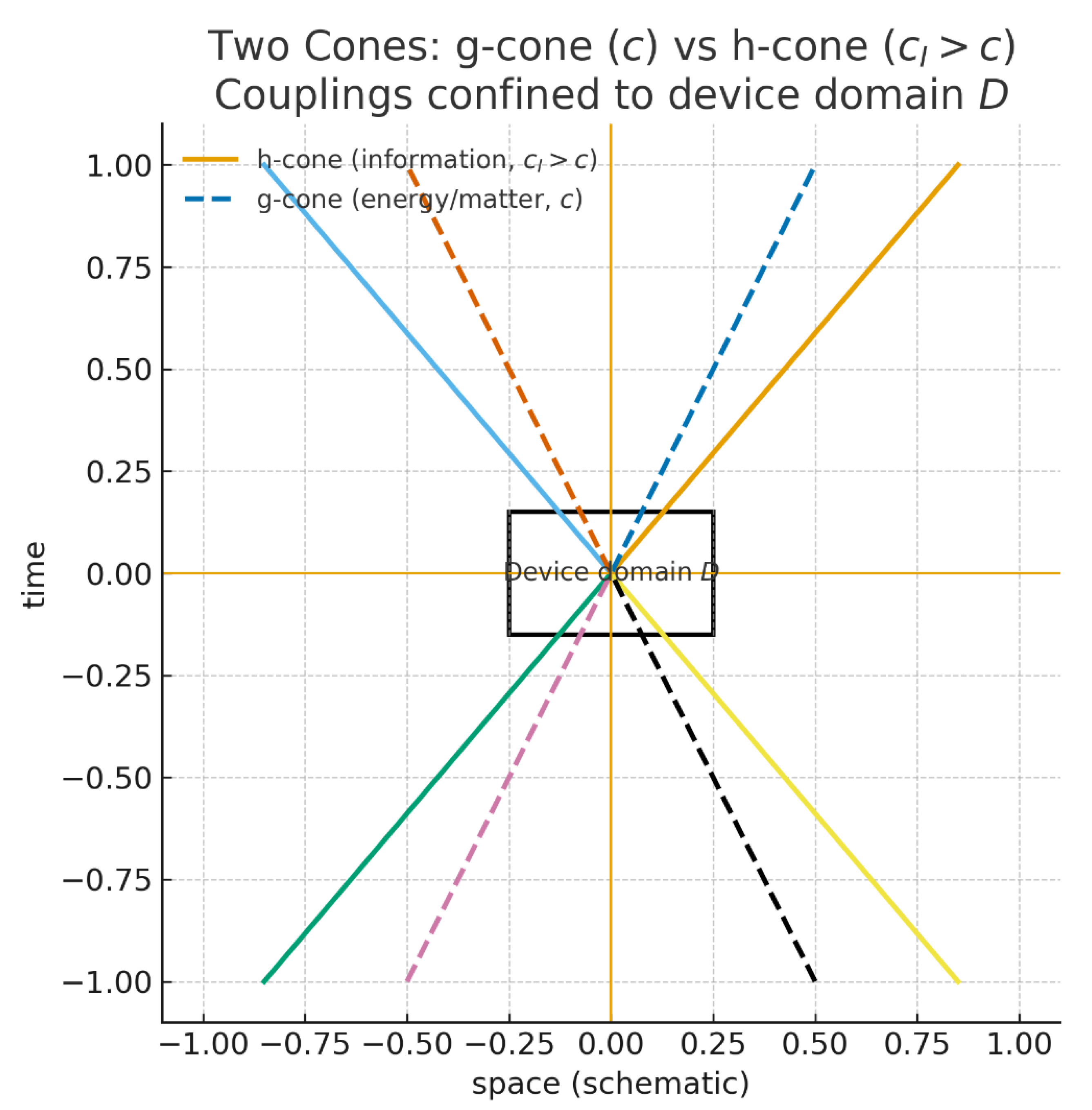

Figure 1.

Two cones and device domain. The ordinary g-cone (dashed) bounds energy/matter at speed c. The information h-cone (solid) is wider, with invariant speed $c_I > c$. All couplings to carriers are confined to the device domain D (box), so no superluminal signaling or energy flow exists outside D.

Research Pathway

The investigation began with a simple question: why does the universe enforce the speed of light, $c$, as a universal limit? Special relativity makes this bound geometric: the invariant interval divides spacetime into timelike, lightlike, and spacelike regions, ensuring causal order. The relativistic energy relation then gives the physical reason: energy diverges as velocity approaches $c$, so no finite work can drive a massive particle across the light barrier. Within this structure, the prohibition of superluminal motion appears complete.
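The divergence described above can be made concrete numerically. The sketch below is an illustration only (not part of the framework); it evaluates the work $(\gamma - 1)mc^2$ needed to bring an electron from rest to speeds approaching $c$. The helper names `lorentz_factor` and `kinetic_energy` are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact SI value)


def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed 0 <= v < c."""
    if not 0.0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def kinetic_energy(mass_kg: float, v: float) -> float:
    """Work needed to bring a rest mass to speed v: (gamma - 1) m c^2, in joules."""
    return (lorentz_factor(v) - 1.0) * mass_kg * C**2


if __name__ == "__main__":
    electron_mass = 9.1093837015e-31  # kg (CODATA 2018)
    for beta in (0.5, 0.9, 0.99, 0.9999, 0.999999):
        v = beta * C
        print(f"v = {beta}c: gamma = {lorentz_factor(v):.4g}, "
              f"work = {kinetic_energy(electron_mass, v):.4g} J")
```

Each additional "9" in $v/c$ multiplies the required work by roughly $\sqrt{10}$, and $v = c$ itself is rejected outright.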

Quantum mechanics, however, introduces entanglement: correlations that persist across spacelike separations. Measurements on entangled pairs produce outcomes that violate Bell inequalities yet never permit controllable faster-than-light signals. The no-signaling theorem guarantees mathematical consistency with relativity, but it leaves an unsatisfying gap in understanding: if nothing travels faster than $c$, how are these global correlations maintained?

That question sharpened through practical developments. Quantum teleportation, superdense coding, and entanglement-based cryptography treat entanglement as a resource that can be distributed, consumed, and measured. If such correlations have operational value, then information itself behaves like a physical quantity—not merely statistical bookkeeping.

This recognition led to a new hypothesis: perhaps information and energy are distinct physical currencies. Energy and momentum remain confined to the spacetime metric $g_{\mu\nu}$, preserving all the tested consequences of special and general relativity. Information, by contrast, may propagate on an auxiliary metric $h_{\mu\nu}$ with its own invariant speed $c_I > c$. Because the information field carries identically vanishing stress–energy, it performs no work, curves no spacetime, and cannot be used for signaling; yet it provides the coherence needed to sustain entanglement across spacelike separations.

From this perspective, entanglement does not require superluminal energy transfer: it reflects coherence maintained within a sequestered informational sector. The resulting two-cone framework accommodates both relativity and quantum mechanics without contradiction: the g-cone bounds all energetic influence, while the wider h-cone governs non-energetic informational links. Interactions between the two sectors are confined to localized “gate” domains, ensuring radiative sequestering and thermodynamic consistency.

This sequence of questions (from the geometry of $c$, through the paradox of entanglement, to the recognition of information as a separate causal entity) motivates the effective-field-theory framework developed below. The following sections formalize this idea, derive its field equations, and outline empirical tests that can confirm or falsify the existence of a sequestered information sector.

For an extended narrative of the reasoning that led to this framework, see Appendix G.

Toward a Sequestered Information Sector

The paradox of entanglement, deepened by both thought experiments and real-world protocols, naturally raises the question of whether information is truly bound by the same constraints as energy and matter. If entanglement can be treated as a resource — quantified by entropy, exploited in communication, and even linked to spacetime geometry — then it is reasonable to ask whether information admits a mode of existence distinct from energetic transfer.

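This line of inquiry begins with the recognition that relativity enforces strict causal order through the invariant speed c. All known particles carrying energy or momentum are confined within or on the light cone; for massive particles this follows from the divergence of the relativistic energy:

$$E = \gamma m c^2 = \frac{m c^2}{\sqrt{1 - v^2/c^2}} \;\longrightarrow\; \infty \quad \text{as } v \to c.$$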

At the same time, entanglement correlations persist across spacelike separations, defying local realist explanations and yet producing no detectable violations of relativity through signaling. This apparent contradiction prompted the question: if entanglement does not transmit energy, what is it that propagates?

The natural hypothesis is that information occupies a sequestered sector, distinct from the energetic carriers constrained by relativity. In this view, all work-performing fields remain coupled to the physical spacetime metric $g_{\mu\nu}$, while information propagates on an auxiliary, non-dynamical metric $h_{\mu\nu}$. The latter admits an invariant speed $c_I$ (in adapted coordinates):

$$ds_h^2 = h_{\mu\nu}\,dx^\mu dx^\nu = -c_I^2\,dt^2 + d\mathbf{x}^2, \qquad c_I > c.$$

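Because the information field I is defined to have identically vanishing stress–energy,

$$T^{(I)}_{\mu\nu} \equiv 0,$$

it cannot perform work, transfer momentum, or curve spacetime. To see this explicitly, consider the action of the information field:

$$S_I[I; h] = -\frac{1}{2}\int d^4x\,\sqrt{-h}\;h^{\mu\nu}\,\partial_\mu I\,\partial_\nu I,$$

where $h_{\mu\nu}$ is the auxiliary metric, fixed and non-dynamical. The Hilbert stress–energy tensor is defined with respect to the physical metric:

$$T_{\mu\nu} = -\frac{2}{\sqrt{-g}}\,\frac{\delta S}{\delta g^{\mu\nu}}.$$

Because the action depends only on $h_{\mu\nu}$ (and I), not on the physical metric $g_{\mu\nu}$, the variation vanishes:

$$\frac{\delta S_I}{\delta g^{\mu\nu}} = 0 \quad\Longrightarrow\quad T^{(I)}_{\mu\nu} = 0.$$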

Thus, the I-field cannot carry energy–momentum, cannot do work, and cannot source curvature.

The propagation of information across the auxiliary metric thus leaves relativity intact for all energetic processes, even while coherence is maintained across spacelike intervals.

The coupling between the information field and physical devices is localized by a gate function $\chi_D(x)$ (see Appendix F, “Creation, Control and Models of Gate Functions”), ensuring that information exchange is confined strictly to the device domain D:

$$\chi_D(x) = 0 \quad \text{for } x \notin D, \qquad \chi_D(x) = 1 \ \text{in the interior of } D.$$

By construction, this framework addresses the motivating questions: relativity remains unviolated because energy and matter are restricted to the physical metric, while entanglement’s global correlations are explained as manifestations of information propagating in a sequestered sector. The existence of two causal cones, one for $g_{\mu\nu}$ and another for $h_{\mu\nu}$, provides a coherent picture in which both relativity and quantum mechanics retain their tested domains of validity.
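The two-cone picture can be illustrated by classifying a displacement $(\Delta t, \Delta x)$ against both cones. This is a minimal sketch assuming flat 1+1D metrics that share a rest frame, signature $(-,+)$, units with $c = 1$, and a hypothetical value $c_I = 3c$ chosen only for illustration; the function names are likewise hypothetical.

```python
C = 1.0  # units in which the light speed c = 1


def interval(dt: float, dx: float, speed: float) -> float:
    """Flat 1+1D interval -speed^2 dt^2 + dx^2 (signature -,+): positive means spacelike."""
    return -(speed ** 2) * dt ** 2 + dx ** 2


def classify(dt: float, dx: float, c_info: float) -> str:
    """Say which cone(s) contain the displacement (dt, dx); requires c_info > c."""
    if c_info <= C:
        raise ValueError("the information cone must be wider: c_info > c")
    if interval(dt, dx, C) <= 0.0:        # inside or on the g-cone (energy, matter)
        return "g-causal"                # hence also h-causal, since c_info > c
    if interval(dt, dx, c_info) <= 0.0:   # outside the g-cone but inside the h-cone
        return "h-causal only"
    return "spacelike in both"


if __name__ == "__main__":
    c_info = 3.0  # hypothetical c_I = 3c
    for dx in (0.5, 2.0, 5.0):
        print(f"dt = 1, dx = {dx}: {classify(1.0, dx, c_info)}")
```

The middle category, "h-causal only", is the region where the framework places energy-free informational coherence.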

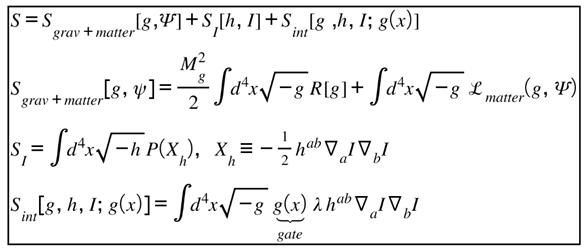

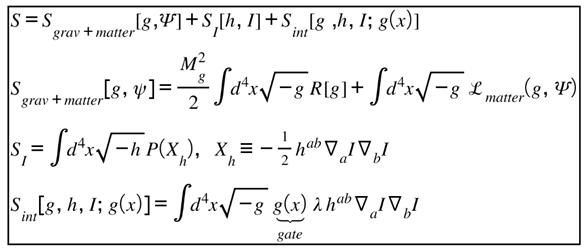

Core Actions and Field Equations (EFT)

I work on one manifold with two Lorentzian metrics: the visible/energetic metric $g_{\mu\nu}$ and an auxiliary informational metric $h_{\mu\nu}$ (fixed and non-dynamical in Part I). The information field $I$ is a shift-symmetric scalar ($I \to I + \text{const}$) with dynamics only on $h_{\mu\nu}$. A gate $\chi_D(x)$ localizes any interaction to a device domain $D$.

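Action

$$S = -\frac{1}{2}\int d^4x\,\sqrt{-h}\;h^{\mu\nu}\,\partial_\mu I\,\partial_\nu I \;+\; \int d^4x\,\sqrt{-g}\;\chi_D(x)\,J^\mu\,\partial_\mu I \;+\; S_{\rm app}[g,\psi],$$

where $J^\mu$ is a current built from the apparatus fields $\psi$ and $S_{\rm app}$ is the ordinary device action on $g_{\mu\nu}$.

Field equation for I (varying I only):

$$\partial_\mu\!\left(\sqrt{-h}\,h^{\mu\nu}\,\partial_\nu I\right) = \partial_\mu\!\left(\sqrt{-g}\,\chi_D\,J^\mu\right).$$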

Inside D, the second (gate) term acts like a source/sink for informational flux without introducing work in the g-sector. Outside D it vanishes.
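A concrete gate makes the "vanishes outside D" statement checkable. The sketch below is hypothetical (the models actually used are those of Appendix F): it builds a smooth, compactly supported $\chi_D(x)$ for a 1D domain $D = [a, b]$ from the standard $C^\infty$ step $e^{-1/s}/(e^{-1/s} + e^{-1/(1-s)})$. The names `smooth_step` and `gate` and the ramp `width` are illustrative assumptions.

```python
import math


def smooth_step(s: float) -> float:
    """C-infinity step: 0 for s <= 0, 1 for s >= 1, smooth and monotone in between."""
    if s <= 0.0:
        return 0.0
    if s >= 1.0:
        return 1.0
    f = math.exp(-1.0 / s)
    g = math.exp(-1.0 / (1.0 - s))
    return f / (f + g)


def gate(x: float, a: float, b: float, width: float) -> float:
    """Hypothetical gate chi_D(x) for a 1D device domain D = [a, b].

    Returns 1 deep inside D and exactly 0 outside D, with smooth ramps of the
    given width just inside each edge, so the support of chi_D stays within D.
    """
    if width <= 0.0 or 2.0 * width > b - a:
        raise ValueError("need 0 < width <= (b - a) / 2")
    return smooth_step((x - a) / width) * smooth_step((b - x) / width)


if __name__ == "__main__":
    for x in (-0.1, 0.0, 0.05, 0.1, 0.5, 0.95, 1.1):
        print(f"x = {x:5.2f}: chi_D = {gate(x, 0.0, 1.0, 0.1):.4f}")
```

Because $\chi_D$ is exactly zero outside D (not merely small), any source term proportional to it also vanishes identically there.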

Hilbert stress–energy statements

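The free information sector is invisible to g:

$$T^{(I)}_{\mu\nu} \equiv -\frac{2}{\sqrt{-g}}\,\frac{\delta S_I}{\delta g^{\mu\nu}} = 0,$$

because $S_I$ depends on $(h, I)$ but not on $g$.

Any g-variation lives in the apparatus term (the gate is an external control in g-geometry). Energetic bookkeeping stays in the g-sector stress–energy tensor; no superluminal energy flux is carried by I.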

Microcausality and cones

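The principal symbol for I comes from the kinetic term, $\sigma(\xi) = h^{\mu\nu}\xi_\mu\xi_\nu$, so the characteristics are the h-null cone. Thus,

$$[\,I(x),\,I(y)\,] = 0$$

whenever x and y are spacelike-separated with respect to h.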
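For a free massless field in 1+1D the commutator function is known in closed form, which makes the h-cone support explicit. This sketch assumes flat $h$ with invariant speed $c_I$, $\hbar = 1$, and a schematic overall normalization; the function name and the value $c_I = 2c$ are hypothetical.

```python
import math


def commutator_1p1(t: float, x: float, c_info: float) -> float:
    """Schematic [I(t, x), I(0, 0)] / i for a free massless scalar in 1+1D whose
    kinetic term uses a flat h-metric with invariant speed c_info (hbar = 1,
    normalization illustrative). It is nonzero only strictly inside the h-cone."""
    if abs(x) < c_info * abs(t):
        return -0.5 * math.copysign(1.0, t)
    return 0.0


if __name__ == "__main__":
    c, c_info = 1.0, 2.0  # hypothetical c_I = 2c
    for x in (0.5, 1.5, 2.5):
        g_spacelike = abs(x) > c * 1.0
        print(f"t = 1, x = {x}: g-spacelike = {g_spacelike}, "
              f"commutator/i = {commutator_1p1(1.0, x, c_info)}")
```

At $x = 1.5$ the points are g-spacelike yet the commutator is nonzero, while at $x = 2.5$ (h-spacelike) it vanishes, as the microcausality statement requires.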

Boundary Hologram Protocol

The Boundary Hologram Protocol formalizes the interface between the observable relativistic sector (governed by $g_{\mu\nu}$) and the sequestered information sector (governed by $h_{\mu\nu}$). The protocol prescribes that informational states are not emitted as energy quanta but as encoded boundary conditions (holographic imprints) on the two-surface dividing causal domains. Each boundary carries an information capacity proportional to its area, consistent with the Bekenstein–Hawking relation, yet distinct in that it applies to non-energetic Lugon exchanges.

Within this framework, transmission occurs through matched boundary conditions rather than physical traversal. Information is projected from one boundary onto another by an isomorphic transformation that preserves entanglement correlations but not spacetime locality. The apparent 'instantaneity' of this exchange results from the dual-metric structure: the Lugon channel exists outside the light cone of $g_{\mu\nu}$ but within the causal domain of $h_{\mu\nu}$.

This protocol thus replaces the classical notion of signal propagation with boundary-state synchronization, where the rate of information change, not energy flux, defines the channel dynamics. It offers a consistent means of describing superluminal information transfer without violating relativistic invariants.

Microcausality and Global Time Functions

Any framework that admits information propagation outside the light cone must address the concern of causal consistency. If correlations extend beyond c, how can one exclude paradoxes such as closed causal loops? To answer this, I impose two structural safeguards: microcausality for both cones and the existence of a global time function [51].

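Microcausality requires that field operators commute at spacelike separation with respect to their respective metrics. For the information field I, this condition reads:

$$[\,I(x),\,I(y)\,] = 0 \quad \text{whenever } \sigma_h(x,y) > 0,$$

where $\sigma_h(x,y)$ denotes the interval evaluated on the auxiliary metric $h_{\mu\nu}$ (positive for h-spacelike separation in the signature used here). This result follows directly from the principal symbol of the I-field equation derived in § Core Actions and Field Equations (EFT). Because that symbol is $h^{\mu\nu}\xi_\mu\xi_\nu$, the characteristic surfaces are null with respect to $h_{\mu\nu}$; consequently, commutators vanish whenever $\sigma_h(x,y) > 0$, confirming microcausality with respect to the informational cone. Analogously, standard microcausality holds for all energetic fields $\phi$ on the physical metric $g_{\mu\nu}$:

$$[\,\phi(x),\,\phi(y)\,] = 0 \quad \text{whenever } \sigma_g(x,y) > 0.$$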

Thus, both cones preserve causal independence within their respective domains. No observer can exploit either sector to generate local violations of causality.

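Beyond local consistency, global order is guaranteed by the existence of a time function τ that monotonically increases along both g- and h-causal processes:

$$\frac{d\tau}{d\lambda} > 0 \quad \text{along every future-directed } g\text{- or } h\text{-causal curve } x^\mu(\lambda).$$

To make this construction explicit, note that a global time function exists on any globally hyperbolic spacetime $(M, g_{\mu\nu})$. Because the auxiliary metric $h_{\mu\nu}$ is fixed and everywhere causal with respect to $g_{\mu\nu}$ inside device domains, one can define τ by pulling back the foliation of g-spacetime onto h:

$$\tau(x) = -\int_{\gamma} n_\mu\,dx^\mu,$$

where $n^\mu$ is the future-directed unit normal to the g-hypersurfaces and γ is any curve connecting a reference slice to the point x (in Gaussian normal coordinates adapted to the foliation, $n_\mu = -\partial_\mu t$, so the integral is path-independent and reduces to $\tau = t$). Since $n^\mu$ is timelike in $g_{\mu\nu}$ and remains timelike in $h_{\mu\nu}$ by construction of the two-cone hierarchy (its worldlines lie inside both light cones), and provided the g-slices are also spacelike with respect to $h_{\mu\nu}$, i.e. $h^{\mu\nu}\partial_\mu\tau\,\partial_\nu\tau < 0$, τ increases monotonically along every causal trajectory in either metric:

$$\partial_\mu\tau\,\dot x^\mu > 0 \quad \text{for all future-directed } \dot x^\mu \text{ with } g_{\mu\nu}\dot x^\mu\dot x^\nu \le 0 \ \text{or}\ h_{\mu\nu}\dot x^\mu\dot x^\nu \le 0.$$

Thus, the same scalar function τ provides a consistent global ordering for all g- and h-causal processes. In the effective theory, τ acts as the universal “clock” that synchronizes the two cones without introducing retrocausal or acausal paths.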
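Whether a given slicing τ serves both cones can be checked directly in the flat case: τ increases along every future-directed causal vector of a cone exactly when $\partial_t\tau > 0$ and $d\tau$ is timelike under that cone's inverse metric. The sketch below assumes flat 1+1D metrics sharing a rest frame, units with $c = 1$, and a hypothetical $c_I = 2c$; it tests the rest-frame slicing and the slicings of boosted g-observers (the function names are illustrative), with a brute-force cross-check.

```python
import math


def inverse_norm(dtau, speed):
    """Norm of the covector (d_t tau, d_x tau) under the inverse flat metric diag(-1/speed^2, 1)."""
    return -(dtau[0] ** 2) / speed ** 2 + dtau[1] ** 2


def boosted_slicing(v, c=1.0):
    """Covector of tau = gamma (t - v x / c^2): the time of a g-observer moving at speed v."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    return (gamma, -gamma * v / c ** 2)


def is_time_function(dtau, speed):
    """tau grows along all future causal vectors of the cone iff d_t tau > 0 and dtau is timelike."""
    return dtau[0] > 0.0 and inverse_norm(dtau, speed) < 0.0


def increases_along_cone(dtau, speed, samples=1000):
    """Brute-force cross-check: dtau(u) > 0 for every u = (1, dx) with |dx| <= speed."""
    for k in range(samples + 1):
        dx = -speed + 2.0 * speed * k / samples
        if dtau[0] + dtau[1] * dx <= 0.0:
            return False
    return True


if __name__ == "__main__":
    c, c_info = 1.0, 2.0  # hypothetical c_I = 2c
    for v in (0.0, 0.4, 0.6):
        dtau = boosted_slicing(v, c)
        print(f"v = {v}: g-time function = {is_time_function(dtau, c)}, "
              f"h-time function = {is_time_function(dtau, c_info)}")
```

For $c_I = 2c$, every boosted g-slicing is a valid g-time function, but only observers with $v < c^2/c_I = c/2$ yield slices that also order h-causal curves. The choice of τ therefore matters.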

The function τ provides a unified ordering of events across the dual metrics, ensuring that no closed causal loops arise even when information propagates outside the g-light cone. A global time function exists whenever a spacetime is globally hyperbolic [51]. Define $\tau: M \to \mathbb{R}$ as a scalar function on the manifold M satisfying

$$\frac{d}{ds}\,\tau\big(\gamma(s)\big) > 0$$

for all future-directed causal curves γ(s) parameterized by s. In the two-cone framework, I require monotonicity with respect to both cones:

$$\frac{d\tau}{ds} > 0 \ \text{along every future-directed } g\text{-causal curve and every future-directed } h\text{-causal curve},$$

i.e. τ increases along all g-causal and h-causal curves. Because $h_{\mu\nu}$ is non-dynamical and aligned by construction with $g_{\mu\nu}$ within device domains, such a τ can always be chosen globally. This guarantees exclusion of closed causal loops.

In effect, τ synchronizes the two causal structures, permitting extended coherence while forbidding paradoxical signaling.

These structural elements — microcausality and global time — demonstrate that the two-cone framework is not only compatible with established physics but is also logically stable against the common objections to superluminal information.

Thermodynamic Consistency

A further consistency check arises from the laws of thermodynamics. If information can propagate outside the light cone, one might worry about violations of the second law, for example through Maxwell–demon–style feedback loops. To exclude such loopholes, the sequestered information sector must respect the known bounds on the thermodynamics of information.

Landauer’s principle [17] states that erasing one bit of information incurs a minimal heat cost:

$$Q \ge k_B T \ln 2,$$

where Q is the dissipated heat, $k_B$ is Boltzmann’s constant, and T is the temperature of the reservoir. This ensures that information cannot be erased (or otherwise processed in a logically irreversible way) without an energetic footprint in the physical sector.
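The size of the Landauer bound at laboratory temperatures is easy to tabulate. This short sketch uses only the exact SI values of $k_B$ and the electronvolt; the function name and the sample temperatures are illustrative choices.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant in J/K (exact SI value)
EV = 1.602176634e-19    # joules per electronvolt (exact SI value)


def landauer_bound_joules(temperature_k: float, bits: float = 1.0) -> float:
    """Minimum heat dissipated to erase `bits` bits at reservoir temperature T: bits * k_B T ln 2."""
    if temperature_k <= 0.0:
        raise ValueError("temperature must be positive")
    return bits * K_B * temperature_k * math.log(2.0)


if __name__ == "__main__":
    for temperature in (0.01, 4.0, 77.0, 300.0):  # dilution fridge, liquid He, liquid N2, room
        q = landauer_bound_joules(temperature)
        print(f"T = {temperature:>6} K: Q_min = {q:.3e} J = {q / EV:.3e} eV per bit")
```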

In addition, feedback-controlled processes must respect the generalized second law, as formalized by the Sagawa–Ueda inequality:

$$\langle W \rangle \ge \Delta F - k_B T\, I.$$

Here, $\langle W \rangle$ is the average work performed on the system, $\Delta F$ is the free energy difference, and I is the mutual information (in nats) between system and controller; this I is a number, distinct from the information field I. The presence of I shows that information has real thermodynamic consequences, but the inequality guarantees that these consequences never enable a demon to violate the second law.

In the sequestered-sector framework, information propagation occurs without stress–energy transfer, but any attempt to harness it for work is mediated by couplings localized in the device domain D. These couplings ensure that Landauer and Sagawa–Ueda bounds [18] apply in full, closing potential loopholes. The information field can extend correlations beyond the light cone, but it cannot be used to extract unlimited work or to reverse entropy.

By satisfying these thermodynamic constraints, the two-cone framework preserves not only relativity and quantum mechanics but also statistical mechanics, ensuring that no fundamental principle of physics is compromised.

Worked toy example: gated informational feedback cannot beat the second law

Consider a device domain D where a visible two-level system X (energy-degenerate; $E_0 = E_1$) is measured by a controller M. The controller couples to X only while the gate $\chi_D$ is open (inside D). The controller then applies a feedback operation conditioned on its record, attempting to extract work from a thermal bath at temperature T.

Landauer erasure cost. Resetting the controller’s one-bit memory to a standard state after the cycle costs at least:

$$W_{\rm erase} \ge k_B T \ln 2.$$

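Information gained by measurement. If the measurement is noisy with error rate ε (binary symmetric channel) and X is uniformly distributed (as it is for a degenerate two-level system in equilibrium), the mutual information between X and the controller’s record M is (in bits)

$$I_2 = 1 - H_2(\epsilon),$$

with $H_2(\epsilon) = -\epsilon\log_2\epsilon - (1-\epsilon)\log_2(1-\epsilon)$.

In nats (natural-log units) this is $I = \ln 2 - H(\epsilon)$, with $H(\epsilon) = -\epsilon\ln\epsilon - (1-\epsilon)\ln(1-\epsilon)$. I measure I in nats unless otherwise stated; $I_{\rm nats} = I_{\rm bits}\ln 2$ (cf. Shannon [50]).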

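Sagawa–Ueda bound (feedback with information). For an isothermal process with feedback, the average work performed on the system satisfies:

$$\langle W \rangle \ge \Delta F - k_B T\, I.$$

Equivalently, the extractable work $W_{\rm ext} = -\langle W \rangle$ is bounded by:

$$W_{\rm ext} \le -\Delta F + k_B T\, I.$$

For our degenerate two-level example, $\Delta F = 0$, so $W_{\rm ext} \le k_B T\, I$.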

Close the loop with erasure. Completing a full engine cycle requires erasing the controller’s memory. The net average gain is then bounded by

$$W_{\rm net} = W_{\rm ext} - W_{\rm erase} \le k_B T\,(I - \ln 2) = -k_B T\,H(\epsilon).$$

For a perfect measurement ($\epsilon = 0$, $I = \ln 2$ in nats), the inequality gives $W_{\rm net} \le 0$: the erasure cost cancels the information-enabled work extraction, so no violation of the second law is possible.

For any noisy measurement ($0 < \epsilon < 1$), the bound is strictly negative, meaning the cycle loses work on average.

A compact “second law with feedback” form often used in the literature is

$$W_{\rm ext} - W_{\rm erase} \le -\Delta F,$$

which this toy cycle explicitly saturates (perfect measurement) or strictly satisfies (noisy measurement).
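The bookkeeping of the toy cycle can be reproduced in a few lines. This sketch assumes exactly the setup above: uniform X, a binary symmetric measurement channel with error rate ε, a one-bit memory whose reset costs $k_B T \ln 2$, and $\Delta F = 0$ by default. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def binary_entropy_nats(eps: float) -> float:
    """H(eps) = -eps ln eps - (1 - eps) ln(1 - eps), with H(0) = H(1) = 0."""
    if eps in (0.0, 1.0):
        return 0.0
    return -eps * math.log(eps) - (1.0 - eps) * math.log(1.0 - eps)


def mutual_information_bsc_nats(eps: float) -> float:
    """I(X;M) for a uniform bit X read through a binary symmetric channel with error eps."""
    return math.log(2.0) - binary_entropy_nats(eps)


def cycle_bounds(eps: float, temperature_k: float, delta_f: float = 0.0):
    """Return (max extractable work, min erasure cost, bound on net work), all in joules."""
    kt = K_B * temperature_k
    info = mutual_information_bsc_nats(eps)
    w_ext_max = -delta_f + kt * info        # Sagawa-Ueda feedback bound
    w_erase_min = kt * math.log(2.0)        # Landauer cost of resetting a 1-bit memory
    return w_ext_max, w_erase_min, w_ext_max - w_erase_min


if __name__ == "__main__":
    for eps in (0.0, 0.01, 0.1, 0.25):
        w_ext, w_erase, net = cycle_bounds(eps, 300.0)
        print(f"eps = {eps}: W_ext <= {w_ext:.3e} J, W_erase >= {w_erase:.3e} J, "
              f"W_net <= {net:.3e} J")
```

The net bound is exactly zero for $\epsilon = 0$ and equals $-k_B T\,H(\epsilon) < 0$ otherwise, matching the inequality above.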

Role of the sequestered information sector

In our framework, the controller’s informational access (via the h-cone) can coordinate the feedback without carrying stress–energy. However, any attempt to convert that information into useful work necessarily proceeds through gate-localized couplings inside D, where Landauer and Sagawa–Ueda apply. Hence, even with superluminal informational coherence, the energetic bookkeeping in the g-sector forbids a Maxwell-demon loophole.

At room temperature ($T \approx 300$ K), $k_B T \ln 2 \approx 2.9\times10^{-21}$ J (about 0.018 eV) per bit. A perfect, single-bit feedback step can at most extract that much work; the mandatory erasure costs at least the same, yielding zero or negative net work over a full cycle.

Testability and Predictions

A key strength of the two-cone, sequestered information framework is that it yields decisive, falsifiable predictions. The hypothesis is not metaphysical speculation but a physical proposal subject to experimental scrutiny.

Internal device tests. The invariant speed of the information cone, $c_I$, can in principle be measured by probing coherence across spacelike separations within a controlled domain. Observable consequences include a sub-permille anisotropy in Bell-visibility experiments and syndrome “ridge” structures in quantum error correction (QEC) arrays, scaling with the ratio:

Here d is the device separation scale. The appearance of structured anisotropy or syndrome ridges would indicate coherence propagation governed by the auxiliary metric $h_{\mu\nu}$.

Gravitational probes. Because the information sector is sequestered from stress–energy, it does not couple directly to gravitation. However, loop-induced effects in the presence of gates can mimic tiny effective masses for mediators. For example, graviton propagation could acquire a minute mass $m_g$, producing dispersion in gravitational wave signals detectable by future observatories such as the Einstein Telescope (ET), Cosmic Explorer (CE), LISA, and pulsar timing arrays (PTAs).

Similarly, long-baseline magnetostatic measurements in deep space may reveal a tiny photon mass $m_\gamma$. Both signatures would mark consistent but testable deviations from standard field theory.

Instant Preview vs. Physical Arrival (Gravitational Waves)

In the proposed informational framework, a distinction arises between two temporal regimes of observation: (1) the instant preview, corresponding to immediate informational registration through the Lugon channel, and (2) the physical arrival, corresponding to the delayed energy-bearing gravitational wave itself.

Under this model, detectors may receive an instantaneous change in informational state—effectively a 'preview' of the event—long before the spacetime disturbance propagates at light speed. Such a preview would not transfer energy or momentum and thus cannot be detected through standard strain measurements. Instead, it manifests as correlated phase or entropy adjustments within quantum or interferometric systems pre-aligned to the source geometry.

The distinction between informational preview and physical arrival could be probed using time-tagged entangled systems distributed across distant detectors. If synchronized informational shifts precede measurable strain events beyond light-travel constraints, this would support the existence of an independent Lugon information channel.

Falsification matrix. The framework provides clear criteria for rejection:

Detection of off-device superluminal signaling.

Observation of Lorentz-violating couplings leaking outside domain D.

Inconsistencies in gravitational-wave dispersion relative to general relativity.

Breakdown of the effective field theory description at accessible energies.

Failure on any of these points would falsify the hypothesis.

By offering internal device signatures, gravitational probes, and an explicit falsification matrix, the two-cone framework remains strictly within the bounds of empirical science. The proposal preserves established relativity and quantum mechanics while opening the possibility of a hidden informational sector whose consequences are both concrete and testable.

The two-cone framework is falsifiable through a combination of laboratory-scale quantum experiments and astrophysical observations. Each class of tests targets a distinct feature of the hypothesis, ensuring multiple independent opportunities for confirmation or refutation.

Area Scaling, Two-Surface Boost and Controls

Extending the testability framework, three new considerations are introduced:

1. Area Scaling: The predicted signal strength or informational coupling between dual surfaces scales with the boundary area A, rather than the energy flux. If Lugons mediate information through holographic area laws, variations in interface geometry (e.g., in superconducting cavity arrays or Casimir-bounded regions) should yield area-dependent correlations in detected informational entropy.

2. Two-Surface Boost: A dynamic enhancement is expected when paired surfaces undergo relative acceleration. Analogous to the Unruh effect, acceleration introduces a differential horizon structure, increasing boundary information flux. Experiments exploiting rapid mirror motion or modulated optical cavities may reveal this as a non-energetic coherence gain.

3. Controls: Null experiments (identical setups lacking either paired boundaries or Lugon-sensitive coupling) serve as essential controls. Their inclusion distinguishes informational propagation from ordinary electromagnetic cross-talk or environmental noise. Reproducible deviations correlated with area and acceleration would constitute preliminary evidence of a sequestered informational sector. Together, these augmentations refine the falsifiability of the model by anchoring its effects to measurable surface properties and dynamic parameters.

Device-internal measurements. The invariant speed of the information cone, $c_I$, can be probed by examining coherence in spacelike-separated subsystems. One signature is a sub-permille anisotropy in Bell-visibility experiments. Current Bell-test experiments have statistical visibility errors at the level of ; thus, an anisotropy larger than in correlation visibility as a function of measurement angle would be detectable with existing technology.

Another signature arises in quantum error correction (QEC) arrays, where syndrome statistics may exhibit structured “ridges” that scale with device separation d relative to the information speed $c_I$:

By measuring syndrome distributions in large-scale QEC devices, one could place empirical bounds on $c_I$.

Long-baseline entanglement distribution

Satellite-based experiments already demonstrate entanglement distribution across distances exceeding 1,200 km [12, 13]. Repeating these experiments with the aim of detecting coherence decay or anisotropy relative to orbital orientation would provide an external validation of internal-device results. Any observed directional dependence in visibility at the level or below would strongly suggest the influence of an auxiliary metric.

Gravitational-wave dispersion.

In the sequestered framework, loop-induced couplings in gate domains can mimic an effective graviton mass $m_g$. A finite mass produces frequency-dependent dispersion in gravitational-wave (GW) propagation. Current LIGO–Virgo–KAGRA bounds constrain $m_g < 1.2\times10^{-23}\ \mathrm{eV}/c^2$. Next-generation detectors such as the Einstein Telescope (ET) and Cosmic Explorer (CE) aim to improve sensitivity by an order of magnitude, probing $m_g \sim 10^{-24}\ \mathrm{eV}/c^2$. The detection of such dispersion would provide strong evidence for physics beyond standard GR.

Similarly, a photon mass as small as can be probed via magnetostatic measurements in deep space. Null results further constrain possible gate-induced couplings, tightening the falsification matrix.

Falsification matrix (see Appendix D for the full matrix).

The framework provides multiple avenues for refutation:

Off-device signaling. If controllable information is transmitted superluminally across devices, the sequestered-sector hypothesis is invalidated.

Lorentz leakage. Any detection of Lorentz-violating couplings outside of domain D would falsify the principle of radiative sequestering.

Gravitational-wave consistency. A mismatch between GW dispersion limits and the framework’s predictions would disprove the model.

EFT stability. If effective field theory (EFT) fails at accessible energy scales, the framework cannot be sustained.

By combining laboratory experiments with astrophysical tests, this framework provides concrete, falsifiable predictions. Unlike many speculative extensions of relativity, the two-cone model ties its validity to experimental outcomes within reach of present or near-future technologies.

Bell-visibility anisotropy.

Suppose the information cone speed is $c_I$, and the laboratory separation between detectors is d. If entanglement visibility has a directional dependence due to propagation along the auxiliary metric, the anisotropy should scale as:

For present Bell-test baselines of d∼10 m, a hypothetical would yield anisotropies at the level of . Such an effect is below current error bars () but would become testable with future photonic networks spanning kilometers.

Quantum error correction (QEC) syndrome ridges.

In stabilizer codes, correlated error syndromes should exhibit ridge-like patterns when plotted against device separation. The scaling law is:

where

is the characteristic width of the syndrome distribution. Measuring this scaling provides a way to place direct experimental bounds on $c_I$.

Satellite-based entanglement distribution.

The 2017 Micius satellite experiment [13, 59, 60, 61] demonstrated entanglement distribution over d ∼ 1200 km. If coherence is mediated by an auxiliary cone with speed $c_I$, the anisotropy bound becomes:

With observed visibilities around 0.80–0.85, an improvement in experimental precision at the level could probe values up to .

Gravitational-wave dispersion.

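If gravitons acquire a small effective mass $m_g$, gravitational waves of frequency f travel at speed:

$$v_g(f) = c\,\sqrt{1 - \frac{m_g^2 c^4}{h^2 f^2}},$$

where h here is Planck’s constant (not the auxiliary metric). Current LIGO–Virgo–KAGRA bounds yield $m_g \lesssim 1.2\times10^{-23}\ \mathrm{eV}/c^2$. ET and CE are projected to reach sensitivities an order of magnitude stronger, down to $m_g \sim 10^{-24}\ \mathrm{eV}/c^2$.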
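The dispersion relation above translates into a tiny, frequency-dependent lag. This sketch evaluates $1 - v_g/c$ in a cancellation-free form and the resulting delay over a straight, non-expanding path (a simplifying assumption: cosmological redshift is ignored). The 400 Mpc distance and the function names are illustrative assumptions; $m_g = 1.2\times10^{-23}\ \mathrm{eV}/c^2$ is the bound quoted in the text.

```python
import math

H_EV_S = 4.135667696e-15        # Planck constant in eV*s (exact SI-derived value)
C = 299_792_458.0               # speed of light in m/s
MPC_M = 3.0856775814913673e22   # metres per megaparsec


def speed_deficit(mass_ev: float, freq_hz: float) -> float:
    """1 - v_g/c for v_g = c sqrt(1 - (m_g c^2 / (h f))^2), with m_g given in eV/c^2."""
    x = mass_ev / (H_EV_S * freq_hz)  # m_g c^2 / (h f), dimensionless
    if x >= 1.0:
        raise ValueError("frequency is below the cutoff m_g c^2 / h")
    return x * x / (1.0 + math.sqrt(1.0 - x * x))  # = 1 - sqrt(1 - x^2), no cancellation


def arrival_lag_s(mass_ev: float, freq_hz: float, distance_m: float) -> float:
    """Delay relative to light over a flat, static path, to first order in the deficit."""
    return distance_m / C * speed_deficit(mass_ev, freq_hz)


if __name__ == "__main__":
    m_g = 1.2e-23  # eV/c^2
    for f in (20.0, 100.0, 1000.0):
        lag = arrival_lag_s(m_g, f, 400 * MPC_M)  # illustrative 400 Mpc source
        print(f"f = {f:6.0f} Hz: 1 - v/c = {speed_deficit(m_g, f):.2e}, lag = {lag:.2e} s")
```

Low-frequency components lag the high-frequency ones, which is the chirp distortion that detectors constrain.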

Photon mass constraints.

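If photons couple indirectly via gate-induced effects, they may acquire a tiny mass $m_\gamma$. In that case, the Coulomb potential is modified from 1/r to a Yukawa form:

$$V(r) = \frac{q}{4\pi\varepsilon_0\,r}\,e^{-r/\lambda_\gamma}, \qquad \lambda_\gamma = \frac{\hbar}{m_\gamma c}.$$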

Astrophysical observations constrain . Future deep-space magnetostatic missions may tighten this by several orders of magnitude.
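The Yukawa range sets the length scale over which a photon mass would be visible. This sketch computes $\lambda_\gamma = \hbar/(m_\gamma c)$ from $\hbar c$ in eV·m and the suppression factor $e^{-r/\lambda_\gamma}$. The input $m_\gamma = 10^{-18}\ \mathrm{eV}/c^2$ is purely an illustrative value, and the function names are hypothetical.

```python
import math

HBAR_C_EV_M = 1.973269804e-7  # hbar * c in eV*m
AU_M = 1.495978707e11         # astronomical unit in metres (exact by definition)


def yukawa_range_m(mass_ev: float) -> float:
    """Range lambda_gamma = hbar / (m_gamma c) for a photon mass given in eV/c^2."""
    return HBAR_C_EV_M / mass_ev


def coulomb_suppression(r_m: float, mass_ev: float) -> float:
    """Ratio of the Yukawa potential to the Coulomb potential at distance r: exp(-r / lambda)."""
    return math.exp(-r_m / yukawa_range_m(mass_ev))


if __name__ == "__main__":
    m_gamma = 1e-18  # eV/c^2, illustrative input value
    lam = yukawa_range_m(m_gamma)
    print(f"lambda = {lam:.3e} m = {lam / AU_M:.2f} AU")
    print(f"suppression at 1 AU: {coulomb_suppression(AU_M, m_gamma):.3f}")
```

Because $\lambda_\gamma \propto 1/m_\gamma$, tightening the mass limit requires field measurements over correspondingly larger baselines, hence the appeal of deep-space magnetometry.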

Implications

The two-cone framework carries implications across multiple domains of physics, from relativity to quantum information to black hole thermodynamics. By sequestering information from energy–momentum, the proposal preserves tested principles while offering new explanatory power.

Relativity.

Special and general relativity remain intact for all work-carrying fields confined to the physical metric $g_{\mu\nu}$. Energy–momentum conservation, Lorentz invariance, and causal structure are unchanged. Apparent superluminal correlations arise only in the information sector, which by construction has vanishing stress–energy:

$$T^{(I)}_{\mu\nu} = 0.$$

Thus, no violations of relativity occur. Instead, the framework expands the ontology of what can propagate without undermining relativity’s empirical foundations.

Quantum mechanics.

The paradox of entanglement is reframed: correlations need not be mysterious or “nonlocal coincidences,” but are the natural expression of information traveling on the auxiliary metric $h_{\mu\nu}$ at speed $c_I$. Entanglement protocols such as teleportation [9], superdense coding [8], and QKD [10] are explained as operations that harvest information from this sequestered sector while leaving energetic causality intact.

Quantum information theory.

Entropy and mutual information become not only measures of uncertainty but indicators of a real, quantifiable resource that resides in the information sector. This aligns with the view advanced in Quantum Computation and Quantum Information [14], where information is treated on equal footing with energy as a basic quantity.

Quantum gravity and spacetime geometry.

The idea that information is fundamental resonates with the holographic principle. In AdS/CFT duality, the Ryu–Takayanagi formula [15] connects entanglement entropy to the area of minimal surfaces in the bulk spacetime. Van Raamsdonk [16] extended this by proposing that spacetime connectivity itself is built from entanglement. Within the two-cone framework, these insights suggest that the auxiliary metric $h_{\mu\nu}$ is not merely a mathematical device but a candidate informational scaffold underlying spacetime structure.

Black holes and thermodynamics.

Perhaps the deepest implication lies in black hole physics. Information paradoxes traditionally hinge on reconciling Hawking evaporation with unitarity. In the present framework, an energy-free horizon information membrane allows information to escape without energy transfer, aligning with the Page curve and recent “islands” picture of entanglement wedges [19]. This preserves black hole thermodynamics while resolving tension between information conservation and relativity.

In the sequestered-information framework, the event-horizon area A defines not only an entropic bound but the absolute capacity of a black hole as an information reservoir: its maximal entropy $S_{\max}/k_B = A/(4\ell_P^2)$ (in nats), where $\ell_P = \sqrt{\hbar G/c^3}$ is the Planck length.
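The horizon capacity can be evaluated for concrete masses. This sketch assumes a non-rotating (Schwarzschild) black hole, uses CODATA 2018 constants and an approximate solar mass, and converts $A/(4\ell_P^2)$ from nats to bits; the function names are hypothetical.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_SUN = 1.98847e30       # solar mass, kg (approximate)


def horizon_capacity_nats(mass_kg: float) -> float:
    """Bekenstein-Hawking capacity S/k_B = A / (4 l_P^2) of a Schwarzschild horizon."""
    r_s = 2.0 * G * mass_kg / C ** 2        # Schwarzschild radius
    area = 4.0 * math.pi * r_s ** 2         # horizon area A
    planck_length_sq = HBAR * G / C ** 3    # l_P^2
    return area / (4.0 * planck_length_sq)


def horizon_capacity_bits(mass_kg: float) -> float:
    """Same capacity expressed in bits (1 bit = ln 2 nats)."""
    return horizon_capacity_nats(mass_kg) / math.log(2.0)


if __name__ == "__main__":
    for solar_masses in (1.0, 10.0, 1.0e6):
        bits = horizon_capacity_bits(solar_masses * M_SUN)
        print(f"M = {solar_masses:g} M_sun: {bits:.3e} bits")
```

A solar-mass horizon holds roughly $1.5\times10^{77}$ bits, and the capacity grows as $M^2$ because the area does.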

Together, these implications position the two-cone framework as a bridge: it preserves the successes of relativity and quantum mechanics while providing a coherent explanation for otherwise paradoxical features of information.

Relation to Other Theories

To situate the two-cone, sequestered-information framework within the broader landscape, I compare it to prominent alternatives that address nonlocality, superluminal phenomena, or quantum foundations. The central distinctions are: (i) no superluminal energy transfer (the information field carries identically vanishing stress-energy), (ii) no Lorentz violation in the energetic sector, (iii) microcausality in both cones with a unifying global time function, and (iv) radiative sequestering that prevents leaks outside engineered device domains.

Tachyons and superluminal particles.

Classic faster-than-light proposals posit fields with imaginary mass that propagate superluminally (tachyons) [21, 52, 53]. Their dispersion takes the form:

$$E^2 = p^2 c^2 - \mu^2 c^4, \qquad m = i\mu,\ \mu > 0.$$

Such models typically entail vacuum instabilities, acausal signaling, or frame-dependent pathologies. By contrast, the present framework forbids any superluminal energy/momentum transfer; the information field satisfies:

$$T^{(I)}_{\mu\nu} = 0,$$

so it cannot source gravity, accelerate detectors, or carry work. Correlations can extend outside the g-light cone only as energy-free informational coherence.
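The contrast with tachyons is visible directly in their dispersion relation: for real energies the group velocity $v_g = dE/dp = pc^2/E$ always exceeds $c$, and faster tachyons carry less energy. This sketch works in units with $c = 1$ and $\mu = 1$ (an arbitrary illustrative choice); the function names are hypothetical.

```python
import math


def tachyon_energy(p: float, mu: float, c: float = 1.0) -> float:
    """E from E^2 = p^2 c^2 - mu^2 c^4 (rest mass m = i*mu); real only for |p| >= mu c."""
    e2 = (p * c) ** 2 - (mu * c ** 2) ** 2
    if e2 < 0.0:
        raise ValueError("no real energy for |p| < mu c")
    return math.sqrt(e2)


def tachyon_group_velocity(p: float, mu: float, c: float = 1.0) -> float:
    """v_g = dE/dp = p c^2 / E; for mu > 0 this always exceeds c."""
    energy = tachyon_energy(p, mu, c)
    if energy == 0.0:
        raise ValueError("v_g diverges at |p| = mu c")
    return p * c ** 2 / energy


if __name__ == "__main__":
    for p in (1.01, 1.5, 2.0, 10.0):  # units with c = 1, mu = 1
        print(f"p = {p}: E = {tachyon_energy(p, 1.0):.4f}, "
              f"v_g = {tachyon_group_velocity(p, 1.0):.4f} c")
```

As $E \to 0$ the speed diverges: superluminal motion is paired with energy transport, which is precisely what the condition $T^{(I)}_{\mu\nu} = 0$ excludes for the information field.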

Lorentz-violating EFTs and preferred frames.

In Standard-Model Extensions (SME) and related preferred-frame theories, small Lorentz-violating operators modify dispersion or introduce background vectors [22–24, 55, 56] (see also Appendix F, “auxiliary-metric decoupling argument”). A schematic LV photon term is:

$$\mathcal{L}_{\rm LV} = -\tfrac{1}{4}\,(k_F)_{\kappa\lambda\mu\nu}\,F^{\kappa\lambda}F^{\mu\nu}.$$

Such terms face tight experimental bounds and risk radiative instability. Our construction keeps the energetic sector exactly Lorentz-invariant on $g_{\mu\nu}$ and confines all device couplings with a gate $\chi_D(x)$, preventing LV “leakage” by design [47].

Nonlinear quantum mechanics.

State-dependent (nonlinear) evolutions (e.g., Weinberg-type) are known to permit EPR-based superluminal signaling (Gisin/Polchinski paradoxes) [27]. A generic nonlinear master equation reads:

$$i\hbar\,\frac{d\rho}{dt} = \big[\,H(\rho),\,\rho\,\big],$$

with $H(\rho)$ state-dependent. Our proposal retains linear QM within each sector and preserves no-signaling across devices by the locality of $\chi_D$; the observed nonlocality arises from h-causal coherence, not from nonlinear dynamics.

Pilot-wave (Bohmian) mechanics.

Bohmian theory reproduces QM via a nonlocal guidance equation [25]:

$$\frac{d\mathbf{Q}_k}{dt} = \frac{\hbar}{m_k}\,\mathrm{Im}\!\left(\frac{\nabla_k\psi}{\psi}\right)\!(\mathbf{Q}_1,\dots,\mathbf{Q}_N).$$

It explains correlations by instantaneous influences in configuration space while disallowing operational FTL signals. Our account differs ontologically: I posit a second causal cone (metric $h_{ab}$, speed $v_h$) on which information (not particles) propagates. Energetic causality and Lorentz invariance on $g_{ab}$ remain intact.

Objective-collapse models (GRW/CSL).

GRW/CSL modify dynamics with stochastic collapses, introducing new constants (collapse rate $\lambda$, length $r_C$) and small energy increases, with potential symmetry tensions [26]. I introduce no collapse and no extra energy budget: coherence redistribution occurs through the sequestered information sector with $T^{(I)}_{ab} \equiv 0$, and thermodynamic consistency is enforced via Landauer/Sagawa–Ueda bounds.

Superdeterminism.

These approaches relax measurement independence, allowing hidden correlations between settings $(a, b)$ and hidden variables $\lambda$ [29]:

$$p(\lambda \mid a, b) \neq p(\lambda).$$

While logically possible, they are empirically unfalsifiable without additional structure. Our framework preserves standard statistical independence; Bell violations arise from information-sector causality rather than conspiratorial initial conditions.

Retrocausal/two-state-vector ideas.

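Retrocausal models allow future boundary data to influence present outcomes (two-state-vector formalism) [30]. I instead enforce a global time function that increases strictly along every future-directed $g$- and $h$-causal curve. This excludes closed causal loops: no such curve can return to its starting event, because the time function would have to increase along it and yet come back to its initial value.

Thus, no retrocausality is required to explain spacelike entanglement correlations.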

Non-signaling superquantum (PR) boxes.

Popescu–Rohrlich correlations saturate the algebraic CHSH bound $\lvert S\rvert = 4$ while remaining non-signaling [28]:

$$P(x, y \mid a, b) = \begin{cases} \tfrac{1}{2}, & x \oplus y = a\,b,\\ 0, & \text{otherwise.}\end{cases}$$

Our framework does not exceed the Tsirelson bound $\lvert S\rvert \le 2\sqrt{2}$; it preserves standard quantum constraints and merely reallocates where information-theoretic coherence lives (on $h_{ab}$), keeping device-external no-signaling intact.

ER=EPR and wormhole pictures.

Geometric identifications (ER=EPR) relate entanglement to nontraversable wormholes [19]. While conceptually aligned with “information as structure,” those scenarios invoke specific spacetime topologies and energy conditions. Our approach is agnostic about topology; it reproduces EPR-type nonlocality via a second, non-dynamical metric for information, potentially complementary to ER=EPR without requiring exotic matter.

Summary of contrasts.

Not tachyons: no superluminal energy transfer.

Not Lorentz-violating EFT: no LV in the energetic $g$-sector; radiatively sequestered couplings inside D.

Not nonlinear QM: linear dynamics preserved; no Gisin/Polchinski signaling.

Not hidden-variable/retrocausal: no conspiracies or backward causation; a global time function orders both cones.

These distinctions clarify that the two-cone framework is neither a rebranding of older superluminal ideas nor a hidden-variable scheme. It is a structural separation: energy and matter live on $g_{ab}$ under SR/GR; information has its own causal cone on $h_{ab}$ with $v_h > c$, coupled locally via $g(x)$, microcausal and globally ordered to forbid paradoxes.

Conclusions

The paradox at the heart of modern physics lies in the tension between the strict causal order of relativity and the apparently acausal reach of quantum entanglement. Relativity, codified in the invariant speed c, ensures that no transfer of energy or momentum can exceed the light cone, as captured by the divergence of the relativistic energy $E = \gamma mc^2$ as $v \to c$ [1]. Entanglement, however, defies classical expectations: correlations persist across spacelike separations and violate Bell inequalities [3, 4, 5, 6, 7], yet remain non-signaling. This paradox has sharpened through experiment, from Aspect’s pioneering photon tests to loophole-free demonstrations and global-scale satellite links [4, 5, 6, 7, 12, 13].

Conventional reconciliation through the no-signaling theorem secures compatibility with relativity but does not explain why entanglement functions as a consumable, quantifiable resource. Teleportation, dense coding, and quantum cryptography [8, 9, 10] all rely on entanglement as if it were a tangible fuel. Entanglement entropy and mutual information formalize this operational value [14], while holographic dualities link information content to geometric structure [15, 16]. Together, these insights suggest that information is more than a mathematical abstraction: it behaves as a physical quantity.

The hypothesis of a sequestered information sector follows from this recognition. All energetic fields remain confined to the physical metric $g_{ab}$, while information propagates on a second, auxiliary metric $h_{ab}$ at invariant speed $v_h > c$. Because the information field has identically vanishing stress–energy,

$$T^{(I)}_{ab} \equiv 0,$$

it cannot accelerate detectors, transmit work, or source curvature. Relativity remains inviolate for energy–momentum, while information achieves global coherence through a causal cone that does not couple to gravitation. The separation is maintained by domain-local couplings $g(x)$, microcausality in both cones, and a global time function that orders all processes across metrics. Thermodynamic consistency is preserved by Landauer’s erasure bound and Sagawa–Ueda’s feedback inequality [17, 18], ensuring that the framework does not reopen Maxwell’s demon loopholes.

Beyond consistency, the framework is empirically sharp. Internal-device tests can search for sub-permille anisotropies in Bell-visibility measurements or syndrome ridge patterns in quantum error correction arrays, both governed by the information-cone speed $v_h$. Satellite experiments that distribute entanglement over thousands of kilometers probe coherence on global scales, constraining $v_h$ through improved visibility precision. Gravitational-wave observatories (ET, CE, LISA, PTAs) test for a loop-induced graviton mass $m_g$ through dispersion effects, while astrophysical magnetostatics constrain the photon mass $m_\gamma$. A falsification matrix (Appendix D) makes the theory scientifically accountable: failure in any of these domains would rule it out.

The implications are profound. Relativity emerges unscathed, with all energetic processes confined to the familiar light cone. Quantum mechanics gains an ontological foundation for entanglement, elevating correlations from statistical curiosities to manifestations of an informational field. Information theory is promoted from a mathematical tool to a physical law, with entropy and mutual information treated alongside energy and momentum. Quantum gravity insights, from the Ryu–Takayanagi formula to ER=EPR [15, 16, 19], are naturally aligned: if spacetime itself is built from entanglement, then a second, information-bearing cone may be the substrate from which geometry emerges. Black hole physics likewise finds a resolution, as an energy-free horizon information membrane offers a route to preserve unitarity, match the Page curve, and reconcile thermodynamics with evaporation.

In this way, the two-cone framework acts not as a violation of known physics but as a synthesis. It respects the empirical domains of relativity and quantum mechanics, but extends them by acknowledging that information and energy are distinct physical entities with distinct causal structures. This separation dissolves the paradox that has long haunted the intersection of relativity and entanglement.

Whether confirmed or falsified, testing the framework will advance our understanding. Confirmation would establish information as a new sector of physics, opening an era of information engineering at fundamental scales. Falsification would be equally valuable, sharpening the bounds of what is possible and pushing us toward alternative explanations. In either case, the outcome would deepen our grasp of causality, coherence, and the foundations of physical law.

Appendix A. Notation and Conventions

| Symbol | Meaning / Definition | Notes |
|---|---|---|
| $c$ | Speed of light in vacuum | Invariant speed for all energetic fields (special relativity). |
| $v_h$ | Invariant speed in the auxiliary information cone | By hypothesis, $v_h > c$. |
| | Gate/localization function | Restricts $I$–matter interactions to the device domain $D$. |
| | Gate function: a spatiotemporal modulation variable that weights the interaction between visible and sequestered sectors | Dimensionless; ranges from 0 (closed) to 1 (open); may be smooth (sigmoid) or digital (Heaviside). |
| $g_{ab}$ | Physical (energetic) spacetime metric | Defines causal structure for all energetic fields and matter; Lorentzian signature. |
| $h_{ab}$ | Auxiliary (informational) metric | Fixed background metric defining causal structure for the informational sector. |
| $g(x)$ | Gate function: scalar weighting field that localizes coupling between the $g$- and $h$-sectors (see Note 1 at end of section) | Dimensionless; takes values $0 \le g(x) \le 1$. Used in the interaction term $g(x)\,\mathcal{L}_{\rm int}$. Distinct from the metric $g_{ab}$. The function vanishes outside device domains and is unity inside them, ensuring causal sequestering. |
| $T_{ab}$ | Stress–energy tensor | Standard definition; vanishes for the information field $I$. |
| $T^{(I)}_{ab}$ | Stress–energy of the information field | Defined to satisfy $T^{(I)}_{ab} \equiv 0$. |
| $D$ | Device domain | Region where information and matter couple. |
| | Global time function | Increases monotonically along both $g$- and $h$-causal processes. |
| $\Delta s^2$ | Spacetime interval | $\Delta s^2 = c^2\Delta t^2 - \Delta x^2 - \Delta y^2 - \Delta z^2$; determines causal separation. |
| $\gamma$ | Lorentz factor | $\gamma = 1/\sqrt{1 - v^2/c^2}$. |
| $p$ | Relativistic momentum | Appears in the energy–momentum relation. |
| $E$ | Relativistic energy | $E^2 = p^2c^2 + m^2c^4$. |
| $m$ | Rest mass | Mass of an energetic particle. |
| $\lvert\Psi^-\rangle$ | Bell state | $\lvert\Psi^-\rangle = (\lvert 01\rangle - \lvert 10\rangle)/\sqrt{2}$ (singlet). |
| $E(a,b)$ | Correlation function | Depends on the angle between measurement axes. |
| $S$ | CHSH parameter | $S = E(a,b) - E(a,b') + E(a',b) + E(a',b')$; bounds are stated on $\lvert S\rvert$. |
| $\rho$ | Density matrix | Describes mixed quantum states. |
| $S(\rho)$ | Von Neumann entropy | $S(\rho) = -\mathrm{Tr}(\rho\ln\rho)$. |
| $I(A{:}B)$ | Mutual information | $I(A{:}B) = S(\rho_A) + S(\rho_B) - S(\rho_{AB})$. |
| $[\cdot,\cdot]$ | Commutator | Used in microcausality conditions. |
| $Q$ | Heat dissipated | Landauer bound: $Q \ge k_B T\ln 2$ per erased bit. |
| $\langle W\rangle$ | Average work | Appears in the Sagawa–Ueda inequality. |
| $\Delta F$ | Free-energy difference | Sagawa–Ueda bound context. |
| $k_B$ | Boltzmann’s constant | Appears in thermodynamic relations. |
| $f$ | Frequency | Relevant for GW dispersion. |
| $m_g$ | Effective graviton mass | Tested via GW dispersion. |
| $m_\gamma$ | Photon mass | Constrained by magnetostatic tests. |
| $V(r)$ | Potential energy | Coulomb, or Yukawa if the photon is massive. |
| $\lambda_\gamma$ | Photon Compton wavelength | $\lambda_\gamma = \hbar/(m_\gamma c)$. |
| $\hbar$ | Reduced Planck constant | Appears in dispersion and potential formulas. |
| $\Omega_g$ | Gate aperture: the region in spacetime where the gate function is nonzero | Subset of $D$. |
| | External control fields or knobs (e.g., bias voltages, pump power, strain, curvature) | Inputs to the gate function. |
| | Gain parameters and threshold used in the sigmoid form of $g$ | Dimensionless coefficients. |
| $\sigma(z)$ | Sigmoid function used to smooth gate transitions | $\sigma(z) = 1/(1 + e^{-z})$. |
| $\mathcal{L}_{\rm int}$ | Interaction Lagrangian coupling visible and sequestered sectors | Weighted by the gate function. |
| | Mediator field with effective mass $m_{\rm eff}$ | Mass controlled by the potential $U$. |
| $U$ | External potential that tunes the mediator mass | Could represent strain, an optical potential, or curvature. |
| | Mediator coherence length | Inverse of $m_{\rm eff}$; sets the leakage range. |
| $H_0$, $H_{\rm int}$ | Free Hamiltonian and interaction Hamiltonian | Standard Lindblad notation; the interaction is scaled by the gate function $g(x)$, a dimensionless scalar weight that localizes the coupling in spacetime and is distinct from the metric $g_{ab}$. When the coupling is spatially uniform, $g(x) \to g(t)$, reproducing the usual time-dependent Lindblad scaling. |
| $L_k$, $\gamma_k(t)$ | Lindblad operators and time-dependent decoherence rates | Describe dissipative channels. |
| | Noise parameters for the Ornstein–Uhlenbeck gate model | Fluctuation, correlation time, variance, and white-noise term. |
| $I$, $I_c$, $\varphi$ | Josephson-junction current, critical current, and phase difference | Gate-modulated Josephson relation. |
| $V_n$, $f$ | Shapiro-step voltage and drive frequency | Steps at $V_n = nhf/(2e)$. |
| | Effective mass parameter in topological models | Sign inversion creates domain walls. |
| | Pump amplitude, signal mode, idler mode | Nonlinear optical parametric gates. |
| | Lapse function in the curved-spacetime metric | Determines local vs. global frequency shift. |
| | Gate opening and closing times | May be asymmetric. |
| | Duty cycle of the gate | Fraction of time the gate is open. |
| | Internal loss coefficient, gate thickness $d$, matching function | Control transmission and latency. |
| $B$, $S$, $N$ | Local bandwidth, signal, noise | Used in the information flux bound. |
| $G$ | Conductance of a topological channel | Quantized in units of $e^2/h$. |
| $\Delta\phi$, $\mathbf{p}$, $d\mathbf{l}$ | Interferometric phase shift, momentum, and path element | Used in atom interferometry. |
| $N$ (atoms) | Number of detected atoms | Sets the shot-noise limit. |
| | Information Lagrangian density associated with the Lugon field | Defines the informational action integral over the auxiliary manifold; dimensionless in natural units. |
| $C_{\max}$ | Maximum channel capacity of the holographic boundary or information surface | Defines the theoretical upper bound on information transfer per unit area; relates to the area–entropy law. |

NOTES:

1) Notation $g(x)$ refers exclusively to the gate function, a scalar weight controlling localized coupling between the energetic and informational sectors. It is unrelated to the metric tensor $g_{ab}$. Throughout, $g_{ab}$ denotes the spacetime metric, while $g(x)$ is a scalar field confined to device domains $D$.

Appendix A: Worked Equations

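Energy–momentum relation: $E^2 = p^2c^2 + m^2c^4$

Gate-modulated Lagrangian: $\mathcal{L} = \mathcal{L}_V + \mathcal{L}_S + g(x)\,\mathcal{L}_{\rm int}$

Gate function with sigmoid control: $g(x) = \sigma\big(\sum_i k_i\,u_i(x) - \theta\big)$, with $\sigma(z) = 1/(1 + e^{-z})$

Gravitational redshift gate condition

GW dispersion (massive graviton): $E^2 = p^2c^2 + m_g^2c^4$, so that $v_g/c = \sqrt{1 - (m_g c^2/hf)^2}$

Interferometer phase shift: $\Delta\phi = \frac{1}{\hbar}\oint \mathbf{p}\cdot d\mathbf{l}$

Lindblad master equation with gate factor: $\dot\rho = -\frac{i}{\hbar}\big[H_0 + g(t)H_{\rm int},\,\rho\big] + \sum_k \gamma_k(t)\big(L_k\rho L_k^\dagger - \tfrac12\{L_k^\dagger L_k,\rho\}\big)$

Microcausality (energetic cone): $[\mathcal{O}(x), \mathcal{O}(y)] = 0$ for $x, y$ spacelike-separated with respect to $g_{ab}$

Microcausality (information cone): $[I(x), I(y)] = 0$ for $x, y$ spacelike-separated with respect to $h_{ab}$

Nonlinear optical parametric gate

Ornstein–Uhlenbeck noise on gate

Quantized conductance (cold atoms / topological): $G = n\,e^2/h$

Reduced density matrix (no-signaling): $\rho_A = \mathrm{Tr}_B\big[(\mathbb{1}\otimes U_B)\,\rho_{AB}\,(\mathbb{1}\otimes U_B)^\dagger\big] = \mathrm{Tr}_B\,\rho_{AB}$

Shot-noise limit on phase: $\delta\phi \simeq 1/\sqrt{N}$

Topological domain wall gate function

Transmission through finite gate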

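Appendix A.1. Lorentz Factor from Interval Invariance

Start with the invariant spacetime interval between two events:

$$s^2 = c^2t^2 - x^2 - y^2 - z^2.$$

Consider two inertial frames, S and S′, with relative velocity v along the x-axis. Coordinates transform as:

$$t' = \gamma\left(t - \frac{vx}{c^2}\right), \qquad x' = \gamma\,(x - vt), \qquad y' = y, \qquad z' = z.$$

To preserve invariance requires:

$$c^2t'^2 - x'^2 = c^2t^2 - x^2.$$

Substitute the transformations:

$$c^2t'^2 - x'^2 = \gamma^2\left(1 - \frac{v^2}{c^2}\right)\left(c^2t^2 - x^2\right).$$

For equality to hold for all x, t requires:

$$\gamma^2\left(1 - \frac{v^2}{c^2}\right) = 1 \quad\Longrightarrow\quad \gamma = \frac{1}{\sqrt{1 - v^2/c^2}}.$$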
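The derivation can be checked numerically. This short Python sketch (illustrative event coordinates and boost speed) applies the boost above and confirms that the 1+1 interval is unchanged and that $\gamma(0.6c) = 1.25$.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def gamma(v):
    """Lorentz factor for speed v (m/s), |v| < c."""
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def boost(t, x, v):
    """Standard boost along x with speed v: returns (t', x')."""
    g = gamma(v)
    return g * (t - v * x / C**2), g * (x - v * t)


def interval(t, x):
    """1+1 interval s^2 = c^2 t^2 - x^2 (y and z are unchanged by the boost)."""
    return (C * t) ** 2 - x**2


t, x, v = 2.0e-6, 150.0, 0.6 * C   # illustrative event and boost speed
t2, x2 = boost(t, x, v)
print(gamma(v))                        # approximately 1.25
print(interval(t, x), interval(t2, x2))
```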

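Appendix A.2. CHSH Inequality Violation

The CHSH inequality compares quantum correlations with classical local realism.

Define the correlation function for spin-1/2 particles in the singlet state measured along axes $\mathbf{a}$, $\mathbf{b}$:

$$E(\mathbf{a}, \mathbf{b}) = -\mathbf{a}\cdot\mathbf{b} = -\cos\theta_{ab},$$

and the CHSH combination $S = E(a,b) - E(a,b') + E(a',b) + E(a',b')$. Classically, $\lvert S\rvert \le 2$. Quantum mechanically, Tsirelson’s bound is $\lvert S\rvert \le 2\sqrt{2}$.

Take measurement angles:

$$a = 0, \qquad a' = \frac{\pi}{2}, \qquad b = \frac{\pi}{4}, \qquad b' = \frac{3\pi}{4}.$$

Compute correlations:

$$E(a,b) = E(a',b) = E(a',b') = -\frac{1}{\sqrt{2}}, \qquad E(a,b') = +\frac{1}{\sqrt{2}},$$

so that

$$S = -\frac{4}{\sqrt{2}} = -2\sqrt{2}, \qquad \lvert S\rvert = 2\sqrt{2}.$$

This saturates Tsirelson’s bound, matching the quantum prediction and violating Bell’s inequality, which rules out local realist explanations.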
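As a cross-check of the arithmetic above, the following NumPy sketch builds the singlet state explicitly, evaluates $E(a,b) = \langle \sigma_a \otimes \sigma_b\rangle$ for axes in the x–z plane (an illustrative choice of measurement plane), and recovers $S = -2\sqrt{2}$ at the listed angles.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)
SINGLET = np.array([0, 1, -1, 0], dtype=complex) / np.sqrt(2)


def spin(theta):
    """Spin observable along the axis at angle theta in the x-z plane."""
    return np.cos(theta) * SZ + np.sin(theta) * SX


def correlation(a, b):
    """E(a, b) = <singlet| sigma_a (x) sigma_b |singlet>, which equals -cos(a - b)."""
    op = np.kron(spin(a), spin(b))
    return float(np.real(SINGLET.conj() @ op @ SINGLET))


def chsh(a, a2, b, b2):
    return correlation(a, b) - correlation(a, b2) + correlation(a2, b) + correlation(a2, b2)


S = chsh(0.0, np.pi / 2, np.pi / 4, 3 * np.pi / 4)
print(S, abs(S) / (2 * np.sqrt(2)))   # S is -2*sqrt(2); the ratio is 1 up to rounding
```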

Appendix A.3. Bell-Visibility Anisotropy: from Auxiliary-Cone Timing to Visibility Loss

Setup (ideal correlation and visibility).

For a maximally entangled two-qubit state (e.g., the singlet), the ideal correlation fringe is

$$E(\theta) = -V_0\cos\theta,$$

where $V_0$ ($= 1$ ideally) is the ideal visibility and $\theta$ is the relative analyzer angle.

Auxiliary-cone timing offset.

In the two-cone framework, boundary synchronization along the auxiliary metric $h_{ab}$ produces a direction-dependent timing offset for a detector baseline $d$. Let $\alpha$ be the angle between the baseline and a preferred axis of the $h$-cone. For a characteristic angular frequency $\omega$ of the source (or analysis modulation), the induced phase offset scale is

$$\phi_h = \omega\,\frac{d\cos\alpha}{v_h},$$

with $v_h$ the invariant speed on the $h$-cone.

Remark. A constant offset would only shift the fringe phase. Visibility loss appears when the offset $\delta\phi$ jitters during integration (e.g., over a gate window), so I model $\delta\phi$ as a zero-mean random variable with small rms $\sigma_\phi$.

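Time averaging and visibility reduction.

The measured correlation after averaging over $\delta\phi$ is

$$E_{\rm obs}(\theta) = -V_0\,\langle\cos(\theta + \delta\phi)\rangle = -V_0\big[\cos\theta\,\langle\cos\delta\phi\rangle - \sin\theta\,\langle\sin\delta\phi\rangle\big].$$

For a symmetric distribution of $\delta\phi$ (zero mean), $\langle\sin\delta\phi\rangle = 0$, and the observed visibility is

$$V_{\rm obs} = V_0\,\langle\cos\delta\phi\rangle.$$

If $\delta\phi$ is small ($\sigma_\phi \ll 1$), expand $\cos\delta\phi \approx 1 - \tfrac{1}{2}\delta\phi^2$ and obtain

$$V_{\rm obs} \approx V_0\left(1 - \tfrac{1}{2}\sigma_\phi^2\right).$$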

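Gate-window model (uniform start time).

Let the effective gate start be uniformly distributed over a window of width $T_g$. The timing offset along the $h$-cone contributes a deterministic slope that integrates over the gate, yielding an rms phase

$$\delta\phi = \phi_h\,u, \qquad \sigma_\phi = \frac{\phi_h}{\sqrt{12}},$$

where $\phi_h = \omega d\cos\alpha/v_h$ is the phase span across the window and $u \in [-\tfrac12, \tfrac12]$ parametrizes the entry across the window (variance 1/12). Hence

$$\frac{V_{\rm obs}}{V_0} \approx 1 - \frac{\phi_h^2}{24} = 1 - \frac{\omega^2 d^2\cos^2\alpha}{24\,v_h^2}.$$

Express in coherence-time units.

Writing $\omega \sim 1/\tau_c$ for a characteristic coherence time $\tau_c$ of the source/analysis,

$$\frac{V_{\rm obs}}{V_0} \approx 1 - \frac{d^2\cos^2\alpha}{24\,v_h^2\tau_c^2},$$

and the fractional anisotropy (visibility drop) is

$$\frac{\Delta V}{V} \equiv \frac{V_0 - V_{\rm obs}}{V_0} \approx \frac{d^2\cos^2\alpha}{24\,v_h^2\tau_c^2}.$$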

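Connection to CHSH.

In a standard CHSH test with settings $(a, a', b, b')$, the ideal correlations scale as $E_0(a,b) = -V_0\cos(a - b)$. The measured ones inherit the reduced visibility $V_{\rm obs}$:

$$E_{\rm obs}(a,b) = \frac{V_{\rm obs}}{V_0}\,E_0(a,b),$$

so the CHSH parameter becomes

$$\lvert S_{\rm obs}\rvert = \frac{V_{\rm obs}}{V_0}\,\lvert S_0\rvert,$$

with $\lvert S_0\rvert = 2\sqrt{2}\,V_0$ for the optimal angles. This yields a direction-dependent deficit in S of the same fractional order as the visibility drop, $\Delta S/S \approx \Delta V/V$.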

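Assumptions & small-parameter regime.

1. $\delta\phi$ has zero mean and a symmetric distribution;
2. $\sigma_\phi \ll 1$, so the second-order expansion is valid;
3. the preferred-axis projection produces the $\cos^2\alpha$ factor; averaging over $\alpha$ recovers an isotropic mean.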

Experimental scaling summary

At fixed $\tau_c$ and $v_h$, the leading anisotropy scales quadratically with baseline and has a $\cos^2\alpha$ angular pattern:

$$\frac{\Delta V}{V} \propto d^2\cos^2\alpha.$$

Longer baselines and shorter coherence times amplify the effect; null observation at the predicted sensitivity falsifies the boundary-synchronization mechanism under these assumptions.
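The averaging steps of this appendix can be checked numerically. The Python sketch below assumes, as in the gate-window model, that the phase offset varies linearly across the window, $\delta\phi = \phi_h u$ with $u$ uniform on $[-\tfrac12, \tfrac12]$. It compares the exact average $\langle\cos\delta\phi\rangle = \sin(\phi_h/2)/(\phi_h/2)$, the second-order result $1 - \sigma_\phi^2/2$ with $\sigma_\phi = \phi_h/\sqrt{12}$, and a Monte Carlo estimate, then maps the visibility ratio onto $\lvert S\rvert = 2\sqrt{2}\,V_{\rm obs}/V_0$. The function names and the sample value of $\phi_h$ are illustrative.

```python
import numpy as np


def visibility_exact(phi_span):
    """<cos(phi_span * u)> for u ~ Uniform[-1/2, 1/2]; equals sin(x)/x with x = phi_span/2."""
    x = phi_span / 2.0
    return 1.0 if x == 0 else float(np.sin(x) / x)


def visibility_second_order(phi_span):
    """Small-jitter expansion V_obs/V_0 ~ 1 - sigma_phi^2/2 with sigma_phi = phi_span/sqrt(12)."""
    sigma_phi = phi_span / np.sqrt(12.0)
    return 1.0 - 0.5 * sigma_phi**2


def visibility_monte_carlo(phi_span, n_samples=200_000, seed=0):
    """Sample the gate entry point u uniformly and average cos(delta_phi)."""
    rng = np.random.default_rng(seed)
    u = rng.uniform(-0.5, 0.5, n_samples)
    return float(np.mean(np.cos(phi_span * u)))


def chsh_with_visibility(v_ratio):
    """|S_obs| = 2*sqrt(2) * V_obs/V_0 at the optimal CHSH angles (V_0 = 1)."""
    return 2.0 * np.sqrt(2.0) * v_ratio


phi_span = 0.2  # illustrative phase span across the gate window (rad)
for f in (visibility_exact, visibility_second_order, visibility_monte_carlo):
    v = f(phi_span)
    print(f.__name__, v, chsh_with_visibility(v))
```

For small spans the three estimates agree closely, which is the regime assumed in item 2 above.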

Appendix B. Experimental Data-Analysis Recipes

Appendix B.1 Bell-Visibility Anisotropy Scaling

Suppose two detectors are separated by a baseline distance d. The information field I propagates on the auxiliary cone with invariant speed $v_h$. If correlations are established via this cone, then the relative phase accumulated depends on the direction of separation relative to the laboratory frame.

Define the correlation visibility V as the amplitude of the sinusoidal correlation function measured in a Bell test:

$$E(\theta) = -V\cos\theta.$$

If propagation is isotropic at speed $c$, then V is independent of detector orientation. However, if coherence propagates on the wider cone with speed $v_h > c$, the effective “light travel time” acquires an orientation dependence through the projection $d\cos\alpha$ of the baseline, where $\alpha$ is the angle between the detector baseline and the preferred axis of the auxiliary cone. This introduces a small modulation of the observed visibility. To leading order, the fractional anisotropy in visibility is controlled by the dimensionless ratio $d\cos\alpha/(v_h\tau_c)$, where $\tau_c$ is the coherence time of the entangled source. Current photonic experiments set the attainable sensitivity through their baseline and source coherence time; kilometer-scale baselines (satellite links) push this sensitivity down by 2–3 orders of magnitude.

Appendix B.2. QEC Syndrome Ridge Scaling

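Consider a stabilizer-code array laid out on a 1D or 2D geometry inside the device domain D. Let stabilizer outcomes $s_a, s_b \in \{\pm 1\}$ be at sites a, b separated by $r = \lvert\mathbf{x}_a - \mathbf{x}_b\rvert$. During a gate window of duration $T_g$ (with temporal width $\sigma_t$), the information sector can generate domain-local, finite-speed correlations with propagation speed $v_h$ on the h-cone.

Define the connected two-point syndrome correlator

$$C(a,b) = \langle s_a s_b\rangle - \langle s_a\rangle\langle s_b\rangle.$$

Model the gate as a temporal window g(t) with width $\sigma_t$, centered at $t_0$, and the h-cone propagation kernel as a narrow pulse

$$K(r,t) = \delta\!\left(t - \frac{r}{v_h}\right).$$

Then the pair-activation amplitude for joint errors at separation r is proportional to the overlap of the gate and propagation kernels:

$$A(r) \propto \int g(t)\,K(r,t)\,dt = g\!\left(\frac{r}{v_h}\right).$$

Taking g(t) Gaussian with rms width $\sigma_t$,

$$A(r) \propto \exp\!\left[-\frac{(r - v_h t_0)^2}{2\,v_h^2\sigma_t^2}\right].$$

The connected correlator inherits this envelope (up to a device-dependent prefactor $C_0$):

$$C(r) \approx C_0\,\exp\!\left[-\frac{(r - v_h t_0)^2}{2\,v_h^2\sigma_t^2}\right].$$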

Interpretation (ridge width). In a 2D array, plotting $C(a,b)$ over site pairs (a,b) produces ridge-like bands of elevated correlation along loci of approximately constant r. The characteristic ridge half-width in real space is the informational correlation length

$$\xi_I = v_h\,\sigma_t,$$

and the dimensionless scaling used to compare devices is

$$\frac{\xi_I}{\ell} = \frac{v_h\,\sigma_t}{\ell},$$

with $\ell$ the lattice spacing (or minimum detector separation).

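Directional (anisotropic) gate or lab frame. If the gate is directionally biased or the lab frame defines a preferred axis $\hat{\mathbf{n}}$, replace r by the projected separation $r_\parallel = (\mathbf{x}_a - \mathbf{x}_b)\cdot\hat{\mathbf{n}}$:

$$C \approx C_0\,\exp\!\left[-\frac{(r_\parallel - v_h t_0)^2}{2\,\xi_I^2}\right],$$

which yields line-like ridges aligned perpendicular to $\hat{\mathbf{n}}$.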

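Practical estimator. From an experimental syndrome dataset over many rounds $k = 1, \dots, K$, estimate the connected correlator binned by separation r:

$$\hat C(r) = \frac{1}{N_r}\sum_{\lvert\mathbf{x}_a - \mathbf{x}_b\rvert \in r}\Big[\overline{s_a s_b} - \overline{s_a}\;\overline{s_b}\Big],$$

where overbars denote averages over rounds and $N_r$ counts the site pairs in bin r; then fit $\hat C(r)$ to the Gaussian envelope to extract $\xi_I$ and bound $v_h = \xi_I/\sigma_t$.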
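The estimator above can be sketched in NumPy. The sketch assumes syndromes stored as a (rounds × sites) array of ±1 values with known site coordinates; it forms the connected correlator for every site pair, averages it within distance bins, and fits the Gaussian ridge by fitting a parabola to $\ln\hat C(r)$ (a simple substitute for a full nonlinear least-squares fit, usable only on positive bins). All function names are hypothetical.

```python
import numpy as np


def binned_connected_correlator(syndromes, positions, bin_edges):
    """Estimate C(r) = <s_a s_b> - <s_a><s_b>, averaged over site pairs in each distance bin.

    syndromes: (rounds, sites) array of +/-1 outcomes; positions: (sites, dim) coordinates.
    """
    s = np.asarray(syndromes, dtype=float)
    mean = s.mean(axis=0)                                  # <s_a> over rounds
    conn = s.T @ s / s.shape[0] - np.outer(mean, mean)     # connected C(a, b)
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    iu = np.triu_indices(len(pos), k=1)                    # each unordered pair once
    r, c = dist[iu], conn[iu]
    which = np.digitize(r, bin_edges) - 1                  # bin index of each pair
    centers = 0.5 * (bin_edges[:-1] + bin_edges[1:])
    values = np.full(len(centers), np.nan)
    for k in range(len(centers)):
        in_bin = which == k
        if in_bin.any():
            values[k] = c[in_bin].mean()
    return centers, values


def ridge(r, c0, r0, xi):
    """Gaussian ridge envelope C0 * exp(-(r - r0)^2 / (2 xi^2))."""
    return c0 * np.exp(-((r - r0) ** 2) / (2.0 * xi**2))


def fit_ridge(centers, values):
    """Fit ln C(r) with a parabola over positive bins; returns (C0, r0, xi)."""
    ok = np.isfinite(values) & (values > 0)
    a, b, c = np.polyfit(centers[ok], np.log(values[ok]), 2)
    if a >= 0:
        raise ValueError("log-correlator is not concave: no Gaussian ridge")
    xi = np.sqrt(-1.0 / (2.0 * a))
    r0 = -b / (2.0 * a)
    c0 = np.exp(c - b**2 / (4.0 * a))
    return c0, r0, xi


# Null check: independent random syndromes on a 16-site chain give C(r) close to 0.
rng = np.random.default_rng(1)
sites = np.arange(16.0)[:, None]
data = rng.choice([-1, 1], size=(20_000, 16))
centers, values = binned_connected_correlator(data, sites, np.arange(0.5, 16.0, 1.0))
print(np.round(values, 3))
```

In practice one would fit only well-resolved bins around the ridge and convert the fitted width with $v_h = \xi_I/\sigma_t$.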

Key prediction. Across architectures (superconducting, trapped-ion, photonic), ridge width scales linearly with the gate temporal width:

$$\xi_I = v_h\,\sigma_t \propto \sigma_t,$$

so shorter gate windows sharpen ridges and longer windows broaden them. Null observation of this scaling, while other internal tests confirm domain-local h-cone coupling, falsifies the mechanism.

Optional Spectral Check

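Some teams prefer a Fourier-space diagnostic. The structure factor

$$S(\mathbf{k}) = \sum_{\mathbf{r}} C(\mathbf{r})\,e^{-i\mathbf{k}\cdot\mathbf{r}}$$

will show a Gaussian envelope $\propto \exp(-k^2\xi_I^2/2)$ whose width, of order $1/\xi_I$, narrows as $\xi_I$ grows.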

Appendix C. Relation to Quantum Gravity and Holography (Extended)

Ryu–Takayanagi formula: links entanglement entropy to bulk geometry [15].

Van Raamsdonk proposal: spacetime connectivity emerges from entanglement [16].

ER=EPR (Maldacena & Susskind): entanglement ↔ wormholes [19].

Swingle’s tensor networks: holographic geometry from entanglement renormalization [20].

The sequestered information sector aligns with these insights: if spacetime itself is emergent from entanglement, the auxiliary metric $h_{ab}$ may represent the substrate geometry of information, distinct from the energetic geometry $g_{ab}$.

Appendix C.1. Black Hole Page Curve—Worked Example

This appendix sketches a quantitative Page-curve example for an evaporating, nonrotating, uncharged (Schwarzschild) black hole. The goal is to show how the entanglement entropy of Hawking radiation first increases and then decreases back to zero when islands are included, reproducing a unitary Page curve.

Setup and basic thermodynamics

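Schwarzschild horizon area (mass M):

$$A = 4\pi r_s^2 = \frac{16\pi G^2M^2}{c^4}, \qquad r_s = \frac{2GM}{c^2}.$$

Planck length (definition):

$$\ell_P^2 = \frac{\hbar G}{c^3}.$$

Bekenstein–Hawking entropy:

$$S_{\rm BH} = \frac{k_B A}{4\ell_P^2}.$$

Explicit $S_{\rm BH}$ for Schwarzschild:

$$S_{\rm BH} = \frac{4\pi k_B G M^2}{\hbar c}.$$

Evaporation time (order of magnitude, Schwarzschild):

$$t_{\rm evap} \approx \frac{5120\,\pi G^2 M_0^3}{\hbar c^4}.$$

Here $M_0$ is the initial mass. The Page time is roughly half the evaporation time for an initially pure state (back-of-envelope):

$$t_{\rm Page} \sim \tfrac{1}{2}\,t_{\rm evap}.$$

(Precise numerical factors depend on graybody spectra and species; this schematic suffices for the Page-curve logic.)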
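To attach numbers to these formulas, the Python sketch below evaluates them for a one-solar-mass black hole using CODATA constants. It inherits the same caveat as the text: the $5120\pi$ prefactor is the schematic photon-only estimate, and the Page time is taken as $t_{\rm evap}/2$.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_SUN = 1.98847e30       # solar mass, kg
YEAR = 3.15576e7         # Julian year, s


def horizon_area(m):
    r_s = 2.0 * G * m / C**2
    return 4.0 * math.pi * r_s**2                      # = 16 pi G^2 m^2 / c^4


def entropy_over_kb(m):
    planck_length_sq = HBAR * G / C**3
    return horizon_area(m) / (4.0 * planck_length_sq)  # = 4 pi G m^2 / (hbar c)


def evaporation_time(m0):
    return 5120.0 * math.pi * G**2 * m0**3 / (HBAR * C**4)


s = entropy_over_kb(M_SUN)
t_evap = evaporation_time(M_SUN)
print(f"S_BH/k_B ~ {s:.3e}")
print(f"t_evap ~ {t_evap / YEAR:.3e} yr, t_Page ~ {0.5 * t_evap / YEAR:.3e} yr")
```

For a solar mass this gives $S_{\rm BH}/k_B \approx 10^{77}$ and $t_{\rm evap} \approx 2\times 10^{67}$ yr.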

Radiation entropy without islands (monotonic growth)

Let $S_{\rm rad}(t)$ denote the fine-grained entropy of the Hawking radiation collected outside up to time $t$. In a naive (no-island) calculation, $S_{\rm rad}$ grows approximately linearly at early times (coarse picture):

$$S_{\rm rad}^{\text{no-island}}(t) \propto t.$$

If evaporation is unitary, the radiation entropy should not grow indefinitely: it should turn over at the Page time and return to zero by $t_{\rm evap}$. The no-island calculation fails to capture this turnover.

Generalized entropy and islands (turnover and unitary Page curve)

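The modern resolution introduces islands in the fine-grained entropy via the generalized entropy functional and extremizes it over candidate island regions $I$ (the “island prescription”).

Generalized entropy (definition), for the radiation region $R$ and a candidate island $I$, in units with $c = k_B = 1$:

$$S_{\rm gen}(I) = \frac{\mathrm{Area}(\partial I)}{4G\hbar} + S_{\rm matter}(R \cup I).$$

Island rule (extremize, then choose the minimal extremum):

$$S_{\rm rad} = \min_I\Big\{\mathrm{ext}_I\, S_{\rm gen}(I)\Big\}.$$

Early times: the extremum with no island ($I = \varnothing$) dominates, so $S_{\rm rad}$ increases.

After $t_{\rm Page}$: an extremum with an island becomes preferred. The area term acts as a counterbalance; as the black hole shrinks, $\mathrm{Area}(\partial I)$ decreases and the minimal $S_{\rm gen}$ tracks the remaining black-hole entropy.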

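This structure yields a unitary Page curve implementable as the minimum of two branches (schematic):

$$S_{\rm rad}(t) \approx \min\Big\{S_{\rm rad}^{\text{no-island}}(t),\; S_{\rm BH}\big(M(t)\big)\Big\},$$

where $M(t)$ is the mass trajectory under evaporation. At $t \approx t_{\rm Page}$, $S_{\rm rad}^{\text{no-island}} \approx S_{\rm BH}\big(M(t_{\rm Page})\big)$, and for $t > t_{\rm Page}$ the island branch wins, causing $S_{\rm rad}$ to decrease in step with the shrinking $S_{\rm BH}$.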

Thus, the turnover is not put in by hand; it emerges when the generalized entropy is extremized and minimized over island configurations.

How this dovetails with the sequestered information sector

In the two-cone framework, information can propagate on the auxiliary metric $h_{ab}$ with invariant speed $v_h > c$ but carries no stress–energy:

$$T^{(I)}_{ab} \equiv 0.$$

An energy-free horizon information membrane can therefore communicate correlations to the radiation without transporting energy across the horizon. This is consistent with black-hole thermodynamics (the Hawking flux and area decrease remain governed by $g_{ab}$), while the fine-grained entropy follows the island prescription and produces a unitary Page curve. In short: energetic bookkeeping (area decrease, $dM/dt$) stays on $g_{ab}$; informational bookkeeping (entanglement flow) is carried by the $h$ sector, implementing the turnover required by unitarity.

Optional explicit mass–time sketch (coarse)

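One can parametrize a coarse $M(t)$ via the standard evaporation scaling $dM/dt = -\alpha/M^2$, giving $M(t) = M_0\,(1 - t/t_{\rm evap})^{1/3}$ with $t_{\rm evap} = M_0^3/(3\alpha)$ (and $\alpha$ a constant absorbing greybody factors/species). Then $S_{\rm BH}(t) \propto M(t)^2$ decreases, slowly at first and then ever more steeply, while a crude $S_{\rm rad}^{\text{no-island}}(t)$ increases until crossing $S_{\rm BH}$ at $t_{\rm Page}$.

This coarse model suffices to visualize the crossing of $S_{\rm rad}^{\text{no-island}}$ and $S_{\rm BH}$ near $t_{\rm Page}$ and the subsequent decrease of $S_{\rm rad}$ along the island branch.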
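The coarse model can be plotted or tabulated directly. The NumPy sketch below uses $M(t) = M_0(1 - t/t_{\rm evap})^{1/3}$ and $S_{\rm BH} \propto M^2$, and adds one explicit assumption not fixed by the text: the naive radiation entropy equals the entropy the hole has lost, $S_{\rm rad}^{\text{no-island}} = S_{\rm BH}(0) - S_{\rm BH}(t)$. With that choice the two branches cross where $S_{\rm BH}$ has halved, at $t = (1 - 2^{-3/2})\,t_{\rm evap} \approx 0.65\,t_{\rm evap}$, the same order as the back-of-envelope $t_{\rm evap}/2$; the exact location depends on the assumed no-island growth law.

```python
import numpy as np


def bh_entropy(t, t_evap, s0=1.0):
    """S_BH(t) = s0 * (M(t)/M0)^2 with M(t) = M0 (1 - t/t_evap)^(1/3)."""
    x = np.clip(1.0 - np.asarray(t, dtype=float) / t_evap, 0.0, None)
    return s0 * np.cbrt(x) ** 2


def naive_radiation_entropy(t, t_evap, s0=1.0):
    """Crude no-island branch (assumption): radiation gains the entropy the hole loses."""
    return s0 - bh_entropy(t, t_evap, s0)


def page_curve(t, t_evap, s0=1.0):
    """Schematic unitary curve: minimum of the no-island and island (S_BH) branches."""
    return np.minimum(naive_radiation_entropy(t, t_evap, s0), bh_entropy(t, t_evap, s0))


t_evap = 1.0
t = np.linspace(0.0, t_evap, 2001)
s_rad = page_curve(t, t_evap)
t_page = t[np.argmax(s_rad)]
print(t_page, 1.0 - 2.0 ** -1.5)   # both about 0.646 in this crude model
```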

Summary

Without islands: $S_{\rm rad}$ grows monotonically → paradox.

With islands (generalized entropy): $S_{\rm rad}$ turns over at $t_{\rm Page}$, then decreases to 0 (unitarity).

In our framework: energy stays on $g_{ab}$; information flows on $h_{ab}$ with $v_h > c$, permitting an energy-free information membrane consistent with thermodynamics and the Page curve.

Appendix D. Falsification Matrix

| Observable | Signature / Scaling | Current sensitivity (order) | Near-term target | Falsifies if… | Notes / Refs |
|---|---|---|---|---|---|
| Bell visibility anisotropy | | $\sigma_V \sim 10^{-3}$ (state-of-the-art lab Bell tests) | $\sigma_V \lesssim 10^{-4}$ on km baselines | No anisotropy while other internal tests confirm $h$-mediated structure | Scaling estimate from the two-cone hypothesis; relates device separation $d$ to info-cone speed $v_h$ [4, 5, 6, 7, 12, 13] |
| QEC syndrome “ridges” | | Qualitative (pattern-level) | Quantitative ridge width vs. $d$ with ≤10% error | Absence of any scaling across architectures | Requires stabilizer-code arrays with variable spacing $d$ |
| Satellite entanglement anisotropy | | Global links demonstrated; visibility precision $\sim 10^{-3}$ | Push to $10^{-4}$ precision; orientation scans vs. orbit | Null anisotropy bounds imply tight limits on $v_h$ | Micius-class baselines set strong bounds on $v_h$ [12, 13] |
| Internal timing probe of $v_h$ | | ns–ps timing in photonics | ps–fs timing across chip-to-chip links | Timing consistent with luminal or subluminal propagation only | Requires strictly domain-local coupling (gate $g(x)$) |
| GW dispersion (effective $m_g$) | | LIGO–Virgo–KAGRA | ET/CE [58] | Detected dispersion inconsistent with predicted loop-suppressed effects, or inconsistent nulls | Constraint on loop-induced graviton mass inside device domains |
| Photon mass / magnetostatics | | Astrophysical bounds | Push lower via deep-space probes | Detection of a Yukawa roll-off at odds with gate-localized loops | Independent check on radiative sequestering [57] |
| Off-device signaling | No controllable superluminal signal | Strong null results to date | Reinforce with spacelike-separated, loophole-free designs | Any repeatable superluminal message transmission | Immediate falsification of sequestering (violates no-signaling) |
| Lorentz leakage outside $D$ | $g(x)$ confines all couplings to $D$ | Indirect (engineering control) | Direct leakage searches in shielded environments | Observation of LV couplings beyond the device boundary | Violates the radiative-sequestering premise |
| EFT stability | | Model-dependent | Maintain wide hierarchy | Anomalies at accessible energies | Any breakdown at low energies rules out the EFT |
| Spectral shift (analog gravity rotator) | Resonance opens when the locally shifted frequency matches the target transition | ppm-level frequency resolution (atomic clocks) | kHz–Hz linewidth tracking in rotor-driven setups | | |
| Josephson–optical coincidence | Shapiro steps at $V_n = nhf/(2e)$ coincide with photon counts; scaling with optical pump power | Shapiro steps at $V_n = nhf/(2e)$; dark counts $\sim 10^2\ \mathrm{s^{-1}}$ | Coincidence rates $\gtrsim 10^3\ \mathrm{s^{-1}}$ with GHz pump | Electrical steps observed without correlated optical photon bursts | Proposed Model C+E (compound Josephson–optical gate) |
| Cold-atom interferometry / conductance | Quantized conductance $G = ne^2/h$; interferometric phase $\Delta\phi \sim 0.1$ rad | Atom interferometers: $\delta\phi \sim 10^{-3}$ rad for $N = 10^5$ | Resolve phase shifts ≥0.01 rad and conductance plateaus | No quantized conductance or resolvable phase shift above shot noise | Proposed Model D+A (strain-domain topological gate) |
| General noise/threshold criterion | All gate signals exceed the noise floor by SNR ≥ 10 | Varies (ppm frequency; μV Josephson; mrad atom phase) | SNR ≥ 10 in controlled setups | Signals remain within noise after averaging (SNR < 10) | General falsifier for all controllable gate phenomena |
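Several rows of the matrix rest on standard formulas that are easy to evaluate. The Python sketch below computes the massive-graviton speed deficit $1 - v_g/c$ from $v_g/c = \sqrt{1 - (m_g c^2/hf)^2}$, the photon Compton wavelength $\lambda_\gamma = \hbar/(m_\gamma c)$ that sets the Yukawa range, the atom-interferometer shot-noise limit $1/\sqrt{N}$, and the conductance unit $e^2/h$. The sample masses are illustrative inputs, not quoted experimental bounds.

```python
import math

H = 6.62607015e-34            # Planck constant, J s
HBAR = H / (2.0 * math.pi)
C = 299_792_458.0             # speed of light, m/s
E_CHARGE = 1.602176634e-19    # elementary charge, C (also J per eV)


def graviton_speed_deficit(m_g_ev, f_hz):
    """1 - v_g/c for a massive graviton, with v_g/c = sqrt(1 - (m_g c^2 / h f)^2).

    Evaluated as x / (1 + sqrt(1 - x)), x = (m_g c^2 / h f)^2, to avoid cancellation.
    """
    x = (m_g_ev * E_CHARGE / (H * f_hz)) ** 2
    return x / (1.0 + math.sqrt(1.0 - x))


def photon_compton_wavelength(m_gamma_ev):
    """lambda_gamma = hbar / (m_gamma c), the range of the Yukawa roll-off (m)."""
    return HBAR * C / (m_gamma_ev * E_CHARGE)


def shot_noise_phase(n_atoms):
    """Standard-quantum-limit phase resolution, delta_phi ~ 1/sqrt(N) (rad)."""
    return 1.0 / math.sqrt(n_atoms)


def conductance_quantum():
    """e^2/h in siemens; G = n e^2/h for n open channels."""
    return E_CHARGE**2 / H


print(graviton_speed_deficit(1e-23, 100.0))   # illustrative m_g and LIGO-band frequency
print(photon_compton_wavelength(1e-18))       # illustrative m_gamma
print(shot_noise_phase(1e5), conductance_quantum())
```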

Appendix E. Open Questions and Future Directions

Planck-scale coupling: How does the information sector interact at quantum gravity scales?

Dynamical auxiliary metric: Could $h_{ab}$ itself be emergent or dynamical?

Universality of gates: Are gate functions device-specific or fundamental?

Relation to holography: Is the information cone tied to entanglement wedges in AdS/CFT?

Experimental frontiers:

Extend satellite QKD anisotropy searches.

Deploy QEC arrays with km-scale separation.

Monitor GW dispersion with ET, CE, LISA [58].

Engineering perspective: If confirmed, sequestered information could open pathways to new forms of communication, error correction, and even spacetime engineering.

Appendix F. Gate Function Physics – Creation, Control, and Models

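Primitive definition [31]

I model a gate as a spatiotemporal modulation:

$$\mathcal{L} \supset g(x)\,\mathcal{L}_{\rm int}(x), \qquad 0 \le g(x) \le 1,$$

where $g(x)$ multiplies an otherwise latent interaction $\mathcal{L}_{\rm int}$ between a visible sector V and a sequestered sector S. $g = 0$ “closes” the channel; $g = 1$ opens it.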
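A gate driven by noisy controls can be sketched in a few lines of NumPy. This sketch assumes the sigmoid form listed in the notation table, $g = \sigma(\sum_i k_i u_i - \theta)$, with hypothetical parameters `gains` ($k_i$) and `threshold` ($\theta$), and models fluctuations of one control knob as an Ornstein–Uhlenbeck process with correlation time `tau` and stationary standard deviation `sigma`, the noise model named in the notation table. All parameter values are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gate(controls, gains, threshold):
    """g = sigma(sum_i k_i u_i - theta); controls has shape (..., n_knobs)."""
    return sigmoid(np.asarray(controls) @ np.asarray(gains) - threshold)


def ou_path(n_steps, dt, tau, sigma, seed=0):
    """Ornstein-Uhlenbeck fluctuation with correlation time tau and stationary std sigma.

    Exact update: x_{k+1} = x_k e^{-dt/tau} + sigma sqrt(1 - e^{-2 dt/tau}) * N(0, 1),
    started from the stationary distribution.
    """
    rng = np.random.default_rng(seed)
    decay = np.exp(-dt / tau)
    kick = sigma * np.sqrt(1.0 - decay**2)
    x = np.empty(n_steps)
    x[0] = sigma * rng.standard_normal()
    for k in range(1, n_steps):
        x[k] = decay * x[k - 1] + kick * rng.standard_normal()
    return x


# One knob (e.g., a bias) held at 1.0 with OU noise; gain and threshold put the gate at g = 0.5.
bias = 1.0 + ou_path(200_000, dt=0.01, tau=1.0, sigma=0.2)
g_t = gate(bias[:, None], gains=[4.0], threshold=4.0)
print(g_t.mean(), g_t.std())
```

Raising the gain makes the gate closer to a digital (Heaviside) switch, while the OU noise sets how strongly the aperture flickers around its nominal opening.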

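Control fields

Let $u_i(x)$ be experimental knobs (e.g., bias voltages, pump power, strain, curvature). Then:

$$g(x) = \sigma\!\Big(\sum_i k_i\,u_i(x) - \theta\Big), \qquad \sigma(z) = \frac{1}{1 + e^{-z}},$$

with gains $k_i$ and threshold $\theta$.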

Model A: EFT “portal” gate [46]

A mediator scalar couples the two sectors; its effective mass is controlled by an external potential $U$.

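Model B: Open-system (Lindblad) control [29, 30, 31]

Time-dependent master equation:

$$\dot\rho = -\frac{i}{\hbar}\big[H_0 + g(t)\,H_{\rm int},\,\rho\big] + \sum_k \gamma_k(t)\Big(L_k\rho L_k^\dagger - \tfrac{1}{2}\{L_k^\dagger L_k,\,\rho\}\Big)$$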

Model C: Josephson-style weak link [33, 34]

Model D: Topological domain wall [35, 36]

Model E: Nonlinear-optical parametric gate [37, 38, 39]

Model F: Gravitational redshift window [40, 41, 42]

Thermodynamic cost
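The Landauer and Sagawa–Ueda bounds cited in the main text set the scale of this cost. The Python sketch below evaluates both, under the illustrative assumption that one gate cycle erases one bit of record; the helper names are hypothetical. The Sagawa–Ueda bound is written as $\langle W\rangle \ge \Delta F - k_B T\, I$ with the mutual information $I$ in nats.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def landauer_heat(bits_erased, temperature_k):
    """Minimum heat Q >= N k_B T ln 2 dissipated when erasing N bits (J)."""
    return bits_erased * K_B * temperature_k * math.log(2.0)


def sagawa_ueda_min_work(delta_f, temperature_k, mutual_info_nats):
    """Lower bound <W> >= Delta F - k_B T I for feedback with mutual information I (J)."""
    return delta_f - K_B * temperature_k * mutual_info_nats


print(landauer_heat(1, 300.0))   # about 2.87e-21 J per bit at room temperature
print(landauer_heat(1, 0.01))    # about 9.6e-26 J per bit at a 10 mK stage
```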

Transport through a finite gate [48, 49, 50]

Noise, leakage, and cloaking

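Let $A_{\rm leak}$ be the leakage-channel amplitude outside $\Omega_g$, and impose a budget $\lvert A_{\rm leak}\rvert \le \epsilon$. Two remedies:

(i) Destructive interference: cancel $A_{\rm leak}$ by adding a guard actuator that drives the leakage channel with opposite phase;

(ii) Topological isolation [35, 36] (Model D), where off-wall states are gapped. In open-system terms, set a large decay rate $\gamma_k$ outside $\Omega_g$ in the dissipator of the Lindblad master equation (Model B) to soak up stray excitations.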
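The gated Lindblad dynamics of Model B can be illustrated with a single qubit. In this NumPy sketch, which is an assumption-laden toy and not the framework's device model, $H_0 = (\Delta/2)\sigma_z$, $H_{\rm int} = (\Omega/2)\sigma_x$, the dissipator is pure dephasing $L = \sigma_z$ at rate $\gamma$, and the hypothetical gate `gate_window` is a smooth window that is open between `t_on` and `t_off`; units have $\hbar = 1$. Population transfer happens only while the gate is open, while trace and Hermiticity are preserved.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)


def gate_window(t, t_on, t_off, width):
    """Hypothetical smooth gate: close to 1 for t_on < t < t_off, close to 0 elsewhere."""
    rise = 1.0 / (1.0 + np.exp(-(t - t_on) / width))
    fall = 1.0 / (1.0 + np.exp((t - t_off) / width))
    return rise * fall


def lindblad_rhs(rho, t, delta, omega, gamma, gate):
    """d(rho)/dt for H = (delta/2) SZ + g(t) (omega/2) SX with dephasing L = SZ (hbar = 1)."""
    h = 0.5 * delta * SZ + gate(t) * 0.5 * omega * SX
    coherent = -1j * (h @ rho - rho @ h)
    dephasing = gamma * (SZ @ rho @ SZ - rho)   # L rho L^dag - {L^dag L, rho}/2, since SZ^2 = 1
    return coherent + dephasing


def evolve(rho0, t_final, dt, **params):
    """Fixed-step fourth-order Runge-Kutta integration of the master equation."""
    rho, t = rho0.astype(complex), 0.0
    for _ in range(int(round(t_final / dt))):
        k1 = lindblad_rhs(rho, t, **params)
        k2 = lindblad_rhs(rho + 0.5 * dt * k1, t + 0.5 * dt, **params)
        k3 = lindblad_rhs(rho + 0.5 * dt * k2, t + 0.5 * dt, **params)
        k4 = lindblad_rhs(rho + dt * k3, t + dt, **params)
        rho = rho + (dt / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return rho


rho0 = np.diag([1.0, 0.0])                      # start in |0>
common = dict(delta=0.0, omega=1.0, gamma=0.05)
open_gate = evolve(rho0, 5.0, 0.01, gate=lambda t: gate_window(t, 1.0, 4.0, 0.05), **common)
closed_gate = evolve(rho0, 5.0, 0.01, gate=lambda t: 0.0, **common)
print("excited population, gate open:  ", open_gate[1, 1].real)
print("excited population, gate closed:", closed_gate[1, 1].real)
```

Making `gamma` large only in regions outside the aperture would be the open-system version of remedy (ii).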

Concrete laboratory scenarios

Cavity-strain portal (Model A + C): Mount a high-Q cavity on a piezo frame. Strain tunes the mediator mass $m_{\rm eff}$ via the potential $U$. The gate opens only when the cavity is both on resonance and strained past a threshold; the bandwidth is limited by the mechanical response of the frame.

Cold-atom domain wall (Model D) [43, 44, 45]: Use Raman dressing to invert an effective mass along a narrow ribbon; protected edge modes provide a low-loss channel. Fast optical control sweeps the wall to steer the aperture.

OPAs as time windows (Model E): A pulsed pump in a periodically poled waveguide defines ps gates. Use chirped quasi-phase-matching to tune the spectral aperture without moving hardware.

Analog gravity rotator (Model F): A rigid rotor at angular speed $\omega$ simulates a redshift window by modifying the effective lapse function. A resonance gate opens when the locally shifted frequency matches a target transition.

Control is achieved by modulating $\omega$, allowing fast opening and closing of the window. The switching rate is limited by mechanical inertia and rotor stability.

Observables: Spectral lines of a probe transition shift when the gate opens, producing a discrete frequency-match condition. Testability comes from measuring transmission spectra as $\omega$ is ramped; a sharp onset of resonance at the predicted redshift is the experimental signature.
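The size of the rotor-induced shift can be estimated once a lapse profile is chosen. The Python sketch below assumes, for illustration only, the special-relativistic clock factor $N = \sqrt{1 - \omega^2 r^2/c^2}$ for a transition co-rotating at radius $r$; the text does not fix the lapse profile. It returns the fractional shift $1 - N \approx (\omega r/c)^2/2$ and inverts it to the angular speed needed for a target shift.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fractional_redshift(omega, radius):
    """1 - N for the assumed clock factor N = sqrt(1 - (omega r / c)^2).

    Evaluated as x / (1 + sqrt(1 - x)) with x = (omega r / c)^2 to avoid cancellation.
    """
    x = (omega * radius / C) ** 2
    return x / (1.0 + math.sqrt(1.0 - x))


def omega_for_shift(shift, radius):
    """Angular speed giving a target fractional shift s = 1 - N, using 1 - N^2 = s (2 - s)."""
    return C * math.sqrt(shift * (2.0 - shift)) / radius


omega = 2.0 * math.pi * 1000.0      # illustrative: 1 kHz rotation
shift = fractional_redshift(omega, 0.1)
print(shift, omega_for_shift(shift, 0.1) / omega)   # round-trip ratio is 1 up to rounding
```

Because the shift grows quadratically with rim speed $\omega r$, the required spectral resolution follows directly from the achievable rotor speed and radius.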

Hybrid Josephson–optical gate (Model C + E): A superconducting weak link provides tunable phase bias, while a synchronized optical pump enables parametric drive. The two controls together create a compound aperture where electrical and photonic channels are simultaneously switched. This setup demonstrates cross-disciplinary control: electronic bias defines coarse gating, optical pulses provide ultrafast windows.

Observables: Shapiro-step patterns in the I–V characteristics coincide with optical pump timing, creating correlated spikes in current and photon flux. Joint detection of photons and Josephson oscillations provides a clear signature of the compound gate.
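The voltage positions of the Shapiro steps follow from $V_n = nhf/(2e)$ alone, so they can be tabulated before any coincidence analysis. The Python sketch below does this for an illustrative 10 GHz drive and includes a hypothetical gate-modulated current–phase relation $I = g\,I_c\sin\varphi$ as one possible reading of the “gate-modulated Josephson relation” in the notation table.

```python
import math

H = 6.62607015e-34          # Planck constant, J s
E_CHARGE = 1.602176634e-19  # elementary charge, C


def shapiro_step_voltage(n, f_hz):
    """Voltage of the n-th Shapiro step, V_n = n h f / (2e), in volts."""
    return n * H * f_hz / (2.0 * E_CHARGE)


def gated_josephson_current(i_c, phase, g=1.0):
    """Hypothetical gate-modulated current-phase relation I = g I_c sin(phase), 0 <= g <= 1."""
    return g * i_c * math.sin(phase)


f_drive = 10e9  # illustrative 10 GHz drive
for n in range(1, 4):
    print(n, shapiro_step_voltage(n, f_drive))   # about 20.7 microvolts per step at 10 GHz
```

Photon-count coincidences would then be histogrammed against these step voltages.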

Cold-atom strain-domain system (Model D + A): An optical lattice of ultracold atoms experiences strain from spatially varying laser intensity, altering the effective mass term. A domain wall hosts protected edge channels, while strain simultaneously tunes the mediator mass $m_{\rm eff}$. This dual handle allows domain creation and annihilation on demand, with tunable leakage.

Observables: Atom interferometry detects phase-coherent transport along the domain wall, with conductance quantization as a hallmark. Bragg spectroscopy of the lattice reveals the strain-induced mass inversion, confirming controllable gate creation.

Testability

The models above are not purely abstract: each implies concrete, measurable observables that can serve as experimental signatures of gate dynamics. Testability, therefore, proceeds by identifying quantities that change sharply when a gate opens or closes:

Spectral shifts — in gravitational analogs, the local frequency tracks the lapse function, yielding resonance “on/off” behavior as the rotor angular speed $\Omega$ varies.

Correlated electrical and optical signals — in hybrid Josephson–optical devices, coincidence between Shapiro steps and photon bursts provides a direct fingerprint of compound gating.

Quantized or protected transport — in cold-atom topological setups, conductance quantization and interferometric phase shifts reveal the presence or absence of domain-wall channels.

These observables allow a laboratory program to move beyond theoretical description: one can design experiments with clear “yes/no” criteria for gate existence and dynamics. The following subsections develop these criteria into proposed tests, including parameter ranges, noise budgets, and achievable detection thresholds.

1. Spectral Tests (Analog Gravity Rotator)

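A resonance condition is reached when the locally shifted frequency matches a transition:

$$\omega_{\text{loc}}(\Omega) = \frac{\omega_\infty}{N(r;\Omega)} = \omega_0 ,$$

where $\omega_\infty$ is the probe frequency in the lab frame, $\omega_0$ the transition frequency, and $N(r;\Omega)$ the effective lapse (clock-rate) factor of the rotor at radius $r$.

Detuning from resonance is:

$$\Delta(\Omega) = \frac{\omega_\infty}{N(r;\Omega)} - \omega_0 .$$

Proposed Test: Place a narrow-linewidth atomic transition (linewidth $\Gamma$ at the kHz scale) in the rotating frame. Sweep the rotor angular velocity $\Omega$ across the resonant value.

Noise Budget: Dominant background is Doppler broadening, $\Delta\omega_D = \omega_0\sqrt{8 k_B T \ln 2/(m c^2)}$ (FWHM) for atoms of mass $m$ at temperature $T$.

Detection Threshold: Observable transmission change requires the detuning to fall within the transition linewidth:

$$|\Delta(\Omega)| \lesssim \Gamma .$$

Thus, measurement resolution at the ppm level suffices.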
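The resonance bookkeeping for the rotator can be sketched numerically. This is a minimal Python sketch, assuming the locally measured frequency is $\omega_{\text{loc}} = \omega_\infty/N$ with $N(r;\Omega) = \sqrt{1-\Omega^2 r^2/c^2}$ (the clock-rate factor of a co-rotating point at radius $r$), and the standard thermal Doppler FWHM for the noise term. The function names and the rotor numbers in the example are hypothetical illustrations, not parameters of the proposed experiment.

```python
import math

C = 299_792_458.0   # speed of light, m/s
K_B = 1.380649e-23  # Boltzmann constant, J/K


def redshift_factor(omega_rot: float, r: float) -> float:
    """Clock-rate factor N = sqrt(1 - (Omega r / c)^2) of a co-rotating point at radius r."""
    beta = omega_rot * r / C
    if beta >= 1.0:
        raise ValueError("rim speed must stay below c")
    return math.sqrt(1.0 - beta * beta)


def detuning(omega_rot: float, r: float, omega_inf: float, omega_0: float) -> float:
    """Delta(Omega) = omega_inf / N - omega_0, with omega_inf the lab-frame probe frequency."""
    return omega_inf / redshift_factor(omega_rot, r) - omega_0


def resonant_rotation(r: float, omega_inf: float, omega_0: float) -> float:
    """Solve omega_inf / N = omega_0 for Omega; needs omega_inf <= omega_0 (a blueshift)."""
    n_res = omega_inf / omega_0  # value of N at resonance
    if not 0.0 < n_res <= 1.0:
        raise ValueError("resonance requires 0 < omega_inf <= omega_0")
    return C * math.sqrt(1.0 - n_res * n_res) / r


def doppler_fwhm(freq_0: float, temperature: float, mass: float) -> float:
    """Thermal Doppler FWHM, freq_0 * sqrt(8 k_B T ln2 / (m c^2)), in the units of freq_0."""
    return freq_0 * math.sqrt(8.0 * K_B * temperature * math.log(2.0) / (mass * C * C))


# Hypothetical rotor: radius 0.1 m at 3000 rad/s, i.e. a 300 m/s rim speed
frac_shift = 1.0 / redshift_factor(3000.0, 0.1) - 1.0
print(f"fractional blueshift at the rim: {frac_shift:.1e}")  # roughly 5.0e-13
```

Comparing $\omega_0\,|1/N - 1|$ with `doppler_fwhm` for the chosen species and temperature gives a quick check of whether a planned sweep range clears the noise budget.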

2. Electrical/Optical Coincidence (Hybrid Josephson–Optical)

Josephson current-phase relation [33]:

$$I_s = I_c \sin\varphi$$

Shapiro step condition [34]:

$$V_n = n\,\frac{h f}{2e}, \qquad n \in \mathbb{Z}$$

Proposed Test: Bias a junction at while pumping with a GHz optical drive (GHz). Expect correlated steps at the Shapiro voltages $V_n = n\,hf/(2e)$.
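The Shapiro-step positions and the thermal noise floor reduce to one-line formulas. The sketch below assumes the optical pump imposes an ac modulation of frequency $f$ on the junction, so steps sit at $V_n = n\,hf/(2e)$, and takes the Johnson–Nyquist noise as $\sqrt{4k_BTR\,\Delta f}$. The 10 GHz drive, 10 Ω resistance, 4.2 K temperature, and 1 kHz bandwidth are hypothetical values chosen only to show the scale.

```python
import math

H = 6.62607015e-34          # Planck constant, J s (exact SI value)
E_CHARGE = 1.602176634e-19  # elementary charge, C (exact SI value)
K_B = 1.380649e-23          # Boltzmann constant, J/K (exact SI value)


def josephson_current(i_c: float, phase: float) -> float:
    """DC Josephson current-phase relation I_s = I_c sin(phi)."""
    return i_c * math.sin(phase)


def shapiro_voltage(n: int, f_drive: float) -> float:
    """Voltage of the n-th Shapiro step, V_n = n h f / (2e), for drive frequency f in Hz."""
    return n * H * f_drive / (2.0 * E_CHARGE)


def johnson_noise_vrms(resistance: float, temperature: float, bandwidth: float) -> float:
    """Johnson-Nyquist rms voltage noise sqrt(4 k_B T R df), in volts."""
    return math.sqrt(4.0 * K_B * temperature * resistance * bandwidth)


# Hypothetical operating point: 10 GHz drive, 10 ohm at 4.2 K, 1 kHz detection bandwidth
steps = [shapiro_voltage(n, 10e9) for n in range(1, 4)]  # spacing h f / 2e is about 20.68 uV
noise = johnson_noise_vrms(10.0, 4.2, 1e3)
print([f"{v * 1e6:.2f} uV" for v in steps], f"noise floor {noise * 1e9:.2f} nV")
```

Because the step spacing depends only on $f$ and fundamental constants, a coincidence analysis can fix the expected voltages in advance and reserve the noise estimate for setting the detection window.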

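Noise Budget: Johnson–Nyquist noise in a resistance $R$ at temperature $T$ gives an rms voltage noise $\delta V_{\text{rms}} = \sqrt{4 k_B T R\,\Delta f}$ over the detection bandwidth $\Delta f$.

3. Cold-Atom Interferometry (Strain-Domain Gates)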

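Quantized conductance through edge states [44, 45]:

$$G = \nu\, G_0 ,$$

with $\nu$ the number of edge channels and $G_0$ the conductance quantum ($e^2/h$ for charged carriers, $1/h$ for the particle current of neutral atoms).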

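Phase accumulation along edge:

$$\phi_{\text{edge}} = \int_{\text{edge}} k(s)\, ds ,$$

with $k(s)$ the local wavenumber along the domain wall.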

Proposed Test: Use ultracold atoms at in a 2D lattice with strain tuned by laser intensity. Interferometer arms enclose a domain wall.

Noise Budget: Atom shot noise sets phase uncertainty

$$\delta\phi = \frac{1}{\sqrt{N}},$$

with $N$ the number of detected atoms. For $N = \ldots$, $\delta\phi = \ldots$.

Detection Threshold: Gate-induced phase shift expected . Thus SNR makes the effect clearly resolvable.
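The shot-noise budget can be turned into a signal-to-noise estimate. This is a minimal sketch, assuming the standard quantum limit $\delta\phi = 1/\sqrt{N}$ per shot and independent repetitions averaging down as $1/\sqrt{n_{\text{shots}}}$; `phase_snr` and `atoms_needed` are hypothetical helper names, and no numbers from the proposal are assumed.

```python
import math


def shot_noise_phase(n_atoms: int) -> float:
    """Standard-quantum-limit phase uncertainty per shot, delta_phi = 1/sqrt(N), in rad."""
    if n_atoms <= 0:
        raise ValueError("need at least one detected atom")
    return 1.0 / math.sqrt(n_atoms)


def phase_snr(gate_phase: float, n_atoms: int, n_shots: int = 1) -> float:
    """SNR of a gate-induced phase shift; independent shots average down as 1/sqrt(n_shots)."""
    return gate_phase * math.sqrt(n_shots) / shot_noise_phase(n_atoms)


def atoms_needed(gate_phase: float, target_snr: float) -> int:
    """Smallest single-shot atom number N with gate_phase * sqrt(N) >= target_snr."""
    return math.ceil((target_snr / gate_phase) ** 2)
```

For example, a gate-induced shift of 0.1 rad read out with $10^4$ atoms per shot gives $\delta\phi = 0.01$ rad and an SNR of 10.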

Summary

Each model yields clear test conditions:

Spectral gates: ppm-level resolution of frequency shifts.

Hybrid Josephson–optical gates: electrical Shapiro steps coincident with photon counts exceeding dark noise by at least one order of magnitude.

Cold-atom interferometry: phase-shift signals with SNR.

Together, these provide a roadmap for experimental falsifiability of gate physics.

Appendix F.1. Auxiliary-Metric Decoupling Argument

A frequent concern in two-cone frameworks is that loop corrections might regenerate Lorentz-violating operators in the visible (energetic) sector, even if such terms are absent at tree level. The present construction prevents this through metric-sector factorization and radiative sequestering.

1. Metric factorization

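All interaction terms take the form

$$\mathcal{L}_{\text{int}} = \chi(x)\, \mathcal{O}_V[\psi;\, g]\, \mathcal{O}_I[\Phi;\, h],$$

where $\chi(x)$ is the gate function and $\psi$ and $\Phi$ denote visible and informational fields, respectively. Because each sector factor is contracted only with its own metric ($\mathcal{O}_V$ with $g$, $\mathcal{O}_I$ with $h$), covariant indices never mix across metrics. Any cross-term must pass through the dimensionless scalar gate $\chi(x)$, which carries no Lorentz indices and cannot break isotropy on $g$.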

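2. Loop suppression

Consider a generic loop with $n_V$ visible propagators $\Delta_g$ and $n_I$ informational propagators $\Delta_h$. Each insertion of the gate function introduces a factor

$$\langle \chi \rangle_D = \frac{1}{\mathrm{Vol}(D)} \int_D \chi(x)\, d^4x ,$$

where the average is confined to the device domain $D$. With $k$ gate insertions, the mixed-metric amplitude scales as

$$\mathcal{A}_{\text{mix}} \sim \langle \chi \rangle_D^{\,k} \int \frac{d^4\ell}{(2\pi)^4}\, \prod_{i=1}^{n_V} \Delta_g \prod_{j=1}^{n_I} \Delta_h .$$

Since $\chi$ has support only within $D$ and does not carry momentum on the $h$-metric light cone, the integral yields no Lorentz-breaking tensor structures in the visible sector. Explicitly, after integrating out the $\Phi$ field one obtains an effective local operator

$$\mathcal{L}_{\text{eff}} \propto \langle \chi \rangle_D^{\,k}\, \mathcal{O}_V[\psi;\, g],$$

which is a Lorentz scalar on $g$. Tensorial (Lorentz-violating) corrections are forbidden by the absence of shared index structure.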

3. Radiative stability

Because $\chi(x)$ is external and nondynamical, renormalization acts only on the visible and informational propagators independently. Counterterms must respect each metric’s covariance:

$$\delta\mathcal{L}_{\text{ct}} = \sqrt{-g}\, F_V\big(R[g], \psi\big) + \sqrt{-h}\, F_I\big(R[h], \Phi\big),$$

with $F_V$ and $F_I$ scalar functionals and no mixed curvature contractions possible. Hence the two sectors remain radiatively sequestered: loop corrections renormalize intra-sector couplings but cannot transmit Lorentz violation across metrics.

4. Comparison with Lorentz-violating EFTs

In Standard-Model Extension (SME) frameworks, Lorentz-breaking background tensors such as $c_{\mu\nu}$ or $(k_F)_{\kappa\lambda\mu\nu}$ appear directly in kinetic terms and survive renormalization. Here, no such background exists in the $g$-sector: all mixed influence passes through the scalar gate, preserving full Lorentz symmetry of the $g$-sector to all orders in perturbation theory.

5. Summary

The combination of metric-sector factorization, scalar-gate mediation, and loop suppression by the domain-averaged gate factor $\langle\chi\rangle_D$ ensures that radiative corrections cannot reintroduce Lorentz-violating operators in the visible sector. This auxiliary-metric decoupling provides a natural explanation for the stability of radiative sequestering referenced in the main text.

Appendix G. Research Pathway (Extended)

This work did not begin with a ready-made hypothesis but with a sequence of questions about the foundations of physics. Each question arose from dissatisfaction with conventional explanations and the drive to reconcile apparently conflicting principles. What follows traces how these questions accumulated, each leading to the next, and how they ultimately coalesced into the framework presented in this paper.

Relativity and the Speed Limit

The journey began with the most basic and well-tested constraint in modern physics: the universal speed limit of relativity. Special relativity asserts that the speed of light, c, is the maximum velocity at which causal influence can propagate. The question arose: why is c the limit, and what mechanism enforces this boundary?

To answer this, one must revisit the invariance of the spacetime interval and the structure of Lorentz transformations. The line element,

$$ds^2 = -c^2\, dt^2 + dx^2 + dy^2 + dz^2 ,$$

remains invariant across inertial frames, ensuring a fixed causal structure. The classification of intervals as timelike, lightlike, or spacelike delineates which events can influence one another. Timelike intervals allow causal connections, lightlike intervals correspond to propagation at c, and spacelike intervals forbid causal influence.
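Invariance of the interval under a boost can be checked directly. This is a minimal Python sketch in units with $c = 1$ and signature $(-,+,+,+)$; `interval`, `boost_x`, and `classify` are illustrative helper names.

```python
import math


def interval(dt: float, dx: float, dy: float = 0.0, dz: float = 0.0) -> float:
    """s^2 = -dt^2 + dx^2 + dy^2 + dz^2 in units with c = 1, signature (-,+,+,+)."""
    return -dt * dt + dx * dx + dy * dy + dz * dz


def boost_x(dt: float, dx: float, v: float) -> tuple[float, float]:
    """The same separation seen from a frame moving at speed v (|v| < 1) along x."""
    if abs(v) >= 1.0:
        raise ValueError("boost speed must satisfy |v| < c")
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (dt - v * dx), gamma * (dx - v * dt)


def classify(dt: float, dx: float, dy: float = 0.0, dz: float = 0.0, tol: float = 1e-12) -> str:
    """Label a separation timelike, lightlike, or spacelike from the sign of s^2."""
    s2 = interval(dt, dx, dy, dz)
    if abs(s2) <= tol * (dt * dt + dx * dx + dy * dy + dz * dz):
        return "lightlike"
    return "timelike" if s2 < 0 else "spacelike"


dt_b, dx_b = boost_x(2.0, 1.0, 0.6)
print(interval(2.0, 1.0), interval(dt_b, dx_b))  # both -3 up to rounding
```

Boosting the spacelike pair $(\Delta t, \Delta x) = (1, 2)$ with $v = 0.6$ gives $\Delta t' = -0.25$: the time order of spacelike-separated events depends on the frame while $s^2$ does not, which is why only timelike and lightlike separations can carry causal influence.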

Energy–momentum relations deepen the picture. The relativistic energy expression,

$$E = \gamma m c^2 = \frac{m c^2}{\sqrt{1 - v^2/c^2}},$$

shows that as velocity approaches c, energy diverges without bound. No finite energy can accelerate a massive particle to c, much less beyond it. Thus, relativity does not merely assume a speed limit; it enforces one through the geometry of spacetime and the divergence of physical quantities. At this stage, the principle seemed airtight: no influence can surpass c.
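The divergence can be tabulated from $W = (\gamma - 1)mc^2$, the work needed to bring a mass from rest to speed $v$. A short Python sketch, in units of the rest energy $mc^2$; the function names are illustrative.

```python
import math


def lorentz_gamma(beta: float) -> float:
    """gamma = 1 / sqrt(1 - beta^2) for speed v = beta c, defined only for 0 <= beta < 1."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("a massive particle requires 0 <= v/c < 1")
    return 1.0 / math.sqrt(1.0 - beta * beta)


def work_from_rest(beta: float) -> float:
    """Work to reach speed beta c from rest, in units of m c^2: W = (gamma - 1) m c^2."""
    return lorentz_gamma(beta) - 1.0


for beta in (0.9, 0.99, 0.999, 0.9999):
    print(f"v = {beta} c  ->  W = {work_from_rest(beta):.1f} m c^2")
```

Each additional "9" in $v/c$ multiplies the required work by roughly $\sqrt{10}$, and no finite value reaches $v = c$.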

Entanglement and the Challenge to Causality

The next question challenged this neat picture: what does quantum entanglement mean for the speed limit? Entanglement correlations appear to extend instantaneously across spacelike separations. Two particles, once entangled, retain correlations in measurement outcomes regardless of the distance between them.

Einstein, Podolsky, and Rosen (EPR) framed this in 1935 as evidence that quantum mechanics might be incomplete. Schrödinger, in response, coined the term Verschränkung (entanglement) to emphasize that the phenomenon was not an incidental feature but a central one. Bell’s theorem decades later sharpened the paradox: if local realism holds, then measurement correlations must satisfy inequalities such as the CHSH bound, $|S| \le 2$. Quantum mechanics predicts violations up to $2\sqrt{2}$ (Tsirelson’s bound), and Sagawa–Ueda bounds later clarified the thermodynamic limits on measurement and feedback.
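The CHSH argument can be checked by brute force. This Python sketch assumes the textbook singlet correlation $E(a,b) = -\cos(a-b)$ for spin measurements along angles $a$ and $b$; it enumerates every deterministic local assignment of $\pm1$ outcomes (probabilistic mixtures of these cannot do better) and evaluates the quantum value at the standard optimal angles.

```python
import math
from itertools import product


def singlet_correlation(a: float, b: float) -> float:
    """Quantum prediction E(a, b) = -cos(a - b) for the singlet state."""
    return -math.cos(a - b)


def chsh_value(E, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def max_local_chsh() -> int:
    """Largest |S| over deterministic local assignments A, A', B, B' in {-1, +1}."""
    return max(abs(A * B - A * B2 + A2 * B + A2 * B2)
               for A, A2, B, B2 in product((-1, 1), repeat=4))


s_quantum = chsh_value(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(max_local_chsh(), abs(s_quantum))
```

The local maximum is 2, while the singlet reaches $2\sqrt{2}$, Tsirelson’s bound.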

At this stage, the natural question was: does this mean information is propagating faster than light? Standard explanations respond with the no-signaling theorem: although entanglement produces correlations, no controllable signal can be transmitted superluminally. This answer is formally correct, but it feels like a patch. It reassures that relativity is not violated, yet it does not explain how coherence is maintained across spacelike separations.
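The no-signaling statement can be made concrete: whatever basis Alice measures in, Bob’s state averaged over her unknown outcomes is unchanged. This is a minimal pure-Python sketch for the singlet, restricted (as a simplifying assumption) to real measurement bases at angle $\theta$; the function names are illustrative.

```python
import math

R2 = 1.0 / math.sqrt(2.0)
# Singlet (|01> - |10>)/sqrt(2) as an amplitude table psi[alice_bit][bob_bit]
SINGLET = [[0.0, R2], [-R2, 0.0]]


def alice_basis(theta: float):
    """Real orthonormal measurement basis for Alice at angle theta."""
    return [(math.cos(theta), math.sin(theta)), (-math.sin(theta), math.cos(theta))]


def bob_average_state(psi, theta: float):
    """Bob's density matrix after Alice measures at angle theta, averaged over her outcomes."""
    rho = [[0.0, 0.0], [0.0, 0.0]]
    for k0, k1 in alice_basis(theta):
        # Unnormalized conditional state of Bob for this outcome; its norm^2 is the probability
        v = [k0 * psi[0][b] + k1 * psi[1][b] for b in range(2)]
        for i in range(2):
            for j in range(2):
                rho[i][j] += v[i] * v[j]
    return rho


for theta in (0.0, 0.4, math.pi / 4, 1.3):
    print(theta, bob_average_state(SINGLET, theta))
```

Each of Alice’s outcomes leaves Bob with a different conditional state, which is the correlation, but without the classical record of her result Bob always holds the maximally mixed state $I/2$.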

Dissatisfaction with No-Signaling

The no-signaling theorem resolves the minimal contradiction, but it fails to address the deeper puzzle. If entanglement were a mere statistical overlay, it should not serve as a functional resource. Yet in practice, it does. This raised the next question: is the no-signaling condition sufficient, or does it obscure something more fundamental?

Here dissatisfaction became a pivot point. The no-signaling theorem ensures compatibility, but not comprehension. It tells us what cannot happen, but not why entanglement enables the extraordinary protocols at the heart of quantum information science.

From Paradox to Practice

The focus then shifted from abstract paradoxes to operational realities: what do real-world protocols reveal about the nature of entanglement?

In quantum teleportation, an unknown quantum state is reconstructed at a distant location using a shared entangled pair and a classical channel. The entanglement is indispensable; without it, teleportation is impossible.
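The protocol can be traced amplitude by amplitude. This pure-Python sketch projects Alice’s two qubits onto the Bell basis and applies the matching Pauli correction on Bob’s side; the data layout and function names are illustrative choices, not a reference implementation.

```python
import math

R2 = 1.0 / math.sqrt(2.0)

# Bell states on (q0, q1) as real 2x2 amplitude tables, paired with Bob's Pauli correction
BELL = {
    "Phi+": ([[R2, 0.0], [0.0, R2]], "I"),
    "Phi-": ([[R2, 0.0], [0.0, -R2]], "Z"),
    "Psi+": ([[0.0, R2], [R2, 0.0]], "X"),
    "Psi-": ([[0.0, R2], [-R2, 0.0]], "ZX"),
}


def apply_pauli(label: str, state):
    """Apply a Pauli string to a qubit (a, b); "ZX" means X first, then Z."""
    a, b = state
    for p in reversed(label):
        if p == "X":
            a, b = b, a
        elif p == "Z":
            b = -b
    return a, b


def teleport(alpha: complex, beta: complex):
    """Return {outcome: (probability, Bob's corrected qubit)} for input alpha|0> + beta|1>."""
    amp = (alpha, beta)
    # psi[q0][q1][q2]: input on q0, times the shared pair (|00> + |11>)/sqrt(2) on (q1, q2)
    psi = [[[amp[q0] * (R2 if q1 == q2 else 0.0) for q2 in range(2)]
            for q1 in range(2)] for q0 in range(2)]
    results = {}
    for name, (bell, fix) in BELL.items():
        # Project (q0, q1) onto this Bell state (real amplitudes, so no conjugation needed)
        bob = [sum(bell[a][b] * psi[a][b][c] for a in range(2) for b in range(2))
               for c in range(2)]
        prob = abs(bob[0]) ** 2 + abs(bob[1]) ** 2
        norm = math.sqrt(prob)
        results[name] = (prob, apply_pauli(fix, (bob[0] / norm, bob[1] / norm)))
    return results


for outcome, (p, state) in teleport(0.6, 0.8j).items():
    print(outcome, round(p, 3), state)
```

Every outcome occurs with probability 1/4 and, after correction, Bob holds the input state exactly; without the two classical bits naming the outcome he cannot choose the correction, which is why teleportation needs the classical channel.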

In superdense coding, sending one qubit of a shared entangled pair transmits two classical bits, so entanglement enhances the channel capacity [48, 49] beyond classical limits.
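A matching sketch for superdense coding: Alice encodes two bits by applying $I$, $X$, $Z$, or $ZX$ to her half of $|\Phi^+\rangle$ and sends that single qubit; Bob’s Bell measurement then identifies which of four orthogonal states arrived. The bit-to-Pauli table is one conventional choice, assumed here, and the helper names are illustrative.

```python
import math

R2 = 1.0 / math.sqrt(2.0)
PHI_PLUS = [[R2, 0.0], [0.0, R2]]  # shared pair, psi[alice_bit][bob_bit]

# Bob's Bell-measurement basis on (Alice's qubit, Bob's qubit)
BELL = {
    "Phi+": [[R2, 0.0], [0.0, R2]],
    "Phi-": [[R2, 0.0], [0.0, -R2]],
    "Psi+": [[0.0, R2], [R2, 0.0]],
    "Psi-": [[0.0, R2], [-R2, 0.0]],
}
ENCODE = {(0, 0): "I", (0, 1): "X", (1, 0): "Z", (1, 1): "ZX"}  # assumed convention
DECODE = {"Phi+": (0, 0), "Psi+": (0, 1), "Phi-": (1, 0), "Psi-": (1, 1)}


def apply_on_alice(label: str, psi):
    """Apply a Pauli string to Alice's qubit (the row index); "ZX" means X first, then Z."""
    rows = [list(psi[0]), list(psi[1])]
    for p in reversed(label):
        if p == "X":
            rows = [rows[1], rows[0]]
        elif p == "Z":
            rows[1] = [-x for x in rows[1]]
    return rows


def send(bits):
    """Alice encodes two bits on her one qubit; Bob decodes them with a Bell measurement."""
    state = apply_on_alice(ENCODE[bits], PHI_PLUS)
    overlaps = {name: sum(bell[i][j] * state[i][j] for i in range(2) for j in range(2))
                for name, bell in BELL.items()}
    # The encoded state coincides with one Bell state (|overlap| = 1); the others give 0
    return DECODE[max(overlaps, key=lambda name: abs(overlaps[name]))]


for bits in ENCODE:
    print(bits, "->", send(bits))
```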

In quantum key distribution (QKD), entanglement and the violation of Bell inequalities certify secure keys in a way that no classical protocol can.

Satellite experiments have now extended entanglement distribution to distances exceeding a thousand kilometers, demonstrating coherence across global scales. Entanglement is not only a theoretical construct—it is a resource engineered, distributed, and consumed in laboratories and communication networks.

This led to the next, sharper question: if entanglement acts like a resource, is information itself a physical entity?

Information as a Resource

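Entropy and mutual information [50] provide the formalism for treating information quantitatively. The von Neumann entropy,

$$S(\rho) = -\operatorname{Tr}\left(\rho \log \rho\right),$$

measures uncertainty, while mutual information,

$$I(A\!:\!B) = S(\rho_A) + S(\rho_B) - S(\rho_{AB}),$$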

quantifies correlations between subsystems. These are not abstract bookkeeping tools—they are measurable, conserved quantities. In holography, the Ryu–Takayanagi relation connects entanglement entropy to geometric surfaces in spacetime. Van Raamsdonk argued that spacetime connectivity itself arises from entanglement structure.
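For a pure two-qubit state both quantities follow from the $2\times2$ reduced density matrix, since $S(\rho_{AB}) = 0$ and $S(\rho_A) = S(\rho_B)$. This is a minimal sketch under that pure-state assumption, with entropies in bits; mixed states would need a full eigendecomposition (for example with `numpy.linalg.eigvalsh`). The helper names are illustrative.

```python
import math


def entropy_bits(eigs) -> float:
    """-sum p log2 p over a spectrum, skipping (numerically) zero eigenvalues."""
    return -sum(p * math.log2(p) for p in eigs if p > 1e-15)


def eig2_hermitian(rho):
    """Closed-form eigenvalues of a 2x2 Hermitian matrix [[a, b], [b*, d]]."""
    (a, b), (_, d) = rho
    mean = (a.real + d.real) / 2.0
    radius = math.sqrt(((a.real - d.real) / 2.0) ** 2 + abs(b) ** 2)
    return [mean + radius, mean - radius]


def reduced_a(psi):
    """rho_A = Tr_B |psi><psi| for a two-qubit pure state given as psi[a][b]."""
    return [[sum(psi[i][k] * psi[j][k].conjugate() for k in range(2)) for j in range(2)]
            for i in range(2)]


def mutual_information_pure(psi) -> float:
    """I(A:B) = S(A) + S(B) - S(AB); for a pure state S(AB) = 0 and S(B) = S(A)."""
    return 2.0 * entropy_bits(eig2_hermitian(reduced_a(psi)))


R2 = 1.0 / math.sqrt(2.0)
print(mutual_information_pure([[R2, 0.0], [0.0, R2]]))   # Bell state |Phi+>
print(mutual_information_pure([[1.0, 0.0], [0.0, 0.0]]))  # product state |00>
```

A Bell state gives $I(A\!:\!B) = 2$ bits, twice the maximum a classically correlated pair of bits can reach, and a product state gives 0.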