Submitted: 18 October 2025

Posted: 20 October 2025

Abstract

Keywords:

1. Introduction

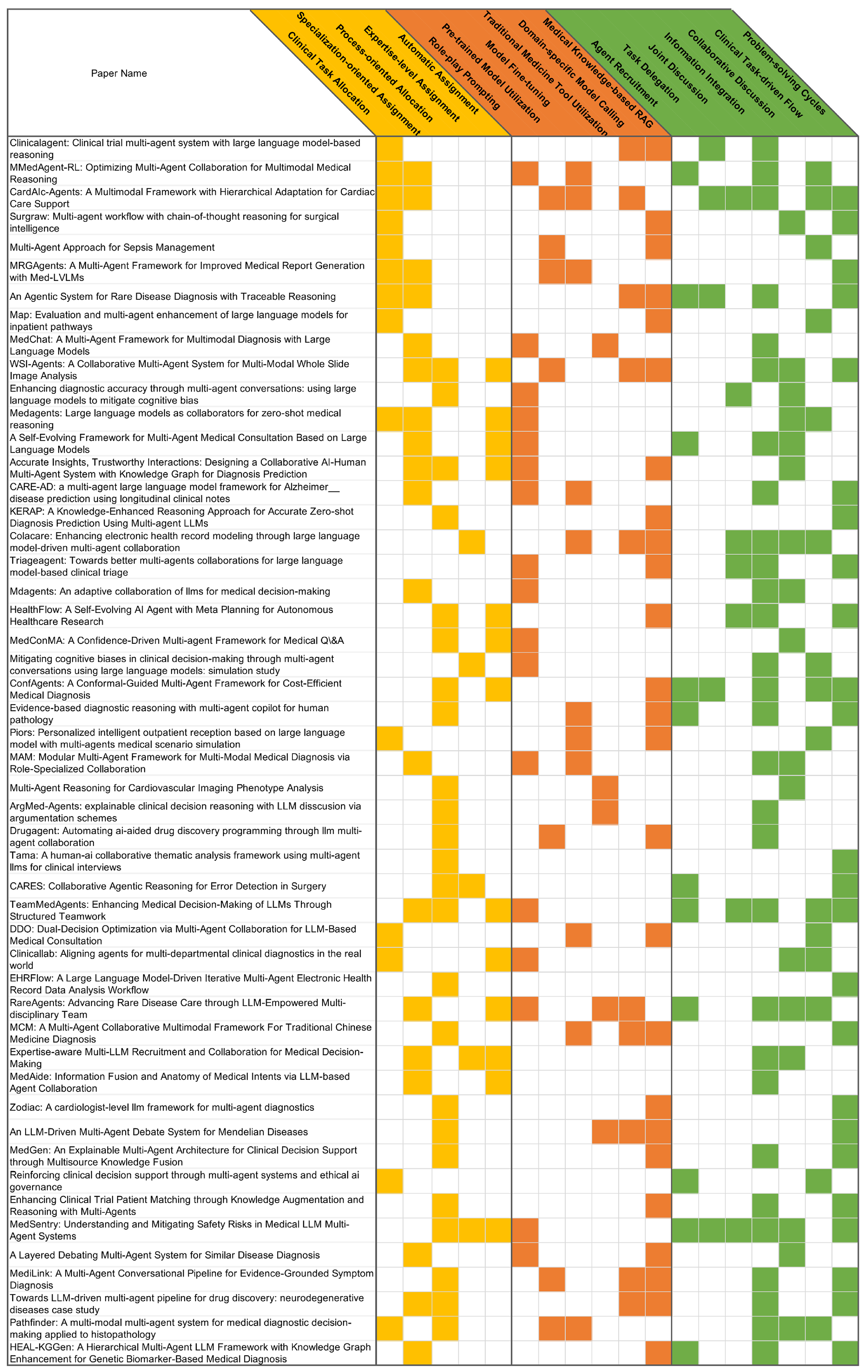

2. Taxonomy of Medical Multi-Agent Systems

2.1. Team Composition

2.2. Medical Knowledge Augmentation

2.2.1. Agent-Intrinsic

2.2.2. Externally-Assisted

2.3. Agent Interaction

2.3.1. Hierarchical Coordination

2.3.2. Peer Collaboration

3. Discussion

3.1. Design and Evaluation of Agent Profiles

3.2. Self-Evolving Agentic Systems

3.3. Human Intervention

3.4. Multimodal Integration

3.5. Interaction Patterns

3.6. Medical Scenarios

4. Conclusion

Appendix A. Appendix

Appendix A.1. Acknowledgments of the Use of LLM

Appendix A.2. Ethics Statement

Appendix A.3. Reproducibility Statement

References

- Chen, X.; Yi, H.; You, M.; Liu, W.; Wang, L.; Li, H.; Zhang, X.; Guo, Y.; Fan, L.; Chen, G.; et al. Enhancing diagnostic capability with multi-agents conversational large language models. npj Digital Medicine 2025, 8, 159.

- Wang, Z.; Zhu, Y.; Zhao, H.; Zheng, X.; Sui, D.; Wang, T.; Tang, W.; Wang, Y.; Harrison, E.; Pan, C.; et al. Colacare: Enhancing electronic health record modeling through large language model-driven multi-agent collaboration. In Proceedings of the ACM on Web Conference 2025, 2025.

- Lu, M.; Ho, B.; Ren, D.; Wang, X. Triageagent: Towards better multi-agents collaborations for large language model-based clinical triage. In Findings of the Association for Computational Linguistics: EMNLP 2024, 2024.

- Yue, L.; Xing, S.; Chen, J.; Fu, T. reasoning. In Proceedings of the 15th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, 2024.

- Kim, Y.; Park, C.; Jeong, H.; Chan, Y.S.; Xu, X.; McDuff, D.; Lee, H.; Ghassemi, M.; Breazeal, C.; Park, H.W. Mdagents: An adaptive collaboration of llms for medical decision-making. Advances in Neural Information Processing Systems 2024, 37, 79410–79452.

- Xia, P.; Wang, J.; Peng, Y.; Zeng, K.; Wu, X.; Tang, X.; Zhu, H.; Li, Y.; Liu, S.; Lu, Y.; et al. MMedAgent-RL: Optimizing Multi-Agent Collaboration for Multimodal Medical Reasoning. arXiv preprint arXiv:2506.00555, 2025.

- Wang, R.; Chen, Y.; Zhang, W.; Si, J.; Guan, H.; Peng, X.; Lu, W. MedConMA: A Confidence-Driven Multi-agent Framework for Medical Q&A. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining. Springer; 2025; pp. 421–433.

- Li, X.; Wang, S.; Zeng, S.; Wu, Y.; Yang, Y. A survey on LLM-based multi-agent systems: workflow, infrastructure, and challenges. Vicinagearth 2024, 1, 9.

- Guo, T.; Chen, X.; Wang, Y.; Chang, R.; Pei, S.; Chawla, N.V.; Wiest, O.; Zhang, X. Large language model based multi-agents: A survey of progress and challenges. arXiv preprint arXiv:2402.01680, 2024.

- Yao, Z.; Yu, H. A survey on llm-based multi-agent ai hospital 2025.

- Alshehri, A.; Alshahrani, F.; Shah, H. A Precise Survey on Multi-agent in Medical Domains. International Journal of Advanced Computer Science and Applications 2023, 14.

- Wang, W.; Ma, Z.; Wang, Z.; Wu, C.; Ji, J.; Chen, W.; Li, X.; Yuan, Y. A survey of llm-based agents in medicine: How far are we from baymax? arXiv preprint arXiv:2502.11211, 2025.

- Wang, Q.; Wang, T.; Tang, Z.; Li, Q.; Chen, N.; Liang, J.; He, B. MegaAgent: A large-scale autonomous LLM-based multi-agent system without predefined SOPs. In Findings of the Association for Computational Linguistics: ACL 2025, 2025.

- Zhuang, Y.; Jiang, W.; Zhang, J.; Yang, Z.; Zhou, J.T.; Zhang, C. Learning to Be A Doctor: Searching for Effective Medical Agent Architectures. arXiv preprint arXiv:2504.11301, 2025.

- Almansoori, M.; Kumar, K.; Cholakkal, H. Self-Evolving Multi-Agent Simulations for Realistic Clinical Interactions. arXiv preprint arXiv:2503.22678, 2025.

- Fan, Y.; Xue, K.; Li, Z.; Zhang, X.; Ruan, T. An LLM-based Framework for Biomedical Terminology Normalization in Social Media via Multi-Agent Collaboration. In Proceedings of the 31st International Conference on Computational Linguistics, 2025.

- Iapascurta, V.; Fiodorov, I.; Belii, A.; Bostan, V. Multi-Agent Approach for Sepsis Management. Healthcare Informatics Research 2025, 31, 209–214.

- Bao, Z.; Liu, Q.; Guo, Y.; Ye, Z.; Shen, J.; Xie, S.; Peng, J.; Huang, X.; Wei, Z. Piors: Personalized intelligent outpatient reception based on large language model with multi-agents medical scenario simulation. arXiv preprint arXiv:2411.13902, 2024.

- Zhao, W.; Wu, C.; Fan, Y.; Zhang, X.; Qiu, P.; Sun, Y.; Zhou, X.; Wang, Y.; Zhang, Y.; Yu, Y.; et al. An Agentic System for Rare Disease Diagnosis with Traceable Reasoning. arXiv preprint arXiv:2506.20430, 2025.

- Wang, P.; Ye, S.; Naseem, U.; Kim, J. MRGAgents: A Multi-Agent Framework for Improved Medical Report Generation with Med-LVLMs. arXiv preprint arXiv:2505.18530, 2025.

- Jia, Z.; Jia, M.; Duan, J.; Wang, J. DDO: Dual-Decision Optimization via Multi-Agent Collaboration for LLM-Based Medical Consultation. arXiv preprint arXiv:2505.18630, 2025.

- Zhang, Y.; Bunting, K.V.; Champsi, A.; Wang, X.; Lu, W.; Thorley, A.; Hothi, S.S.; Qiu, Z.; Kotecha, D.; Duan, J. CardAIc-Agents: A Multimodal Framework with Hierarchical Adaptation for Cardiac Care Support. arXiv preprint arXiv:2508.13256, 2025.

- Tang, X.; Zou, A.; Zhang, Z.; Li, Z.; Zhao, Y.; Zhang, X.; Cohan, A.; Gerstein, M. Medagents: Large language models as collaborators for zero-shot medical reasoning. arXiv preprint arXiv:2311.10537, 2023.

- Yan, W.; Liu, H.; Wu, T.; Chen, Q.; Wang, W.; Chai, H.; Wang, J.; Zhao, W.; Zhang, Y.; Zhang, R.; et al. Clinicallab: Aligning agents for multi-departmental clinical diagnostics in the real world. arXiv preprint arXiv:2406.13890, 2024.

- Low, C.H.; Wang, Z.; Zhang, T.; Zeng, Z.; Zhuo, Z.; Mazomenos, E.B.; Jin, Y. Surgraw: Multi-agent workflow with chain-of-thought reasoning for surgical intelligence. arXiv preprint arXiv:2503.10265, 2025.

- Chen, Z.; Peng, Z.; Liang, X.; Wang, C.; Liang, P.; Zeng, L.; Ju, M.; Yuan, Y. Map: Evaluation and multi-agent enhancement of large language models for inpatient pathways. arXiv preprint arXiv:2503.13205, 2025.

- Chen, Y.J.; Albarqawi, A.; Chen, C.S. Reinforcing clinical decision support through multi-agent systems and ethical ai governance. arXiv preprint arXiv:2504.03699, 2025.

- Ghezloo, F.; Seyfioglu, M.S.; Soraki, R.; Ikezogwo, W.O.; Li, B.; Vivekanandan, T.; Elmore, J.G.; Krishna, R.; Shapiro, L. Pathfinder: A multi-modal multi-agent system for medical diagnostic decision-making applied to histopathology. arXiv preprint arXiv:2502.08916, 2025.

- Zhou, Y.; Song, L.; Shen, J. MAM: Modular Multi-Agent Framework for Multi-Modal Medical Diagnosis via Role-Specialized Collaboration. arXiv preprint arXiv:2506.19835, 2025.

- Li, R.; Wang, X.; Berlowitz, D.; Mez, J.; Lin, H.; Yu, H. CARE-AD: a multi-agent large language model framework for Alzheimer’s disease prediction using longitudinal clinical notes. npj Digital Medicine 2025, 8, 541.

- Chen, K.; Qi, J.; Huo, J.; Tian, P.; Meng, F.; Yang, X.; Gao, Y. A Self-Evolving Framework for Multi-Agent Medical Consultation Based on Large Language Models. In Proceedings of the ICASSP 2025-2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE; 2025; pp. 1–5.

- Mishra, P.P.; Arvan, M.; Zalake, M. TeamMedAgents: Enhancing Medical Decision-Making of LLMs Through Structured Teamwork. arXiv preprint arXiv:2508.08115, 2025.

- Li, H.; Cheng, X.; Zhang, X. Accurate Insights, Trustworthy Interactions: Designing a Collaborative AI-Human Multi-Agent System with Knowledge Graph for Diagnosis Prediction. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, 2025.

- Chen, X.; Jin, Y.; Mao, X.; Wang, L.; Zhang, S.; Chen, T. RareAgents: Advancing Rare Disease Care through LLM-Empowered Multi-disciplinary Team. arXiv preprint arXiv:2412.12475, 2024.

- Bao, L.; Peng, Z.; Zhou, X.; Cong, R.; Zhang, J.; Yuan, Y. Expertise-aware Multi-LLM Recruitment and Collaboration for Medical Decision-Making. arXiv preprint arXiv:2508.13754, 2025.

- Yang, D.; Wei, J.; Li, M.; Liu, J.; Liu, L.; Hu, M.; He, J.; Ju, Y.; Zhou, W.; Liu, Y.; et al. MedAide: Information Fusion and Anatomy of Medical Intents via LLM-based Agent Collaboration. Information Fusion, 1037.

- Liu, P.R.; Bansal, S.; Dinh, J.; Pawar, A.; Satishkumar, R.; Desai, S.; Gupta, N.; Wang, X.; Hu, S. MedChat: A Multi-Agent Framework for Multimodal Diagnosis with Large Language Models. arXiv preprint arXiv:2506.07400, 2025.

- Lyu, X.; Liang, Y.; Chen, W.; Ding, M.; Yang, J.; Huang, G.; Zhang, D.; He, X.; Shen, L. WSI-Agents: A Collaborative Multi-Agent System for Multi-Modal Whole Slide Image Analysis. arXiv preprint arXiv:2507.14680, 2025.

- Zhao, Y.; Wang, H.; Zheng, Y.; Wu, X. A Layered Debating Multi-Agent System for Similar Disease Diagnosis. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers), 2025.

- Solovev, G.V.; Zhidkovskaya, A.B.; Orlova, A.; Vepreva, A.; Ilya, T.; Golovinskii, R.; Gubina, N.; Chistiakov, D.; Aliev, T.A.; Poddiakov, I.; et al. Towards LLM-driven multi-agent pipeline for drug discovery: neurodegenerative diseases case study. In Proceedings of the 2nd AI4Research Workshop: Towards a Knowledge-grounded Scientific Research Lifecycle; 2024.

- Zuo, K.; Zhong, Z.; Huang, P.; Tang, S.; Chen, Y.; Jiang, Y. HEAL-KGGen: A Hierarchical Multi-Agent LLM Framework with Knowledge Graph Enhancement for Genetic Biomarker-Based Medical Diagnosis. bioRxiv, 2025.

- Zhang, W.; Qiao, M.; Zang, C.; Niederer, S.; Matthews, P.M.; Bai, W.; Kainz, B. Multi-Agent Reasoning for Cardiovascular Imaging Phenotype Analysis. arXiv preprint arXiv:2507.03460, 2025.

- Hong, S.; Xiao, L.; Zhang, X.; Chen, J. ArgMed-Agents: explainable clinical decision reasoning with LLM disscusion via argumentation schemes. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). IEEE; 2024; pp. 5486–5493.

- Liu, S.; Lu, Y.; Chen, S.; Hu, X.; Zhao, J.; Lu, Y.; Zhao, Y. Drugagent: Automating ai-aided drug discovery programming through llm multi-agent collaboration. arXiv preprint arXiv:2411.15692, 2024.

- Ke, Y.H.; Yang, R.; Lie, S.A.; Lim, T.X.Y.; Abdullah, H.R.; Ting, D.S.W.; Liu, N. Enhancing diagnostic accuracy through multi-agent conversations: using large language models to mitigate cognitive bias. arXiv preprint arXiv:2401.14589, 2024.

- Xu, H.; Yi, S.; Lim, T.; Xu, J.; Well, A.; Mery, C.; Zhang, A.; Zhang, Y.; Ji, H.; Pingali, K.; et al. Tama: A human-ai collaborative thematic analysis framework using multi-agent llms for clinical interviews. arXiv preprint arXiv:2503.20666, 2025.

- Low, C.H.; Zhuo, Z.; Wang, Z.; Xu, J.; Liu, H.; Sirajudeen, N.; Boal, M.; Edwards, P.J.; Stoyanov, D.; Francis, N.; et al. CARES: Collaborative Agentic Reasoning for Error Detection in Surgery. arXiv preprint arXiv:2508.08764, 2025.

- Zhao, H.; Zhu, Y.; Wang, Z.; Wang, Y.; Gao, J.; Ma, L. ConfAgents: A Conformal-Guided Multi-Agent Framework for Cost-Efficient Medical Diagnosis. arXiv preprint arXiv:2508.04915, 2025.

- Wu, H.; Zhu, Y.; Wang, Z.; Zheng, X.; Wang, L.; Tang, W.; Wang, Y.; Pan, C.; Harrison, E.M.; Gao, J.; et al. EHRFlow: A Large Language Model-Driven Iterative Multi-Agent Electronic Health Record Data Analysis Workflow. In Proceedings of the KDD’24 Workshop: Artificial Intelligence and Data Science for Healthcare: Bridging Data-Centric AI and People-Centric Healthcare; 2024.

- Liang, C.; Ma, Z.; Wang, W.; Ding, M.; Cao, Z.; Chen, M. MCM: A Multi-Agent Collaborative Multimodal Framework For Traditional Chinese Medicine Diagnosis. In Proceedings of the 2025 IEEE International Conference on Image Processing (ICIP). IEEE; 2025; pp. 1438–1443.

- Zhou, Y.; Zhang, P.; Song, M.; Zheng, A.; Lu, Y.; Liu, Z.; Chen, Y.; Xi, Z. Zodiac: A cardiologist-level llm framework for multi-agent diagnostics. arXiv preprint arXiv:2410.02026, 2024.

- Zhou, X.; Ren, Y.; Zhao, Q.; Huang, D.; Wang, X.; Zhao, T.; Zhu, Z.; He, W.; Li, S.; Xu, Y.; et al. An LLM-Driven Multi-Agent Debate System for Mendelian Diseases. arXiv preprint arXiv:2504.07881, 2025.

- Xie, Y.; Cui, H.; Zhang, Z.; Lu, J.; Shu, K.; Nahab, F.; Hu, X.; Yang, C. KERAP: A Knowledge-Enhanced Reasoning Approach for Accurate Zero-shot Diagnosis Prediction Using Multi-agent LLMs. arXiv preprint arXiv:2507.02773, 2025.

- Liu, Z.; Xiao, L.; Zhu, R.; Yang, H.; He, M. MedGen: An Explainable Multi-Agent Architecture for Clinical Decision Support through Multisource Knowledge Fusion. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). IEEE; 2024; pp. 6474–6481.

- Shi, H.; Zhang, J.; Zhang, K. Enhancing Clinical Trial Patient Matching through Knowledge Augmentation and Reasoning with Multi-Agents. arXiv preprint arXiv:2411.14637, 2024.

- Chen, K.; Zhen, T.; Wang, H.; Liu, K.; Li, X.; Huo, J.; Yang, T.; Xu, J.; Dong, W.; Gao, Y. MedSentry: Understanding and Mitigating Safety Risks in Medical LLM Multi-Agent Systems. arXiv preprint arXiv:2505.20824, 2025.

- Mahajan, B.; Ji, K. MediLink: A Multi-Agent Conversational Pipeline for Evidence-Grounded Symptom Diagnosis 2025.

- Chen, C.; Weishaupt, L.L.; Williamson, D.F.; Chen, R.J.; Ding, T.; Chen, B.; Vaidya, A.; Le, L.P.; Jaume, G.; Lu, M.Y.; et al. Evidence-based diagnostic reasoning with multi-agent copilot for human pathology. arXiv preprint arXiv:2506.20964, 2025.

- Zhu, Y.; Qi, Y.; Wang, Z.; Gu, L.; Sui, D.; Hu, H.; Zhang, X.; He, Z.; Ma, L.; Yu, L. HealthFlow: A Self-Evolving AI Agent with Meta Planning for Autonomous Healthcare Research. arXiv preprint arXiv:2508.02621, 2025.

- Ke, Y.; Yang, R.; Lie, S.A.; Lim, T.X.Y.; Ning, Y.; Li, I.; Abdullah, H.R.; Ting, D.S.W.; Liu, N. Mitigating cognitive biases in clinical decision-making through multi-agent conversations using large language models: simulation study. Journal of Medical Internet Research 2024, 26, e59439.

- Liang, Y.; Lyu, X.; Chen, W.; Ding, M.; Zhang, J.; He, X.; Wu, S.; Xing, X.; Yang, S.; Wang, X.; et al. WSI-LLaVA: A multimodal large language model for whole slide image. arXiv preprint arXiv:2412.02141, 2024.

- Seyfioglu, M.S.; Ikezogwo, W.O.; Ghezloo, F.; Krishna, R.; Shapiro, L. videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024.

- Sellergren, A.; Kazemzadeh, S.; Jaroensri, T.; Kiraly, A.; Traverse, M.; Kohlberger, T.; Xu, S.; Jamil, F.; Hughes, C.; Lau, C.; et al. Medgemma technical report. arXiv preprint arXiv:2507.05201, 2025.

- Shao, Z.; Wang, P.; Zhu, Q.; Xu, R.; Song, J.; Bi, X.; Zhang, H.; Zhang, M.; Li, Y.; Wu, Y.; et al. Deepseekmath: Pushing the limits of mathematical reasoning in open language models. arXiv preprint arXiv:2402.03300, 2024.

- Luo, Y.; Zhang, J.; Fan, S.; Yang, K.; Wu, Y.; Qiao, M.; Nie, Z. Biomedgpt: Open multimodal generative pre-trained transformer for biomedicine. arXiv preprint arXiv:2308.09442, 2023.

- Demner-Fushman, D.; Kohli, M.D.; Rosenman, M.B.; Shooshan, S.E.; Rodriguez, L.; Antani, S.; Thoma, G.R.; McDonald, C.J. Preparing a collection of radiology examinations for distribution and retrieval. Journal of the American Medical Informatics Association 2015, 23, 304–310.

- Johnson, A.E.; Pollard, T.J.; Greenbaum, N.R.; Lungren, M.P.; Deng, C.y.; Peng, Y.; Lu, Z.; Mark, R.G.; Berkowitz, S.J.; Horng, S. MIMIC-CXR-JPG, a large publicly available database of labeled chest radiographs. arXiv preprint arXiv:1901.07042, 2019.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Computing Surveys 2023, 55, 1–38.

- Smedley, D.; Jacobsen, J.O.; Jäger, M.; Köhler, S.; Holtgrewe, M.; Schubach, M.; Siragusa, E.; Zemojtel, T.; Buske, O.J.; Washington, N.L.; et al. Next-generation diagnostics and disease-gene discovery with the Exomiser. Nature Protocols 2015, 10, 2004–2015.

- Mao, X.; Huang, Y.; Jin, Y.; Wang, L.; Chen, X.; Liu, H.; Yang, X.; Xu, H.; Luan, X.; Xiao, Y.; et al. A phenotype-based AI pipeline outperforms human experts in differentially diagnosing rare diseases using EHRs. npj Digital Medicine 2025, 8, 68.

- Shen, C.; Zhang, W.; Li, K.; Huang, E.; Bi, H.; Fan, A.; Shen, Y.; Dong, H.; Zhang, J.; Shao, Y.; et al. FEAT: A Multi-Agent Forensic AI System with Domain-Adapted Large Language Model for Automated Cause-of-Death Analysis. arXiv preprint arXiv:2508.07950, 2025.

- Sheng, R.; Shi, C.; Lotfi, S.; Liu, S.; Perer, A.; Qu, H.; Cheng, F. Design Patterns of Human-AI Interfaces in Healthcare. arXiv preprint arXiv:2507.12721, 2025.

- Sheng, R.; Wang, X.; Wang, J.; Jin, X.; Sheng, Z.; Xu, Z.; Rajendran, S.; Qu, H.; Wang, F. TrialCompass: Visual Analytics for Enhancing the Eligibility Criteria Design of Clinical Trials. arXiv preprint arXiv:2507.12298, 2025.

- Wang, X.; He, J.; Jin, Z.; Yang, M.; Wang, Y.; Qu, H. M2lens: Visualizing and explaining multimodal models for sentiment analysis. IEEE Transactions on Visualization and Computer Graphics 2021, 28, 802–812.

| Category | Subcategory | Sub-subcategory | Related Work |
|---|---|---|---|
| Team Composition | Clinical task allocation | | [4,6,17,18,19,20,21,22,23,24,25,26,27,28] |
| | Specialization-oriented assignment | | [5,6,19,20,22,23,29,30,31,32,33,34,35,36,37,38,39,40,41] |
| | Process-oriented allocation | | [7,28,32,33,38,40,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59] |
| | Expertise-level assignment | | [2,35,47,56,60] |
| | Automatic assignment | | [7,23,24,31,32,33,34,35,36,38,48,56,59] |
| Medical Knowledge | Agent-intrinsic | Role-play prompting | [3,5,6,7,23,24,29,30,31,32,33,34,37,39,45,56,60] |
| | | Pre-trained model utilization | [17,20,22,28,38,44,57] |
| | | Model fine-tuning | [2,6,18,20,21,22,28,29,30,50,58] |
| | Externally-assisted | Traditional medicine tool utilization | [34,37,42,43,52] |
| | | Domain-specific model calling | [2,4,19,22,34,38,40,50,52,57] |
| | | Medical knowledge-based RAG | [2,3,4,17,18,19,21,25,26,33,38,39,40,41,44,48,50,51,52,53,54,55,57,58,59] |
| Agent Interaction | Hierarchical Coordination | Agent recruitment | [6,19,27,31,32,34,41,47,48,56,58] |
| | | Task delegation | [4,19,22,48,56] |
| | | Joint discussion | [2,3,22,32,45,56,59] |
| | | Information integration | [2,3,4,5,6,19,22,28,29,30,31,32,34,35,36,37,38,40,41,43,44,48,54,55,56,57,58,59,60] |
| | Peer Collaboration | Collaborative Discussion | [2,5,7,23,24,25,28,29,31,33,34,35,38,39,42,45,56] |
| | | Clinical task-driven flow | [2,6,17,18,21,22,23,24,26,27,28,32,34,48,60] |
| | | Problem-solving cycles | [3,19,20,22,25,30,32,38,40,41,46,47,48,49,50,51,52,53,54,55,56,57,58,59] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).