Submitted: 16 October 2025

Posted: 20 October 2025


Abstract

Keywords:

I. Introduction

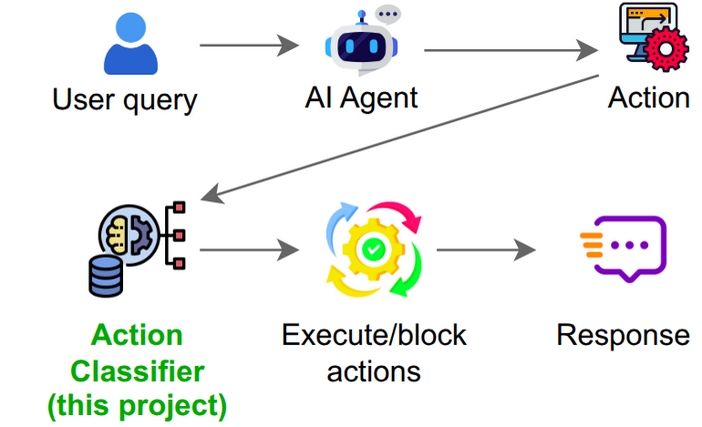

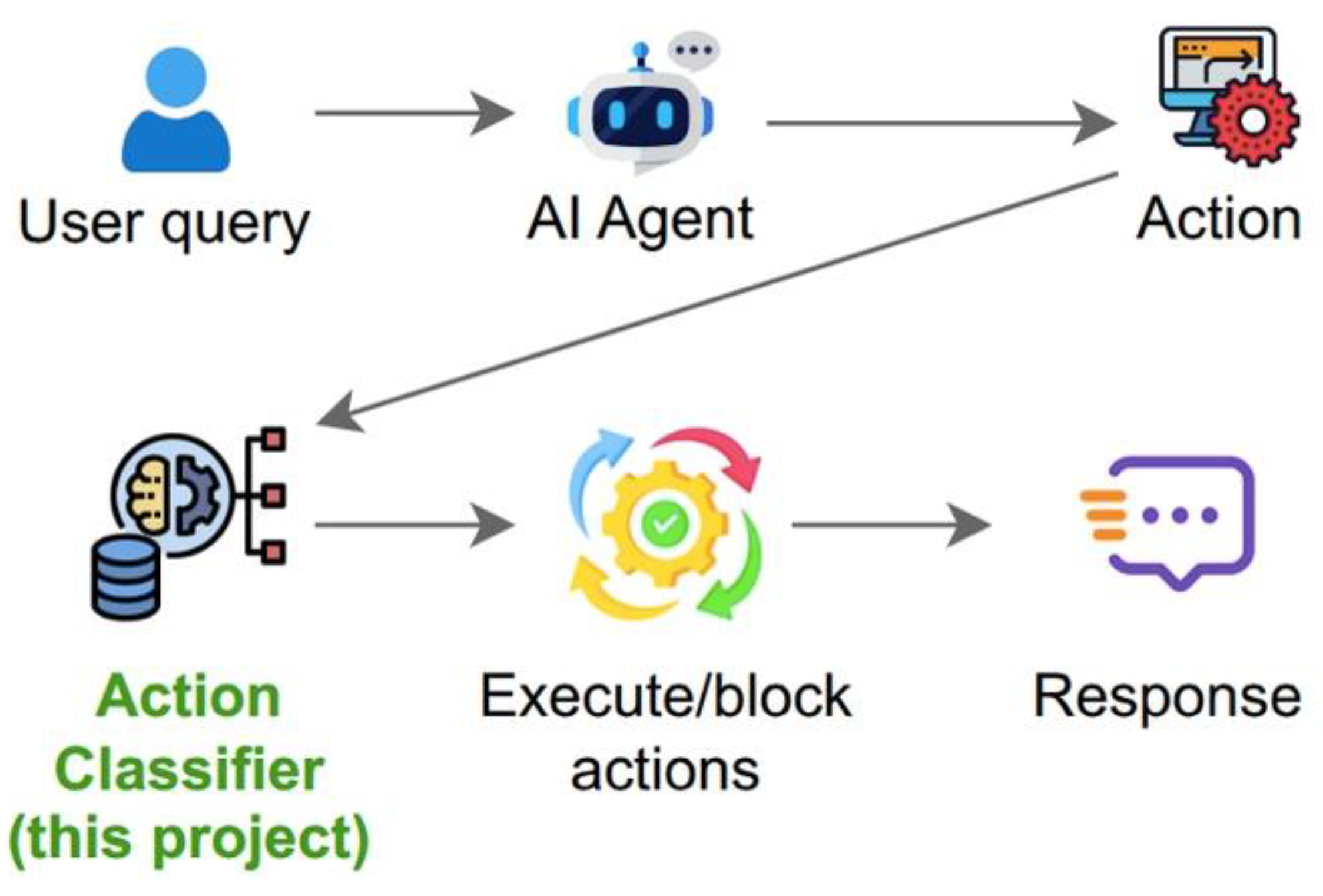

A. Problem Definition

B. Related Work

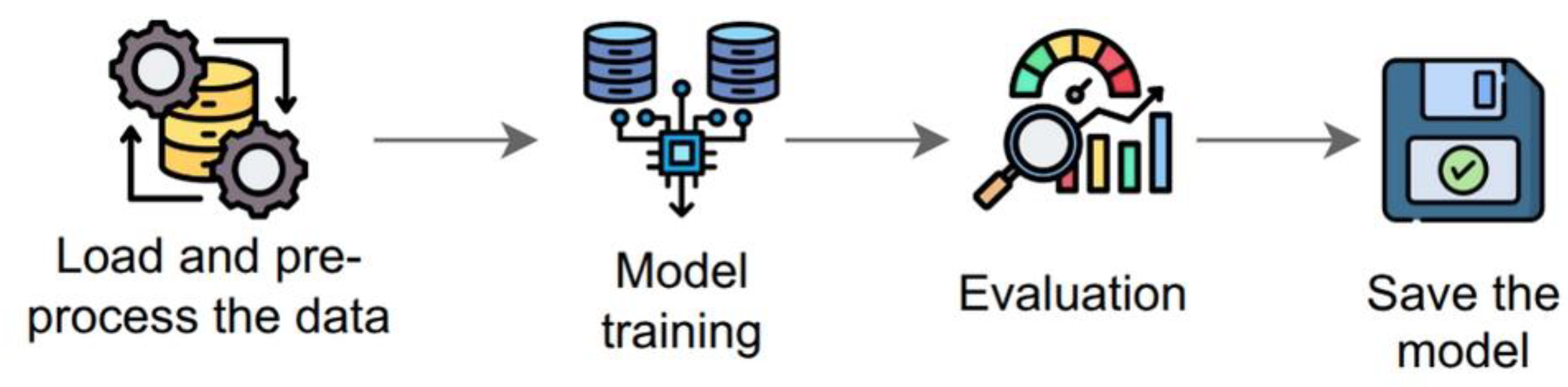

II. Methods

A. Model Architecture

B. Training

C. Evaluation

III. Results

A. Evaluation Score

| Hyperparameter | Value |
|---|---|
| Hidden Layer Size | 512 |
| Number of Epochs | 6 |
| Batch Size | 8 |
| Learning Rate | 0.002 |
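
The hyperparameters in the table can be collected into a single configuration object, which makes the training budget they imply easy to inspect. The following is a minimal sketch only: the class name `TrainingConfig`, its field names, the helper methods, and the dataset size of 1000 examples are all hypothetical and are not taken from the paper. It assumes that a final partial batch is still processed as one optimizer step.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container for the values listed in the hyperparameter table."""

    hidden_size: int = 512        # "Hidden Layer Size"
    num_epochs: int = 6           # "Number of Epochs"
    batch_size: int = 8           # "Batch Size"
    learning_rate: float = 0.002  # "Learning Rate"

    def steps_per_epoch(self, num_examples: int) -> int:
        # Assumption: a final partial batch is kept, hence the ceiling.
        return math.ceil(num_examples / self.batch_size)

    def total_steps(self, num_examples: int) -> int:
        # Total optimizer updates over the whole training run.
        return self.num_epochs * self.steps_per_epoch(num_examples)


config = TrainingConfig()

# 1000 is an illustrative dataset size, not a figure from the paper.
print(config.total_steps(1000))  # 125 steps per epoch * 6 epochs = 750
```

With a batch size this small, the number of updates per epoch grows quickly with dataset size, which is one plausible reason a short schedule of 6 epochs could suffice; the paper's own rationale is not shown in this section.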

IV. Discussion and Limitations

V. Conclusion



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).