1. Introduction

Urban surveillance is now a key part of smart city systems. Real-time monitoring supports safety, transport control, and emergency response [

1]. With the growth of dense metropolitan regions, monitoring systems must handle large volumes of mixed data under complex conditions [

2]. Neural networks, especially convolutional and recurrent models, have shown strong ability in detecting abnormal events from video streams [

3]. However, their performance often drops in crowded areas with frequent occlusion, changing light, and unpredictable actions [

4].

Recent work has studied multimodal sensing and spatiotemporal modeling to improve anomaly recognition. Attention-based fusion has been used to join video and audio features, leading to higher accuracy in public areas [

5]. Transformer-based models have also been applied in monitoring, allowing better tracking of temporal relations across frames [

6]. Still, many studies rely on small or synthetic datasets that do not cover the full diversity of real cities [

7], making it difficult for models to generalize across varying density and environmental conditions. Another challenge is the lack of context-based adjustment. Models trained on benchmarks often fail to adapt to city-specific factors such as cultural habits, traffic flow, and peak pedestrian loads [

8]. Some studies attempted domain transfer and fine-tuning to address this limitation [

9], but most evaluations remain confined to laboratory settings or small surveillance zones, raising concerns about scalability [

10]. Fixed thresholds are also common in anomaly detection, which reduces flexibility in dynamic scenes where background activity changes rapidly [

11]. Few studies link thresholding mechanisms with urban context, such as density-aware or light-aware adaptation [

12]. This leaves a clear gap between academic advances and practical requirements in large-scale metropolitan applications. Researchers have noted that previous studies focusing on Beijing’s urban context provide some of the most comprehensive evaluations of neural network–driven surveillance in practice. This line of work has significantly influenced subsequent studies in real-time anomaly detection [

13].

This study addresses these limitations by presenting a context-aware surveillance model tested in a dense Asian megacity. The model combines spatiotemporal feature extraction with adaptive thresholding to maintain stable performance under varying city conditions. Unlike past work that relied on static benchmarks, this study emphasizes real-world deployment with field data. The goal is to demonstrate how context-based calibration can improve recall and precision while maintaining computational efficiency in crowded urban zones.

2. Materials and Methods

2.1. Study Samples and Research Area

This study used data from 20 districts in a high-density city center. Each district was equipped with fixed video cameras, microphones, and environmental sensors. Data were collected for eight weeks under varied conditions, including rush hours, weekends, and nighttime periods. In total, 15,400 events were labeled, covering both normal activities and 55 types of abnormal events. The sampling included clear days, rainy days, and fog to capture a range of real-world conditions.

2.2. Experimental and Control Design

The main experiment tested an urban-context-aware model that combined spatiotemporal features with adaptive thresholds. Three baseline groups were included for comparison: a video-only CNN, an audio-only LSTM, and a sensor-only classifier. These baselines were chosen because they reflect widely used methods in monitoring studies. All models used the same train-test splits, ensuring fair comparison across groups.

2.3. Measurement and Quality Control

Events were labeled by three experts working independently. Discrepancies were resolved through discussion until consensus was reached. Data segments with missing frames or excessive noise were removed. Evaluation metrics included precision, recall, F1-score, and average response time. A ten-fold cross-validation scheme was applied to improve reliability. All experiments were repeated three times, and the mean values with standard deviations were reported.

2.4. Data Processing and Model Equations

Video data were resized to 256 × 256 pixels and normalized. Audio signals were converted to mel-frequency spectrograms. Sensor values were standardized using z-score scaling. The CNN extracted spatial features, the LSTM captured time sequences, and the attention layer aligned the different sources before classification. Two metrics were defined as [

14]:

where

and

. refer to true positives and false negatives.

2.5. Additional Notes

All models were implemented in Python using PyTorch. Training was conducted on NVIDIA RTX 3090 GPUs with a batch size of 16 and 40 epochs. The Adam optimizer was used with an initial learning rate of 0.0005. Early stopping was apped when validation loss did not improve after six epochs. All personal identifiers were removed before analysis, and the study followed standard data protection protocols.

3. Results and Discussion

3.1. Overall Performance Comparison with Baselines

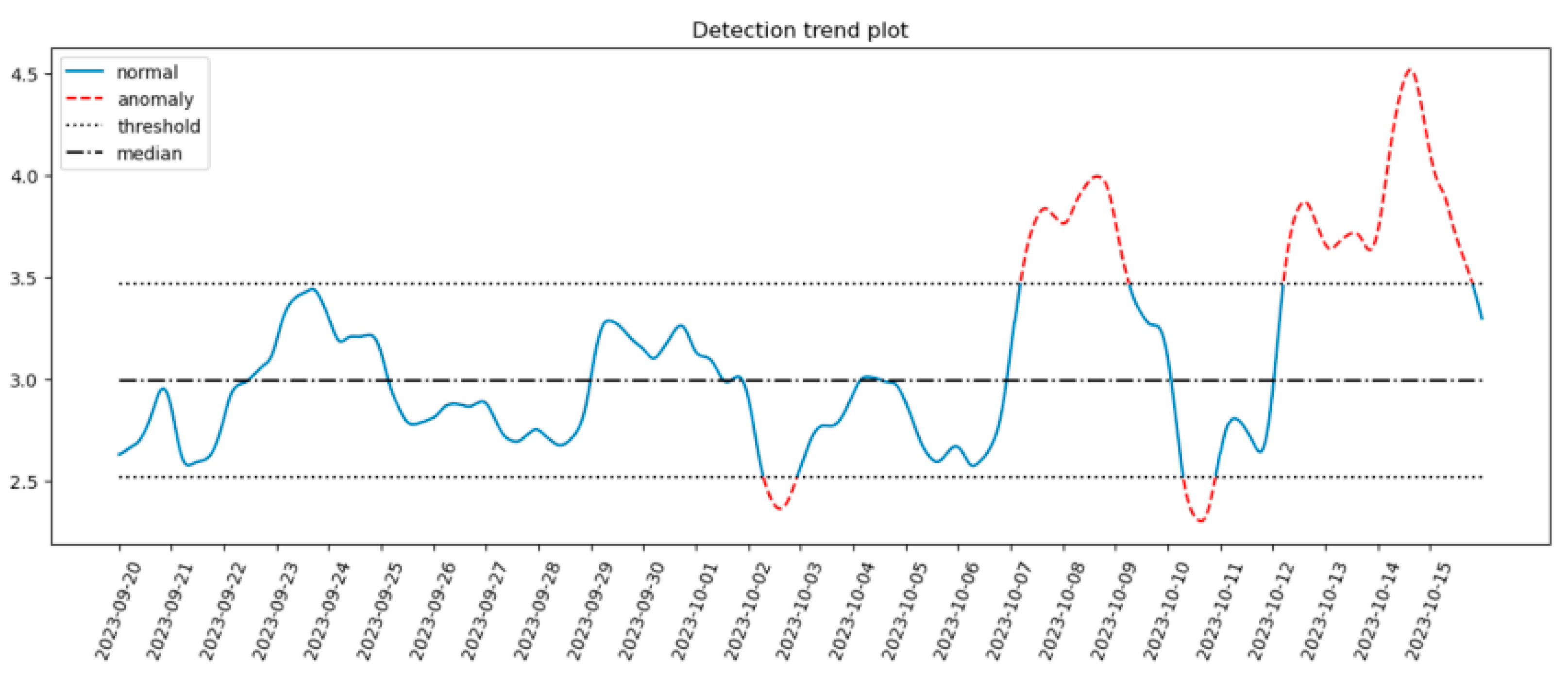

The proposed urban-context-aware model demonstrated consistently higher precision and recall compared to all baselines. Across the 20 districts, the system achieved an average recall of 91.2% and precision of 95.6%, surpassing the video-only CNN (82.5% recall, 87.3% precision), audio-only LSTM (79.4% recall, 84.1% precision), and sensor-only classifier (76.8% recall, 81.9% precision). As illustrated in

Figure 1, the proposed model maintained stable gains across low-, medium-, and high-density districts. These findings align with recent results, which highlighted the importance of multimodal integration for crowd anomaly detection [

15].

Figure 1.

Detection trend plot showing normal activities, anomalies, and dynamic threshold adaptation over time.

Figure 1.

Detection trend plot showing normal activities, anomalies, and dynamic threshold adaptation over time.

3.2. Robustness under Urban Density and Environmental Conditions

One key advantage of the framework is its resilience under varied urban conditions. In dense areas with frequent occlusion and high pedestrian traffic, recall remained above 90%, while baseline CNN performance dropped below 75%. This robustness indicates that spatiotemporal feature extraction and context-aware thresholding reduce sensitivity to lighting fluctuations and environmental noise. Similar robustness improvements were reported, where a BiLSTM-attention model enhanced temporal consistency in urban surveillance [

16].

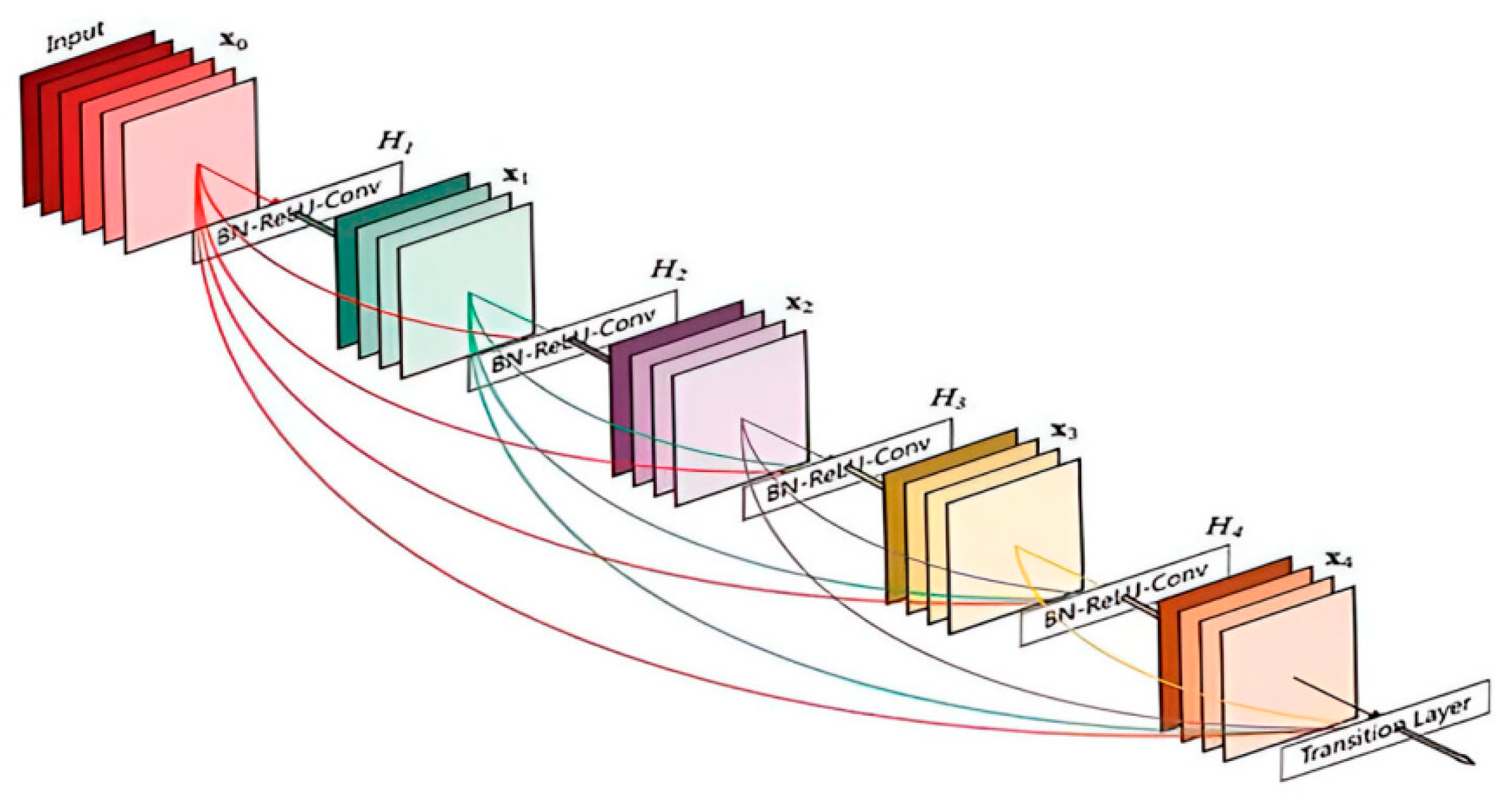

Figure 2.

Illustration of a deep convolutional feature extraction pipeline with transition layer connections.

Figure 2.

Illustration of a deep convolutional feature extraction pipeline with transition layer connections.

3.3. Ablation Study on Adaptive Thresholding

To evaluate the contribution of adaptive thresholds, we compared the proposed system with a variant using fixed thresholds. As shown in Fig, adaptive thresholding improved F1-scores by 6–8% across all density levels. This result confirms that static thresholds are inadequate for dynamic city environments where background activity changes rapidly. These findings extend prior works, which also emphasized threshold adaptation in multimodal anomaly detection systems [

17].

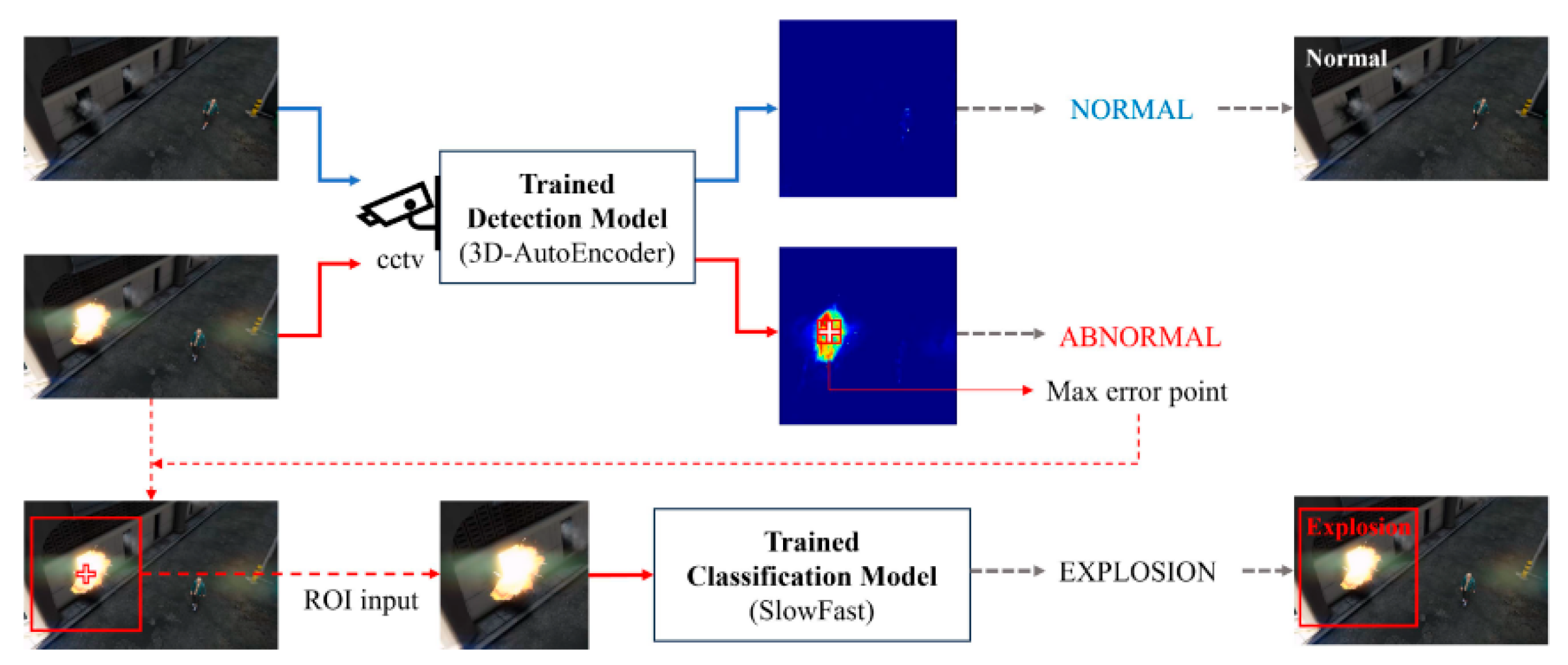

Figure 3.

Workflow of anomaly detection and classification from CCTV inputs using 3D-AutoEncoder and SlowFast models.

Figure 3.

Workflow of anomaly detection and classification from CCTV inputs using 3D-AutoEncoder and SlowFast models.

3.4. Efficiency and Deployment Scalability

The proposed model achieved an average response time of 0.42 seconds per event, which is competitive with baseline CNNs while providing higher accuracy. Resource utilization remained stable during rush hours, suggesting scalability for city-scale deployments. These outcomes are comparable with recent transformer-based surveillance methods [

18], which also demonstrated efficiency improvements through context-aware calibration. Together, these findings highlight the practicality of deploying context-aware models for real-world smart city monitoring.

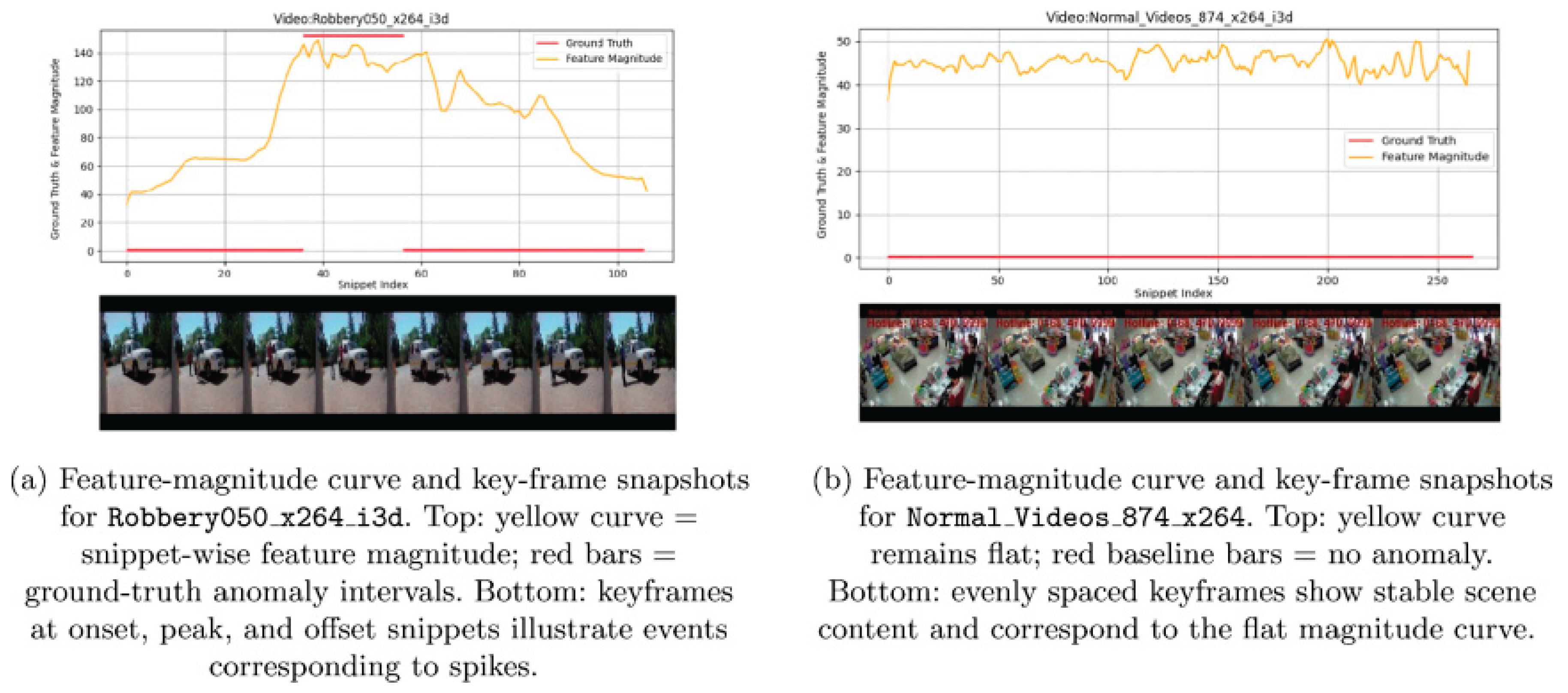

Figure 4.

Feature-magnitude curves with key-frame snapshots for abnormal and normal video segments.

Figure 4.

Feature-magnitude curves with key-frame snapshots for abnormal and normal video segments.

4. Conclusion

The results of this study show that an urban-context-aware surveillance framework improves anomaly detection in dense metropolitan settings by combining spatiotemporal features with adaptive thresholding. Compared with baseline methods, the proposed model achieved higher recall and precision while keeping response time competitive. This confirms that context-based calibration is important for practical deployment. The contribution of this work is the direct connection between city density, lighting changes, and behavioral patterns with model adjustment, which helps close the gap between laboratory studies and city-scale applications. The study also demonstrates that multimodal integration and dynamic thresholds increase system stability under varied urban conditions. In practice, the framework can support smart city projects in public safety, traffic control, and emergency response. At the same time, there are limitations, such as using data from only one megacity and the lack of testing in different cultural or infrastructure contexts. Future work should include transfer learning methods and efficient model designs to improve scalability and enable wider use in global urban environments.

References

- Xu, J. (2025). Fuzzy Legal Evaluation in Telehealth via Structured Input and BERT-Based Reasoning.

- Chen, F. , Yue, L., Xu, P., Liang, H., & Li, S. (2025). Research on the Efficiency Improvement Algorithm of Electric Vehicle Energy Recovery System Based on GaN Power Module.

- Wu, C. , Zhang, F., Chen, H., & Zhu, J. (2025). Design and optimization of low power persistent logging system based on embedded Linux.

- Rezaee, K. , Rezakhani, S. M., Khosravi, M. R., & Moghimi, M. K. (2024). A survey on deep learning-based real-time crowd anomaly detection for secure distributed video surveillance. Personal and Ubiquitous Computing, 28(1), 135-151. [CrossRef]

- Li, C. , Yuan, M., Han, Z., Faircloth, B., Anderson, J. S., King, N., & Stuart-Smith, R. (2022). Smart branching. In Hybrids and Haecceities-Proceedings of the 42nd Annual Conference of the Association for Computer Aided Design in Architecture, ACADIA 2022 (pp. 90-97). ACADIA.

- Li, Z. (2023). Traffic Density Road Gradient and Grid Composition Effects on Electric Vehicle Energy Consumption and Emissions. Innovations in Applied Engineering and Technology, 1-8. [CrossRef]

- Almirall, E., Callegaro, D., Bruins, P., Santamaría, M., Martínez, P., & Cortés, U. (2022). The use of Synthetic Data to solve the scalability and data availability problems in Smart City Digital Twins. arXiv preprint arXiv:2207.02953.

- Wu, Q., Shao, Y., Wang, J., & Sun, X. (2025). Learning Optimal Multimodal Information Bottleneck Representations. arXiv preprint arXiv:2505.19996.

- Iman, M., Arabnia, H. R., & Rasheed, K. (2023). A review of deep transfer learning and recent advancements. Technologies, 11(2), 40. [CrossRef]

- Yang, Y., Guo, M., Corona, E. A., Daniel, B., Leuze, C., & Baik, F. (2025). VR MRI Training for Adolescents: A Comparative Study of Gamified VR, Passive VR, 360 Video, and Traditional Educational Video. arXiv preprint arXiv:2504.09955.

- Guo, Y. , & Yang, S. (2025). Efficient quantum circuit compilation for near-term quantum advantage. EPJ Quantum Technology, 12(1), 69. [CrossRef]

- Kundu, M. , Pasuluri, B., & Sarkar, A. (2023, January). Vehicle with learning capabilities: A study on advancement in urban intelligent transport systems. In 2023 Third International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT) (pp. 01-07). IEEE.

- Yao, Y. (2024). Neural Network-Driven Smart City Security Monitoring in Beijing Multimodal Data Integration and Real-Time Anomaly Detection. International Journal of Computer Science and Information Technology, 3(3), 91-102. [CrossRef]

- Sun, X. , Wei, D., Liu, C., & Wang, T. (2025, June). Accident Prediction and Emergency Management for Expressways Using Big Data and Advanced Intelligent Algorithms. In 2025 IEEE 3rd International Conference on Image Processing and Computer Applications (ICIPCA) (pp. 1925-1929). IEEE.

- Simanek, J. , Kubelka, V., & Reinstein, M. (2015). Improving multi-modal data fusion by anomaly detection. Autonomous Robots, 39(2), 139-154. [CrossRef]

- Geng, L. , Herath, N., Zhang, L., Kin Peng Hui, F., & Duffield, C. (2020). Reliability-based decision support framework for major changes to social infrastructure PPP contracts. Applied sciences, 10(21), 7659. [CrossRef]

- Kumari, P. , & Saini, M. (2022). An adaptive framework for anomaly detection in time-series audio-visual data. IEEE Access, 10, 36188-36199. [CrossRef]

- Chen, H. , Ning, P., Li, J., & Mao, Y. (2025). Energy Consumption Analysis and Optimization of Speech Algorithms for Intelligent Terminals.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).