Submitted: 14 October 2025

Posted: 15 October 2025


Abstract

Keywords:

1. Introduction

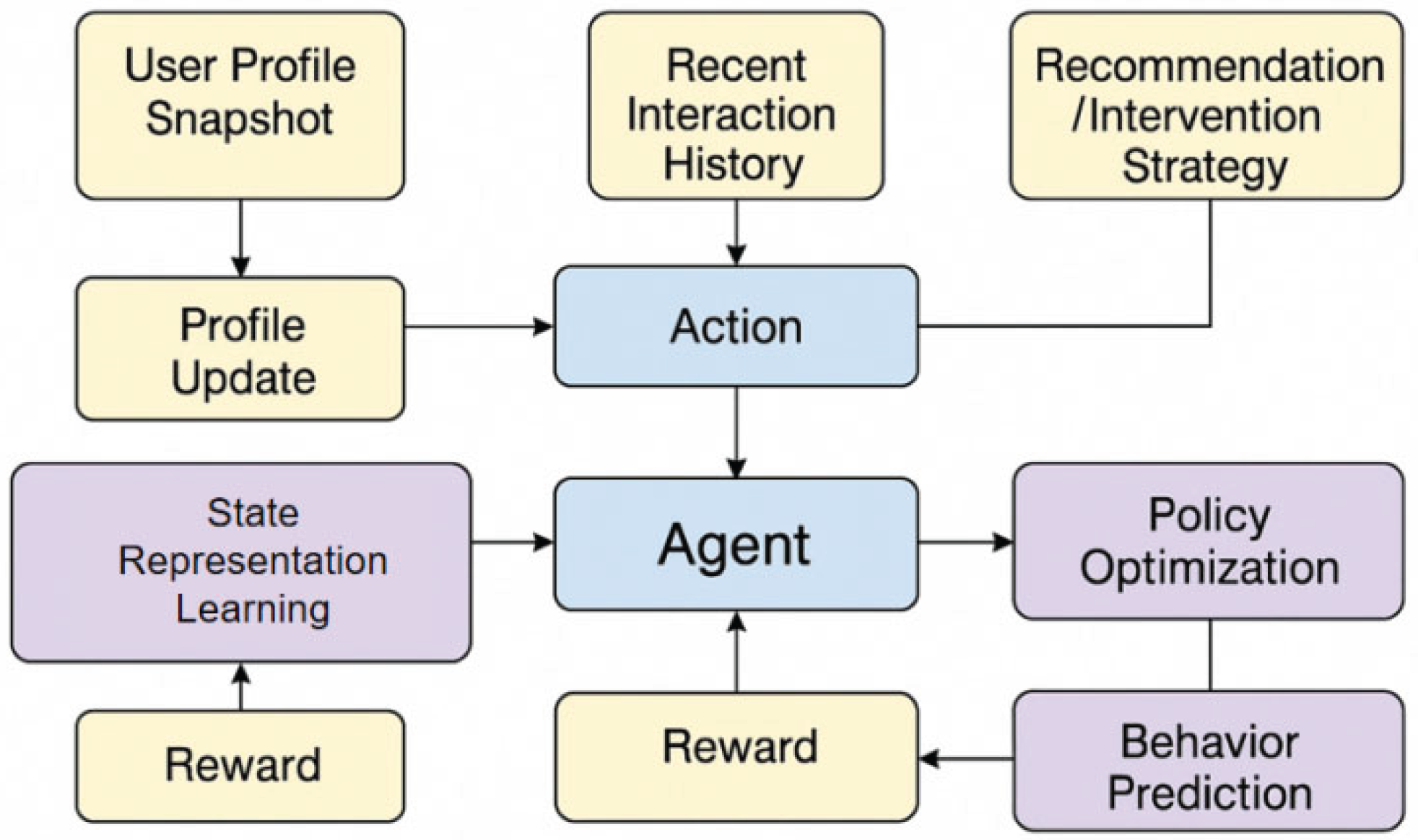

2. Proposed Approach

3. Performance Evaluation

A. Dataset

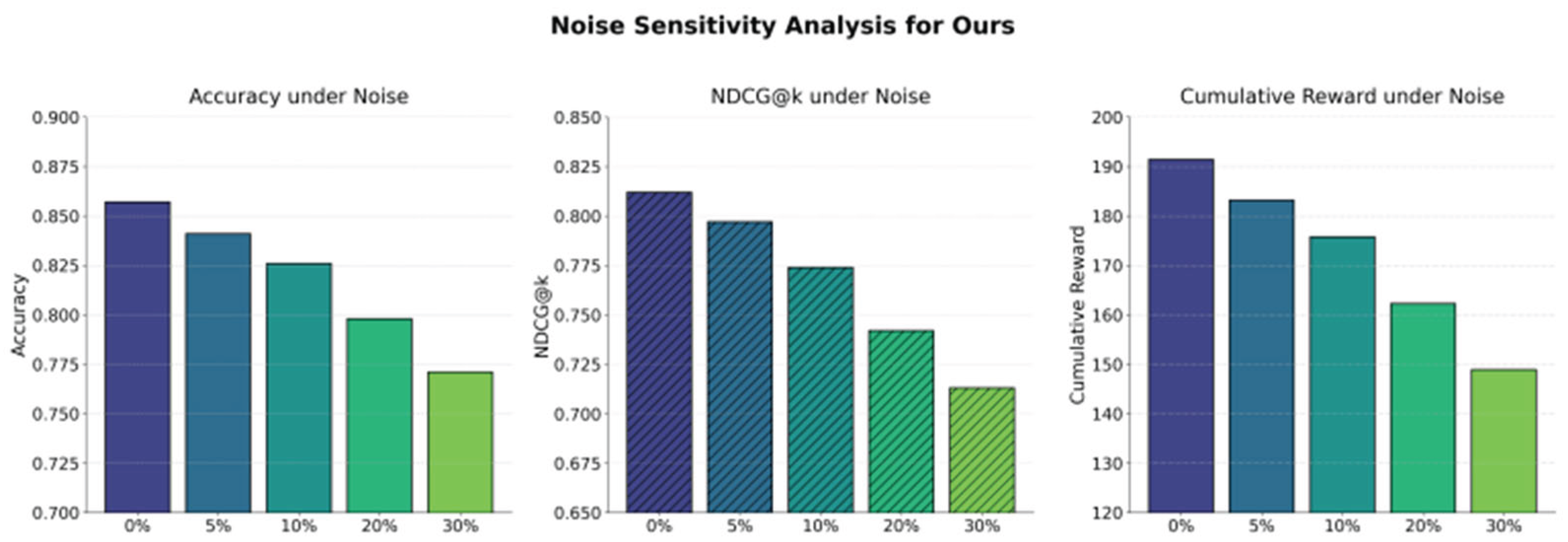

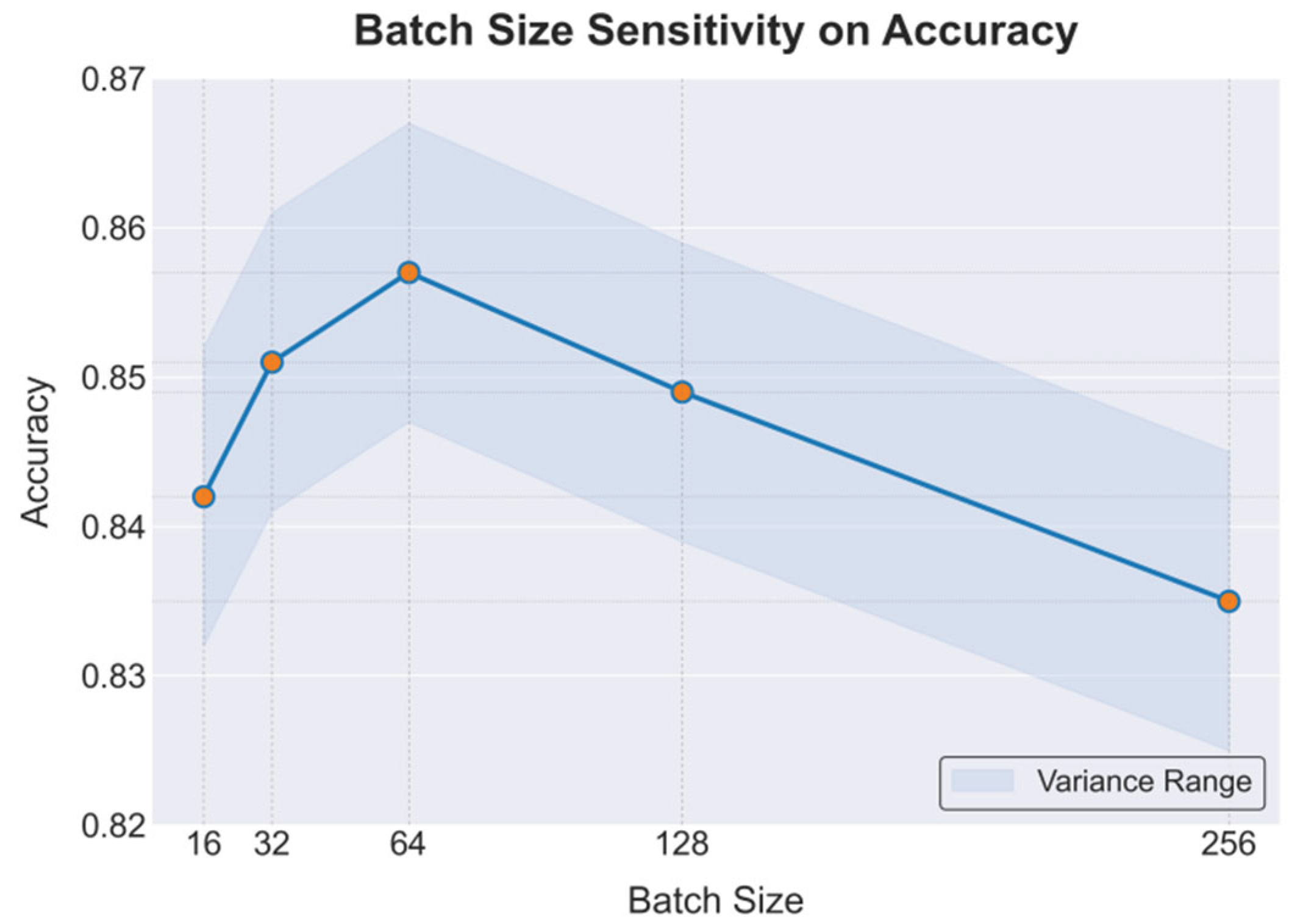

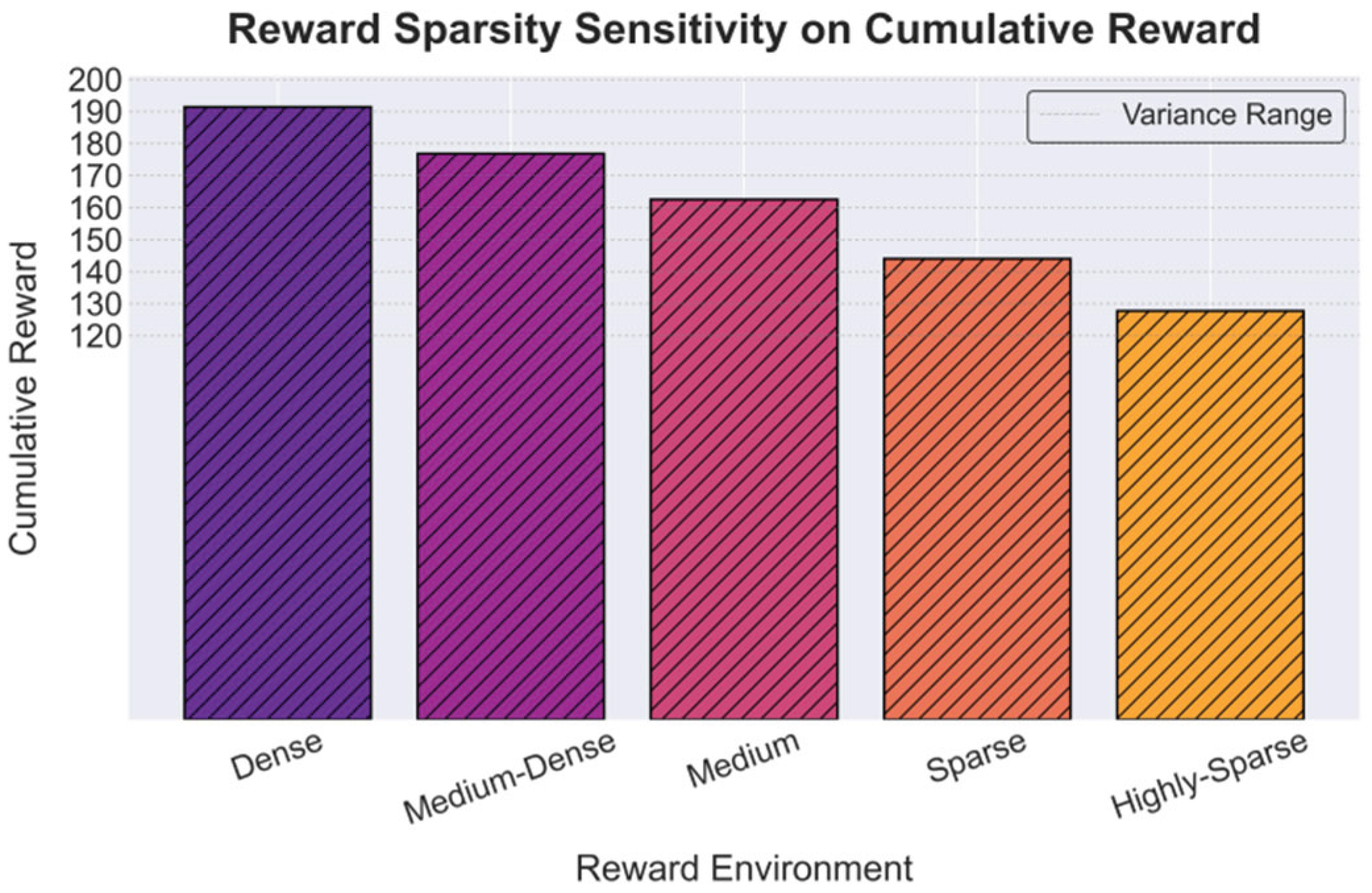

B. Experimental Results

4. Conclusions

References

1. W. D. Wang, P. Wang, Y. Fu, et al., "Reinforced imitative graph learning for mobile user profiling," IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 12, pp. 12944–12957, 2023.

2. H. Liang, "DRprofiling: Deep reinforcement user profiling for recommendations in heterogenous information networks," IEEE Transactions on Knowledge and Data Engineering, vol. 34, no. 4, pp. 1723–1734, 2020.

3. W. Shao, X. Chen, J. Zhao, et al., "Sequential recommendation with user evolving preference decomposition," Proceedings of the 2023 Annual International ACM SIGIR Conference on Research and Development in Information Retrieval in the Asia Pacific Region, pp. 253–263, 2023.

4. H. Liu, Y. Zhu, C. Wang, et al., "Incorporating heterogeneous user behaviors and social influences for predictive analysis," IEEE Transactions on Big Data, vol. 9, no. 2, pp. 716–732, 2022.

5. G. Yao, H. Liu, and L. Dai, "Multi-agent reinforcement learning for adaptive resource orchestration in cloud-native clusters," arXiv preprint arXiv:2508.10253, 2025.

6. Y. Wang, H. Liu, G. Yao, N. Long, and Y. Kang, "Topology-aware graph reinforcement learning for dynamic routing in cloud networks," arXiv preprint arXiv:2509.04973, 2025.

7. P. Li, Y. Wang, E. H. Chi, et al., "Hierarchical reinforcement learning for modeling user novelty-seeking intent in recommender systems," arXiv preprint arXiv:2306.01476, 2023.

8. A. Khamaj and A. M. Ali, "Adapting user experience with reinforcement learning: Personalizing interfaces based on user behavior analysis in real-time," Alexandria Engineering Journal, vol. 95, pp. 164–173, 2024.

9. A. Chen, C. Du, J. Chen, et al., "Deeper insight into your user: Directed persona refinement for dynamic persona modeling," arXiv preprint arXiv:2502.11078, 2025.

10. S. Pan and D. Wu, "Hierarchical text classification with LLMs via BERT-based semantic modeling and consistency regularization," unpublished.

11. C. Liu, Q. Wang, L. Song, and X. Hu, "Causal-aware time series regression for IoT energy consumption using structured attention and LSTM networks," unpublished.

12. Y. Li, S. Han, S. Wang, M. Wang, and R. Meng, "Collaborative evolution of intelligent agents in large-scale microservice systems," arXiv preprint arXiv:2508.20508, 2025.

13. R. Zhang, "AI-driven multi-agent scheduling and service quality optimization in microservice systems," Transactions on Computational and Scientific Methods, vol. 5, no. 8, 2025.

14. W. Xu, J. Zheng, J. Lin, M. Han, and J. Du, "Unified representation learning for multi-intent diversity and behavioral uncertainty in recommender systems," arXiv preprint arXiv:2509.04694, 2025.

15. V. Shakya, J. Choudhary, and D. P. Singh, "IRADA: Integrated reinforcement learning and deep learning algorithm for attack detection in wireless sensor networks," Multimedia Tools and Applications, vol. 83, no. 28, pp. 71559–71578, 2024.

16. W. C. Kang and J. McAuley, "Self-attentive sequential recommendation," Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), pp. 197–206, 2018.

17. D. Jannach and M. Ludewig, "When recurrent neural networks meet the neighborhood for session-based recommendation," Proceedings of the 2017 ACM Conference on Recommender Systems, pp. 306–310, 2017.

18. N. Vij, A. Yacoub, and Z. Kobti, "XLNet4Rec: Recommendations based on users' long-term and short-term interests using Transformer," Proceedings of the 2023 International Conference on Machine Learning and Applications (ICMLA), pp. 647–652, 2023.

| Method | Accuracy | NDCG@k | Cumulative Reward |
|---|---|---|---|
| IRADA [15] | 0.732 | 0.641 | 125.6 |
| SASRec [16] | 0.764 | 0.673 | 139.8 |
| GRU4Rec [17] | 0.781 | 0.702 | 148.3 |
| XLNet4Rec [18] | 0.812 | 0.745 | 163.7 |
| Ours | 0.857 | 0.812 | 191.4 |
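The table reports NDCG@k without defining it or stating the cutoff k. For reference, the sketch below implements the common NDCG@k definition: DCG over the top-k ranked items with a 1/log2(rank + 1) position discount, normalized by the DCG of the ideal ordering. It assumes linear gains (identical to the 2^rel − 1 form when relevance is binary) and that the ranked list contains every relevant item; whether the evaluation above uses exactly this variant is an assumption, and the example relevance list is hypothetical.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    """Discounted cumulative gain over the top-k positions.

    Position i (0-indexed) is discounted by log2(i + 2), i.e. rank 1 -> log2(2) = 1.
    Assumes linear gains; for binary relevance this equals the 2**rel - 1 form.
    """
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))


def ndcg_at_k(relevances: Sequence[float], k: int) -> float:
    """NDCG@k: DCG of the given ranking divided by DCG of the ideal (sorted) ranking.

    Assumes `relevances` covers all relevant candidates, so sorting it yields the ideal order.
    """
    ideal_dcg = dcg_at_k(sorted(relevances, reverse=True), k)
    if ideal_dcg == 0.0:
        return 0.0  # no relevant item in the list: define NDCG as 0
    return dcg_at_k(relevances, k) / ideal_dcg


if __name__ == "__main__":
    # Hypothetical example: the single held-out item is ranked third out of ten.
    ranked_relevance = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
    print(ndcg_at_k(ranked_relevance, k=10))  # 0.5
```

Here DCG@10 = 1/log2(4) = 0.5 and the ideal DCG is 1, so NDCG@10 = 0.5; with k = 2 the item falls outside the cutoff and the score drops to 0. Reported NDCG@k values are usually this per-user score averaged over all test users.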

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).