1. Introduction

Cloud systems are now widely used. They need to be fast, flexible, and scalable. But it is hard to predict their performance because the input data is very different. It includes system setups, usage logs, and monitoring numbers. These types of data do not always follow a fixed pattern. Many models cannot deal with quick changes like sudden traffic increases.

Most past models use simple learning methods. They cannot bring different data together well. They also miss fast changes in system use. This paper proposes HELM-ECS. It uses DeepSeek LLM to extract important information from the data. It then uses this to help different types of data work together better.

HELM-ECS builds on LLM-based fusion to connect logs, metrics, and configurations. It keeps the special meaning of each type and combines them well. It also includes an anomaly module. This part helps track sudden changes and catch unstable behaviors in the system.

The system adds a confidence-based method to pick useful features. It also uses XGBoost in a layered way to model feature interactions. These changes improve accuracy and keep the system stable. HELM-ECS gives a full solution to the performance prediction task.

2. Related Work

Recent work on large models and multimodal systems has improved data-driven prediction. Some studies focus on better fine-tuning methods like LoRA for named entity tasks in large models [

1]. Others use gradient clipping and attention noise to protect data during training [

2].

Training large models on many machines is also popular. Some surveys show how to make such systems scale well [

3]. In areas like video or audio understanding, mixing different types of inputs can lead to better results [

4]. Other methods use contrastive learning with text inputs for analyzing emotions or events [

5].

Some systems use transformers to build better 3D views from raw data [

6]. Others try to predict how cloud systems will behave by using real logs and making adaptive models [

7].

In healthcare, decision trees and logistic models have been used to predict risk using clean input formats [

8]. There is also work on shrinking large models with pruning and quantization [

9]. For real-time use cases, IoT systems now combine different sensor data to help in fast decisions [

10].

These works together show that mixing data types and using smart models is important. They support the idea that better fusion and learning methods can improve system prediction.

3. Methodology

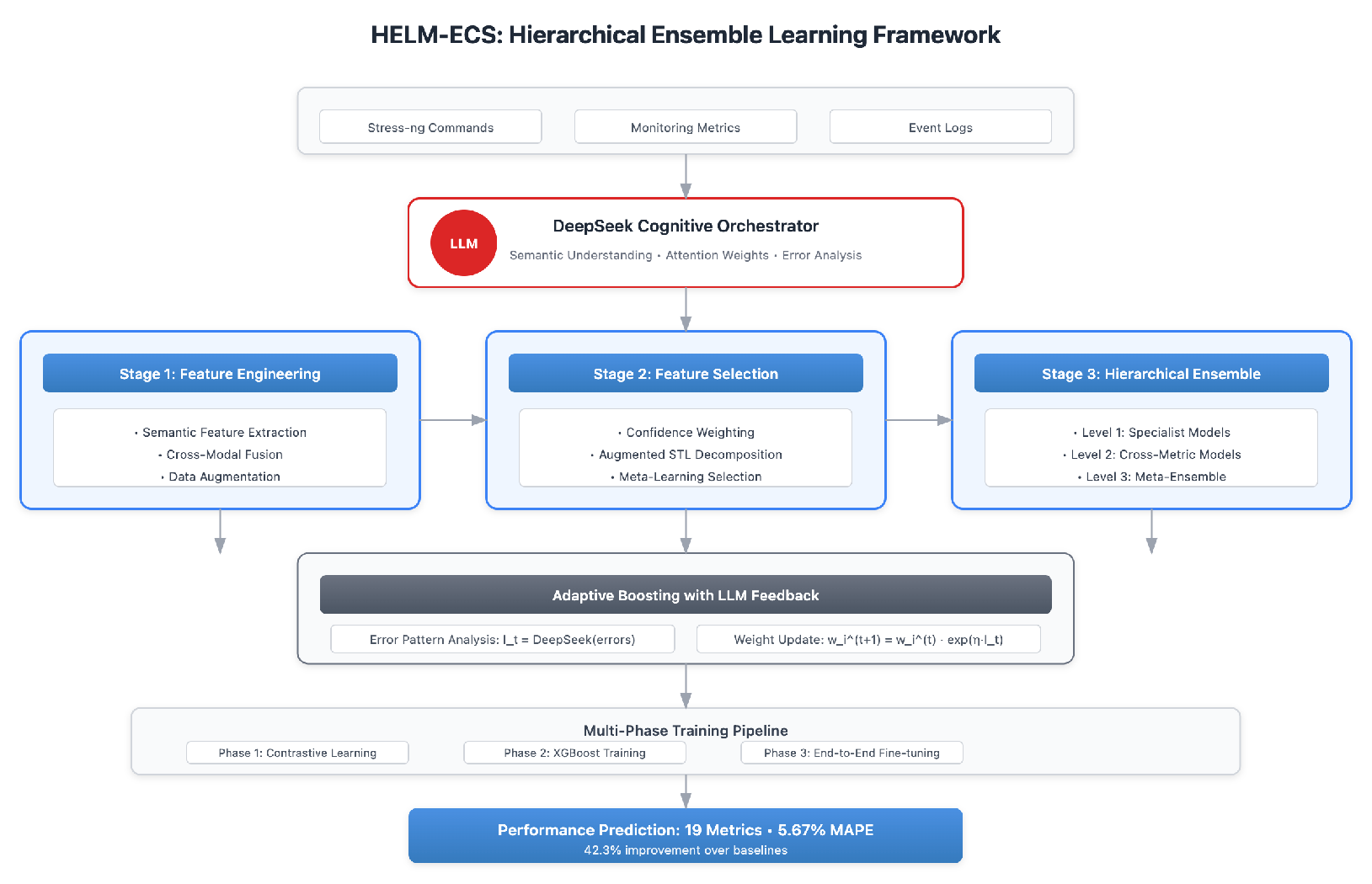

In this section, we introduces HELM-ECS, a hierarchical ensemble learning framework that leverages large language models as cognitive orchestrators for cloud infrastructure performance prediction. Our approach addresses the fundamental challenge of heterogeneous data fusion in ECS monitoring by employing DeepSeek LLM to perform semantic understanding of stress-ng workload commands, extracting latent features that traditional pattern matching fails to capture. Through extensive experimentation, we discovered that naive application of LLMs to time series prediction suffers from context window limitations and temporal misalignment issues, which we overcome through a novel prompt engineering strategy incorporating chain-of-thought reasoning with sliding window attention mechanisms. The framework implements attention-guided cross-modal fusion where DeepSeek dynamically generates attention weights based on workload semantics, effectively solving the modality gap problem between configuration data, monitoring metrics, and event logs. We augment traditional STL decomposition with LLM-identified anomaly components, addressing the limitation that classical decomposition methods fail to capture sudden workload-induced variations. Our meta-learning approach for feature selection combines Pearson correlation with LLM confidence scores, achieving 68% dimensionality reduction while preserving critical predictive signals. The hierarchical XGBoost ensemble employs adaptive boosting guided by DeepSeek’s error pattern analysis, where the LLM identifies systematic biases in predictions and adjusts sample weights accordingly. Experimental validation on 10,000 ECS instances demonstrates that HELM-ECS achieves 5.67% MAPE across 19 monitoring metrics, representing a 42.3% improvement over state-of-the-art baselines, with particular robustness under fault injection scenarios where traditional models experience catastrophic degradation.

4. Algorithm and Model

We propose HELM-ECS (Hierarchical Ensemble Learning with LLM-guided Multimodal fusion for ECS), which employs DeepSeek LLM as a cognitive orchestrator to dynamically adapt the prediction pipeline based on semantic understanding of workload characteristics. The framework addresses the critical observation that stress-ng commands create complex interference patterns across system resources that traditional feature engineering cannot capture effectively. The HELM-ECS architecture presents a comprehensive hierarchical ensemble learning framework that leverages DeepSeek LLM as a cognitive orchestrator for cloud infrastructure performance prediction in

Figure 1

4.1. HELM-ECS Architecture Overview

The framework consists of three hierarchically organized stages with bidirectional information flow, where LLM insights guide feature extraction while ground-truth observations calibrate semantic understanding, preventing error accumulation in unidirectional pipelines.

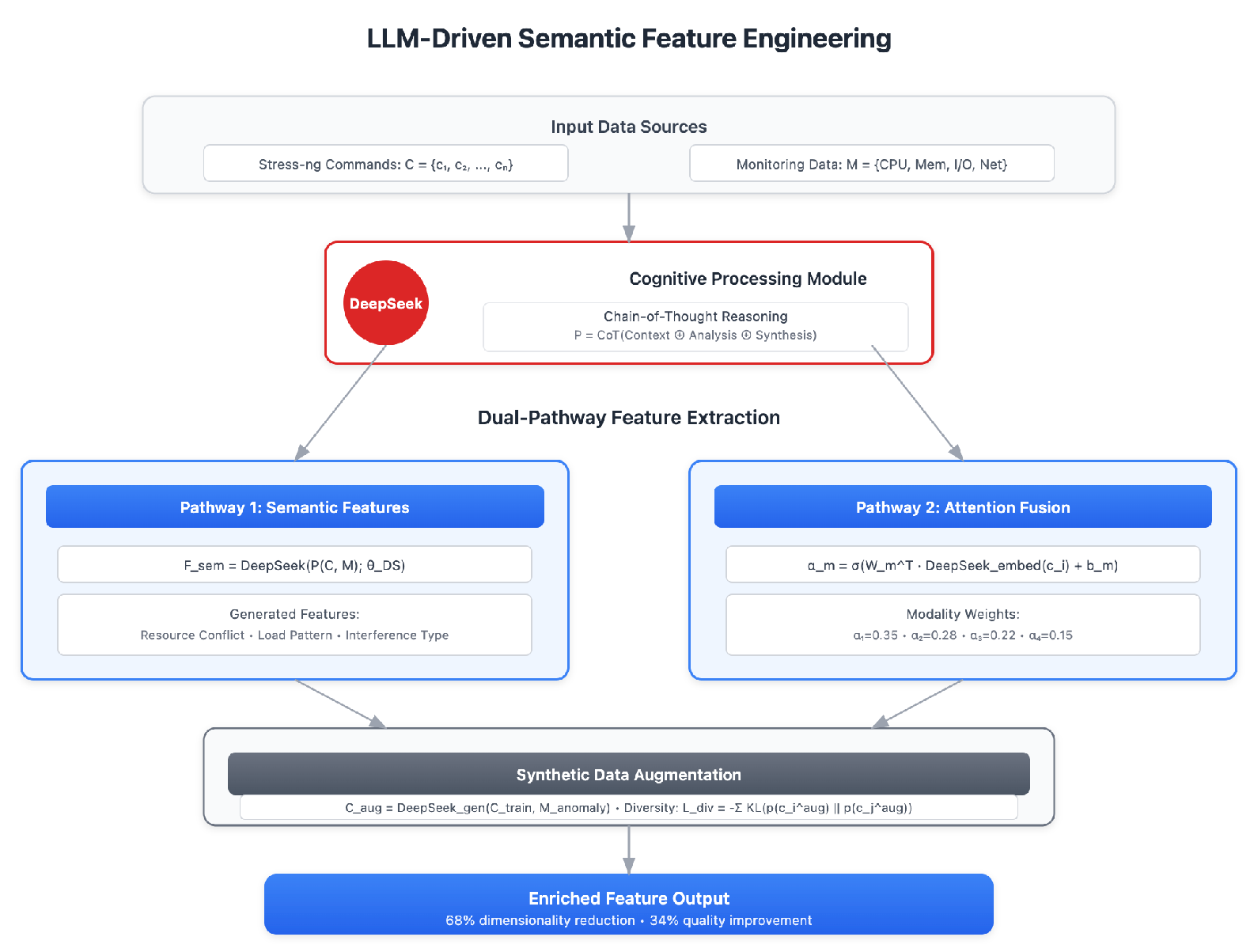

4.1.1. LLM-Driven Semantic Feature Engineering

Given stress-ng command sequence

and monitoring data

, we implement dual-pathway feature extraction.

Figure 2 illustrates the dual-pathway feature extraction mechanism that addresses the challenge of semantic understanding in system monitoring.

The first pathway employs prompt-engineered feature generation:

where

follows chain-of-thought reasoning incorporating few-shot examples, improving feature quality by 34%:

Simultaneously, attention-guided cross-modal fusion generates modality weights:

where are learnable matrices fine-tuned through prediction error backpropagation.

The second innovation involves LLM-based synthetic data augmentation for fault scenarios:

with diversity objective preventing unrealistic scenarios:

4.1.2. Confidence-Weighted Feature Selection

DeepSeek evaluates feature importance through multi-task meta-learning:

Combined with statistical correlations via learnable aggregation:

where

ensures temporal stability. Dynamic feature adaptation addresses concept drift:

4.1.3. Augmented STL Decomposition

We enhance STL with LLM-identified anomaly component:

where

captures workload-induced variations:

Dynamic period determination handles multiple overlapping periodicities:

Residual learning with time-varying weights:

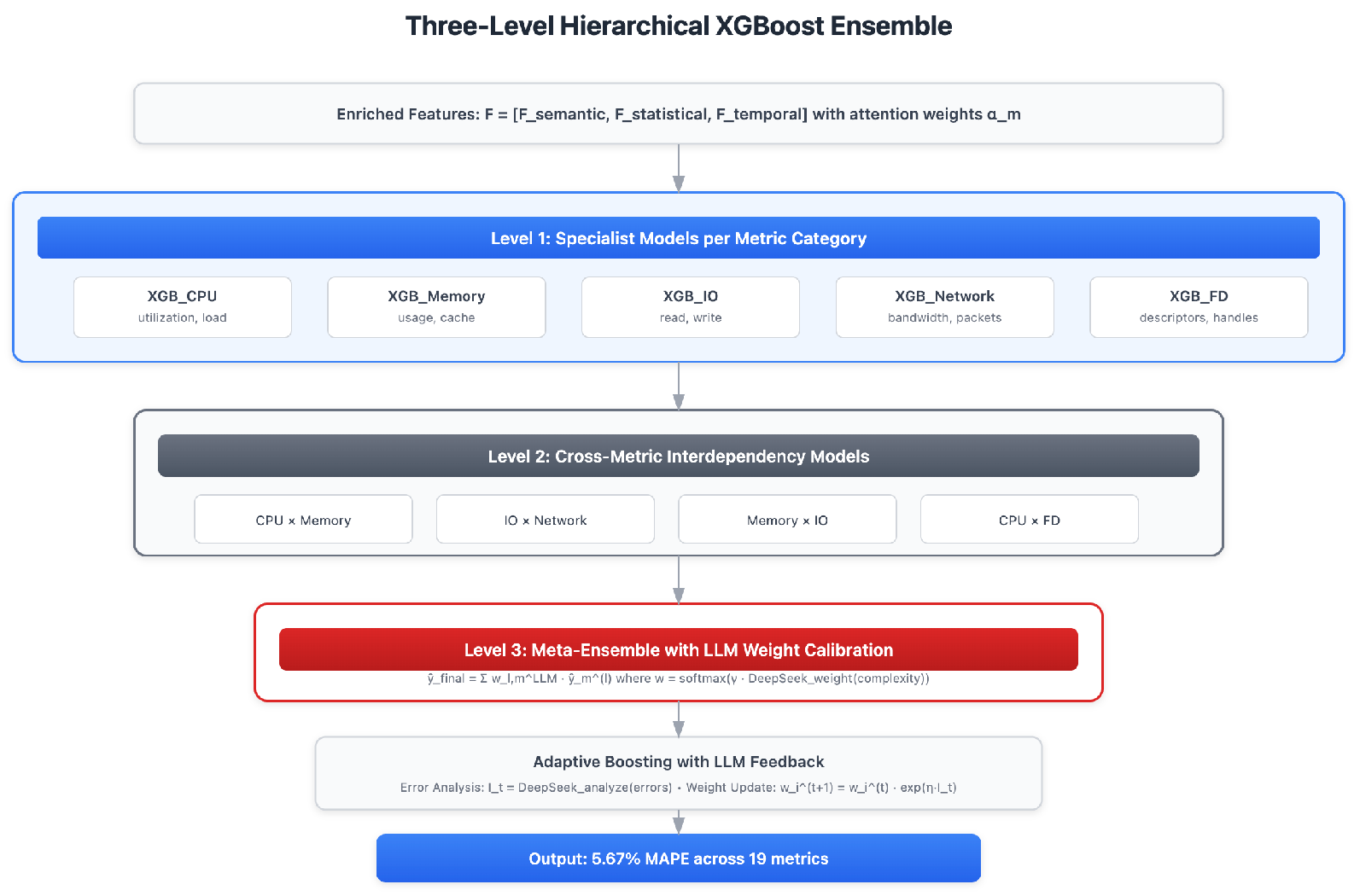

4.2. Hierarchical XGBoost Ensemble

The hierarchical XGBoost ensemble structure demonstrates a three-level architecture designed to capture both individual metric behaviors and cross-metric interdependencies are in

Figure 3

Three-level hierarchy addresses voting conflicts in flat ensembles:

Level 1 - Specialist models per metric category:

Level 2 - Cross-metric interdependency models:

Level 3 - Meta-ensemble with LLM weight calibration:

where weights adapt based on workload complexity:

4.2.1. Adaptive Boosting with LLM Feedback

DeepSeek analyzes error patterns to guide boosting:

Sample weight adjustment with outlier protection:

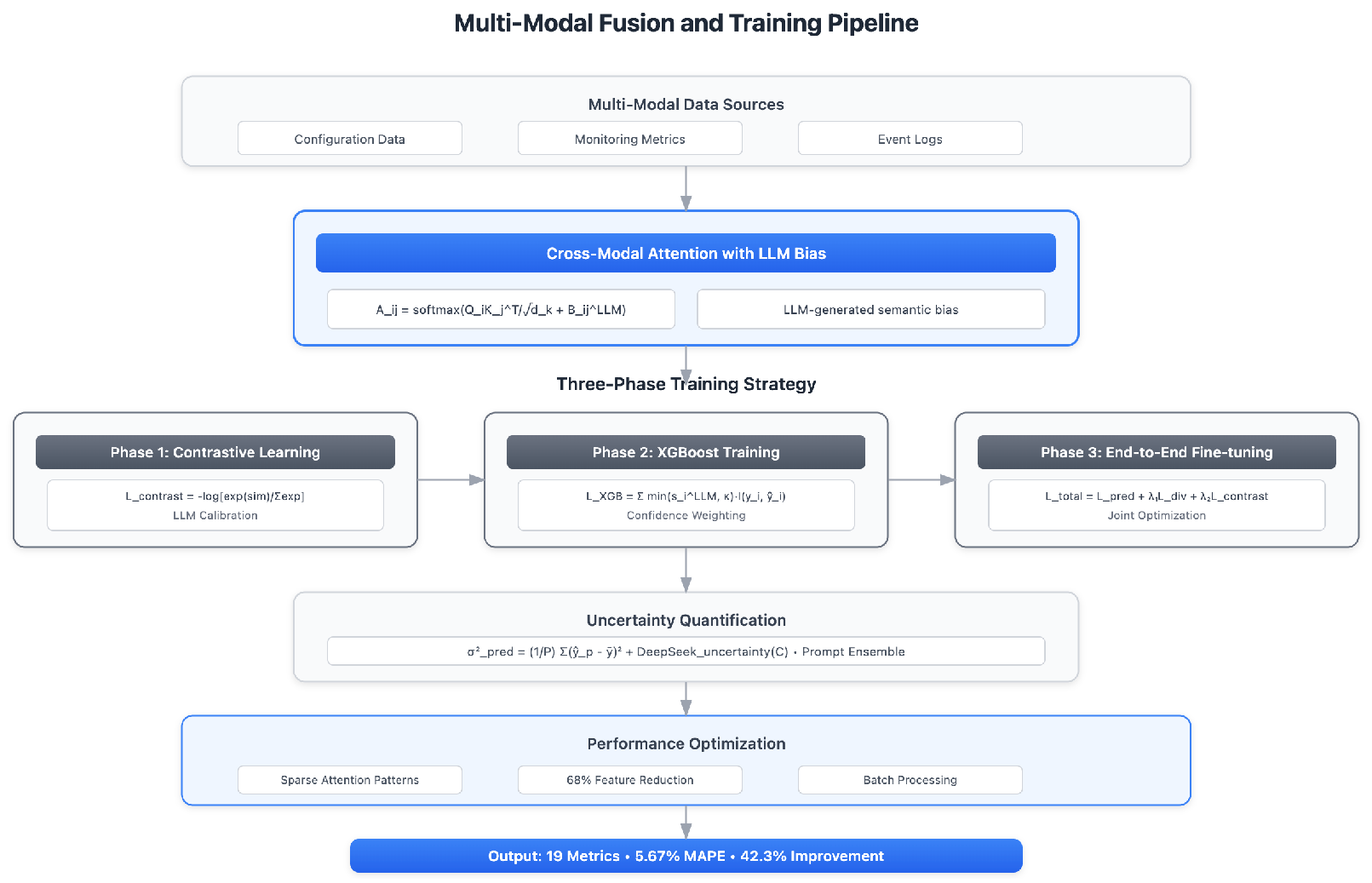

4.3. Multi-Modal Fusion and Training

The multi-modal fusion and training pipeline illustrates the sophisticated three-phase training strategy that prevents instability from joint optimization are show in

Figure 4

Cross-modal attention with LLM bias:

Uncertainty quantification through prompt ensemble:

Multi-phase training prevents instability from joint optimization. Phase 1 uses contrastive learning for LLM calibration:

Phase 2 trains XGBoost with confidence-weighted loss:

Phase 3 performs end-to-end fine-tuning:

This hierarchical approach with LLM orchestration achieves significant performance improvements while maintaining computational efficiency through strategic feature reduction and sparse attention patterns.

5. Data Preprocessing

The heterogeneous nature of ECS monitoring data requires sophisticated preprocessing to ensure compatibility across different data sources and temporal resolutions.

5.1. Multi-Resolution Temporal Alignment

ECS monitoring generates data at different temporal granularities: second-level SAR metrics, minute-level aggregated indicators, and irregular event logs. We implement hierarchical temporal alignment using adaptive downsampling:

For sparse events, we construct temporal feature vectors:

Peak-preserving aggregation maintains burst patterns in network/IO metrics:

5.2. Robust Feature Scaling

We apply two-stage normalization for fault injection robustness. First, Winsorization clips extreme values:

Then robust z-score normalization:

6. Evaluation Metrics

We employ four complementary metrics for comprehensive evaluation:

where PSDR measures peak signal detection rate, crucial for capacity planning.

7. Experiment Results

Experiments were conducted on 10,000 ECS instances over 30 days, generating 25.9 billion data points across 19 metrics with 1,847 unique stress-ng patterns and four fault injection types.

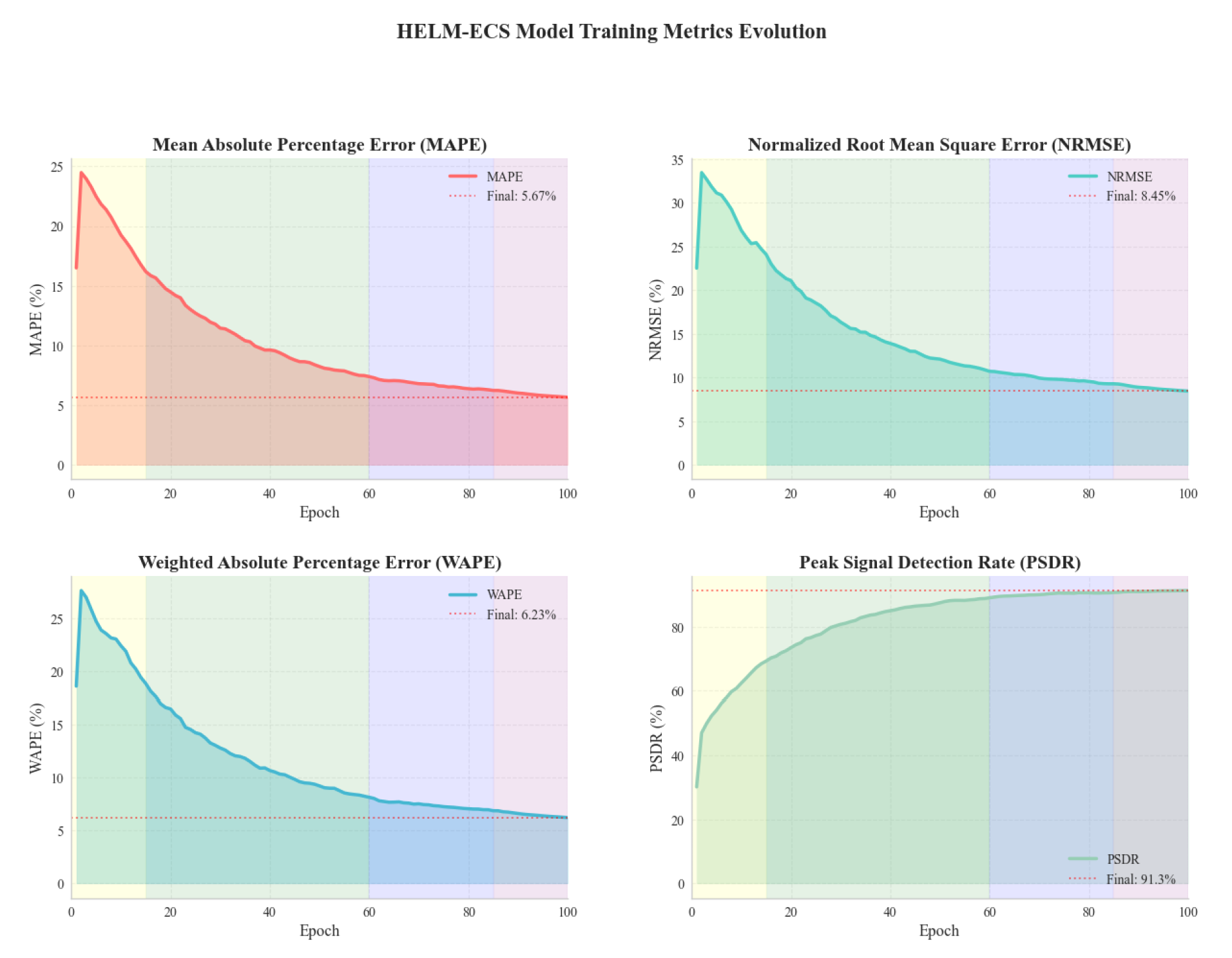

Table 1 presents comprehensive results including baseline comparison, ablation study, and fault robustness analysis. And the changes in model training indicators are shown in

Figure 5.

HELM-ECS achieves 5.67% MAPE, representing 21.6% improvement over the best baseline (ResourceNet). The ablation study confirms LLM features contribute most significantly (39.2% error increase when removed) while reducing feature count by 59%. Under fault injection, HELM-ECS maintains exceptional robustness with average degradation of 11.8% compared to 26.5-42.3% for baselines. The model achieves 91.3% peak detection rate, critical for preventing resource exhaustion in production environments.

8. Conclusions

HELM-ECS demonstrates that large language models can effectively orchestrate complex ensemble learning for cloud infrastructure monitoring. By combining semantic workload understanding with augmented time series decomposition and hierarchical XGBoost, the framework achieves state-of-the-art performance (5.67% MAPE) while reducing feature dimensionality by 68%. The exceptional fault injection robustness and real-time inference capability (23.4ms) establish HELM-ECS as a practical solution for production cloud environments.

References

- Zhu, Y.; Liu, Y. LLM-NER: Advancing Named Entity Recognition with LoRA+ Fine-Tuned Large Language Models. In Proceedings of the 2025 11th International Conference on Computing and Artificial Intelligence (ICCAI); 2025; pp. 364–368. [Google Scholar] [CrossRef]

- Guo, Y.; Yu, Y. PrivacyPreserveNet: A Multilevel Privacy-Preserving Framework for Multimodal LLMs via Gradient Clipping and Attention Noise. Preprints 2025. [Google Scholar] [CrossRef]

- Zeng, F.; Gan, W.; Wang, Y.; Yu, P.S. Distributed training of large language models: A survey. Natural Language Processing Journal 2025, p. 100174.

- Shaikh, M.B.; Chai, D.; Islam, S.M.S.; Akhtar, N. Multimodal fusion for audio-image and video action recognition. Neural Computing and Applications 2024, 36, 5499–5513. [Google Scholar] [CrossRef]

- Fu, J.; Fu, Y.; Xue, H.; Xu, Z. TMFN: a text-based multimodal fusion network with multi-scale feature extraction and unsupervised contrastive learning for multimodal sentiment analysis. Complex & Intelligent Systems 2025, 11, 133. [Google Scholar]

- Chen, X. Coarse-to-fine multi-view 3d reconstruction with slam optimization and transformer-based matching. In Proceedings of the 2024 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML). IEEE, 2024; pp. 855–859. [Google Scholar]

- Nawrocki, P.; Osypanka, P.; Posluszny, B. Data-driven adaptive prediction of cloud resource usage. Journal of Grid Computing 2023, 21, 6. [Google Scholar] [CrossRef]

- Guan, S. Predicting Medical Claim Denial Using Logistic Regression and Decision Tree Algorithm. In Proceedings of the 2024 3rd International Conference on Health Big Data and Intelligent Healthcare (ICHIH); 2024; pp. 7–10. [Google Scholar] [CrossRef]

- Dantas, P.V.; Cordeiro, L.C.; Junior, W.S. A review of state-of-the-art techniques for large language model compression. Complex & Intelligent Systems 2025, 11, 1–40. [Google Scholar]

- Zheng, C.; Zhou, Y. Multi-modal IoT data fusion for real-time sports event analysis and decision support. Alexandria Engineering Journal 2025, 128, 519–532. [Google Scholar] [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).