3.1. Algorithm Based on Truncation Error Analysis

The basic logic of the diffusion ODE is as follows: when the moving distance from step $t$ to the next step is large during inference, poor discretization in the noisy stage is less detrimental than in the less noisy steps. This insight was demonstrated in a previous paper [8] via an experiment that discretizes the inference process with a uniform variance gap between discretized time steps to obtain two arrays. The researchers then calculate the root mean square error (RMSE) between the result of a one-step inference and that of a 200-step inference. The experimental results show that the truncation error increases monotonically as the starting variance decreases.
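The comparison between a one-step and a 200-step inference can be illustrated on a toy problem. The sketch below is not the experiment of [8]: it assumes scalar Gaussian data with unit variance, for which the ideal denoiser is known in closed form, a variance-exploding ODE integrated with Euler steps, and an absolute error on a single sample in place of the RMSE. The names `denoiser`, `euler`, and `local_error` are hypothetical.

```python
S_DATA = 1.0  # assumed standard deviation of the toy Gaussian data


def denoiser(x, sigma):
    # Ideal denoiser E[x0 | x] for x0 ~ N(0, S_DATA^2) and x = x0 + sigma * noise.
    return x * S_DATA**2 / (S_DATA**2 + sigma**2)


def euler(x, sigma_from, sigma_to, n_steps):
    # Integrate dx/dsigma = (x - D(x, sigma)) / sigma with uniform Euler steps.
    step = (sigma_to - sigma_from) / n_steps
    sigma = sigma_from
    for _ in range(n_steps):
        x = x + step * (x - denoiser(x, sigma)) / sigma
        sigma += step
    return x


def local_error(x, sigma_from, sigma_to):
    # Gap between one coarse step and a 200-step reference on the same interval.
    coarse = euler(x, sigma_from, sigma_to, 1)
    reference = euler(x, sigma_from, sigma_to, 200)
    return abs(coarse - reference)


# Same gap of 0.1 in sigma, started from progressively less noisy levels.
errors = {start: local_error(1.0, start, start - 0.1) for start in (2.0, 1.0, 0.5)}
```

In this toy setting the one-step error grows as the starting noise level shrinks, which is the same qualitative trend as the one reported above.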

Specifically, the truncation errors of the DPM++ 1s solver in Equation (12) and of the DPM++ 2s solver are given in [5]. Moreover, the DPM++ 2m solver applies a multistep algorithm that reuses the cached result of the previous step to calculate the second-order term in the Taylor expansion, which avoids additional network evaluations. With this method, its inference budget [5] differs from that of a normal second-order solver [24]; its truncation error is also given in [5]. Although the step size of the multistep algorithm is smaller than that of the single-step method when the inference budget is fixed, the form of its truncation error, compared with that of the 1s solver, makes it unsuitable for few-step inference. A previous study [8] shows that the global truncation error is bounded by an upper bound $E$, which depends on the number of discrete steps $N$, the starting time step, the ending time step, and a constant $C$. This upper bound means that if we discretize the solving process uniformly and the discretization method is not sufficiently precise, the global truncation error is dominated by the less noisy part, which contributes the largest error.
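The difference in inference budget between the multistep and the single-step second-order solvers can be sketched by counting network evaluations. The code below is an illustration under assumptions, not the formulation of Equation (12): it uses a variance-exploding parameterization in which the log-SNR variable is $-\log\sigma$, a toy scalar denoiser for unit-variance Gaussian data, and hypothetical names (`CountingDenoiser`, `dpmpp_2m`, `dpmpp_2s`).

```python
import math


class CountingDenoiser:
    """Toy denoiser for x0 ~ N(0, 1) that counts how often it is evaluated."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, sigma):
        self.calls += 1
        return x / (1.0 + sigma**2)


def dpmpp_2m(x, sigmas, denoise):
    # Multistep second-order update: one evaluation per step, plus a cached output.
    old_denoised, h_last = None, None
    for s_cur, s_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoise(x, s_cur)
        h = math.log(s_cur / s_next)  # step size in lambda = -log(sigma)
        if old_denoised is None:
            d = denoised  # no history yet: fall back to the first-order update
        else:
            r = h_last / h
            d = (1 + 1 / (2 * r)) * denoised - (1 / (2 * r)) * old_denoised
        x = (s_next / s_cur) * x - math.expm1(-h) * d
        old_denoised, h_last = denoised, h
    return x


def dpmpp_2s(x, sigmas, denoise):
    # Single-step second-order update: an extra evaluation at the midpoint in lambda.
    for s_cur, s_next in zip(sigmas[:-1], sigmas[1:]):
        h = math.log(s_cur / s_next)
        s_mid = math.sqrt(s_cur * s_next)
        denoised = denoise(x, s_cur)
        x_mid = (s_mid / s_cur) * x - math.expm1(-h / 2) * denoised
        denoised_mid = denoise(x_mid, s_mid)
        x = (s_next / s_cur) * x - math.expm1(-h) * denoised_mid
    return x


sigmas = [10.0, 5.0, 2.0, 1.0, 0.5]  # illustrative noise levels
multi, single = CountingDenoiser(), CountingDenoiser()
x_multi = dpmpp_2m(1.0, sigmas, multi)
x_single = dpmpp_2s(1.0, sigmas, single)
```

On the same grid the multistep variant spends one evaluation per step and the single-step variant spends two, so under a fixed budget the multistep solver can afford twice as many, and therefore smaller, steps.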

Based on this observation, the authors of [8] propose the following discretization method:

Equation (15) controls the variance schedule via $p$: as $p$ increases, the step size increases in the noisier part and decreases in the less noisy part. Moreover, according to the observation in [26], for the parameter choice considered there, the fourth-to-last step corresponds to the first perturbation steps and still consumes part of the inference budget. Considering that the U-Net has 860 million parameters, and given the level of variance at this point, performing four-step ODE inference here is illogical. Moreover, the $\beta$-VAE used for decoding is also trained with a small KL-term constraint, which enables it to handle the noise in the latent variable.
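The effect of $p$ can be checked numerically. The sketch below assumes that Equation (15) is the schedule of Karras et al. [8], which interpolates linearly in $\sigma^{1/p}$ between the largest and smallest noise levels; the function names and the values of `n`, `sigma_min`, and `sigma_max` are illustrative assumptions rather than settings taken from this paper.

```python
def karras_sigmas(n, sigma_min, sigma_max, p):
    # Interpolate linearly in sigma^(1/p), then raise the result back to the power p.
    lo, hi = sigma_min ** (1 / p), sigma_max ** (1 / p)
    return [(hi + i / (n - 1) * (lo - hi)) ** p for i in range(n)]


def step_sizes(sigmas):
    # Positive gaps between consecutive (decreasing) noise levels.
    return [a - b for a, b in zip(sigmas[:-1], sigmas[1:])]


uniform = karras_sigmas(10, 0.002, 80.0, p=1)  # p = 1 gives a uniform grid
steep = karras_sigmas(10, 0.002, 80.0, p=7)    # larger p concentrates steps near sigma_min

first_uniform, last_uniform = step_sizes(uniform)[0], step_sizes(uniform)[-1]
first_steep, last_steep = step_sizes(steep)[0], step_sizes(steep)[-1]
```

With the larger $p$, the first step (noisy part) is longer and the last step (less noisy part) is shorter than on the uniform grid, in line with the description above.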

Based on that observation, one study [26] proposes another discretization method:

For the chosen parameters, the step size in both the noisy part and the less noisy part is larger than that in Karras's original method.

Furthermore, we should consider the meaning of the score function by rewriting Equation (6) as follows:

which means that the score function points to the average of all possible images that could have produced this noisy image. Naturally, one can surmise that this average is close to the clean image itself in the least noisy stage. In this situation, the inference task can be approximated by a pure denoising task, and no further inference is needed if we can modify the U-Net to perform the denoising task without additional training.
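The claim that the score points to an average of candidate images, and that this average collapses onto a single image at low noise, can be illustrated with a toy dataset. The sketch assumes a noise model of the form noisy = clean + sigma × Gaussian noise and a uniform prior over three scalar "images"; the function name `posterior_mean` and all values are hypothetical.

```python
import math


def posterior_mean(x_noisy, data, sigma):
    # E[x0 | x] when x = x0 + sigma * noise and x0 is uniform over `data`.
    logits = [-((x_noisy - d) ** 2) / (2 * sigma**2) for d in data]
    top = max(logits)  # subtract the maximum for numerical stability
    weights = [math.exp(v - top) for v in logits]
    return sum(w * d for w, d in zip(weights, data)) / sum(weights)


data = [-1.0, 0.0, 2.0]  # toy "dataset" of three scalar images
x_noisy = 1.8

blurry = posterior_mean(x_noisy, data, sigma=50.0)  # very noisy stage
sharp = posterior_mean(x_noisy, data, sigma=0.05)   # least noisy stage

# Under this noise model the score is (posterior mean - x) / sigma^2.
score_sharp = (sharp - x_noisy) / 0.05**2
```

At the high noise level the posterior mean sits near the dataset average, whereas at the low noise level it coincides with the nearest data point, so the prediction is effectively a plain denoising result.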

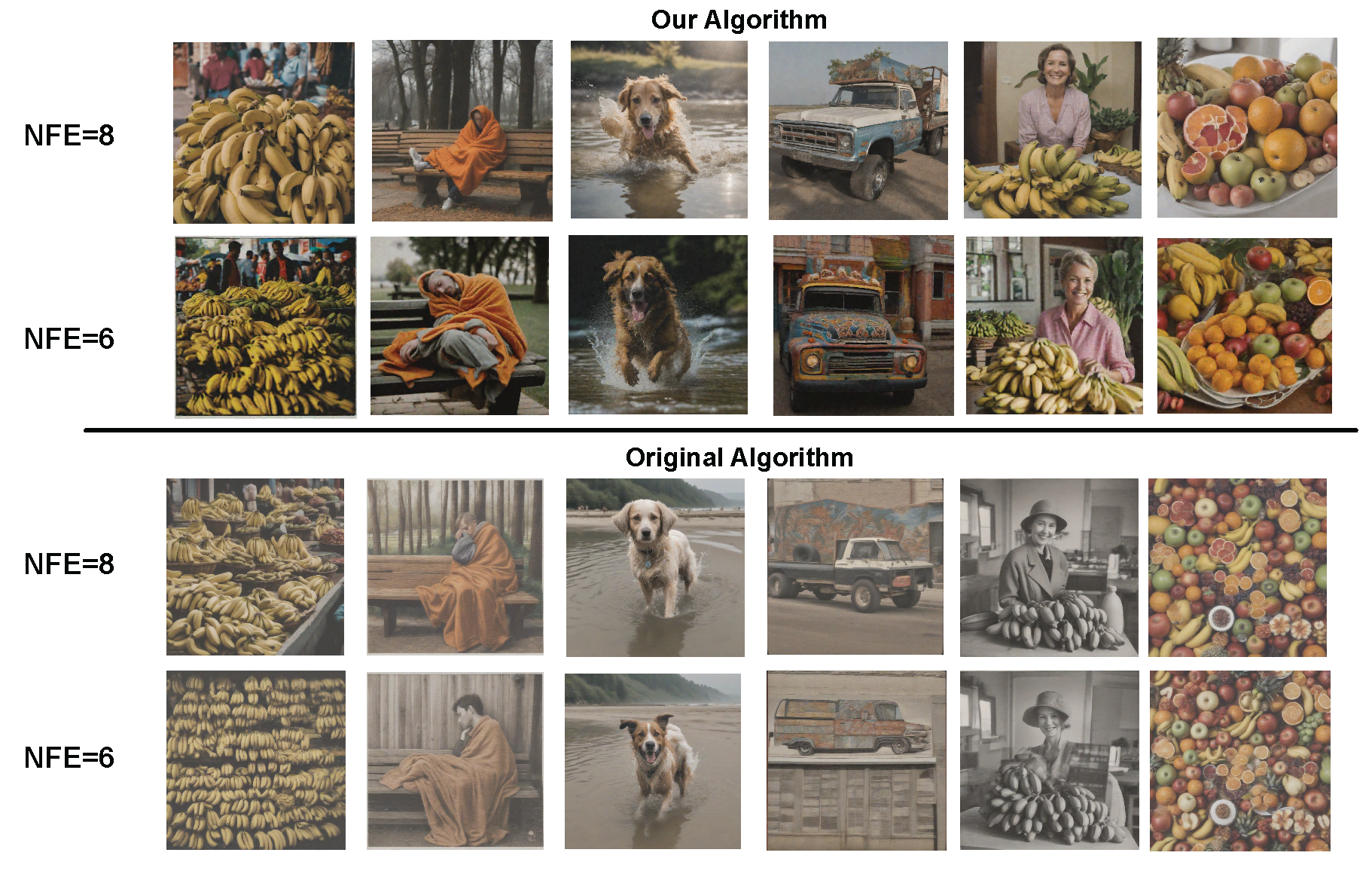

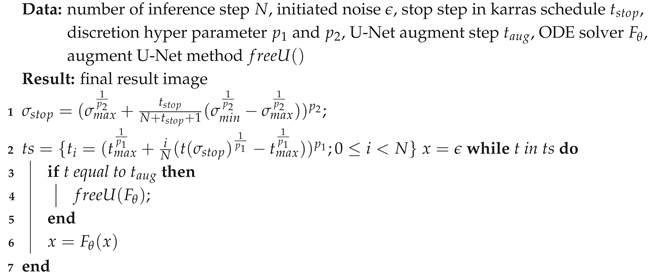

Based on the aforementioned idea and the experimental results in Section 4, we propose the following discretization method, which aims to correct the output in the less noisy stage:

where

is defined via the following formula:

which is not zero, as the decode

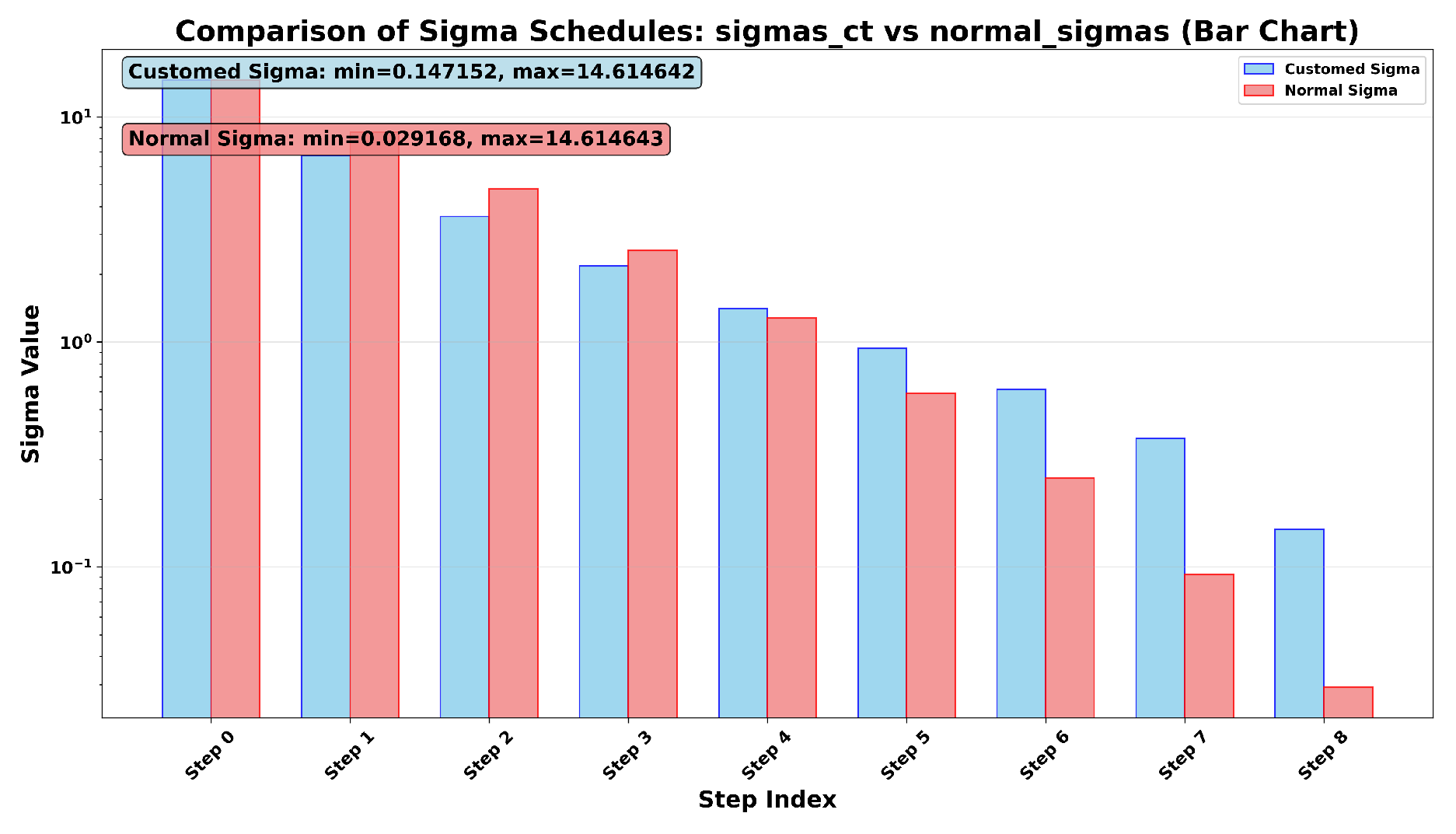

-VAE trained with a small KL-term constraint can handle a small amount of noise, and this setting can reduce the one-step inference budget. The visual different between our discrete method and the method proposed by the Karras’ paper is in

Figure 2

Additionally, consider Equation (17): replacing the averaged prediction with the final image means removing the blur that the averaging causes, which can be achieved by modifying the skip connections and the backbone features of the U-Net. A previous study [32] adds a scalar to the backbone features and fast Fourier transform (FFT) and inverse fast Fourier transform (iFFT) modules to the skip connections. Scaling the backbone features enhances the denoising capability, while filtering the skip connections with the FFT and iFFT prevents further smoothing and adds details by rescaling the part of the noisy feature map that lies below a particular frequency. The decorator is applied only to the first two skip connections, with one pair of scaling factors for the backbone features and another pair for the FFT and iFFT modules.
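The two operations of the decorator can be sketched in one dimension. This is a simplified illustration, not the implementation of [32]: real feature maps are multi-channel and two-dimensional, and the names `b`, `s`, and `cutoff` as well as their values are assumptions made for the example. A naive discrete Fourier transform stands in for the FFT and iFFT modules.

```python
import cmath


def dft(signal, inverse=False):
    # Naive discrete Fourier transform; adequate for short illustrative signals.
    n = len(signal)
    sign = 1 if inverse else -1
    out = [
        sum(v * cmath.exp(sign * 2j * cmath.pi * k * i / n) for i, v in enumerate(signal))
        for k in range(n)
    ]
    return [v / n for v in out] if inverse else out


def scale_low_frequencies(skip, cutoff, s):
    # Multiply the bins with |frequency| <= cutoff by s; leave the others untouched.
    n = len(skip)
    spectrum = dft(skip)
    for k in range(n):
        if min(k, n - k) <= cutoff:
            spectrum[k] *= s
    return [v.real for v in dft(spectrum, inverse=True)]


def scale_backbone(backbone, b):
    # Uniform rescaling of the backbone feature.
    return [b * v for v in backbone]


skip = [3.0 + (-1.0) ** i for i in range(8)]  # smooth offset plus fine detail
filtered = scale_low_frequencies(skip, cutoff=1, s=0.5)
unchanged = scale_low_frequencies(skip, cutoff=1, s=1.0)
boosted = scale_backbone([1.0, 2.0], b=1.2)
```

In this example the smooth offset of the skip feature is halved while the alternating detail keeps its amplitude, which is the sense in which the filter shifts the balance toward detail.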

Also, consider that a normal ResNet [33] can be viewed as an ODE [34] by using the following formula:

where

is the feature output in layer

t.
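The correspondence between residual layers and an ODE can be made concrete with a small sketch. It assumes the standard residual update, in which each layer adds the output of a residual branch to its input, and it uses a hypothetical scalar branch `f`; neither the branch nor the step size comes from this paper.

```python
import math


def f(h):
    # Hypothetical residual branch; any smooth function would serve here.
    return -h


def resnet_forward(h, n_layers, step=1.0):
    # Each residual block adds step * f(h), i.e. one Euler step of dh/dt = f(h).
    for _ in range(n_layers):
        h = h + step * f(h)
    return h


# 1000 thin residual layers covering the interval t in [0, 1].
deep_output = resnet_forward(1.0, n_layers=1000, step=1.0 / 1000)
ode_solution = math.exp(-1.0)  # exact solution of dh/dt = -h at t = 1
```

As the layers become thinner and more numerous, the network output approaches the exact solution of the corresponding ODE.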

The U-Net, as a black box ODE solver, can be regarded as another ODE. Thus, modifying the skip connection and scale backbone feature will further increase its output and the moving distance of the diffusion reverse ODE, which let the start step of using U-Net decorator should be parameterized in 1024 x 1024 image synthesis, as it contributes to the increase of moving distance in the noisy part. This behavior causes the global truncation error in solving ODE, which may let the variables be out of the distribution (OOD). We also visualize the decoded feature in the trajectory of different samplers in

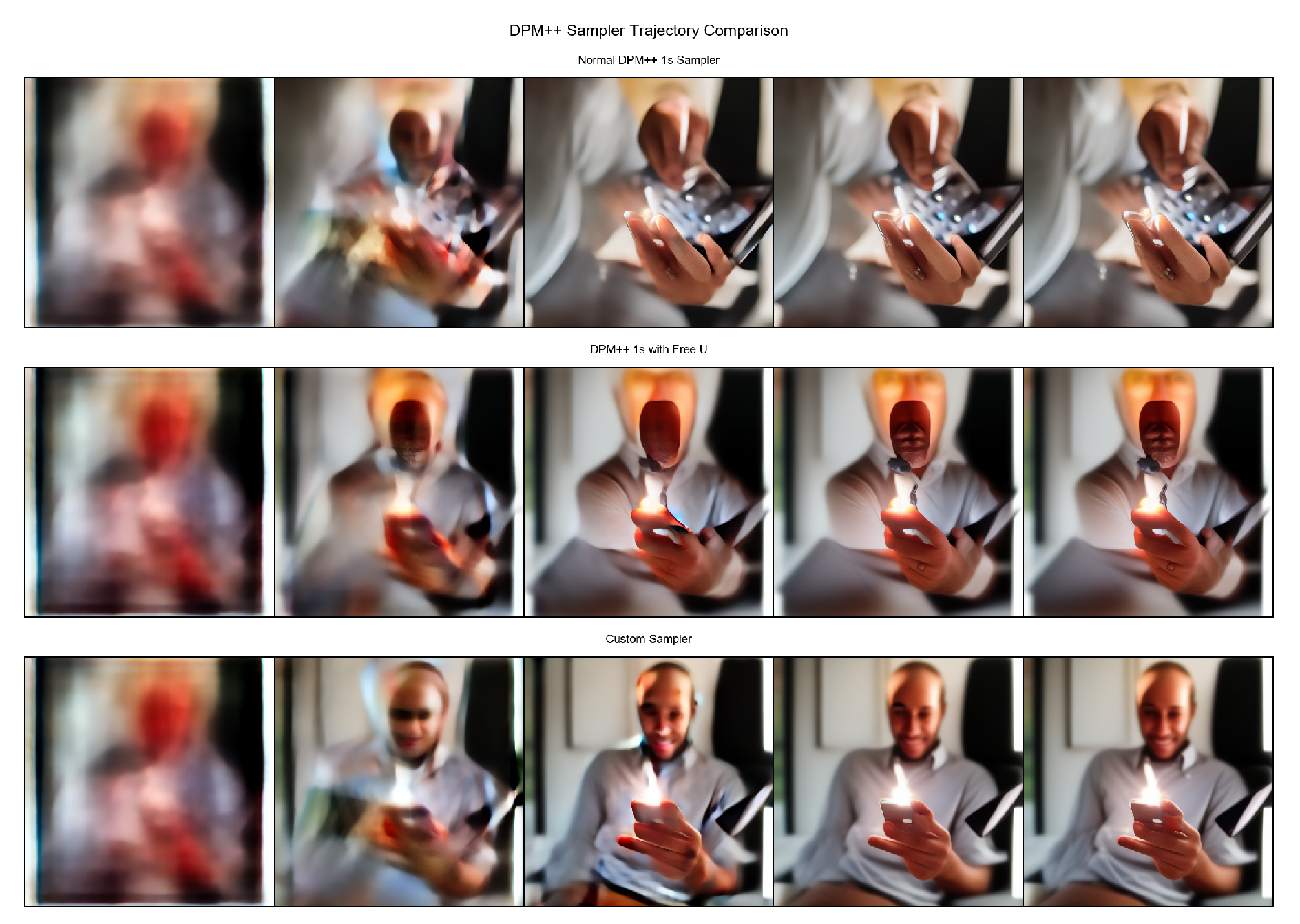

Figure 3.

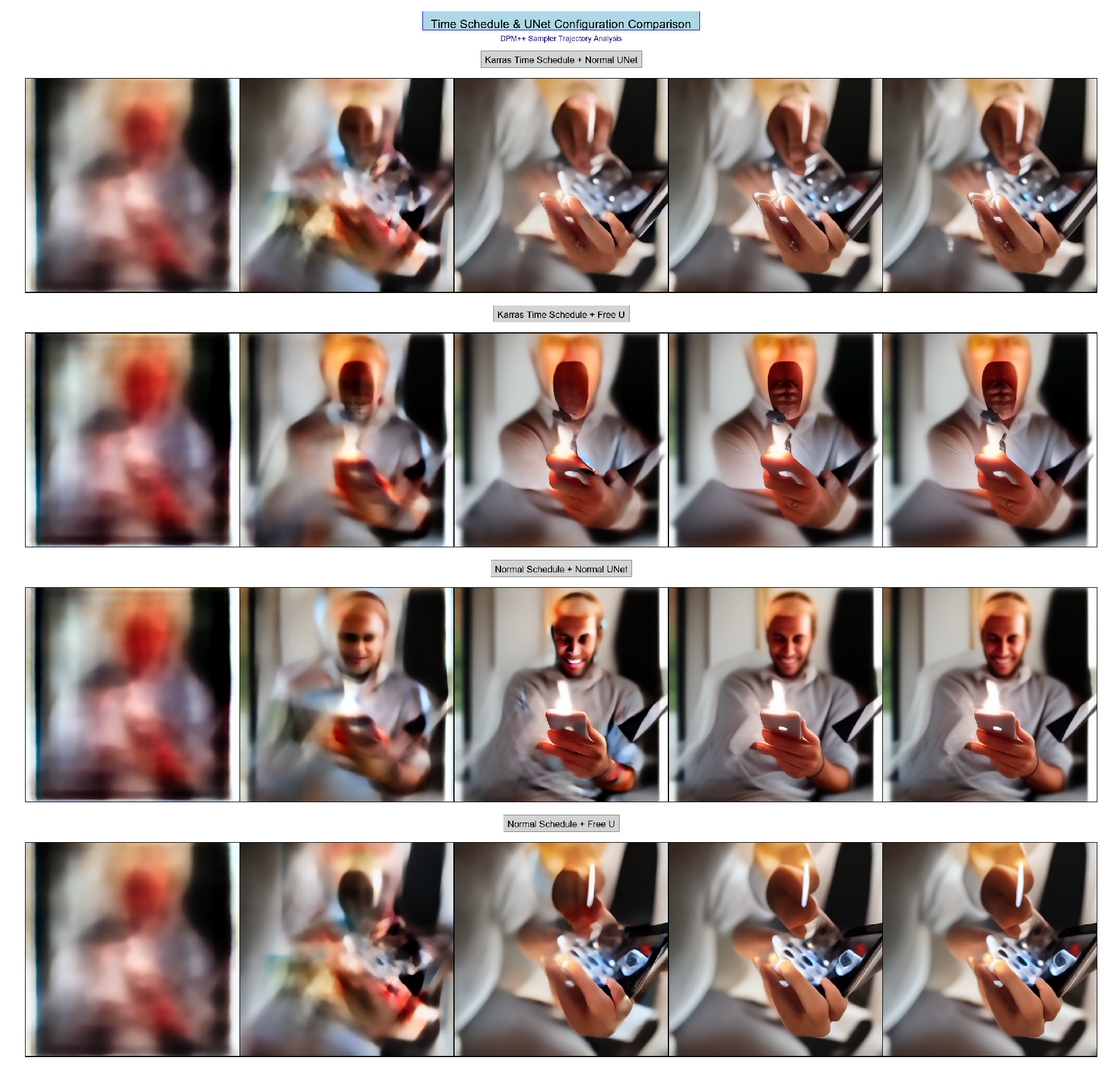

We then visualize the decoded trajectories obtained with different discretization methods under the same DPM++ 1s sampler in Figure 4. The normal time schedule with Free-U generates a trajectory similar to that of Karras' time schedule without Free-U, which supports our previous claim.

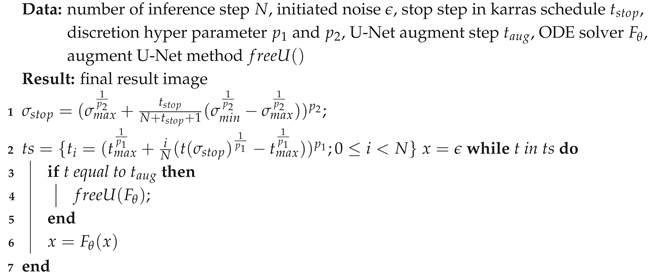

Our algorithm is described in Algorithm 1.

Algorithm 1: Custom Karras scheduler in the common framework of diffusion ODE solving

3.2. Information Theory Analysis of the Different Algorithms

A common point of confusion in the early days of deep learning was how neural networks with so many parameters could still generalize [35]. A normal statistical model uses an extremely limited number of parameters so that it concisely describes the phenomenon and captures the essence of the observed data. For this problem, learning theory [36] claims that limiting the number of functions a model can express helps ensure that the model approaches the best function available to it, and that it also bounds the distance between the function the neural network finally learns and the average of the functions. Generally, a possible function expressed by a model is called a hypothesis, and if the number of input data points is $N$, the number of possible hypotheses is bounded by a function of $N$. According to information theory, for a dataset with data $D$, the typical set that a model can gain access to is characterized in [37]. Considering the amount of data in the typical set and the mutual information between the output and the middle feature of the neural network, we can further bound the model's capacity for potential hypotheses.

Explicitly, we can consider the probability of the middle feature $z$ given the output $y$, which provides a match between $y$ and $z$. Information bottleneck theory [38] relates the mutual information between $Y$ and $Z$ to the volume of $Y$ projected onto $Z$. Hence, the mutual information further constrains the possible hypotheses. As the mutual information bounds the distance between the learned hypothesis and the average hypothesis, generative adversarial networks (GANs) [39], which suffer from mode collapse [40] and generate only a subset of the dataset, can benefit from an added mutual information constraint [41]. One study [26] shows that this phenomenon also appears in diffusion distillation, which consistently changes the score function and triggers an increase in mutual information. The basic idea of the proof in that study is related to the $\beta$-variational autoencoder ($\beta$-VAE) [30]: when $\beta$ is decreased so that the VAE generates high-quality images, the upper-bound constraint on the mutual information is loosened, and the peak signal-to-noise ratio (PSNR) of the encoding feature $Z$ increases. From this perspective, the diffusion model can be regarded as a hierarchical VAE [4], while progressive distillation [15] increases the mutual information by letting the model directly use a noisy feature, obtained by solving the ODE, to replace the original output. Hence, the PSNR also increases. A recent example of diffusion distillation is SDXL-Lightning [12], which adds a GAN loss to progressive distillation training, and its FID remains similar to that of normal progressive distillation.
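The link between a higher PSNR of the encoding feature and a larger mutual information can be illustrated with a toy Gaussian channel. This is a sketch under stated assumptions, not the proof in [26]: it assumes that the feature equals the output plus independent Gaussian noise, and the function names and numerical values are hypothetical.

```python
import math


def gaussian_mutual_information(var_signal, var_noise):
    # I(Y; Z) in nats for Z = Y + noise, with independent Gaussian Y and noise.
    return 0.5 * math.log(1.0 + var_signal / var_noise)


def psnr(peak, var_noise):
    # PSNR in dB, using the noise variance as the mean squared error.
    return 10.0 * math.log10(peak**2 / var_noise)


noisy_mi = gaussian_mutual_information(var_signal=1.0, var_noise=1.0)
clean_mi = gaussian_mutual_information(var_signal=1.0, var_noise=0.01)
noisy_psnr = psnr(peak=1.0, var_noise=1.0)
clean_psnr = psnr(peak=1.0, var_noise=0.01)
```

In this simplified setting, reducing the noise in the feature raises the PSNR and the mutual information together.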

However, it is intuitive that changing the direction of the score function so that it points to the final result, rather than to the centroid of the possible images, will also affect the mutual information, even if the PSNR of the output does not increase. We provide further analysis of this point.

The diffusion ODE can be regarded as a Markov process

, in which

follows the input of the diffusion model predicted by the U-Net, and we have the following Equation (23):

in which

is the real

sample from the dataset.

In

-VAE, when given the random variable

Z, the output

Y is assumed to be sampled from a Gaussian distribution using

Y as its mean, and abating the

makes the middle feature

Z more similar to

Y while enhancing the log likelihood of the final synthesis. Moreover, Equation (23) shows that if we modify the

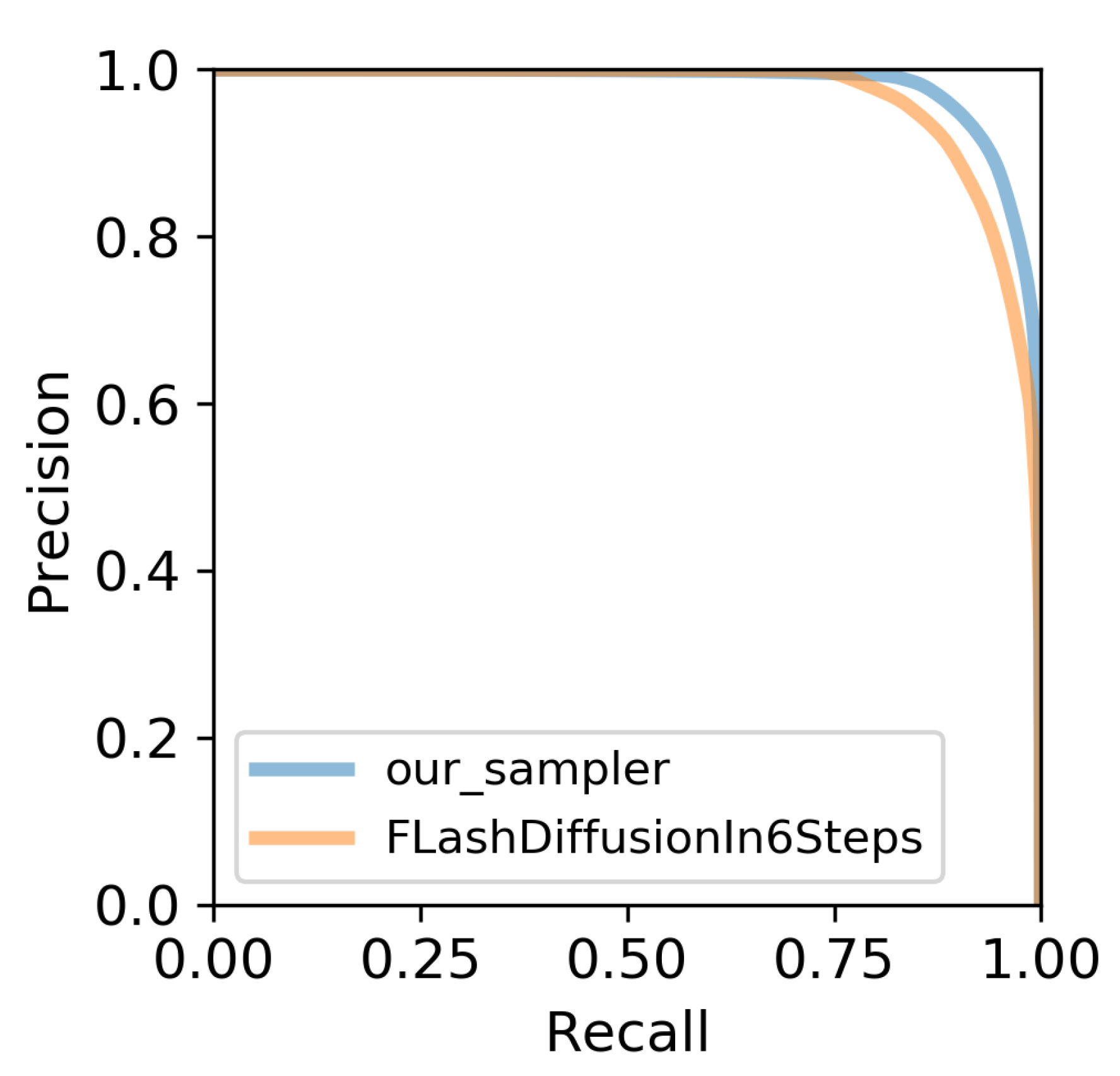

to make it similar to the final output to enhance the log-likelihood by rectifying the trajectory, then the upper bound of the mutual information will also increase. Most distillation methods that do not increase the PSNR of the output will still face this problem. We evaluate the Precision and Recall of Distribution (PRD)[

42] of flash diffusion and our sampler to further validate this claim.