1. Introduction

The Foreign Exchange (FOREX) market, a global behemoth in financial exchange, facilitates the continuous, 24/5, trade of currencies across diverse participants and time zones, thereby acting as a vital conduit for international trade, investment, and risky speculation.

The FOREX ecosystem involves a multitude of actors, including multinational corporations engaged in cross-border trade, governments making strategic interventions, central banks shaping monetary policies, financial institutions providing liquidity and market-making services, and individual traders venturing into the online arena. Despite its complexity and inherent volatility, the FOREX market attracts traders and investors due to features such as short selling and leverage. Short selling is the act of selling a currency that the agent does not currently own, with the commitment to buy it back in the near future.

Exchange rates, reflecting the relative value of one currency compared to another, can be classified based on their regime (fixed or floating) and type (nominal or real). Fixed exchange rates, dictated by governments or central banks, remain constant, while floating rates are determined by supply and demand. Nominal exchange rates represent the current market value of a currency pair, while real exchange rates account for inflation. The flexibility of floating rates allows currencies to adapt to changing economic conditions, facilitating trade and investment flows, promoting price stability, and maintaining external balance. The exchange rates of major reserve currencies such as the US dollar (USD), the Euro (EUR), the Japanese Yen (JPY), and the British Pound Sterling (GBP) hold significant importance in the global economic landscape due to their crucial role in international trade and financial transactions.

Accurate exchange rate forecasts are crucial for various market participants, including traders, investors, businesses, and policymakers. These predictions inform decisions about currency trades, asset allocation, and risk management, ultimately impacting portfolio performance. Businesses and policymakers rely on these models to plan and execute international transactions, manage foreign currency exposure, and mitigate risks associated with currency fluctuations. Additionally, they play a role in formulating effective monetary and fiscal policies aimed at achieving macroeconomic stability, managing inflation, and fostering sustainable economic growth.

Traditionally, exchange rate forecasting relied on fundamental and technical analysis. Fundamental analysis involves studying economic indicators like interest rates, inflation, GDP growth, trade balances, and even geopolitical developments to understand the underlying factors driving currency movements. Technical analysis, on the other hand, focuses on historical price data, chart patterns, and technical indicators to identify trends and predict future price movements. Expert opinions, intuition, and qualitative judgments are also sometimes used, although their success rate varies dramatically.

In recent years, a paradigm shift has occurred, with advanced computational techniques like machine learning (ML) and artificial intelligence (AI) making inroads into exchange rate forecasting. These approaches leverage big data analytics, deep learning, and neural networks to process vast amounts of financial data, identify complex patterns, and generate precise predictions. Deep learning models have proven particularly effective, demonstrating superior predictive capabilities compared to traditional methods [1]. Their ability to capture non-linear relationships, temporal dependencies, and high-dimensional features inherent in financial time series data sets them apart. Classic architectures like Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN) nevertheless have limitations. For instance, CNN pooling layers disregard crucial part-whole correlations and lose valuable data, while RNNs are prone to vanishing or exploding gradients during backpropagation.

Addressing these challenges, Vaswani et al. (2017) [29] introduced the Transformer, a novel deep learning model. This model, which originally excelled in natural language processing (NLP) tasks, replaces traditional CNN and RNN frameworks with an attention mechanism. Unlike the sequential structure of RNN and LSTM, the Transformer’s self-attention mechanism can be trained in parallel and requires less complexity to gather global information.

While Transformer architectures have revolutionized NLP tasks such as machine translation and language modeling, financial institutions are exploring their ability to tackle the complexities of financial time series forecasting. This integration holds immense potential due to the inherent challenges associated with predicting financial market behavior. Additionally, advancements in computing technology and data availability have facilitated the widespread adoption of Transformer-based models by academic researchers seeking an edge in currency trading and investment strategies.

Advancements in technology alone are not a panacea, especially when considering the recent complexities in the FOREX market, such as the fluctuations caused by the COVID-19 pandemic and the Russia-Ukraine conflict. These events posed significant challenges for traders in predicting currency pair movements, as economic factors, sentiment, and geopolitical developments all played a significant role in shaping exchange rates.

Inspired by the success of Transformers in modeling sequential data in NLP, the same concept is employed in this study to forecast the evolution of exchange rates in the FOREX market. While recent studies have explored their use for trading purposes [2,3,4,5], we focus on the use of Transformers for next-day closing price prediction, which to the best of our knowledge is the first effort of its kind in the FOREX market.

In their analysis, Fisher et al. [4] examine Transformer models with time embeddings for FX-Spot forecasting, comparing results with traditional models like LSTM for major currency pairs (EUR/USD, USD/JPY, GBP/USD) from November 2020 to January 2022. Their method includes both univariate and multivariate models, utilizing historical prices along with technical and fundamental data. Findings reveal that Transformers significantly outperform LSTM. Transformers demonstrated strength in noisy, high-frequency environments, proving effective for complex financial series.

Gradzki & Wojcik [3] focus on high-frequency Forex trading with Transformers, comparing them to ResNet-LSTM across six currency pairs and five time intervals (60 to 720 minutes). This study employs a Transformer architecture for forecasting, enhanced by technical analysis for improved accuracy. The findings indicate that Transformers slightly outperform ResNet-LSTM, especially in longer intervals (480, 720 minutes). However, transaction costs significantly impact performance in shorter intervals (e.g., 60 minutes), underscoring the necessity for realistic backtesting.

The authors of [5] explore a Transformer Encoder model for minute-level Forex trading, focusing specifically on EUR/USD and GBP/USD. The model integrates Exponential Moving Averages (EMA) with varying smoothing factors to better capture price trends. Trained on data from July 2023, it achieves a cross-entropy loss below 0.2, indicating strong predictive accuracy. However, profitability is limited by high-frequency trading costs, as spreads can negate gains, demonstrating that real-world outcomes are significantly affected by transaction costs.

In a significant contribution, Kantoutsis et al. [2] present the Momentum Transformer, an attention-based deep learning model that outperforms traditional momentum and mean reversion strategies, as well as LSTM-based models. By leveraging attention mechanisms, it captures long-term dependencies and adapts to market shifts, such as those seen during the SARS-CoV-2 crisis. Back-testing from 1995 to 2020 reveals superior performance, particularly in recent years and during significant market events. While the hybrid Temporal Fusion Transformer (TFT) performed best overall, pure attention models also demonstrated strong performance. The study suggests an ensemble approach for improved results across asset classes and highlights the model’s robustness in commodities trading.

In our approach, we test the forecasting ability of our Transformer-based model, called EXPERT, on nine currency pairs (EUR/USD, AUD/CAD, EUR/AUD, EUR/CAD, GBP/AUD, NZD/USD, USD/JPY, USD/MXN, and BRL/USD) and evaluate it against six widely used forecasting models: Stochastic Gradient Descent (SGD), Bagging Regression (BGR), Extreme Gradient Boosting (XGB), Random Forests (RF), the Linear Regressor, and the Long Short-Term Memory (LSTM) model.

Each dataset for these nine currency pairs was used individually in every forecasting model, following the classic training-testing scheme. The training set is used to fit the parameters of each model, and the performance of the trained models is evaluated on the testing set. All models predict the closing price for the next day. The predicted values are then compared with the actual values.

The paper is organized as follows. Section 2 reviews related work, while Section 3 presents the collected dataset. Every aspect of the EXPERT model is analyzed in Section 4. The alternative forecasting models are briefly introduced in Section 5. Section 6 presents the evaluation metrics used. The forecasting performance of the EXPERT model against the competition is presented in Section 7. In the same section, we present the performance of the EXPERT model for larger forecasting horizons and evaluate its performance using the Multiple Comparisons with the Best method on five samples from our dataset. In Section 8, we evaluate the success of a Transformer-based automatic trading system against other similar systems, and in Section 9, we conclude the paper.

2. Related work

A systematic review of the existing literature was conducted to gain a comprehensive understanding of machine learning prediction models in exchange rates [6,7,8,10].

The improvements reported for neural networks in the paper by Islam et al. [10] focus on a hybrid GRU-LSTM model that outperforms standalone GRU and LSTM models, as well as a simple moving average (SMA) model, across several performance metrics, including the MSE, RMSE, MAE, and $R^2$ score. Comparisons were made against these benchmarks to demonstrate the efficacy of the proposed model. The models were tested on historical foreign exchange data for four major currency pairs: EUR/USD, GBP/USD, USD/CAD, and USD/CHF, using a data set that spans from January 1, 2017, to June 30, 2020. The hybrid model was specifically applied to predict the closing prices of these currency pairs for both 10-minute and 30-minute timeframes, highlighting its superior predictive capabilities.

In their study on exchange rate prediction, Panda et al. [8] reveal that a hybrid GRU-LSTM model effectively predicts future closing prices in the FOREX market. Applied to major currency pairs (EUR/USD, GBP/USD, USD/CAD, USD/CHF), this model outperformed standalone GRU, LSTM, and simple moving average (SMA) models in terms of MSE, RMSE, and MAE for 10-minute intervals, and excelled with GBP/USD and USD/CAD in 30-minute intervals. It also achieved a higher $R^2$ score, indicating a lower prediction risk. Using a dataset of closing prices from January 1, 2017, to June 30, 2020, the model showed strong predictive capabilities, though it struggled during sudden price fluctuations. Future enhancements are planned, including applications to more currency pairs and shorter timeframes.

The key findings of the paper [7] emphasize the significant advantages of machine learning algorithms over traditional stochastic models in financial market forecasting. After surveying more than 150 relevant articles, the study demonstrates that machine learning algorithms generally outperform stochastic methods by effectively capturing nonlinear dynamics in financial time series across various asset classes and market geographies. Recurrent neural networks (RNNs) exhibit superior performance compared to feedforward neural networks and support vector machines, likely because of their ability to leverage temporal dependencies.

The paper of Seze et al. [6] reviews significant advancements in deep learning (DL) models for financial time series forecasting, showcasing their superiority over traditional machine learning approaches. Long Short-Term Memory (LSTM) networks are favored for their effectiveness in handling time-varying data and capturing temporal dependencies. More than half of the studies surveyed focus on recurrent neural networks (RNNs) for price trend predictions, while deep multilayer perceptrons (DMLPs) are often used for classification tasks. In addition, there is increasing interest in deep reinforcement learning (RL) for algorithmic trading, offering new opportunities to integrate behavioral finance insights.

Fletcher [9] demonstrates that machine learning techniques can theoretically be applied to make accurate currency predictions. The findings indicate that it is possible to forecast the direction of movement (up, down, or within the bid-ask spread) of the EUR/USD pair between 5 and 200 seconds into the future, with accuracy rates ranging from 90% to 53%, respectively. Additionally, the study shows that it is feasible to predict price turning points for a basket of currencies in a way that can be profitably exploited.

Goncu [20] applies machine learning regression methods (Ridge, decision tree, support vector, and linear regression) to predict monthly average exchange rates, focusing on the USD/TRY (Turkish Lira). Key macroeconomic factors, such as domestic money supply, interest rates, and the prior month’s exchange rate, are used for prediction. Among the tested models, Ridge regression delivers the most accurate forecasts, with relative errors under 60 basis points. Out-of-sample back-testing over various time periods confirms Ridge’s superior performance, suggesting it effectively balances accuracy and overfitting. The model can also be used for scenario analysis, helping policymakers and investors assess the impact of interest rate changes on exchange rates.

Research by Qi et al. [18] introduces event-driven features to improve Forex trading predictions by identifying trend changes and retracement points for optimal trade entry. The authors tested deep learning models, including LSTM, BiLSTM, and GRU, against a baseline RNN, with GRU and BiLSTM outperforming the others across various currency pairs. The best model, GRU with 60 timesteps for EUR/GBP, achieved an RMSE of 1.50x

and a MAPE of 0.12%, surpassing previous studies. These findings show that the proposed models, combined with event-driven features, can provide accurate, low-risk trading strategies.

More advanced models have also been proposed by Islam & Hossain [19], who introduced a network combining the GRU with the LSTM for improved FOREX rate prediction.

3. The Dataset

In this study, our objective is twofold: a) to create a Transformer-based model (EXPERT) for forecasting exchange rates and b) to test it against a set of well-known forecasting methodologies. To ascertain the overall most accurate forecasting model, we must test the models on a rich and diverse dataset that includes exchange rates with different characteristics. Testing our models on multiple pairs helps to mitigate the risk of overfitting to the specific market characteristics present in a single currency pair, increasing the model’s reliability and applicability to real-world trading scenarios. The dataset was compiled using the Metatrader application and comprises two major, five minor, and two exotic exchange rates, spanning the period from January 1999 to March 2022 for the weekdays. Each entry in the dataset contains the Open, High, Low, and Close values.

The dataset was divided into training, validation, and testing sets. The first 86% of the data was used for model fitting, with an internal validation split of 20%, meaning that 68.8% of the total dataset was used for training and 17.2% for validation. The remaining 14%, our testing subset, was held out as out-of-sample data to evaluate the models’ ability to generalize to new, unseen data. A sliding window approach was applied to create sequences for both the training and testing phases, allowing the model to capture temporal dependencies. This data-splitting strategy provided a robust evaluation of the model’s performance across both the training and out-of-sample data.
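The split and the sliding window described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' code: the helper names `chronological_split` and `sliding_windows` are hypothetical, the validation block is assumed to be the tail of the fitting portion, and the internal validation fraction of 0.20 is chosen because it reproduces the 68.8% / 17.2% proportions quoted in the text.

```python
def chronological_split(series, fit_frac=0.86, val_frac=0.20):
    """Split a time-ordered list into train / validation / test parts.

    No shuffling is performed, so the test part always lies in the future
    relative to the data used for fitting (assumption: the validation block
    is the most recent slice of the fitting portion).
    """
    n_fit = round(len(series) * fit_frac)   # first 86% used for fitting
    n_val = round(n_fit * val_frac)         # internal validation slice
    train = series[: n_fit - n_val]
    val = series[n_fit - n_val : n_fit]
    test = series[n_fit:]                   # out-of-sample remainder
    return train, val, test


def sliding_windows(series, window):
    """Build (inputs, targets): each input holds `window` consecutive values
    and its target is the value that immediately follows them."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start : start + window])
        targets.append(series[start + window])
    return inputs, targets
```

For a series of 1000 observations, `chronological_split` returns parts of length 688, 172, and 140, matching the 68.8% / 17.2% / 14% proportions.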

The currency pairs exchange rates were categorized as major, minor, or exotic based on the European Securities and Markets Authority (ESMA). In this context, the currency pairs in our datasets are classified as:

Major Currency Pairs:

1. EUR/USD (Euro/US Dollar)
2. USD/JPY (US Dollar/Japanese Yen)

Minor Currency Pairs (Cross Currency Pairs):

3. EUR/AUD (Euro/Australian Dollar)
4. EUR/CAD (Euro/Canadian Dollar)
5. AUD/CAD (Australian Dollar/Canadian Dollar)
6. GBP/AUD (British Pound/Australian Dollar)
7. NZD/USD (New Zealand Dollar/US Dollar)

Exotic Currency Pairs:

8. USD/MXN (US Dollar/Mexican Peso)
9. BRL/USD (Brazilian Real/US Dollar)

The major currency pairs are the most widely traded globally. According to ESMA, major currency pairs are pairs comprising any two of the following currencies: the US Dollar (USD), the Euro (EUR), the Japanese Yen (JPY), the British Pound (GBP), and the Canadian Dollar (CAD). All other currencies are considered non-major. Exotic currency pairs involve one major currency and one currency from a smaller or emerging economy; they generally have higher volatility and higher spreads compared to major and minor pairs.

Table 1 provides a summary of the essential descriptive statistics for each currency pair, helping to capture the main characteristics of their price series: minimum and maximum value, mean and standard deviation, first ($Q_1$) and third ($Q_3$) quartile, skewness, and kurtosis.

Table 1.

Descriptive statistics for the currency pairs


| Currency pair | Min | Max | Mean | St.D. | Q1 | Q3 | Skewness | Kurtosis |
|---|---|---|---|---|---|---|---|---|
| AUD/CAD | 0.75 | 1.07 | 0.95 | 0.05 | 0.92 | 1.00 | -0.46 | 0.18 |
| EUR/AUD | 1.16 | 2.08 | 1.54 | 0.15 | 1.44 | 1.64 | 0.04 | 0.52 |
| EUR/CAD | 1.21 | 1.72 | 1.46 | 0.09 | 1.40 | 1.53 | -0.17 | -0.37 |
| EUR/USD | 0.82 | 1.59 | 1.19 | 0.15 | 1.10 | 1.31 | -0.13 | -0.33 |
| GBP/AUD | 1.44 | 2.64 | 1.81 | 0.21 | 1.67 | 1.91 | 0.65 | 0.30 |
| NZD/USD | 0.39 | 0.88 | 0.66 | 0.11 | 0.61 | 0.74 | -0.63 | -0.25 |
| USD/JPY | 75.81 | 134.72 | 107.11 | 12.51 | 102.27 | 116.22 | -0.77 | 0.12 |
| USD/MXN | 9.86 | 25.34 | 15.93 | 3.58 | 12.90 | 19.13 | 0.19 | -1.29 |
| BRL/USD | 0.23 | 0.65 | 0.42 | 0.11 | 0.33 | 0.52 | 0.05 | -1.17 |
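Statistics of the kind reported in Table 1 can be computed along the following lines. This is a sketch under assumptions, since the text does not state which conventions were used: it takes the population standard deviation, moment-based skewness, excess kurtosis (kurtosis minus 3, which would be consistent with the negative values in the table), and quartiles obtained by linear interpolation. The helper name `describe` is hypothetical.

```python
import statistics


def describe(prices):
    """Return descriptive statistics for a list of prices."""
    n = len(prices)
    mean = statistics.fmean(prices)
    std = statistics.pstdev(prices)  # population standard deviation (assumption)
    # Quartiles by linear interpolation between data points (assumption).
    q1, _median, q3 = statistics.quantiles(prices, n=4, method="inclusive")
    # Moment-based skewness and excess kurtosis.
    skew = sum((p - mean) ** 3 for p in prices) / (n * std ** 3)
    kurt = sum((p - mean) ** 4 for p in prices) / (n * std ** 4) - 3.0
    return {
        "min": min(prices), "max": max(prices),
        "mean": mean, "std": std,
        "q1": q1, "q3": q3,
        "skewness": skew, "kurtosis": kurt,
    }
```

A symmetric series has zero skewness under this definition, and a flat-topped distribution yields negative excess kurtosis.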

For each currency pair, a dataset was compiled consisting of the Open, High, Low, and Close values (open is the price at the start of the period, close is the price at the end of the period, high is the highest price traded during the period, and low is the lowest price traded during the period). All time series were normalized to the 0-1 range using the classic MinMax normalization. In every case, lagged values of the four time series were employed to forecast the closing price at the next time instance. The optimal lags for each currency pair were identified through an exhaustive trial-and-error search for lag values up to 20 and can be found in Table 2.
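MinMax normalization maps each value $v$ to $(v - v_{\min}) / (v_{\max} - v_{\min})$. A minimal sketch follows; the function names are hypothetical, and fitting the minimum and maximum on the training portion only is an assumption made here to avoid leaking test-set information, since the text does not say how the bounds were obtained.

```python
def fit_minmax(train_values):
    """Record the bounds used for scaling (assumed: training data only)."""
    return min(train_values), max(train_values)


def minmax_scale(values, lo, hi):
    """Map values to the 0-1 range defined by the fitted bounds."""
    return [(v - lo) / (hi - lo) for v in values]


def minmax_invert(scaled, lo, hi):
    """Map scaled predictions back to the original price units."""
    return [s * (hi - lo) + lo for s in scaled]
```

Forecasts produced on the scaled series can be passed through `minmax_invert` before error metrics are computed in price units. Values outside the fitted bounds map outside the 0-1 range, which can happen on unseen data.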

4. The EXPERT model

The Transformer model, first introduced by Vaswani et al. [29], revolutionized Natural Language Processing (NLP) by outperforming Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) in tasks like machine translation. This architecture’s key innovation is the self-attention mechanism, which enables the model to capture long term dependencies and analyze input sequences more comprehensively.

In time series forecasting, decision making processes across sectors like finance, retail, and industry often rely on multivariate time series data. Traditionally, statistical models such as Vector Autoregression (VAR) [24] and Autoregressive Moving Average (ARMA) [23] have been used to forecast time series data. Recently, machine learning and particularly deep learning models such as RNNs and CNNs have been explored for this purpose [26,27]. In parallel, the Transformer model was tested as an alternative and promising approach for time series forecasting [25]. Our study lies in the same methodological path.

4.1. Architecture Modifications and Network Structure

The overall architecture for time series forecasting draws inspiration from the Transformer Encoder structure but includes several adjustments, based on a combination of good practices in the literature and insights from studies like [25].

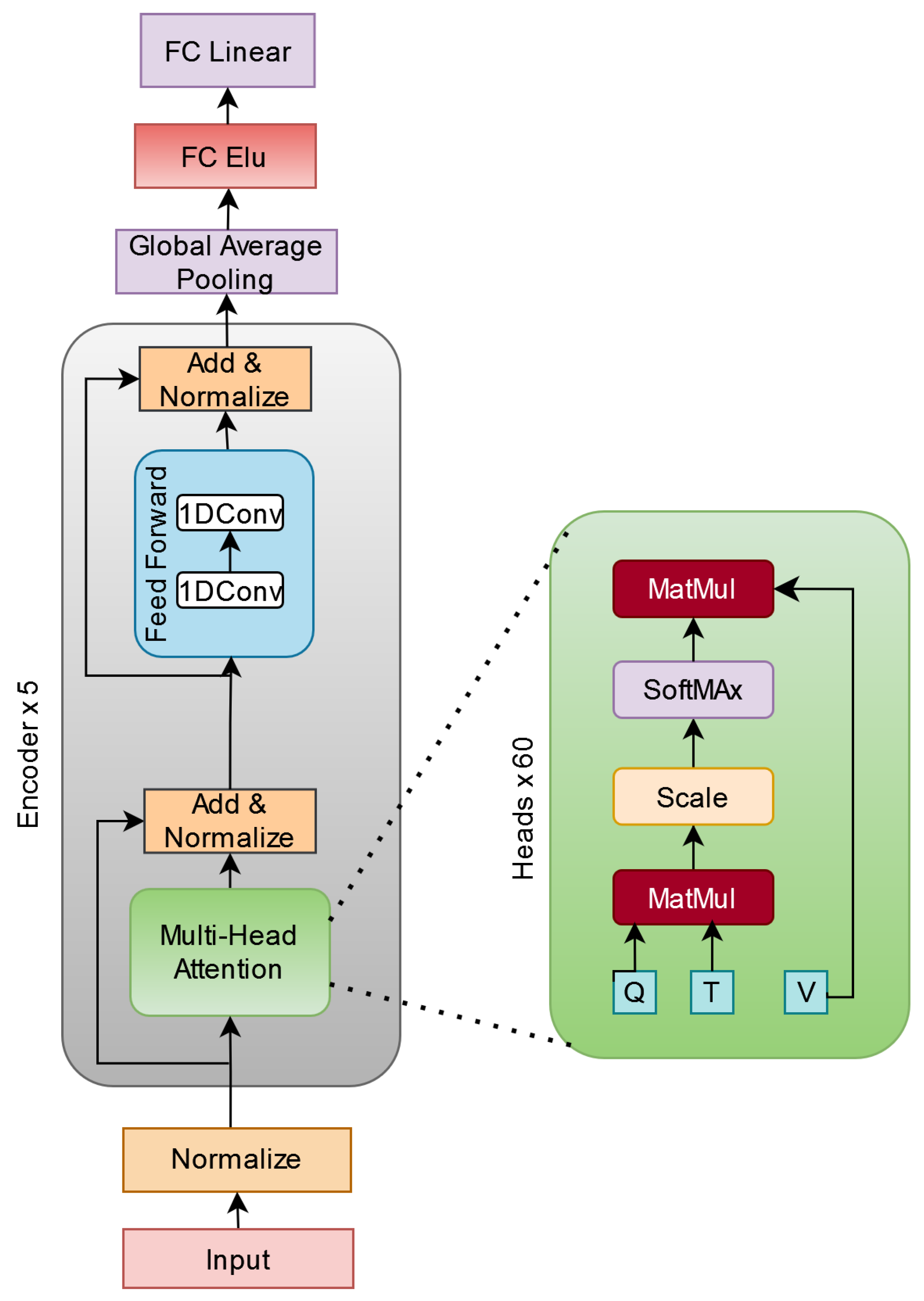

Self Attention and Layer Normalization: Each encoder block starts with layer normalization, a technique that stabilizes the training process by standardizing the input across each layer, ensuring numerical stability and faster convergence. This is followed by multi head self attention, a mechanism that enables the model to focus on different parts of the input sequence simultaneously. Unlike traditional models that process sequences step by step, self attention allows the model to capture diverse temporal patterns by analyzing all positions in the sequence at once. For time series data, the self attention layers are adapted to capture relationships between time points, focusing on temporal dependencies rather than word to word associations, as seen in natural language processing (NLP) models.

Residual Connections and Feedforward Networks: To maintain the flow of important information through the network and address the vanishing gradient problem (where gradients become too small during training, impeding learning), residual connections are employed. These connections add the input of a layer directly to its output, preserving information from earlier layers. After the self attention step, a feedforward neural network (FFN) processes the output, enabling the model to learn complex, nonlinear relationships within the time series. The FFN includes convolutional layers that scan over input data and ReLU (Rectified Linear Unit) activations, which introduce nonlinearity and help the model detect intricate temporal patterns. This combination of techniques draws from the original Transformer model by Vaswani et al. [29] and has been adapted for time series analysis [25].

Regularization Techniques: To prevent overfitting, where the model performs well on training data but poorly on unseen data, dropout regularization is applied. Dropout randomly "turns off" a fraction of neurons during training, forcing the model to learn more robust features. This regularization is used both after the feedforward layers and within the multi layer perceptron (MLP) used for forecasting. By improving the model’s ability to generalize, these techniques enhance its robustness in handling unseen time series data.

A comprehensive discussion of these terms is provided in Section 4.4.

4.2. Output Processing and Forecasting

Once the input sequence has passed through the encoder blocks, the output is aggregated using global average pooling. This operation condenses the sequence into a fixed length vector by summarizing information across all positions, making it easier for the model to focus on key features.

The pooled representation is then fed into an MLP for final processing. The MLP consists of a fully connected layer with Exponential Linear Unit (ELU) activation, followed by a linear output layer, which directly regresses the target values, suitable for continuous time series prediction. The linear activation function in the output layer is critical for regression tasks where the goal is to predict real valued outputs.

4.3. Unique Features of the EXPERT Architecture

The proposed EXPERT model builds upon the core concepts of the Transformer architecture, but incorporates several key modifications to address the specific demands of time series forecasting, particularly in financial data. The original Transformer model, designed primarily for Natural Language Processing (NLP) tasks, includes components such as positional encodings, a decoder, and causal masking that are unnecessary for time series forecasting. Below, we outline the unique aspects of our architecture, focusing on how the EXPERT model diverges from the standard Transformer model and why these changes are necessary for accurate forecasting.

No Positional Encoding

In the original Transformer, positional encoding is applied to account for the lack of inherent order information in input sequences. However, for time series data, where sequential order is inherent, this additional encoding is redundant. Therefore, the EXPERT model omits positional encodings, relying on the inherent structure of the time series to capture temporal relationships. This simplification reduces computational complexity while preserving the time dependent characteristics of the data.

Encoder Only Architecture

While the classical Transformer consists of both an encoder and a decoder, the EXPERT model uses an encoder only structure, as the forecasting task does not require output sequences to be generated (as is the case, e.g., in machine translation). The encoder processes the historical time series data, and no decoder is necessary, as the output is a single future prediction rather than a sequence. This architectural choice focuses all learning capacity on extracting meaningful patterns from past data, which is critical for making accurate time series forecasts.

No Masking in Attention Mechanism

In NLP tasks, causal masking is applied in the decoder to ensure the model does not access future tokens when making predictions. However, since time series forecasting only involves predicting future values based on past data, the EXPERT model does not require masking. The attention mechanism is free to focus on any part of the input sequence, optimizing its ability to capture long range dependencies and interactions within the historical data.

Global Average Pooling for Temporal Feature Aggregation

Our EXPERT model applies Global Average Pooling (GAP) to aggregate the sequence of hidden states generated by the encoder. This aggregation provides a condensed representation of the entire time series, summarizing its overall trend and relevant features. GAP is well suited for time series tasks, as it reduces the sequence into a single feature vector that captures the most salient information for forecasting.

Use of Convolutional Layers in Feed Forward Networks

While the original Transformer applies fully connected layers in the feed forward networks, the EXPERT model employs Conv1D layers to capture local temporal dependencies between adjacent time steps. Convolutional layers are more effective in extracting short term patterns, which are crucial for tasks like financial forecasting where trends and relationships evolve over time. By using Conv1D, the EXPERT model is able to learn finer grained local structures in the data while still maintaining the benefits of the multi head self attention mechanism.
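To illustrate how a one-dimensional convolution mixes adjacent time steps, the sketch below applies a single filter to a univariate sequence. It is illustrative only: the kernel size, the zero "same" padding, and the function name `conv1d_same` are assumptions, as the text does not give the Conv1D configuration used in EXPERT.

```python
def conv1d_same(sequence, kernel, bias=0.0):
    """Slide `kernel` over `sequence` (cross-correlation, as in deep learning
    libraries) with zero padding so the output has the input's length."""
    k = len(kernel)
    pad_left = (k - 1) // 2
    padded = [0.0] * pad_left + list(sequence) + [0.0] * (k - 1 - pad_left)
    return [
        sum(w * padded[t + i] for i, w in enumerate(kernel)) + bias
        for t in range(len(sequence))
    ]
```

With a kernel of length 3, each output combines a time step with its two neighbours. With a kernel of length 1, the operation reduces to the position-wise transformation used in the original Transformer feed forward network.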

Customized Dropout Rates to Mitigate Overfitting

The EXPERT model incorporates custom dropout rates tailored to different layers of the architecture. Specifically, dropout is applied in both the encoder blocks and the Multilayer Perceptron (MLP) layers. This differentiation helps prevent overfitting, particularly when dealing with highly volatile financial time series data, where overfitting can lead to poor generalization performance. In contrast, the classical Transformer applies uniform dropout across layers, which may not be optimal for time series forecasting.

Data Normalization and Numerical Embedding

In contrast to the word embeddings used in NLP tasks, the EXPERT model applies MinMax scaling to normalize the numerical time series data. This normalization ensures that all input features are on the same scale, which is critical for stabilizing training and improving model performance when forecasting values that vary widely in magnitude. The use of this preprocessing step further highlights the model’s adaptation to the specific challenges posed by financial time series data.

These modifications demonstrate that the EXPERT model is uniquely optimized for time series forecasting, particularly for financial applications where patterns, trends, and long range dependencies must be carefully captured. The encoder only architecture and adjustments to the attention and feed forward layers enable the model to make accurate predictions of future exchange rates based on historical data.

4.4. The EXPERT Architecture

Initially, the EXPERT model receives input data in the form of historical currency exchange rate values. Each input sequence represents historical exchange rates for a specific currency pair over a period of time. This input data is passed through an embedding layer, converting it into numerical vectors that the model can understand. The embedded input sequences are then passed through multiple transformer encoder layers, each consisting of multi head self attention mechanisms and feed forward neural networks. After processing through these layers, the model generates output sequences.

The model is trained using historical currency financial data, adjusting its parameters to minimize the difference between its predictions and the actual data. Once the model is trained, it attempts to predict the next day’s closing price for the exchange rates mentioned in this paper.

The EXPERT architecture consists of the following components:

Figure 1.

Encoder based model architecture of EXPERT.


Embedding Layer

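The embedding layer initiates with data normalization, a technique essential for stabilizing the training process by standardizing the input data. This is achieved using Layer Normalization, which normalizes the input data as follows:

$$\mathrm{LayerNorm}(x) = \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}}$$

where:

$$\mu = \frac{1}{d}\sum_{i=1}^{d} x_i, \qquad \sigma^2 = \frac{1}{d}\sum_{i=1}^{d} (x_i - \mu)^2,$$

$\epsilon$ is a small constant for numerical stability, and $d$ is the dimension of the input features.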
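Layer normalization of a single feature vector can be sketched as follows. The sketch assumes the standard formulation (subtract the mean over the $d$ features, divide by the square root of the variance plus a small constant) without the learnable gain and bias that library implementations usually add. The value of `eps` is a placeholder, not a setting taken from the paper.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize one feature vector to zero mean and (near) unit variance."""
    d = len(x)
    mu = sum(x) / d
    var = sum((v - mu) ** 2 for v in x) / d
    return [(v - mu) / math.sqrt(var + eps) for v in x]
```

Because `eps` is added inside the square root, the output variance is slightly below one, and a constant input maps to a vector of zeros instead of causing a division by zero.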

Encoder

The core of the EXPERT model is the encoder, which takes the input time series data and transforms it into a sequence of hidden states. This is done using a stack of encoder blocks. Each encoder block consists of two sublayers:

Self attention layer: This layer allows the model to learn long range dependencies in the input data. A key part of this is the residual connection, which ensures that the input is passed forward while also allowing the model to learn relationships:

$$x' = x + \mathrm{MultiHeadAttention}(\mathrm{LayerNorm}(x))$$

Feed forward network: The feed forward network allows the model to learn non linear relationships between the input data and the output value. Mathematically, this is computed as:

$$\mathrm{FFN}(x') = \mathrm{ReLU}(x' W_1 + b_1)\, W_2 + b_2$$

where $W_1 \in \mathbb{R}^{d \times f}$, $b_1 \in \mathbb{R}^{f}$, $W_2 \in \mathbb{R}^{f \times d}$, $b_2 \in \mathbb{R}^{d}$, and $d$ represents the dimensionality of the input and output of the FFN. It is typically the hidden size of the input sequence after processing by the encoder, and it remains consistent throughout the architecture. $f$ represents the dimensionality of the intermediate layer within the FFN. This is usually larger than $d$, providing the network with a greater capacity to model complex transformations.
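The data flow through one encoder block (self attention with a residual connection, then a feed forward network) can be sketched in plain Python. Several simplifications are assumptions made for brevity and are not taken from the paper: a single attention head with identity projections (queries, keys, and values all equal the input), no layer normalization or dropout, position-wise dense layers standing in for the Conv1D layers, and a second residual connection around the feed forward network as in the original Transformer. All function names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product attention with Q = K = V = x (single head)."""
    d = len(x[0])
    x_t = [list(col) for col in zip(*x)]          # transpose of x
    scores = matmul(x, x_t)                       # pairwise similarities
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, x)                     # weighted mix of time steps


def ffn(x, w1, b1, w2, b2):
    """Position-wise feed forward network: ReLU(x W1 + b1) W2 + b2."""
    hidden = [[max(0.0, h + b) for h, b in zip(row, b1)] for row in matmul(x, w1)]
    return [[o + b for o, b in zip(row, b2)] for row in matmul(hidden, w2)]


def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def encoder_block(x, w1, b1, w2, b2):
    x = add(x, self_attention(x))           # residual around attention
    return add(x, ffn(x, w1, b1, w2, b2))   # residual around the FFN
```

The input `x` has one row per time step and `d` columns, and the output keeps the same shape, which is what allows several blocks to be stacked.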

Global Average Pooling

The global average pooling layer takes the sequence of hidden states from the encoder and converts it into a single vector. This vector represents the overall trend of the input time series data. The operation is defined as:

$$z = \frac{1}{n}\sum_{i=1}^{n} h_i$$

where $h_i$ is the hidden state at position $i$ and $n$ is the sequence length. The pooling operation compresses the sequence, allowing the model to focus on global trends.

Multilayer Perceptron (MLP)

The MLP takes the output of the global average pooling layer as input and predicts the output value. Each layer of the MLP performs the following transformation:

$$h^{(l)} = \mathrm{ELU}\left(W^{(l)} h^{(l-1)} + b^{(l)}\right)$$

where $W^{(l)}$ and $b^{(l)}$ are the weights and biases of layer $l$, and ELU is the activation function applied at each layer.

Output Layer

The final output layer applies a linear transformation to predict the output value. This is represented by the following equation:

$$\hat{y} = W_o h + b_o$$

where $h$ is the output of the last MLP layer, and $W_o$ and $b_o$ are the weights and biases of the output layer.
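The MLP and output layer can be sketched together as one ELU layer followed by a linear layer. The weights, biases and layer sizes below are hypothetical toy values chosen only to make the arithmetic easy to follow.

```python
import math

def elu(z, alpha=1.0):
    """Exponential linear unit: identity for positive inputs, alpha * (exp(z) - 1) otherwise."""
    return z if z > 0 else alpha * (math.exp(z) - 1.0)

def dense(x, W, b, activation=None):
    """Compute activation(W x + b); W holds one row per output unit."""
    out = [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]
    return [activation(v) for v in out] if activation else out

pooled = [0.5, -1.0]                                   # stand-in for the pooled vector
hidden = dense(pooled, W=[[1.0, 0.0], [0.0, 1.0]], b=[0.0, 0.0], activation=elu)
prediction = dense(hidden, W=[[2.0, 1.0]], b=[0.1])[0]  # linear output layer
print(hidden, prediction)
```

The output layer has no activation, so the prediction is unbounded, as is appropriate for a regression target such as a closing price.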

Encoder Based Model - Hyperparameter Values

The optimization of the benchmarks in this study was focused on hyperparameter tuning to improve model performance. Key hyperparameters considered for optimization included the number of attention heads, feed forward network dimension, the number of transformer blocks, learning rate scheduling, and dropout rates. These parameters were chosen due to their significant impact on the performance of transformer based models.

The value ranges explored for each hyperparameter were as follows:

Number of attention heads: Tested values ranged from 2 to 60 heads.

Feedforward network dimension: It varied between 128 and 1024 units.

Number of transformer blocks: The number of transformer layers varied from 2 to 7 blocks.

Learning rate: A custom learning rate scheduler was implemented to progressively increase the learning rate during the initial warm up period (30 epochs), followed by gradual decay over 100 epochs. The base learning rate was set at , with a minimum learning rate of .

Dropout rates: Applied to both the transformer layers and the fully connected layers, dropout rates ranged between 0.1 and 0.48 to prevent overfitting.
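The warm up and decay schedule described in the list above can be sketched as a function of the epoch. The values of `base_lr` and `min_lr` are placeholders, and the linear shape of both phases is an assumption; the text only specifies a 30 epoch warm up followed by a gradual decay over 100 epochs.

```python
def learning_rate(epoch, base_lr=1e-3, min_lr=1e-5, warmup_epochs=30, decay_epochs=100):
    """Linear warm up to base_lr, then linear decay to min_lr.

    base_lr and min_lr are hypothetical placeholder values, and the linear
    shape of both phases is an assumption.
    """
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    progress = min(1.0, (epoch - warmup_epochs) / decay_epochs)
    return base_lr - (base_lr - min_lr) * progress

schedule = [learning_rate(e) for e in range(140)]
print(schedule[0], schedule[29], schedule[80], schedule[139])
```

A function of this form could be passed to the Keras `LearningRateScheduler` callback, which calls it once per epoch.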

The search for optimal hyperparameters was carried out using a combination of grid search and manual tuning, informed by early experimental results and prior knowledge. Grid search was employed for discrete parameters such as the number of attention heads and transformer blocks, while a more manual approach was applied for parameters like learning rate and dropout, as these often required finer control during training iterations. Additionally, we tested various training dataset ranges, from 75% to 96%, with the remaining portion of the data reserved for testing. This allowed us to assess the model’s performance across different training set sizes.
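The search procedure can be sketched as follows. The grid points are hypothetical values drawn from the ranges reported above, and the split is assumed to be chronological (the earliest observations are used for training), which is the usual choice for time series but is not stated explicitly here.

```python
from itertools import product

def chronological_split(series, train_fraction):
    """Keep the first train_fraction of the observations for training, the rest for testing."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]

# Hypothetical grid points inside the reported ranges for the discrete parameters
grid = {
    "num_heads": [2, 8, 16],
    "num_blocks": [2, 4, 7],
}
configs = [dict(zip(grid, values)) for values in product(*grid.values())]

prices = list(range(100))                      # stand-in for a daily closing-price series
train, test = chronological_split(prices, 0.75)
print(len(configs), len(train), len(test))
```

Each configuration in `configs` would be trained on `train` and scored on `test`; repeating the split with fractions up to 0.96 reproduces the range of training set sizes described above.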

The implementation used the TensorFlow and Keras libraries for deep learning, relying specifically on Keras’ Sequential API and the MultiHeadAttention and Conv1D layers to construct the EXPERT model architecture. The MinMaxScaler from scikit-learn was used for feature scaling, and early stopping with learning rate scheduling was implemented using Keras callbacks to prevent overfitting and optimize the learning process.

Table 2 presents the hyperparameter configurations used for different currency pairs in our EXPERT models. While the general structure of the model remains consistent across different setups, key parameters such as the number of attention heads, feedforward dimension, and batch size vary depending on the specific dataset. The proposed architecture consists of multiple stacked transformer blocks, each incorporating a self attention mechanism with varying head sizes and feedforward layers. The MLP layer contains 256 units for all currency pairs. To prevent overfitting, dropout is applied to both the MLP layers and the encoder blocks, with an MLP dropout rate varying per experiment, while the encoder dropout remains fixed at 0.1 for all cases. A global average pooling layer aggregates sequence level representations, which are subsequently passed through fully connected layers to generate the final forecasting output. The model is trained using adaptive optimizers, such as ADAM and ADAMW, with batch sizes optimized for each currency pair to ensure robust performance.

7. Model’s Performance

7.1. Next Day Forecasting

In this section, we assess the next day forecasting performance of the proposed EXPERT model against six alternative forecasting models, namely Linear Regression, Random Forests, SGD, XGB, Bagging Regression and LSTM, on nine exchange rates: EUR/USD, AUD/CAD, GBP/AUD, NZD/USD, USD/JPY, EUR/AUD, EUR/CAD, USD/MXN and BRL/USD.

Table 3 shows the performance of each forecasting model for every exchange rate. Bold values mark the best model, and underlined values mark the second best one.

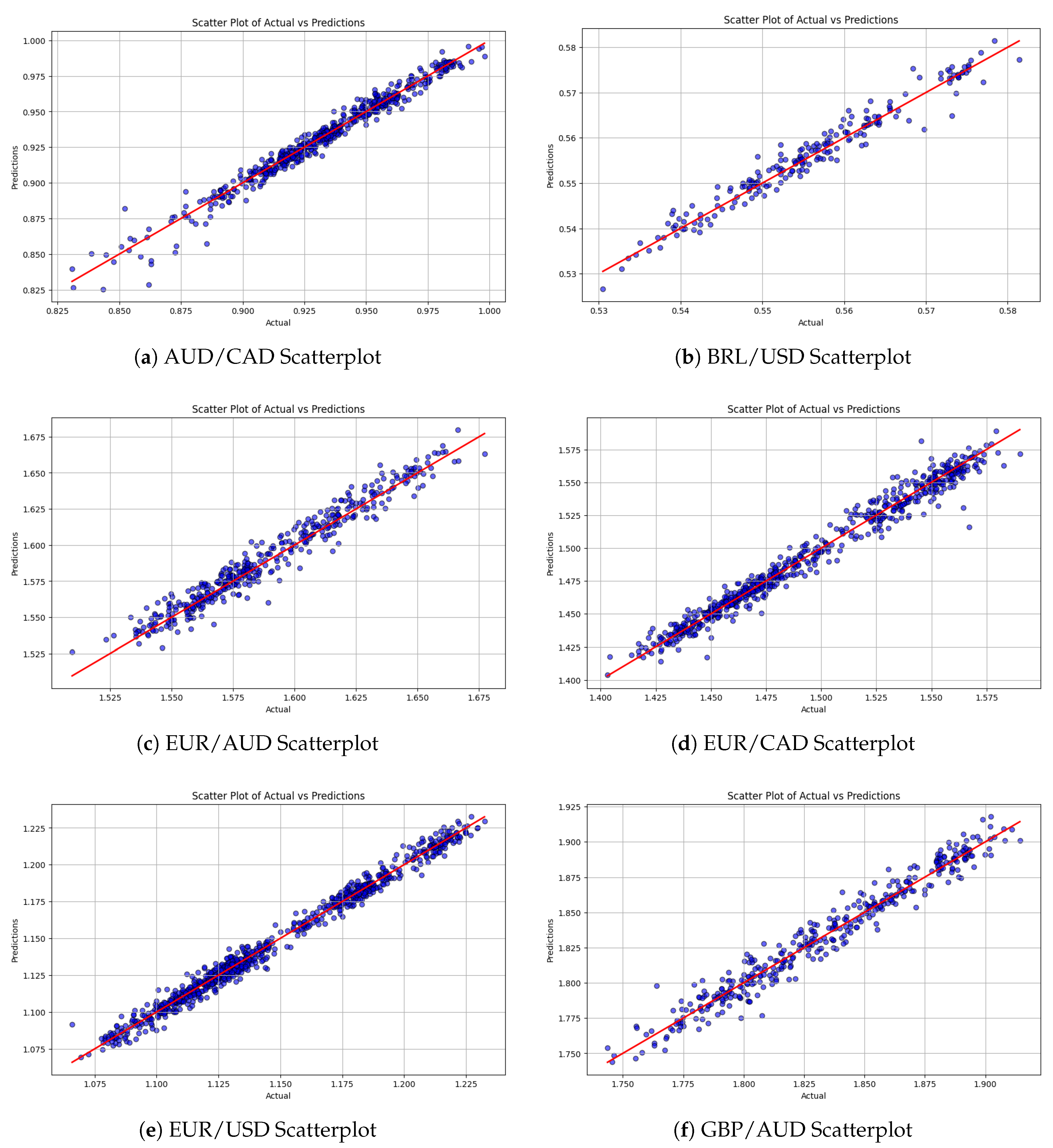

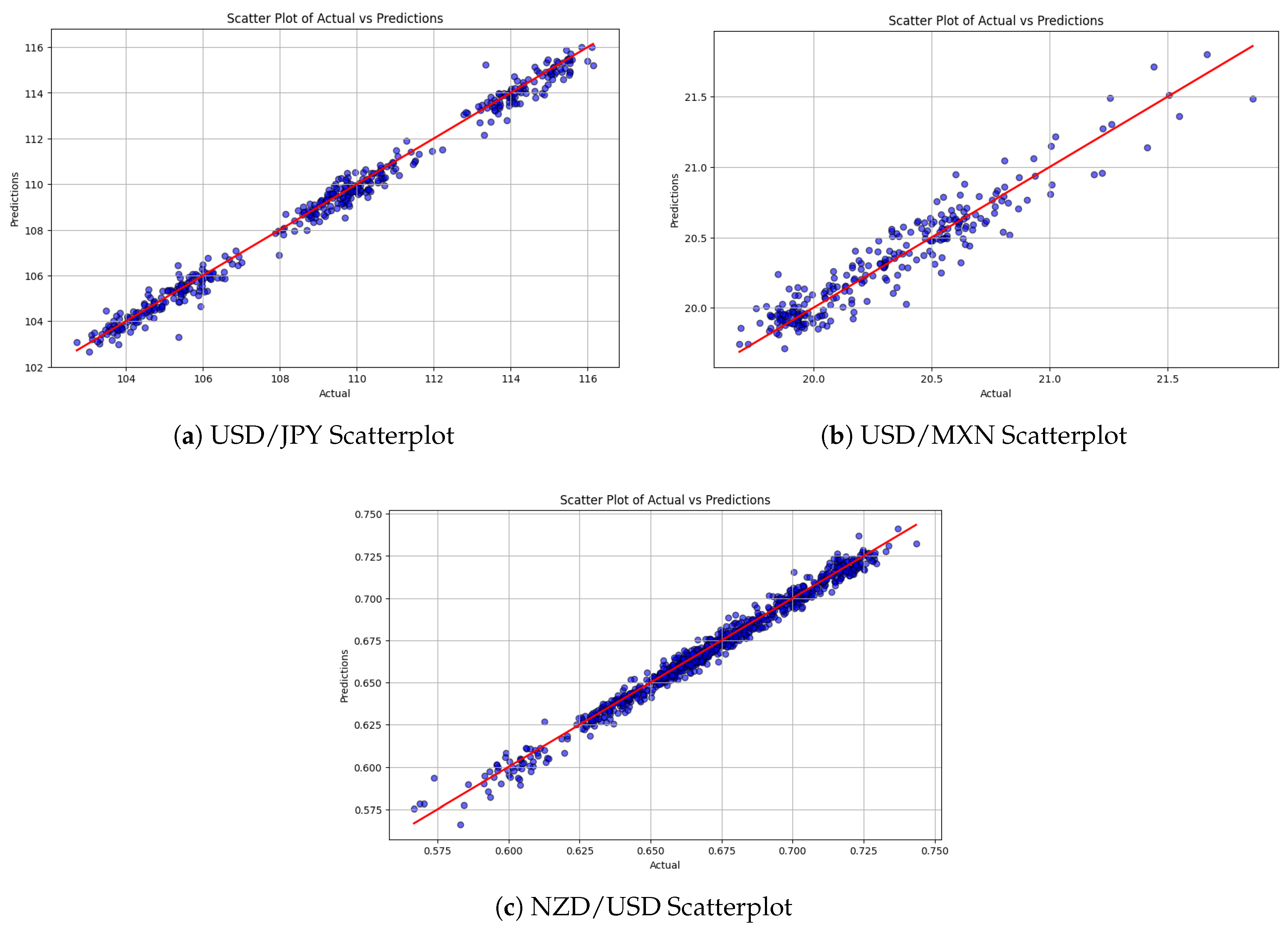

Several key conclusions can be drawn from the reported results: a) all methodologies are highly accurate, with MAPE values consistently below 1%, b) the MAE values align well with MAPE, enhancing confidence in the conclusions, and c) the proposed Transformer based EXPERT model outperformed all other methodologies in nearly every case. The only exception is the BRL/USD pair, where the LIN methodology exhibited a lower MAE than the EXPERT model, although the latter still achieved lower MAPE and MSE values. The forecasting error of EXPERT, as measured by the MAPE, ranged from 0.275% for USD/JPY to 0.449% for NZD/USD.
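For reference, the three error measures discussed above can be computed as in the following sketch, which uses the standard definitions and hypothetical actual and predicted values.

```python
def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def mse(actual, predicted):
    """Mean squared error."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be nonzero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical values, not taken from the reported results
actual = [1.00, 1.25, 2.00]
predicted = [1.01, 1.25, 1.98]
print(mae(actual, predicted), mse(actual, predicted), mape(actual, predicted))
```

MAPE is scale free, which makes it comparable across currency pairs quoted at very different levels, whereas MAE and MSE are expressed in the units of the quoted rate.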

The LSTM model achieved the second best performance in most cases, including EUR/USD, AUD/CAD, EUR/AUD, GBP/AUD and USD/JPY. Linear regression (LIN) ranked second for BRL/USD in terms of MAPE and for USD/MXN in terms of MAE and MSE. Other models, such as SGD and XGB, were occasionally competitive across the datasets.

In Table 4 we present the relative performance gain of the EXPERT model over the second best forecasting model.

The forecasting accuracy gain achieved by the EXPERT model over the second best method ranges from 3.21% over the linear regression (OLS) model for the BRL/USD exchange rate to 18.29% over the LSTM model for the NZD/USD exchange rate.
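One common way to express such a gain is the percentage reduction in error relative to the second best model. The sketch below assumes this definition, which is not stated explicitly in the text, and uses hypothetical error values.

```python
def relative_gain(second_best_error, expert_error):
    """Percentage reduction in error relative to the second best model (assumed definition)."""
    return 100.0 * (second_best_error - expert_error) / second_best_error

# Hypothetical errors: a drop from 0.5 to 0.4 is a gain of about 20%
gain = relative_gain(0.5, 0.4)
print(round(gain, 2))
```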

Figure 2 and Figure 3 present a series of scatter plots comparing actual versus forecasted prices, offering a comprehensive visual assessment of the model’s predictive performance across different scenarios. These plots illustrate the degree of correlation between observed and predicted values, highlighting trends and potential discrepancies in the forecasts.

7.2. Longer Forecasting Horizon

To further assess the proposed EXPERT methodology, we tested its predictive power at multiple forecasting horizons ranging from two days up to fourteen days ahead for the EUR/USD currency pair.

Static Forecasting

Initially, a static forecasting scenario was examined wherein the independent variables were restricted to historical values up to the time instance $t$, while the target values were those observed at $t+h$, where $h$ is the forecasting horizon. For each forecasting horizon, the EXPERT model was re-trained following the identical procedure applied for the next day forecast. For instance, when evaluating a forecasting horizon of +3, data up to time instance $t$ were utilized during model training to predict the value observed at time instance $t+3$.
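The construction of training pairs for the static scenario can be sketched as follows. The `window` parameter (the number of past values used as input) is a hypothetical name introduced for illustration, and the series is a toy example.

```python
def make_static_samples(series, window, horizon):
    """Pair each window of `window` values ending at time t with the target at t + horizon."""
    samples = []
    for t in range(window - 1, len(series) - horizon):
        features = series[t - window + 1 : t + 1]   # values up to and including t
        target = series[t + horizon]                # value observed `horizon` steps later
        samples.append((features, target))
    return samples

series = [10, 11, 12, 13, 14, 15, 16, 17]
samples = make_static_samples(series, window=3, horizon=3)
print(samples[0])  # ([10, 11, 12], 15)
```

A separate model is trained on the pairs produced for each horizon, so no predicted value is ever used as an input.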

Dynamic Forecasting

In the second scenario, we employed the next day forecasting model in a recursive manner to dynamically extend the forecasting horizon. In this approach, the independent variables were confined to historical values up to instance $t$. However, predicted values obtained for $t+1$ were utilized to make forecasts for $t+2$, and similarly, predicted values for $t+2$ were used to anticipate $t+3$, and so forth.
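The recursive procedure can be sketched as a loop that feeds each prediction back into the input window. The one-step model below is a deliberately simple placeholder standing in for the trained next day model, and `window` is a hypothetical parameter name.

```python
def recursive_forecast(history, one_step_model, window, horizon):
    """Apply a one-step-ahead model repeatedly, feeding each prediction back as input."""
    buffer = list(history)
    forecasts = []
    for _ in range(horizon):
        next_value = one_step_model(buffer[-window:])
        forecasts.append(next_value)
        buffer.append(next_value)   # the prediction for t+1 becomes an input for t+2
    return forecasts

def naive_trend_model(window_values):
    """Placeholder one-step model: extend the last observed change."""
    return window_values[-1] + (window_values[-1] - window_values[-2])

forecasts = recursive_forecast([1.0, 2.0, 3.0], naive_trend_model, window=2, horizon=3)
print(forecasts)  # [4.0, 5.0, 6.0]
```

Only one model needs to be trained in this scenario, at the cost of prediction errors accumulating as the horizon grows.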

Table 5 shows the performance of the Static and the Dynamic Forecasting scenarios for various horizons.

As expected, the accuracy decreases as the forecasting horizon increases. However, the forecasting error remains acceptable even for the fourteen days ahead forecasting model, reaching just 3.5%. The dynamic forecast is more accurate than the static forecast at every forecasting horizon, although the two become very close at the 14 day horizon.

7.3. Multiple Comparisons with the Best Evaluation

Hsu’s MCB method [28] is a multiple comparison approach to identify factor levels that are best, insignificantly different, or significantly different from the best (the best is defined as either the highest or lowest mean). When employed with a trained model, it provides precise analysis of level mean differences. It constructs a confidence interval for the difference between each level mean and the best among others.

In this section, we aim to evaluate the performance reliability of the EXPERT model across distinct parts of the EUR/USD dataset. To achieve this, five discrete samples, each comprising 20 data points, were extracted. These samples are chosen to reflect different market conditions, allowing the evaluator to determine how well the EXPERT model performs under varying market scenarios.

Sample 1: December 28, 2018, to January 24, 2019.

Sample 2: May 2, 2019, to May 29, 2019.

Sample 3: February 6, 2020, to March 4, 2020.

Sample 4: February 3, 2021, to March 2, 2021.

Sample 5: January 5, 2022, to February 1, 2022.

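The evaluation of the EXPERT model’s performance was conducted using the MAE between the predicted and the actual values:

$$\mathrm{MAE} = \frac{1}{m}\sum_{i=k}^{k+m-1} \left| y_i - \hat{y}_i \right|$$

where $y_i$ and $\hat{y}_i$ are the actual and predicted values, $m$ is the sample size (in our case $m = 20$) and $k$ is the index of the first observation in the current sample.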

To determine the statistical significance of performance differences across samples, Hsu’s Multiple Comparisons with the Best (MCB) method was applied with an alpha value of 0.05. In our context, the "best" refers to the sample with the smallest mean, against which other samples are compared.
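The first step of this comparison, computing the MAE of each sample and its distance from the best sample, can be sketched as follows. The data are synthetic, and the sketch covers only the point estimates; it does not compute the confidence intervals of Hsu’s procedure.

```python
def sample_mae(actual, predicted, k, m=20):
    """MAE over the m observations starting at index k."""
    return sum(abs(actual[i] - predicted[i]) for i in range(k, k + m)) / m

# Synthetic series with three consecutive samples of 20 points each
actual = [1.0] * 60
predicted = [1.01] * 20 + [1.02] * 20 + [0.97] * 20
starts = [0, 20, 40]

maes = [sample_mae(actual, predicted, k) for k in starts]
best = min(range(len(maes)), key=maes.__getitem__)   # sample with the smallest mean error
differences = [value - maes[best] for value in maes]
print(best, differences)
```

MCB then places a confidence interval around each of these differences to decide whether a sample is significantly worse than the best one.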

The differences between the mean of each period and the mean of the "best" period are used to calculate confidence intervals for each period. These intervals determine whether the difference between each group and the best group is statistically significant, according to the rules shown in Table 6.

In our case, the smallest mean (the best case) is identified in the second sample; the results are shown in Table 7.

Our MCB analysis shows that the performance of the proposed model in the other samples is similar to its performance in the best sample. This indicates consistency and uniformity in the results, underscoring the reliability of our findings. We can therefore suggest that the EXPERT model will yield accurate, reliable, and equivalent outcomes across diverse market scenarios.