1. Introduction

The textile industry is a sector of excellence for the European economy. It is expected to grow further, driven by the rise of fast fashion and the affordability of products, which encourage consumers to purchase and own larger quantities of clothing. However, textile production significantly impacts the environment due to overconsumption and manufacturing practices that involve chemicals for fabric production, treatment, and dyeing. In the present scenario, the industry’s future relies on devising creative ways to turn discarded textiles into inputs for new manufacturing techniques. Unfortunately, adopting green practices can be difficult, particularly for small and medium-sized enterprises (SMEs), as such practices call for complete control over a product’s lifetime, from design and manufacture to distribution and end-of-life management. Because of the high expenses involved and the need for large investments that usually only pay off in the medium to long term, these organizations frequently find it difficult to make such improvements. With innovative businesses actively addressing their impact on ecosystems and society, sustainable business models have gained traction in recent years. Adopting sustainability principles offers strategic growth potential, but it also comes with costs, investments, and significant creative initiatives that might not yield immediate financial rewards. With this strategy, businesses may launch products with a reduced environmental impact without sacrificing usability or aesthetics, and they can recover materials and energy at the end of a product’s lifecycle. The textile and clothing industry is no exception: leading companies are transitioning from traditional production methods to a systemic approach incorporating circular economy principles. This includes efficient resource utilization, reliance on renewable energy, minimizing or eliminating waste, and effective product end-of-life management.
These initiatives are aligned with the concept of Product Lifecycle Thinking, which has recently been acknowledged as a key strategy by the European Community (specifically by the Directorate-General for the Environment, supported by the European Environment Agency). This approach is part of a broader framework for sustainable development, designed to foster an integrated environmental policy across European states. In this context, the European Union put forward the “Europe 2020 Strategy”, whose three main priorities are smart growth (investment in education, knowledge, research, and innovation), sustainable growth (greener and more resource-efficient economies), and inclusive growth (employment, social and regional cohesion, and efforts to combat poverty). Wool textile recycling represents one of the best options for waste minimization and for enhancing the circular economy in the textile industry, and it is a globally recognized example of manufacturing excellence. It provides raw materials without requiring virgin wool or garment dyeing, the latter being one of the most significant processes in terms of negative anthropogenic environmental effects. A standard procedure for businesses that recycle wool is to search their warehouse for appropriately coloured materials based on the customer’s selection. These are chosen by operators based on their colour resemblance to the desired one as well as on the operators’ understanding of the recycling procedure that follows, since a fabric’s colour may alter slightly as a result of this treatment. Therefore, the operators who classify the fabrics must understand how the various colours blend to form a new one. Because the selection process mainly depends on the operators’ judgment, it can vary greatly with the operator’s level of competence, colour perception, and fatigue.
Additionally, the productivity of this approach is low and inconsistent. Consequently, automatic or semi-automatic digital methods and tools for effectively classifying recycled textiles by colour are crucial for enhancing recycling efficiency, particularly as they facilitate the sorting of materials for reuse or repurposing.

Early works on this topic (1997-2015) primarily explored deterministic methods, mainly based on the Kubelka-Munk (K-M) theory [1,2], as well as traditional Machine Learning (ML) algorithms and Artificial Neural Networks. Given the computational and technological constraints of the time, these methods had the twofold advantage of improving on visual, human-based classification and planting the seed of current AI-based methods. Deterministic approaches were mostly used to predict the spectral reflectance of a mixture of components (colorants) described by their absorption (K) and scattering (S) coefficients. Several experiments with the tristimulus-matching algorithm based on the Stearns-Noechel model [3] (and its implementations [4–6]) made it possible to consistently predict the recipe of pre-dyed fibres matching a given colour standard, with CMC colour differences below 0.8. In [7], a colour-matching system based on Friele’s hypothesis was created. A methodology for merging and optimizing colour and texture features is offered in [8], and the adoption of methods based on imaging-based colorimetry is suggested in [9] as a partial solution to these problems. Concerning early machine-learning techniques, support vector machines (SVMs) and k-nearest neighbours (k-NN) were frequently employed [10]. The features used in these methods were manually designed and extracted from colour spaces such as RGB, HSV, and LAB; they included histograms, texture-based differences, and mean intensities. These models were less adaptive than deep learning, but they performed well on smaller datasets and were computationally inexpensive. However, the effectiveness of older approaches can be significantly affected by changes in lighting, camera quality, and background conditions, requiring extensive pre-processing to normalize the images.
Scalable or real-time deployment is hindered by these limitations [11]. Because of their versatility and hierarchical learning ability, neural networks have dominated developments in fabric classification. In fact, [12] suggests a completely automated, real-time colour classification method for recycling wool apparel. By combining a feed-forward backpropagation artificial neural network (FFBP ANN)-based approach with a statistical technique known as the matrix approach of a self-organizing feature map (SOFM), the tool correctly identifies the clothing while adhering to the selection criteria supplied by human experts. For mélange fabrics, only a few investigations are available in the literature [14]; the colours used to manufacture them can be grouped using methods that are either directly applicable or can be inferred from related studies [13]. A method for real-time classification of both mélange and solid colour woollen fabrics is proposed in [15] to minimize the processing time and the subjectivity of the classification.

Although these methods are computationally efficient for small datasets (a good approach for SMEs operating in several Textile Districts), they perform poorly when applied to large datasets or multi-class classification tasks. Adding new classes or expanding datasets often requires retraining or redesigning feature extraction methods. Deep learning methods are less vulnerable to variations in background, texture, and illumination because they can generalize from large and diverse datasets. Using pre-trained models allows for quick adaptation to new datasets or classes without retraining models from scratch, which can save a significant amount of time and resources [16]. As a result, AI systems perform more accurately and reliably than traditional techniques, especially under difficult conditions. Their real-time colour processing and classification capabilities are ideal for industrial applications such as automated quality control. In other words, combining high-resolution cameras with artificial intelligence (AI) techniques like Machine Learning (ML) and Deep Learning (DL) enables the automatic and accurate analysis of fabric colours. These solutions are useful in industries where efficiency and dependability are crucial, such as textile production, quality control, and recycling.

Therefore, the primary goal of this paper is to present a thorough analysis of current AI-based techniques, which are quickly displacing both deterministic and conventional artificial neural network (ANN)-based methods. By examining recent developments, it highlights the advantages of contemporary AI techniques, such as their adaptability, scalability, and capacity to handle complex datasets. The remainder of the paper is organized as follows. In Section 2, the scientific literature is searched to determine the most promising approaches for reliable colour classification of textile fabrics to be reused in a circular process. Common issues related to Machine Vision systems used to acquire relevant data from recycled fabrics are also explored. The Section also presents an overview of AI methods, from traditional ANN-based approaches to modern deep learning-based methods. Finally, Section 3 presents the main conclusions and a discussion of possible future developments.

2. Scientific Literature Research Methodology

The SCOPUS database was queried on October 29, 2024, using the keywords “AI,” “fabrics,” “colour,” “classification,” and “recycling.” The full Boolean string adopted is as follows:

(“artificial intelligence” OR “machine learning” OR “deep learning”) AND (fabric OR textile) AND (colour OR color) AND classification AND recycling

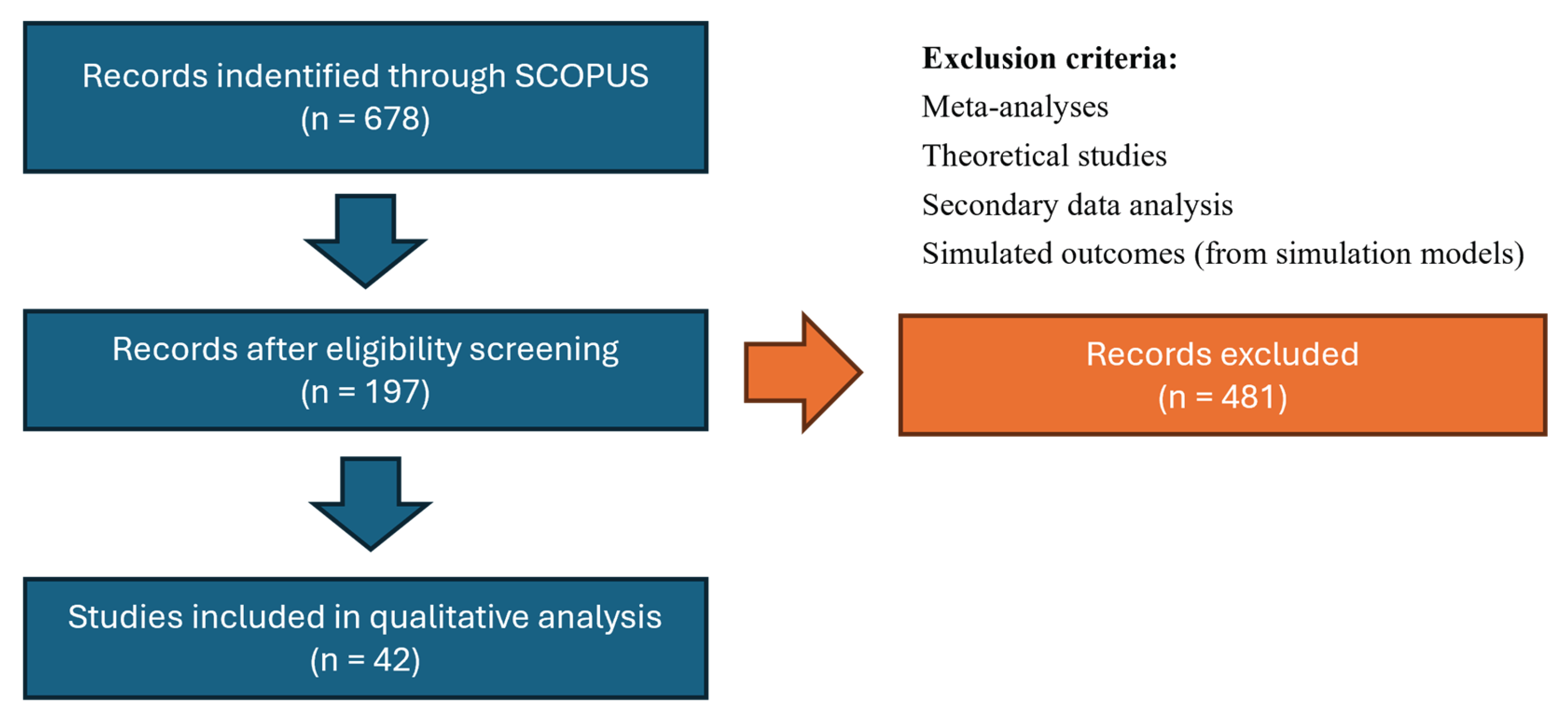

According to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA)-based analysis of Figure 1, to be included in the state-of-the-art research, studies had to be empirical, i.e., based on the collection and analysis of empirical data. Consequently, meta-analyses, theoretical studies, secondary data analysis, and simulated outcomes (from simulation models) were disregarded. Additionally, consideration was limited to publications whose full text was available.

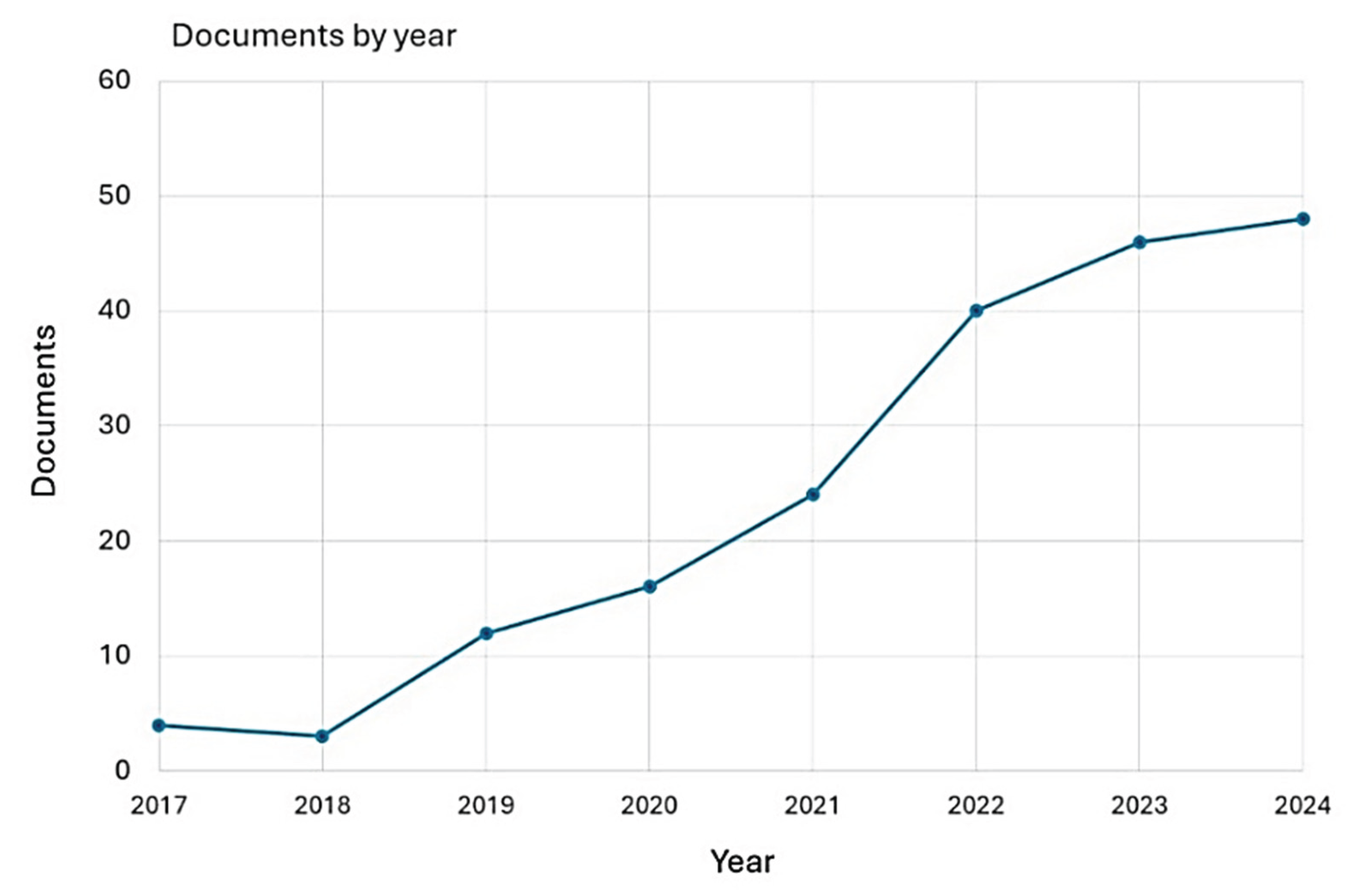

Surprisingly, despite the huge industrial interest in colour-based classification methods for recycled fabrics, the literature search returns fewer than 200 papers, and even the most recent studies rely on traditional Neural Networks. The graph in Figure 2 shows an almost linear increase starting in 2018.

A refinement is carried out by searching for the most frequently declared keywords throughout the complete collection of 678 publications using Scopus’ “refine research” feature. Since many papers share two or more keywords, reporting the percentage of papers that address a particular topic would not be meaningful. However, it is possible to extract the most common keywords in the relevant fields. As shown in Table 1, the primary keywords can be grouped into a collection of macro-areas of interest.

As already mentioned, the objective of this study is to provide an overview of current methods and pertinent research on the use of cutting-edge technologies, organized according to the macro-areas indicated in Table 1. Before exploring the most relevant methods, it should be noted that a reliable classification system relies primarily on a machine vision (MV) architecture able to capture input data, and on the availability of a dataset (or pre-processed inputs) on which it can be trained and tested. Therefore, the next sub-section provides a brief description of MV systems commonly adopted for colour classification and hints at common issues with data.

3. Machine Vision Systems

The main limitation of industrial cameras is that colour is acquired, for each pixel of the image, through only three parameters (RGB); therefore, the conversion to the CIELAB space depends on these three pixel-by-pixel values. Spectrophotometers are the best choice for capturing more consistent data, since spectral analysis allows the reflectance or transmittance of light to be acquired at specific wavelengths (typically in the range 400–700 nm, with a step of 10 nm), thus generating spectral curves. Once the illuminant is known, reflectance values can be easily converted into tristimulus values, from which the CIELAB coordinates are readily retrieved. However, the main limitation of this acquisition system is related to the small dimensions of acquired areas of the fabric, typically not exceeding a size. Hyperspectral Imaging Systems overcome this limitation by capturing images across a wide range of wavelengths, from the visible spectrum to the near infrared (NIR). Hyperspectral cameras usually provide from 0.5–2 fps (at the highest spectral resolution) up to 30 fps (usually at lower resolution), each frame consisting of a monochrome image of the scene together with the reflectance factors, commonly in the spectral range 400–1000 nm (with a minimum spectral resolution equal to 2.8 nm), for a given series of points measured in the inspected area.

By combining images with spectral data, it is possible to expand the capabilities of industrial cameras; however, this kind of MV system is quite expensive. In conclusion, depending on the device used, the MV system acquires different types of data (images, reflectance spectra, or hyperspectral images) and retrieves the coordinates in commonly adopted colour spaces (with particular regard to CIELAB). As an important remark, in most of the colour classification systems reviewed in this work, these acquired data are used as input for the sorting system, with the colour class as the target. Usually, the fabrics are initially grouped by families (e.g., red, blue, white, etc.); afterward, each fabric belonging to a family can be further classified into classes (for example, four classes for the red family, seven classes for brown, etc., see Figure 3).
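
As an illustration of how CIELAB coordinates can be retrieved from the three per-pixel RGB values, the following minimal sketch converts an 8-bit RGB triplet to L*, a*, b*. It assumes that the camera delivers sRGB-encoded values and that the reference white is D65; neither assumption comes from the reviewed works, and a calibrated industrial camera would normally need its own characterization.

```python
import math

# Assumption of this sketch: D65 reference white, 2-degree observer.
WHITE_D65 = (0.95047, 1.0, 1.08883)


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_lab(r, g, b):
    """Convert 8-bit sRGB values to CIELAB (L*, a*, b*)."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    # Linear sRGB -> CIE XYZ tristimulus values (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), WHITE_D65))
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


if __name__ == "__main__":
    print(rgb_to_lab(255, 0, 0))  # a saturated red pixel
```

With spectrophotometer data the same final step applies, but the tristimulus values would be computed from the reflectance curve and the chosen illuminant rather than from RGB.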

Therefore, for all the classification systems considered in this work, there are:

Training data - they usually consist of one or more of the following data from the fabric to be classified: 1) acquired images, 2) acquired reflectance values, 3) a combination of image and reflectance values, 4) RGB, CIELAB, or other colour space information directly retrieved using an MV system or available in public databases. The AI-based algorithms can be trained using either images or colorimetric data, i.e., colour coordinates.

Target data – consists of colour classes and, possibly, sub-classes; the classification can be based either on human assessment of the fabrics (e.g., based on the knowledge of the expert operators working in the textile company) or on the use of standards such as PANTONE® or RAL Colour Chart.

4. Overview of AI-Based Methods

As mentioned above, the main aim is to provide an overview of current strategies and methods implemented to comply with the colour-based classification of recycled fabrics for their reintroduction in a circular process. Accordingly, also due to the surprisingly limited number of papers retrieved in the bibliographic analysis, the methods are classified based on the main AI-based technique adopted, starting from traditional ANNs-based approach, exploring the most commonly used method (i.e., CNNs) and closing with the (limited) number of works using RNNs.

4.1. Traditional ANNs-Based Methods

Unexpectedly, several methods still adopt traditional Artificial Neural Network-based approaches today, especially when the main input of the sorting system consists of CIELAB data. Most recent works agree that probabilistic networks or competitive layers can be used to “guide” the classification of fabrics toward supervised training. For instance, a “simple, yet effective, machine vision-based system combined with a probabilistic neural network for carrying out reliable classification of plain, regenerated wool fabrics” is proposed in [19]. To classify the recycled wool fabrics, a set of colour classes must be defined, and an appropriately designed acquisition system must be in place. Following image acquisition, useful information is extracted using image-processing techniques. Self-organizing maps (SOMs) are also used for classification. A segmentation approach that combines effective dense subspace clustering with the self-organizing maps neural network is proposed in [20]. After pre-processing of the fabric image, the self-organizing maps algorithm is used for the primary clustering, and the effective dense subspace clustering algorithm for the secondary clustering. Such pre-processing of the image could support a subsequent classification using either deterministic approaches or CNN architectures whose input is the clustered image (this would reduce the complexity of the input data). For colour difference detection and assessment, according to [22], other intelligent techniques in use today are: “Support Vector Machine (SVM) algorithm and Least-squares SVM (LSSVM), Support Vector Regression (SVR) and Least-squares SVR (LSSVR)” [23] as well as the “Random Vector Functional-link net (RVFL), the Extreme Learning Machine (ELM) and the Kernel ELM (KELM) and the Regularization ELM (RELM) and the Online Sequential ELM (OSELM) learning algorithms” [24].

Additionally, it has been demonstrated that RVFL, ELM, and their variations offer non-iterative training protocols, speed, and the ability to provide predictive models [25]. ELM stands out in particular due to its simple architecture, quick learning speed, and small number of parameters to tune. Moving on to optimization techniques, the PCA approach is widely used in feature extraction and is renowned for its ability to separate principal components and reduce dimensions. Genetic Algorithms (GAs), in turn, are a search optimization method that mimics the natural selection process.
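
To make the idea of a probabilistic neural network operating on CIELAB data more concrete, the sketch below implements a bare-bones Parzen-window classifier, which is the principle behind such networks. It is not the system proposed in [19]: the class names, the sample coordinates, and the smoothing width `sigma` are hypothetical values chosen only for illustration.

```python
import math


def pnn_classify(sample, training, sigma=5.0):
    """Return the class whose Parzen-window density at `sample` is highest.

    training: dict mapping a class label to a list of (L*, a*, b*) tuples.
    sigma: Gaussian smoothing width in CIELAB units (hypothetical value).
    """
    scores = {}
    for label, patterns in training.items():
        total = 0.0
        for pattern in patterns:
            # Pattern layer: Gaussian kernel on the squared CIELAB distance.
            d2 = sum((s - q) ** 2 for s, q in zip(sample, pattern))
            total += math.exp(-d2 / (2.0 * sigma ** 2))
        # Summation layer: average kernel response of the class.
        scores[label] = total / len(patterns)
    # Decision layer: pick the class with the largest response.
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Hypothetical catalogue with two classes and two samples each.
    catalogue = {
        "red_1": [(45.0, 60.0, 40.0), (47.0, 58.0, 42.0)],
        "blue_1": [(35.0, 10.0, -50.0), (33.0, 12.0, -48.0)],
    }
    print(pnn_classify((46.0, 59.0, 41.0), catalogue))
```

Training amounts to storing the labelled samples, so adding a new colour class only requires adding its reference fabrics to the catalogue.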

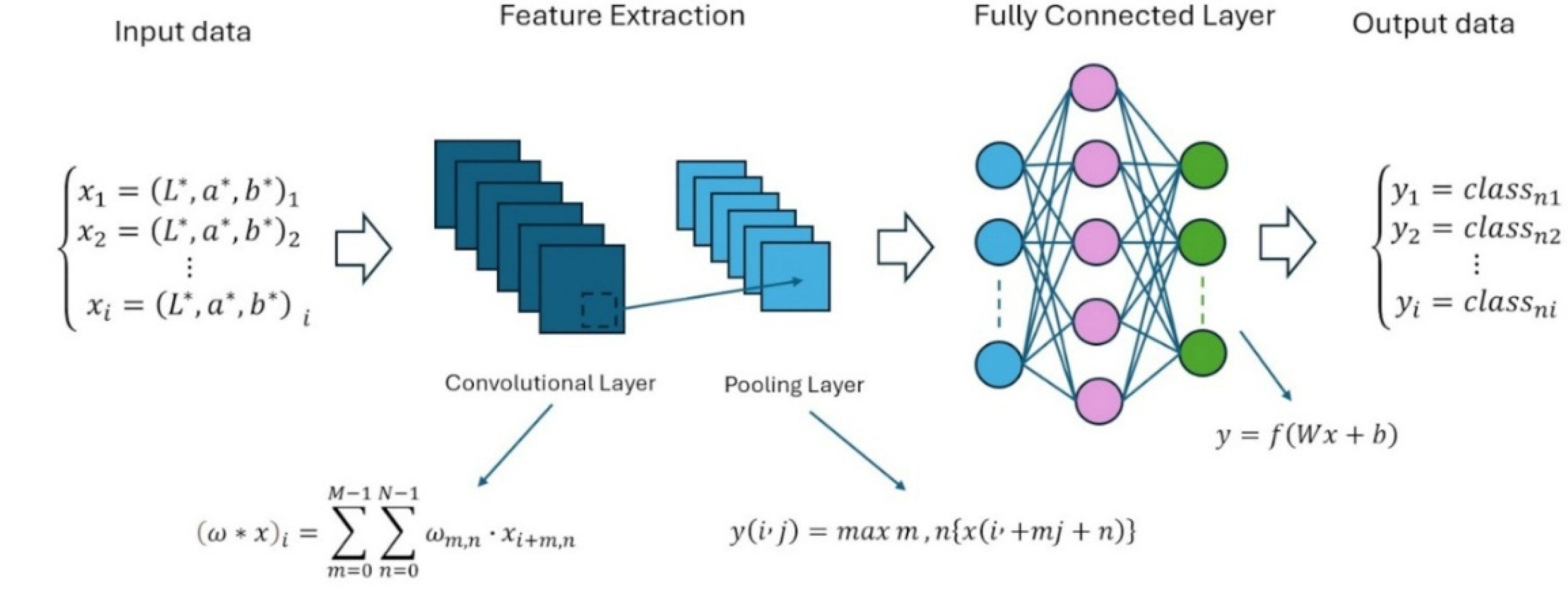

4.2. Convolutional Neural Networks

As deep Convolutional Neural Network (CNN) models like AlexNet [26], GoogleNet [27], VGGNet [28], and ResNet [29] have emerged, the use of CNNs to extract characteristics from images has progressively gained popularity. Therefore, several academics have successfully coupled deep learning with apparel classification in recent years [30]. As widely recognized, Convolutional Neural Networks (CNNs) are “feedforward neural networks that can extract features from data with convolution structures” [31]. Their architecture typically consists of three different layers: convolution, pooling, and fully connected (FC) layers (see Figure 4).

A typical CNN layer can be represented by a mapping between the input data $x$ (e.g., vectors whose coordinates are the L*, a*, and b* values of a given fabric or directly the RGB image acquired using the MV system) and a new space $y$ (which is a function of the coordinates of the weighted sum of input activations $z$) according to the following equation:

$$y = f(z) = f(W * x + b) \qquad (2)$$

In Equation (2), $W$ is a weight matrix and $b$ is a bias term. The convolution operation in Equation (2) is defined as follows:

$$(W * x)_{i,j} = \sum_{m=1}^{M} \sum_{n=1}^{N} w_{m,n}\, x_{i+m,\,j+n} \qquad (3)$$

where $w_{m,n}$ is the weight at the coordinate $(m,n)$ in the convolution filter (size $M \times N$) and $x_{i+m,\,j+n}$ is the input value in position $(i+m,\,j+n)$. The transfer function $f$ can be linear or non-linear, depending on the CNN architecture. Common activation functions include ReLU (Rectified Linear Unit):

$$f(z) = \max(0, z) \qquad (4)$$

Pooling reduces the spatial dimensions of feature maps, retaining important features while reducing computation. Max pooling, for example, takes the maximum value in each pool region:

$$y_{i,j} = \max_{p,\,q}\; x_{i+p,\,j+q} \qquad (5)$$

where $p$ and $q$ are the indices within the pooling window. Finally, fully connected layers combine features to make predictions. Each output of the CNN is computed with the same mapping as in Equation (2), giving the predicted value $\hat{y}$:

$$\hat{y} = f(W x + b) \qquad (6)$$

where $W$ is the weight matrix of the fully connected layer.
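
The three layer operations described above (convolution, ReLU activation, and max pooling) can be sketched in a few lines of plain Python. This is only a didactic example on a single-channel image stored as nested lists, with a hypothetical 2×2 filter; real CNN frameworks add multiple channels, strides, padding, and learned weights.

```python
def conv2d(x, w, b=0.0):
    """Valid 2-D convolution (cross-correlation form) of image x with filter w plus bias b."""
    m_size, n_size = len(w), len(w[0])
    rows, cols = len(x) - m_size + 1, len(x[0]) - n_size + 1
    return [
        [
            sum(w[m][n] * x[i + m][j + n] for m in range(m_size) for n in range(n_size)) + b
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def relu(z):
    """Element-wise Rectified Linear Unit."""
    return [[max(0.0, v) for v in row] for row in z]


def max_pool(z, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(z[i + p][j + q] for p in range(size) for q in range(size))
            for j in range(0, len(z[0]) - size + 1, size)
        ]
        for i in range(0, len(z) - size + 1, size)
    ]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]   # toy 3x3 single-channel image
    kernel = [[1, 0], [0, 1]]                   # hypothetical 2x2 filter
    feature_map = relu(conv2d(image, kernel, b=-10.0))
    print(feature_map, max_pool(feature_map))
```

Here the feature map shrinks from 3×3 to 2×2 after the convolution and to a single value after pooling, which is the dimensionality reduction the pooling step is meant to provide.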

Recent studies investigated the use of CNNs for fabric colour classification. A new technique in [32] converts fabric images into a “difference space,” which is the separation between the colours of the image and a predetermined reference set. This method generates colour-difference channels for input into the CNN, thus improving the accuracy of recognition. The architecture is based on convolutional and pooling layers, while dense layers with SoftMax activation are used for final classification.
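
One possible reading of the “difference space” idea is sketched below: every pixel is replaced by its colour difference from each colour of a reference set, yielding one channel per reference colour. The Euclidean CIELAB distance (CIE76) and the reference colours are assumptions of this sketch; the difference formula actually used in [32] may differ.

```python
import math


def difference_channels(lab_pixels, references):
    """Map each CIELAB pixel to its Euclidean distance from every reference colour.

    lab_pixels: list of image rows, each a list of (L*, a*, b*) tuples.
    references: list of K reference (L*, a*, b*) colours (hypothetical values).
    Returns K channels, each with the same height and width as the image.
    """
    return [
        [[math.dist(pixel, ref) for pixel in row] for row in lab_pixels]
        for ref in references
    ]


if __name__ == "__main__":
    image = [[(50.0, 0.0, 0.0), (50.0, 3.0, 4.0)]]      # toy 1x2 image
    refs = [(50.0, 0.0, 0.0), (50.0, 3.0, 4.0)]         # hypothetical reference set
    print(difference_channels(image, refs))
```

The resulting stack of K channels would then take the place of the three RGB channels as input to the convolutional layers.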

A pre-trained deep learning network is utilized in [33], where woven materials are identified according to their density and texture while inadvertently capturing data. This method demonstrates how reliable transfer learning methods are for complex textile classification. Another study [34] uses CNNs and ensemble learning to manage differences in illumination and fabric textures. A machine learning method based on the Random Forest and K-NN algorithms was used to develop and analyse an intelligent colour-based object sorting system [35], showing that the former algorithm performs better in classification. To automate fabric composition recognition in the sorting process, [36] uses the principles and methods of deep learning for the qualitative classification of waste textiles based on the analysis of near-infrared (NIR) spectroscopy.

According to the authors’ experimental results, the convolutional network classification approach using normalized and pixelated NIR can automatically classify several typical fabrics, including polyester and cotton. Again referring to [32], a novel architecture based on a group of CNNs with input in the colour difference domain is also suggested. The authors in [37] suggest a clothing classification technique based on a parallel convolutional neural network (PCNN) coupled with an optimized random vector functional link (RVFL) to increase the accuracy of clothing image recognition. The technique extracts features from photos of apparel using the PCNN model.

The issues with conventional convolutional neural networks (such as limited data and overfitting) are then addressed by the structure-intensive, dual-channel convolutional neural network, or PCNN. The transition from traditional RGB spaces to more effective “difference spaces,” which allows CNNs to discern minute differences in fabric images, is another major advance. In circumstances where textural patterns would typically make it difficult to identify colours, this method improves outcomes by computing the differences with respect to a pre-set reference and using this augmented input for classification [38].

The authors in [39] adopted a learning method based on a support vector machine to build a system that automatically matches textile fabric colours to Pantone cards. Their method works without manual matching, thereby improving work efficiency and eliminating human observer bias.

Table 2 lists some advantages offered by CNNs as a classifier for fabrics.

Summing up, even though CNNs are reliable tools, their performance is dependent on the availability of enough data, pre-processing to reduce problems with texture and lighting, and computational resources.

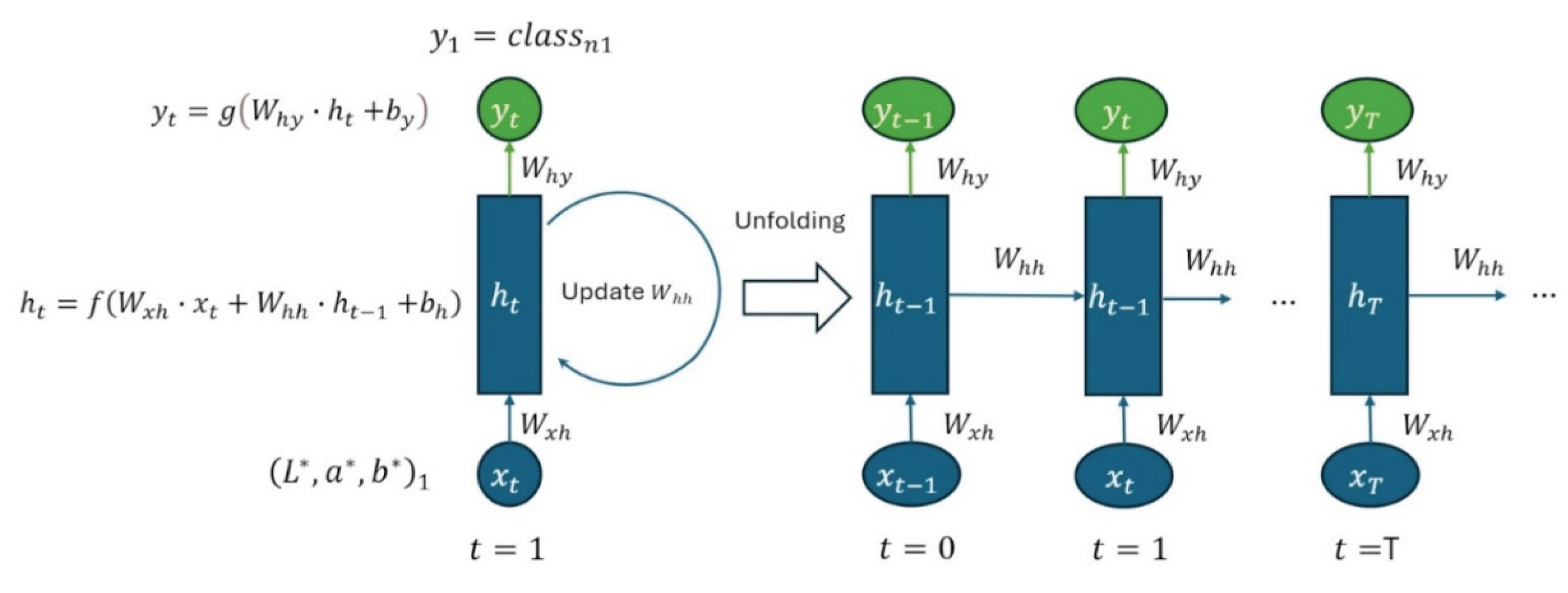

4.3. Recurrent Neural Networks

The use of Recurrent Neural Networks (RNNs) is emerging today as one of the most promising approaches, as demonstrated by the results obtained from the (limited) number of works dealing with this topic. RNNs (see Figure 5) are a type of neural network that captures temporal dependencies and is intended to handle sequential data. In RNNs, the hidden state at time $t$, i.e., $h_t$, is computed based on the current input $x_t$ (e.g., a vector containing CIELAB coordinates) and the previous hidden state $h_{t-1}$ at the time $t-1$.

The core update equation is:

$$h_t = f(W_x x_t + W_h h_{t-1} + b_h) \qquad (7)$$

where $W_x$ is a weight matrix for input $x_t$, $W_h$ is a weight matrix for the hidden state, $b_h$ is a bias vector and $f$ is the activation function, which maps the combination of the input and the previous hidden state to the new hidden state. The predicted class $\hat{y}_t$ at the time $t$ is computed as follows:

$$\hat{y}_t = g(W_y h_t + b_y) \qquad (8)$$

where $W_y$ is a weight matrix connecting the hidden state to the output, $b_y$ is the bias vector for the output layer and $g$ is the activation function for the output (e.g., SoftMax for classification). When managing a sequence of input data $\{x_1, x_2, \dots, x_T\}$, the RNN can be unfolded over time, leading to the following computations for each time step:

$$h_1 = f(W_x x_1 + W_h h_0 + b_h),\quad \hat{y}_1 = g(W_y h_1 + b_y);\quad \dots;\quad h_T = f(W_x x_T + W_h h_{T-1} + b_h),\quad \hat{y}_T = g(W_y h_T + b_y) \qquad (9)$$
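
The unfolding over time can be illustrated with a minimal forward pass of a simple (Elman-type) recurrent network. The sketch assumes a tanh hidden activation, a SoftMax output, and a zero initial hidden state; the weights in the usage example are hypothetical and untrained, so the output probabilities carry no meaning beyond showing the data flow.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def softmax(z):
    """Numerically stable SoftMax."""
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(xs, w_x, w_h, b_h, w_y, b_y):
    """Unfold a simple RNN over the sequence xs; return per-step class probabilities."""
    h = [0.0] * len(b_h)  # initial hidden state h_0 (assumed zero)
    outputs = []
    for x in xs:
        pre = [a + c + d for a, c, d in zip(matvec(w_x, x), matvec(w_h, h), b_h)]
        h = [math.tanh(p) for p in pre]  # hidden-state update
        logits = [a + c for a, c in zip(matvec(w_y, h), b_y)]
        outputs.append(softmax(logits))  # class probabilities at this step
    return outputs


if __name__ == "__main__":
    # Two time steps of scaled CIELAB-like inputs, 2 hidden units, 3 classes.
    sequence = [(0.5, 0.1, -0.2), (0.4, 0.0, -0.1)]
    w_x = [[0.2, -0.1, 0.3], [0.0, 0.4, -0.2]]
    w_h = [[0.1, 0.0], [0.0, 0.1]]
    w_y = [[0.5, -0.3], [0.1, 0.2], [-0.4, 0.6]]
    print(rnn_forward(sequence, w_x, w_h, [0.0, 0.0], w_y, [0.0, 0.0, 0.0]))
```

For classification of a whole fabric, the prediction at the last time step (or an average over steps) would typically be taken as the final class estimate.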

The most relevant work adopting the RNN architecture for classification is [39], where the authors propose “advanced neural network techniques, including convolutional layers and RNNs, to classify textures and colours of fabrics by exploring lighting and rotation effects on fabric detection”. First, fabric images are collected and pre-processed, and the dataset is enlarged using data augmentation techniques. Then, “a pre-trained model is used, where only the newly added layers are trained, and the older layers are left frozen.” Once the high-level texture information has been extracted, the results are categorized according to the type of woven fabric (plain, twill, and satin). In [41], a “novel RNN model that can effectively analyse hyperspectral pixels as sequential data and then determine information categories via network reasoning is proposed”, paving the way towards “future research, showcasing the huge potential of deep recurrent networks for hyperspectral data analysis”. Many other studies adopt RNN architectures (e.g., [42,43,44,45]), but given the aforementioned lack of studies dealing with classifiers for the textile field, there is clearly room for further research on this topic employing this kind of network.

This expectation is motivated by the several advantages of RNNs, listed in Table 3. It is important to highlight that, for this kind of architecture in the context of colour classification, pixel rows or columns from the acquired digital image are treated as sequences, where each step corresponds to a pixel or a patch of pixels. This is a mathematical expedient for applying RNNs to spatial data, even though they are mainly adopted to treat sequential data, as stated in Table 3. Alternatively, this kind of network can be used to estimate dye usage reduction and/or emission savings in circular processes. These interesting aspects, however, fall outside the scope of this work.

4.4. Quality Control Metrics

Once a fabric is provided, an ideal classification system should be able to identify the correct class. However, the fabrics supplied to a textile company, particularly recycled materials, vary a great deal. As a result, some classification error has to be accepted. Since the severity of an error grows as the assigned and the correct classes move apart, an error between two “adjacent” classes can be considered acceptable. In practice, after being assigned to a particular class, a fabric is combined with other fabrics of the same class, so the impact of a misclassification involving “similarly” coloured materials is minimal. On the other hand, assigning a fabric to a class far from the correct one could produce undesirable outcomes.

Therefore, some metrics for measuring the performance of the classification system are needed. For traditional ANNs, a convenient method, introduced in [19], is based on the definition of a “likelihood classification index”.

Another interesting index is the so-called “reliability index” $\gamma$ [19], defined as follows:

$$\gamma = \frac{N_c + N_a}{N} \qquad (10)$$

where “$N_c$ is the total number of fabrics correctly classified, $N_a$ is the number of fabrics classified in a closer class, and $N$ is the number of samples to be classified” [19]. Both metrics strongly penalize incorrect classifications while assigning a proper weight to samples that are classified in the class closest to the one chosen by manual classification, for which the risk associated with a misclassification is minimal.
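
A reliability index of this kind can be computed as sketched below, assuming that it is the share of samples assigned either to the correct class or to an adjacent one, and that classes are encoded as integers ordered so that neighbouring indices correspond to neighbouring colours. Both points are assumptions of this sketch; the exact weighting adopted in [19] may differ.

```python
def reliability_index(predicted, target, tolerance=1):
    """Share of samples assigned to the correct class or to one within `tolerance` positions.

    Classes are assumed to be integers ordered by colour similarity
    (an assumption of this sketch, not a statement about [19]).
    """
    correct = sum(p == t for p, t in zip(predicted, target))
    close = sum(0 < abs(p - t) <= tolerance for p, t in zip(predicted, target))
    return (correct + close) / len(target)


if __name__ == "__main__":
    predicted = [0, 1, 2, 3, 5]
    target = [0, 1, 3, 3, 2]
    # Three exact matches, one adjacent-class assignment, one distant error.
    print(reliability_index(predicted, target))
```

Setting `tolerance=0` reduces the index to plain accuracy, which makes it easy to see how much of the score comes from adjacent-class assignments.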

Dealing with CNNs, the network’s performance may be evaluated using the so-called loss function. Cross-entropy loss is commonly used. It is defined as follows:

$$L = -\sum_{c=1}^{C} y_c \log(\hat{y}_c) \qquad (11)$$

where $C$ is the number of classes (e.g., families), $y_c$ is the true label (1 if the class is correct, 0 otherwise) and $\hat{y}_c$ is the predicted probability for class $c$. The main benefit of using this loss function for the classification of coloured fabrics is that the log function penalizes incorrect predictions more when the confidence is high, encouraging the CNN model to assign high probability to the correct class (or, at least, to the closest one).
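
The penalizing effect of the logarithm can be checked numerically with a small sketch. The three-class probability vectors below are hypothetical and only serve to compare a confident correct prediction with a confident wrong one.

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy between a one-hot label and predicted class probabilities."""
    # eps avoids log(0) when a predicted probability is exactly zero.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


if __name__ == "__main__":
    label = [0, 1, 0]                       # the second class is the correct one
    confident_right = [0.05, 0.90, 0.05]
    confident_wrong = [0.90, 0.05, 0.05]
    print(cross_entropy(label, confident_right))  # small loss
    print(cross_entropy(label, confident_wrong))  # much larger loss
```

The loss only depends on the probability assigned to the correct class, so lowering that probability from 0.90 to 0.05 raises the loss from about 0.1 to about 3.0.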

When an RNN is used, the loss function $L$ is computed based on the model’s output $\hat{y}_t$ and the target $y_t$:

$$L = \sum_{t=1}^{T} \ell(\hat{y}_t, y_t) \qquad (12)$$

where $\ell$ is typically a cross-entropy loss for classification tasks.

Once the fabric classification is carried out, both the likelihood classification index and the reliability index $\gamma$ can also be evaluated for CNN and RNN architectures. Accordingly, to provide a preliminary benchmark of the performance obtained using traditional ANN-based, CNN and RNN methods, they have been assessed against the set of 200 samples provided by the Italian Textile Company Manteco S.p.A., located in Prato and described in [19]; samples are classified into eight families. In detail, the dataset, which is not publicly available, consisted of a target set of 40 fabric samples made of recycled wool, representing all the targets offered to company customers. Each sample represented a particular class belonging to a family. Ten overall families made up the used catalogue, as shown in Table 4.

Table 5 shows the index values obtained with the method in [19], in [32], and in [41], using CNNs and RNNs trained using the DeepFashion2 [46] and FabricNET [47] datasets. The dataset of 200 fabric colour samples was randomly divided into 70% for training (140 samples), 15% for validation (30 samples) and 15% for testing (30 samples).
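
A random 70/15/15 partition of this kind can be reproduced with a few lines of Python. The function name and the seed are hypothetical; the sketch only shows how 200 sample indices end up in subsets of 140, 30 and 30 elements.

```python
import random


def split_indices(n, train=0.70, val=0.15, seed=0):
    """Randomly split range(n) into train, validation and test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # fixed seed for repeatability
    n_train = round(n * train)
    n_val = round(n * val)
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],  # the remainder becomes the test set
    )


if __name__ == "__main__":
    train_idx, val_idx, test_idx = split_indices(200)
    print(len(train_idx), len(val_idx), len(test_idx))
```

A purely random split does not guarantee that every colour family is represented in each subset, which matters for small datasets.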

According to the scientific literature [48], colours such as black and grey frequently lack strong, distinguishing characteristics that allow them to stand out in an image or dataset. This is primarily because of low contrast and texture (i.e., slight variations in intensity or texture when compared to other colours), partial overlap with shadows or lighting variations, and “reduced” information: black and grey colours primarily provide luminance information, which may not be sufficient for confident classification. To strengthen the comparative analysis of artificial intelligence techniques for textile fabric colour classification, a performance comparison of traditional Artificial Neural Networks (ANNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs) based on standard classification metrics is proposed here. Results are reported using stratified 5-fold cross-validation on the aforementioned benchmark dataset, consisting of 200 labelled fabric samples grouped across 8 colour families.

The following evaluation metrics were computed for each model [49]:

Accuracy (Acc): overall classification correctness.

Precision (P): correct predictions per class.

Recall (R): sensitivity or true positive rate.

F1-score (F1): harmonic mean of precision and recall.

γ-index (γ): reliability metric accounting for adjacent class misclassifications, as defined in Equation (10).
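
The first four metrics of the list can be computed from the true and predicted labels as sketched below. Per-class precision, recall and F1 are macro-averaged here (each class counts equally), which is an assumption of this sketch since the averaging scheme is not specified; the labels in the usage example are hypothetical.

```python
def classification_report(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall and F1-score."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1_scores = [], [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)  # true positives
        fp = sum(t != c and p == c for t, p in pairs)  # false positives
        fn = sum(t == c and p != c for t, p in pairs)  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    k = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1_scores) / k,
    }


if __name__ == "__main__":
    truth = ["red", "red", "blue", "blue"]
    guess = ["red", "blue", "blue", "blue"]
    print(classification_report(truth, guess))
```

Macro-averaging gives small colour families the same influence as large ones, which can be preferable when the families are unevenly populated.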

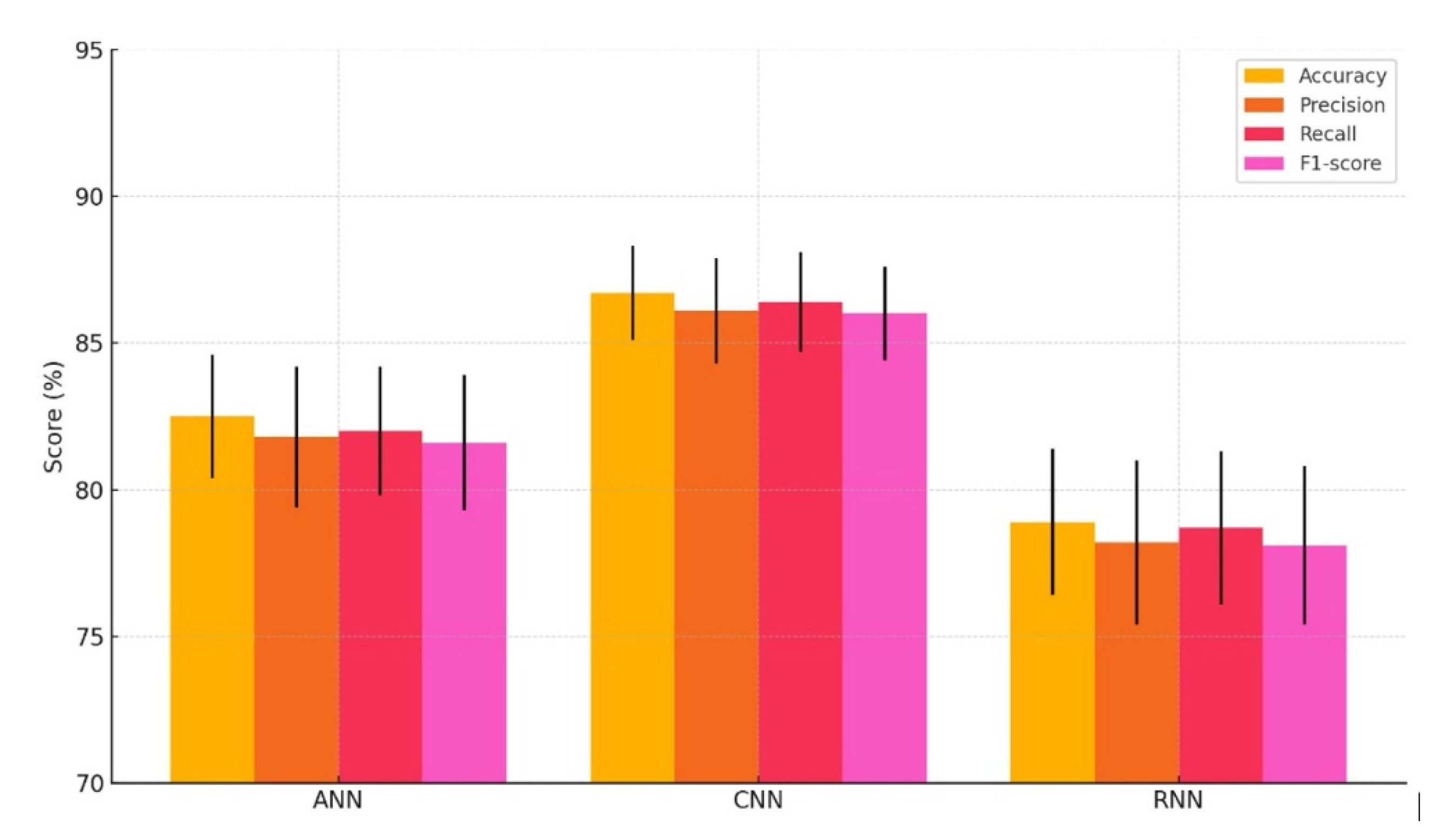

All models were evaluated using stratified 5-fold cross-validation to ensure class balance within each fold. Performance was averaged over the folds, and standard deviations were reported to assess variance (see Table 6).
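The mechanics of stratified 5-fold cross-validation with per-fold averaging can be sketched as below. This is a hedged illustration, not the evaluation code used in the study: the helper names, the seed, the assumption of 25 samples per family, and the trivial majority-class scorer (a stand-in for training and testing a real model) are all hypothetical.

```python
import random
from collections import defaultdict
from statistics import mean, stdev

def stratified_folds(labels, k=5, seed=0):
    """Assign sample indices to k folds so each class is spread evenly."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for members in by_class.values():
        rng.shuffle(members)
        for position, idx in enumerate(members):
            folds[position % k].append(idx)  # round-robin keeps classes balanced
    return folds

def majority_baseline(train_idx, test_idx, labels):
    """Toy scorer: predict the most frequent training label for every test sample."""
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)

def cross_validate(labels, score_fn, k=5, seed=0):
    """Return mean and standard deviation of score_fn over k stratified folds."""
    folds = stratified_folds(labels, k, seed)
    scores = []
    for held_out in range(k):
        test_idx = folds[held_out]
        train_idx = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
        scores.append(score_fn(train_idx, test_idx, labels))
    return mean(scores), stdev(scores)

# Assumed layout: 200 samples, 8 families, 25 samples per family.
labels = [family for family in range(8) for _ in range(25)]
folds = stratified_folds(labels)
mean_acc, std_acc = cross_validate(labels, majority_baseline)
print(mean_acc, std_acc)
```

In practice `majority_baseline` would be replaced by a function that trains the ANN, CNN or RNN on the training indices and returns the chosen metric on the held-out fold.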

Figure 6 presents a bar chart comparison of average performance across the three model types. CNN-based methods consistently outperform both traditional ANNs and RNNs across all metrics, with relatively low variance. ANN-based models offer competitive results with slightly higher variance, while RNN-based models underperform slightly, particularly in classification precision and F1-score, likely due to their sensitivity to training data sequence and noise.

The higher accuracy of CNNs is likely due to their good generalization across colour families and efficient extraction of spatial data. They are especially well-suited for industrial use due to their adaptability to changes in texture, lighting, and shadows. Additionally, CNNs demonstrated higher robustness to reduced data volumes when pre-trained models were used with transfer learning, even though all approaches benefit from enriched datasets. For this specific case study, the CNN-based method outperforms the other two methods in most colour families, with an average accuracy of 86.1%, indicating its robustness and adaptability for this task. The ANN-based method follows closely with an average accuracy of 83.2%, showing reliable but slightly less effective performance than the CNN approach. The RNN-based method achieves an average accuracy of 77.9%, suggesting that its sequential processing approach may not be as well suited to the type of data available for the case study. However, all three architectures provide results that are, on average, comparable to or better than those of traditional methods based on neural networks or deterministic approaches, whose performance “varies in the range 57-80% for a dataset composed of a large number of samples and higher number of colours” [19] with respect to the dataset used in this case study. In particular, all three methods perform very well with the white and brown families, with the CNN-based method reaching the highest accuracy (91.1% for White and 95.2% for Brown), highlighting its strength in classification. With red and violet, the CNN-based and RNN-based methods achieve similar results, with minor differences, while the ANN-based method slightly underperforms.

All methods show a noticeable drop in accuracy for green samples, particularly the RNN-based method, while the lowest accuracies across all methods (69.2–71.3%) are reached for grey and black, indicating difficulty in distinguishing between subtle shades or patterns in this range. Based on the case study, the CNN-based method is the most effective approach overall, providing robust and consistent accuracy. However, further improvements, especially in distinguishing low-contrast or subtle colour families, are necessary for practical applications. The performance of the ANN-based method suggests it can still be a viable alternative in resource-constrained environments, while the RNN-based method may require adaptation or reconsideration for this type of problem. It should also be noted that the case study contains a relatively small number of classified samples; the results should therefore be interpreted only qualitatively, that is, as a first assessment of how well the previously discussed techniques perform. Further evaluation will be required to compare the three methods more consistently.

5. Roadmap for Adoption in Textile SMEs

The integration of AI-powered colour classification systems into textile recycling workflows presents significant opportunities for small and medium-sized enterprises (SMEs) aiming to enhance sustainability and operational efficiency. However, the transition from traditional, operator-dependent processes to data-driven, automated systems requires a structured and scalable implementation roadmap. This section outlines an approach tailored to the specific needs and constraints of textile SMEs.

Phase 1: Assessment and Readiness Evaluation

Before implementation, SMEs should assess their current infrastructure, workforce skills, and data availability. Key steps include Process Mapping, in which the company identifies where manual colour sorting occurs and the variability it introduces; Data Audit, in which the availability of digital images or colorimetric data from past production or recycling batches is assessed; and Technology Readiness, i.e., the assessment of existing camera systems, lighting setups, and computing resources.

Phase 2: Pilot System Deployment

A small-scale pilot allows testing of AI models in a controlled production environment without disrupting ongoing operations. On the hardware side, a basic machine vision system using industrial RGB cameras and standard illumination (e.g., D65 light sources) should be established. Using this system, a representative set of fabric samples, focusing on a few colour families, needs to be acquired. Finally, a CNN model can be trained on this limited dataset using transfer learning to minimize data and resource requirements, and the AI outputs can be compared against those of human operators in terms of accuracy, consistency, and classification speed.
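Since the networks discussed in this work take L*, a*, and b* coordinates computed from RGB images as input, a pilot acquisition chain needs an RGB-to-CIELAB conversion step. The sketch below uses the standard sRGB-to-XYZ matrix and the D65 reference white. It assumes that the camera delivers sRGB-encoded 8-bit values, which may not hold for a specific industrial camera; the function name `srgb_to_lab` is illustrative.

```python
def srgb_to_lab(r, g, b):
    """Convert 8-bit sRGB values to CIELAB (D65 reference white)."""
    def linearise(c):
        # Undo the sRGB transfer curve to obtain linear light in [0, 1].
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearise(r), linearise(g), linearise(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # CIELAB companding function with its linear segment near zero.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white
    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

# Example: mean colour of a hypothetical fabric patch.
print(srgb_to_lab(120, 60, 45))
```

Averaging the pixel values of a patch before conversion, or converting each pixel and averaging in CIELAB, is a design choice that the pilot would need to fix consistently across all samples.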

Phase 3: Integration with Production Workflow

Once validated, the system can be scaled and integrated into operational workflows. System integration encompasses embedding AI models into existing sorting stations or quality control points, with real-time prediction interfaces. Staff should be upskilled to interact with the system, interpret outputs, and manage exceptions. Furthermore, the company should continuously collect feedback from operators and improve the model with newly classified data.

Phase 4: Expansion and Optimization

To fully benefit from the AI solution, SMEs should plan for long-term scalability and optimization by gradually incorporating more colour families, fabric types, and edge cases into the training data and by tracking key performance indicators such as dye usage reduction, throughput improvement, and misclassification rate.

Phase 5: Collaboration and Standardization

Finally, SMEs can benefit from collaboration with research institutions, industry consortia, or textile hubs, joining collaborative efforts to build open datasets for fabric classification and aligning classification criteria with industry standards (e.g., Pantone, RAL) to ensure interoperability.

It should be noted that, while AI-powered classification systems offer notable advantages in accuracy and repeatability, their deployment in real-world textile environments, particularly within SMEs, must contend with several practical trade-offs and operational challenges. The main challenges are:

Speed vs. Accuracy - AI models prove to be effective in terms of classification accuracy, often exceeding 85–90%. However, the most accurate models often require more computational resources and longer inference times, which may slow down throughput in fast-paced production environments. On the other hand, lightweight or compressed models, while faster, may compromise on precision, especially in cases involving subtle colour variations or mixed textures. Accordingly, optimization techniques such as model pruning, quantization, and edge computing should be considered to balance this trade-off, enabling rapid classification without significantly sacrificing accuracy.

Cost vs. Scalability - Initial investments in machine vision systems, AI development, and dataset preparation can be significant, since high-resolution industrial cameras, hyperspectral sensors, or custom lighting setups can raise capital costs. Moreover, custom AI model development may require specialized expertise, further increasing adoption barriers for SMEs. SMEs should therefore start with cost-effective RGB-based systems and apply transfer learning using pre-trained networks.

Lighting Variations - Inconsistent or suboptimal lighting during image acquisition can lead to inaccurate colour representation and model predictions. This is particularly critical for fabrics with low colour contrast or delicate gradients. Standardizing the lighting environment using D65 or TL84 artificial illuminants, along with proper enclosure of the vision setup, could help maintain colour consistency. Incorporating colour calibration targets in each batch can further correct for minor shifts.

Fabric Texture and Surface Properties - Surface textures such as gloss, pile, weave pattern, and shadowing can interfere with colour perception in images; in fact, highly textured or patterned fabrics may introduce noise into the classification process and AI models may unintentionally learn texture patterns instead of pure colour features. Therefore, preprocessing techniques (e.g., texture suppression filters, normalization) or the use of combined image and spectral data (e.g., using hyperspectral imaging) should be considered to improve classification robustness.

Data Quality and Labelling Consistency - AI models require large, well-labelled datasets to perform reliably. However, manual labelling is often subjective and inconsistent, especially in colour-based classification. This should push SMEs to collaborate with experienced operators to define consistent labelling criteria. Semi-supervised learning and data augmentation (e.g., lighting, contrast variation) can enrich the dataset without extensive manual effort.
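The batch-wise colour calibration mentioned under Lighting Variations can be illustrated with a very simple per-channel gain correction based on a neutral reference patch. This is a deliberately minimal sketch under stated assumptions: the patch values are invented, the correction is applied directly to 8-bit RGB values rather than to linearised data, and a real system might instead fit a full colour-correction matrix to a multi-patch target.

```python
def channel_gains(measured_patch, reference_patch):
    """Per-channel gains that map the measured neutral patch onto its reference."""
    if any(m <= 0 for m in measured_patch):
        raise ValueError("measured patch channels must be positive")
    return tuple(ref / meas for ref, meas in zip(reference_patch, measured_patch))

def apply_gains(pixel, gains, max_value=255):
    """Scale one RGB pixel by the gains and clip to the valid range."""
    return tuple(min(max_value, max(0, round(c * g))) for c, g in zip(pixel, gains))

# Hypothetical grey patch: nominal (200, 200, 200), but imaged with a colour cast.
gains = channel_gains(measured_patch=(180, 200, 190), reference_patch=(200, 200, 200))
corrected = apply_gains((90, 100, 95), gains)
print(corrected)
```

Computing the gains once per batch and applying them to every image in that batch is one way to compensate for slow drifts in the illumination.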

The aforementioned roadmap was adopted by the company Manteco S.p.A. in 2025. An early colour classification experiment was carried out in the first two weeks of June 2025 by classifying 350 samples into the 8 families stated above. Samples were manually classified by a pool of three colourists in a controlled environment (neutral background, D65 illumination). Each expert evaluated the samples independently, without any communication with the other evaluators, and completed an individual evaluation form assigning a classification to each sample. Once all experts had completed their classification, the forms were collected and compared by an independent coordinator or the research team. Any disagreements were then reviewed by the entire team and resolved by consensus. Once the manual classification was completed, the γ CNN-based method was applied, achieving a classification accuracy of 97.3% with respect to the manual classification. Although these results are preliminary and class-wise accuracy has not yet been calculated, the findings demonstrate a good level of accuracy for CNN-based systems.
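The comparison protocol described above (independent expert labels, review of disagreements, then comparison with the model output) could be organised along the following lines. The labels, the helper names, and the resolution of the disputed sample are all hypothetical; the snippet only shows the bookkeeping, not the actual experiment.

```python
def compare_expert_forms(expert_labels):
    """Return unanimous labels (None where experts disagree) and indices to review."""
    labels, to_review = [], []
    for i, votes in enumerate(zip(*expert_labels)):
        if len(set(votes)) == 1:
            labels.append(votes[0])
        else:
            labels.append(None)   # left open until the team reaches consensus
            to_review.append(i)
    return labels, to_review

def agreement(reference, predicted):
    """Fraction of samples where the model matches the reference label."""
    return sum(r == p for r, p in zip(reference, predicted)) / len(reference)

# Invented example with three experts and four samples.
expert_a = ["red", "blue", "grey", "white"]
expert_b = ["red", "blue", "black", "white"]
expert_c = ["red", "blue", "grey", "white"]
consensus, to_review = compare_expert_forms([expert_a, expert_b, expert_c])

# Hypothetical outcome of the team review for the disputed sample.
consensus[2] = "grey"

model_predictions = ["red", "blue", "grey", "brown"]
score = agreement(consensus, model_predictions)
print(to_review, score)
```

Extending `agreement` to report the score per colour family would give the class-wise accuracy that the text notes is still to be calculated.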

6. Conclusions

This study addressed the critical need for effective and efficient methods to classify recycled textiles based on colour, a key step in advancing circular economy practices in the textile industry. Traditional deterministic and early machine learning methods, while pioneering, sometimes fall short in handling the classification problem. For this reason, emerging technologies, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), appear to provide better performance, provided that large datasets are available. In general, the findings highlight the advantages of CNNs for hierarchical feature extraction and RNNs for processing sequential dependencies in fabric colour gradients. These advanced methods demonstrate higher accuracy, robustness to noise, and adaptability compared to traditional techniques.

Table 7 maps the evolutionary progression of AI-based textile colour classification methods highlighting how the field has advanced in terms of methodology, data usage, and industrial application over time.

The main findings are summarized as follows:

While CNNs have been extensively explored and implemented, RNNs remain underutilized in the domain of textile classification despite their potential to enhance the understanding of sequential and contextual data, such as fabric patterns and gradients. RNNs’ ability to capture temporal dependencies enables a nuanced interpretation of colour transitions, making them uniquely suited for scenarios where changes occur across a sequence of image pixels. This capability is especially beneficial for fabrics with intricate designs or colour gradients that evolve spatially.

The “integration of advanced RNN variants, such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), mitigates some of the gradient issues commonly faced in traditional RNNs, enabling the model to learn long-term dependencies more effectively” [50].

Although accuracy and efficiency have increased with current methods, allowing the proposed methods to reach an accuracy comparable with that obtained in other fields (e.g., medical [51] or agricultural ones [52]), there are still issues, such as the computing needs of deep learning model training, the requirement for high-quality annotated datasets, and the vulnerability of AI systems to changing environmental conditions.

The complexity and “black box” nature of AI models hinder interpretability and trust in industrial applications. By designing computationally efficient models optimized for deployment on resource-constrained devices, it could be possible to ensure transparency and reliability in industrial quality assurance by gradually introducing these systems at an industrial scale.

To further advance the field, future research can focus on several areas. First, particular attention should be paid to dataset enrichment, by developing comprehensive and diverse datasets representing various fabric textures, lighting conditions, and colour variations to improve the robustness of AI models. Another important aspect is the further testing of RNN models and the exploration of hybrid models combining them with CNNs to improve performance in complex classification tasks. Incorporating transformer-based architectures could further enhance accuracy and scalability. Furthermore, future work should address the environmental impact of AI models, quantifying it and optimizing the models for minimal resource use during training and deployment, and should establish standardized metrics and evaluation protocols for classification systems to ensure consistent performance across different production environments. In conclusion, even though the state of textile classification has advanced significantly, the path to a sustainable and circular textile industry will be paved by investigating synergistic model architectures, improving interpretability, and guaranteeing ecologically conscious AI implementations.

Moreover, as AI research advances, several emerging paradigms offer promising opportunities for improving textile classification and circular economy practices. Some examples are:

- Self-Supervised Learning (SSL): SSL methods enable models to learn useful representations from unlabelled data, a critical advantage in the textile industry where large, annotated datasets are rare or expensive to obtain. SSL frameworks such as SimCLR, MoCo, and BYOL [53] use pretext tasks (e.g., predicting image augmentations, colour distortions, or patch ordering) to train encoders without labels.

- Attention Mechanisms and Transformers: these models learn long-range dependencies across an image, making them adept at identifying subtle colour gradients, fine textures, and pattern repetitions that are common in complex fabric types (e.g., mélange or jacquard). Although not yet widely applied in the textile industry, early experiments with Vision Transformers (ViTs) in defect detection and fashion recognition show substantial improvements over ResNet and VGG baselines [54].
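To make the attention mechanism mentioned above more concrete, the following plain-Python sketch implements scaled dot-product attention, the core operation of transformer models. Treating each image patch as one feature vector is an assumption made for illustration, and the tiny vectors are invented; a real ViT adds learned projections, multiple heads, and positional information.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Invented example: one query patch attending to two patches with identical keys.
out = attention(queries=[[1.0, 0.0]],
                keys=[[1.0, 0.0], [1.0, 0.0]],
                values=[[0.0, 2.0], [4.0, 6.0]])
print(out)
```

Because every patch can attend to every other patch, distant regions of a fabric image can influence each other in a single layer, which is the long-range behaviour referred to in the text.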

Finally, although the present paper is set in the context of European Global Excellence in Manufacturing, it should be noted that several projects outside Europe demonstrate successful applications of AI to circular textile practices. India is home to several large-scale textile manufacturers and recyclers (e.g., Arvind Mills) that are actively exploring AI for fabric identification, sorting, and dye optimization. Academic institutions like IIT Delhi and NIFT have published studies on automated loom control, defect detection, and texture classification using deep learning [55]. In Bangladesh, the textile sector is beginning to integrate AI for waste reduction and fabric reuse, often in partnership with European sustainability programs. China leads globally in textile production and AI research. Textile hubs in Zhejiang and Jiangsu provinces use machine vision systems enhanced by CNNs for real-time defect classification and colour sorting [56]. Several factories employ smart dyeing machines optimized by AI to reduce chemical usage and water consumption. Chinese institutions also contribute to deep learning research on colour matching algorithms, spectral data classification, and real-time grading systems, with practical applications in both domestic and export-oriented factories. In the United States, universities such as MIT and UC Berkeley are also involved in developing AI-based quality control systems tailored to sustainable materials [57]. Lastly, AI-powered textile recycling is starting to appear in early-stage innovation zones thanks to pilot projects supported by Google AI for Social Good, the H&M Foundation, or UNIDO. These initiatives show the value of appropriately using cutting-edge technologies and techniques to develop sustainable innovation, increase classification precision, and promote a more inclusive, data-driven approach to textile recycling globally.

Funding

This research received no external funding.

Data Availability Statement

No open data are available for this work.

Conflicts of Interest

The author declares no conflicts of interest.

References

- R. Furferi and M. Carfagni, “Colour mixing modelling and simulation: Optimization of colour recipe for carded fibres,” Modelling and Simulation in Engineering, vol. 2010, no. 1, pp. 487678, 2010. [CrossRef]

- S. H. Amirshahi and M. T. Pailthorpe, “An algorithm for optimizing color prediction in blends,” Textile Research Journal, vol. 65, no. 11, pp. 632–637, 1995. [CrossRef]

- E. I. Stearns and F. Noechel, “Spectrophotometric prediction of color wool blends,” American Dyestuff Reporter, vol. 33, no. 9, pp. 177–180, 1944.

- L. I. Rong and G. U. Feng, “Tristimulus algorithm of colour matching for precoloured fibre blends based on the Stearns-Noechel model,” Coloration Technology, vol. 122, no. 2, pp. 74–81, 2006. [CrossRef]

- B. Thompson and M. J. Hammersley, “Prediction of the colour of scoured-wool blends,” Journal of the Textile Institute, vol. 69, no. 1, pp. 1–7, 1978. [CrossRef]

- S. Z. Kazmi, P. L. Grady, G. N. Mock, and G. L. Hodge, “On-line color monitoring in continuous textile dyeing,” ISA Transactions, vol. 35, no. 1, pp. 33–43, 1996. [CrossRef]

- B. Philips-Invernizzi, D. Dupont, and C. Cazé, “Formulation of colored fiber blends from Friele′s theoretical model,” Color Research and Application, vol. 27, no. 3, pp. 191–198, 2002. [CrossRef]

- M. A. Hunt, J. S. Goddard Jr., K. W. Hylton, T. P. Karnowski, R. K. Richards, M. L. Simpson, K. W. Tobin Jr., and D. A. Treece, “Imaging tristimulus colorimeter for the evaluation of color in printed textiles,” Proceedings of SPIE, Machine Vision Applications in Industrial Inspection VII, SPIE Publications, article no. 3652, 1999.

- T. Mäenpää, J. Viertola, and M. Pietikäinen, “Optimising colour and texture features for real-time visual inspection,” Pattern Analysis & Applications, vol. 6, pp. 169–175, 2003.

- J. Y. Tou, Y. H. Tay, and P. Y. Lau, “Recent trends in texture classification: A review,” Symposium on Progress in Information & Communication Technology, vol. 3, no. 2, pp. 56–59, 2009.

- A. Amelio, G. Bonifazi, F. Cauteruccio, E. Corradini, M. Marchetti, D. Ursino, and L. Virgili, “DLE4FC: A Deep Learning Ensemble to Identify Fabric Colors,” Proceedings of SEBD, pp. 13–21, 2023.

- R. Furferi and L. Governi, “The recycling of wool clothes: An artificial neural network colour classification tool,” International Journal of Advanced Manufacturing Technology, vol. 37, pp. 722–731, 2008.

- C. F. J. Kuo and C. Y. Kao, “Self-organizing map network for automatically recognizing color texture fabric nature,” Fibers and Polymers, vol. 8, no. 2, pp. 174–180, 2007. [CrossRef]

- R. Furferi, “Colour mixing modelling and simulation: Optimization of colour recipe for carded fibres,” Modelling and Simulation in Engineering, vol. 2011, pp. 487678, 2011.

- R. Furferi, “Colour classification method for recycled melange fabrics,” Journal of Applied Sciences, vol. 11, no. 2, pp. 236–246, 2011. [CrossRef]

- M. da Silva Barros, A. C., E. F. Ohata, S. P. P. da Silva, J. S. Almeida, and P. P. Rebouças Filho, “An innovative approach of textile fabrics identification from mobile images using computer vision based on deep transfer learning,” Proceedings of 2020 International Joint Conference on Neural Networks (IJCNN), pp. 1–8, 2020.

- R. Hirschler, D. F. Oliveira, and L. C. Lopes, “Quality of the daylight sources for industrial colour control,” Coloration Technology, vol. 127, no. 2, pp. 88–100, 2011.

- R. Furferi, L. Governi, and Y. Volpe, “Image processing-based method for glass tiles colour matching,” The Imaging Science Journal, vol. 61, no. 2, pp. 183–194, 2013. [CrossRef]

- R. Furferi and M. Servi, “A machine vision-based algorithm for color classification of recycled wool fabrics,” Applied Sciences, vol. 13, no. 4, p. 2464, 2023.

- M. Qian, Z. Wang, X. Huang, Z. Xiang, P. Wei, and X. Hu, “Color segmentation of multicolor porous printed fabrics by conjugating SOM and EDSC clustering algorithms,” Textile Research Journal, vol. 92, no. 19–20, pp. 3488–3499, 2022.

- S. Liu, Y. K. Liu, K. Y. C. Lo, and C. W. Kan, “Intelligent techniques and optimization algorithms in textile colour management: A systematic review of applications and prediction accuracy,” Fashion and Textiles, vol. 11, no. 1, p. 13, 2024. [CrossRef]

- S. Liu, Y. K. Liu, K. Y. C. Lo, and C. W. Kan, “Intelligent techniques and optimization algorithms in textile colour management: A systematic review of applications and prediction accuracy,” Fashion and Textiles, vol. 11, no. 1, p. 13, 2024. [CrossRef]

- J. Zhang, K. Zhang, J. Wu, and X. Hu, “Color segmentation and extraction of yarn-dyed fabric based on a hyperspectral imaging system,” Textile Research Journal, vol. 91, no. 7–8, pp. 729–742, 2020.

- S. Liu, C. K. Lo, and C. W. Kan, “Application of artificial intelligence techniques in textile wastewater decolorisation fields: A systematic and citation network analysis review,” Coloration Technology, vol. 138, no. 2, pp. 117–136, 2022.

- X. Liu and D. Yang, “Color constancy computation for dyed fabrics via improved marine predators algorithm optimized random vector functional-link network,” Color Research and Application, vol. 46, no. 5, pp. 1066–1078, 2021. [CrossRef]

- A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, pp. 84–90, 2017.

- C. Szegedy, V. Vanhoucke, S. Ioffe, et al., “Rethinking the inception architecture for computer vision,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE Press, pp. 2818–2826, 2016.

- K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” Proceedings of International Conference on Learning Representations (ICLR), San Diego, CA, USA, May 2015.

- K. M. He, X. Y. Zhang, S. Q. Ren, et al., “Deep residual learning for image recognition,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE Press, pp. 770–778, 2016.

- Z. Zhou, W. Deng, Y. Wang, and Z. Zhu, “Classification of clothing images based on a parallel convolutional neural network and random vector functional link optimized by the grasshopper optimization algorithm,” Textile Research Journal, vol. 92, no. 9–10, pp. 1415–1428, 2022. [CrossRef]

- Z. Li, F. Liu, W. Yang, S. Peng, and J. Zhou, “A survey of convolutional neural networks: Analysis, applications, and prospects,” IEEE Transactions on Neural Networks and Learning Systems, vol. 33, no. 12, pp. 6999–7019, 2021.

- A. Amelio, G. Bonifazi, E. Corradini, S. Di Saverio, M. Marchetti, D. Ursino, and L. Virgili, “Defining a deep neural network ensemble for identifying fabric colors,” Applied Soft Computing, vol. 130, p. 109687, 2022.

- S. Das and K. Shanmugaraja, “Application of artificial neural network in determining the fabric weave pattern,” Zastita Materijala, vol. 63, no. 3, pp. 291–299, 2022.

- D. P. Penumuru, S. Muthuswamy, and P. Karumbu, “Identification and classification of materials using machine vision and machine learning in the context of industry 4.0,” Journal of Intelligent Manufacturing, vol. 31, no. 5, pp. 1229–1241, 2020.

- R. Kiruba, V. Sneha, S. Anuragha, S. K. Vardhini, and V. Vismaya, “Object color identification and classification using CNN algorithm and machine learning technique,” Proceedings of 4th International Conference on Pervasive Computing and Social Networking (ICPCSN), pp. 18–24, 2024.

- R. Thakur, D. Panghal, P. Jana, A. Rajan, and A. Prasad, “Automated fabric inspection through convolutional neural network: An approach,” Neural Computing and Applications, vol. 35, no. 5, pp. 3805–3823, 2023. [CrossRef]

- Z. Liu, W. Li, and Z. Wei, “Qualitative classification of waste textiles based on near infrared spectroscopy and the convolutional network,” Textile Research Journal, vol. 90, no. 9–10, pp. 1057–1066, 2020.

- Z. Zhou, W. Deng, Y. Wang, and Z. Zhu, “Classification of clothing images based on a parallel convolutional neural network and random vector functional link optimized by the grasshopper optimization algorithm,” Textile Research Journal, vol. 92, no. 9–10, pp. 1415–1428, 2022. [CrossRef]

- S. Das and A. Wahi, “Digital image analysis using deep learning convolutional neural networks for color matching of knitted cotton fabric,” Journal of Natural Fibers, vol. 19, no. 17, pp. 15716–15722, 2022.

- A. Wang, W. Zhang, and X. Wei, “A review on weed detection using ground-based machine vision and image processing techniques,” Computers and Electronics in Agriculture, vol. 158, pp. 226–240, 2019.

- M. A. Iqbal Hussain, B. Khan, Z. Wang, and S. Ding, “Woven fabric pattern recognition and classification based on deep convolutional neural networks,” Electronics, vol. 9, no. 6, p. 1048, 2020.

- L. Mou, P. Ghamisi, and X. X. Zhu, “Deep recurrent neural networks for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 7, pp. 3639–3655, 2017.

- K. S. Kumar and M. R. Bai, “LSTM based texture classification and defect detection in a fabric,” Measurement: Sensors, vol. 26, p. 100603, 2023.

- T. Peng, X. Zhou, J. Liu, X. Hu, C. Chen, Z. Wu, and D. Peng, “A textile fabric classification framework through small motions in videos,” Multimedia Tools and Applications, vol. 80, pp. 7567–7580, 2021. [CrossRef]

- M. R. Islam, M. Z. H. Zamil, M. E. Rayed, M. M. Kabir, M. F. Mridha, S. Nishimura, and J. Shin, “Deep learning and computer vision techniques for enhanced quality control in manufacturing processes,” IEEE Access, vol. 12, pp. 30000–30016, 2024.

- https://github.com/switchablenorms/DeepFashion2.

- https://www.kaggle.com/datasets/acseckn/fabricnet.

- H. Zhang and Q. Zhu, “Color-based image classification and the impact of color similarity and lighting variation,” Journal of Visual Communication and Image Representation, vol. 53, pp. 100–110, 2018.

- R. Diallo, C. Edalo, and O. O. Awe, “Machine learning evaluation of imbalanced health data: A comparative analysis of balanced accuracy, MCC, and F1 score,” in Practical Statistical Learning and Data Science Methods: Case Studies from LISA 2020 Global Network, USA, Cham: Springer Nature Switzerland, pp. 283–312, 2024.

- R. Patil and V. Gudivada, “A review of current trends, techniques, and challenges in large language models (LLMs),” Applied Sciences, vol. 14, no. 5, p. 2074, 2024.

- P. Paithane, “Optimize multiscale feature hybrid-net deep learning approach used for automatic pancreas image segmentation,” Machine Vision and Applications, vol. 35, p. 135, 2024.

- P. M. Paithane, “Random forest algorithm use for crop recommendation,” ITEGAM-JETIA, vol. 9, no. 43, pp. 34–41, 2023.

- A. Khan, S. AlBarri, and M. A. Manzoor, “Contrastive self-supervised learning: A survey on different architectures,” Proceedings of the 2022 2nd International Conference on Artificial Intelligence (ICAI), IEEE, pp. 1–6, 2022.

- V. Atliha and D. Šešok, “Comparison of VGG and ResNet used as encoders for image captioning,” Proceedings of the 2020 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), IEEE, pp. 1–4, 2020.

- D. Patil and S. Asra, “Fabric defect detection systems and methods in India: A comprehensive review,” Proceedings of the 2024 International Conference on Emerging Techniques in Computational Intelligence (ICETCI), IEEE, pp. 269–272, 2024.

- C. Xu, L. Xu, K. Luo, J. Zhang, A. Sitahong, M. Yang, and C. Zhang, “The essence and applications of machine vision inspection for textile industry: A review,” The Journal of The Textile Institute, pp. 1–25, 2024.

- Y. Malhotra, MIT Computer Science & AI Lab: AI-Machine Learning-Deep Learning-NLP-RPA: Executive Guide: including Deep Learning, Natural Language Processing, Autonomous Cars, Robotic Process Automation.

Figure 1.

PRISMA chart, adapted in this work for the qualitative analysis of scientific literature dealing with AI-based colour classification of textiles.

Figure 2.

Documents dealing with the topic of AI in fabric classification based on colour.

Figure 3.

Example of classification in terms of colour performed by the Company Manteco S.p.A. located in Prato (Italy). In this example, four classes of “pink”, “red” and “blue” families are depicted.

Figure 4.

Architecture of a CNN used for colour classification. Input data are L*, a*, and b* coordinates of the acquired fabric (computed starting from RGB images or spectral data). The pertaining class of the fabric is the network output.

Figure 5.

Architecture of RNN for colour classification. Input data are L*, a*, and b* coordinates of the acquired fabric (computed starting from RGB images or spectral data). The output consists of the fabric colour class.

Figure 6.

Comparison of model performance metrics (mean across folds) for ANN, CNN, and RNN architectures applied to textile colour classification.

Table 1.

Graph representations.

| Macro-area | Number of occurrences |
| --- | --- |
| Convolutional Neural Networks (CNNs) | 170 |
| Traditional Artificial Neural Networks | 34 |
| Recurrent Neural Networks (RNNs) | 6 |

Table 2.

Main advantages and disadvantages linked to the use of CNNs for classification of fabrics based on colour.

| Advantages | Description | Disadvantages | Description |
| --- | --- | --- | --- |
| High Accuracy | CNNs’ capacity to extract hierarchical features makes them highly effective at detecting small variations in colour hues and patterns. | Data Dependence | Large, labelled datasets are necessary. Unbalanced or small datasets may cause overfitting. |
| Robustness to Noise | Advanced CNN designs use numerous feature layers and preprocessing approaches to accommodate changes in lighting, shadows, and fabric texture. | Computational Intensity | Training sophisticated CNN models is resource-intensive and requires high processing capacity, such as GPUs or TPUs. |
| Automatic Feature Extraction | CNNs simplify development and improve scalability by automatically learning the pertinent features from raw images, in contrast to traditional methods that call for laborious feature engineering. | Susceptibility to Representation Issues | If preprocessing is not used, colour fluctuations caused by varying lighting conditions or irregular image acquisition may result in misclassification. |
| Adaptability | CNNs are resource-efficient for tasks like fabric colour classification because transfer learning enables previously trained models to be applied to new datasets with sparse input. | Overfitting to Patterns | Instead of concentrating only on colour, CNNs can occasionally overfit to the texture patterns in textiles, which lowers their capacity for generalization. |
| Integration with Ensemble Methods | When CNNs and ensemble approaches are combined, generalization is enhanced and overfitting is reduced. This is especially advantageous for textiles with a variety of colours or textures. | Interpretability Challenges | The network design makes it difficult to understand the reasoning behind a specific classification decision, which can be problematic for quality assurance procedures. |

Table 3.

Main advantages and disadvantages linked to the use of RNNs for classification of fabrics based on colour.

| Advantages | Description | Disadvantages | Description |
| --- | --- | --- | --- |
| Sequential Data Processing | RNNs are well suited to tasks that involve sequential dependencies, such as examining a sequence of pixel intensities or patterns in photographs. This is useful when colour gradients or patterns extend across image pixels. | High Computational Costs | RNNs, like CNNs, are computationally intensive, especially on large datasets of high-resolution fabric images. |
| Contextual Understanding | RNNs can improve classification accuracy by utilizing contextual information. Processing data sequentially helps capture subtle texture changes and colour transitions in fabrics. | Sensitivity to Data Quality | RNNs are prone to overfitting, particularly when there is noise or imbalance in the training dataset. This is crucial in textile manufacturing since fabric samples may be acquired from different perspectives or under uneven lighting. |
| Adaptability | Variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) address the vanishing gradient issue and enable effective learning of long-term dependencies, which can be useful for identifying intricate colour patterns. | Gradient Problem | Gradient problems may cause standard RNNs to perform poorly on lengthy sequences. Although GRUs and LSTMs mitigate the issue, they make the model more complex. |
| Automation in Complex Scenarios | Compared to conventional machine learning models, RNN-based models are better at adjusting to changing fabric orientations and dynamic lighting conditions. | Training Times | RNNs frequently require more time to train than feedforward networks, which limits their implementation in industrial settings where speed is crucial. |

Table 4.

Dataset used to test the ANN-based, CNN, and RNN algorithms.

| Families | Family Number | Classes | # of Fabrics in the Target Set | # of Fabrics in the Training Set | # of Fabrics per Class (Training Set) |
| --- | --- | --- | --- | --- | --- |
| White | 1 | 1, 2, 3, 4 | 4 | 82 | 20, 18, 21, 23 |
| Beige | 2 | 5, 6, 7, 8 | 4 | 78 | 17, 22, 21, 18 |
| Brown | 3 | 9, 10, 11, 12 | 4 | 84 | 21, 19, 21, 23 |
| Orange | 4 | 13, 14, 15, 16 | 4 | 76 | 17, 19, 20, 21 |
| Pink | 5 | 17, 18, 19, 20 | 4 | 77 | 18, 18, 21, 20 |
| Red | 6 | 21, 22, 23, 24 | 4 | 85 | 23, 20, 20, 22 |
| Violet | 7 | 25, 26, 27, 28 | 4 | 81 | 19, 18, 23, 21 |
| Blue | 8 | 29, 30, 31, 32 | 4 | 90 | 23, 21, 22, 24 |
| Green | 9 | 33, 34, 35, 36 | 4 | 75 | 17, 21, 18, 18 |
| Gray/black | 10 | 37, 38, 39, 40 | 4 | 72 | 16, 15, 20, 21 |
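The class numbering in Table 4 follows a regular scheme: each of the ten families owns four consecutive class indices. A minimal sketch of this mapping is given below; the helper names `family_of` and `classes_of` are hypothetical and serve only to illustrate how a predicted class index could be traced back to its colour family.

```python
# Family names in the order listed in Table 4 (family numbers 1 to 10).
FAMILIES = ["White", "Beige", "Brown", "Orange", "Pink",
            "Red", "Violet", "Blue", "Green", "Gray/black"]
CLASSES_PER_FAMILY = 4  # each family is split into four classes


def family_of(class_id):
    """Return (family_number, family_name) for a class index in 1..40."""
    if not 1 <= class_id <= len(FAMILIES) * CLASSES_PER_FAMILY:
        raise ValueError(f"class_id out of range: {class_id}")
    family_number = (class_id - 1) // CLASSES_PER_FAMILY + 1
    return family_number, FAMILIES[family_number - 1]


def classes_of(family_number):
    """Return the four class indices that belong to a family number in 1..10."""
    if not 1 <= family_number <= len(FAMILIES):
        raise ValueError(f"family_number out of range: {family_number}")
    first = (family_number - 1) * CLASSES_PER_FAMILY + 1
    return list(range(first, first + CLASSES_PER_FAMILY))


if __name__ == "__main__":
    print(family_of(22))   # class 22 belongs to family 6
    print(classes_of(8))   # classes of the "Blue" family
```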

Table 5.

γ-index values obtained with the three different methods.

| Family | ANN-based method [19] | CNN-based method [32] | RNN-based method [40] |
| --- | --- | --- | --- |
| White | 80.4% | 91.1% | 88.3% |
| Brown | 92.5% | 95.2% | 93.8% |
| Red | 84.3% | 85.7% | 87.6% |
| Violet | 79.4% | 85.3% | 84.8% |
| Blue | 75.3% | 75.9% | 74.5% |
| Green | 73.5% | 75.6% | 71.4% |
| Gray and Black | 69.2% | 71.3% | 69.6% |
| Average Value | 83.2% | 86.1% | 77.9% |

Table 6.

Quantitative Results.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) | γ-index (%) |
| --- | --- | --- | --- | --- | --- |
| ANN | 82.5 ± 2.1 | 81.8 ± 2.4 | 82.0 ± 2.2 | 81.6 ± 2.3 | 83.2 |
| CNN | 86.7 ± 1.6 | 86.1 ± 1.8 | 86.4 ± 1.7 | 86.0 ± 1.6 | 86.1 |
| RNN | 78.9 ± 2.5 | 78.2 ± 2.8 | 78.7 ± 2.6 | 78.1 ± 2.7 | 77.9 |
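Table 6 reports accuracy, precision, recall, and F1-score as a mean and spread across folds. The sketch below illustrates one way such figures could be derived from per-fold confusion matrices. It assumes macro-averaging over classes and the sample standard deviation across folds; neither choice is confirmed by the source, and the function names and toy matrices are hypothetical.

```python
from statistics import mean, stdev


def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": mean(precisions),
        "recall": mean(recalls),
        "f1": mean(f1s),
    }


def summarize_folds(fold_confusions):
    """Return {metric: (mean, sample standard deviation)} across folds."""
    per_fold = [macro_metrics(c) for c in fold_confusions]
    summary = {}
    for name in per_fold[0]:
        values = [m[name] for m in per_fold]
        summary[name] = (mean(values), stdev(values))
    return summary


if __name__ == "__main__":
    # Toy two-class confusion matrices for two folds (invented for illustration).
    folds = [[[2, 0], [1, 1]], [[2, 0], [0, 2]]]
    for name, (avg, sd) in summarize_folds(folds).items():
        print(f"{name}: {100 * avg:.1f} ± {100 * sd:.1f}")
```

Macro-averaging weights every colour class equally, which seems a reasonable default here because the classes in the training set are of similar size.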

Table 7.

Evolutionary progression of AI-based textile colour classification methods.

| Year | Reference | Methodology | Key Contribution | Technological Milestone |
| --- | --- | --- | --- | --- |
| 1995 | Amirshahi & Pailthorpe [2] | Deterministic (Kubelka-Munk theory) | Early modelling of colour prediction in wool blends | Foundation of physics-based colour theory |
| 2008 | Furferi & Governi [12] | ANN (FFBP + SOFM) | First real-time ANN-based system for wool garment recycling | Entry of supervised learning in textile sorting |
| 2011 | Furferi [15] | Deterministic + ANN | Colour classification for mélange fabrics | Hybrid approaches for textured fabrics |
| 2016 | Simonyan & Zisserman [28] | CNN (VGGNet) | Deep learning for image-based classification gains traction | Start of deep feature extraction in textiles |
| 2020 | Liu et al. [37] | CNN + NIR Spectroscopy | Classification of waste textiles using spectral CNN input | Multimodal (image + spectral) learning |
| 2022 | Amelio et al. [32] | CNN (Ensemble) | Classification via colour-difference space with CNN ensemble | Precision colour matching with pre-processing innovations |
| 2022 | Zhou et al. [30] | Parallel CNN + RVFL | Joint classification of colour and pattern using ensemble methods | Integration of colour and texture pipelines |
| 2023 | Furferi & Servi [19] | Probabilistic ANN + MV | Lightweight ANN system for SMEs using RGB inputs | Industrial application in real sorting lines |
| 2024 | Liu et al. [21] | Systematic Review | Meta-analysis of intelligent textile colour management techniques | Recognition of AI as a standard for colour tasks |
| 2025 | This Review | CNN, RNN, SSL, FL (theoretical) | Comprehensive roadmap, new trends, and benchmarking | Strategic blueprint for future circular AI systems |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).