Submitted: 18 August 2025

Posted: 21 August 2025

Abstract

Keywords:

I. Introduction

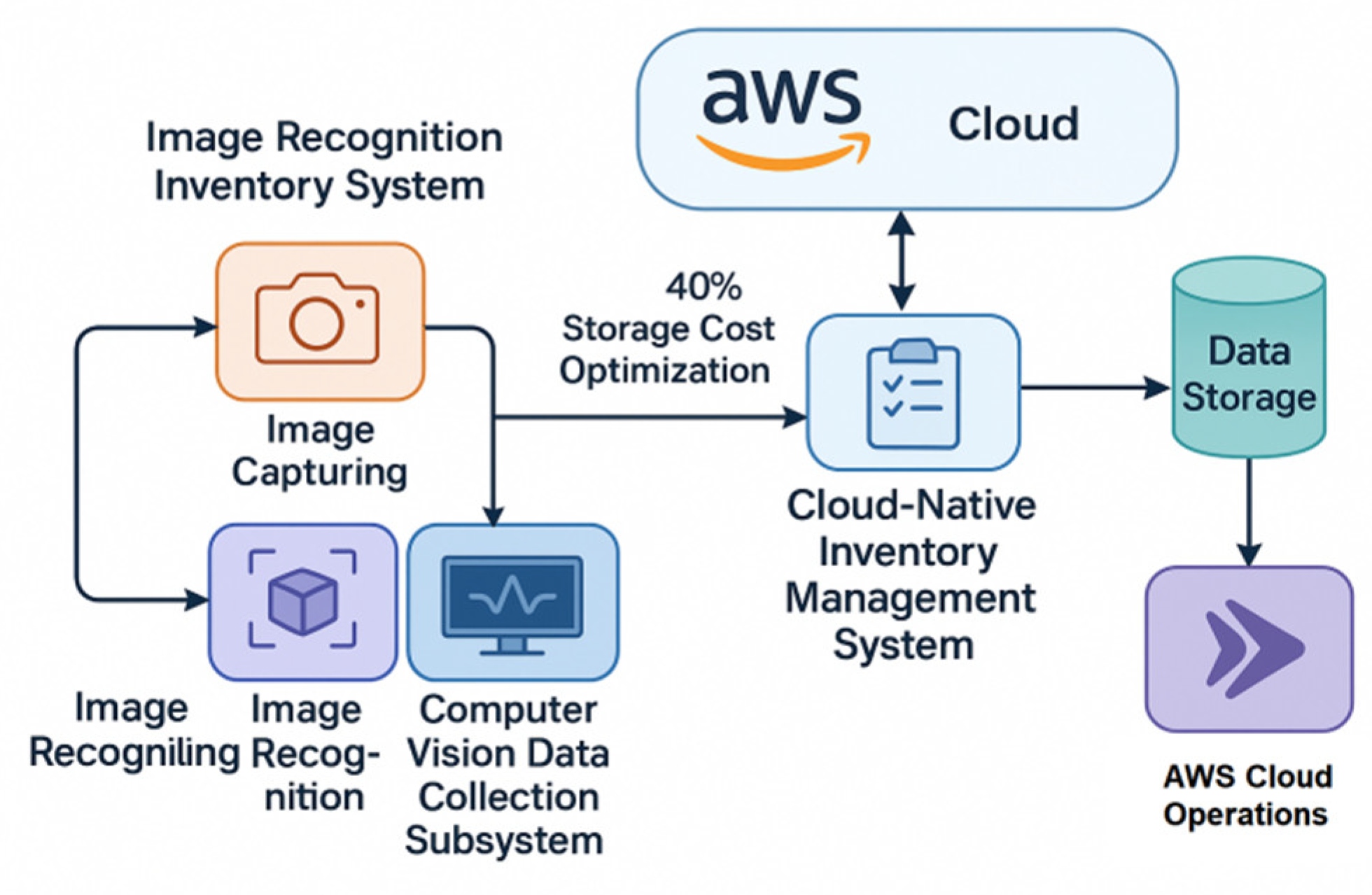

II. Overall Framework of the Computer Vision-Based Inventory Management System

III. Software Design of the Computer Vision-Based Inventory Management System

A. Computer Vision Data Acquisition Subsystem

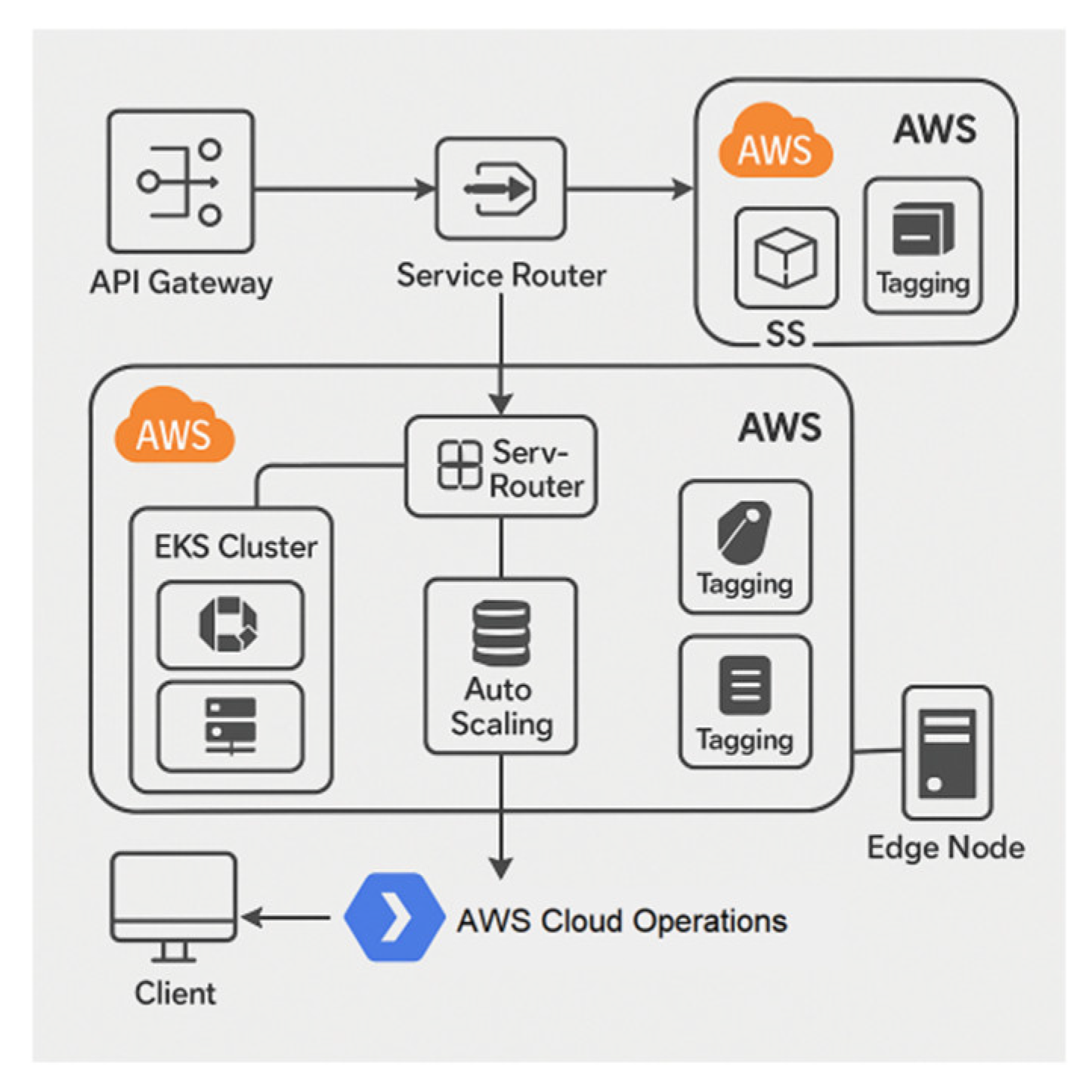

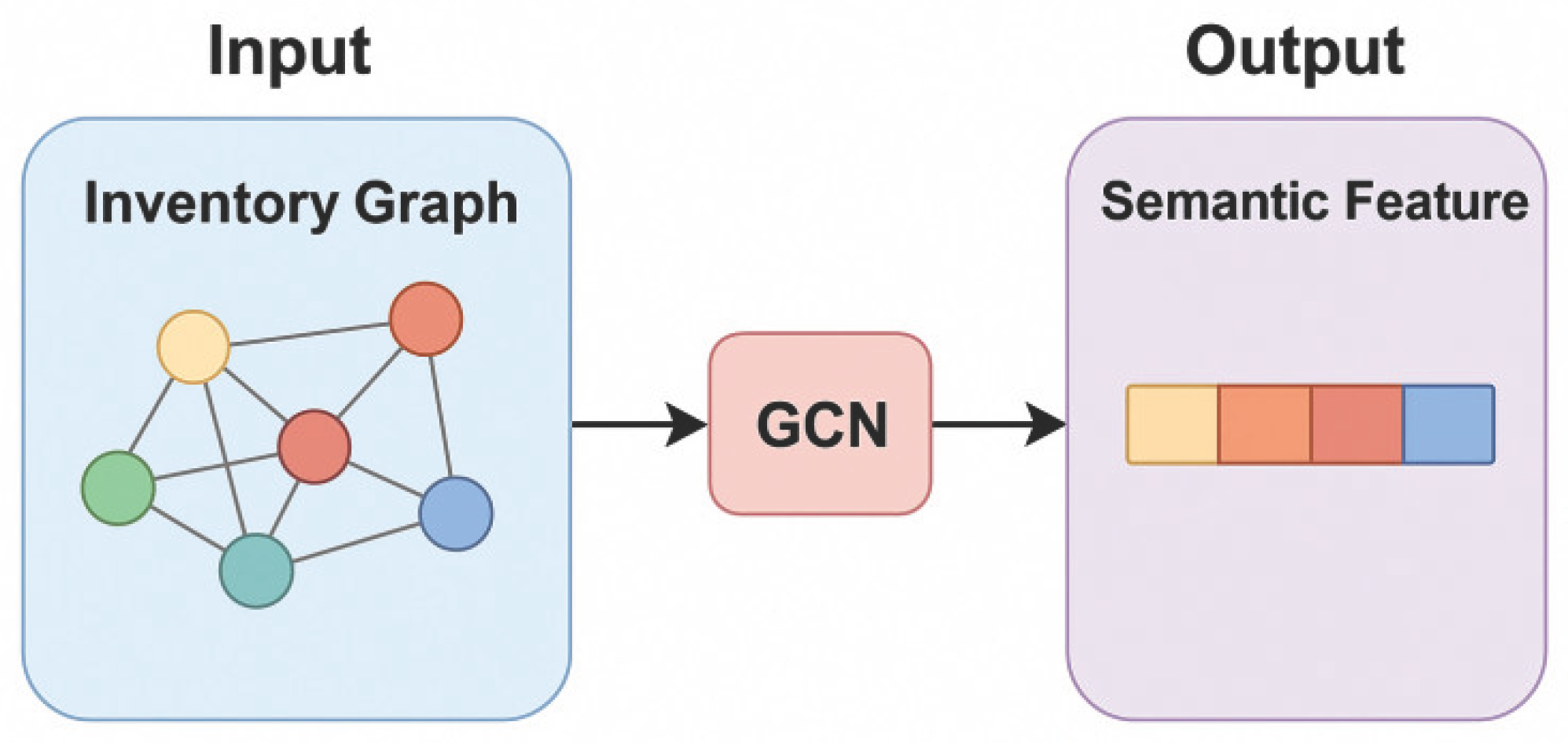

B. Cloud-Native Inventory Management System

C. Implementation of Key Technologies for Retail Cost Reduction

IV. Experimental Results and Analysis of Cost Reduction Effects

A. Experimental Environment and Test Plan

B. System Functional Verification

C. Analysis of Retail Cost Reduction Effects

V. Conclusion


| Image resolution | Original single-frame size (MB) | Average size after compression (MB) | Compression ratio | Coding format | Tiered storage |
|---|---|---|---|---|---|
| 1920×1080 | 2.8 | 1.1 | 0.392 | WebP | Yes |
| 1280×720 | 1.6 | 0.65 | 0.406 | WebP | Yes |
| 640×480 | 0.95 | 0.41 | 0.432 | JPEG2000 | No |
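
The compression ratio column is consistent with dividing the average compressed size by the original single-frame size. The sketch below recomputes it under that assumed definition, using the values from the table; the helper name `compression_ratio` is hypothetical and not taken from the system described here.

```python
# Rows copied from the compression table:
# (resolution, original frame size in MB, average compressed size in MB, reported ratio)
ROWS = [
    ("1920x1080", 2.8, 1.1, 0.392),
    ("1280x720", 1.6, 0.65, 0.406),
    ("640x480", 0.95, 0.41, 0.432),
]


def compression_ratio(original_mb: float, compressed_mb: float) -> float:
    """Return compressed size as a fraction of the original (lower is better).

    Assumed definition: ratio = compressed / original.
    """
    if original_mb <= 0:
        raise ValueError("original size must be positive")
    return compressed_mb / original_mb


for resolution, original, compressed, reported in ROWS:
    ratio = compression_ratio(original, compressed)
    print(f"{resolution}: recomputed {ratio:.4f}, reported {reported:.3f}")
```

Under this definition every recomputed ratio agrees with the reported one to within 0.001, so the table's ratios appear to be rounded or truncated to three decimals.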

| Image resolution | Original frame size (MB) | Size after WebP encoding (MB) | Size after secondary Zstd compression (MB) | Total compression ratio | Storage strategy | Lifecycle version control |
|---|---|---|---|---|---|---|
| 1920×1080 | 2.8 | 1.1 | 0.74 | 0.264 | S3 Glacier | ≤3 versions |
| 1280×720 | 1.6 | 0.62 | 0.45 | 0.281 | S3 Glacier | ≤3 versions |
| 640×480 | 0.95 | 0.41 | 0.3 | 0.316 | Local SSD cache | ≤2 versions |
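
In the two-stage scheme, the total compression ratio is consistent with the final Zstd-compressed size divided by the original frame size, which equals the product of the per-stage ratios (WebP size over original size, then Zstd size over WebP size). A minimal sketch of that arithmetic, assuming this definition and using the table's values; the names `StageRatios` and `two_stage_ratios` are hypothetical.

```python
from typing import NamedTuple


class StageRatios(NamedTuple):
    webp_stage: float  # WebP size / original size
    zstd_stage: float  # Zstd size / WebP size
    total: float       # product of both stages, i.e. Zstd size / original size


# (resolution, original MB, after WebP MB, after Zstd MB, reported total ratio)
ROWS = [
    ("1920x1080", 2.8, 1.1, 0.74, 0.264),
    ("1280x720", 1.6, 0.62, 0.45, 0.281),
    ("640x480", 0.95, 0.41, 0.30, 0.316),
]


def two_stage_ratios(original_mb: float, webp_mb: float, zstd_mb: float) -> StageRatios:
    """Split the overall compression into its WebP and Zstd stages."""
    if original_mb <= 0 or webp_mb <= 0:
        raise ValueError("sizes must be positive")
    webp_stage = webp_mb / original_mb
    zstd_stage = zstd_mb / webp_mb
    return StageRatios(webp_stage, zstd_stage, webp_stage * zstd_stage)


for resolution, original, webp, zstd, reported in ROWS:
    r = two_stage_ratios(original, webp, zstd)
    print(f"{resolution}: WebP stage {r.webp_stage:.3f}, "
          f"Zstd stage {r.zstd_stage:.3f}, total {r.total:.3f} (reported {reported})")
```

For the 1920×1080 row, for example, WebP keeps about 39% of the original size and Zstd keeps about 67% of the WebP output, giving roughly 0.264 overall.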

| Metric | Before optimization | After optimization | Cost reduction / optimization rate |
|---|---|---|---|
| Average image volume per SKU (MB) | 2.8 | 0.74 | 73.57% |
| Total daily image writes (GB) | 156.3 | 71.3 | 54.40% |
| Average daily storage cost ($) | 328 | 196.4 | 40.12% |
| Service load balancing ratio | 1.52 | 1.08 | 28.95% |
| Peak CPU utilization (%) | 94.2 | 68.5 | 27.27% |
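
The last column is consistent with a relative reduction, (before - after) / before, expressed as a percentage. The sketch below recomputes it under that assumed definition; `reduction_rate` is a hypothetical helper name.

```python
# (metric, before optimization, after optimization, reported rate in %)
ROWS = [
    ("Average image volume per SKU (MB)", 2.8, 0.74, 73.57),
    ("Total daily image writes (GB)", 156.3, 71.3, 54.40),
    ("Average daily storage cost ($)", 328.0, 196.4, 40.12),
    ("Service load balancing ratio", 1.52, 1.08, 28.95),
    ("Peak CPU utilization (%)", 94.2, 68.5, 27.27),
]


def reduction_rate(before: float, after: float) -> float:
    """Relative reduction in percent: (before - after) / before * 100."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (before - after) / before * 100.0


for metric, before, after, reported in ROWS:
    rate = reduction_rate(before, after)
    print(f"{metric}: recomputed {rate:.2f}%, reported {reported:.2f}%")
```

Recomputed this way, every row agrees with the reported rate to within 0.02 percentage points (for example, about 54.38% versus the reported 54.40% for total daily image writes).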

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).