Submitted: 07 August 2025

Posted: 08 August 2025

Abstract

Keywords:

1. Introduction

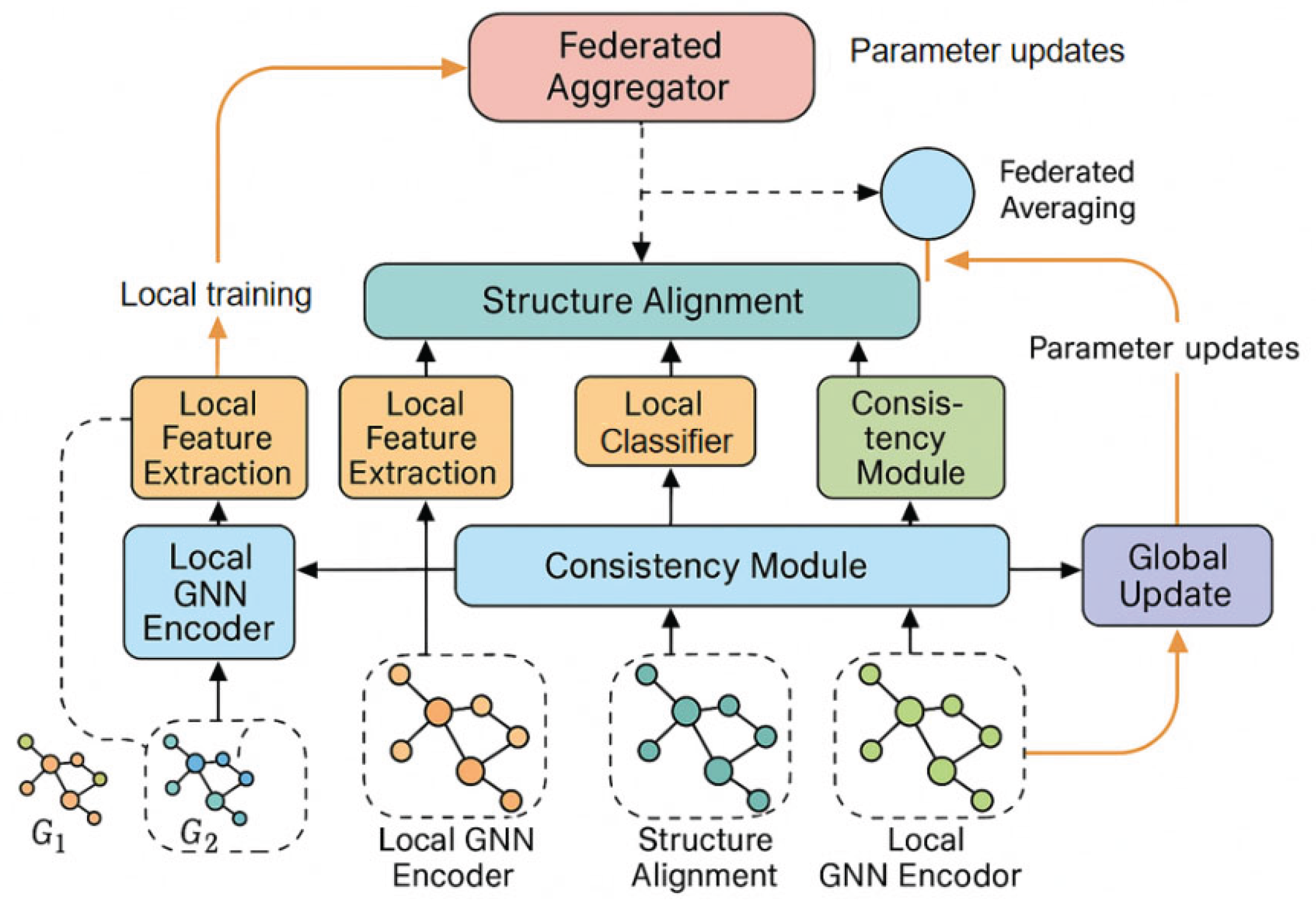

2. Method

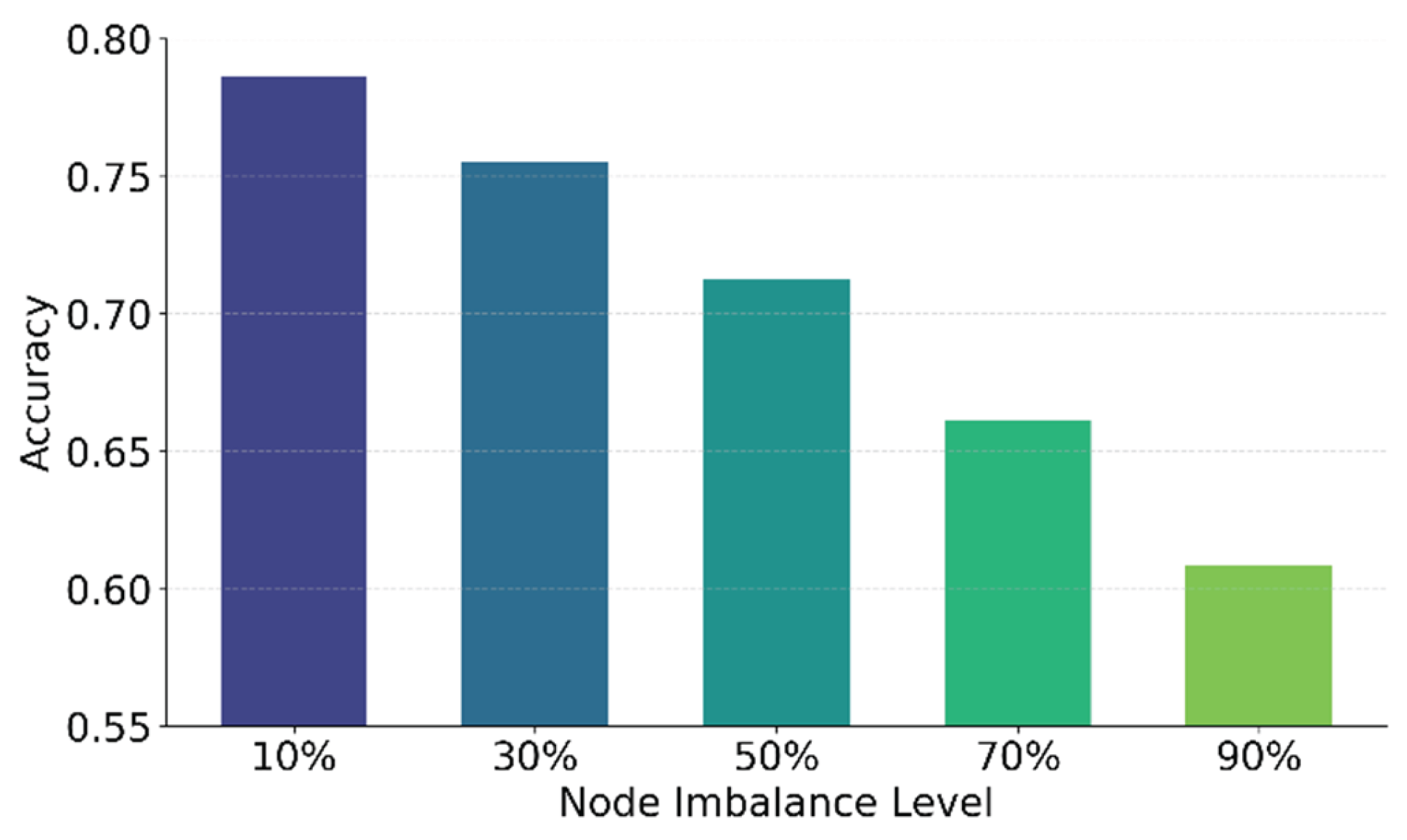

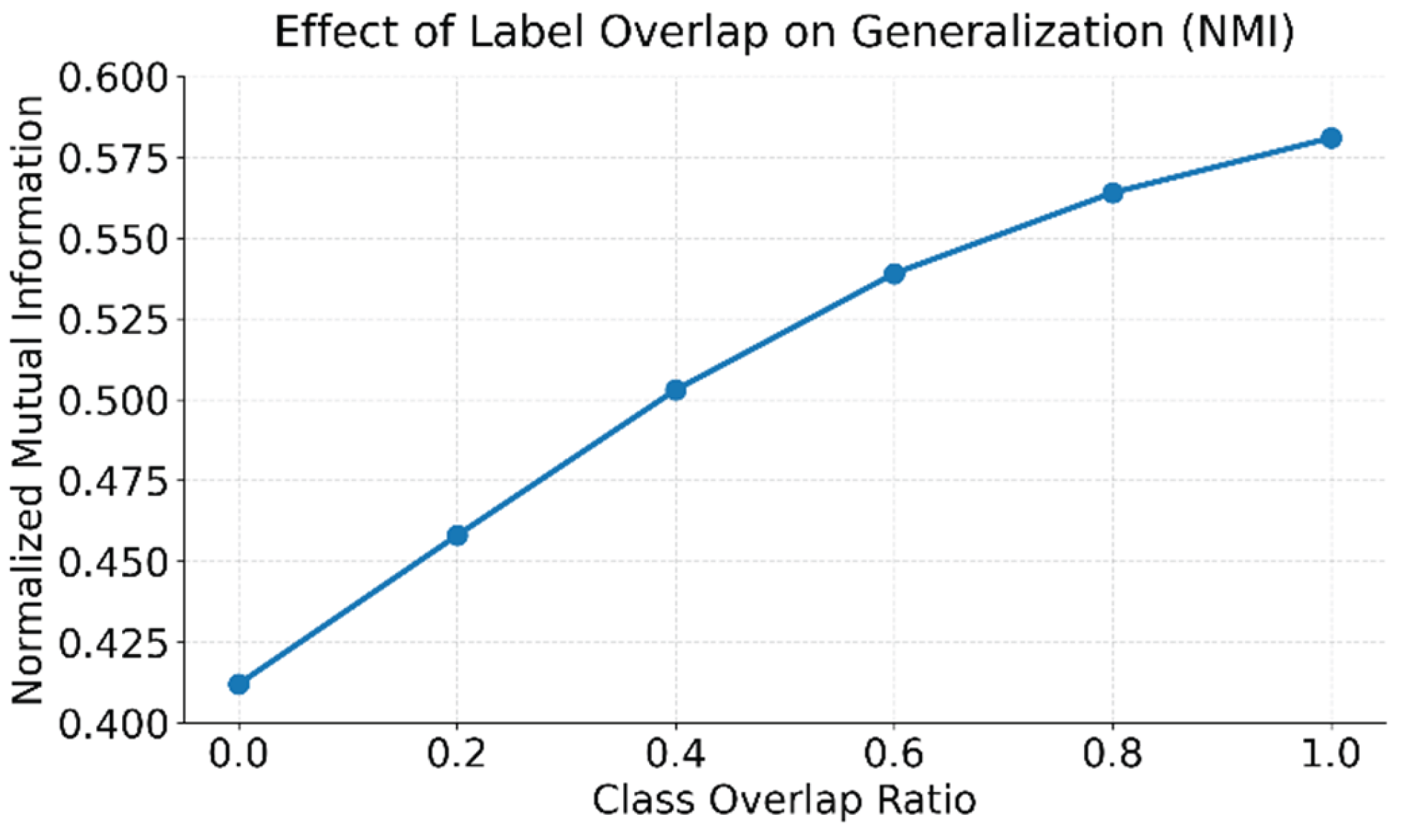

3. Performance Evaluation

3.1. Dataset

3.2. Experimental Results

4. Conclusions

5. Future Research


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).