Submitted: 26 July 2025

Posted: 29 July 2025


Abstract

Keywords:

1. Introduction

1.1. Research Question

- What mean average precision (mAP) does YOLOv8 achieve on a diverse dataset of trash items photographed in their natural environments, taking into account factors such as the type of trash, lighting conditions, backgrounds, obstructions, and object size?

- Does YOLOv8 perform better than its predecessors?
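Both research questions are framed in terms of mAP, and the results tables report mAP50 and mAP50-95. As a rough illustration of what those two numbers measure, the Python sketch below computes average precision (AP) per class from ranked detections and averages it either at a single IoU threshold of 0.50 (mAP50) or over ten thresholds from 0.50 to 0.95 in steps of 0.05 (mAP50-95). The box format, the greedy matching rule, the all-point interpolation, and the toy data are assumptions for illustration only; this is not the evaluation code used in this study, and libraries such as Ultralytics differ in details such as the interpolation scheme.

```python
"""Minimal sketch of mAP50 and mAP50-95 for axis-aligned boxes.

Assumptions (not taken from the paper): boxes are (x1, y1, x2, y2), each
detection is greedily matched to the unmatched ground-truth box with the
highest IoU, and AP uses all-point interpolation.
"""
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]
Detection = Tuple[str, float, Box]  # (image_id, confidence, box)

# mAP50 uses only IoU 0.50; mAP50-95 averages over 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Detection], gts: Dict[str, List[Box]], thr: float) -> float:
    """AP for one class at one IoU threshold."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp_flags = []
    # Process detections from most to least confident.
    for img, _, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt_box in enumerate(gts.get(img, [])):
            overlap = iou(box, gt_box)
            if not matched[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched[img][best_j] = True
            tp_flags.append(True)
        else:
            tp_flags.append(False)

    # Cumulative precision and recall along the ranked list.
    precisions, recalls, tp = [], [], 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / rank)
        recalls.append(tp / n_gt)

    # All-point interpolation: take the precision envelope, then sum the
    # precision at each recall increment (area under the PR curve).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(per_class: Dict[str, Tuple[List[Detection], Dict[str, List[Box]]]],
            thresholds: List[float]) -> float:
    """Mean of AP over all classes and all given IoU thresholds."""
    aps = [average_precision(dets, gts, t)
           for dets, gts in per_class.values() for t in thresholds]
    return sum(aps) / len(aps)


# Toy example (hypothetical data): one class, two images, one object each.
gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
dets = [("img1", 0.9, (0, 0, 10, 10)),  # IoU 1.0 with its ground truth
        ("img2", 0.8, (0, 0, 10, 8))]   # IoU 0.8 with its ground truth
data = {"plastic": (dets, gts)}
print("mAP50:", mean_ap(data, [0.5]))
print("mAP50-95:", mean_ap(data, IOU_THRESHOLDS))
```

In the toy data the second detection overlaps its ground truth with IoU 0.8, so it counts as a true positive at thresholds up to 0.80 but not at 0.85, 0.90, or 0.95. That gives mAP50 = 1.0 but mAP50-95 = 0.85, which illustrates why mAP50-95 is the stricter localization metric.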

1.2. Structure of the Report

- Section 1 - Introduction: gives a broad overview of why trash segregation matters and how computer vision might improve it.

- Section 2 - Related Work: reviews prior work related to the research topic and its proposed solution, providing the procedural overview this study needed.

- Section 3 - Methodology: describes the technical approach, a methodological strategy that breaks the project into manageable, sequential steps.

- Section 4 - Results & Evaluation: critically evaluates all the experiments performed.

- Section 5 - Conclusion & Future Work: presents the insights gained from this research and recommendations for improving upon it.

2. Related Work

2.1. Waste Object Detection Using Different Techniques

2.2. Object Detection Using YOLO

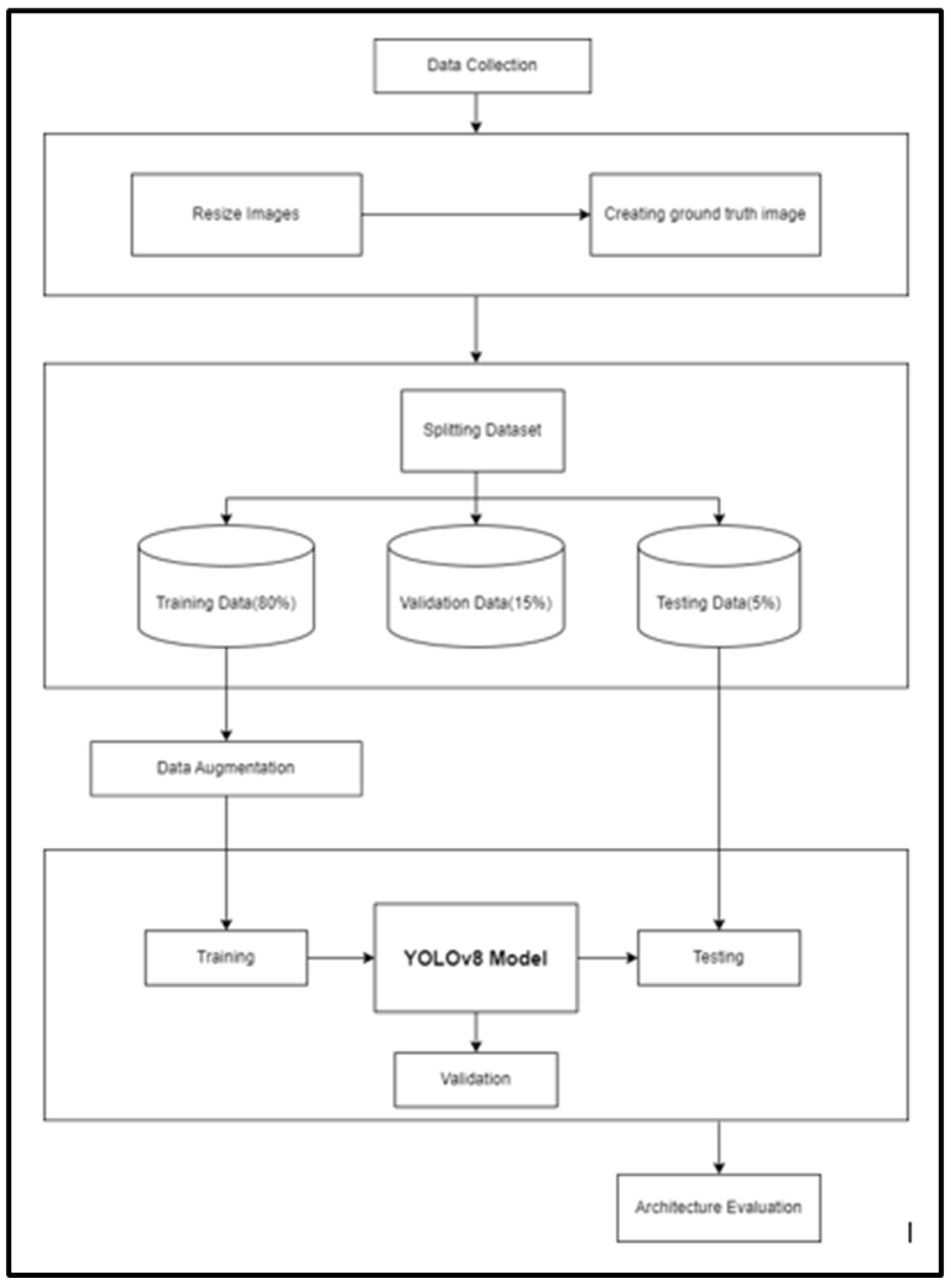

3. Methodology

3.1. Data Collection

3.2. Preprocessing and Data Split

3.3. Data Augmentation

3.4. Splitting Dataset

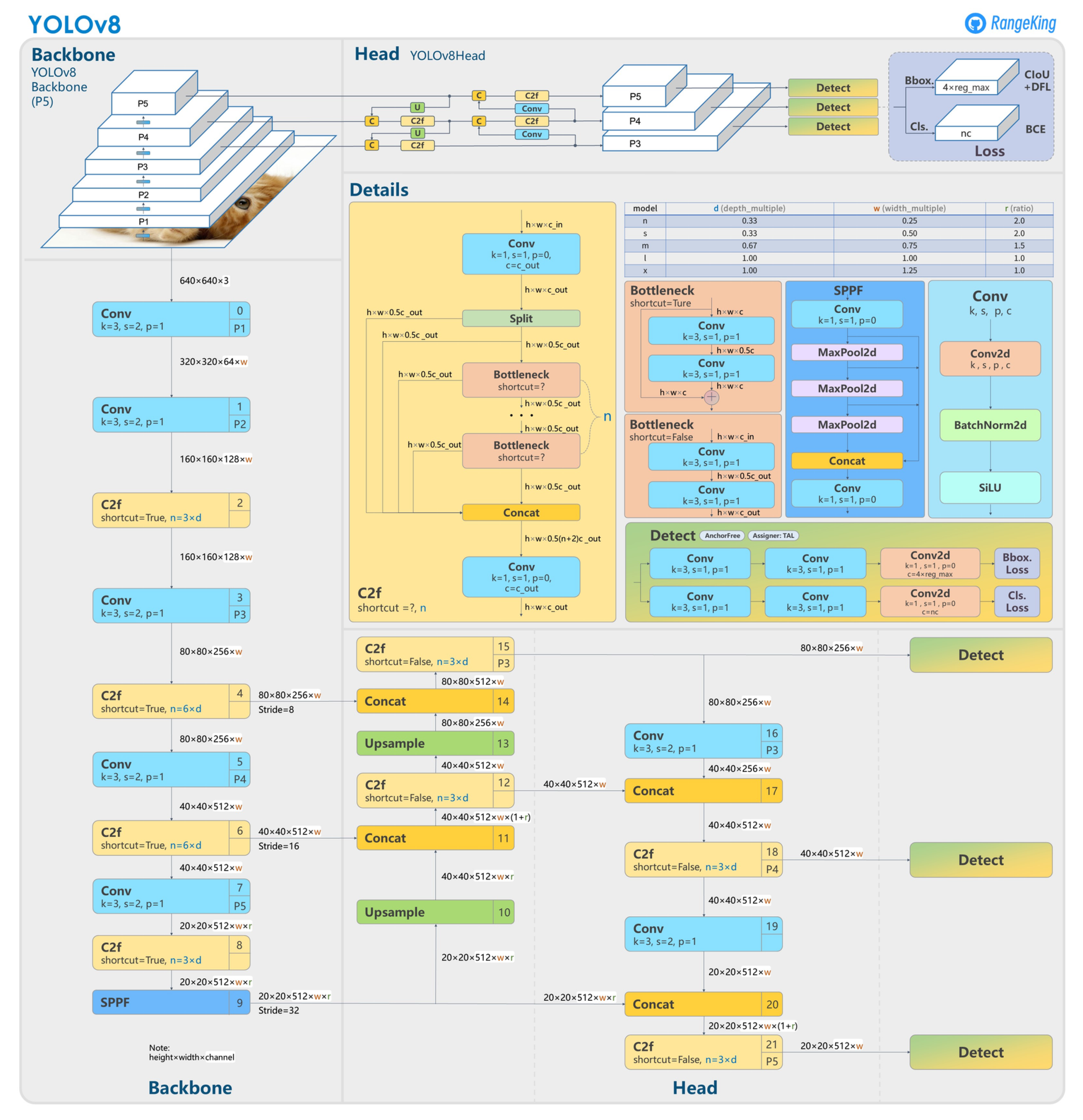

3.5. Modelling

3.6. Model Building

3.7. Architecture Evaluation

4. Evaluation

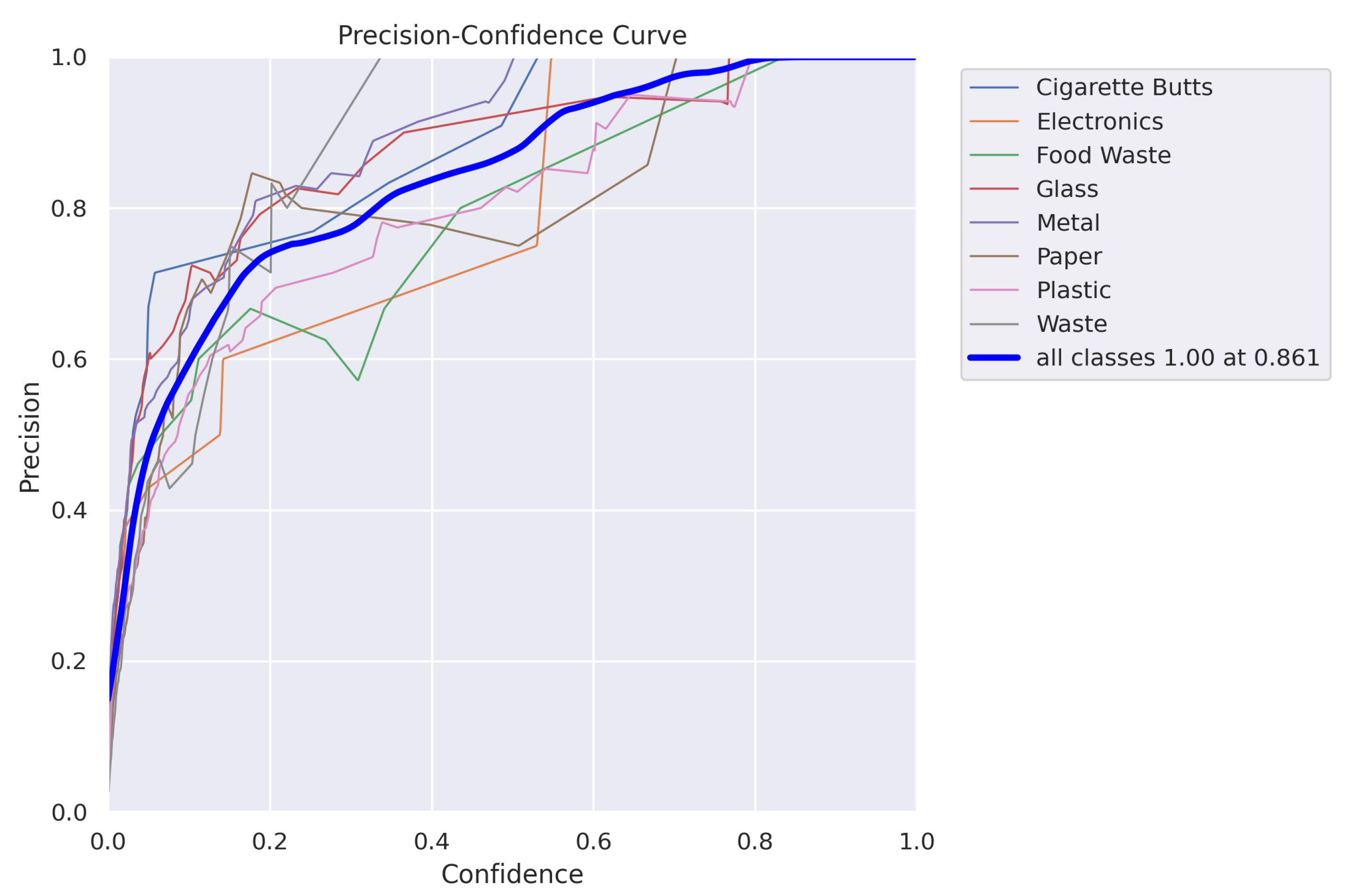

4.1. Precision

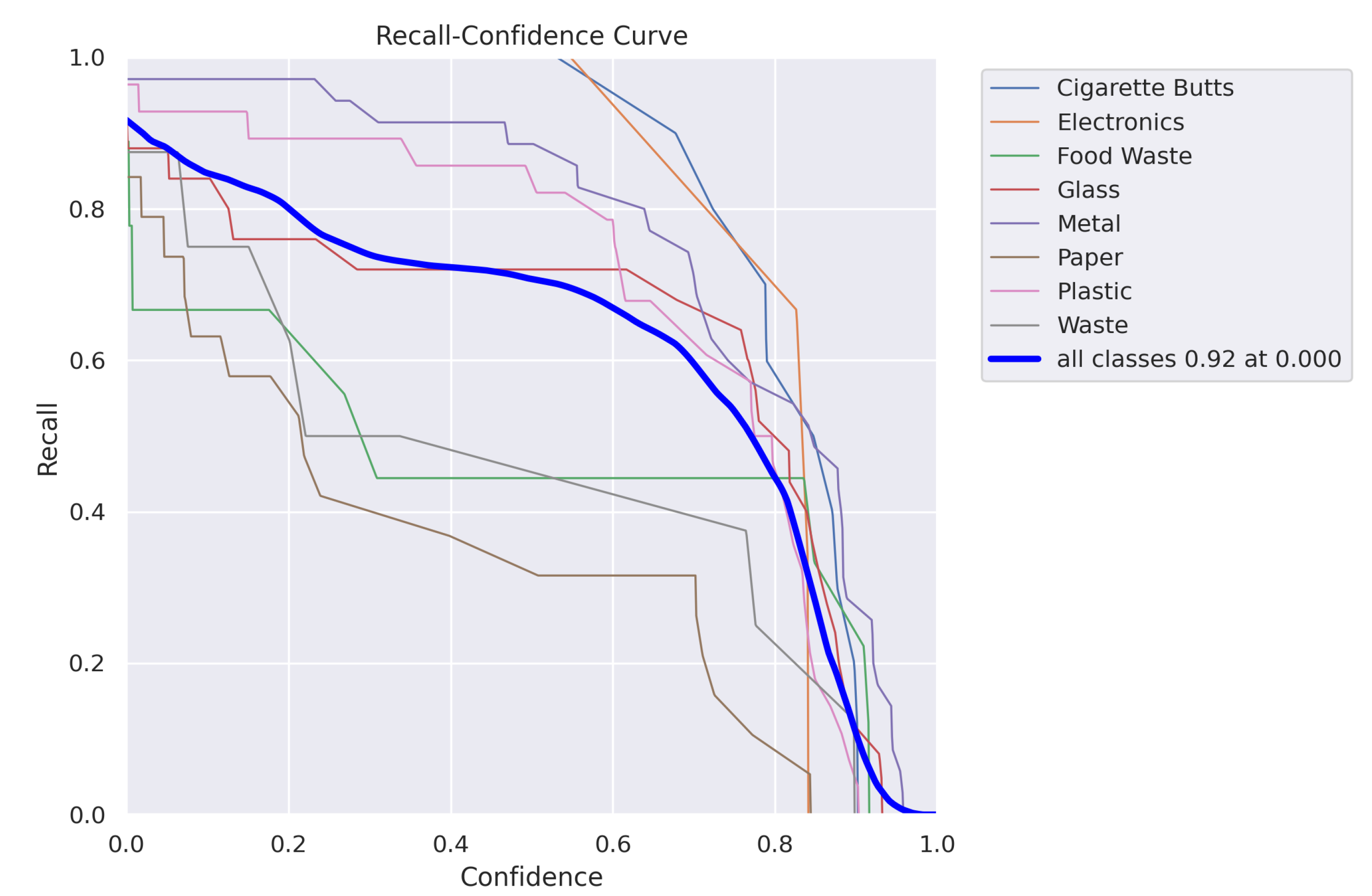

4.2. Recall

5. Conclusions and Future Work

Acknowledgments

References

- Kumar, T.; Mileo, A.; Bendechache, M. KeepOriginalAugment: Single Image-based Better Information-Preserving Data Augmentation Approach. In Proceedings of the 20th International Conference on Artificial Intelligence Applications and Innovations; 2024.

- Kumar, T.; Mileo, A.; Brennan, R.; Bendechache, M. RSMDA: Random Slices Mixing Data Augmentation. Applied Sciences 2023, 13, 1711.

- Chandio, A.; Gui, G.; Kumar, T.; Ullah, I.; Ranjbarzadeh, R.; Roy, A.M.; Hussain, A.; Shen, Y. Precise single-stage detector. arXiv 2022.

- Kumar, T.; Mileo, A.; Brennan, R.; Bendechache, M. Advanced Data Augmentation Approaches: A Comprehensive Survey and Future Directions. arXiv 2023, arXiv:2301.02830.

- Kumar, T.; Park, J.; Ali, M.S.; Uddin, A.S.; Ko, J.H.; Bae, S.H. Binary-classifiers-enabled filters for semi-supervised learning. IEEE Access 2021, 9, 167663–167673.

- Roy, A.M.; Bhaduri, J.; Kumar, T.; Raj, K. A computer vision-based object localization model for endangered wildlife detection. Ecological Economics, Forthcoming 2022.

- Kumar, T.; Brennan, R.; Mileo, A.; Bendechache, M. Image data augmentation approaches: A comprehensive survey and future directions. IEEE Access 2024.

- Chandio, A.; Shen, Y.; Bendechache, M.; Inayat, I.; Kumar, T. AUDD: audio Urdu digits dataset for automatic audio Urdu digit recognition. Applied Sciences 2021, 11, 8842.

- Turab, M.; Kumar, T.; Bendechache, M.; Saber, T. Investigating multi-feature selection and ensembling for audio classification. International Journal of Artificial Intelligence & Applications 2022.

- Raj, K.; Singh, A.; Mandal, A.; Kumar, T.; Roy, A.M. Understanding EEG signals for subject-wise definition of armoni activities. arXiv 2023.

- Kumar, T.; Park, J.; Bae, S.H. Intra-Class Random Erasing (ICRE) augmentation for audio classification. In Proceedings of the Korean Society of Broadcast Engineers Conference; The Korean Institute of Broadcast and Media Engineers, 2020; pp. 244–247.

- Park, J.; Kumar, T.; Bae, S.H. Search of an optimal sound augmentation policy for environmental sound classification with deep neural networks. In Proceedings of the Korean Society of Broadcast Engineers Conference; The Korean Institute of Broadcast and Media Engineers, 2020; pp. 18–21.

- Singh, A.; Raj, K.; Meghwar, T.; Roy, A.M. Efficient Paddy Grain Quality Assessment Approach Utilizing Affordable Sensors. Artificial Intelligence 2024, 5, 686–703.

- Khan, W.; Kumar, T.; Cheng, Z.; Raj, K.; Roy, A.; Luo, B. SQL and NoSQL Databases Software architectures performance analysis and assessments – A Systematic Literature review. arXiv 2022, arXiv:2209.06977.

- Turab, M.; Kumar, T.; Bendechache, M.; Saber, T. Investigating multi-feature selection and ensembling for audio classification. arXiv 2022, arXiv:2206.07511.

- Kumar, T.; Bhujbal, R.; Raj, K.; Roy, A.M. Navigating Complexity: A Tailored Question-Answering Approach for PDFs in Finance, Bio-Medicine, and Science. 2024.

- Barua, M.; Kumar, T.; Raj, K.; Roy, A.M. Comparative Analysis of Deep Learning Models for Stock Price Prediction in the Indian Market. 2024.

- Raj, K.; Mileo, A. Towards Understanding Graph Neural Networks: Functional-Semantic Activation Mapping. In Proceedings of the International Conference on Neural-Symbolic Learning and Reasoning; Springer, 2024; pp. 98–106.

- Zhu, L.; Husny, Z.J.B.M.; Samsudin, N.A.; Xu, H.; Han, C. Deep learning method for minimizing water pollution and air pollution in urban environment. Urban Climate 2023, 49, 101486.

- Ayturan, Y.A.; Ayturan, Z.C.; Altun, H.O. Air pollution modelling with deep learning: a review. International Journal of Environmental Pollution and Environmental Modelling 2018, 1, 58–62.

- India Today. India’s Trash Bomb: 80% of 1.5 Lakh Metric Tonne Daily Garbage Remains Exposed, Untreated. India Today, 2019.

- Liu, Z.; Adams, M.; Walker, T.R. Are exports of recyclables from developed to developing countries waste pollution transfer or part of the global circular economy? Resources, Conservation and Recycling 2018, 136, 22–23.

- Beltrami, E.J.; Bodin, L.D. Networks and vehicle routing for municipal waste collection. Networks 1974, 4, 65–94.

- Delalleau, O.; Bengio, Y. Shallow vs. deep sum-product networks. Advances in Neural Information Processing Systems 2011, 24.

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. Journal of Big Data 2015, 2, 1–21.

- Soni, G.; Kandasamy, S. Smart Garbage Bin Systems – A Comprehensive Survey. In Proceedings of the Smart Secure Systems – IoT and Analytics Perspective; Venkataramani, G.P., Sankaranarayanan, K., Mukherjee, S., Arputharaj, K., Sankara Narayanan, S., Eds.; Singapore, 2018; pp. 194–206.

- Sakr, G.E.; Mokbel, M.; Darwich, A.; Khneisser, M.N.; Hadi, A. Comparing deep learning and support vector machines for autonomous waste sorting. In Proceedings of the 2016 IEEE International Multidisciplinary Conference on Engineering Technology (IMCET); IEEE, 2016; pp. 207–212.

- Yang, M.; Thung, G. Classification of trash for recyclability status. CS229 Project Report 2016, 2016, 3.

- Hulyalkar, S.; Deshpande, R.; Makode, K.; Kajale, S. Implementation of smartbin using convolutional neural networks. Int. Res. J. Eng. Technol. 2018, 5, 1–7.

- Sejera, M.; Ibarra, J.B.; Canare, A.S.; Escano, L.; Mapanoo, D.C.; Suaviso, J.P. Standalone frequency based automated trash bin and segregator of plastic bottles and tin cans. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON); IEEE, 2016; pp. 2370–2372.

- Kulkarni, H.; Raman, N. Waste Object Detection and Classification; CS230 Report: Deep Learning, 2018.

- Macasaet, N.A.G.; Martinez, E.R.R.; Vergara, E.M. Automatic Segregation of Limited Wastes through Tiny YOLOv3 Algorithm. In Proceedings of the 2022 13th International Conference on Computing Communication and Networking Technologies (ICCCNT), Oct 2022; pp. 1–6.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016; pp. 779–788.

- Zhou, Q.; Liu, H.; Qiu, Y.; Zheng, W. Object Detection for Construction Waste Based on an Improved YOLOv5 Model. Sustainability 2023, 15.

- Lin, W. YOLO-Green: A Real-Time Classification and Object Detection Model Optimized for Waste Management. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data); 2021; pp. 51–57.

- Wan, D.; Lu, R.; Wang, S.; Shen, S.; Xu, T.; Lang, X. YOLO-HR: Improved YOLOv5 for Object Detection in High-Resolution Optical Remote Sensing Images. Remote Sensing 2023, 15.

- Wang, T.; Cai, Y.; Liang, L.; Ye, D. A multi-level approach to waste object segmentation. Sensors 2020, 20, 3816.


| W/o Augmentation | With Augmentation | ||||

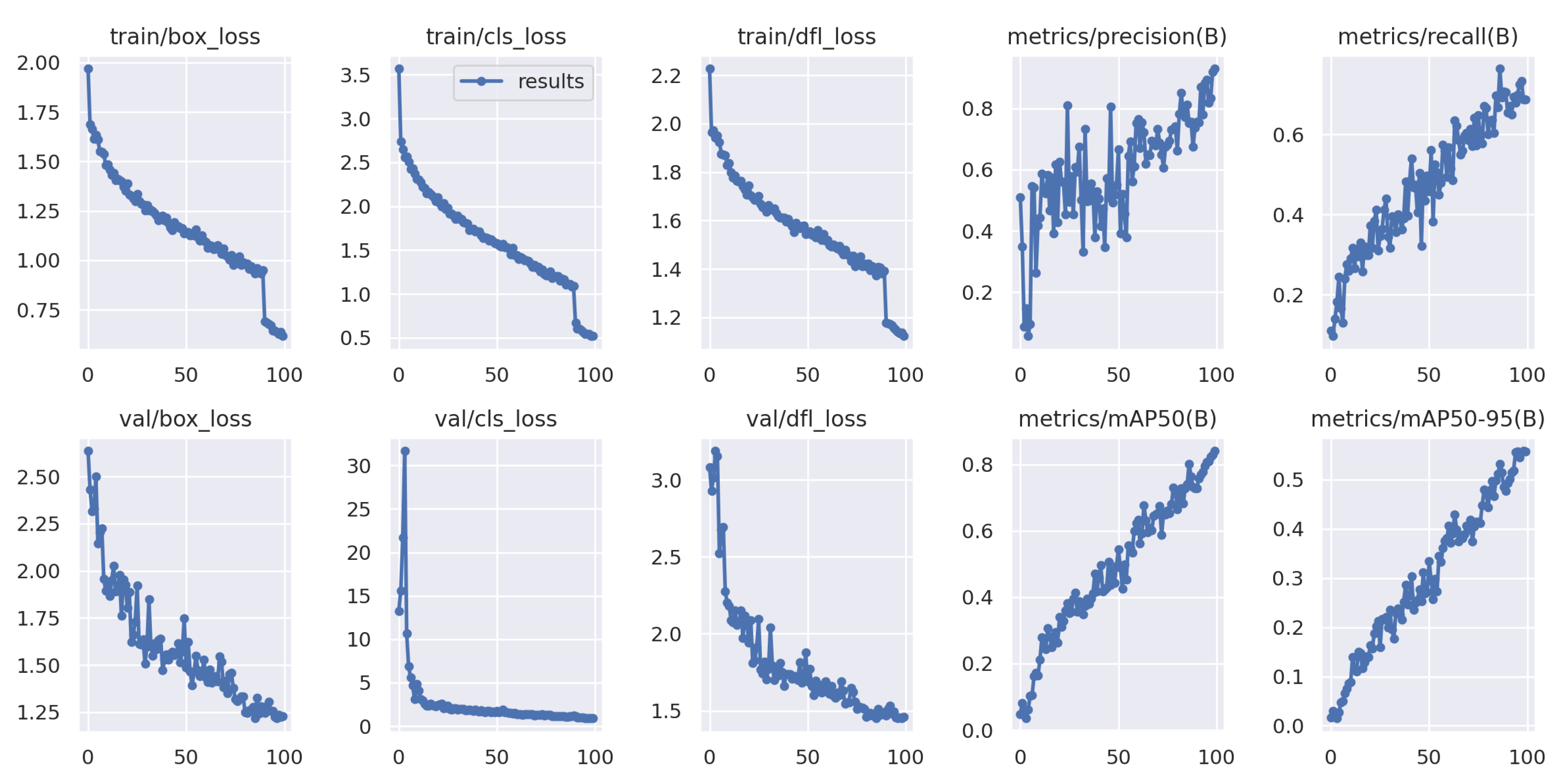

| Epochs | 50 | 100 | 50 | 100 | |

| Model | Model 1 | Model 2 | Model 3 | Model 4 | |

| mAP50 | 0.741 | 0.774 | 0.594 | 0.841 | |

| mAP50-95 | 0.544 | 0.555 | 0.406 | 0.557 | |

| W/o Augmentation | With Augmentation | ||||

| Epochs | 50 | 100 | 50 | 100 | |

| Model | Model 5 | Model 6 | Model 7 | Model 8 | |

| mAP50 | 0.505 | 0.688 | 0.41 | 0.541 | |

| mAP50-95 | 0.338 | 0.491 | 0.212 | 0.328 | |
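Read as a 2 × 2 design (augmentation × epochs), the two results tables show that augmentation raised mAP50 only for Models 1–4 at 100 epochs (+0.067), while at 50 epochs it lowered mAP50 in both tables, and for Models 5–8 it lowered mAP50 at both epoch counts. The short Python sketch below reproduces these differences directly from the table values. The dictionary layout and function names are illustrative choices, and since the tables themselves do not name the detector behind each group, the groups are labelled only by model numbers.

```python
"""Compare configurations in the two results tables (values copied verbatim).

Keys are (augmented, epochs); values are (mAP50, mAP50-95).
"""
TABLE_MODELS_1_4 = {
    (False, 50): (0.741, 0.544),   # Model 1
    (False, 100): (0.774, 0.555),  # Model 2
    (True, 50): (0.594, 0.406),    # Model 3
    (True, 100): (0.841, 0.557),   # Model 4
}
TABLE_MODELS_5_8 = {
    (False, 50): (0.505, 0.338),   # Model 5
    (False, 100): (0.688, 0.491),  # Model 6
    (True, 50): (0.41, 0.212),     # Model 7
    (True, 100): (0.541, 0.328),   # Model 8
}
MAP50, MAP50_95 = 0, 1  # index into each (mAP50, mAP50-95) tuple


def augmentation_effect(table, epochs, metric=MAP50):
    """Change in the metric when augmentation is added, at a fixed epoch count."""
    return round(table[(True, epochs)][metric] - table[(False, epochs)][metric], 3)


def epoch_effect(table, augmented, metric=MAP50):
    """Change in the metric when training goes from 50 to 100 epochs."""
    return round(table[(augmented, 100)][metric] - table[(augmented, 50)][metric], 3)


for name, table in [("Models 1-4", TABLE_MODELS_1_4), ("Models 5-8", TABLE_MODELS_5_8)]:
    for epochs in (50, 100):
        print(f"{name}: augmentation effect on mAP50 at {epochs} epochs:",
              augmentation_effect(table, epochs))
    for aug in (False, True):
        print(f"{name}: 50 -> 100 epochs (augmented={aug}):", epoch_effect(table, aug))

# Best configuration by mAP50 within the first table.
best = max(TABLE_MODELS_1_4.items(), key=lambda kv: kv[1][MAP50])
print("Best (augmented, epochs) for Models 1-4:", best[0], "mAP50 =", best[1][MAP50])
```

Longer training helped in every case, and most of all when augmentation was used (+0.247 mAP50 for Models 3 to 4), which is consistent with augmented data needing more epochs before it pays off.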

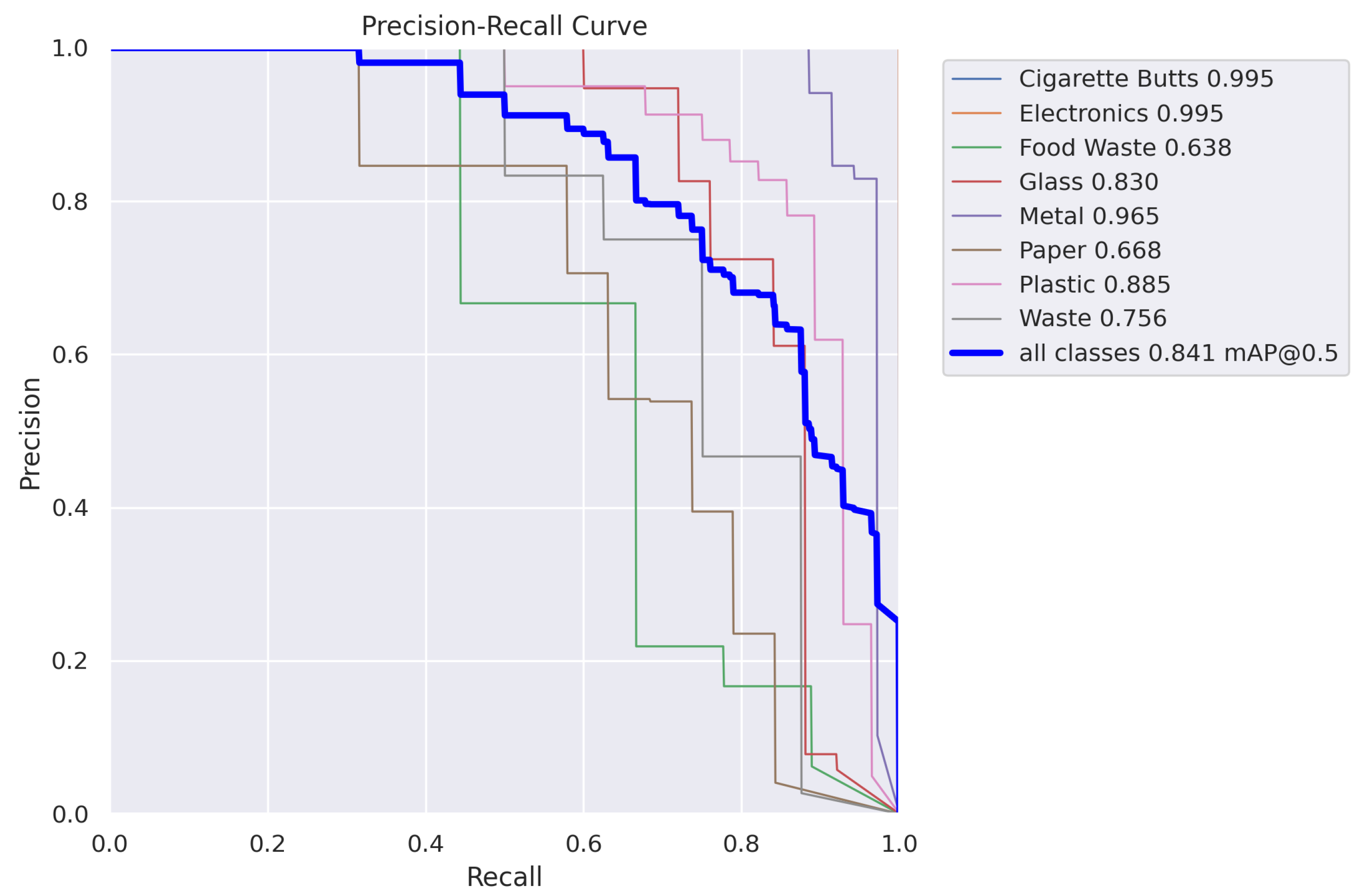

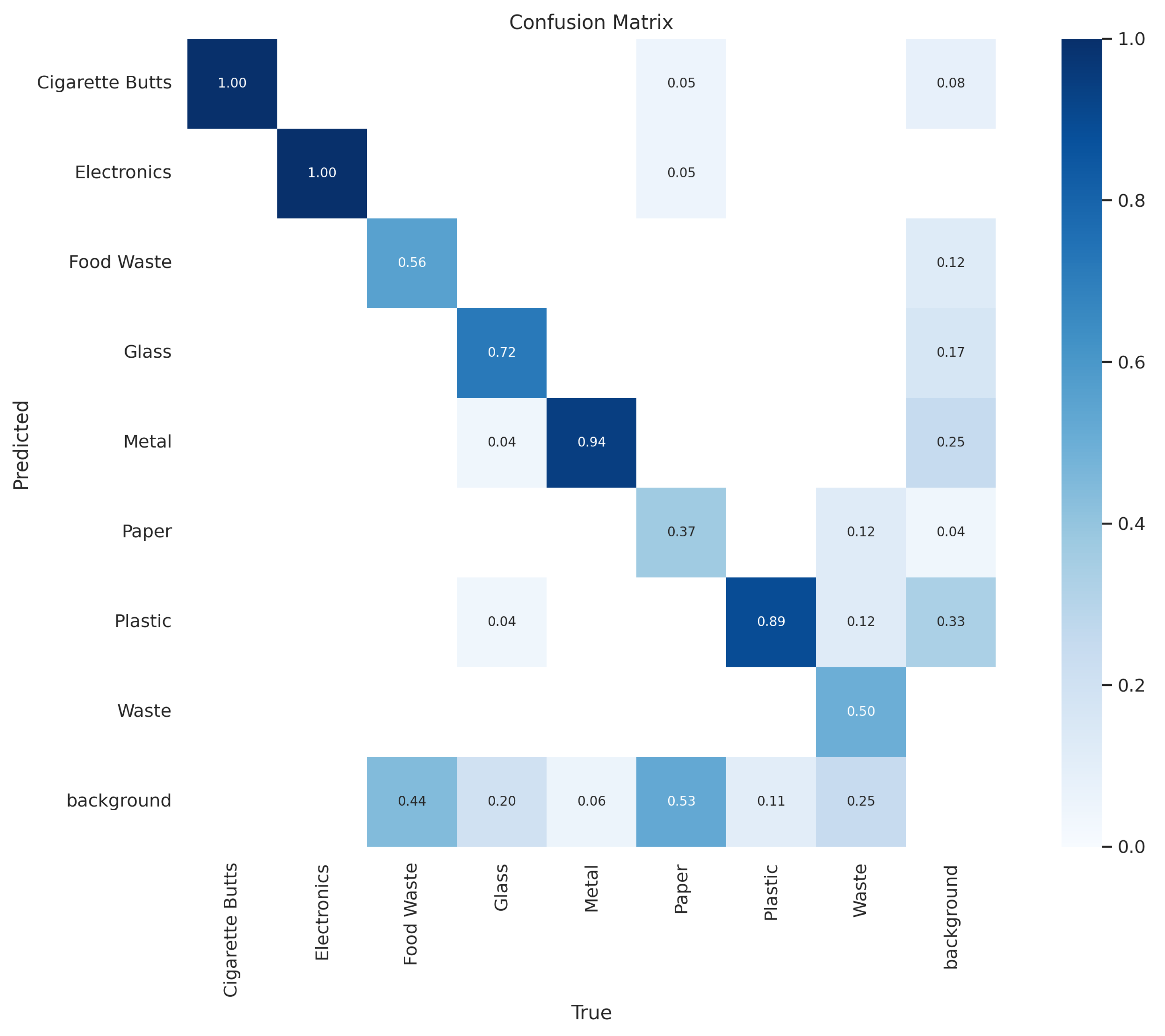

| Class | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|
| All | 0.93 | 0.687 | 0.841 | 0.557 |
| Cigarette Butts | 1 | 0.976 | 0.995 | 0.592 |
| Electronics | 1 | 0.979 | 0.995 | 0.764 |
| Food Waste | 0.865 | 0.444 | 0.638 | 0.434 |
| Glass | 0.938 | 0.72 | 0.83 | 0.602 |
| Metal | 1 | 0.825 | 0.965 | 0.684 |
| Paper | 0.789 | 0.316 | 0.668 | 0.35 |
| Plastic | 0.849 | 0.804 | 0.885 | 0.595 |
| Waste | 1 | 0.433 | 0.756 | 0.437 |
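In the per-class table, Food Waste, Paper, and Waste combine high precision with low recall, meaning the model misses many instances of these classes rather than producing false positives. One way to summarise that trade-off in a single number is the F1 score, the harmonic mean of precision and recall. F1 is not reported in the table; the Python sketch below derives it from the listed values and also computes unweighted (macro) means over the eight classes, which appear to reproduce the "All" row to within rounding.

```python
"""Per-class F1 derived from the per-class results table (values copied verbatim)."""
PER_CLASS = {
    # class: (precision, recall, mAP50, mAP50-95)
    "Cigarette Butts": (1.0, 0.976, 0.995, 0.592),
    "Electronics": (1.0, 0.979, 0.995, 0.764),
    "Food Waste": (0.865, 0.444, 0.638, 0.434),
    "Glass": (0.938, 0.72, 0.83, 0.602),
    "Metal": (1.0, 0.825, 0.965, 0.684),
    "Paper": (0.789, 0.316, 0.668, 0.35),
    "Plastic": (0.849, 0.804, 0.885, 0.595),
    "Waste": (1.0, 0.433, 0.756, 0.437),
}


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1_scores = {cls: f1(p, r) for cls, (p, r, _, _) in PER_CLASS.items()}

# Print classes from weakest to strongest F1.
for cls, score in sorted(f1_scores.items(), key=lambda kv: kv[1]):
    print(f"{cls:16s} F1 = {score:.3f}")

# Unweighted (macro) means over the eight classes, for comparison with the "All" row.
macro = [sum(row[i] for row in PER_CLASS.values()) / len(PER_CLASS) for i in range(4)]
print("macro precision, recall, mAP50, mAP50-95:", [round(m, 3) for m in macro])
```

Paper has the lowest F1 (about 0.451), followed by Food Waste and Waste, so these three classes are where additional training data or class-specific augmentation would be expected to matter most.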

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).