Submitted: 25 July 2025

Posted: 25 July 2025

Abstract

Keywords:

1. Introduction

2. Related Work

3. Proposed Method

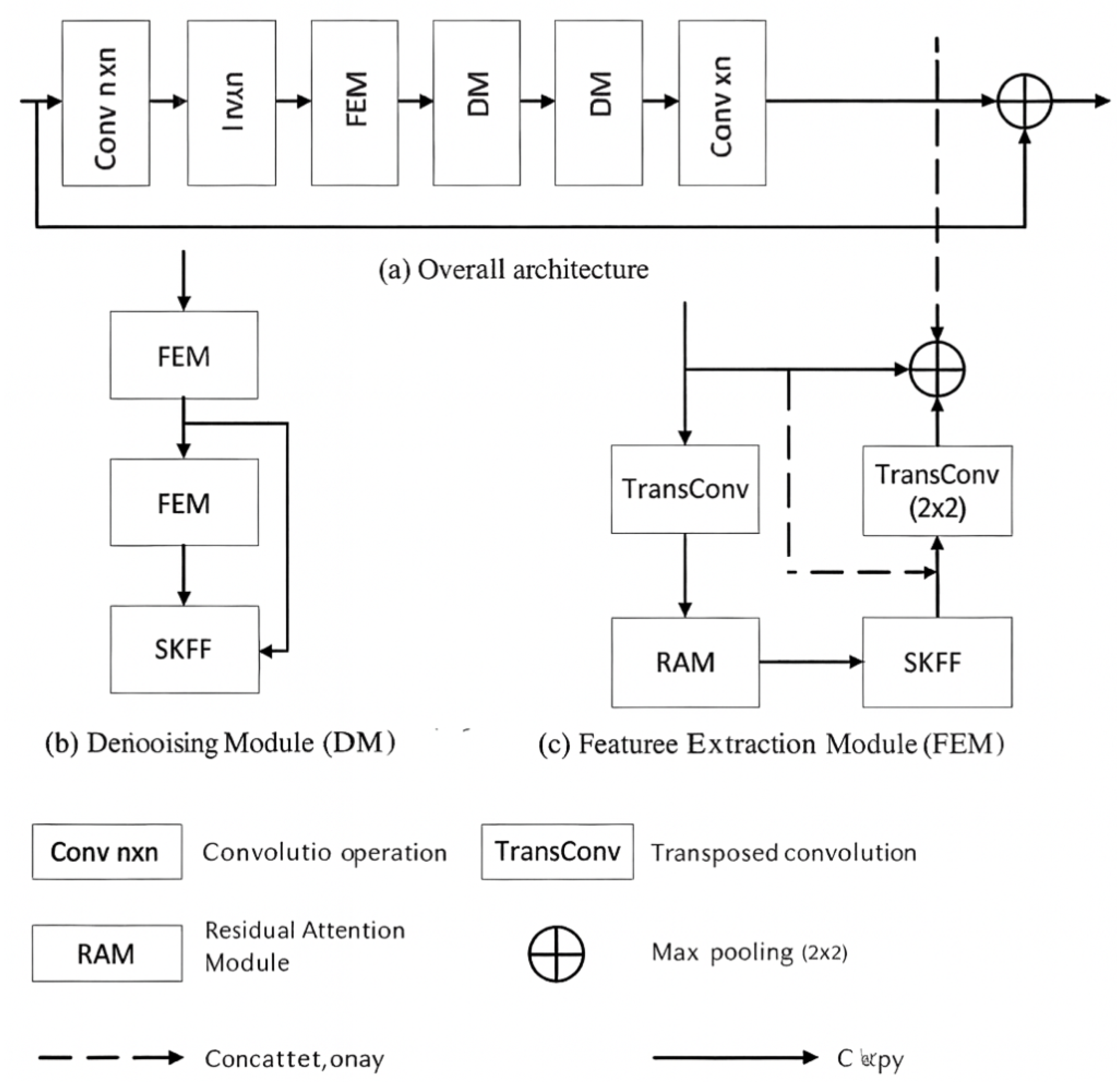

3.1. RAFDN Architecture

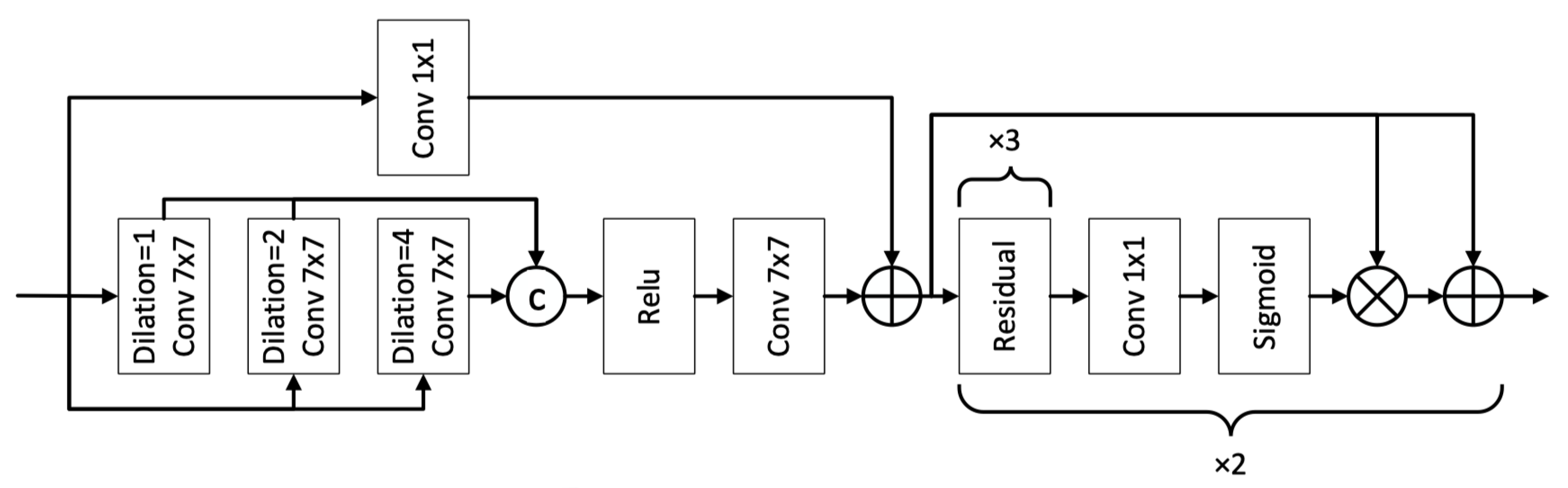

3.2. RAM Structure

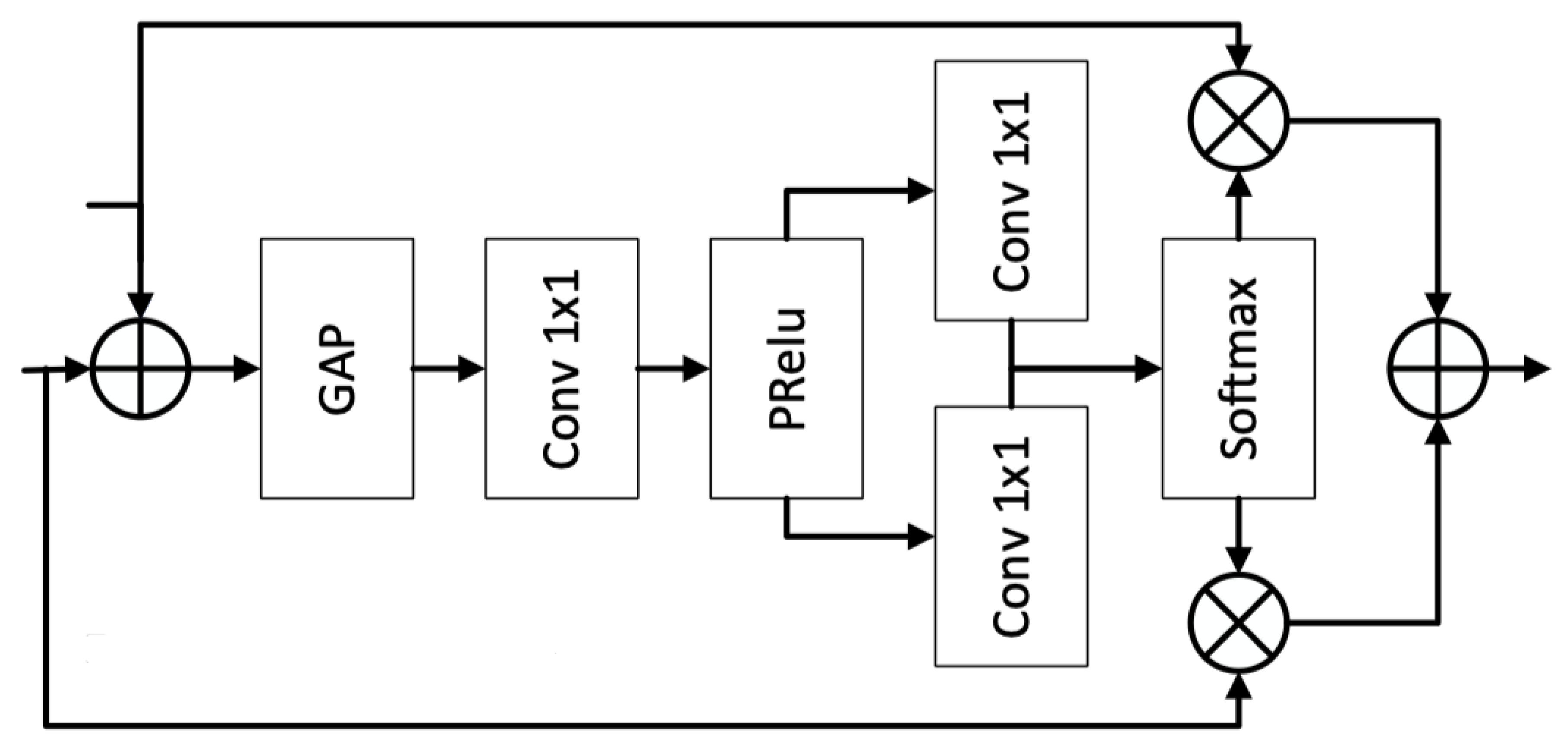

3.3. SKFF Structure

4. Experimental Analysis and Results

4.1. Experimental Data and Environment Settings

4.2. Experimental Results

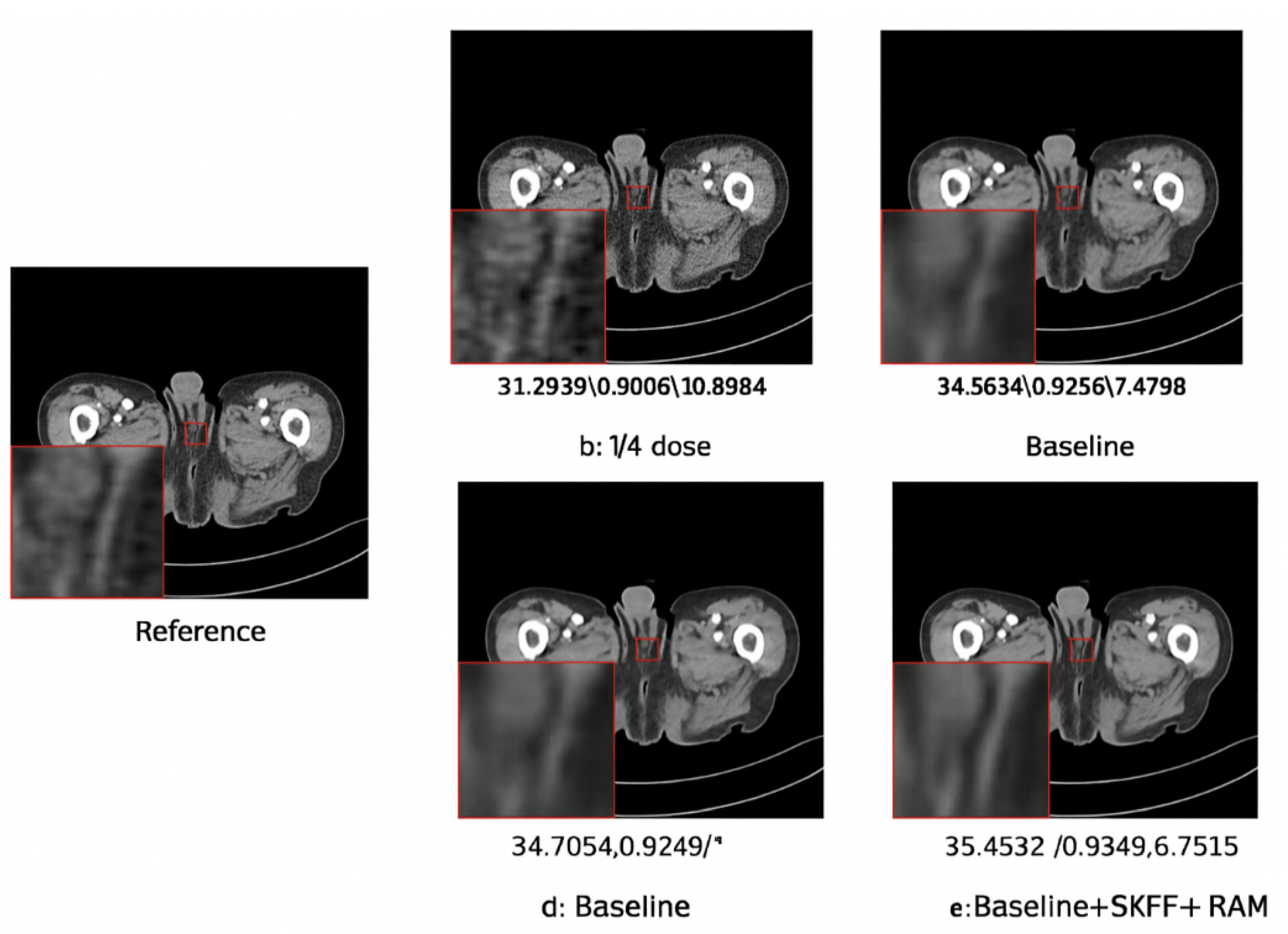

4.2.1. Ablation Study

4.2.2. Comparison with Other Methods

5. Conclusions

| Metric | Baseline | Baseline + SKFF | Baseline + SKFF + RAM |
|---|---|---|---|
| PSNR (dB) | | | |
| SSIM | | | |
| RMSE | | | |

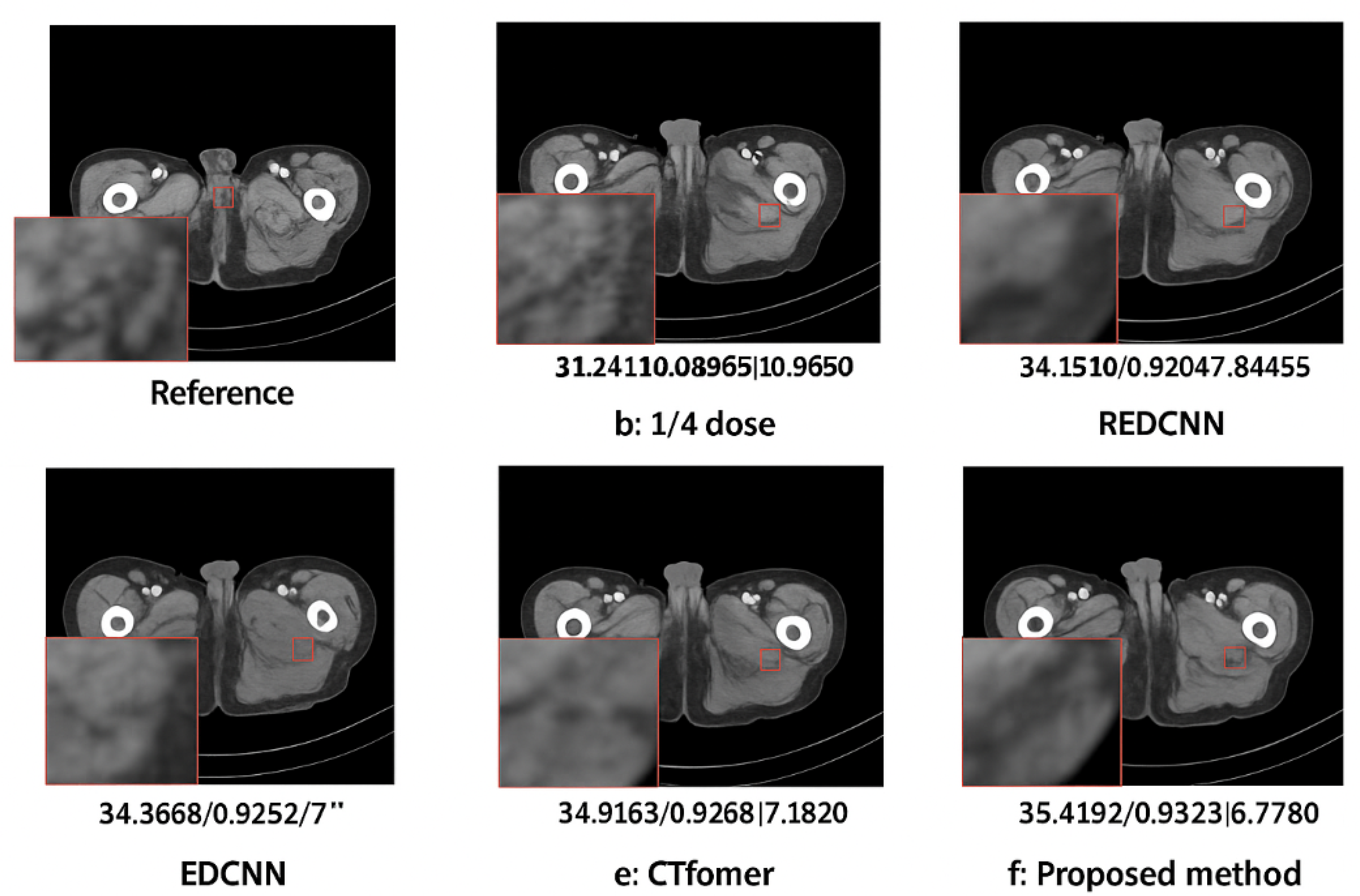

| Method | PSNR (dB) | SSIM | RMSE |
|---|---|---|---|
| LDCT | 29.248 ± 2.110 | 0.875 ± 0.036 | 14.241 ± 3.952 |
| REDCNN | 32.329 ± 1.833 | 0.905 ± 0.028 | 9.863 ± 2.044 |
| EDCNN | 32.308 ± 2.139 | 0.907 ± 0.031 | 9.964 ± 2.543 |
| CTformer | 33.080 ± 1.868 | 0.911 ± 0.030 | 9.071 ± 2.054 |
| Proposed Method | 33.618 ± 1.881 | 0.916 ± 0.029 | 8.528 ± 1.938 |
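For readers who want to see how scores of the kind tabulated above are usually obtained, the following is a minimal sketch of the standard RMSE and PSNR definitions together with a mean ± standard deviation summary over per-slice scores. It is not the authors' evaluation code. The function names, the flat-list image representation, the `data_range` parameter, and the use of the sample (rather than population) standard deviation are all assumptions made for illustration; the intensity range and windowing behind the reported values are not stated here.

```python
import math
from statistics import mean, stdev


def rmse(reference, estimate):
    """Root-mean-square error between two equally sized flat pixel lists."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    squared = [(r - e) ** 2 for r, e in zip(reference, estimate)]
    return math.sqrt(sum(squared) / len(squared))


def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB.

    data_range is the assumed peak-to-peak intensity of the images
    (hypothetical parameter; the value used for the table is not given).
    """
    error = rmse(reference, estimate)
    if error == 0:
        return math.inf  # identical images
    return 20.0 * math.log10(data_range / error)


def summarize(scores):
    """Return (mean, sample standard deviation) of per-slice scores."""
    return mean(scores), stdev(scores)
```

Under these definitions a lower RMSE yields a higher PSNR for a fixed `data_range`, which matches the ordering of the methods in the table. SSIM is omitted from the sketch because it depends on window size and constants that are not specified here.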

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).