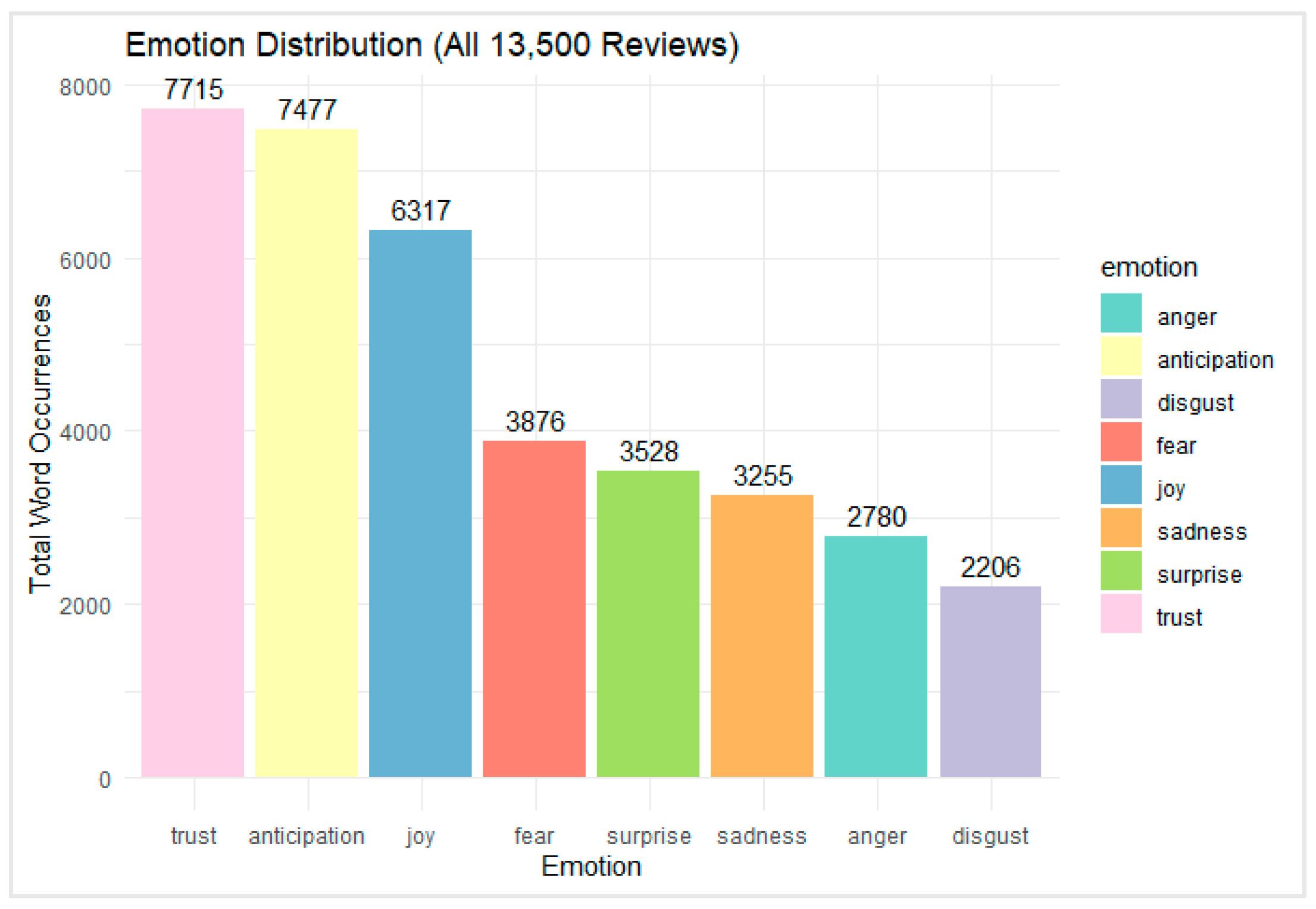

On the other hand, an emotion-based sentiment analysis was conducted on 13,500 user reviews of the Netflix streaming application. As illustrated in Figure 3, the emotional landscape of user feedback is predominantly positive, with trust (n = 7,715), anticipation (n = 7,477), and joy (n = 6,317) emerging as the top three emotional categories. Because a single review can contribute to more than one emotion category, these counts sum to more than the number of reviews. These findings indicate a high level of user confidence in the platform, alongside feelings of excitement and satisfaction with the content and service. Negative emotions such as fear (n = 3,876), sadness (n = 3,255), anger (n = 2,780), and disgust (n = 2,206) were also present but occurred less frequently. These emotions likely reflect frustration with technical issues, customer service, or specific content themes. Surprise (n = 3,528) appears to play a more neutral or mixed role, potentially corresponding to unexpected content outcomes or features.
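The text does not state how the emotion counts were produced. As a hedged illustration only, the sketch below assumes a lexicon-based approach in the style of word-emotion association lexicons (for example, NRC EmoLex, which uses the same eight emotions), with a tiny hypothetical lexicon `TOY_LEXICON` and each emotion counted at most once per review. The eight reported counts sum to 37,154 against 13,500 reviews, so whatever method was used, the categories are not mutually exclusive; the sketch shows one way that arises.

```python
import re
from collections import Counter

# Hypothetical toy lexicon (word -> emotions); NOT the lexicon used in the study.
TOY_LEXICON = {
    "love": {"joy", "trust"},
    "great": {"joy", "trust", "anticipation"},
    "crash": {"anger", "fear", "sadness"},
    "waiting": {"anticipation"},
    "cancelled": {"anger", "sadness"},
}


def emotion_counts(reviews, lexicon):
    """Count how many reviews contain at least one word tagged with each emotion."""
    counts = Counter()
    for text in reviews:
        emotions = set()
        for word in re.findall(r"[a-z']+", text.lower()):
            emotions |= lexicon.get(word, set())
        counts.update(emotions)  # each emotion counted at most once per review
    return counts


# Hypothetical reviews.
reviews = [
    "I love this app",
    "Great shows, love it",
    "App keeps crash after update",
    "Waiting for the new season",
]
counts = emotion_counts(reviews, TOY_LEXICON)
print(counts.most_common())
```

Here four reviews produce nine emotion tallies, mirroring how the totals in Figure 3 can exceed the number of reviews.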

The prevalence of trust and anticipation suggests that users not only rely on Netflix but also look forward to its content updates and releases. Meanwhile, the notable expression of joy affirms overall satisfaction with the entertainment experience. In summary, the emotional profile of Netflix user reviews skews strongly positive, reinforcing earlier findings from the word cloud analysis. Negative emotions are present but do not dominate the overall sentiment landscape, suggesting high user engagement and general approval of the streaming service.

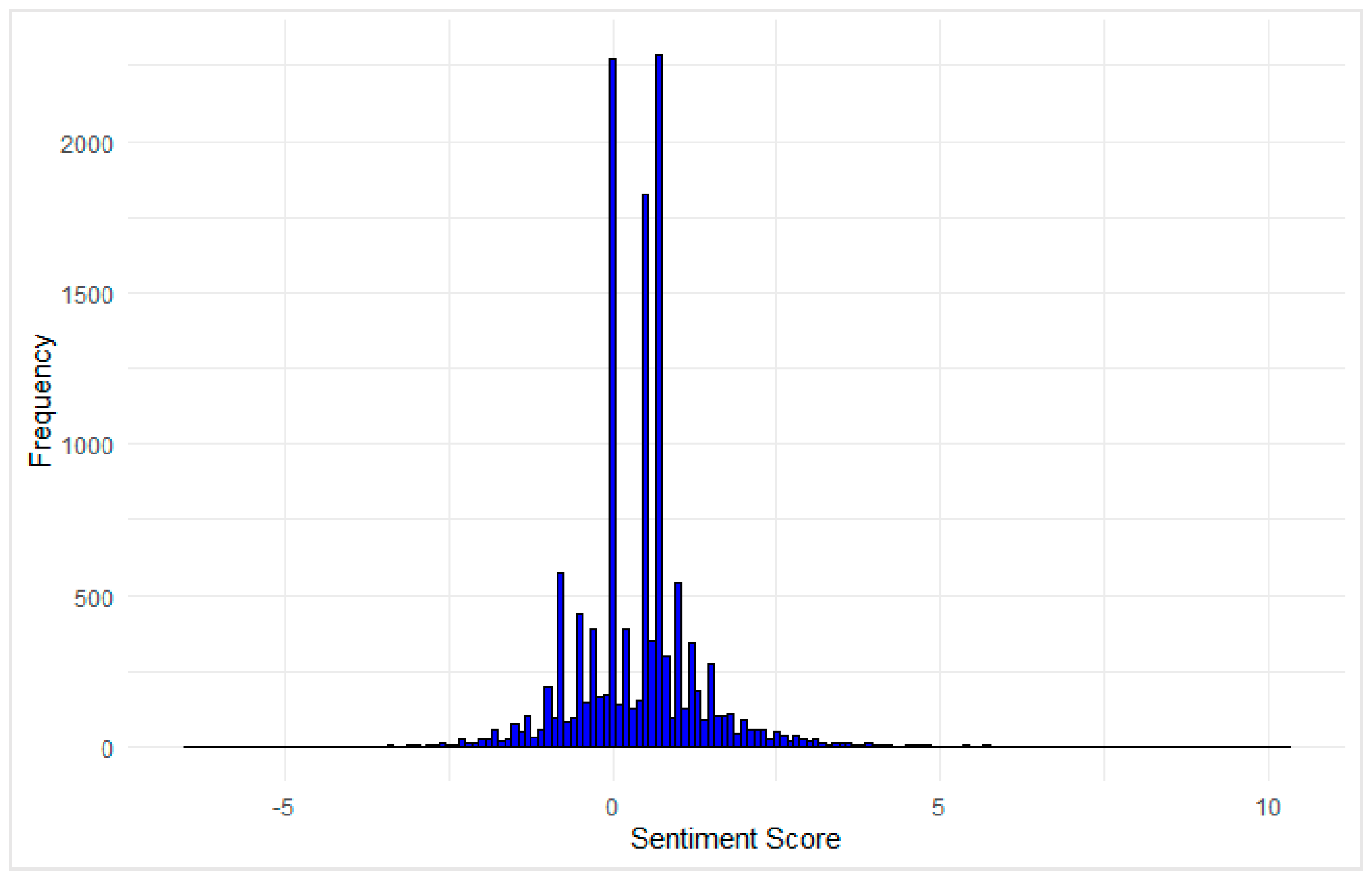

The relatively narrow spread and sharp peak also suggest that most reviews are moderately opinionated rather than strongly polarized. This pattern is consistent with earlier findings from the word cloud and emotion analysis, which indicated a strong prevalence of positive terms such as “good,” “love,” and “awesome,” while negative expressions were fewer and less intense. Overall, the sentiment score distribution supports the conclusion that user feedback on the Netflix streaming app is predominantly neutral to positive, with minimal extreme sentiment in either direction.

a minority of users who expressed dissatisfaction or encountered issues with the app. These proportions reinforce previous findings from the word cloud, emotion distribution, and sentiment score analyses, all of which collectively demonstrate a sentiment landscape that is predominantly positive, with only limited expressions of negativity. This pattern suggests strong user engagement and general satisfaction with the Netflix streaming experience.

3.1. Statistical Findings

Statistical findings in sentiment analysis serve to quantify and validate subjective textual data. By applying statistical methods, researchers can identify patterns, test hypotheses, and assess relationships between sentiment and external variables [6]. These findings enable the transformation of qualitative opinions into measurable insights, supporting evidence-based conclusions and enhancing model reliability [10]. First, a boxplot was generated to illustrate the distribution and variability of sentiment scores across user ratings, providing an initial overview of central tendency and potential outliers.

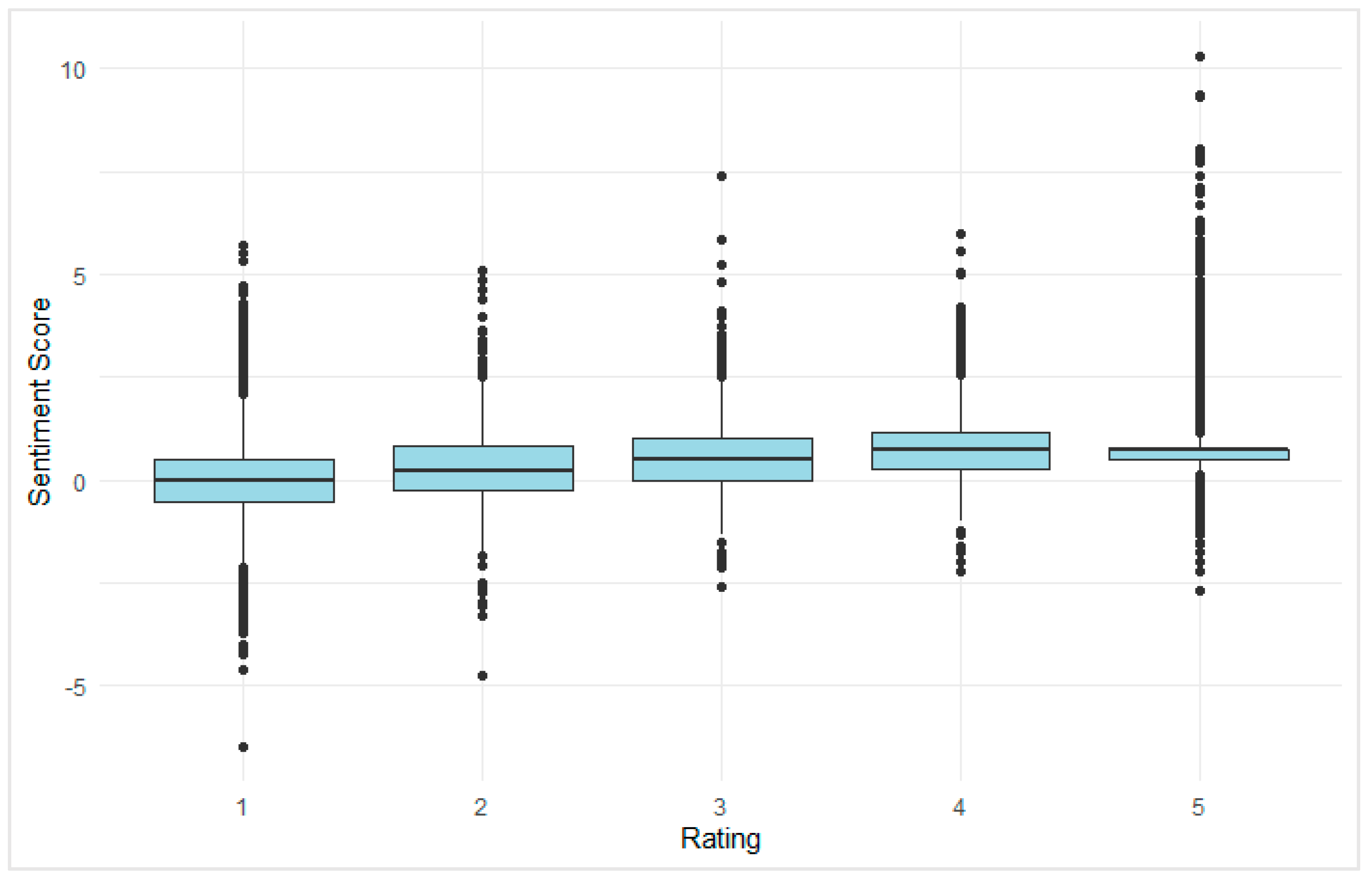

A boxplot was constructed to examine the distribution of sentiment scores across user rating categories (1 to 5 stars; see Figure 5). The analysis revealed a general upward trend in median sentiment scores with increasing rating values, suggesting a positive association between star rating and user sentiment. Specifically, the median sentiment scores were lowest for 1-star reviews and increased progressively through to 4-star reviews. Notably, the 4-star rating category exhibited the highest median sentiment score.

Despite the overall trend, the 5-star category showed a slightly lower median sentiment than the 4-star category and exhibited the widest interquartile range, indicating higher variability in sentiment. A considerable number of both high and low sentiment outliers were also observed in this category, suggesting inconsistencies between textual sentiment and the assigned rating. Conversely, the 1-star category, as expected, had the lowest median and a narrower interquartile range, yet still included a few positive sentiment outliers. This underscores the importance of analyzing numerical ratings and textual reviews in tandem to interpret user feedback accurately.
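The quantities read off a boxplot such as Figure 5 (median, interquartile range, and outliers) can be computed per rating group as sketched below. This assumes the common Tukey convention of flagging points more than 1.5 × IQR beyond the quartiles and the "inclusive" quartile method; the plotting software used in the study may differ, and the data here are hypothetical.

```python
import statistics


def box_stats(values):
    """Median, IQR and Tukey outliers (beyond 1.5 * IQR) for one group."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"median": median, "iqr": iqr, "outliers": outliers}


def box_stats_by_rating(ratings, sentiment_scores):
    """Group sentiment scores by star rating and summarise each group."""
    groups = {}
    for rating, score in zip(ratings, sentiment_scores):
        groups.setdefault(rating, []).append(score)
    return {rating: box_stats(vals) for rating, vals in sorted(groups.items())}


# Hypothetical data: three 1-star and three 5-star reviews.
summary = box_stats_by_rating([1, 1, 1, 5, 5, 5], [-2, -1, 0, 1, 3, 5])
print(summary[1]["median"], summary[5]["median"])
```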

For this reason, a statistical modeling approach was employed to gain deeper insight into the relationship between viewer responses and engagement metrics on the Netflix platform. The variables included in the analysis were user ratings (score), sentiment scores derived from review text (sentiment_score), the number of thumbs-up reactions (thumbsUpCount), and sentiment labels (sentiment_label: positive, negative, or neutral).

Spearman's rank-order correlation was computed to assess the relationships among user rating (score), thumbsUpCount, and sentiment score (sentiment_score) in Netflix user reviews. This non-parametric test was selected because the assumption of normality for sentiment_score was violated, as confirmed by histogram and Q-Q plot inspection. The Spearman correlation coefficient is given by:

$$\rho = 1 - \frac{6\sum_{i=1}^{n} d_i^{2}}{n\left(n^{2} - 1\right)}$$

where $\rho$ is Spearman's rank correlation coefficient, $d_i$ is the difference between the two ranks of observation $i$, and $n$ is the number of observations.
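A minimal pure-Python sketch of the coefficient follows. Because star ratings contain many ties, it computes ρ in the general way, as the Pearson correlation of tie-averaged ranks, and also shows the shortcut formula ρ = 1 − 6Σd²/(n(n² − 1)), which agrees with the general form exactly only when there are no ties. Packages such as `scipy.stats.spearmanr` use the tie-aware form; the function names below are illustrative.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # 0-based positions i..j -> mean 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """General (tie-aware) form: Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def spearman_rho_shortcut(x, y):
    """Textbook formula 1 - 6*sum(d^2) / (n(n^2 - 1)); exact only without ties."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n ** 2 - 1))


# Hypothetical data without ties: both forms agree.
rho = spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
print(rho, spearman_rho_shortcut([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]))
```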

Results in Table 2 revealed a moderate positive correlation between score and sentiment_score, ρ = .42, p < .001, indicating that higher app ratings were associated with more emotionally positive reviews. This correlation is statistically significant well beyond the 1% level. It is consistent with prior empirical work: [13] achieved high accuracy in classifying sentiments that aligned with users' rating behavior, and an earlier exploratory analysis of Netflix data [15] suggested systematic covariance between sentiment-derived features and rating scores. A moderate negative correlation was also observed between score and thumbsUpCount, ρ = −.29, p < .001, suggesting that lower-rated reviews tended to receive more user approval via thumbs-up reactions. Finally, a very weak negative correlation was found between sentiment_score and thumbsUpCount, ρ = −.04, p < .001; although statistically significant given the large sample, its magnitude indicates that the emotional tone of reviews had minimal association with user engagement.
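Why can ρ = −.04 reach p < .001 while being practically negligible? A common large-sample approximation tests Spearman's ρ with t = ρ√((n − 2)/(1 − ρ²)) on n − 2 degrees of freedom. The sketch below assumes that approximation, plus a normal tail for the p-value (reasonable with about 13,498 degrees of freedom), and applies it to the three reported coefficients with n = 13,500. For ρ = −.04 it gives |t| ≈ 4.65, so the significance reflects the sample size rather than the strength of the association.

```python
import math


def spearman_significance(rho, n):
    """Large-sample test of H0: rho = 0 via t = rho * sqrt((n - 2) / (1 - rho^2))."""
    t = rho * math.sqrt((n - 2) / (1 - rho ** 2))
    # With n - 2 degrees of freedom this large, the t distribution is
    # practically normal, so the two-sided p-value uses the normal tail.
    p_two_sided = math.erfc(abs(t) / math.sqrt(2))
    return t, p_two_sided


for r in (0.42, -0.29, -0.04):
    t, p = spearman_significance(r, 13_500)
    print(f"rho = {r:+.2f}: t = {t:.2f}, p = {p:.2g}")
```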

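An ordinal logistic regression analysis was also conducted. Ordinal logistic regression, commonly referred to as ordered logistic regression, is a statistical method for modelling ordinal categorical outcomes: it relates one or more independent variables to the likelihood that the outcome falls in or below a given category (or, equivalently, above it). For an outcome $Y$ with $J$ ordered categories and predictors $x_1, \ldots, x_p$, it is defined as:

$$\log\frac{P(Y \le j)}{1 - P(Y \le j)} = \beta_{j0} - \left(\beta_{j1}x_1 + \beta_{j2}x_2 + \cdots + \beta_{jp}x_p\right), \quad j = 1, 2, \ldots, J-1$$

where $\beta_{j0}, \beta_{j1}, \ldots, \beta_{jp}$ are the model coefficient parameters (intercepts and slopes) for $j = 1, \ldots, J-1$. Under the parallel lines (proportional odds) assumption, the slopes are constant across categories whereas the intercepts differ. Hence the equation can be rewritten as:

$$\log\frac{P(Y \le j)}{1 - P(Y \le j)} = \theta_j - \left(\beta_1x_1 + \beta_2x_2 + \cdots + \beta_px_p\right), \quad j = 1, 2, \ldots, J-1$$

where $P(Y \le j)$ is the cumulative probability of the response variable falling in or below category $j$, $\theta_j$ is the threshold parameter for category $j$, and $\beta_1, \ldots, \beta_p$ are the coefficients associated with the predictor variables $x_1, \ldots, x_p$. With this sign convention, a positive $\beta_k$ shifts probability toward higher categories. This model was used to examine whether the sentiment score of a review and the number of helpfulness votes (thumbsUpCount) significantly predicted the star rating (score) given to the streaming service application on the Google Play Store. The dependent variable, score, was treated as an ordinal factor ranging from 1 to 5 (so $J = 5$).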
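To make the cumulative-logit formulation concrete, the sketch below converts thresholds θ_j and slopes β into the probability of each rating category using P(Y = j) = P(Y ≤ j) − P(Y ≤ j − 1). It assumes the sign convention logit P(Y ≤ j) = θ_j − βᵀx, under which a positive slope moves probability toward higher ratings. The threshold and slope values are hypothetical, not the study's estimates.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def category_probs(thresholds, betas, x):
    """P(Y = j), j = 1..J, under logit P(Y <= j) = theta_j - beta . x."""
    eta = sum(b * xi for b, xi in zip(betas, x))
    cumulative = [logistic(theta - eta) for theta in thresholds] + [1.0]
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = j) = P(Y <= j) - P(Y <= j - 1)
        previous = c
    return probs


def expected_rating(probs):
    return sum(j * p for j, p in enumerate(probs, start=1))


# Hypothetical parameters: 4 increasing thresholds for a 1-5 star rating,
# and slopes for (sentiment_score, thumbsUpCount).
THRESHOLDS = [-1.5, -0.8, -0.2, 0.6]
BETAS = [0.8, -0.001]

for sentiment in (-2.0, 0.0, 2.0):
    probs = category_probs(THRESHOLDS, BETAS, [sentiment, 10])
    print(sentiment, [round(p, 3) for p in probs], round(expected_rating(probs), 2))
```

The thresholds must be strictly increasing; otherwise some category probabilities would come out negative.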

Prior to analysis, multicollinearity was assessed using Variance Inflation Factors (VIFs). The VIF values for both predictors were approximately 1.00, with corresponding Tolerance values near 1.00, indicating no multicollinearity concerns (sentiment_score VIF = 1.001, Tolerance = 0.999; thumbsUpCount VIF = 1.001, Tolerance = 0.999). A separate multinomial model, reported below, was conducted to examine whether user rating (score) and the number of helpfulness votes (thumbsUpCount) predict the sentiment label of app reviews (sentiment_label: Negative, Neutral, Positive); the reference category for that outcome variable was set to Neutral.
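With only two predictors, each VIF reduces to 1/(1 − r²), where r is the correlation between the two predictors (regressing one on the other gives R² = r²), and Tolerance is 1 − r². Both predictors therefore share the same VIF, as reported. The sketch below assumes Pearson's r, as in an ordinary least squares auxiliary regression, and uses hypothetical data. Inverting the relation, a VIF of 1.001 corresponds to r² ≈ 0.001, i.e. |r| ≈ 0.03.

```python
import math


def pearson_r(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF and Tolerance shared by both predictors in a two-predictor model."""
    r = pearson_r(x1, x2)
    tolerance = 1.0 - r ** 2  # 1 - R^2 of the auxiliary regression
    return 1.0 / tolerance, tolerance


# Hypothetical predictor values with r = 0.8.
vif, tol = vif_two_predictors([1, 2, 3, 4], [1, 3, 2, 4])
print(round(vif, 3), round(tol, 3))
```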

Results in Table 3 revealed that sentiment score was a strong positive predictor of rating (β = 0.85, SE = 0.023, t = 39.04, p < .001), suggesting that more positive sentiment in review text is associated with higher user ratings. In contrast, thumbsUpCount was a small but statistically significant negative predictor (β = –0.0010, SE = 0.0003, t = –3.29, p = .001), indicating that reviews receiving more helpfulness votes were slightly more likely to be associated with lower ratings. This may reflect a tendency for critical reviews to be perceived as more informative or to be more visible. Sentiment analysis studies of Netflix reviews [7] support the reliability of sentiment classification in this setting, and broader investigations using LSTM and other deep-learning models [16], [1] indicate that sentiment is a strong predictor of rating outcomes. Additionally, research by [11] supports the observation that critical reviews garner more helpfulness votes, consistent with the modest negative predictive effect of thumbs-up count in the present study.
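Because the slopes are on the log-odds scale, exponentiating them gives odds ratios that are often easier to read. The sketch below applies this to the Table 3 estimates. It assumes the convention in which a positive coefficient raises the odds of a higher rating (matching the interpretation given above) and a Wald interval of β ± 1.96·SE; the function name is illustrative. Under these assumptions, a one-unit increase in sentiment_score multiplies the odds of a higher rating by about e^0.85 ≈ 2.34, while 100 additional thumbs-up votes multiply them by about e^−0.1 ≈ 0.90.

```python
import math


def odds_ratio(beta, se, units=1.0, z=1.96):
    """Odds ratio and Wald 95% CI for an increase of `units` in a predictor."""
    b, s = beta * units, se * units
    return math.exp(b), math.exp(b - z * s), math.exp(b + z * s)


# Coefficients and standard errors from Table 3.
print("sentiment_score, +1 unit:", [round(v, 3) for v in odds_ratio(0.85, 0.023)])
print("thumbsUpCount, +100 votes:", [round(v, 3) for v in odds_ratio(-0.0010, 0.0003, units=100)])
```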

In addition, Levene’s test for homogeneity of variance indicated that the variance of sentiment scores differed significantly across rating levels, F(4, 13,495) = 100.83, p < .001. This finding suggests that sentiment scores are more dispersed at some rating levels (e.g., 1-star) than others, possibly reflecting mixed emotional expressions within low ratings. The model demonstrates that review sentiment is a robust predictor of user ratings, while helpfulness votes may reflect underlying dissatisfaction that is not captured by rating alone.
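Levene's statistic is a one-way ANOVA F computed on the absolute deviations of each observation from its group center. The sketch below uses the group mean as the center (Levene's original form); the text does not state which variant was used, and some software, such as `scipy.stats.levene`, defaults to the median (the Brown-Forsythe variant). With five rating groups and N = 13,500, the degrees of freedom are (5 − 1, 13,500 − 5) = (4, 13,495), as reported. The example data are hypothetical.

```python
def levene_f(groups):
    """Mean-centred Levene's test: ANOVA F on |x - group mean|, with its df."""
    deviations = []
    for g in groups:
        center = sum(g) / len(g)
        deviations.append([abs(x - center) for x in g])
    k = len(deviations)
    n = sum(len(d) for d in deviations)
    grand_mean = sum(sum(d) for d in deviations) / n
    group_means = [sum(d) / len(d) for d in deviations]
    ss_between = sum(len(d) * (m - grand_mean) ** 2 for d, m in zip(deviations, group_means))
    ss_within = sum(sum((v - m) ** 2 for v in d) for d, m in zip(deviations, group_means))
    f = (ss_between / (k - 1)) / (ss_within / (n - k))
    return f, (k - 1, n - k)


# Hypothetical sentiment scores for two rating groups with different spreads.
f, df = levene_f([[1, 2, 3], [0, 2, 4]])
print(f"F{df} = {f:.3f}")
```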

Lastly, multinomial logistic regression was applied. This method is used when the dependent variable has more than two categories that are not ordered; it extends binary logistic regression to multiple classes by estimating the probability of each outcome category (class) relative to a baseline category. The probability of a specific class $k$ is calculated as:

$$P(y = k \mid \mathbf{x}) = \frac{e^{\mathbf{w}_k^{\top}\mathbf{x}}}{\sum_{j=1}^{K} e^{\mathbf{w}_j^{\top}\mathbf{x}}}$$

where $y$ is the target class, $\mathbf{x}$ is the vector of input features (including a constant term for the intercept), $\mathbf{w}_k$ are the weights (coefficients) for class $k$, $K$ is the number of classes, and $e$ is the mathematical constant (Euler's number). For each class, a score $z_k = \mathbf{w}_k^{\top}\mathbf{x}$ is calculated, which is simply a weighted sum of the input features. The final probability for each class is its exponentiated score divided by the sum of the exponentiated scores of all classes. Fixing the weights of the baseline category at zero makes the log-odds of class $k$ versus the baseline equal to $z_k$.
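A minimal sketch of this probability calculation follows, assuming a Neutral baseline whose weights are fixed at zero and hypothetical weights for the other two classes; each weight list starts with the intercept. The tests also check the baseline-category property that the log-odds of a class versus Neutral equals that class's score.

```python
import math


def class_probs(weights, x):
    """Softmax probabilities; weights maps class -> [intercept, w1, w2, ...]."""
    features = [1.0] + list(x)  # constant term for the intercept
    scores = {c: sum(w * f for w, f in zip(ws, features)) for c, ws in weights.items()}
    top = max(scores.values())  # subtract the max score for numerical stability
    exps = {c: math.exp(s - top) for c, s in scores.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


# Hypothetical weights for features (score, thumbsUpCount); Neutral is the baseline.
WEIGHTS = {
    "Neutral": [0.0, 0.0, 0.0],
    "Negative": [1.0, -0.5, 0.1],
    "Positive": [-0.5, 0.3, 0.1],
}

probs = class_probs(WEIGHTS, [5, 2])
print({c: round(p, 3) for c, p in probs.items()})
```

Subtracting the maximum score before exponentiating leaves the probabilities unchanged but avoids overflow for large scores.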

Prior to model estimation, multicollinearity was assessed using Variance Inflation Factor (VIF) and Tolerance statistics. Both predictors exhibited VIF values of 1.00 and Tolerance values of 1.00, indicating no violation of multicollinearity assumptions and confirming that the predictors were not meaningfully correlated. A multinomial logistic regression was performed to model the relationship between the predictor variables (score and thumbsUpCount) and sentiment classification (Negative, Neutral, and Positive). As shown in Table 4, the fit to the observed data improved significantly when the predictor variables were added to the intercept-only model, χ²(4, N = 13,500) = 4096.71, p < .001, where the 4 degrees of freedom correspond to two predictors in each of the two non-baseline equations. The final model achieved a Nagelkerke pseudo-R² of .38 and demonstrated an acceptable fit to the data.
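The likelihood-ratio χ² and the Nagelkerke pseudo-R² both follow from the log-likelihoods of the intercept-only and full models: LR = 2(LL_full − LL_null), Cox & Snell R² = 1 − exp(−LR/n), and Nagelkerke R² rescales Cox & Snell by its maximum, 1 − exp(2·LL_null/n). The sketch below assumes these standard definitions. Because the text does not report the log-likelihoods, the first example uses hypothetical values. For even degrees of freedom the χ² upper tail has a closed form, so no statistics library is needed. With the reported χ² = 4096.71 and n = 13,500, Cox & Snell R² is about 0.26, and the p-value underflows to zero in double precision.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form holds only for even df."""
    if df <= 0 or df % 2:
        raise ValueError("closed form requires a positive, even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def lr_test_and_pseudo_r2(ll_null, ll_full, n):
    """Likelihood-ratio statistic, Cox & Snell R^2 and Nagelkerke R^2."""
    lr = 2.0 * (ll_full - ll_null)
    cox_snell = 1.0 - math.exp(-lr / n)
    max_cox_snell = 1.0 - math.exp(2.0 * ll_null / n)
    return lr, cox_snell, cox_snell / max_cox_snell


# Hypothetical log-likelihoods for a small example (n = 100).
lr, cs, nagelkerke = lr_test_and_pseudo_r2(ll_null=-100.0, ll_full=-80.0, n=100)
print(round(lr, 2), round(cs, 3), round(nagelkerke, 3), chi2_sf_even_df(lr, df=4))

# Reported statistic: chi^2(4) = 4096.71 with N = 13,500.
print(chi2_sf_even_df(4096.71, df=4), round(1.0 - math.exp(-4096.71 / 13_500), 3))
```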

Results in Table 5 indicated that both score and thumbsUpCount were significant predictors of sentiment classification. Specifically, relative to Neutral, an increase in score was associated with a decreased likelihood of a review being classified as Negative (β = –0.49, SE = 0.02, z = –24.78, p < .001) and a greater likelihood of its being labeled Positive (β = 0.31, SE = 0.014, z = 22.65, p < .001). These findings are consistent with prior studies demonstrating that higher star ratings or satisfaction scores tend to predict more positive sentiment in textual reviews [3], [17].

Moreover, the thumbsUpCount variable contributed significantly to sentiment classification. For both the Positive and Negative labels (versus Neutral), an increase in thumbs-up count was associated with higher odds of classification, with coefficients of β ≈ 0.146 (SE = 0.021, z ≈ 6.94, p < .001) and β ≈ 0.313 (SE = 0.014, z ≈ 22.65, p < .001). This finding is supported by recent research demonstrating that emotionally intense reviews, whether highly positive or negative, are more likely to receive helpfulness votes (i.e., thumbs-up), suggesting that extreme sentiment expressions attract more user engagement [5].