Submitted: 21 July 2025

Posted: 22 July 2025


Abstract

Keywords:

1. Introduction

- The replacement of traditional MLP layers in ViTs with KANs to leverage their local plasticity and mitigate catastrophic forgetting.

- In-depth exploration and evaluation of different KAN configurations, shedding light on their impact on performance and scalability.

- Demonstration of superior knowledge retention and task adaptation capabilities of KAN-based ViTs in CL scenarios, validated through experiments on benchmark datasets like MNIST and CIFAR100.

- Development of a robust experimental framework simulating real-world sequential learning scenarios, enabling rigorous evaluation of catastrophic forgetting and knowledge retention in ViTs.

2. Background Knowledge and Motivation

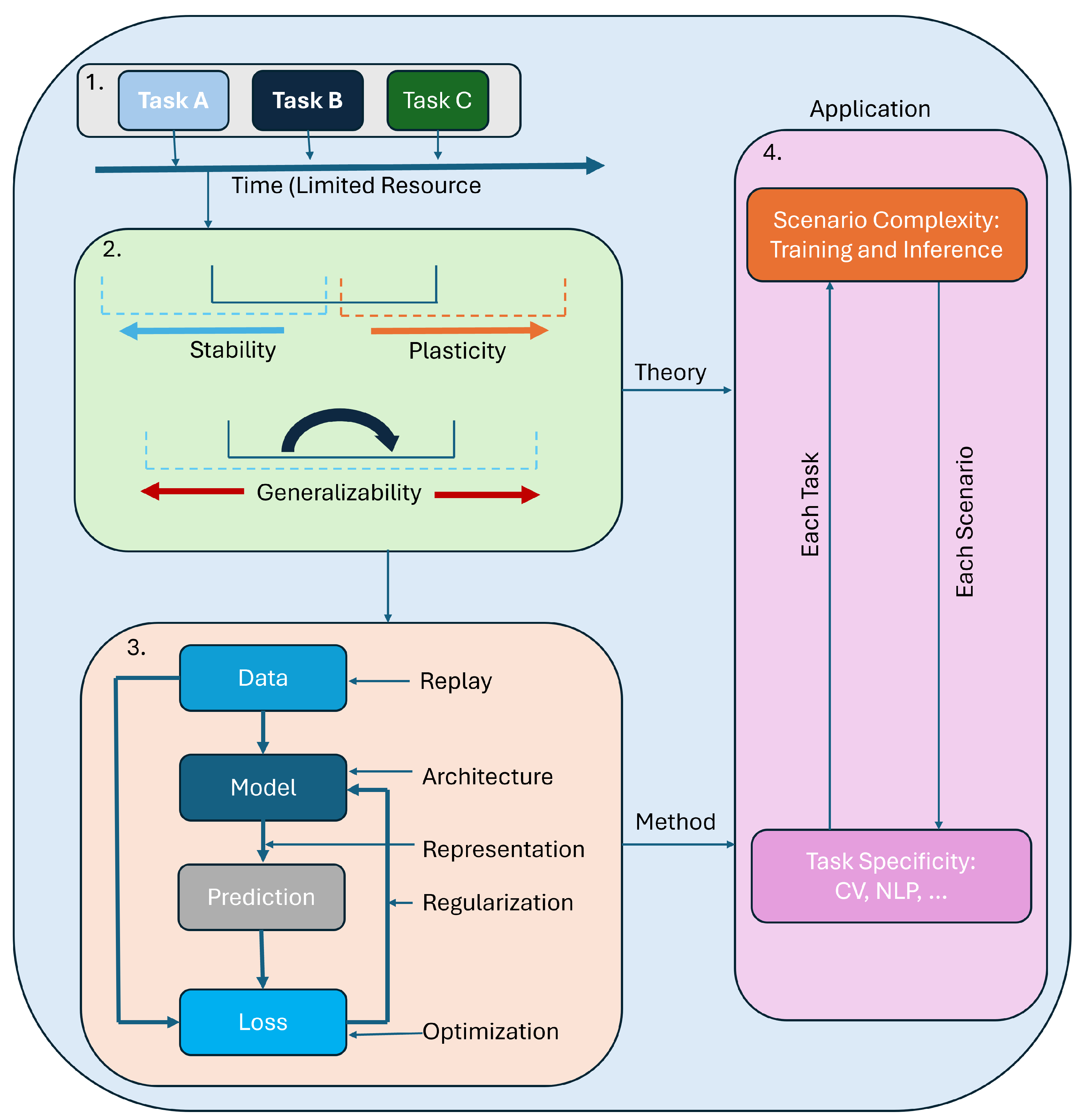

2.1. Continual Learning and Its Importance

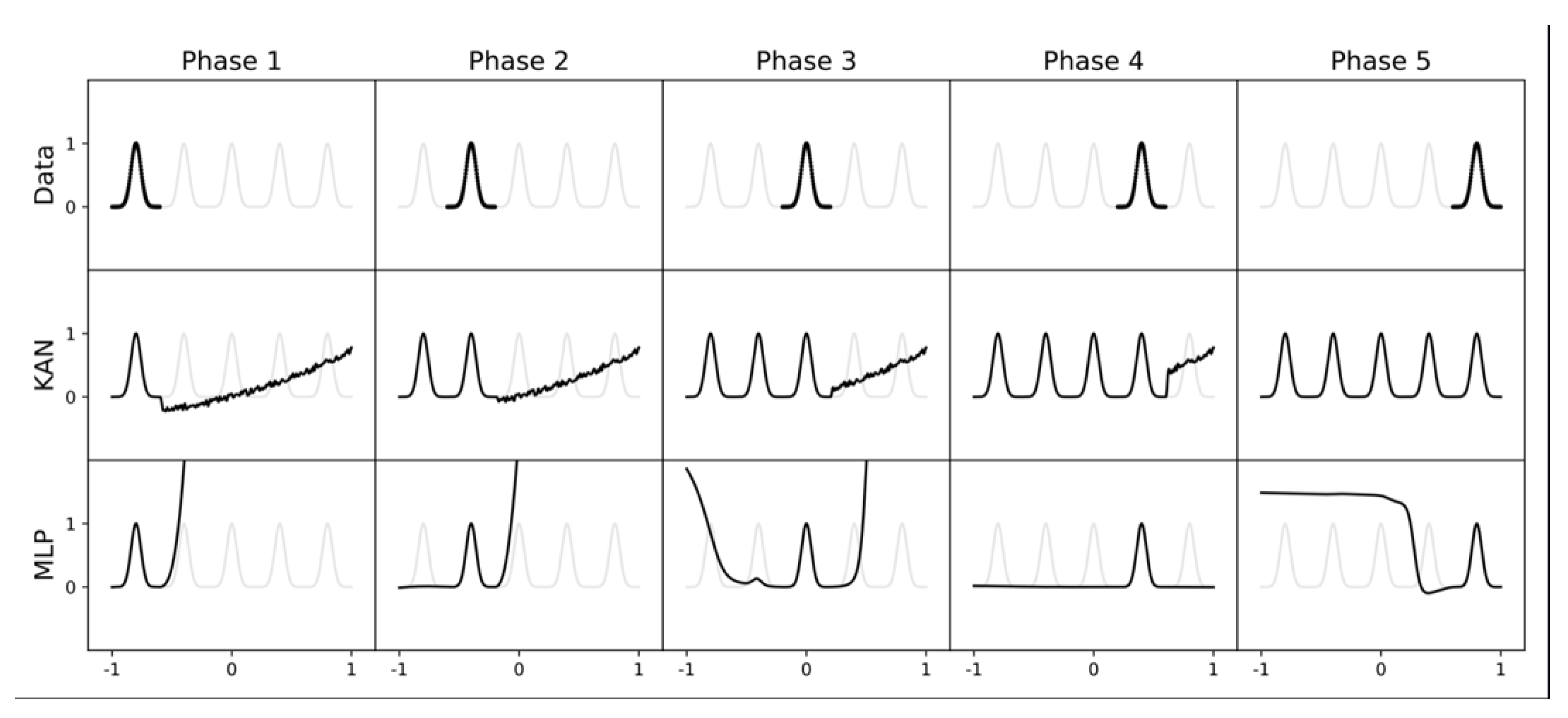

2.2. Catastrophic Forgetting: The Core Challenge

2.3. Vision Transformers

2.4. Kolmogorov–Arnold Networks

2.4.1. KANs in Neural Network Architectures

2.4.2. Kolmogorov-Arnold Network in Continual Learning

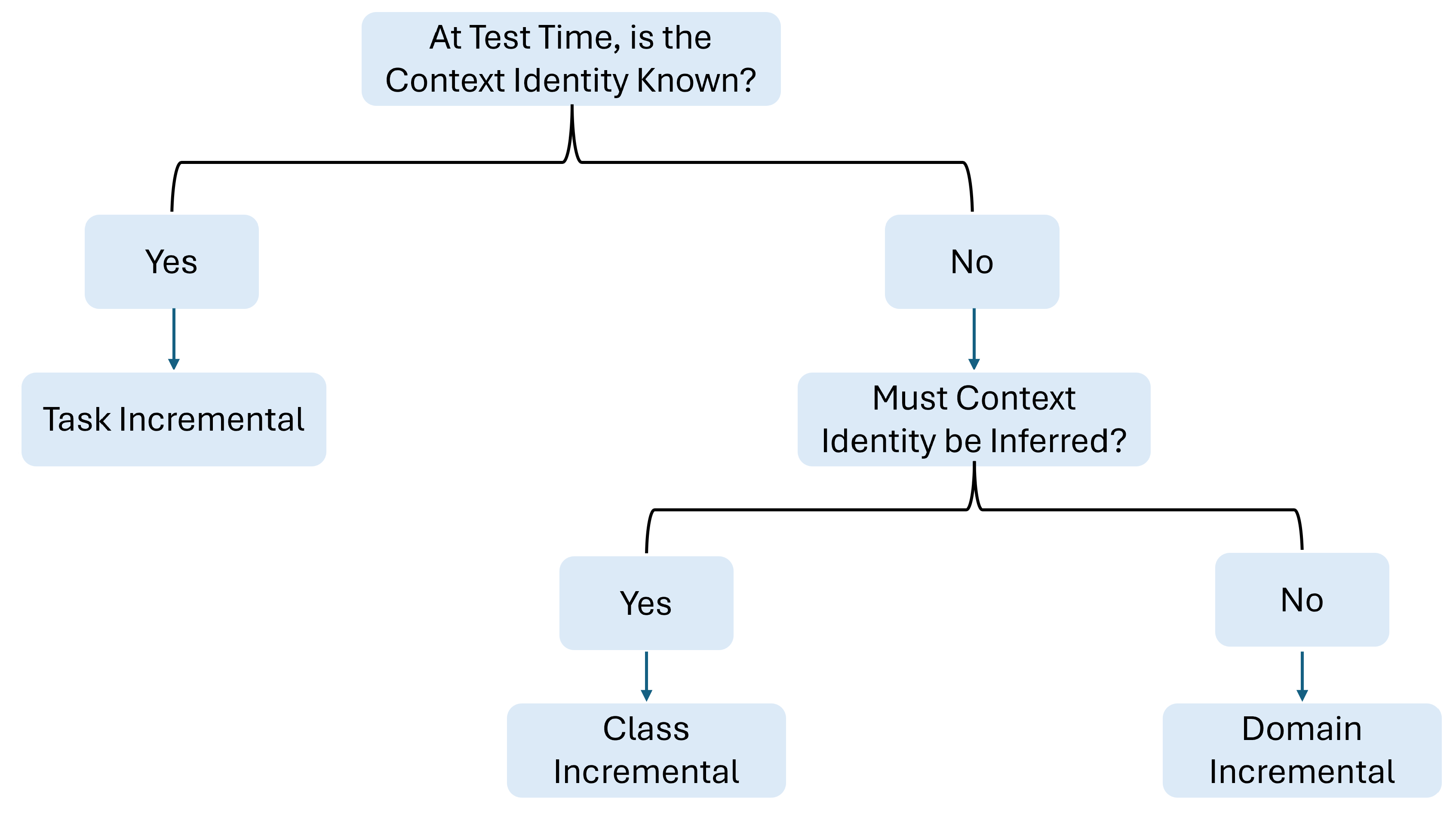

2.5. Continual Learning and Incremental Learning

2.6. Task-Based Incremental Learning to Mitigate Catastrophic Forgetting

2.7. Replay-Based Methods

2.7.1. Comparative Analysis

2.7.2. Existing Gaps and Motivation

- Can KAN enhance ViT’s capacity for CL by mitigating forgetting while maintaining learning efficiency?

- Does the combination provide a synergistic effect that improves overall task performance, especially in challenging scenarios with complex datasets like CIFAR100?

2.8. Summary

3. Materials and Methods

3.1. Models and Variants

3.1.1. Overview of MLP, KAN, and KAN-ViT Architectures

3.1.2. Multi-Layer Perceptrons

3.1.3. Kolmogorov-Arnold Network

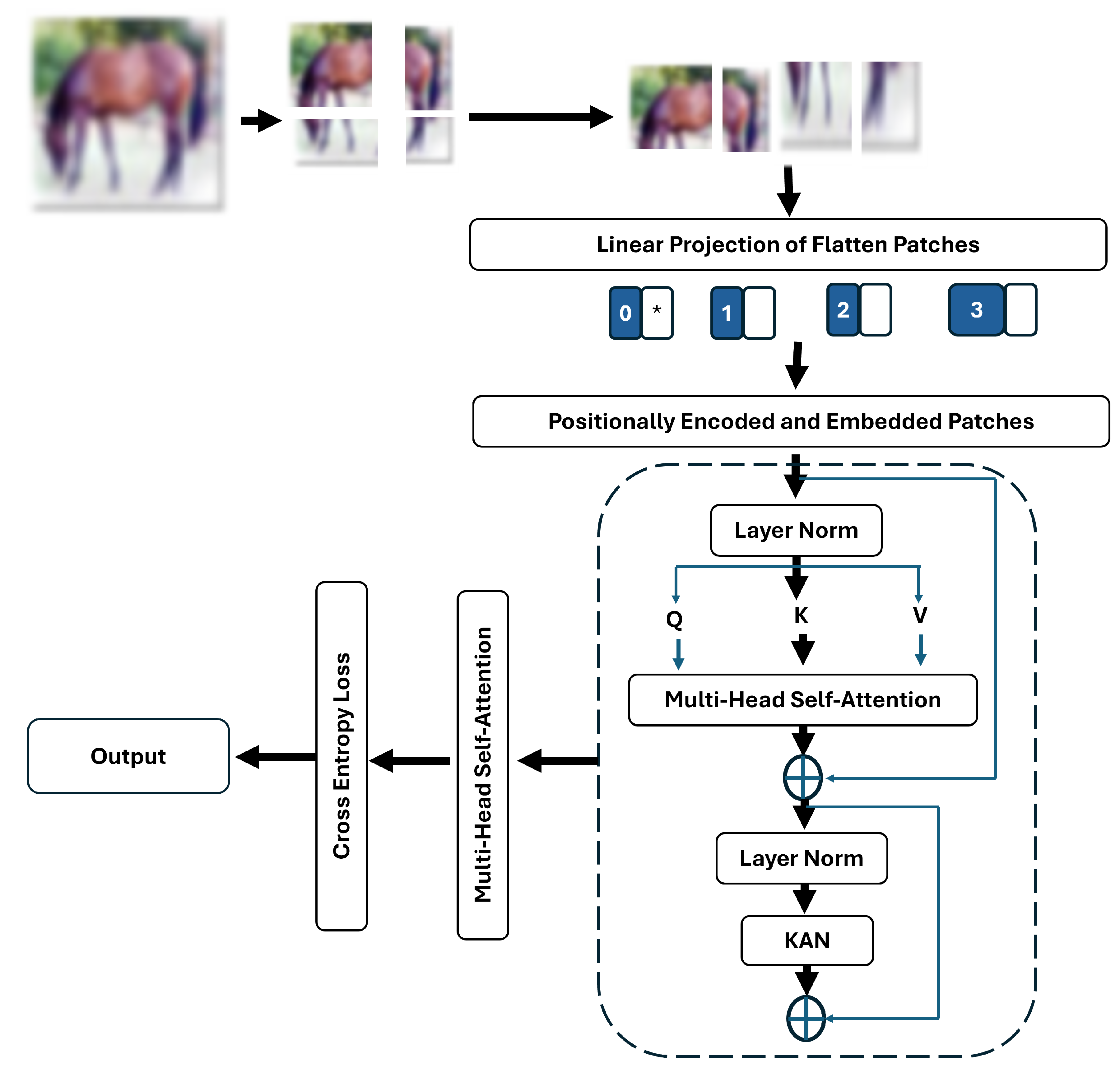

3.1.4. Kolmogorov-Arnold Network-ViT

3.1.5. Choosing a Standard Pre-Trained Vision Transformer Model

3.1.6. Replacement of MLP Layers with Kolmogorov-Arnold Network

3.1.7. Ensuring Seamless Integration of KAN Layers

3.1.8. Synergy Between Kolmogorov-Arnold Network and Vision Transformer

3.2. Datasets and Task Splits

3.3. Evaluation Metrics
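The three metrics named in the following subsections can all be derived from a matrix of per-task accuracies. The sketch below assumes the definitions commonly used in the continual learning literature, since the formulas are not spelled out here. The function names and the `acc[i][j]` layout (accuracy on task `j` after training on task `i`, with equally sized tasks) are illustrative assumptions, not the authors' code.

```python
from typing import List

# acc[i][j]: accuracy (%) on task j, measured after training on task i.
# Only entries with j <= i are used. Equal task sizes are assumed.


def average_incremental_accuracy(acc: List[List[float]]) -> float:
    """Mean, over training steps, of the accuracy on all tasks seen so far."""
    per_step = [sum(acc[i][: i + 1]) / (i + 1) for i in range(len(acc))]
    return sum(per_step) / len(per_step)


def last_task_accuracy(acc: List[List[float]]) -> float:
    """Accuracy over all tasks after training on the final task."""
    final_row = acc[-1][: len(acc)]
    return sum(final_row) / len(final_row)


def average_global_forgetting(acc: List[List[float]]) -> float:
    """Mean drop from the best earlier accuracy to the final accuracy,
    taken over every task except the last one (assumed definition)."""
    last = len(acc) - 1
    if last == 0:
        return 0.0  # a single task cannot be forgotten
    drops = []
    for j in range(last):
        best_before = max(acc[i][j] for i in range(j, last))
        drops.append(best_before - acc[last][j])
    return sum(drops) / len(drops)


# Hypothetical worst case: each task is learned perfectly, then fully forgotten.
worst_case = [
    [100.0, 0.0, 0.0],
    [0.0, 100.0, 0.0],
    [0.0, 0.0, 100.0],
]
```

On the hypothetical `worst_case` matrix, the incremental accuracy falls at every step (100, 50, 33.3) while the forgetting score reaches its maximum of 100, which is the pattern these metrics are meant to expose.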

3.3.1. Last Task Accuracy

3.3.2. Average Incremental Accuracy

3.3.3. Average Global Forgetting

3.3.4. Experimental Validation and Benefits

4. Experimental Setup

4.1. Training Procedure

4.1.1. Training Epochs for Task 1

- Epochs for Task 1 (MNIST): 7. The first task in the MNIST experiments is allocated 7 epochs, slightly more than the subsequent tasks. This additional training time allows the model to establish a solid foundation by thoroughly learning the features of the initial set of classes (2 classes in this case).

- Reasoning: Since the model starts with no prior knowledge, extra epochs are needed to ensure the weights are optimized effectively for the initial learning process.

- Outcome: This helps create robust feature representations that can serve as a basis for learning subsequent tasks.

- Epochs for Task 1 (CIFAR100): 25. In the CIFAR100 experiments, the first task receives 25 epochs. The larger number of epochs compared to MNIST reflects the increased complexity of the CIFAR100 dataset, which consists of higher-resolution images and more diverse classes.

- Reasoning: The model requires more training time to process and extract meaningful patterns from the more complex data of the first 10 classes.

- Outcome: This ensures that the model builds a robust understanding of the dataset, reducing the risk of misrepresentation as new tasks are introduced.
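The class counts mentioned above (2 classes in the first MNIST task, 10 classes in the first CIFAR100 task) suggest a class-incremental split. A minimal sketch follows, assuming that every later task has the same number of classes as the first and that classes are assigned in label order. Both are assumptions, and `split_classes` is a hypothetical helper.

```python
from typing import List


def split_classes(num_classes: int, classes_per_task: int) -> List[List[int]]:
    """Partition class labels 0..num_classes-1 into consecutive, equal-sized tasks."""
    if num_classes % classes_per_task != 0:
        raise ValueError("num_classes must be divisible by classes_per_task")
    return [
        list(range(start, start + classes_per_task))
        for start in range(0, num_classes, classes_per_task)
    ]


# Assumed splits: 2 classes per task for MNIST, 10 classes per task for CIFAR100.
mnist_tasks = split_classes(num_classes=10, classes_per_task=2)
cifar100_tasks = split_classes(num_classes=100, classes_per_task=10)
```

Under these assumptions, MNIST yields 5 tasks and CIFAR100 yields 10 tasks, and each task's label list can be used to filter the dataset before training on that task.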

4.1.2. Training Epochs for Remaining Tasks

- Epochs for Remaining Tasks (MNIST): 5. After the initial task, the model is trained for 5 epochs on each new task.

- Reasoning: By this stage, the model has already developed basic feature extraction capabilities. The focus shifts to integrating new class-specific knowledge while retaining previously learned information.

- Efficiency: Limiting the epochs ensures the process is computationally efficient, reducing training time without significantly compromising performance.

- Epochs for Remaining Tasks (CIFAR100): 10. Each subsequent task in the CIFAR100 experiments is trained for 10 epochs.

- Reasoning: The complexity of CIFAR100 images necessitates more epochs per task compared to MNIST to fine-tune the model for new classes. However, it is fewer than Task 1 epochs since the initial learning has established general features that the model can reuse.

- Outcome: This balanced approach prevents overfitting to new tasks while maintaining computational feasibility.

4.1.3. Balancing Training Objectives

- Thorough Initial Learning: Extra epochs for Task 1 ensure that the model builds a strong initial representation.

- Efficiency in Subsequent Tasks: Reduced epochs for remaining tasks help balance computational resources and prevent overfitting to newer tasks while preserving knowledge from previous tasks.
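The epoch allocation described in this section can be summarized as a small lookup. The sketch below uses the epoch counts stated above. The dictionary layout and the names `EPOCH_SCHEDULE`, `epochs_for_task`, and `total_epochs` are illustrative assumptions rather than part of the authors' implementation.

```python
# Epoch counts taken from the text; the structure itself is a hypothetical config.
EPOCH_SCHEDULE = {
    "MNIST": {"first_task": 7, "remaining_tasks": 5},
    "CIFAR100": {"first_task": 25, "remaining_tasks": 10},
}


def epochs_for_task(dataset: str, task_index: int) -> int:
    """Return the number of training epochs for a zero-based task index."""
    schedule = EPOCH_SCHEDULE[dataset]
    if task_index == 0:
        return schedule["first_task"]
    return schedule["remaining_tasks"]


def total_epochs(dataset: str, num_tasks: int) -> int:
    """Total epochs across a sequence of num_tasks tasks."""
    return sum(epochs_for_task(dataset, t) for t in range(num_tasks))
```

For example, a hypothetical 5-task MNIST sequence would train for 7 + 4 × 5 = 27 epochs in total, and a hypothetical 10-task CIFAR100 sequence for 25 + 9 × 10 = 115 epochs.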

4.2. Implementation Details

5. Results and Discussion

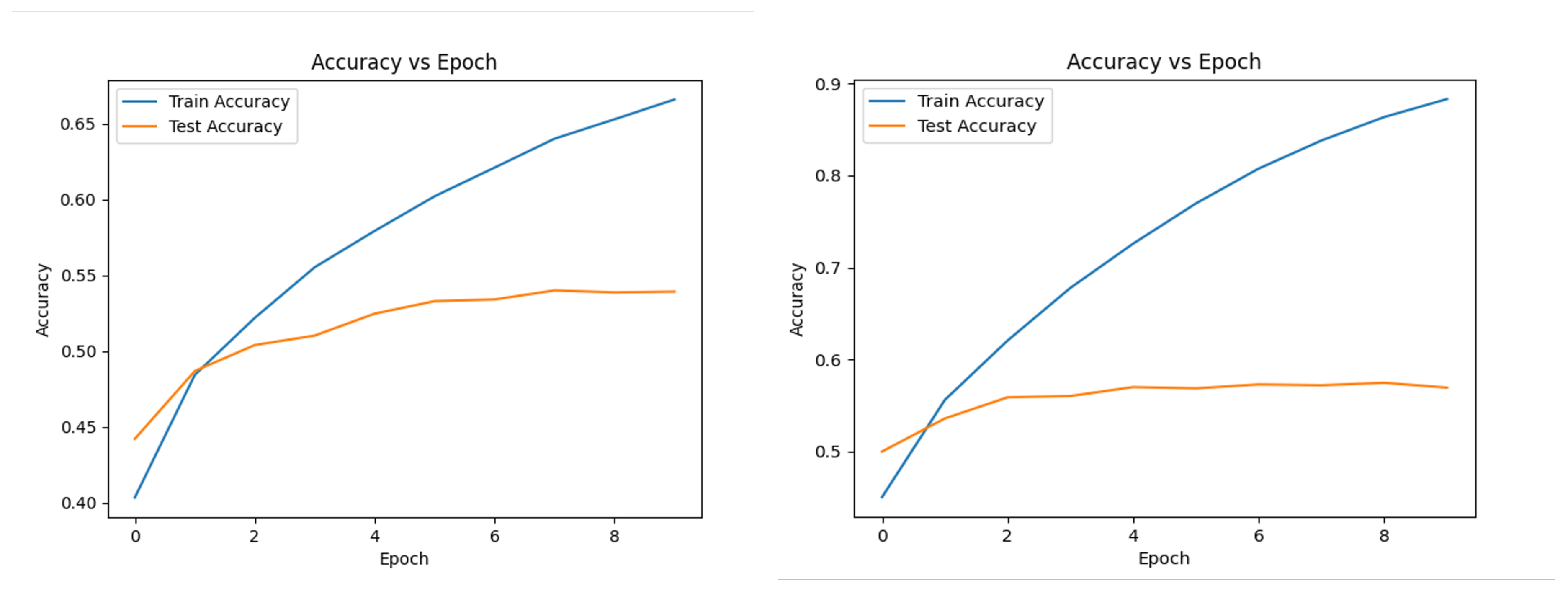

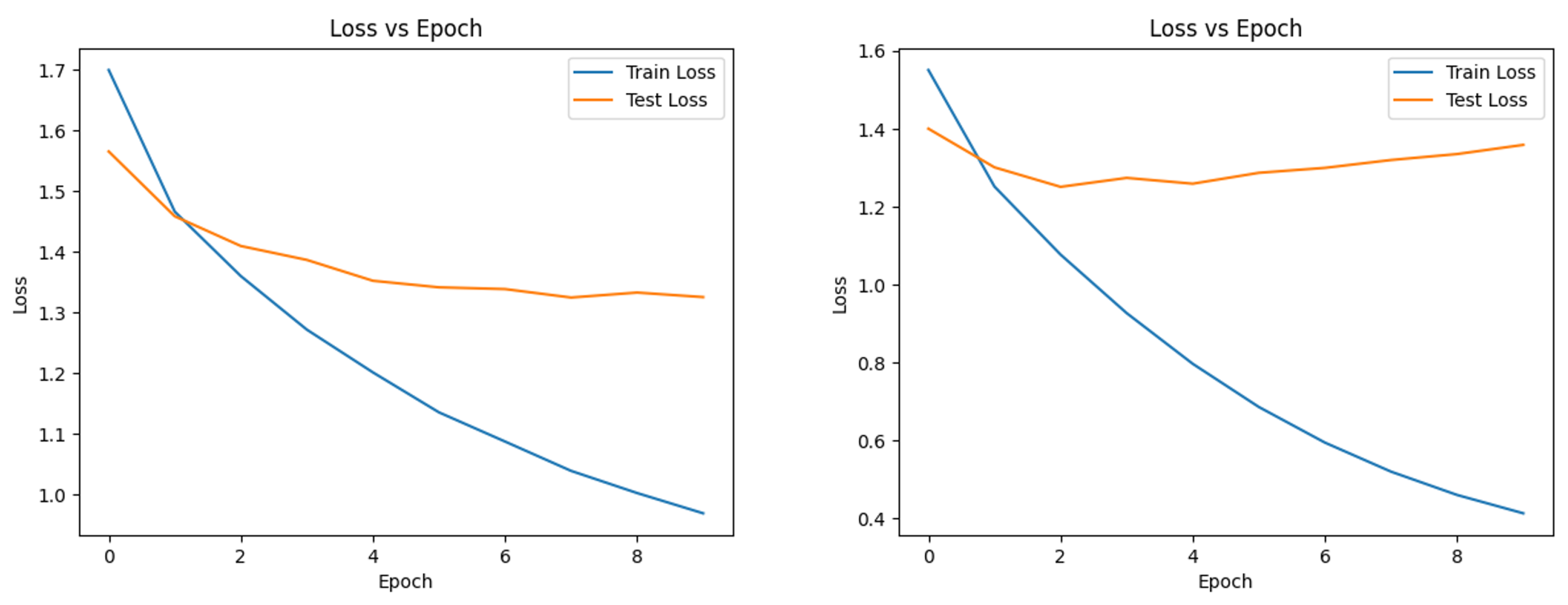

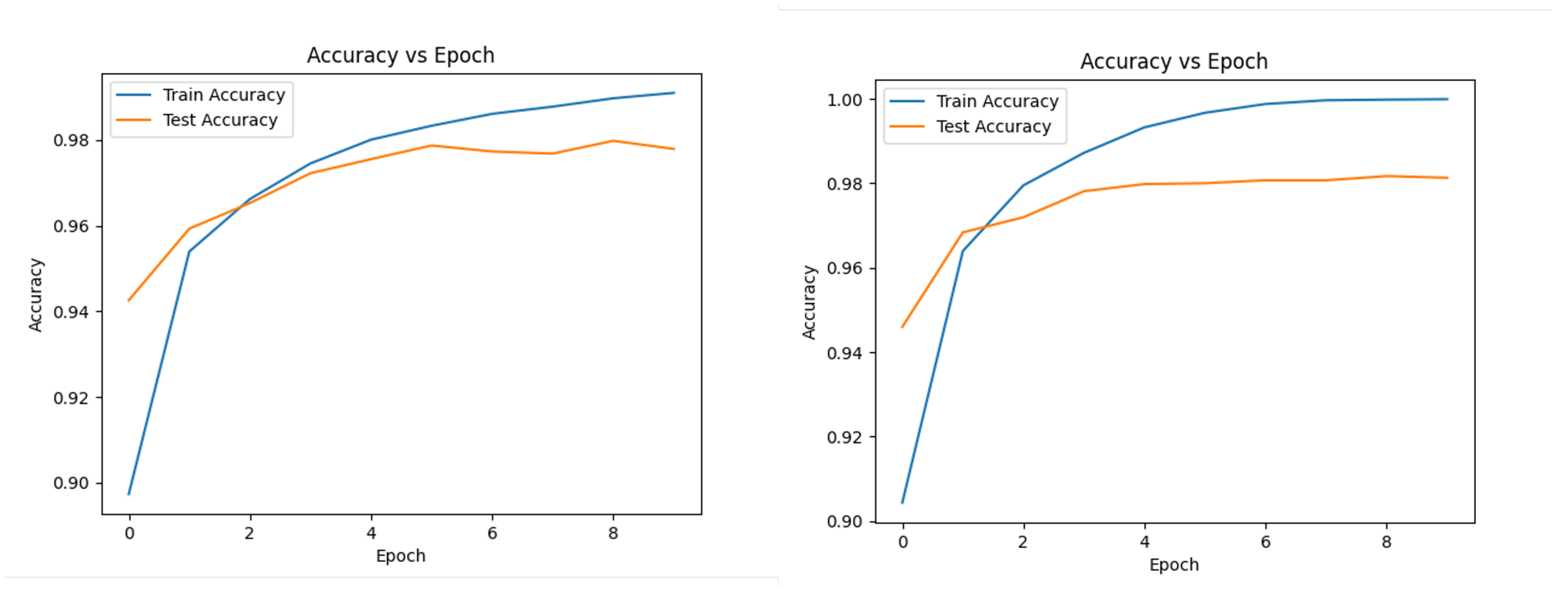

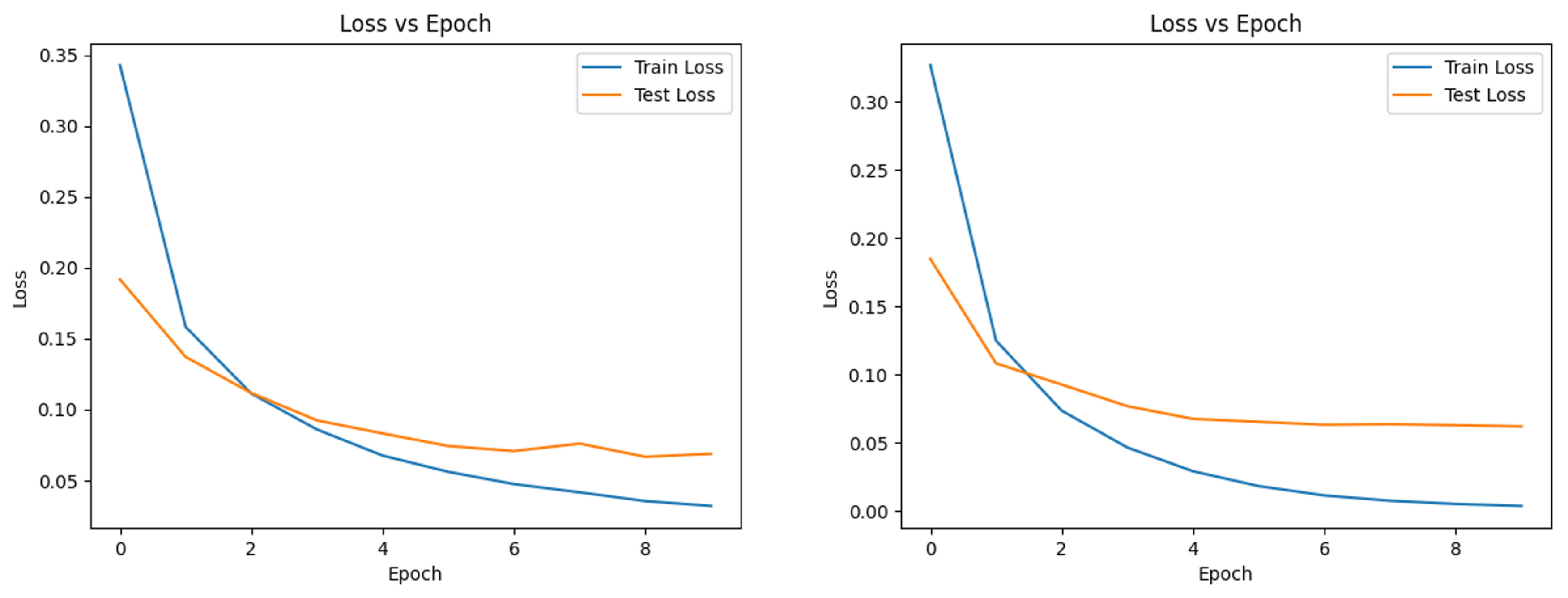

5.1. Overall Performance Analysis

6. Conclusion and Future Work

CRediT authorship contribution statement

Declaration of Competing Interests

Acknowledgments



| Dataset | Model | Epoch | Train Accuracy (%) | Test Accuracy (%) | Train Inference Time Per Epoch (s) | Test Inference Time Per Epoch (s) |
|---|---|---|---|---|---|---|
| CIFAR100 | FastKAN | 7 | 98.5 | 54.6 | 1.939 | 0.123 |
| CIFAR100 | MLP | 8 | 64.0 | 54.0 | 1.125 | 0.043 |
| CIFAR100 | EfficientKAN | 9 | 86.3 | 57.5 | 3.663 | 0.326 |
| MNIST | FastKAN | 10 | 99.9 | 98.3 | 2.331 | 0.117 |
| MNIST | MLP | 9 | 99.0 | 98.0 | 1.341 | 0.025 |
| MNIST | EfficientKAN | 7 | 99.9 | 98.1 | 4.389 | 0.405 |

| Model | Dataset | Average Incremental Accuracy (%) | Last Task Accuracy (%) | Average Global Forgetting |
|---|---|---|---|---|
| MLP | MNIST | 45.8 | 22.1 | 95.2 |
| EfficientKAN | MNIST | 52.2 | 50.9 | 71.0 |

| Dataset | Model | Average Incremental Accuracy | Last Task Accuracy | Average Global Forgetting |
|---|---|---|---|---|
| MNIST | ViT_MLP | 17.70 | 6.66 | 35.16 |
| MNIST | ViT_KAN | 18.44 | 4.67 | 33.47 |
| CIFAR100 | ViT_MLP | 13.63 | 4.75 | 44.67 |
| CIFAR100 | ViT_KAN | 15.49 | 4.77 | 49.85 |

| Model | Dataset | Average Incremental Accuracy | Last Task Accuracy | Average Global Forgetting Accuracy |
|---|---|---|---|---|
| ViT_MLP | CIFAR100 | 16.58 | 6.09 | 55.72 |
| ViT_KAN | CIFAR100 | 17.23 | 6.47 | 57.45 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).