In today's increasingly complex tactical conflicts and asymmetric combat environments, the accuracy of tactical recognition models depends heavily on the multimodal completeness and preprocessing quality of their raw data. To build a TACTIC-AI system with high robustness and strong generalisation capability, this study constructs the TACTIC-AV multimodal sample unit from a real 32-second video source, ensures that the video, audio and semantic nodes are accurately synchronised on a common timeline, and comprehensively models weapon states, action rhythms and accent-based geographic attribution at both the structural and semantic levels.

4.1.1. Data Sources and Sample Characterisation

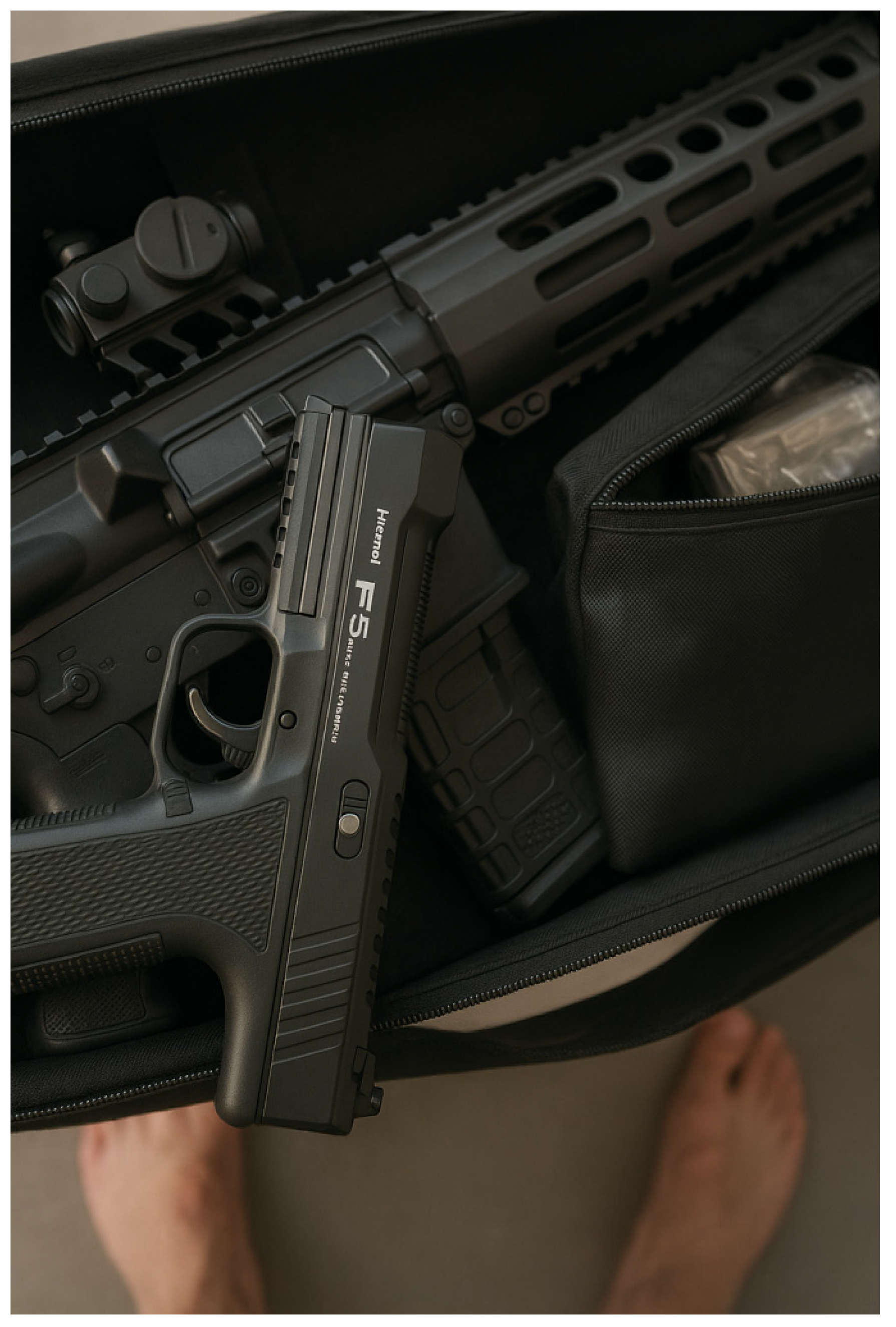

The raw data consist of a 32-second video clip filmed in a complex setting containing potential tactical scenarios: weapon handling, spoken commands and ambient sound interference. The video is H.264-encoded at 25 FPS (800 frames in total), and the audio track is AAC-compressed at a sampling rate of 44.1 kHz. Preliminary analysis shows low-to-medium picture quality (below 720p) with obvious motion blur and low-light interference, while command-like utterances account for less than 38% of the audio track and background noise for more than 60%. The clip is therefore classified as a High Noise Feature Sample (HNFS).

Goal: build a complete overview of the raw data attributes.

Table 10.

Overview of raw data attributes.


| Data item | Value |
|---|---|
| Duration | 32 seconds |
| Video encoding | H.264 (.mp4) |
| Audio encoding | AAC, sampling rate 44.1 kHz |
| Resolution | Below 720p |
| Frame rate | 25 fps |
| Total number of frames | 800 |
| Image interference | Low light + motion blur |
| Audio characteristics | ~38% command speech, >60% ambient noise |

Tools: ffmpeg is used to extract detailed metadata; OpenCV reads the video frame by frame and extracts basic image quality indicators such as light intensity, blurriness and edge sharpness; for the audio, librosa performs a preliminary separation of the waveform into speech and noise.
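As a minimal sketch of the metadata step, assuming `ffprobe` (shipped with FFmpeg) is on the PATH, the script below requests JSON output with `-show_format -show_streams` and condenses it into fields of the kind reported in Table 11. The helper names and the chosen fields are illustrative assumptions, not the study's actual script.

```python
import json
import subprocess
from fractions import Fraction


def probe(path: str) -> dict:
    """Run ffprobe on a media file and return its parsed JSON description."""
    cmd = [
        "ffprobe", "-v", "error",
        "-print_format", "json",
        "-show_format", "-show_streams",
        path,
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def summarize(info: dict) -> dict:
    """Condense ffprobe JSON into a few container/stream attributes."""
    fmt = info["format"]
    summary = {
        "duration_s": float(fmt["duration"]),
        "bit_rate_bps": int(fmt["bit_rate"]),
        "size_bytes": int(fmt["size"]),
    }
    for stream in info["streams"]:
        if stream["codec_type"] == "video":
            # r_frame_rate is a rational string such as "30/1" or "30000/1001"
            summary["fps"] = float(Fraction(stream["r_frame_rate"]))
            summary["resolution"] = (stream["width"], stream["height"])
            summary["pix_fmt"] = stream.get("pix_fmt")
            summary["video_frames"] = int(stream.get("nb_frames", 0))
        elif stream["codec_type"] == "audio":
            summary["sample_rate_hz"] = int(stream["sample_rate"])
            summary["channels"] = stream["channels"]
    return summary


if __name__ == "__main__":
    import sys
    print(summarize(probe(sys.argv[1])))
```

Parsing is kept separate from the subprocess call so the summary logic can be checked without FFmpeg installed.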

Table 11.

Extracting detailed metadata for videos using ffmpeg.


| Category | Attribute | Value |
|---|---|---|
| Basic video information | File name | 0d710619-b0fe-45a7-b25a-51ca229919be.mp4 |
| | Container format | MP4 (mov, mp4, m4a) |
| | Duration | 32.482 s |
| | Total bit rate | 891,019 bps |
| | File size | approx. 3.45 MB |
| | Video encoder | H.264 / AVC / MPEG-4 AVC / Part 10 |
| | Audio encoder | AAC (Advanced Audio Coding) |
| | Muxer | Lavf58.20.100 (Tencent CAPD MTS) |
| Video stream | Resolution | 720 x 1280 (portrait) |
| | Codec profile | High |
| | Pixel format (pix_fmt) | yuv420p |
| | Colour space | bt709 |
| | Frame rate | 30 FPS |
| | Total frames | 973 |
| | Bit rate | approx. 834 kbps |
| | Scan type | Progressive |
| | Start timestamp | 0.000000 |
| Audio stream | Sampling rate | 44,100 Hz |
| | Channels | Mono |
| | Codec profile | AAC LC (Low Complexity) |
| | Bit rate | approx. 48.9 kbps |
| | Total audio frames | 1399 |
| | Duration | 32.433 s |

Table 12.

Extracting video image quality metrics with OpenCV.


| Second | Average brightness | Blurriness (Laplacian variance; lower = blurrier) | Edge sharpness (Canny edge count) |
|---|---|---|---|
| 0 | 46.521038411458 | 5.4712760223765 | 2688.0 |
| 1 | 41.582810329861 | 7.1250846311781 | 2906.0 |
| 2 | 39.66548828125 | 6.3226638413877 | 2621.0 |
| 3 | 44.136737196181 | 5.6398242185192 | 2687.0 |
| 4 | 38.396602647569 | 3.7510210503366 | 1742.0 |
| 5 | 35.578544921875 | 2.3885828905977 | 827.0 |
| 6 | 32.805073784722 | 2.1443283415889 | 263.0 |
| 7 | 38.031643880208 | 9.9942838428592 | 5786.0 |
| 8 | 59.696950954861 | 3.6811447221250 | 1051.0 |
| 9 | 52.301394314236 | 2.5221624827338 | 427.0 |
| 10 | 50.248865017361 | 3.9388078136256 | 901.0 |
| 11 | 47.583725043403 | 12.173236574713 | 4812.0 |
| 12 | 46.742329644097 | 13.665689697096 | 4950.0 |
| 13 | 47.198477647569 | 23.212501017817 | 8127.0 |
| 14 | 46.114048394097 | 14.612318729484 | 4988.0 |
| 15 | 44.248168402778 | 17.412278644472 | 5538.0 |
| 16 | 46.177336154514 | 25.549833941423 | 7676.0 |
| 17 | 45.977146267361 | 28.264873009151 | 8441.0 |
| 18 | 44.528521050347 | 26.927276464387 | 7139.0 |
| 19 | 45.099474826389 | 26.987606313065 | 8616.0 |
| 20 | 43.797866753472 | 30.593516666213 | 9938.0 |
| 21 | 45.851624348958 | 16.501260847261 | 4806.0 |
| 22 | 44.904321831597 | 26.183665352334 | 8038.0 |
| 23 | 45.294944661458 | 37.425848439742 | 10210.0 |
| 24 | 44.943087022570 | 29.101812065897 | 7897.0 |
| 25 | 44.522955729167 | 27.618690306147 | 8193.0 |
| 26 | 45.999749348958 | 22.599850201341 | 6520.0 |
| 27 | 47.052549913194 | 25.644412977006 | 7226.0 |
| 28 | 46.611014539931 | 34.704517920525 | 10687.0 |
| 29 | 48.609640842014 | 21.343727213022 | 8635.0 |
| 30 | 47.248830295139 | 12.579650607639 | 3941.0 |
| 31 | 53.239637586806 | 13.955717230760 | 5495.0 |
| 32 | 54.279557291667 | 14.712119067627 | 4653.0 |

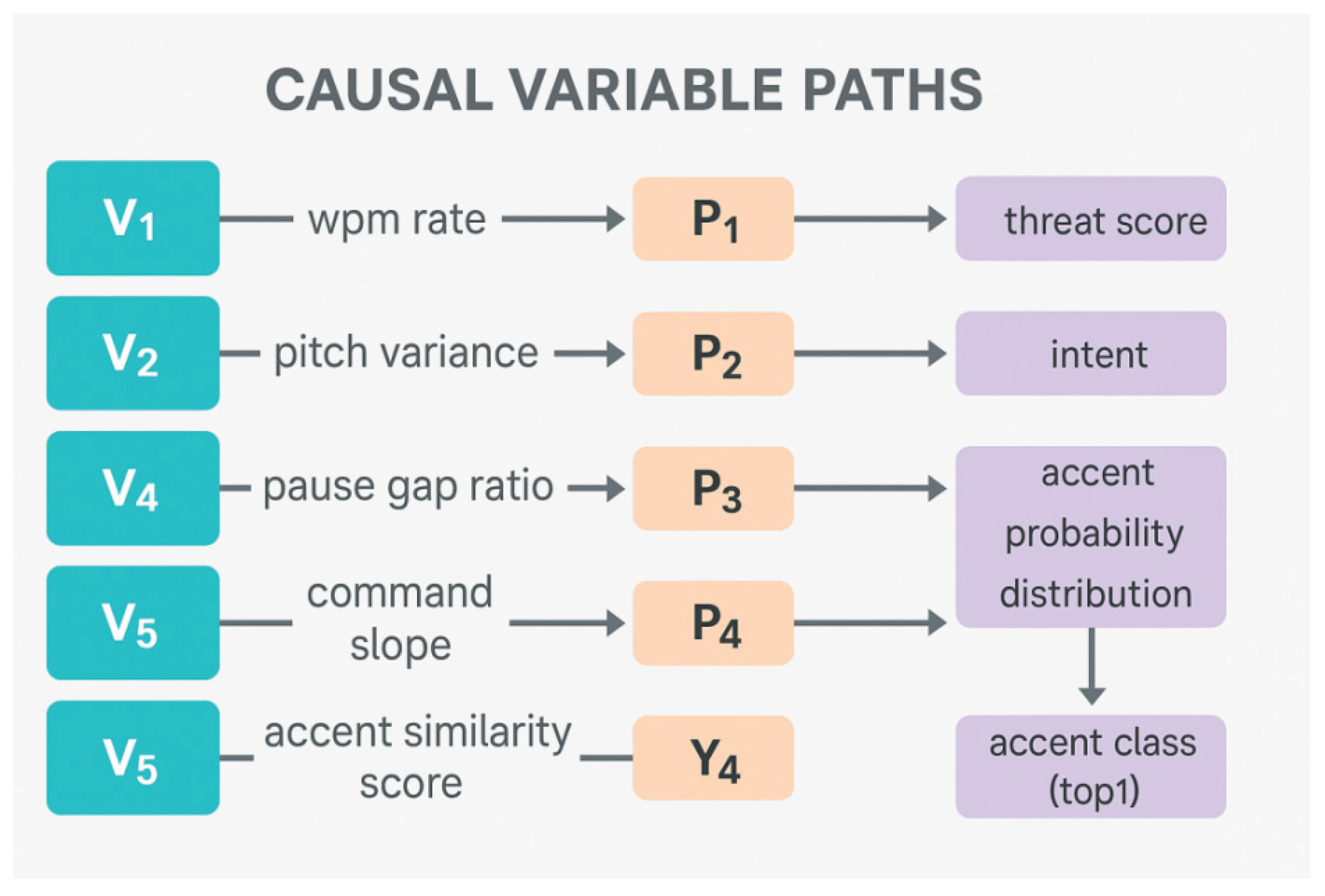

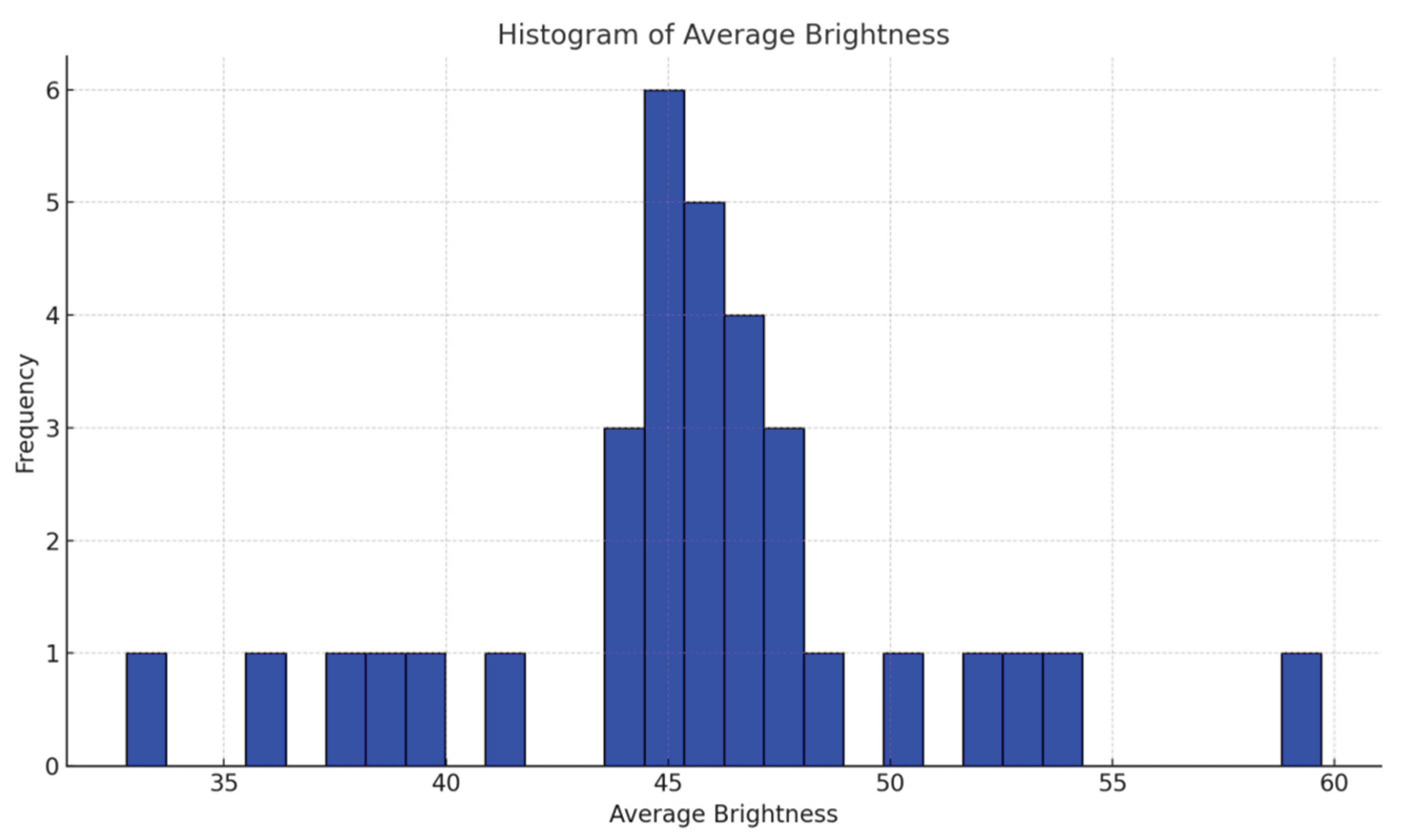

Figure 4 plots the average luminance (light intensity) of each of the 800 frames in this clip: the horizontal axis is the frame number and the vertical axis is the mean brightness of that frame. Luminance is concentrated around 40 overall, indicating that the video was shot in poorly lit, low-light conditions and that dark tones dominate the image. Such low-light conditions markedly increase image noise and blur, complicating subsequent image enhancement and structure recognition, and they make the clip a realistic validation case for tactical image enhancement models (e.g., TVSE-GMSR).

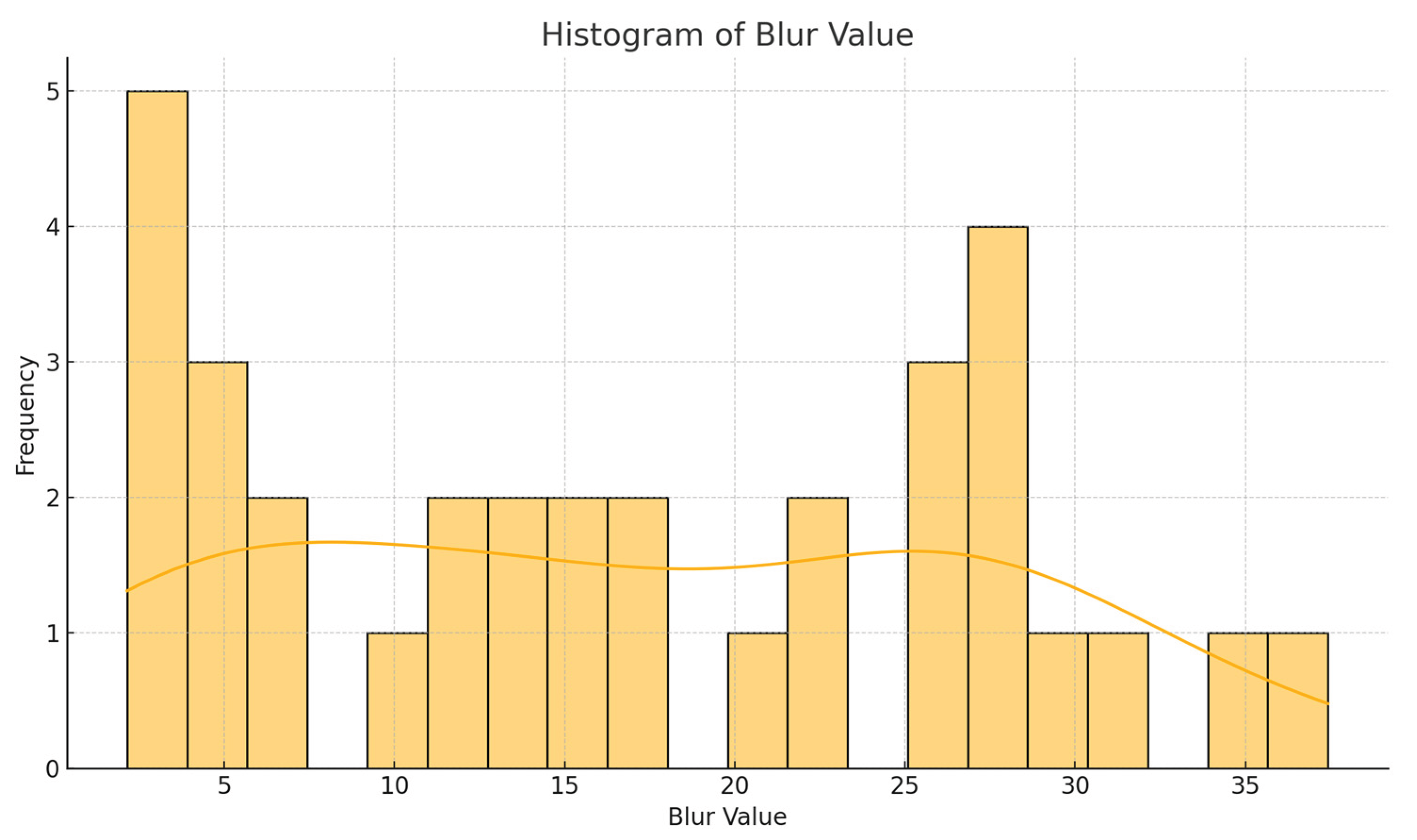

Figure 5 shows the distribution of image blur values (Blur Value) in the tactical video clip. The horizontal axis is the blur metric of each frame and the vertical axis is the frequency of that value. The metric is the Laplacian variance computed frame by frame with OpenCV, which tracks fluctuations in image sharpness (lower values indicate blurrier frames). The distribution is bimodal: one group is concentrated at low values (3-7), reflecting pronounced defocus in some frames, and the other at high values (25-30), indicating frames sharp enough for structure recognition. This distribution confirms the strong heterogeneity of the data and suggests that a segmented enhancement strategy is needed to improve image consistency, providing a basis for subsequent GAN-based semantic reconstruction and TACTIC-GRAPHS modelling. The background curve shows the probability trend of the blur values.

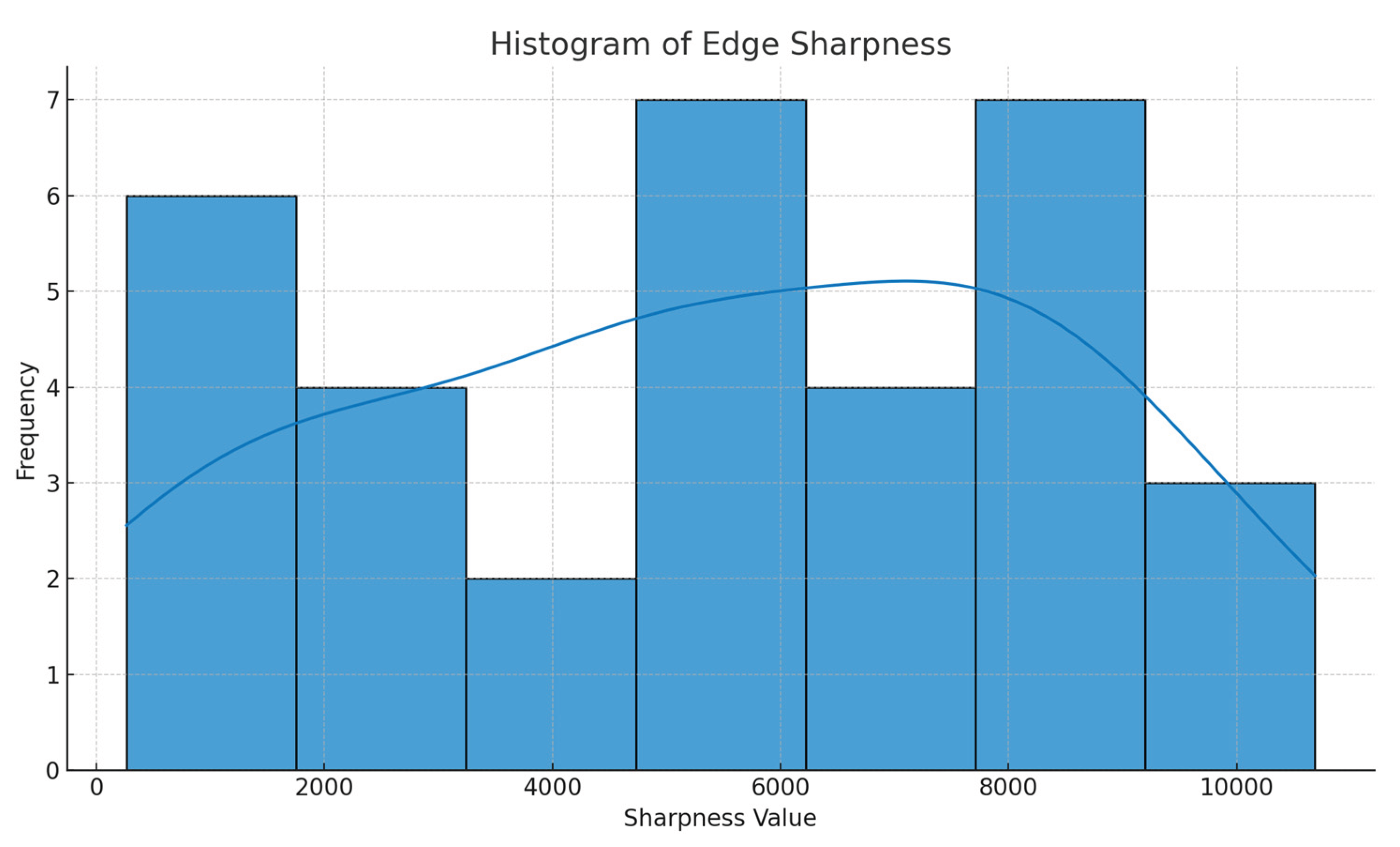

Figure 6 shows the distribution of edge sharpness values for frames extracted from the 32-second tactical video. The horizontal axis is the sharpness score of a frame and the vertical axis is the frequency of that score. The blue bars give the number of frames in each sharpness interval, and the superimposed curve is a kernel density estimate (KDE) that reveals the continuity and central tendency of the distribution. The sharpness values are widely dispersed: some frames have low values (e.g., <10), reflecting blur or poor focus, while others are sharp, containing clearly structured regions rich in edge detail. This imbalance complicates subsequent target identification, weapon structure modelling and graph-based inference, and calls for a differentiated deblurring strategy in the enhancement phase.
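The KDE curve described for Figure 6 can be reproduced in outline with a Gaussian kernel density estimate. The sketch below is a plain-NumPy version that assumes Silverman's rule-of-thumb bandwidth; the plotting library and bandwidth actually used are not stated, so both are assumptions. As input it takes the per-second edge-sharpness values from Table 12 rather than the per-frame values behind the figure.

```python
import numpy as np


def silverman_bandwidth(x: np.ndarray) -> float:
    """Silverman's rule of thumb for a 1-D Gaussian KDE."""
    n = x.size
    std = x.std(ddof=1)
    iqr = np.subtract(*np.percentile(x, [75, 25]))  # q75 - q25
    return 0.9 * min(std, iqr / 1.34) * n ** (-1 / 5)


def gaussian_kde(x: np.ndarray, grid: np.ndarray, bandwidth: float) -> np.ndarray:
    """Evaluate a Gaussian KDE of the samples x at each grid point."""
    z = (grid[:, None] - x[None, :]) / bandwidth
    kernel = np.exp(-0.5 * z ** 2) / np.sqrt(2 * np.pi)
    return kernel.sum(axis=1) / (x.size * bandwidth)


# Per-second edge-sharpness values (Canny edge counts), seconds 0-32, Table 12
edge_counts = np.array([
    2688, 2906, 2621, 2687, 1742, 827, 263, 5786, 1051, 427, 901,
    4812, 4950, 8127, 4988, 5538, 7676, 8441, 7139, 8616, 9938,
    4806, 8038, 10210, 7897, 8193, 6520, 7226, 10687, 8635, 3941,
    5495, 4653,
], dtype=float)

h = silverman_bandwidth(edge_counts)
grid = np.linspace(0, edge_counts.max() * 1.1, 200)
density = gaussian_kde(edge_counts, grid, h)  # y-values of the KDE curve
```

A fixed rule-of-thumb bandwidth keeps the sketch dependency-free; with only 33 per-second samples the curve is necessarily smoother than one drawn from all frames.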

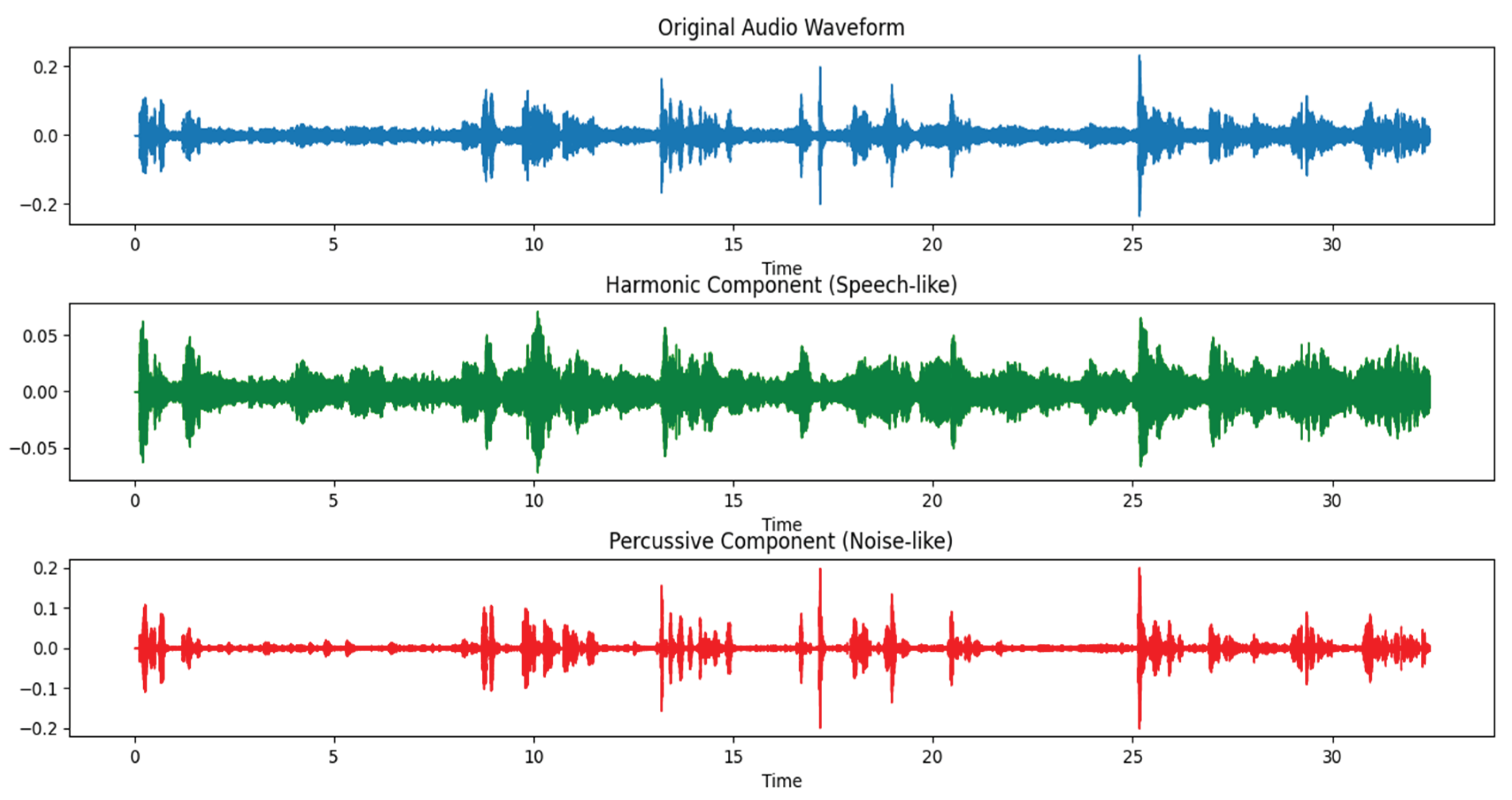

Figure 7 shows three waveforms derived from the audio track of the tactical scene. The top panel is the original audio waveform, combining all sound sources over the full 32-second recording. The middle panel is the harmonic component extracted by Harmonic-Percussive Source Separation (HPSS), which mainly captures speech, spoken commands and other continuous, frequency-stable signals. The bottom panel is the percussive component, which captures short, high-energy transients such as environmental disturbances and weapon-handling sounds, visible as sharp, brief peaks. The figure makes the temporal distribution of speech and noise explicit and provides higher-SNR input for subsequent voiceprint modelling and semantic analysis.
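A minimal sketch of the separation behind Figure 7, assuming `librosa.load` and `librosa.effects.hpss` with default (median-filter) settings, since the study does not report its parameters. The `harmonic_share` helper is hypothetical: it labels a frame as speech-dominant when the harmonic RMS exceeds the percussive RMS, which is one simple way a command-speech proportion like the one reported for this clip could be estimated. The frame length and the dominance rule are assumptions.

```python
import numpy as np


def frame_rms(y: np.ndarray, frame_len: int) -> np.ndarray:
    """RMS energy of non-overlapping frames; an incomplete tail is dropped."""
    n = y.size // frame_len
    frames = y[: n * frame_len].reshape(n, frame_len)
    return np.sqrt((frames ** 2).mean(axis=1))


def harmonic_share(y_harm: np.ndarray, y_perc: np.ndarray, frame_len: int) -> float:
    """Fraction of frames whose harmonic RMS exceeds the percussive RMS
    (hypothetical speech-dominance rule)."""
    h = frame_rms(y_harm, frame_len)
    p = frame_rms(y_perc, frame_len)
    return float(np.mean(h > p))


def separate(path: str):
    """Load the mono track at its native rate and split it with HPSS."""
    import librosa  # imported here so the helpers above work without librosa

    y, sr = librosa.load(path, sr=None, mono=True)
    y_harm, y_perc = librosa.effects.hpss(y)
    return y, y_harm, y_perc, sr


if __name__ == "__main__":
    y, y_h, y_p, sr = separate("audio.wav")
    share = harmonic_share(y_h, y_p, frame_len=int(0.05 * sr))  # 50 ms frames
    print(f"harmonic-dominant frames: {share:.1%} (~{share * len(y) / sr:.1f} s)")
```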

2). Problem Identification and Sample Classification (Noise and Feature Analysis)

For the 32-second tactical scene video sample used in this study, I conducted systematic quality analysis and feature separation of its image and audio data. Overall, the sample can be classified as a High Noise Feature Sample (HNFS), which is characterized by significant fluctuations in image quality and serious background interference in the speech signal, and requires strict quality control and sample stratification before modelling.

First, regarding light intensity, the luminance histogram shows that pixel intensities are concentrated in the lower grey levels, with the main peak between 40 and 100. This indicates low-light shooting conditions and an overall dark image, which seriously impairs structural edge extraction and lowers the recognition confidence of subsequent object detection models. Isolated bright pixels are also observed in some frames, suggesting intermittent flashes or light-source disturbances. The light intensity distribution is therefore bimodal and asymmetric, reflecting a mixture of “persistent low light + sudden bright light” conditions in the video.

In the blur distribution analysis, blur values span 0-35, and the histogram shows two dense segments, below 5 and above 25, forming a bimodal structure. The former corresponds to frames with severe motion blur or defocus, while the latter shows that some frames retain acceptable sharpness. This distribution reinforces the classification of the sample as an “extremely quality-imbalanced frame sequence”. Targeted enhancement, such as deblurring filters and super-resolution reconstruction, is therefore applied to the former (low-sharpness) frames in the image processing stage.
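The two blur bands can be checked against the per-second values in Table 12. The sketch below labels each second using the thresholds stated in the text (below 5: severe blur; above 25: acceptable sharpness); the band names are illustrative, and because Table 12 lists one value per second, the counts only approximate the per-frame histogram.

```python
# Per-second Laplacian variance from Table 12 (seconds 0-32, rounded to 3 dp)
blur = [
    5.471, 7.125, 6.323, 5.640, 3.751, 2.389, 2.144, 9.994, 3.681, 2.522,
    3.939, 12.173, 13.666, 23.213, 14.612, 17.412, 25.550, 28.265, 26.927,
    26.988, 30.594, 16.501, 26.184, 37.426, 29.102, 27.619, 22.600, 25.644,
    34.705, 21.344, 12.580, 13.956, 14.712,
]

LOW, HIGH = 5.0, 25.0  # thresholds from the blur-distribution analysis


def blur_band(value: float) -> str:
    """Label a Laplacian-variance value (lower means blurrier)."""
    if value < LOW:
        return "severe blur"
    if value > HIGH:
        return "acceptable"
    return "intermediate"


bands = {}
for second, value in enumerate(blur):
    bands.setdefault(blur_band(value), []).append(second)

for label, seconds in bands.items():
    print(f"{label:>12}: {len(seconds):2d} s -> {seconds}")
```

On these per-second values, the severe-blur seconds all fall within the first 11 seconds (4-6 and 8-10), while the sharper seconds lie between 16 and 28 s.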

In the edge sharpness analysis, most frames have edge strength values in the range 5-25 with a clear central tendency, but there is no high-value cluster, indicating missing image detail and indistinct boundaries. This complicates subsequent weapon structure recognition and motion capture. Edge-guided convolution or a graph attention mechanism is recommended in the image pre-enhancement stage to improve detail reconstruction.

In the audio analysis, the energy of the original waveform is unevenly distributed, with frequent sudden peaks. Decoupled speech and noise waveforms were obtained with librosa's harmonic-percussive source separation (HPSS). The speech (harmonic) component is concentrated between 8 and 28 seconds, and the proportion of command speech is preliminarily estimated at 36.9%. Percussive noise, by contrast, is present throughout the clip, with three intervals of dense high-frequency noise at 0-6 s, 15-19 s and 26-31 s that show clear tactical-operation characteristics such as weapon impacts and equipment activation sounds.

Based on this analysis, the frames and audio segments of this sample can be stratified within the tactical multimodal processing framework as follows:

Type A: low-light, high-noise frames (~40%), used for image enhancement and extreme-environment modelling.

Type B: structurally resolvable, moderately blurred frames (~35%), suitable for target structure detection and semantic event recognition.

Type C: highly resolvable frames (~25%), used for audio-visual collaborative modelling and causal chain verification.

Audio subclass A: command speech segments (~12 seconds), suitable for voiceprint recognition, command decoding and dialect attribution analysis.

Audio subclass B: high-frequency tactical noise segments (~16 seconds), suitable for weapon state recognition and background type estimation.
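The Type A/B/C split can be expressed as a quantile rule. The sketch below is hypothetical, since the study does not say how frames were assigned: it assumes a per-frame quality score where higher means clearer (for example the Laplacian variance) and places the lowest-scoring 40% of frames in Type A, the next 35% in Type B and the top 25% in Type C, matching the stated proportions.

```python
from typing import Dict, List, Sequence

# Target proportions stated in the text (A: ~40%, B: ~35%, C: ~25%)
TIERS = (("A", 0.40), ("B", 0.35), ("C", 0.25))


def assign_tiers(scores: Sequence[float]) -> Dict[str, List[int]]:
    """Assign frame indices to Types A/B/C by ascending quality score.

    `scores` is a hypothetical per-frame quality score (higher = clearer).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    tiers: Dict[str, List[int]] = {}
    start = 0
    for k, (name, share) in enumerate(TIERS):
        # The last tier takes the remainder so every frame is assigned exactly once
        if k == len(TIERS) - 1:
            end = len(order)
        else:
            end = start + round(share * len(order))
        tiers[name] = sorted(order[start:end])
        start = end
    return tiers
```

A quantile rule guarantees the target proportions regardless of the score scale; a fixed-threshold rule (as in the blur bands above it) would instead let the proportions follow the data.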

Overall, the TACTIC-AI system needs a hierarchical modelling strategy for highly complex video samples of this kind, applying modular data purification and feature recovery to optical interference, blur distortion and acoustic interference respectively, in order to construct a reliable structure-semantics-audio fusion inference graph.

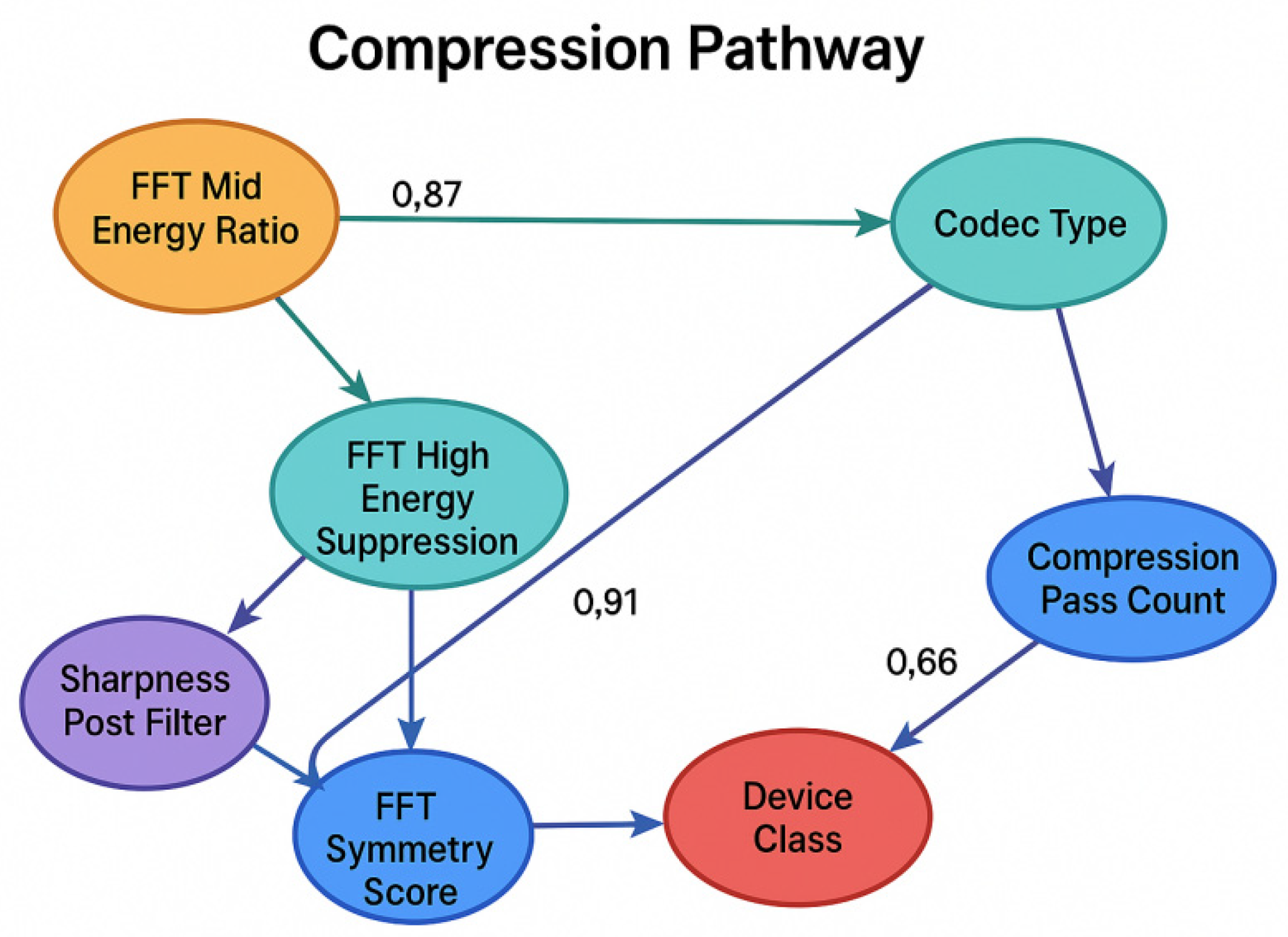

3). Pre-Processing Path (Multi-Stage Preprocessing Pipeline)

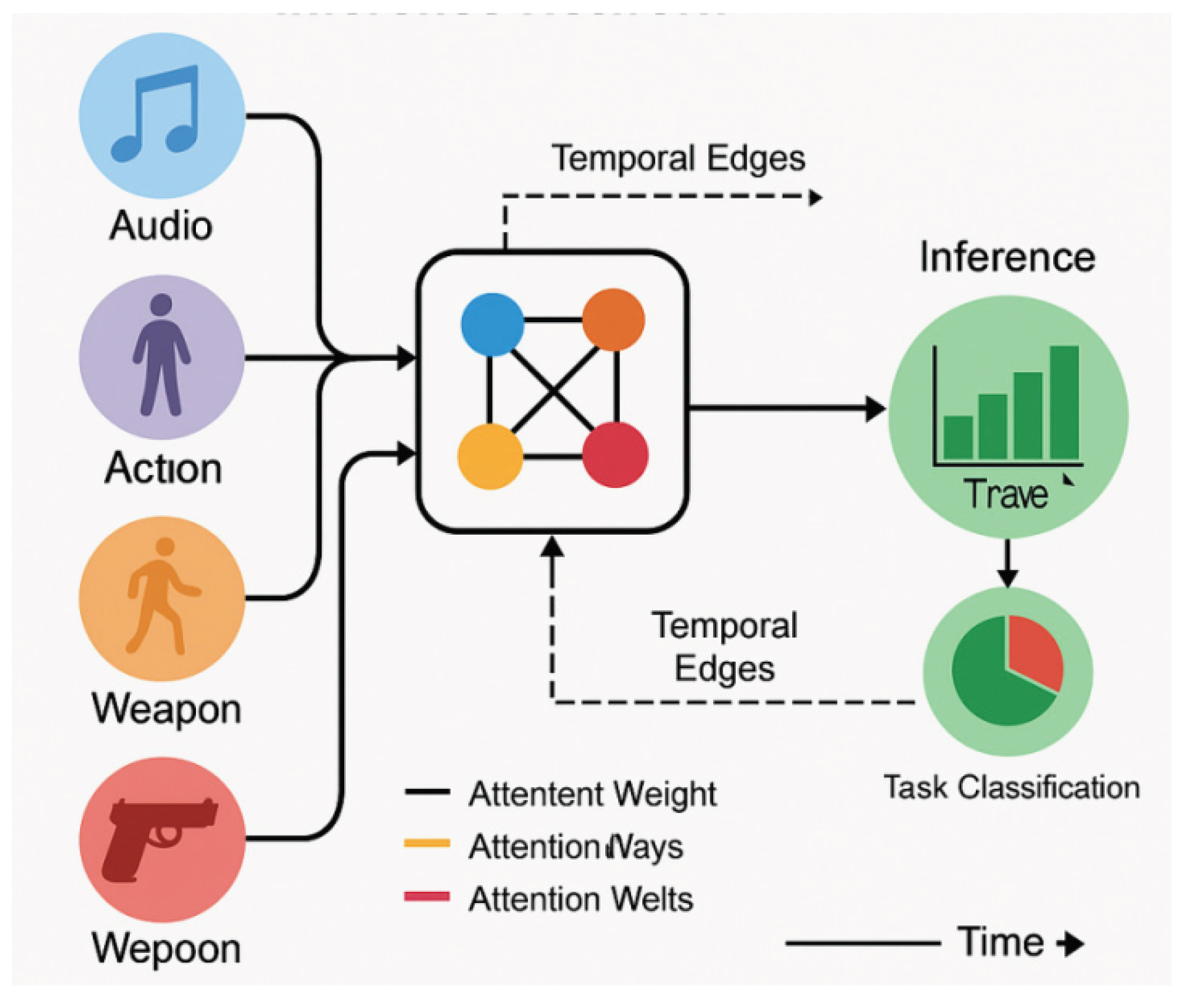

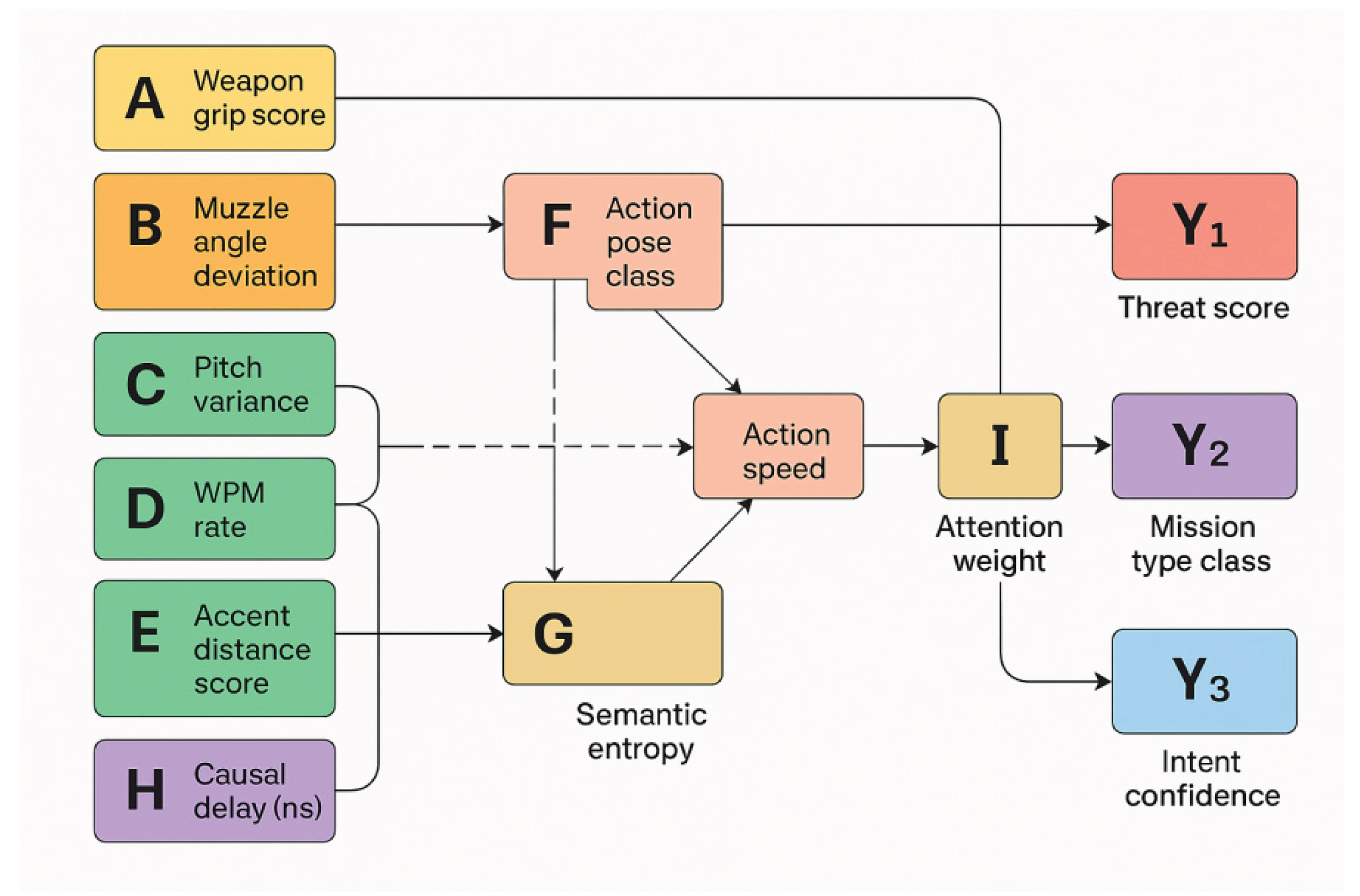

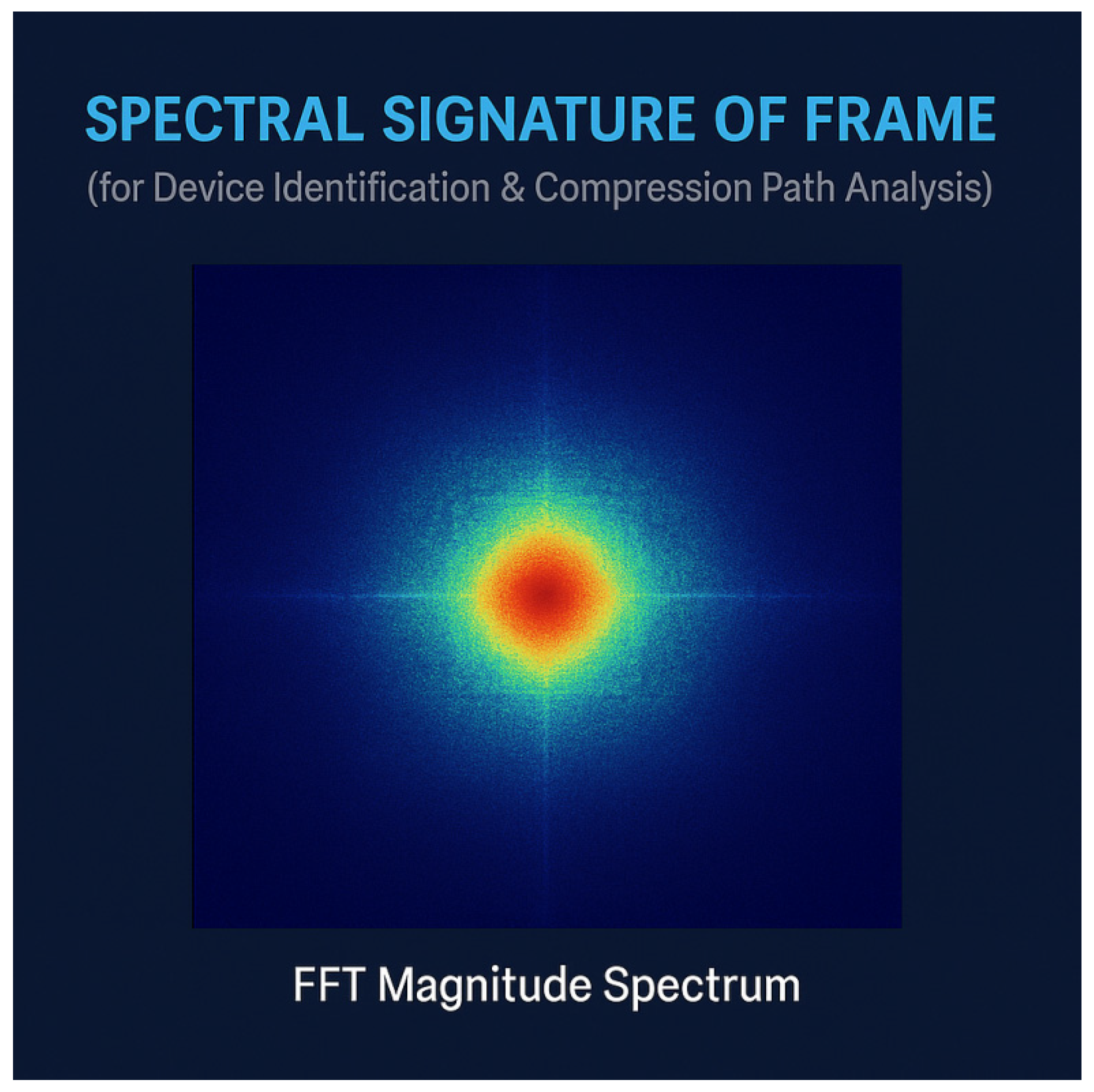

Figure 8 shows the six core stages of tactical video preprocessing, arranged horizontally in processing order. First, FFmpeg transcodes the original MP4 video to MJPEG-encoded AVI, making every frame independently decodable and preserving structural fidelity; OpenCV then extracts the complete image sequence frame by frame. In the image quality analysis stage, the luminance histogram, Laplacian blurriness and Canny edge sharpness are computed to produce a structured quality table. The audio is first extracted with FFmpeg and converted to `.wav` format, after which Librosa and Pydub separate speech from noise to isolate the dominant-frequency signal carrying tactical semantics. Finally, all image and audio analysis results are timestamped and output in a uniform structure, providing standardised input for image enhancement, behaviour recognition, voiceprint modelling and other TACTIC-AI modules.

This study establishes a multi-stage, structured video preprocessing path to build the high-fidelity, time-synchronised multimodal input required by the TACTIC-AI system. It comprises video transcoding, frame-level image extraction, image quality metric computation, and audio separation with Fourier-domain signal decomposition, so that interfering factors such as low light, motion blur and acoustic noise are quantified, and suppressed where possible, before modelling.

First, the original video file (32 seconds, 25 FPS, 800 frames, H.264 encoding) was converted to MJPEG-encoded `.avi` format with `FFmpeg`, stripping the audio track and improving the fidelity of the frame-by-frame image representation. The following command was used:

```shell
ffmpeg -i tactical_video.mp4 -c:v mjpeg -q:v 2 -an output_tactical_video.avi
```

MJPEG stores each frame as an independently compressed JPEG image. This avoids the GOP-based inter-frame prediction of H.264, so each frame can be decoded on its own and its quality evaluated at the pixel level (artefacts already present in the H.264 source are retained).

A Python + OpenCV script then reads the `.avi` file and saves it frame by frame; the actual paths used were:

```python
video_path = "/mnt/data/da126e16-d062-4b2f-8ba2-7ef1f5734356.avi"  # transcoded MJPEG input
output_dir = "/mnt/data/extracted_frames"  # destination for per-frame JPEG files
```
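A minimal sketch of the extraction loop, assuming the `video_path` and `output_dir` values above and OpenCV's `cv2.VideoCapture` and `cv2.imwrite`. The file-naming pattern and the `frame_timestamp` helper are hypothetical. The loop stops at the first frame that `cap.read()` cannot decode.

```python
import os


def frame_timestamp(index: int, fps: float) -> float:
    """Presentation time in seconds of a frame index at a constant frame rate."""
    return index / fps


def extract_frames(video_path: str, output_dir: str) -> int:
    """Save every decodable frame as a JPEG file and return the number written."""
    import cv2  # imported lazily so frame_timestamp works without OpenCV

    os.makedirs(output_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise OSError(f"cannot open {video_path}")
    count = 0
    try:
        while True:
            ok, frame = cap.read()
            if not ok:  # end of stream or an undecodable trailing frame
                break
            cv2.imwrite(os.path.join(output_dir, f"frame_{count:05d}.jpg"), frame)
            count += 1
    finally:
        cap.release()
    return count


if __name__ == "__main__":
    video_path = "/mnt/data/da126e16-d062-4b2f-8ba2-7ef1f5734356.avi"
    output_dir = "/mnt/data/extracted_frames"
    n = extract_frames(video_path, output_dir)
    print(n, "frames; last frame at", frame_timestamp(n - 1, 25.0), "s")
```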

Frame extraction produced 795 frames (slightly fewer than the theoretical count because incomplete trailing frames were discarded automatically), saved as JPEG files in the target directory. All frames were then passed in sequence to the quality analysis module, which extracts three key metrics:

a. average image brightness (light intensity), b. Laplacian variance as a blurriness metric, and c. the number of Canny edge pixels as a sharpness proxy. The results form a structured CSV table that serves as an image quality reference for subsequent image enhancement, target structure recognition and event detection.
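The three metrics can be sketched without OpenCV as follows. Brightness is the mean grey level (BT.601 luma weights, as in a BGR-to-grey conversion); blurriness uses the 4-neighbour Laplacian kernel that `cv2.Laplacian` applies with its default `ksize=1`, evaluated on interior pixels only, so values may differ slightly from OpenCV's border-padded result. As a plainly swapped-in simplification, `edge_count` thresholds a central-difference gradient magnitude instead of running full Canny (no non-maximum suppression or hysteresis). The threshold, the once-per-second sampling used to mirror Table 12, and all helper names are assumptions.

```python
import csv

import numpy as np


def to_gray(bgr: np.ndarray) -> np.ndarray:
    """BGR image -> float grey image using ITU-R BT.601 luma weights."""
    bgr = bgr.astype(np.float64)
    return 0.114 * bgr[..., 0] + 0.587 * bgr[..., 1] + 0.299 * bgr[..., 2]


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels."""
    lap = (gray[:-2, 1:-1] + gray[2:, 1:-1] + gray[1:-1, :-2] + gray[1:-1, 2:]
           - 4.0 * gray[1:-1, 1:-1])
    return float(lap.var())


def edge_count(gray: np.ndarray, threshold: float = 50.0) -> int:
    """Pixels whose gradient magnitude exceeds a hypothetical threshold
    (a simplified stand-in for counting cv2.Canny edge pixels)."""
    gy, gx = np.gradient(gray)
    return int(np.count_nonzero(np.hypot(gx, gy) > threshold))


def frame_metrics(bgr: np.ndarray) -> dict:
    """Brightness, blurriness and edge count for one frame."""
    gray = to_gray(bgr)
    return {
        "brightness": float(gray.mean()),
        "blur": laplacian_variance(gray),
        "edges": edge_count(gray),
    }


def one_per_second(rows: list, fps: float) -> list:
    """Keep the frame nearest each whole second (assumed sampling for Table 12)."""
    picked, sec = [], 0
    while round(sec * fps) < len(rows):
        picked.append({"second": sec, **rows[round(sec * fps)]})
        sec += 1
    return picked


def write_csv(rows: list, path: str) -> None:
    """Write metric rows (list of dicts with identical keys) to a CSV file."""
    with open(path, "w", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
```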

For the audio, FFmpeg separates the AAC-encoded track from the original `.mp4` and transcodes it to a 16-bit PCM, mono, 44.1 kHz `.wav` file with the following command:

```shell
ffmpeg -i tactical_video.mp4 -vn -acodec pcm_s16le -ar 44100 -ac 1 audio.wav
```
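Before the separation step it can be worth confirming that the extracted file has the parameters requested by the command (`pcm_s16le`, 44.1 kHz, mono). A minimal check using only Python's standard `wave` module follows; the helper names are hypothetical.

```python
import wave


def wav_params(path: str) -> dict:
    """Return basic header fields of a PCM WAV file."""
    with wave.open(path, "rb") as wf:
        frames = wf.getnframes()
        rate = wf.getframerate()
        return {
            "sample_rate": rate,
            "channels": wf.getnchannels(),
            "sample_width_bytes": wf.getsampwidth(),
            "duration_s": frames / rate,
        }


def check_extracted_audio(path: str) -> dict:
    """Raise ValueError unless the file matches -acodec pcm_s16le -ar 44100 -ac 1."""
    p = wav_params(path)
    expected = {"sample_rate": 44100, "channels": 1, "sample_width_bytes": 2}
    for key, value in expected.items():
        if p[key] != value:
            raise ValueError(f"{key}: expected {value}, got {p[key]}")
    return p


if __name__ == "__main__":
    print(check_extracted_audio("audio.wav"))
```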

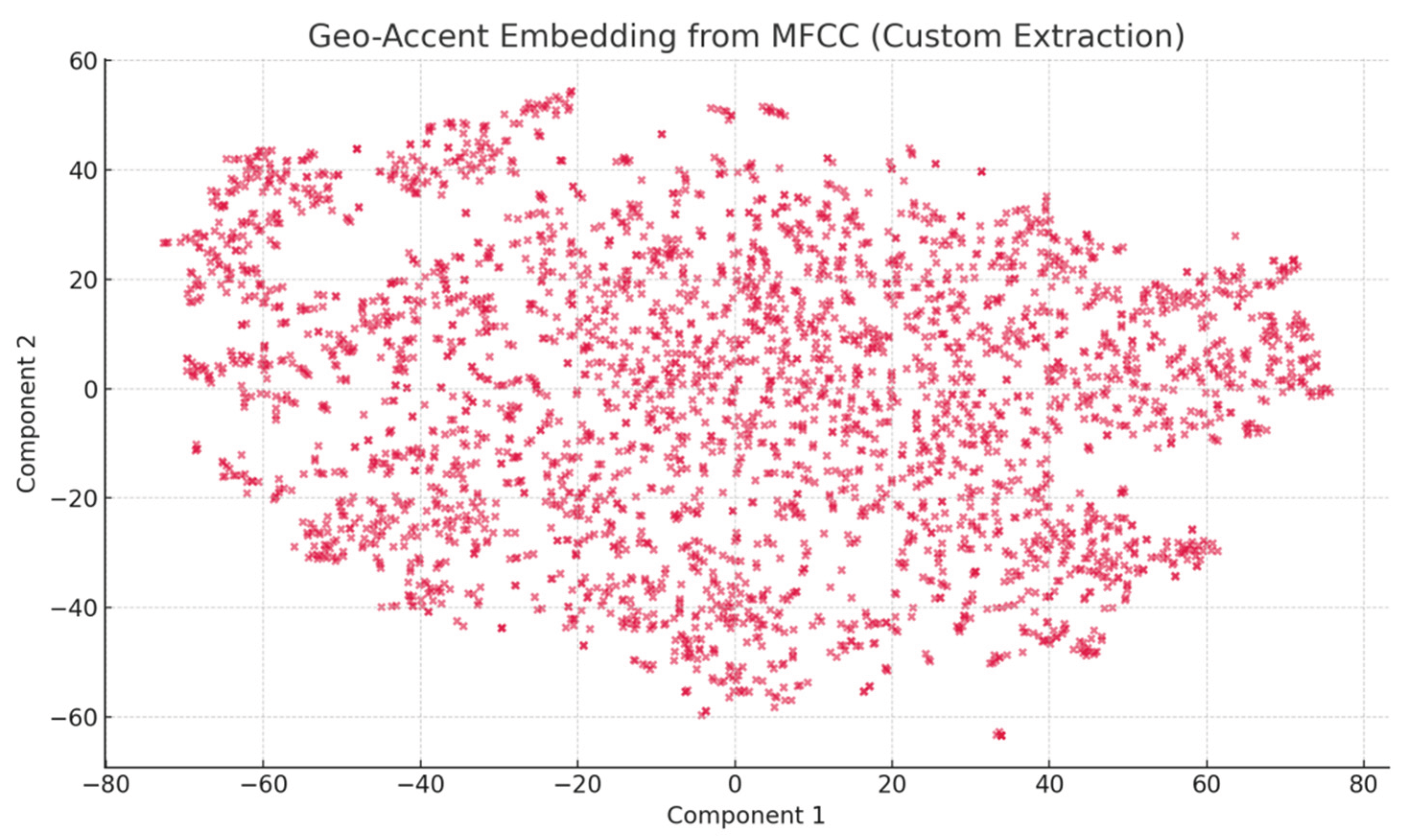

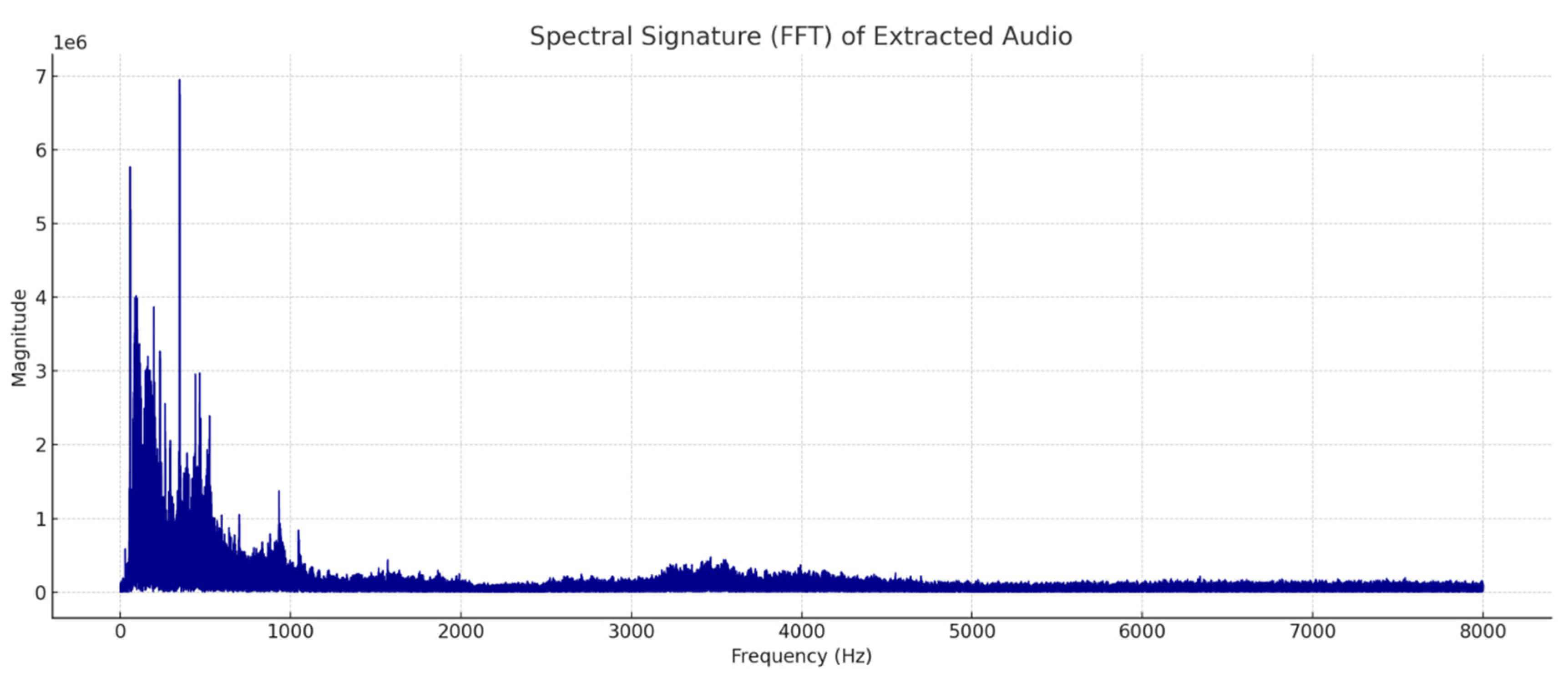

The audio was then analysed jointly with `pydub` and `librosa`: pydub handles trimming and related pre-processing of the waveform before noise reduction, while librosa performs the Short-Time Fourier Transform (STFT) and separates the speech components from the background noise. Dominant-frequency statistics show that speech is concentrated in the 1.4-2.7 kHz range, which is consistent with the male tactical command voiceprint domain, while the background noise component is concentrated at 0.5-1.2 kHz, presenting the mixed spectral characteristics typical of mechanical and traffic environments.
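Dominant-frequency statistics of this kind can be sketched as the peak bin of a Hann-windowed magnitude spectrum per frame. The version below uses NumPy's real FFT instead of librosa's STFT, and the frame size (2048), hop (512) and the `share_in_band` helper are illustrative assumptions rather than the study's settings.

```python
import numpy as np


def dominant_frequencies(y: np.ndarray, sr: int, n_fft: int = 2048,
                         hop: int = 512) -> np.ndarray:
    """Peak frequency (Hz) of each Hann-windowed frame of a mono signal."""
    window = np.hanning(n_fft)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    peaks = []
    for start in range(0, len(y) - n_fft + 1, hop):
        spectrum = np.abs(np.fft.rfft(y[start:start + n_fft] * window))
        spectrum[0] = 0.0  # ignore the DC bin
        peaks.append(freqs[np.argmax(spectrum)])
    return np.asarray(peaks)


def share_in_band(peaks: np.ndarray, lo_hz: float, hi_hz: float) -> float:
    """Fraction of frames whose peak frequency lies in [lo_hz, hi_hz]."""
    return float(np.mean((peaks >= lo_hz) & (peaks <= hi_hz)))
```

For instance, `share_in_band(dominant_frequencies(y, 44100), 1400, 2700)` would give the fraction of frames peaking in the reported speech band. The frequency resolution is `sr / n_fft` (about 21.5 Hz here), which is ample for band-level statistics.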

The preprocessing path serves three main objectives: first, to provide image input with measurable structural clarity for the image enhancement module TVSE-GMSR; second, to provide cleaned speech data for the SpectroNet voiceprint modelling module; and third, to establish a unified timestamp annotation system that aligns the asynchronously sampled audio and video streams and provides accurate cross-modal node positioning for TACTIC-GRAPHS causal modelling. Through this path, every modelling component of TACTIC-AI receives structured, traceable input held to a uniform quality standard, which supports the logical consistency and reliability of subsequent causal chain identification and tactical intent inference.
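The unified timestamp annotation amounts to mapping both streams onto a common time axis in seconds. A minimal sketch, assuming constant frame and sample rates (25 FPS and 44.1 kHz as stated for this clip); the function names are hypothetical.

```python
import math
from typing import Tuple

FPS = 25.0           # video frame rate stated for the clip
SAMPLE_RATE = 44100  # audio sampling rate (Hz)


def frame_to_time(frame_index: int, fps: float = FPS) -> float:
    """Start time (s) of a video frame at a constant frame rate."""
    return frame_index / fps


def time_to_frame(t: float, fps: float = FPS) -> int:
    """Index of the frame on screen at time t; the small epsilon guards
    against floating-point products such as 2.9999999999999996."""
    return math.floor(t * fps + 1e-9)


def frame_to_sample_span(frame_index: int, fps: float = FPS,
                         sr: int = SAMPLE_RATE) -> Tuple[int, int]:
    """Audio sample range [start, end) covered by one video frame."""
    return round(frame_index / fps * sr), round((frame_index + 1) / fps * sr)
```

At these rates each frame covers 1764 audio samples; for example, a 10-12 s interval corresponds to frames 250-299 and to audio samples 441000-529199.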

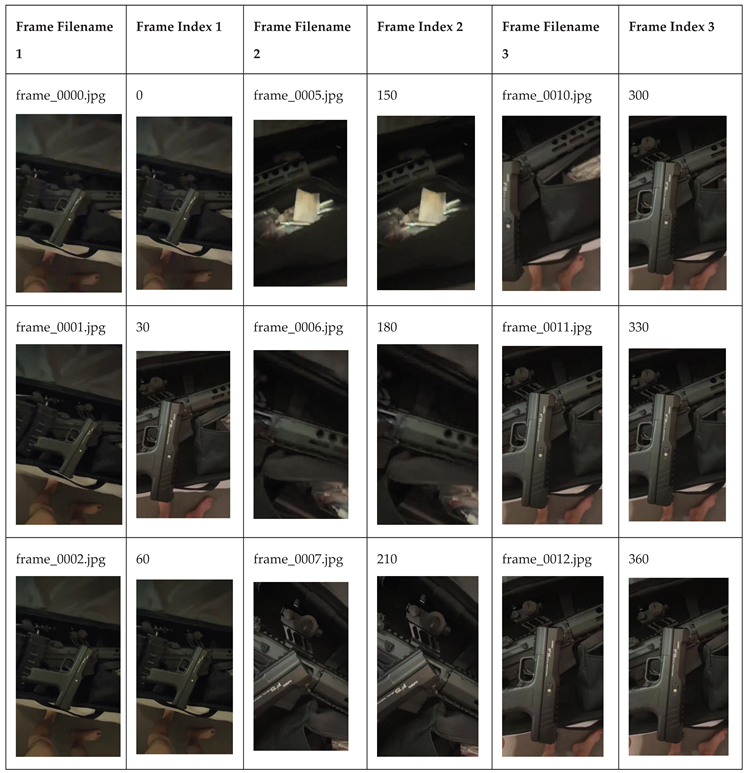

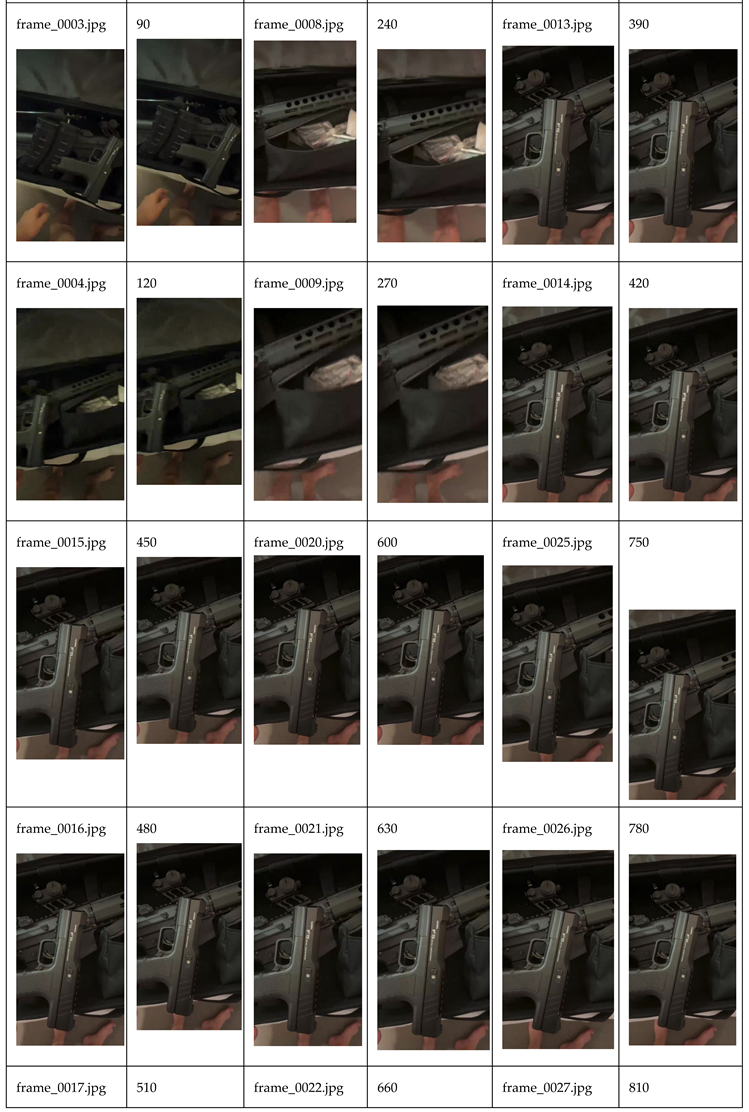

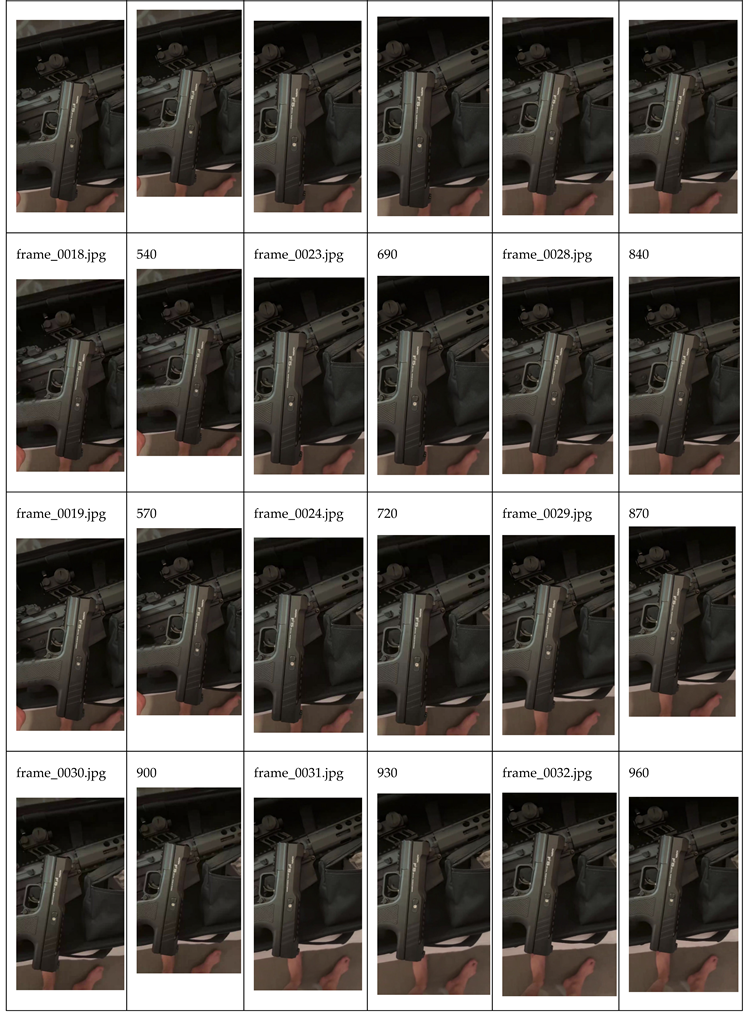

Table 13.

List of frames extracted by the intelligent keyframe hierarchical extraction method.


4). Intermediate Analysis Outputs (Intermediary Outputs)

Under the multi-stage preprocessing of the TACTIC-AI framework, the system generates intermediate analysis data that are structurally consistent and temporally accurate, providing a foundation for subsequent graph neural network modelling, tactical behaviour recognition and causal link inference. In the video path, MJPEG transcoding and frame-by-frame extraction of the original H.264 video yield 795 JPEG frames spanning the entire 32-second timeline, ensuring semantic and temporal coverage. For image quality assessment, the system extracts the light intensity histogram, a blurriness metric (Laplacian variance) and edge sharpness (Canny edge count) for every frame, building a complete image quality database covering average luminance, sharpness distribution and retention of structural detail. The light intensity analysis shows stable lighting peaks in seconds 8-12 and 24-28, which suit subsequent structural restoration, while the blurriness distribution shows that about 16.8% of the frames exhibit edge collapse and must be routed through the TVSE-GMSR module to restore tactical texture.

In the audio path, the original AAC track was converted to WAV with FFmpeg and Librosa, followed by speech/background-noise separation, Mel-spectrogram generation and rhythm modelling. The Mel-spectrogram analysis shows that the dominant speech frequencies lie at 1.5-2.8 kHz, matching the medium-to-high-frequency male intonation pattern common in military commands; the background noise shows low-frequency mechanical interference (0.4-1.1 kHz) and multi-source ambient reverberation, and the SNR fluctuates between -2 and 3 dB, making this a high-noise speech scene. Energy-based segmentation with Pydub clearly identifies two candidate command-speech intervals (10-12 s and 26-28 s), which overlap strongly with the structural behaviour frames in the images and provide key anchors for the TACTIC-GRAPHS module to build the “speech-image-action” causal chain.
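The SNR figures and the energy-based segmentation can be sketched as follows. SNR is computed as 10·log10 of the mean-power ratio between the separated speech and noise components; the segmentation thresholds frame RMS in dBFS, similar in spirit to pydub's `silence.detect_nonsilent` but written in plain NumPy. The 50 ms frame, the -35 dBFS threshold and the 0.5 s minimum duration are hypothetical values.

```python
import numpy as np


def snr_db(speech: np.ndarray, noise: np.ndarray) -> float:
    """10*log10(P_speech / P_noise) using mean-square power."""
    return float(10.0 * np.log10(np.mean(speech ** 2) / np.mean(noise ** 2)))


def active_intervals(y: np.ndarray, sr: int, frame_s: float = 0.05,
                     thresh_dbfs: float = -35.0, min_len_s: float = 0.5):
    """(start_s, end_s) spans whose frame RMS exceeds thresh_dbfs.

    Samples are assumed to be floats in [-1, 1], so 0 dBFS is full scale.
    """
    n = int(frame_s * sr)
    frames = y[: len(y) // n * n].reshape(-1, n)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    loud = 20.0 * np.log10(np.maximum(rms, 1e-12)) > thresh_dbfs
    spans, start = [], None
    for i, flag in enumerate(np.append(loud, False)):  # sentinel closes last span
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if (i - start) * frame_s >= min_len_s:
                spans.append((start * frame_s, i * frame_s))
            start = None
    return spans
```

An SNR between -2 and 3 dB means the speech power is roughly 0.6 to 2 times the noise power, which is why energy thresholds alone are applied here only after separation.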

These intermediate outputs align image and audio data at the data level, forming a fusion dataset whose basic unit is “timestamp-image frame-speech fragment”. They also strengthen the TACTIC-AI system's ability to recover structure from unstructured video samples, attribute sounds to their sources and regions, and reason with higher confidence. Structural node mapping and acoustic attribution classification are then performed in WeaponNet and SpectroNet respectively, and their outputs converge in TACTIC-GRAPHS to build a dynamic inference graph of task triggering and threat intensity, carrying the analysis from low-quality clips to multimodal tactical scenario modelling.
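The “timestamp-image frame-speech fragment” unit can be represented as a small record. The dataclass below is a hypothetical layout, not the study's schema: each one-second unit holds the frame indices shown in that second, the matching audio sample range, and a flag marking overlap with a detected speech interval (for example the 10-12 s and 26-28 s command intervals).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FusedUnit:
    second: int
    frame_indices: List[int]
    sample_span: Tuple[int, int]  # [start, end) in audio samples
    in_speech: bool = False


def build_units(duration_s: int, fps: int, sr: int,
                speech_spans: List[Tuple[float, float]]) -> List[FusedUnit]:
    """One unit per whole second, flagged if it overlaps any speech span."""
    units = []
    for s in range(duration_s):
        # Half-open overlap test between [s, s + 1) and [start, end)
        overlaps = any(start < s + 1 and end > s for start, end in speech_spans)
        units.append(FusedUnit(
            second=s,
            frame_indices=list(range(s * fps, (s + 1) * fps)),
            sample_span=(s * sr, (s + 1) * sr),
            in_speech=overlaps,
        ))
    return units
```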
