1. Introduction

In recent years, the rapid development of financial technology and the rise of cross-border payments, virtual asset trading, and decentralized finance (DeFi) platforms have made the global financial system more complex and dynamic. At the same time, money laundering activities have become more covert and sophisticated [1,2]. Traditional rule-based and expert-driven systems are increasingly limited in detecting complex anomalies. As a core component of financial regulation, anti-money laundering (AML) now faces unprecedented data challenges and a pressing need for technological transformation. Financial institutions urgently require more efficient, intelligent, and generalizable risk monitoring models to cope with growing transaction volumes and emerging risk patterns [3,4].

In the current data environment, AML monitoring often relies on massive, high-dimensional, unstructured, and highly temporal transaction data. These data encompass many attributes, such as user identity information, transaction paths, changes in transaction amounts, channel sources, currency types, and account interaction frequency. Together, they form a multi-dimensional temporal network structure whose latent risk signals are extremely difficult to detect. Although traditional machine learning methods have improved detection efficiency to some extent, they still struggle to capture complex contextual relationships, long-range dependencies, and cross-account behavior patterns [5]. Against this backdrop, the Transformer architecture, with its strong sequence modeling capabilities and parallel computing advantages, has become a powerful tool for addressing complex data modeling problems in AML scenarios [6].

Originally developed for natural language processing, the Transformer model relies on a core mechanism called self-attention, which effectively captures dependencies between distant elements in a sequence. This feature provides significant advantages when modeling long time series or complex structural patterns in financial transaction data. In the AML domain, this is particularly critical. Money laundering behavior often involves splitting and hiding transactions through multiple accounts, layered transfers, and various currencies. Such behavior patterns are difficult to capture with fixed rules [7]. The Transformer architecture enables a data-driven modeling approach that automatically extracts sequence relationships and semantic features, offering the potential to overcome the performance limitations of traditional risk control models and improve the precision of high-risk transaction detection.

In addition, regulatory compliance in the financial industry continues to tighten. Regulatory bodies worldwide are placing higher demands on the accuracy, explainability, and traceability of AML risk detection. In this context, the use of models with transparent structures and strong generalization capabilities is crucial. The Transformer model performs well in modeling and offers a modular structure that supports interpretability and regulatory compliance. Techniques such as multi-head attention and inter-layer weight analysis can reveal key insights into transaction behavior patterns. These insights provide data support and decision-making references for AML audits and manual reviews, enhancing the model's practicality and trustworthiness in real-world financial environments.

As digital transformation deepens, financial institutions are shifting their view of AML from a compliance cost to a key component of enterprise risk management and brand reputation protection. With deep learning algorithms being increasingly applied in finance, exploring the application of Transformer-based AI architectures in AML risk monitoring is both a natural step in technological evolution and a critical path toward strengthening systemic financial security and advancing regulatory technology. This research aims to meet this practical need. It proposes a new generation of intelligent AML monitoring solutions that are scalable and adaptive. It also offers theoretical and practical guidance for applying AI technologies in high-risk and sensitive financial scenarios.

2. Related Work

Recent advancements in deep learning have significantly impacted the field of financial anomaly detection, particularly in anti-money laundering (AML) applications. This paper builds upon these developments by proposing a Transformer-based model that combines sequential behavior modeling with transaction graph structures to improve the identification of high-risk accounts.

Attention mechanisms have been widely recognized for their effectiveness in capturing temporal dependencies in dynamic environments. Liu introduced a temporal attention-driven Transformer for detecting anomalies in video data. The method demonstrates the feasibility of attention-based architectures in sequential anomaly tasks and directly inspires the temporal modeling component of this work [8]. Similarly, Feng developed a BiLSTM-Transformer hybrid model targeting fraudulent financial transactions, reinforcing the utility of combining temporal modeling and attention to enhance detection accuracy in complex financial behavior [9]. Yao extended Transformer models for financial time-series forecasting, which informed the multi-dimensional feature extraction strategy used in our risk assessment module [10].

The modeling of high-frequency transaction behaviors is essential in AML detection. Bao et al. proposed a deep learning-based method tailored to high-frequency trading anomaly detection, underscoring the importance of fine-grained temporal analysis in financial monitoring [11]. Wang addressed credit card fraud detection through ensemble learning and data balancing, which contributes to understanding class imbalance and prediction stability in rare-event detection, an issue also tackled in this study [12]. Wang et al. also explored causal representation learning to improve return prediction across markets, providing theoretical support for incorporating structured behavioral signals in the proposed risk scoring framework [13].

Graph and context fusion in financial risk modeling has been gaining traction. Gong et al. presented a deep fusion model using large language models for early fraud detection, which inspired the multi-source integration in our model's classification stage [14]. Liu’s work on multimodal data-driven models for stock forecasting offers a perspective on combining heterogeneous data sources, analogous to our integration of sequential and graph-based transaction features [15]. Du’s approach to financial text classification using 1D-CNNs informs lightweight auxiliary processing techniques for transaction metadata [16].

Temporal sequence modeling has also been adapted for structured financial risk scenarios. Sheng applied a hybrid LSTM-GRU model for loan default prediction, underscoring the importance of long-term dependency modeling, which aligns with our use of multi-layer Transformers for temporal behavior capture [17]. Xu and collaborators proposed an LSTM-Copula hybrid method for multi-asset portfolio risk forecasting, from which we draw insights into probabilistic dependency modeling over time [18].

Though not directly related to AML, Bao’s application of AI in corporate financial forecasting and Xu’s reinforcement learning approach to portfolio optimization demonstrate transferable deep learning strategies that support the scalability and adaptability aspects of our model [19,20]. Likewise, Wang’s hierarchical data fusion for fraud detection, although focused on credit data, supports our design of multi-layer classifiers that handle multiple input sources effectively [21].

Finally, Sheng’s exploration of causal representation learning in market prediction supports our use of structural feature modeling and attention-based interpretability for identifying hidden transaction paths and abnormal behaviors [22].

Together, these works inform the construction of our proposed Transformer-based AML risk monitoring model, especially in the areas of attention-based sequence learning, graph-aware behavior modeling, and robust financial anomaly detection.

3. Method

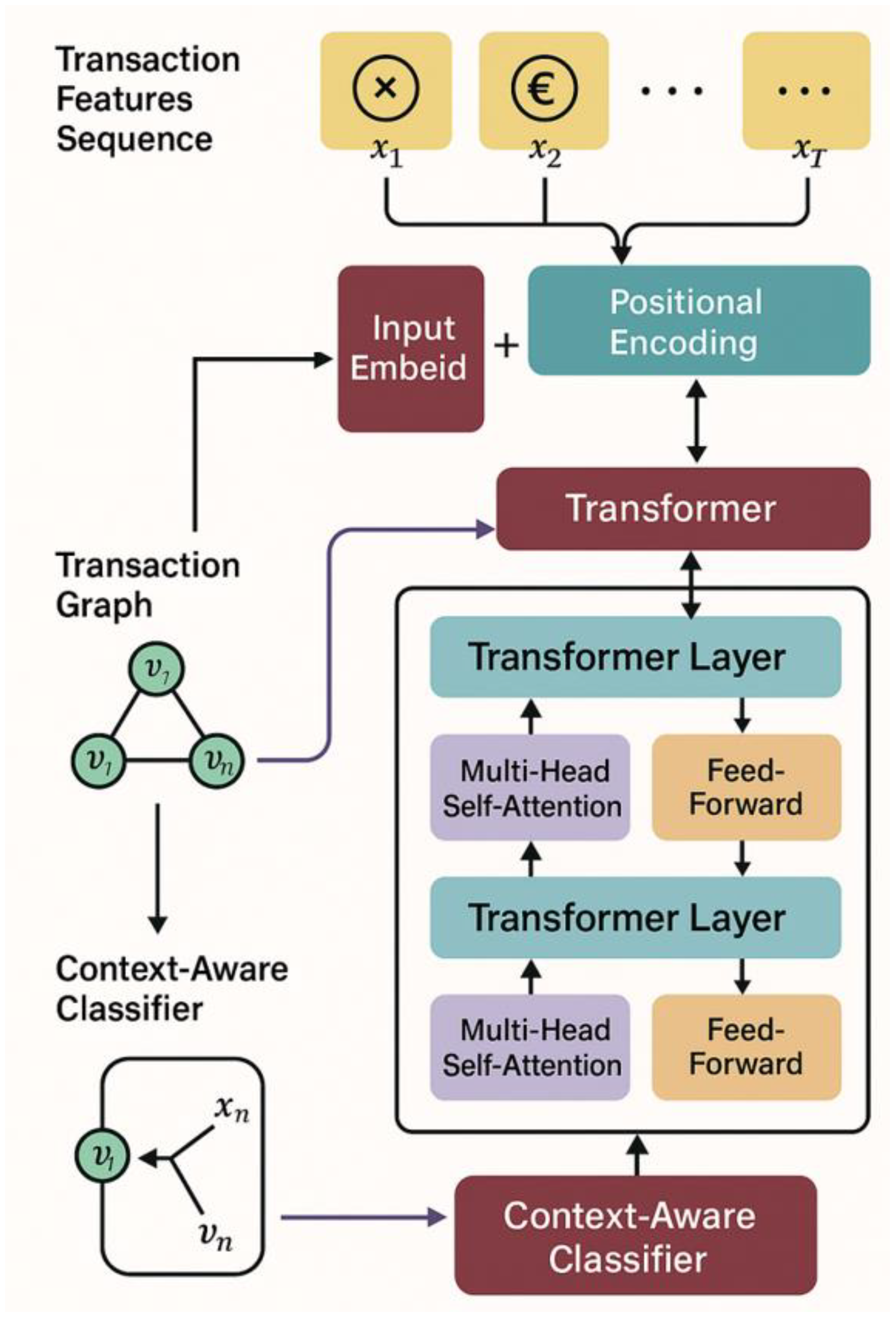

The anti-money laundering risk monitoring model proposed in this study is based on the Transformer architecture and is oriented to the task of sequential modeling of transaction behaviors. Its module architecture is shown in Figure 1.

Suppose the transaction behavior sequence of each customer is $X = \{x_1, x_2, \dots, x_T\}$, where $x_t \in \mathbb{R}^{d_{in}}$ represents the transaction vector recorded at time $t$, including multiple dimensions such as transaction amount, transaction type, counterparty label, geographic location, and currency. To better capture the time series characteristics and potential dependencies, a linear embedding layer first maps the input to a representation space of uniform dimension $d$:

$$e_t = W_e x_t + b_e$$

The position encoding vector $p_t$ is then added to the representation to preserve the order information, resulting in the final input sequence:

$$z_t = e_t + p_t, \qquad Z = [z_1, z_2, \dots, z_T]$$
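The embedding step can be illustrated with a minimal NumPy sketch. The text does not state which position encoding is used, so the fixed sinusoidal encoding below is an assumption, as are the names `sinusoidal_positions` and `embed_sequence`, the toy dimensions, and the random weights.

```python
import numpy as np

def sinusoidal_positions(seq_len: int, d_model: int) -> np.ndarray:
    """Fixed sinusoidal position encodings (assumed choice, d_model must be even)."""
    positions = np.arange(seq_len)[:, None]                  # (T, 1)
    dims = np.arange(d_model)[None, :]                       # (1, d)
    angle_rates = 1.0 / np.power(10000.0, (2 * (dims // 2)) / d_model)
    angles = positions * angle_rates                         # (T, d)
    enc = np.zeros((seq_len, d_model))
    enc[:, 0::2] = np.sin(angles[:, 0::2])                   # even dims: sine
    enc[:, 1::2] = np.cos(angles[:, 1::2])                   # odd dims: cosine
    return enc

def embed_sequence(x: np.ndarray, W_e: np.ndarray, b_e: np.ndarray) -> np.ndarray:
    """Linear embedding of each transaction vector plus its position encoding."""
    e = x @ W_e + b_e                                        # (T, d_in) -> (T, d)
    return e + sinusoidal_positions(x.shape[0], W_e.shape[1])

# Toy sequence: 6 transactions with 5 raw features, embedded into 8 dimensions.
rng = np.random.default_rng(0)
T, d_in, d_model = 6, 5, 8
x = rng.normal(size=(T, d_in))
W_e = rng.normal(scale=0.1, size=(d_in, d_model))
b_e = np.zeros(d_model)
z = embed_sequence(x, W_e, b_e)
```

In a trained model the embedding weights would be learned; a learned position embedding would be an equally plausible reading of the text.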

The Transformer body consists of multiple stacked encoder layers, and the core of each layer is a multi-head self-attention mechanism. For the representation $H^{(l)}$ in any layer $l$, its attention output is calculated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

Here $Q$, $K$, and $V$ are the linear transformations of $H^{(l)}$ into queries, keys, and values, respectively, and $d_k$ is the dimension of the key vector. The multi-head mechanism concatenates the attention outputs learned in parallel in different subspaces and maps them back to the original space:

$$\mathrm{MultiHead}(H^{(l)}) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^{O}$$
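The scaled dot-product attention and the multi-head concatenation described above can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: the function names, the toy dimensions, and the random projection matrices are assumptions, and residual connections, layer normalization, and the feed-forward sublayer of a full encoder layer are omitted.

```python
import numpy as np

def softmax(a: np.ndarray, axis: int = -1) -> np.ndarray:
    a = a - a.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(H, W_q, W_k, W_v, W_o, num_heads):
    """Return the attention output (T, d_model) and the weights (heads, T, T)."""
    T, d_model = H.shape
    d_k = d_model // num_heads

    def split(M):                               # (T, d_model) -> (heads, T, d_k)
        return M.reshape(T, num_heads, d_k).transpose(1, 0, 2)

    Q, K, V = split(H @ W_q), split(H @ W_k), split(H @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_k)         # (heads, T, T)
    weights = softmax(scores, axis=-1)                       # each row sums to 1
    heads = weights @ V                                      # (heads, T, d_k)
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)    # concatenate heads
    return concat @ W_o, weights

rng = np.random.default_rng(0)
T, d_model, num_heads = 6, 8, 2
H = rng.normal(size=(T, d_model))
W_q, W_k, W_v, W_o = (rng.normal(scale=0.1, size=(d_model, d_model)) for _ in range(4))
out, attn = multi_head_self_attention(H, W_q, W_k, W_v, W_o, num_heads)
```

The returned `attn` array holds one weight matrix per head. These are the per-head weights that an attention-based interpretability analysis would inspect.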

To enhance the model's ability to discriminate between different behavior patterns, a context-sensitive classifier module is placed after the Transformer encoder. Specifically, the sequence representation $H^{(L)} = [h_1^{(L)}, \dots, h_T^{(L)}]$ output by the last layer is pooled to obtain the account-level embedding:

$$u = \mathrm{Pool}\left(h_1^{(L)}, \dots, h_T^{(L)}\right)$$

This embedding is then fed into a fully connected classifier to calculate the money laundering risk score of the account:

$$\hat{y} = \sigma(W_c u + b_c)$$

where $\sigma$ is the sigmoid function, and the output value $\hat{y}$ is the predicted probability of money laundering risk.
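A minimal sketch of the pooling and scoring step is given below. The text does not specify the pooling operator, so mean pooling over time steps is assumed here. The name `risk_score`, the single-layer classifier, and the random weights are likewise illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def risk_score(H_last: np.ndarray, w_c: np.ndarray, b_c: float) -> float:
    """Pool the last-layer sequence into one account embedding and score it."""
    u = H_last.mean(axis=0)                 # mean pooling over time (assumed)
    return float(sigmoid(u @ w_c + b_c))    # probability-like score in (0, 1)

rng = np.random.default_rng(0)
H_last = rng.normal(size=(6, 8))            # stand-in for the encoder output
w_c = rng.normal(scale=0.1, size=8)
score = risk_score(H_last, w_c, 0.0)
```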

In addition, considering the complex interaction network between accounts in financial transaction scenarios, this paper introduces transaction graph information as an auxiliary supervision signal. A graph $G = (V, E)$ is constructed from transaction relationships, in which accounts are regarded as nodes and capital flows as edges, and a graph convolution module is introduced to model the risk of neighboring accounts. In the training stage, the Transformer prediction loss and the graph structure regularization term are jointly optimized:

$$\mathcal{L} = \mathcal{L}_{BCE} + \lambda\,\mathcal{L}_{graph}$$

Here $\mathcal{L}_{BCE}$ is the binary cross-entropy loss, $\mathcal{L}_{graph}$ is the contrastive or smoothing loss based on the graph structure, and $\lambda$ is the weight hyperparameter. This joint optimization strategy aims to improve the model's ability to perceive hidden risk paths across accounts so that it can better adapt to the complex and distributed money laundering behavior patterns found in practice.
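The joint objective can be sketched as a binary cross-entropy term plus a weighted graph term. The text allows either a contrastive or a smoothing loss; the sketch below assumes a simple smoothing penalty, the mean squared difference between the predicted risks of connected accounts. The function names, the toy labels, predictions, and edges, and the value `lam=0.1` are illustrative assumptions.

```python
import numpy as np

def bce_loss(y_true: np.ndarray, y_prob: np.ndarray, eps: float = 1e-7) -> float:
    """Binary cross-entropy averaged over accounts."""
    p = np.clip(y_prob, eps, 1 - eps)        # avoid log(0)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

def graph_smoothing_loss(y_prob: np.ndarray, edges: np.ndarray) -> float:
    """Mean squared difference of predicted risk across edges (assumed form)."""
    src, dst = edges[:, 0], edges[:, 1]
    return float(np.mean((y_prob[src] - y_prob[dst]) ** 2))

def joint_loss(y_true, y_prob, edges, lam: float = 0.1) -> float:
    """Prediction loss plus lam times the graph regularization term."""
    return bce_loss(y_true, y_prob) + lam * graph_smoothing_loss(y_prob, edges)

# Toy example: 4 accounts and 3 fund-flow edges given as (source, target) indices.
y_true = np.array([1.0, 0.0, 1.0, 0.0])
y_prob = np.array([0.9, 0.2, 0.7, 0.1])
edges = np.array([[0, 2], [1, 3], [0, 1]])
total = joint_loss(y_true, y_prob, edges, lam=0.1)
```

Setting `lam=0` recovers the plain prediction loss, which makes the contribution of the graph term easy to ablate.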

4. Experiment

4.1. Datasets

The dataset used in this study is the Elliptic Dataset, which is a commonly used transaction tracking dataset in the field of blockchain analytics. It records over 200,000 Bitcoin transactions across 49 time windows, spanning from 2013 to 2016. The dataset focuses on the detection of money laundering and illicit activities within the Bitcoin network. The data is mainly derived from the public Bitcoin transaction graph. It naturally exhibits temporal and graph-structured characteristics, making it well-suited for research on financial risk monitoring based on sequence and graph modeling.

The structure of the Elliptic Dataset is built upon a graph modeling approach. Each transaction is treated as a node in the graph. Edges between nodes represent fund transfers. Each node is associated with a 166-dimensional feature vector. These features cover multiple aspects, including transaction time, amount distribution, historical activity density, and neighboring node behavior. Each node carries one of three labels: “illicit,” “licit,” or “unknown.” Only a subset of nodes has a known (illicit or licit) label, assigned based on the compliance nature of the parties involved and their activities; the remaining nodes are “unknown.” This forms a typical semi-supervised learning scenario.
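The semi-supervised setup can be illustrated with a toy graph that mirrors this structure. The sketch uses a handful of synthetic nodes with 166-dimensional random features rather than the real data, and the variable names are assumptions. It shows how "unknown" nodes are masked out of the supervised loss while remaining in the graph.

```python
import numpy as np

rng = np.random.default_rng(0)

# Five synthetic transaction nodes with 166 features each (random stand-ins).
labels = np.array(["illicit", "licit", "unknown", "licit", "unknown"])
features = rng.normal(size=(len(labels), 166))

# Directed fund-transfer edges as (source, target) node indices.
edges = np.array([[0, 1], [1, 2], [2, 3], [3, 4]])

# Only nodes with a known label contribute to the supervised loss.
labeled_mask = labels != "unknown"
y = (labels == "illicit").astype(float)     # 1.0 = illicit, 0.0 otherwise

# Adjacency list, so unlabeled nodes still pass information to their neighbors.
neighbors = {i: [] for i in range(len(labels))}
for s, d in edges:
    neighbors[int(s)].append(int(d))

train_features = features[labeled_mask]
train_targets = y[labeled_mask]
```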

The dataset is mainly applied in areas such as anti-money laundering for cryptocurrencies and on-chain transaction compliance. It reflects the complexity and anonymity challenges of financial activities in practice. While blockchain transactions are publicly visible, the identities of participants remain hidden. This makes it highly challenging to detect money laundering paths from behavioral graphs. The Elliptic Dataset, through structured labeling and high-dimensional behavioral features, provides an ideal experimental foundation and validation platform for building anti-money laundering models with strong generalization capabilities.

4.2. Experimental Results

The comparative experimental results are shown in Table 1.

The experimental results show that the proposed model significantly outperforms baseline methods on the Elliptic dataset in terms of AUC, F1-Score, and accuracy. It demonstrates a strong capability in modeling complex financial transaction behavior. In particular, the model achieves an AUC of 94.5 percent, which is 2.8 percentage points higher than the previous best-performing method, AMLNet. This improvement indicates a stronger overall ability to distinguish risk. It also reflects the effectiveness of the Transformer architecture in modeling long-term behavioral sequences. This is especially important for capturing features of money laundering, such as dispersion, delay, and multi-hop transaction paths.
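For readers less familiar with the metric, AUC can be read as the probability that a randomly chosen illicit sample receives a higher score than a randomly chosen licit one. The pure-Python sketch below computes it by pairwise comparison on made-up labels and scores; it is not derived from the reported results.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. This is O(n_pos * n_neg), which is
    fine for illustration but too slow for large datasets.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up example: 2 illicit and 3 licit samples.
y_true = [1, 1, 0, 0, 0]
scores = [0.9, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(y_true, scores)   # 5 of the 6 pairs are ordered correctly
```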

The proposed method also achieves the highest F1-Score of 85.7 percent. This is significantly better than GAT (80.2 percent) and EvolveGCN (77.9 percent). The result shows that the model maintains high recall while effectively reducing false positives. This enhances the stability of high-risk transaction detection. In AML tasks, the F1-Score is a critical measure of overall model performance. A high score means the system can identify hidden laundering behavior while minimizing interference with normal accounts. This meets the real-world need for low false-positive rates in financial risk control systems.
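The relationship between false positives, missed detections, and the F1-Score can be made concrete with a small worked example. The labels and predictions below are made up for illustration and do not correspond to the reported experiments.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for the positive (illicit) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# 3 illicit and 5 licit samples; one illicit sample is missed, one licit is flagged.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

Here two true positives, one false positive, and one false negative give precision and recall of 2/3 each, so F1 is also 2/3. Because F1 is the harmonic mean, it falls when either quantity degrades.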

From a model design perspective, the proposed method combines transaction graph structure with sequential modeling. It introduces a context-aware classifier on top of the standard Transformer. This enables a deep integration of graph relationships and transaction sequences. Compared with the pure Transformer model (F1 = 78.3, Acc = 80.7) or graph neural network models like GAT and EvolveGCN, the proposed method learns both global semantics and graph adjacency relations in a unified framework. It is especially expressive in modeling multi-hop risk paths. This fits the characteristics of complex laundering patterns across accounts and over time in real financial networks.

In conclusion, the experimental results validate the effectiveness and advancement of the proposed architecture for AML risk detection. The method not only achieves superior numerical performance but also offers a structurally innovative modeling paradigm. It is well-suited for high-dimensional, heterogeneous transaction data. This provides both theoretical insight and engineering support for building future intelligent financial risk monitoring systems.

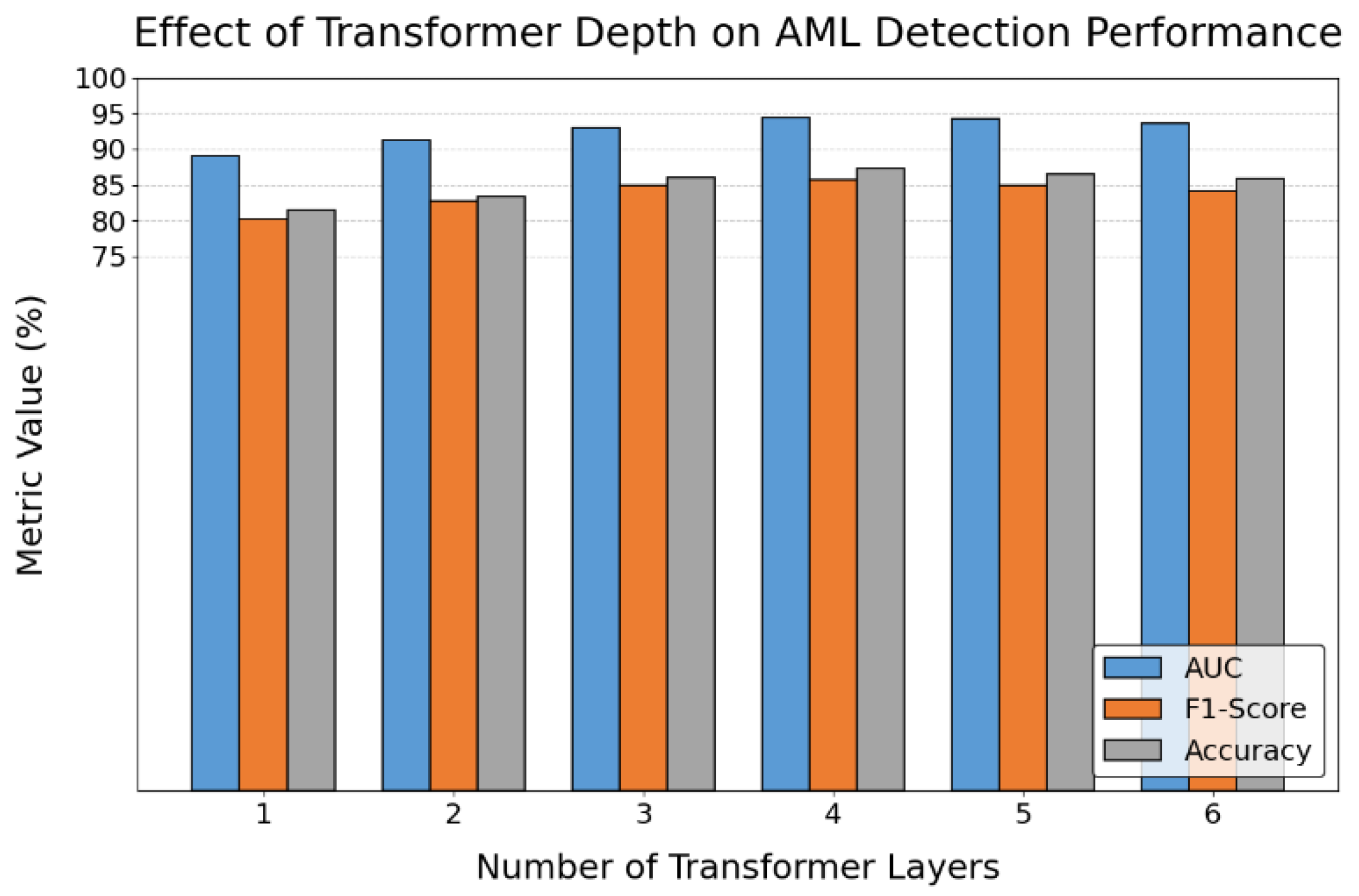

The impact of the number of Transformer layers on AML recognition performance is also analyzed; the experimental results are shown in Figure 2.

As shown in Figure 2, the number of Transformer layers has a clear impact on the performance of the AML detection model. As the number of layers increases from 1 to 4, all three key metrics (AUC, F1-Score, and Accuracy) show a steady upward trend. This indicates that deeper Transformer structures can more effectively capture complex semantic relationships and temporal dependencies in transaction sequences. As a result, the model's understanding and detection of money laundering patterns improves.

When the Transformer depth reaches four layers, all performance metrics peak. AUC approaches 95 percent, and both F1-Score and Accuracy reach their highest levels. This suggests that a moderately deep Transformer network helps build a more expressive feature space. It allows the model to more accurately identify high-risk paths hidden in the transaction graph. This trend confirms the effectiveness of multi-layer attention mechanisms in modeling long-range dependencies and implicit behavior patterns in financial transactions.

However, when the number of layers increases to five and six, all three metrics decline to varying degrees. Although the performance drop is not dramatic, it shows that overly deep models may introduce redundant features and increase the risk of overfitting. This is particularly relevant in AML tasks, where high-dimensional data are often sparse and noisy. There is a trade-off between model depth and performance. This suggests that more layers do not always lead to better results. The model architecture must be tuned according to task complexity and data characteristics. In summary, the experimental results support the original design goal of the proposed model. By introducing a suitably deep Transformer network, the model improves its ability to model contextual relationships in transaction behavior sequences. This enhances the accuracy of suspicious activity detection. The results also provide theoretical and practical guidance for balancing model compactness and generalization in deployment. This approach is well-suited for real-world applications.
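The depth-tuning procedure implied by this analysis amounts to a small sweep over candidate layer counts, keeping the depth with the best validation metric. The sketch below is hypothetical. `select_depth` is an illustrative helper, and `toy_validation_score` is a synthetic stand-in for training and evaluating a model at a given depth; its values are not measurements from this study.

```python
from typing import Callable, Iterable, Tuple

def select_depth(evaluate: Callable[[int], float],
                 depths: Iterable[int]) -> Tuple[int, float]:
    """Return the depth with the highest validation score (first one on ties)."""
    best_depth, best_score = None, float("-inf")
    for depth in depths:
        score = evaluate(depth)
        if score > best_score:
            best_depth, best_score = depth, score
    if best_depth is None:
        raise ValueError("depths must not be empty")
    return best_depth, best_score

def toy_validation_score(depth: int) -> float:
    # Synthetic placeholder, NOT a measured result: rises, peaks at 3, then falls.
    return 1.0 - 0.01 * (depth - 3) ** 2

best_depth, best_score = select_depth(toy_validation_score, range(1, 7))
```

In practice `evaluate` would train the model with the given number of encoder layers and return a held-out metric such as AUC or F1.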

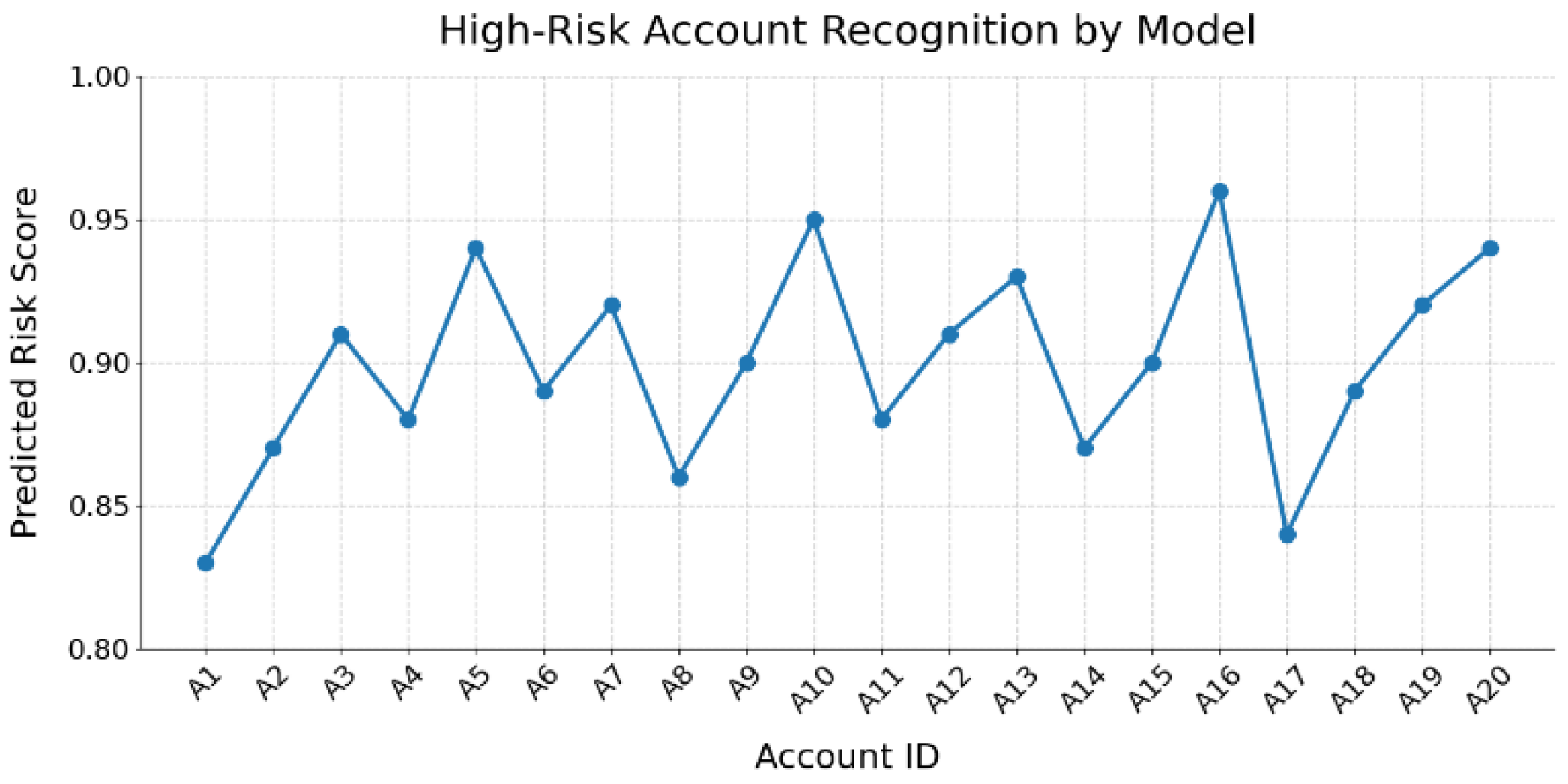

The model's ability to identify high-risk accounts was analyzed further; the experimental results are shown in Figure 3.

As shown in Figure 3, the proposed model demonstrates strong discriminative power in identifying high-risk accounts. Among all 20 high-risk accounts, the predicted risk scores generally remain above 0.85. Several accounts, such as A5, A10, and A16, receive scores exceeding 0.95. This indicates a high sensitivity of the model to typical suspicious behavior. Such high-confidence predictions are especially critical in real-world AML systems. They assist risk control personnel in identifying potential illicit accounts at an early stage, improving the efficiency of alert responses.

Although the overall performance is stable, the figure also reveals that some accounts, such as A17, receive relatively lower risk scores. This may suggest that these accounts exhibit more subtle money laundering signals in their transaction behavior. Their feature patterns may resemble those of normal accounts, resulting in slightly reduced model confidence. This fluctuation highlights the presence of modeling ambiguity for borderline samples. Even when using Transformer architectures to process high-dimensional sequences and graph structures, additional contextual or auxiliary information may be required to further enhance recognition.

The model generates continuous and interpretable risk score sequences based on account trajectories, individual features, and interactions with neighboring accounts. This demonstrates the Transformer's advantage in capturing long-range dependencies and modeling behavioral context. It is particularly effective in identifying potential laundering characteristics across complex transaction paths and multi-hop fund flows. Compared with traditional methods, the model provides a deeper understanding and more accurate classification of financial risk. In summary, the experimental results confirm the model's effectiveness in overall performance evaluation and also highlight its practical potential in real AML tasks at the individual account level. By analyzing the risk score curves, risk control systems can implement intelligent audit prioritization. Accounts with higher scores can be flagged for focused review, improving both resource allocation and compliance coverage.
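Audit prioritization based on risk scores can be sketched as a simple ranking with a review threshold. The account identifiers and scores below are hypothetical, and the 0.85 cutoff is an illustrative assumption, not a recommended operating point.

```python
from typing import Dict, List, Tuple

def prioritize_for_review(scores: Dict[str, float],
                          threshold: float = 0.85) -> List[Tuple[str, float]]:
    """Return accounts at or above the threshold, highest risk first."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [(account, score) for account, score in ranked if score >= threshold]

# Hypothetical model outputs for four accounts.
scores = {"acct_a": 0.97, "acct_b": 0.62, "acct_c": 0.88, "acct_d": 0.91}
review_queue = prioritize_for_review(scores, threshold=0.85)
```

Accounts that fall below the threshold are not discarded. In a real system they would typically remain under routine monitoring, with the threshold chosen to match the available review capacity.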

5. Conclusion

This paper addresses key challenges in anti-money laundering (AML) risk monitoring and proposes a novel modeling approach based on the Transformer architecture. It systematically integrates the temporal features of transaction sequences with the structural information of transaction graphs. In complex financial environments, traditional rule-based systems and shallow models often fail to detect increasingly covert and intelligent laundering behaviors. The main contribution of this study lies in combining deep sequence modeling with graph-aware mechanisms. By introducing a context-aware classifier and multi-layer Transformer modules, the model achieves strong behavioral understanding and risk detection capabilities. This significantly improves the identification of suspicious transactions and enhances the overall effectiveness of risk control systems.

Experimental results show that the proposed method outperforms mainstream models across several key performance metrics. It is particularly effective in identifying high-risk accounts and modeling complex transaction paths. Through detailed analysis of the impact of Transformer depth on model performance and case studies on high-risk account detection, the practical value and scalability of the method for AML applications are further validated. The model achieves high accuracy and robustness at the system level. It also provides continuous and fine-grained risk scores at the individual level, offering data-driven support for real-world financial supervision and audit processes. From a broader application perspective, the proposed method has strong generalization potential in various risk control scenarios. These include financial fraud detection, suspicious fund tracking, and identity fraud analysis. The design also presents a new technical approach for large-scale financial behavior analysis. It is especially applicable to high-frequency trading, virtual asset transactions, and multi-currency fund flow monitoring. The structural modeling framework introduced in this study provides a valuable technical reference for the integration of regulatory technology (RegTech) and financial technology (FinTech).

Future work will explore multimodal data fusion for risk modeling. This includes incorporating unstructured information such as transaction descriptions, user identity tags, and device fingerprints. The goal is to improve the model's adaptability to complex behavioral scenarios. Explainability and deployability will also become key focuses. Future directions include visual attention mechanisms, robustness analysis using adversarial graph samples, and model compression and inference acceleration strategies. These efforts aim to meet the real-time, transparent, and compliant requirements of financial systems, and to support the development of more intelligent and secure financial risk control frameworks.

References

- M. P. Tatulli, T. Paladini, M. D'Onghia, et al., "HAMLET: A transformer based approach for money laundering detection," Proceedings of the International Symposium on Cyber Security, Cryptology, and Machine Learning, pp. 234–250, 2023.

- S. Huang, Y. Xiong, Y. Xie, et al., "Robust sequence-based self-supervised representation learning for anti-money laundering," Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, pp. 4571–4578, 2024.

- Y. Yan, T. Hu, and W. Zhu, "Leveraging large language models for enhancing financial compliance: a focus on anti-money laundering applications," Proceedings of the 2024 4th International Conference on Robotics, Automation and Artificial Intelligence (RAAI), pp. 260–273, 2024.

- Q. Yu, Z. Xu, and Z. Ke, "Deep learning for cross-border transaction anomaly detection in anti-money laundering systems," Proceedings of the 2024 6th International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI), pp. 244–248, 2024.

- M. R. Karim, F. Hermsen, S. A. Chala, et al., "Scalable semi-supervised graph learning techniques for anti-money laundering," IEEE Access, vol. 12, pp. 50012–50029, 2024.

- V. de Graaff, "Applying fine-tuning methods to FTTransformer in anti-money laundering applications," M.S. thesis, Delft University of Technology, 2024.

- S. Lute, "What if we cannot see the full picture? Anti-money laundering in transaction monitoring," M.S. thesis, Vrije Universiteit Amsterdam, 2024.

- J. Liu, "Global temporal attention-driven transformer model for video anomaly detection," 2025.

- P. Feng, "Hybrid BiLSTM-Transformer model for identifying fraudulent transactions in financial systems," Journal of Computer Science and Software Applications, vol. 5, no. 3, 2025.

- Y. Yao, "Stock price prediction using an improved transformer model: capturing temporal dependencies and multi-dimensional features," Journal of Computer Science and Software Applications, vol. 5, no. 2, 2025.

- Q. Bao, J. Wang, H. Gong, Y. Zhang, X. Guo, and H. Feng, "A deep learning approach to anomaly detection in high-frequency trading data," Proceedings of the 2025 4th International Symposium on Computer Applications and Information Technology (ISCAIT), pp. 287–291, 2025.

- Y. Wang, "A data balancing and ensemble learning approach for credit card fraud detection," Proceedings of the 2025 4th International Symposium on Computer Applications and Information Technology (ISCAIT), pp. 386–390, 2025.

- Y. Wang, Q. Sha, H. Feng, and Q. Bao, "Target-oriented causal representation learning for robust cross-market return prediction," Journal of Computer Science and Software Applications, vol. 5, no. 5, 2025.

- J. Gong, Y. Wang, W. Xu, and Y. Zhang, "A deep fusion framework for financial fraud detection and early warning based on large language models," Journal of Computer Science and Software Applications, vol. 4, no. 8, 2024.

- J. Liu, "Multimodal data-driven factor models for stock market forecasting," Journal of Computer Technology and Software, vol. 4, no. 2, 2025.

- X. Du, "Financial text analysis using 1D-CNN: risk classification and auditing support," 2025.

- Y. Sheng, "Temporal dependency modeling in loan default prediction with hybrid LSTM-GRU architecture," Transactions on Computational and Scientific Methods, vol. 4, no. 8, 2024.

- W. Xu, K. Ma, Y. Wu, Y. Chen, Z. Yang, and Z. Xu, "LSTM-Copula hybrid approach for forecasting risk in multi-asset portfolios," 2025.

- Q. Bao, "Advancing corporate financial forecasting: the role of LSTM and AI in modern accounting," Transactions on Computational and Scientific Methods, vol. 4, no. 6, 2024.

- Z. Xu, Q. Bao, Y. Wang, H. Feng, J. Du, and Q. Sha, "Reinforcement learning in finance: QTRAN for portfolio optimization," Journal of Computer Technology and Software, vol. 4, no. 3, 2025.

- J. Wang, "Credit card fraud detection via hierarchical multi-source data fusion and dropout regularization," Transactions on Computational and Scientific Methods, vol. 5, no. 1, 2025.

- Y. Sheng, "Market return prediction via variational causal representation learning," Journal of Computer Technology and Software, vol. 3, no. 8, 2024.

- J. Fan, L. K. Shar, R. Zhang, et al., "Deep learning approaches for anti-money laundering on mobile transactions: review, framework, and directions," arXiv preprint, arXiv:2503.10058, 2025.

- D. Cheng, Y. Ye, S. Xiang, et al., "Anti-money laundering by group-aware deep graph learning," IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 12, pp. 12444–12457, 2023.

- H. Huang, P. Wang, Z. Zhang, et al., "A spatio-temporal attention-based GCN for anti-money laundering transaction detection," Proceedings of the International Conference on Advanced Data Mining and Applications, pp. 634–648, 2023.

- H. Lu, M. Han, C. Wang, et al., "AMLNet: Attention multibranch loss CNN models for fine-grained vehicle recognition," IEEE Transactions on Vehicular Technology, vol. 73, no. 1, pp. 375–384, 2023.

- A. Pareja, G. Domeniconi, J. Chen, et al., "EvolveGCN: Evolving graph convolutional networks for dynamic graphs," Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 4, pp. 5363–5370, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).