Submitted: 06 July 2025

Posted: 07 July 2025


Abstract

Keywords:

1. Introduction

2. The XTorch Framework

2.1. Architecture

- xt::models: A collection of pre-implemented, standard neural network architectures. The DCGAN Generator and Discriminator used in our experiment are part of this module. This allows for rapid prototyping without needing to redefine common models from scratch.

- xt::datasets: C++ classes for interfacing with popular datasets. The xt::datasets::CelebA class handles the parsing of the dataset directory and attribute files, abstracting away the file I/O boilerplate.

- xt::transforms: A suite of data preprocessing and augmentation modules that mimic torchvision.transforms. The Compose class allows users to chain transformations like Resize, CenterCrop, and Normalize into a sequential pipeline.

- xt::dataloaders: This is the cornerstone of XTorch’s performance. We provide an ExtendedDataLoader that is a ground-up C++ implementation of a parallel data loader.
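To illustrate the Compose idea in plain C++, the following is a minimal sketch that depends on neither LibTorch nor XTorch's headers. The `Image` struct, the `Transform` alias, `Compose`, `center_crop`, and `normalize` are hypothetical stand-ins written for this example, not the xt::transforms API. Resize is omitted to keep the sketch short.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical CHW float image; not an XTorch or LibTorch type.
struct Image {
    std::size_t channels = 0, height = 0, width = 0;
    std::vector<float> data;  // channels * height * width values in CHW order

    float at(std::size_t c, std::size_t y, std::size_t x) const {
        return data[(c * height + y) * width + x];
    }
};

// A transform maps one image to another.
using Transform = std::function<Image(Image)>;

// Applies its transforms in insertion order, like torchvision's Compose.
class Compose {
public:
    explicit Compose(std::vector<Transform> steps) : steps_(std::move(steps)) {}

    Image operator()(Image img) const {
        for (const auto& step : steps_) img = step(std::move(img));
        return img;
    }

private:
    std::vector<Transform> steps_;
};

// Crops a size x size window from the centre of every channel.
Transform center_crop(std::size_t size) {
    return [size](Image src) {
        assert(size <= src.height && size <= src.width);
        const std::size_t top = (src.height - size) / 2;
        const std::size_t left = (src.width - size) / 2;
        Image out{src.channels, size, size, std::vector<float>(src.channels * size * size)};
        for (std::size_t c = 0; c < src.channels; ++c)
            for (std::size_t y = 0; y < size; ++y)
                for (std::size_t x = 0; x < size; ++x)
                    out.data[(c * size + y) * size + x] = src.at(c, top + y, left + x);
        return out;
    };
}

// Per-channel (x - mean) / stddev, as in torchvision's Normalize.
Transform normalize(std::vector<float> mean, std::vector<float> stddev) {
    return [mean = std::move(mean), stddev = std::move(stddev)](Image img) {
        assert(mean.size() == img.channels && stddev.size() == img.channels);
        const std::size_t plane = img.height * img.width;
        for (std::size_t c = 0; c < img.channels; ++c)
            for (std::size_t i = 0; i < plane; ++i)
                img.data[c * plane + i] = (img.data[c * plane + i] - mean[c]) / stddev[c];
        return img;
    };
}
```

For example, `Compose pipeline({center_crop(64), normalize({0.5f, 0.5f, 0.5f}, {0.5f, 0.5f, 0.5f})});` would turn a 3-channel image with values in [0, 1] into a 64x64 crop scaled to [-1, 1]. Holding each step behind a `std::function` keeps the pipeline open to user-defined transforms at the cost of one indirect call per step.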

2.2. The ExtendedDataLoader

- Multi-threaded Prefetching: The data loader spawns a pool of C++ worker threads. Each thread independently fetches, decodes, and transforms a batch of data.

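- Shared Memory Queue: Processed tensor batches are placed into a concurrent, thread-safe queue shared by the worker threads and the training thread. Because everything stays within one process, this avoids the costly serialization/deserialization and inter-process communication (IPC) overhead of the multiprocessing workers used by PyTorch's Python DataLoader.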

- Maximized GPU Saturation: The main training thread simply dequeues a ready batch and moves it to the target device. As long as the workers produce batches at least as fast as the training step consumes them, the GPU receives a continuous stream of data.
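The producer/consumer pattern described above can be sketched with the C++ standard library alone (C++17, for `std::optional`). This is a minimal sketch under stated assumptions, not XTorch's ExtendedDataLoader: `BoundedQueue` and `PrefetchLoader` are hypothetical names, a batch is any movable type (in practice a `torch::Tensor`), and the hypothetical `make_batch(i)` callback stands in for the fetch/decode/transform work each worker performs. The queue is bounded so that workers stall instead of exhausting memory when they run ahead of the training loop.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Bounded, thread-safe FIFO: push blocks while full, pop blocks while empty.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) { assert(capacity > 0); }

    void push(T item) {
        std::unique_lock<std::mutex> lock(mu_);
        not_full_.wait(lock, [&] { return items_.size() < capacity_; });
        items_.push(std::move(item));
        not_empty_.notify_one();
    }

    T pop() {
        std::unique_lock<std::mutex> lock(mu_);
        not_empty_.wait(lock, [&] { return !items_.empty(); });
        T item = std::move(items_.front());
        items_.pop();
        not_full_.notify_one();
        return item;
    }

private:
    std::size_t capacity_;
    std::mutex mu_;
    std::condition_variable not_full_, not_empty_;
    std::queue<T> items_;
};

// Hypothetical prefetching loader: workers build batches in parallel and push them
// into the queue while a single consumer (the training loop) pops ready batches.
template <typename Batch>
class PrefetchLoader {
public:
    PrefetchLoader(std::size_t num_batches, std::size_t num_workers, std::size_t capacity,
                   std::function<Batch(std::size_t)> make_batch)
        : num_batches_(num_batches), queue_(capacity), make_batch_(std::move(make_batch)) {
        for (std::size_t w = 0; w < num_workers; ++w)
            workers_.emplace_back([this] {
                // Each worker atomically claims the next batch index until none remain.
                for (std::size_t i = next_++; i < num_batches_; i = next_++)
                    queue_.push(make_batch_(i));
            });
    }

    // Drain unconsumed batches so blocked workers can finish, then join them.
    ~PrefetchLoader() {
        while (next()) {}
        for (auto& t : workers_) t.join();
    }

    // Blocks until a batch is ready; returns std::nullopt once all batches are consumed.
    std::optional<Batch> next() {
        if (consumed_ == num_batches_) return std::nullopt;
        ++consumed_;
        return queue_.pop();
    }

private:
    std::size_t num_batches_;
    BoundedQueue<Batch> queue_;
    std::function<Batch(std::size_t)> make_batch_;
    std::atomic<std::size_t> next_{0};
    std::size_t consumed_ = 0;
    std::vector<std::thread> workers_;
};
```

With more than one worker, batches can arrive out of index order; a loader that must follow a sampler's order would need to reorder by index. `next()` assumes a single consumer thread, which matches the single training loop described above.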

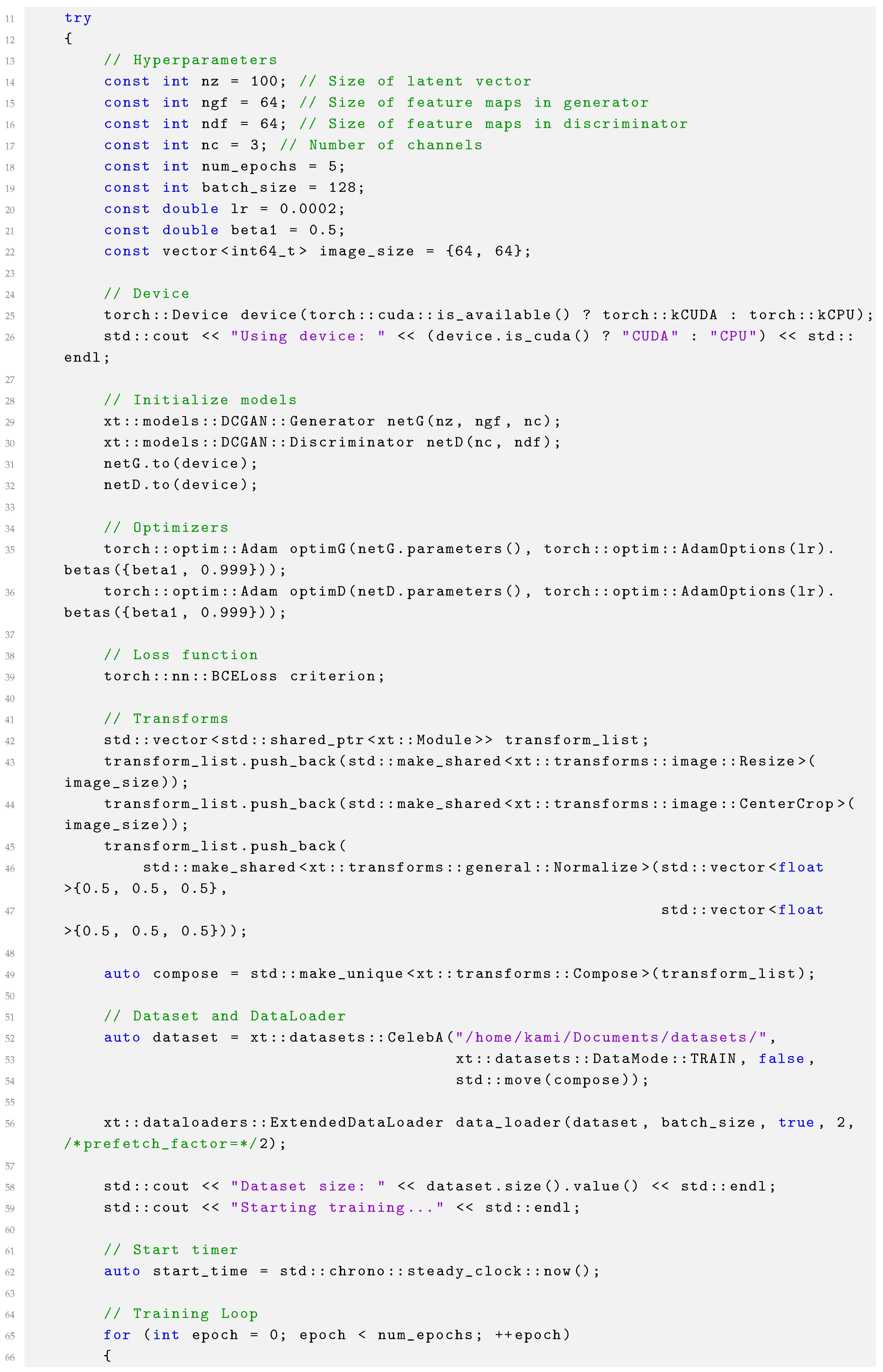

3. Experimental Setup

Hardware:

- CPU: AMD Ryzen 9 5950X (16-core)
- GPU: 2 × NVIDIA RTX 3090 (24GB VRAM each); the PyTorch baseline used both cards, XTorch used one
- RAM: 64GB DDR4

Software:

- OS: Ubuntu 20.04
- CUDA Toolkit: 11.6
- PyTorch / LibTorch: 1.12.1 (cxx11 ABI)
- Compiler: g++ 9.4.0

- Dataset: CelebFaces Attributes (CelebA) dataset.

- Model: Deep Convolutional Generative Adversarial Network (DCGAN).

- Training Parameters: Epochs: 5, Batch Size: 128, Optimizer: Adam (lr=0.0002, beta1=0.5), Image Size: 64x64.

- PyTorch Baseline: The official PyTorch DCGAN example, modified to use two RTX 3090 GPUs via torch.nn.DataParallel. The DataLoader was configured with num_workers=8.

- XTorch Implementation: The C++ code provided in Appendix A, run on a single RTX 3090 GPU. The ExtendedDataLoader was configured with num_workers=2.
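For a rough sense of scale, the sketch below counts training iterations for these settings (in DCGAN each iteration performs one discriminator and one generator update). It assumes the full aligned CelebA set of 202,599 images and that the final partial batch is kept; neither detail is stated above, and the constant and function names are hypothetical.

```cpp
#include <cstddef>

// Iterations per epoch when the last, partial batch is kept (ceiling division).
constexpr std::size_t iterations_per_epoch(std::size_t num_images, std::size_t batch_size) {
    return (num_images + batch_size - 1) / batch_size;
}

constexpr std::size_t kCelebAImages = 202599;  // assumption: full aligned CelebA image set
constexpr std::size_t kBatchSize = 128;
constexpr std::size_t kEpochs = 5;

constexpr std::size_t kItersPerEpoch = iterations_per_epoch(kCelebAImages, kBatchSize);  // 1583
constexpr std::size_t kTotalIters = kEpochs * kItersPerEpoch;                            // 7915
```

Under these assumptions, the reported totals of 219 s and 350 s average out to roughly 27.7 ms and 44.2 ms per iteration, including any startup cost.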

4. Results and Analysis

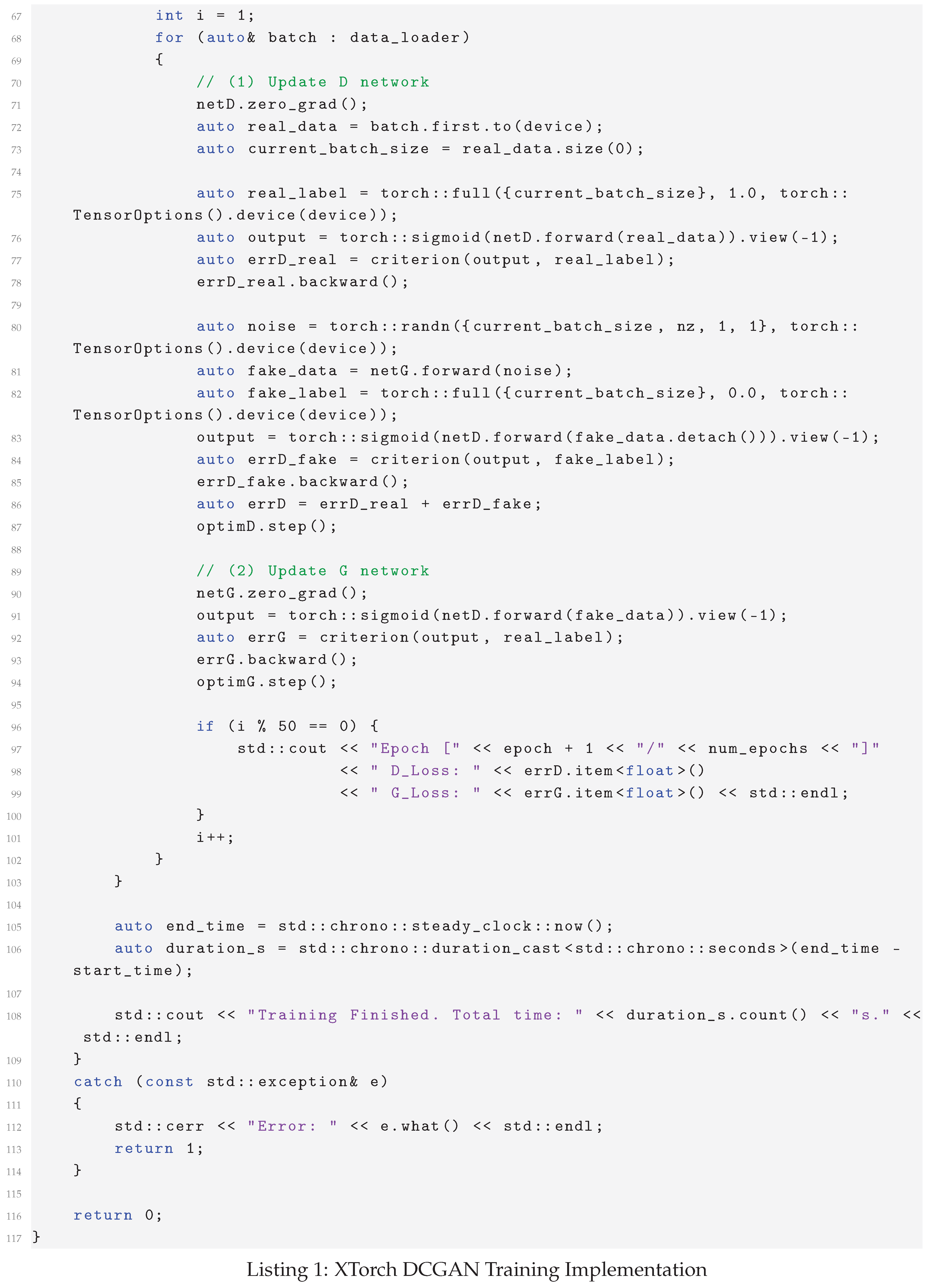

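- Elimination of Data Loading Bottlenecks: We identify the efficiency of the C++ ExtendedDataLoader as the primary contributor to the speedup: its worker threads keep decoded, transformed batches ready, so the training loop rarely waits on data.

- No Python Interpreter Overhead: The C++ training loop is compiled ahead of time, so it avoids the per-iteration interpreter overhead that accumulates over thousands of iterations in a Python-based loop. The GPU kernels themselves are the same LibTorch/ATen kernels in both cases; the saving is on the host side.

- Inefficiency of DataParallel: The single-GPU XTorch implementation avoids the scatter/gather overhead inherent in DataParallel, which on every iteration replicates the model, scatters the input batch across the GPUs, and gathers the outputs back onto the primary device.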
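The reported totals can be put on a common footing with simple arithmetic. The sketch below uses only the two wall-clock times and GPU counts stated for the experiment; the constant names are illustrative, and the GPU-seconds ratio is a rough cost proxy that assumes both baseline GPUs were occupied for the whole run.

```cpp
// Reported wall-clock times for 5 epochs, from the results table.
constexpr double kPyTorchSeconds = 350.0;  // 2 x RTX 3090, DataParallel
constexpr double kXTorchSeconds = 219.0;   // 1 x RTX 3090
constexpr double kPyTorchGpus = 2.0;
constexpr double kXTorchGpus = 1.0;

// Wall-clock speedup of XTorch over the baseline: about 1.60x.
constexpr double kSpeedup = kPyTorchSeconds / kXTorchSeconds;

// Fraction of wall-clock time saved: about 0.374 (37.4%).
constexpr double kTimeSaved = (kPyTorchSeconds - kXTorchSeconds) / kPyTorchSeconds;

// GPU-seconds consumed, 700 vs 219: about 3.2x fewer for XTorch.
constexpr double kGpuSecondsRatio =
    (kPyTorchSeconds * kPyTorchGpus) / (kXTorchSeconds * kXTorchGpus);
```

Because the two runs also differ in worker count (8 vs 2) and GPU count, these ratios describe the end-to-end comparison rather than isolating any single factor.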

5. Conclusions and Future Work

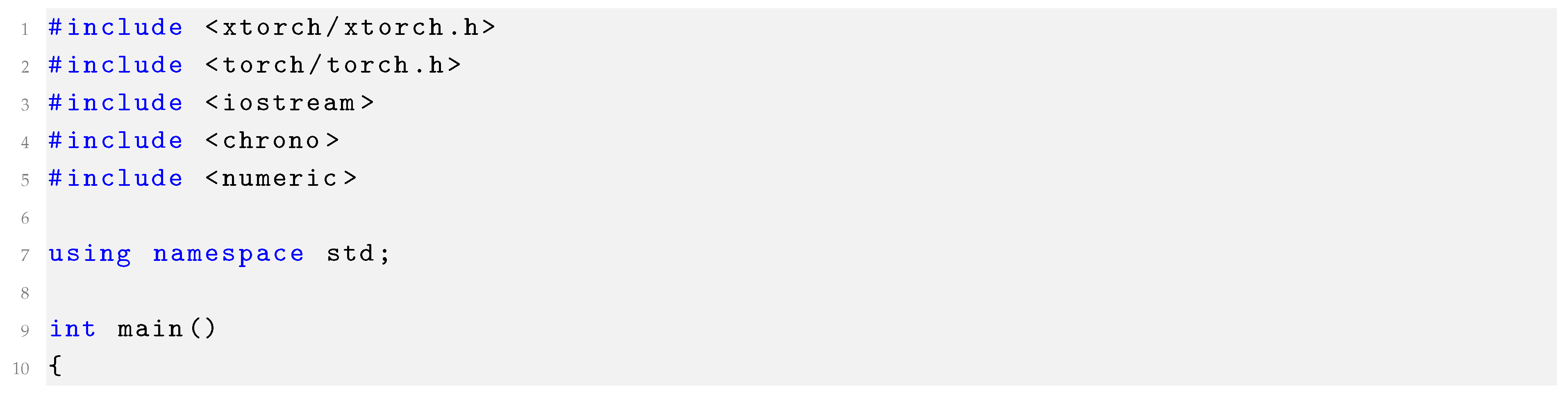

Appendix A. XTorch DCGAN Training Source Code

References

- A. Paszke, et al. (2019). PyTorch: An Imperative Style, High-Performance Deep Learning Library. Advances in Neural Information Processing Systems 32.

- M. Abadi, et al. (2016). TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv preprint arXiv:1603.04467.

- A. Radford, L. Metz, & S. Chintala. (2015). Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv preprint arXiv:1511.06434.

- Z. Liu, et al. (2015). Deep Learning Face Attributes in the Wild. Proceedings of the IEEE International Conference on Computer Vision.

| Framework | GPU Configuration | Total Training Time, 5 Epochs (s) |
|---|---|---|
| PyTorch | 2 × RTX 3090 (DataParallel) | 350 |
| XTorch | 1 × RTX 3090 | 219 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).