1. Introduction: Redefining Intelligence in the Algorithmic Age

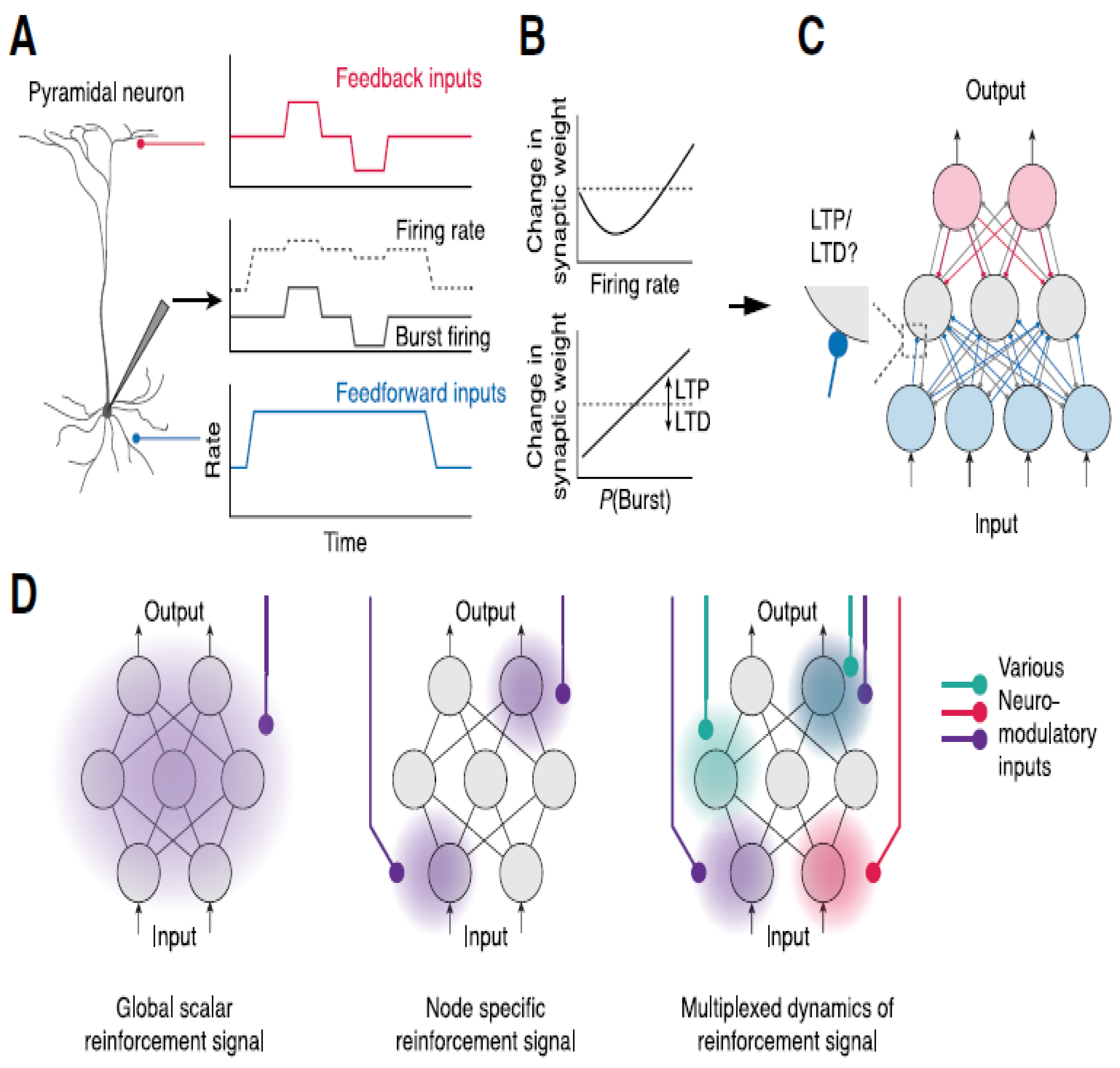

The very definition of intelligence is at the heart of the human-AI debate. The human brain, a product of millions of years of evolution, exhibits remarkable plasticity, emotional depth, and contextual understanding, whereas AI, particularly in its current narrow form, demonstrates superhuman capabilities in specific, data-intensive tasks; see Figure 1 (cf. Cohen et al., 2022).

As shown in Figure 1: (A) The response of a pyramidal neuron to an input depends on where that input arrives on the dendrite. Inputs arriving close to the soma directly affect the neuron’s firing rate, while feedback inputs arriving on the apical dendrites can influence burst firing (P(Burst)). (B) The firing rates of the presynaptic and postsynaptic neurons, together with P(Burst), play a crucial role in controlling plasticity, including long-term potentiation (LTP) and long-term depression (LTD). (C) The way cortical neurons integrate feedback and feedforward inputs may help address the credit assignment problem in hierarchical artificial neural networks (ANNs). (D) A diagram illustrating how neuromodulation can be incorporated into ANNs. Left: an error signal from a network perturbation is transmitted through a global neuromodulatory effect. Middle: error signals are conveyed via node-specific neuromodulatory inputs. Right: different neuromodulatory inputs could signal different error functions.
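To make the idea in panel B concrete, the following is a minimal toy sketch of a plasticity rule in which the sign of the weight change depends on burst probability. The function name `burst_dependent_update`, the baseline `p_burst_baseline`, the learning rate `lr`, and the multiplicative form of the rule are all illustrative assumptions; they are not taken from Cohen et al. or from this paper.

```python
def burst_dependent_update(weight, pre_rate, p_burst, p_burst_baseline=0.2, lr=0.01):
    """Toy rule (an assumption, not a published model).

    The weight change scales with presynaptic activity and with the signed
    deviation of the postsynaptic burst probability from a baseline:
    above baseline -> potentiation (LTP-like), below -> depression (LTD-like).
    """
    delta = lr * pre_rate * (p_burst - p_burst_baseline)
    return weight + delta


w = 0.5
w_ltp = burst_dependent_update(w, pre_rate=10.0, p_burst=0.6)    # bursts above baseline
w_ltd = burst_dependent_update(w, pre_rate=10.0, p_burst=0.05)   # bursts below baseline
w_silent = burst_dependent_update(w, pre_rate=0.0, p_burst=0.6)  # no presynaptic activity

print(w_ltp > w, w_ltd < w, w_silent == w)
```

In this sketch a feedback signal that raises P(Burst) acts as a local teaching signal, which is one way to read the link between panels B and C.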

The traditional yardstick of intelligence, often measured by logical reasoning and problem-solving, is being continuously challenged by the advent of machines that can master complex games and perform intricate calculations at speeds unattainable by the human mind (Schrittwieser et al., 2020). This paper explores this evolving understanding of intelligence, moving beyond a simplistic human-versus-machine binary to a more nuanced appreciation of the complementary strengths of human and artificial intelligence.

2. The Landscape of Intelligence: A Tale of Two Processors

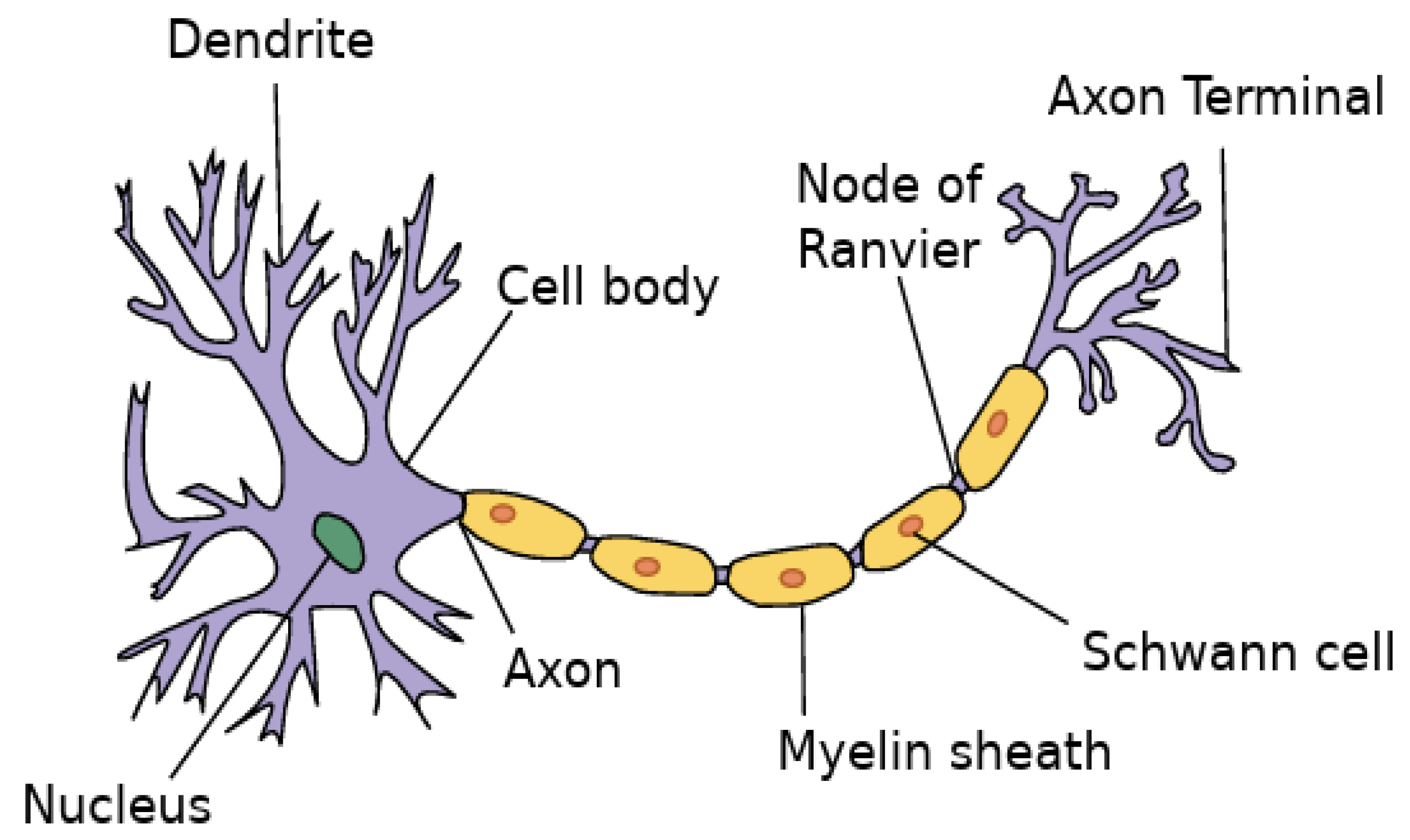

The human brain and artificial intelligence systems process data in quite distinct ways. The brain’s architecture is a highly parallel, distributed network of neurons that supports associative, pattern-based reasoning (Tian et al., 2023). This biological foundation enables intuitive leaps, innovative problem-solving (Mageed & Nazir, 2024; Mageed, 2024a; Mageed, 2024b; Mageed, 2024c; Mageed, 2024d; Mageed, 2025a; Mageed, 2025b; Mageed et al., 2024a; Mageed et al., 2024b; Mageed et al., 2024c), and a remarkable capacity to learn from sparse and incomplete data. Modern AI, by contrast, is mostly powered by deep learning and excels at pattern recognition in massive datasets; see Figure 2 and Figure 3 (cf. Zhang et al., 2023).

Although artificial intelligence can identify statistical relationships with remarkable precision, comprehending the “why” behind the data remains a major obstacle: these systems frequently lack the causal reasoning and common-sense understanding that define human cognition (Bareinboim et al., 2022; Thompson et al., 2020; Mageed & Nazir, 2024; Mageed, 2024a; Mageed, 2024b; Mageed, 2024c; Mageed, 2024d; Mageed, 2025a; Mageed, 2025b; Mageed et al., 2024a; Mageed et al., 2024b; Mageed et al., 2024c).
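The gap between finding a relationship and understanding its cause can be illustrated with a small simulation. The sketch below is a toy example under stated assumptions: the variables `x`, `y`, and the hidden common cause `z`, together with all probabilities, are invented for illustration and do not come from the cited works. It contrasts the observed association between `x` and `y` with the effect of intervening on `x`.

```python
import random


def simulate(n=20000, intervene=None, seed=0):
    """Draw (x, y) pairs; y depends only on a hidden cause z, never on x."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                      # hidden common cause
        if intervene is None:
            x = rng.random() < (0.9 if z else 0.1)  # observational: x follows z
        else:
            x = intervene                           # intervention: x is set by hand
        y = rng.random() < (0.8 if z else 0.2)      # y follows z only
        rows.append((x, y))
    return rows


def p_y_given_x(rows, x_value):
    """Empirical probability that y is true among rows with x == x_value."""
    ys = [y for x, y in rows if x == x_value]
    return sum(ys) / len(ys)


obs = simulate()
obs_gap = p_y_given_x(obs, True) - p_y_given_x(obs, False)

do_gap = (p_y_given_x(simulate(intervene=True, seed=1), True)
          - p_y_given_x(simulate(intervene=False, seed=2), False))

print(f"observational gap: {obs_gap:.2f}")
print(f"interventional gap: {do_gap:.2f}")
```

A pattern learner that sees only the observational data would find `x` highly predictive of `y`, yet forcing `x` to a value leaves `y` essentially unchanged, because the association is produced entirely by `z`.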

3. The Enigma of Consciousness: The “Hard Problem” and the Machine

A pivotal, and perhaps the most profound, distinction lies in the realm of subjective experience, or consciousness. As Del Pin et al. (2021) discuss, the “hard problem” of consciousness, the question of why and how we have subjective experiences, remains unsolved. While AI can simulate emotions and even generate seemingly introspective text, there is no evidence to suggest that these systems possess genuine phenomenal consciousness (Seth, 2024). The information closure theory proposed by Chang et al. (2020) offers a framework for quantifying consciousness, and current AI systems are far from satisfying its criteria. Feinberg and Mallatt (2025) further illuminate the neural correlates of consciousness in the human brain, highlighting the intricate biological mechanisms that give rise to our inner world, a complexity yet to be replicated in silicon.

4. Open Challenges on the Path to Artificial General Intelligence (AGI)

Creating Artificial General Intelligence (AGI), an artificial intelligence capable of comprehending, learning, and applying its intelligence to a wide range of problems much as a human being does, is fraught with difficulties. Emphasizing the need for systems that integrate many cognitive abilities, Summerfield (2023) describes the complex nature of this project. One major problem is the brittleness of present-day artificial intelligence: systems trained on one task frequently fail dramatically when confronted with a slightly different one (Mageed & Nazir, 2024; Mageed, 2024a; Mageed, 2024b; Mageed, 2024c; Mageed, 2024d; Mageed, 2025a; Mageed, 2025b; Mageed et al., 2024a; Mageed et al., 2024b; Mageed et al., 2024c). Creating robust and ethically aligned artificial intelligence presents another major difficulty, one that Mastrogiorgio and Palumbo (2025) raise in their examination of the possible existential risks of superintelligence. Wang et al. (2021) discuss an approach of “intelligence without representation” that stresses embodied cognition and real-world engagement, offering a possible but difficult way forward.
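The brittleness described above can be sketched with a deliberately simple classifier. Everything in the example is an illustrative assumption rather than a result from the cited works: the one-dimensional data, the class means, the `offset` used to model a shifted task, and the midpoint-threshold classifier are hypothetical choices made to keep the code short.

```python
import random


def sample(n, offset=0.0, seed=0):
    """Two classes in 1-D: class 0 around 0, class 1 around 3, plus an optional shift."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randrange(2)
        x = rng.gauss(3.0 * label, 1.0) + offset
        data.append((x, label))
    return data


def fit_threshold(data):
    """Place the decision threshold midway between the two class means."""
    means = []
    for c in (0, 1):
        xs = [x for x, y in data if y == c]
        means.append(sum(xs) / len(xs))
    return (means[0] + means[1]) / 2.0


def accuracy(data, threshold):
    """Fraction of points whose side of the threshold matches their label."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)


threshold = fit_threshold(sample(5000, seed=0))
acc_in = accuracy(sample(5000, seed=1), threshold)                # same distribution
acc_shift = accuracy(sample(5000, offset=2.0, seed=2), threshold)  # shifted inputs

print(f"in-distribution accuracy: {acc_in:.2f}")
print(f"shifted accuracy:         {acc_shift:.2f}")
```

The classifier is accurate on data resembling its training set but degrades sharply once every input is shifted by a constant, even though the underlying task of separating the two classes is unchanged.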

5. Prospects: A Symphony of Collaboration

Rather than treating the expansion of artificial intelligence as a zero-sum game, the most hopeful future lies in the synergy of human-AI cooperation. The distinct talents of artificial and human intelligence are complementary rather than mutually exclusive. AI can be a powerful instrument for enhancing human intelligence by processing enormous volumes of data and finding patterns that may escape human perception (Kim & Bassett, 2023). This collaboration can help to speed up scientific research, improve medical diagnoses, and inspire new kinds of creative expression. Going forward, the focus must shift from a narrative of competition to one of co-evolution, in which the distinct skills of both human and artificial minds are used to meet the difficult problems confronting humanity.

6. Conclusion: A Dialectic in Motion

The debate over whether the human brain or artificial intelligence is “smarter” is, in many respects, a bit misguided. It assumes there’s a single definition of intelligence, which overlooks the incredible variety of cognitive skills we possess. The human brain, with its ability to think, create, and understand complex ideas, is truly a wonder of nature. On the other hand, artificial intelligence brings its own strengths, showcasing immense processing power and analytical skills. The real goal isn’t to build a machine that can perfectly mimic human thought, but rather to cultivate a partnership with our intelligent creations. This collaboration could lead us into a new age of remarkable intellectual discovery and achievement. The path to understanding and utilizing intelligence in all its forms is an ongoing journey filled with dialogue and exploration.

References

- Bareinboim, E., Correa, J.D., Ibeling, D. and Icard, T., 2022. On Pearl’s hierarchy and the foundations of causal inference. In Probabilistic and Causal Inference: The Works of Judea Pearl (pp. 507-556).

- Chang, A.Y., Biehl, M., Yu, Y. and Kanai, R., 2020. Information closure theory of consciousness. Frontiers in Psychology, 11, p.1504.

- Cohen, Y., Engel, T.A., Langdon, C., Lindsay, G.W., Ott, T., Peters, M.A., Shine, J.M., Breton-Provencher, V. and Ramaswamy, S., 2022. Recent advances at the interface of neuroscience and artificial neural networks. Journal of Neuroscience, 42(45), pp.8514-8523.

- Del Pin, S.H., Skóra, Z., Sandberg, K., Overgaard, M. and Wierzchoń, M., 2021. Comparing theories of consciousness: why it matters and how to do it. Neuroscience of Consciousness, 2021(2), p.niab019.

- Feinberg, T.E. and Mallatt, J.M., 2025. Consciousness demystified. MIT Press.

- Kim, J.Z. and Bassett, D.S., 2023. A neural machine code and programming framework for the reservoir computer. Nature Machine Intelligence, 5(6), pp.622-630.

- Mageed, I.A. and Nazir, A.R., 2024. AI-Generated Abstract Expressionism Inspiring Creativity through Ismail A Mageed’s Internal Monologues in Poetic Form. Annals of Process Engineering and Management, 1(1), pp.33-85.

- Mageed, I.A., 2024a. Entropic Artificial Intelligence and Knowledge Transfer. Adv Mach Lear Art Inte, 5(2), pp.01-08.

- Mageed, I.A., 2024b. Ismail’s Threshold Theory to Master Perplexity AI. Management Analytics and Social Insights, 1(2), pp.223-234.

- Mageed, I.A., 2024c. On the Rényi Entropy Functional, Tsallis Distributions and Lévy Stable Distributions with Entropic Applications to Machine Learning. Soft Computing Fusion with Applications, 1(2), pp.87-98.

- Mageed, I.A., 2024d. Information Data Length Theory of Human Emotions, How, What and Why. Int J Med Net, 2(4), pp.01-09.

- Mageed, I.A., 2025a. Surpassing Beyond Boundaries: Open Mathematical Challenges in AI-Driven Robot Control. MDPI Preprints.

- Mageed, I.A., 2025b. The hidden mathematics to treat cancer… innovative mathematics to unlock life mysteries. Computational Algorithms and Numerical Dimensions, 4(2), pp.106-144.

- Mageed, I.A., Bhat, A.H. and Alja’am, J., 2024a. Shallow Learning vs. Deep Learning in Social Applications. In Shallow Learning vs. Deep Learning: A Practical Guide for Machine Learning Solutions (pp. 93-114). Cham: Springer Nature Switzerland.

- Mageed, I.A., Bhat, A.H. and Edalatpanah, S.A., 2024b. Shallow Learning vs. Deep Learning in Finance, Marketing, and e-Commerce. In Shallow Learning vs. Deep Learning: A Practical Guide for Machine Learning Solutions (pp. 77-91). Cham: Springer Nature Switzerland.

- Mageed, I.A., Bhat, A.H. and Rehman, H.U., 2024c. Shallow Learning vs. Deep Learning in Anomaly Detection Applications. In Shallow Learning vs. Deep Learning: A Practical Guide for Machine Learning Solutions (pp. 157-177). Cham: Springer Nature Switzerland.

- Mastrogiorgio, A. and Palumbo, R., 2025. Superintelligence, heuristics and embodied threats. Mind & Society, pp.1-15.

- Schrittwieser, J., Antonoglou, I., Hubert, T., Simonyan, K., Sifre, L., Schmitt, S., Guez, A., Lockhart, E., Hassabis, D., Graepel, T. and Lillicrap, T., 2020. Mastering Atari, Go, chess and shogi by planning with a learned model. Nature, 588(7839), pp.604-609.

- Seth, A.K., 2024. Conscious artificial intelligence and biological naturalism. Behavioral and Brain Sciences, pp.1-42.

- Summerfield, C., 2023. Natural General Intelligence: How understanding the brain can help us build AI. Oxford University Press.

- Thompson, N.C., Greenewald, K., Lee, K. and Manso, G.F., 2020. The computational limits of deep learning. arXiv preprint arXiv:2007.05558.

- Tian, S., Li, W., Ning, X., Ran, H., Qin, H. and Tiwari, P., 2023. Continuous transfer of neural network representational similarity for incremental learning. Neurocomputing, 545, p.126300.

- Wang, J., Chen, W., Xiao, X., Xu, Y., Li, C., Jia, X. and Meng, M.Q.H., 2021. A survey of the development of biomimetic intelligence and robotics. Biomimetic Intelligence and Robotics, 1, p.100001.

- Zhang, A., Lipton, Z.C., Li, M. and Smola, A.J., 2023. Dive into deep learning. Cambridge University Press.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).