1. Introduction

The link between energy and information is a priori confusing because of the great influence of Descartes' philosophy on modern science and of the object/subject duality (or body/mind duality) that he explicitly introduced [1,2]. On the one hand, energy is one of the most fundamental concepts in physics, and the physical world is usually viewed as an object, that is to say something that is observed. On the other hand, information is “the imparting of knowledge in general” (Oxford Dictionary), and knowledge refers to the human as a subject, that is to say a being that thinks and observes, in brief an observer. The observed/observer relation is clear, but it becomes disturbing when we say that information is energy: the roles become confused.

Quite generally, a scientific theory is viewed as a consistent set of concepts accounting for objective reality (that which we can know) [3,4,5,6]. Everything should be clear. Except that science does not deal with the essence of things, so that the most fundamental concepts have no definition (this is the case of energy). And except that science also does not deal with the existence of things, so that what reality means is actually a matter of personal viewpoint, which has been debated from ancient Greece to the present day.

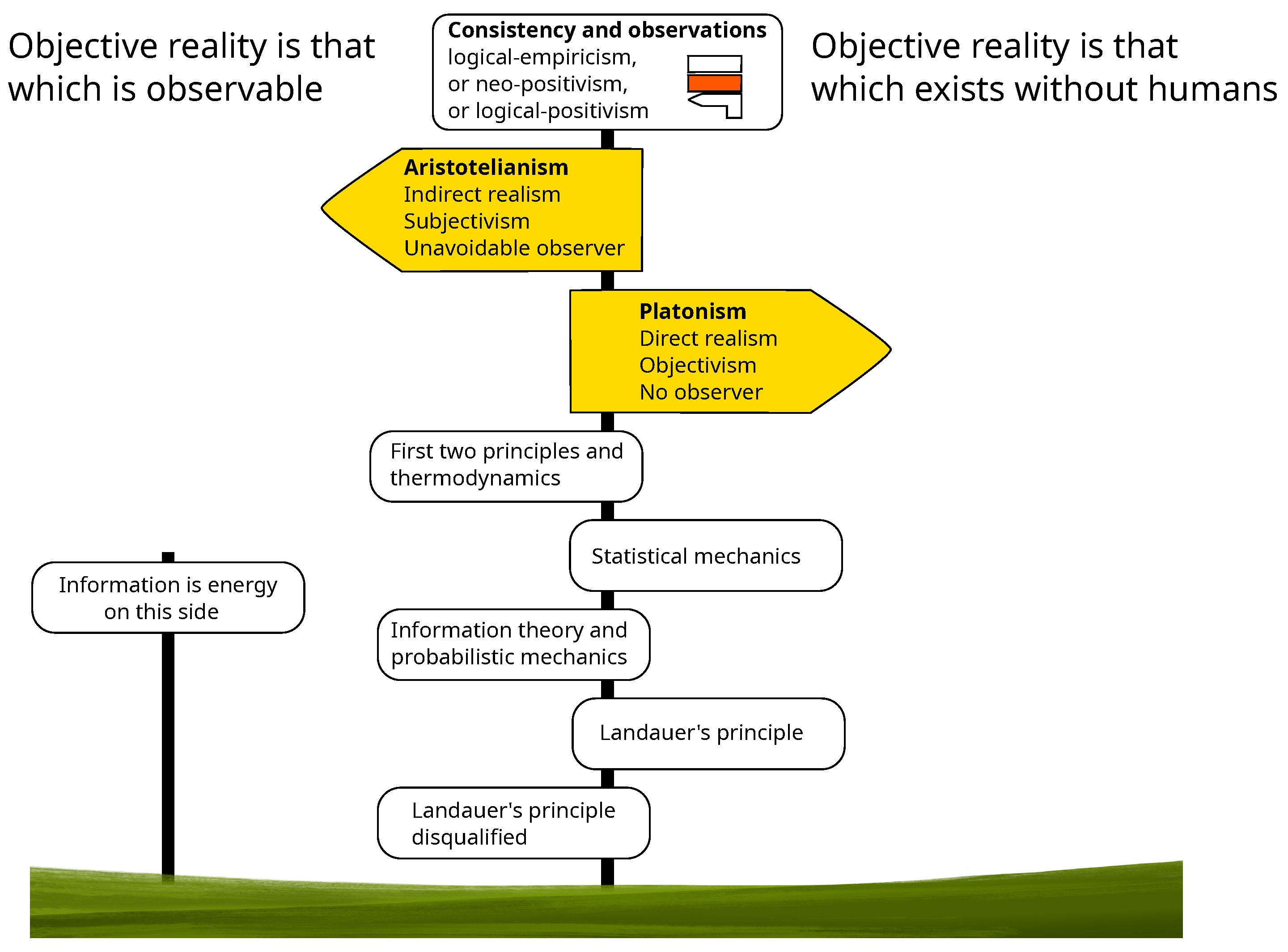

Broadly speaking, the different points of view fall into two categories, which can be symbolized by that of Plato and that of Aristotle:

Plato: we can know through reason what exists independently of humans. This intelligible world, which exists without any observer, is the objective reality with which science deals.

Aristotle: what we can know is only the part of the world with which we interact, that is, the part that is observable. This sensible world common to all is the objective reality with which science deals.

The object/subject duality has its roots in this very old debate. Plato’s intelligible world is objective by definition. The way in which the sensible world becomes objective is less direct. In this latter case, “objective” means “non-personal”, that is, “independent of the person of the observer”. In other words, an observation must be reproducible by anyone with the same means and the same information. The objectification of the sensible world therefore necessarily involves the concept of information.

Both points of view are defensible, and this article will certainly not close the debate. Nowadays mathematics (and hence logic) are usually viewed as purely Platonic, whereas the natural sciences are usually perceived as Aristotelian because observations and experiments are the ultimate judges of truth: “all knowledge about reality begins with experience and terminates in it” (A. Einstein [5]). However, even the natural sciences use mathematics, are subject to the imperative of logical consistency, and thus embed a Platonic element. Logical empiricism (also called neo-positivism or logical positivism), which recognizes the two contributions, logic and observation, as the only sources of knowledge, should therefore be, it seems to me, fairly consensual. In any case, whatever one’s personal opinion on this debate, classical logic, and more particularly its law of non-contradiction, is the only and ultimate common ground on which everyone agrees; provided that this logic is preserved, neither option is more scientific than the other.

The aim of this paper is to show how, despite what we have just said, on the question of energy and thermodynamics the role of the observer and of his knowledge is regularly denied, the only implicit argument being that of a pure Platonism. This comes at the cost of many inconsistencies, which are regularly resolved by reintroducing the observer into the problem. Subject to these two alternating forces, the position of the observer has oscillated from the origin of the concept of energy to the present day (Figure 1). The purpose of this article is to retrace this chronicle. It is divided into five sections that follow the chronology: thermodynamics and its first two principles, statistical mechanics, Shannon’s information theory, the Landauer principle and its derivatives, and finally the invalidation of the latter.

2. Thermodynamics and the First Two Principles

The concept of energy comes from thermodynamics, which is probably the most emblematic example of a phenomenological theory. Everything starts from observations and experiments, from which a consistent set of general laws is derived by induction [7,8]. But thermodynamics is and remains phenomenological. That is to say, the concepts used in thermodynamics are highly dependent on observations and on the prior information needed for those observations: what to look at, where, and with which instruments.

The famous quote “The idea of dissipation of energy depends on the extent of our knowledge” (J. C. Maxwell [9]) clearly refers to the second principle and to entropy (dissipation), and puts the observer in a privileged position. But this position was actually already established by the first principle. Thermodynamics as a whole thus distances physics from a purely Platonic conception of objective reality.

2.1. First Principle: Energy, Changes and Equilibrium

Before Joule, we knew about heat, which is what is necessary to raise the temperature of a body, and we knew about mechanical work, which is what is necessary to set it in motion. But anyone who has ever warmed their hands by rubbing them together knows that mechanical work can turn into heat. Joule [10] showed that the quantity of heat so produced is always proportional to the quantity of mechanical work provided, so that, by choosing a suitable common unit for both, these two quantities are equal. A new physical quantity was born: energy. It was not discovered, but invented.

However, “it is important to realize that in physics today, we have no knowledge of what energy is” (R. Feynman [11]). Energy is a pure abstraction. There is no such thing as an “energy particle” (an object) that could exist independently of us and independently of our thinking. If energy is considered as belonging to objective reality, this reality is not that of Plato.

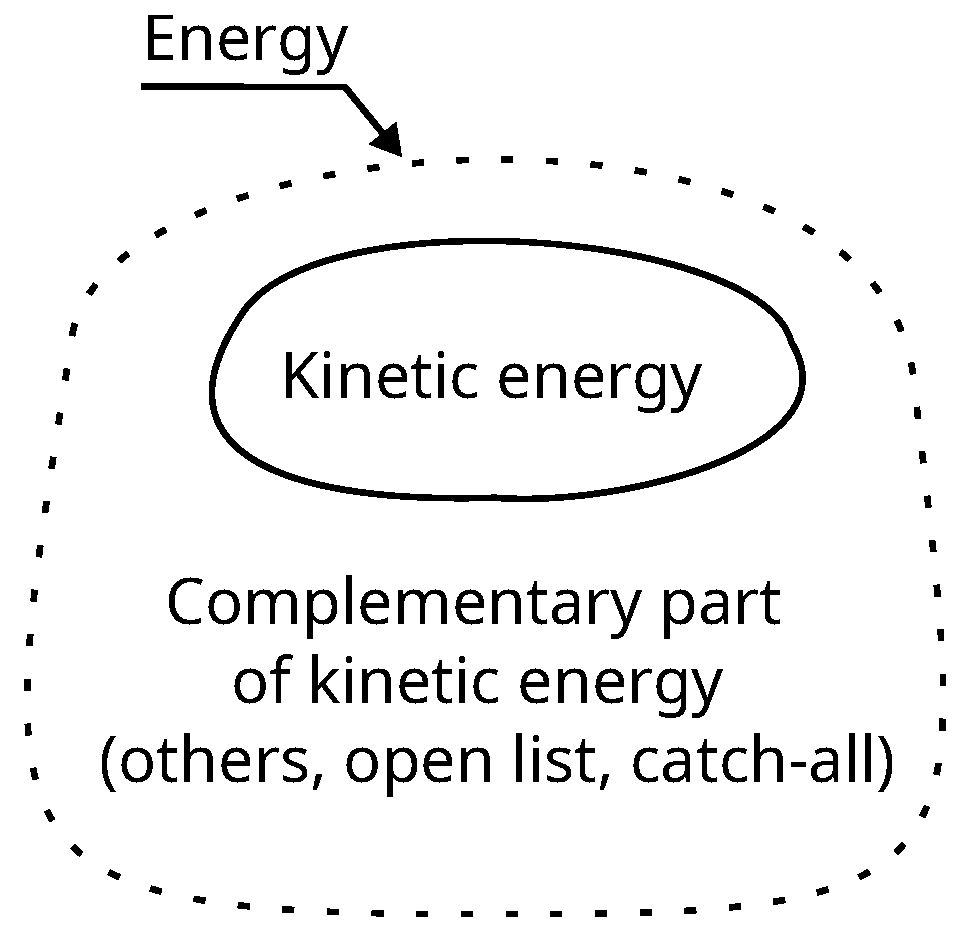

Energy is only “defined” by a principle of conservation: the first principle. But this is not a definition that would allow us, for example, to recognize energy with certainty when we see it. In classical mechanics, we have kinetic energy and potential energy, the latter being “potential” in the sense that it is potentially kinetic energy. In special relativity, we have energy of motion (kinetic energy) and rest energy (rest mass), the latter being here again defined by opposition to the former. In thermodynamics, energy is transferred in the form of heat or in the form of work (anything that is not heat), and energy is stored in the form of thermal energy (identified later as corresponding to the average kinetic energy of particles) or in the form of anything that is not thermal energy. Clearly, the only thing we can recognize with certainty is kinetic energy. The other forms of energy are “defined” as not being kinetic energy; they are defined as a sort of complement of the latter. But it is a complement with respect to a whole that is not closed and always remains open to the discovery of new forms of energy. If we discover that a phenomenon produces a non-zero energy balance, it means that a new form of energy has been discovered. Since the birth of this concept, this is how it has always worked, and there is actually no logically consistent alternative. This is a key point. The principle of conservation is inseparable from leaving open the list of what is considered to be energy. Closing this list would amount to considering new discoveries impossible. But by definition, we do not know the future of our knowledge, so new discoveries cannot be excluded. Hence closing the list would be inconsistent.

In the absence of anything better, energy is “defined” as the thing that is conserved. But as it stands, this operational definition is incomplete, because the idea of conservation only makes sense in relation to change. Energy is thus the thing that is conserved when everything else changes. This is in line with Planck’s idea of defining the energy of a body “as the faculty to produce external effects” ([8], §56), since “effects” are only evaluated through changes (for a very interesting paper on the link between energy and changes, see [12]).

The concept of energy is therefore inseparable from that of change, which is itself inseparable from that of time. This brings us to the definition of equilibrium in thermodynamics, which is conceived as the stationary state in which no change occurs. One point, important for what follows, deserves emphasis. In phenomenological thermodynamics there are no fluctuations in the theory: nothing spontaneously takes the system out of its equilibrium, which therefore does not need to be restored. Equilibrium does not need to be an attractor for the theory to be consistent. Equilibrium is the optimum of nothing at all. The notion of an optimum only appeared with the advent of statistical mechanics.

Figure 2.

Principle of conservation: energy is the thing that is conserved when everything else changes. If a phenomenon produces a non-zero energy balance, it means that a new form of energy has been discovered. We know kinetic energy; the other forms make up a list that remains open to new discoveries.


2.2. Second Principle: Asymmetry of Changes, Irreversibility and Clausius Entropy

While mechanical work can be completely changed into heat, the reverse is always incomplete [8]. In terms of the energy involved, a change of a system in a given direction, say from state A to state B, is never the mirror image of the reverse change that brings the system from B to A: the system always supplies more heat to the surroundings on the way there than the surroundings supply to the system on the way back. Regardless of the changes that occur during a cycle, part of the energy is always dissipated as heat transferred to the surroundings:

$$Q_{AA} = -Q_{\mathrm{d}} \leq 0 \qquad (1)$$

where the subscript AA designates the cycle $A \to B \to A$, the sign of $Q_{AA}$ is relative to the system (heat received by the system counts as positive), and $Q_{\mathrm{d}} \geq 0$ is the quantity of heat dissipated. In Equation (1), the equality sign holds for ideal reversible processes, which strictly speaking never exist in reality. For real systems, the heat dissipated over a complete cycle is always strictly positive, but tends towards zero as the rate of evolution also tends towards zero. These quasi-static processes, although rigorously speaking irreversible, are called reversible for convenience of language. By contrast, there exist free processes, which run out of control and whose rate of evolution cannot be driven, so that the heat dissipated remains finite. These are the ones we call irreversible.

This inevitable heat dissipation is accounted for by the second law of thermodynamics, which introduces a new state function S, named entropy [13] (referred to as Clausius entropy hereafter), such that, whatever the change of state that a system undergoes at constant thermal energy T, we always have:

$$\Delta S \geq -\frac{Q_{\mathrm{s}}}{T} \qquad (2)$$

where $Q_{\mathrm{s}}$ is the quantity of heat given by the system and received by the surroundings. Equation (2) is the Clausius inequality.

The equality sign in Equation (2) holds for an ideal reversible change of state (whose path, expressed by a differential form, can be followed infinitely slowly in a quasi-static manner) and serves as a definition of entropy in thermodynamics:

$$\mathrm{d}S = -\frac{\delta Q_{\mathrm{s}}^{\mathrm{rev}}}{T}$$

The inequality sign in Equation (2) is the general case. At constant internal energy, the sum of the heat $Q = -Q_{\mathrm{s}}$ and the work $W$ received by the system is zero, $Q + W = 0$, and Equation (2) can be rewritten as

$$W \geq -T\,\Delta S$$

where W is the work required to change the state of the system, for instance toward a state of lower entropy. In other words (and this will be useful in what follows), any decrease in the entropy of the system ($\Delta S < 0$) requires a minimum work $W_{\min} = -T\,\Delta S > 0$ to be provided by the surroundings.

2.3. The Role of the Observer

Thermodynamics is phenomenological, so that by definition it places the observer in a key position. Thermodynamics deals only with phenomena. This means that thermodynamics deals only with observable changes, but also that in thermodynamics there is no change that is not observable. The objective reality of thermodynamics is Aristotelian.

The consequence, which may seem a truism, is that in thermodynamics, whether two states are different or not depends on the ability to distinguish changes and differences. For example, it depends on the resolution of our instruments, on the detection threshold for impurities, etc. It also depends on our current knowledge: for example, there was no change in isotopic composition before the discovery of isotopes [14]. When two states are perceived as identical, no work is necessary to move the system from one to the other, because there is only one. Let us take two examples.

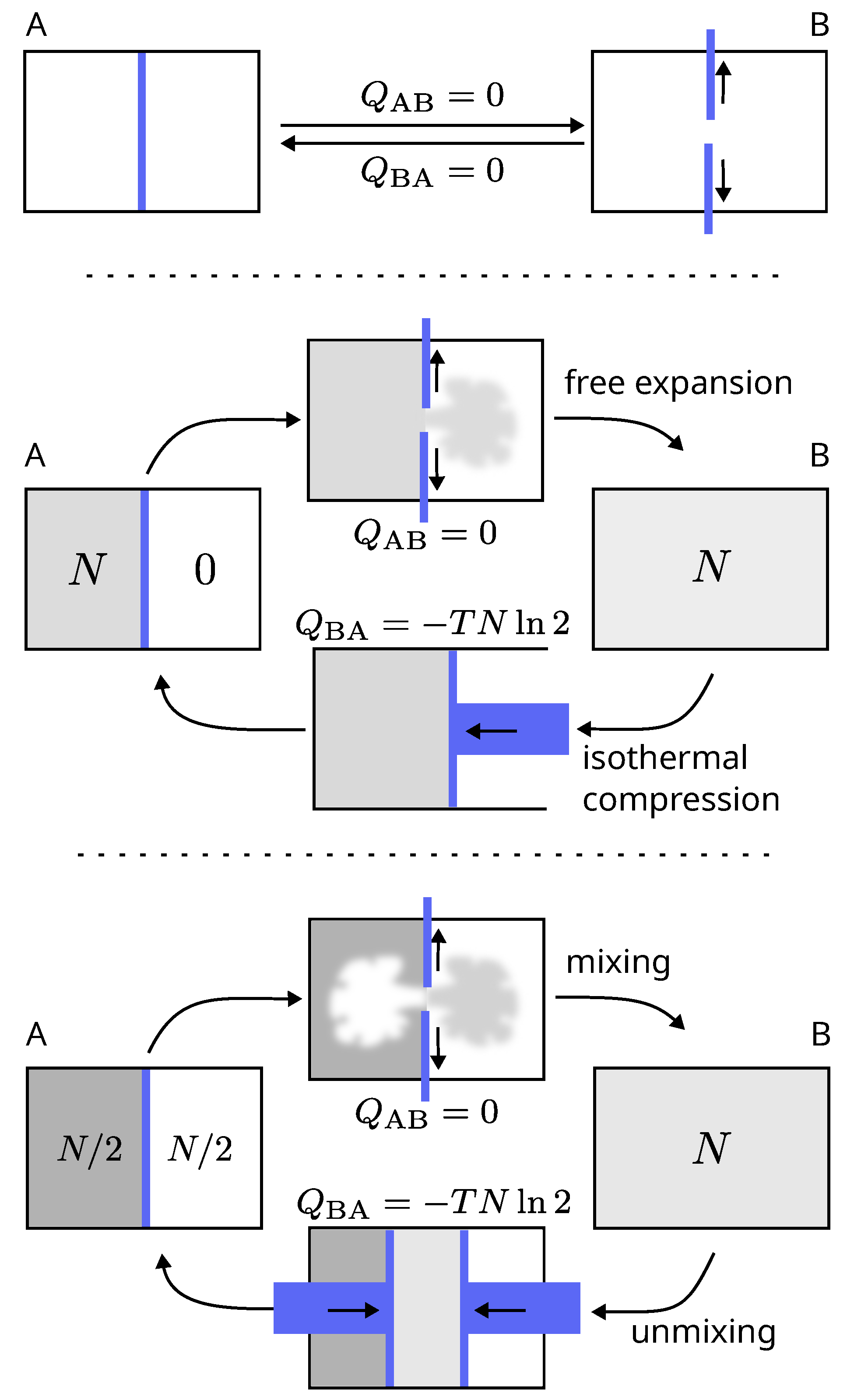

2.3.1. Free Expansion

Consider a container equipped with a wall that divides it in two and can be removed and replaced without friction (Figure 3). Let us call A the initial state with the partition. Remove the partition (state B), then replace it and compare this final state A’ to state A. Any difference between states A and A’ depends on what we know about these states and on what we are able to measure. Let us assume that we are able to measure the energy exchanges between the container and the surroundings (heat or work), but that in this case we did not measure anything:

1. If the information we have is limited to what we have just said, then states A and A’ are identical. No further operation or additional work is required to return to the initial state, and a thermodynamic cycle is closed (Figure 3, top).

2. If we have the additional information that initially, in state A, the left side of the container was filled with N particles of gas while the right side was empty (this assumes that we have at our disposal a detector capable of quantifying the amount of gas), then A and A’ differ. Additional mechanical work is necessary to compress the gas by a factor of 2 so that it returns into its initial compartment and the system is restored to state A. At constant temperature, this work dissipates at least $N k_{\mathrm{B}} T \ln 2$ of heat into the surroundings (Figure 3, middle).
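The heat dissipated in case 2 can be checked numerically. The short Python sketch below assumes an ideal gas ($pV = N k_{\mathrm{B}} T$) compressed isothermally and quasi-statically from 2V back to V; it integrates the work done on the gas with a midpoint rule and compares it with $N k_{\mathrm{B}} T \ln 2$ and with $-T\Delta S$, where $\Delta S = -N k_{\mathrm{B}} \ln 2$ is the ideal-gas entropy change for halving the volume. The function name and the values of N, T and V are illustrative choices, not taken from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def isothermal_compression_work(n_particles: float, temperature: float,
                                v_start: float, v_end: float,
                                steps: int = 100_000) -> float:
    """Work (J) done ON an ideal gas compressed quasi-statically at constant temperature.

    Evaluates W = -integral from v_start to v_end of p dV, with p = N k_B T / V,
    using the midpoint rule.
    """
    dv = (v_end - v_start) / steps  # negative for a compression
    integral = 0.0
    for k in range(steps):
        v_mid = v_start + (k + 0.5) * dv
        integral += n_particles * K_B * temperature / v_mid * dv
    return -integral


N = 6.02214076e23  # one mole of particles (illustrative)
T = 300.0          # temperature in K (illustrative)
V = 1.0e-3         # compartment volume in m^3; only the ratio 2V -> V matters

w_numeric = isothermal_compression_work(N, T, 2 * V, V)
w_exact = N * K_B * T * math.log(2)
delta_s = -N * K_B * math.log(2)  # ideal-gas entropy change when the volume is halved

print(f"midpoint-rule work: {w_numeric:.4f} J")
print(f"N k_B T ln 2      : {w_exact:.4f} J")
print(f"-T * delta_S      : {-T * delta_s:.4f} J")
```

Only the volume ratio enters the result, so the choice of V is immaterial; a real, non-quasi-static compression would require more work than this bound.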

The first step of this example, which consists of removing the partition, is the free expansion of a gas. It is not accompanied by any exchange of heat or work: the internal energy is constant and the thermal energy (the temperature) of the gas does not change. So, if these are the only sources of information (first case), nothing has happened and this first step of the cycle is entirely reversible, because we do not know that it was in fact a free expansion. If we have an additional source of information (second case, with a gas detector), then during this first step something has happened that requires additional work to be reversed. The first step (the free expansion) is then thermodynamically irreversible.

The thermodynamic irreversibility of a process depends on our knowledge.

2.3.2. Gibbs Paradox of Mixing

The free expansion of a volume of gas into another is quite similar to the experiment of mixing two volumes of gas. The latter case can be considered as the free expansion of two volumes of gas into each other (Figure 3, top and bottom).

Consider two initial sub-volumes filled with the same quantity of gas particles, at the same pressure and thermal energy, but which can be of the same species or of two different species (e.g., two different isotopes). Remove the partition. Here again, as in the case of free expansion, whether or not additional work is needed to return the system to the initial state, and hence the difference in entropy between the final and initial states, depends on what we know about the initial state. This depends on an additional source of information allowing us to distinguish the two gas species and to decide whether or not they are identical.

This mixing experiment is at the basis of what is known as Gibbs’ paradox, the discontinuity of the entropy of mixing: the entropy of mixing is believed to jump discontinuously from 0 to $2 N k_{\mathrm{B}} \ln 2$ (for N particles in each sub-volume) as the two gases go from identical to dissimilar, whereas other physical quantities vary continuously with their dissimilarity. Gibbs actually considered this a veridical paradox, in the sense that everything is correct (the discontinuity is real) and there is no contradiction [15]. He simply noted this discontinuity and immediately gave us the solution: “It is to states of systems thus incompletely defined that the problems of thermodynamics relate” (J.W. Gibbs [16] p.228).
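For reference, the two values between which the entropy of mixing is believed to jump can be computed from the textbook ideal-mixing formula $\Delta S = -k_{\mathrm{B}} \sum_j N_j \ln x_j$, where $x_j$ is the fraction of particles of species j. In the hypothetical helper below, the boolean `distinguishable` stands in for the observer's ability (or choice) to tell the two species apart; it is an illustrative device, not a quantity defined in the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def ideal_mixing_entropy(counts: list[int], distinguishable: bool) -> float:
    """Ideal entropy of mixing (J/K) for gases at equal T and p, each initially in its own sub-volume.

    Uses dS = -k_B * sum_j N_j ln x_j, with x_j the fraction of species j.
    If the observer does not (or cannot) tell the species apart, there is a single
    species and nothing has mixed: dS = 0.
    """
    if not distinguishable:
        return 0.0
    total = sum(counts)
    return -K_B * sum(n * math.log(n / total) for n in counts if n > 0)


N = 1_000_000  # particles per sub-volume (illustrative)
print(ideal_mixing_entropy([N, N], distinguishable=True) / (2 * N * K_B * math.log(2)))
print(ideal_mixing_entropy([N, N], distinguishable=False))
```

With N particles of each species this gives $2 N k_{\mathrm{B}} \ln 2$ when the species are told apart and 0 otherwise; the formula produces nothing in between, which is precisely the discontinuity at issue.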

2.3.3. Incomplete Information

Filled or empty (free expansion), identical or not (mixing): it seems clear-cut (hence the view of the mixing paradox as veridical), but actually it is not. The paradox is falsidical and there is no discontinuity. In the first experiment (free expansion), the actual work needed to restore the initial state depends on how many gas particles, between 0 and N, must actually be compressed, i.e., on what is considered empty for the other compartment. In the second experiment (mixing), the actual work depends on which degree of separation of the two species is considered satisfactory. In both cases, the minimum actual work to return to the initial state varies continuously from 0 to its maximum value ($N k_{\mathrm{B}} T \ln 2$ for the free expansion, $2 N k_{\mathrm{B}} T \ln 2$ for the mixing), and thus the entropy difference between the two states varies continuously from 0 to $N k_{\mathrm{B}} \ln 2$ or $2 N k_{\mathrm{B}} \ln 2$, respectively [17].
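The continuity claimed for the free expansion can be illustrated with a simple counting model, which is an assumption added here rather than the author's own calculation: an ideal gas of N distinguishable, non-interacting particles at constant T, whose configurational entropy is $k_{\mathrm{B}}$ times the logarithm of the accessible configuration volume. Going from the expanded state (volume 2V available to each particle) to a state in which exactly m particles remain in the right compartment changes the entropy by $\Delta S = k_{\mathrm{B}}\,[\ln\binom{N}{m} - N \ln 2]$, so the minimum work is $W_{\min} = -T\,\Delta S$. The helper name `min_restoring_work` and the values of N and T are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def min_restoring_work(n_total: int, n_right: int, temperature: float) -> float:
    """Minimum isothermal work (J) to go from the expanded state to a state where
    exactly `n_right` of the `n_total` particles remain in the right compartment.

    Hypothetical counting model: ideal gas of distinguishable particles, momenta
    unchanged at constant T, dS = k_B * (ln C(N, m) - N ln 2), W_min = -T * dS.
    """
    ln_binom = (math.lgamma(n_total + 1) - math.lgamma(n_right + 1)
                - math.lgamma(n_total - n_right + 1))  # ln C(N, m) without overflow
    delta_s = K_B * (ln_binom - n_total * math.log(2))
    return -temperature * delta_s


N, T = 1000, 300.0
full = N * K_B * T * math.log(2)  # work for a perfectly empty right compartment
for m in (0, 10, 100, 250, 500):
    print(m, round(min_restoring_work(N, m, T) / full, 4))
```

The printed ratio falls from 1 at m = 0 (a perfectly empty compartment) to a value close to 0 as the tolerated residue m approaches N/2, so the work "required" depends on what the observer agrees to call empty.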

It could be argued that these are special cases in which the observer does not have full information. Full information does not depend on the observer and could be considered as an intrinsic property of the system that belongs to a Platonic objective reality. But this does not hold logically, because it is impossible, at any moment, to be certain that one has all the information. The total information is and will always remain unknown, and that which we have will always remain incomplete.

2.3.4. The Observer’s Choice

The observer is subject to the best available instrumental resolution and to our current common knowledge of the physical world, neither of which is under his control. But there are other examples where his role is more active and the observer decides for himself which information deserves attention.

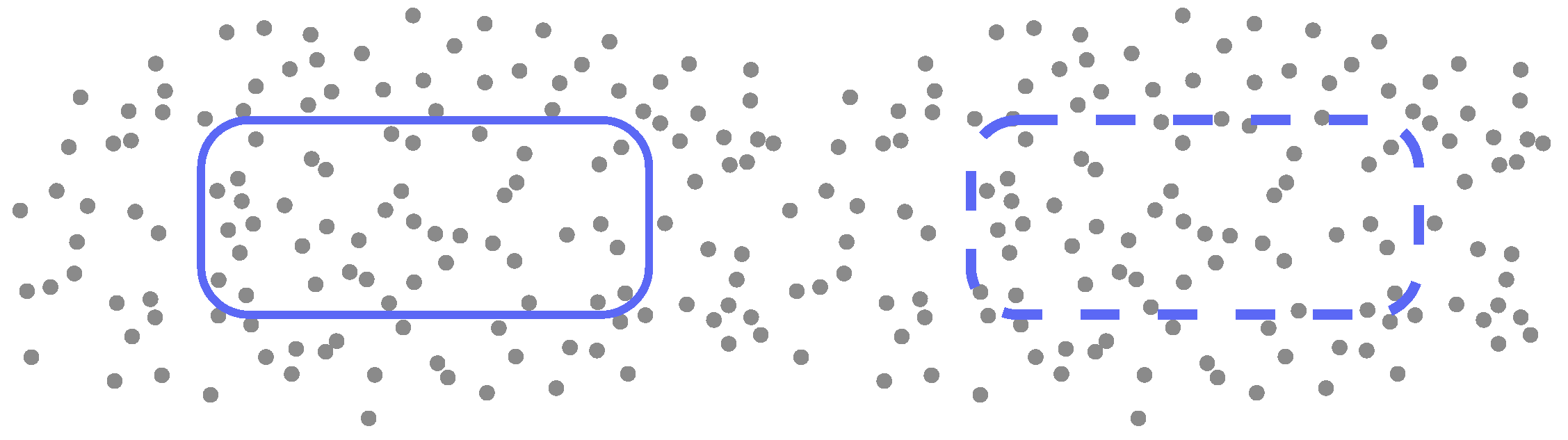

Consider a sub-volume of gas within a larger reservoir filled with the same gas species made of distinguishable particles (Figure 4). If the sub-volume is closed, the particles constituting the system are fixed, so that their identity is information which is not necessarily interesting but which undoubtedly contributes to the total information allowing the system to be described.

On the contrary, if the sub-volume is open, its particles are continually exchanged with the reservoir, so that their identity does not contribute at all to the total information about the system, although strictly speaking this information exists.

This example shows that the observer’s definition of the system determines what information is worth considering. In the thermodynamics of open systems, the identity of the particles is irrelevant, and so are their permutations. The reason is not that the particles are indistinguishable or untraceable (they are always distinguishable or traceable as soon as they are large enough). The reason is that we decided that it was not relevant, given the definition we gave of the system.

Let us quote van Kampen: “The choice between [distinguishable or indistinguishable molecules] depends on whether the experimentalist is able and willing to make the distinction, not on the fundamental properties of the molecules. Thus the [Gibbs] paradox is resolved by replacing the Platonic idea of entropy with an operational definition” [18].

2.4. Information Is Energy: the Proof by Demons

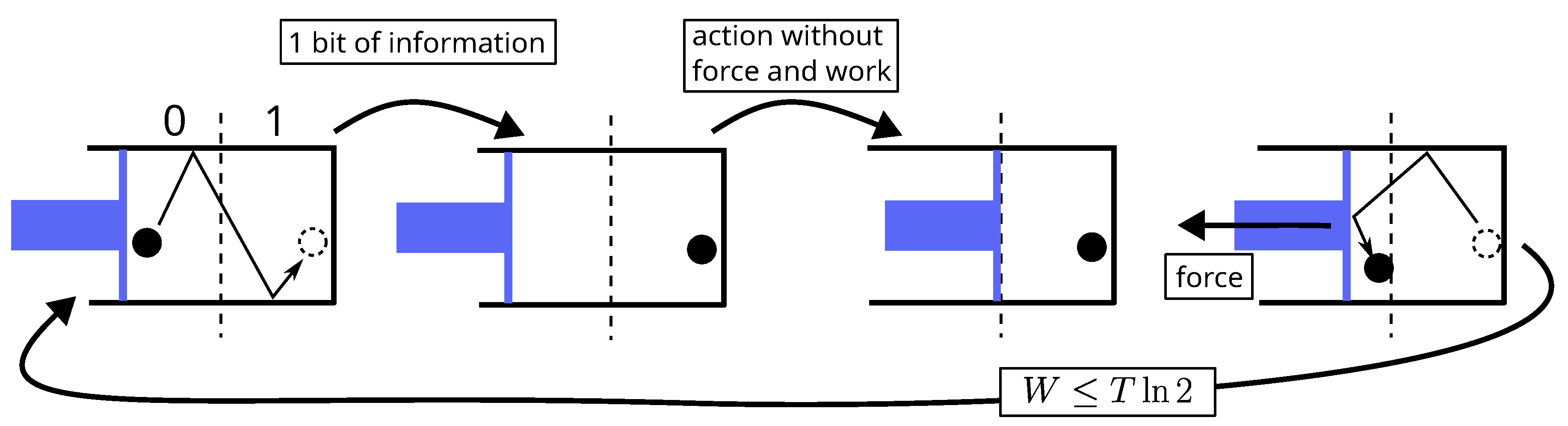

Changes, and therefore the energy needed to bring them about, are not intrinsic to a process or a system. They also depend on the information we have. Does this mean that information is energy? Yes, and this is proved by the intervention of demons.

From Maxwell [7] to Szilard [19], by way of Loschmidt [20], demons observe thermodynamic systems at the scale of particles, acquire information about them, and use this information to produce energy (see Figure 5). Where does this energy come from? It appears to come from nothing but information. So, according to the conservation principle, information must be added to the open list of known forms of energy. This is how the different forms of energy have always been added to the list [11]: chemical energy, electrical energy, magnetic energy, radiant energy, nuclear energy, potential energy, dark energy... There is absolutely no reason to do otherwise for information. Moreover, doing otherwise would lead to inconsistencies.

Most of the time, the action of demons is presented as a very subtle point that would lead us to wrongly believe that the second law is violated (see e.g., [21,22,23,24,25,26,27]). Thanks to even more subtle reasoning, it finally turns out not to be. But in reality, it is not just the second law that would be challenged by demons. It is the first principle, the principle of conservation, that would be ruined if information were not considered energy. Fortunately, this is impossible by construction of this principle (see Section 2.1). The principle of conservation necessarily implies a list of different forms of energy that will remain forever open. This solution to the problem posed by demons is certainly less subtle than others, but it is above all more robust.

3. Statistical Mechanics

Thermodynamics clearly tends towards the Aristotelian conception of objective reality, and this is precisely where the problem lies for Platonists. So, since the advent of atomic theory, attempts have been made to correct this. This is the reductionist approach of statistical mechanics, which aims to derive thermodynamics from atoms, from the mechanics of their collisions and from probabilities, in a way more compatible with a Platonic conception.

This presentation may seem biased, as if presuming intent. But it is not, and the intent is clearly stated from the beginning by Gibbs himself. A motivation for reductionism could be unification with fluid mechanics, or with anything else, but this is not what is claimed here. Instead, Gibbs, who first used the term statistical mechanics in the title of his book [28], subtitled it “the rational foundation of thermodynamics”. Does this mean that Gibbs considered thermodynamics to be irrational? Certainly not. By “rational” he means “Platonic”, that is, more objective and more independent of humans than phenomenology. What is more objective and independent of humans than atoms and Newton’s laws of mechanics?

But, to realize the program of statistical mechanics, there is a first obstacle to overcome: with which probability distribution should we begin the calculations?

3.1. The Pragmatic Approach of the Fundamental Postulate

With atoms comes thermal agitation. Even at equilibrium, thermodynamic systems are dynamical: they are continually changing their microscopic configuration (their phase or microstate), which we have absolutely no way of knowing with certainty, but only in terms of probabilities, provided that the distribution is known. This is the first problem to be solved, starting with the simplest case of an isolated system, which exchanges neither energy nor matter with the surroundings.

The pragmatic approach to this problem is straightforward: “When one does not know anything the answer is simple. One is satisfied with enumerating the possible events and assigning equal probabilities to them.” (R. Balian [29] p.143). This is the fundamental postulate of statistical mechanics, a variation of Laplace’s “principle of insufficient reason” [30] or “principle of indifference” [31]: basically, all microscopic configurations have the same probability because we have no reason (no information) to think otherwise. Probabilities are viewed as “expectations” that depend on the information we have.

However, this solution is felt to be intellectually unsatisfying. Probably the strongest argument against this approach is this: “It cannot be that because we are ignorant of the matter we know something about it.” (R.L. Ellis [32]), which is very difficult to contest (except in the framework of information theory, which will be the subject of the next section). Thus, the alternative solution retained by Platonists is the ergodic hypothesis: probabilities are viewed as frequencies of occurrence of configurations and are intrinsic to the system.

3.2. The Ergodic Hypothesis

Consider, like Gibbs ([28] p.1), a Hamiltonian mechanical system made of N colliding particles (so that $n = 3N$ is the number of degrees of freedom), each of which is fully described by only three coordinates for its position $\mathbf{r}$ and three others for its momentum $\mathbf{p}$. A microscopic configuration, also called a phase or microstate, is thus a point of coordinates $(\mathbf{r}_1, \ldots, \mathbf{r}_N, \mathbf{p}_1, \ldots, \mathbf{p}_N)$, abbreviated $(\mathbf{r}, \mathbf{p})$, in a $6N$-dimensional space, namely the phase space. Even in the macroscopic stationary state of equilibrium, thermal agitation causes the system to continually change phase, that is, to move along a continuous trajectory in phase space. Let us look at some properties of this trajectory.

In a continuum, the probability of a point has no meaning; it must rather concern a given volume around that point and involve a local volume-density of points $\rho(\mathbf{r}, \mathbf{p})$. Up to a normalization factor, this density is a probability density, and once multiplied by the volume it gives the number of phases within this volume. If the system is isolated, its total energy (its Hamiltonian) is strictly constant, and each phase-point of the elementary volume in question must strictly fulfill this constraint. The considered elementary volume therefore actually belongs to the subset $\Gamma$ of the phase space that includes only the phases compatible with the constraint of a given constant energy, that is to say the space of all compatible phases (a $(6N-1)$-dimensional hypersurface of the phase space). $\Gamma$ is the space of possible phases.

Due to the dynamics, following the different trajectories of its phase-points, any elementary volume will move and evolve within $\Gamma$, just like an elementary volume of a fluid in a flow. A strictly constant total energy means no fluctuation, so that the flowing fluid of phase-points is actually incompressible: its density is strictly independent of time and of position on the trajectory.

This is Liouville’s theorem. The probability density of phases throughout a trajectory in $\Gamma$ is uniform. The dynamics is said to be volume-preserving.
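Volume preservation can be checked numerically on a toy Hamiltonian. The sketch below assumes a single pendulum, $H = p^2/2 - \cos q$, integrated with the leapfrog (velocity Verlet) scheme, which is known to be symplectic and therefore area-preserving in the (q, p) plane. It estimates by finite differences the Jacobian determinant of the map from initial to final phase; a determinant of 1 means that an elementary phase-space area keeps its size along the flow. The optional `damping` term is a non-Hamiltonian friction added only for contrast; all names and parameter values are illustrative.

```python
import math


def leapfrog(q: float, p: float, dt: float, steps: int,
             damping: float = 0.0) -> tuple[float, float]:
    """Evolve a pendulum, H = p**2/2 - cos(q), with the leapfrog scheme.

    With damping = 0 each step is symplectic, hence area-preserving in (q, p).
    A nonzero damping multiplies p by (1 - damping*dt) each step (not Hamiltonian).
    """
    for _ in range(steps):
        p -= 0.5 * dt * math.sin(q)  # half kick: dp/dt = -dH/dq = -sin q
        q += dt * p                  # drift:     dq/dt =  dH/dp = p
        p -= 0.5 * dt * math.sin(q)  # half kick
        p *= 1.0 - damping * dt      # optional friction, for contrast only
    return q, p


def jacobian_det(q0: float, p0: float, h: float = 1e-6, **kwargs) -> float:
    """Determinant of d(q, p)_final / d(q, p)_initial, by central finite differences."""
    qa, pa = leapfrog(q0 + h, p0, **kwargs)
    qb, pb = leapfrog(q0 - h, p0, **kwargs)
    qc, pc = leapfrog(q0, p0 + h, **kwargs)
    qd, pd = leapfrog(q0, p0 - h, **kwargs)
    dq_dq0, dp_dq0 = (qa - qb) / (2 * h), (pa - pb) / (2 * h)
    dq_dp0, dp_dp0 = (qc - qd) / (2 * h), (pc - pd) / (2 * h)
    return dq_dq0 * dp_dp0 - dq_dp0 * dp_dq0


print(jacobian_det(1.0, 0.5, dt=0.05, steps=200))               # Hamiltonian: close to 1
print(jacobian_det(1.0, 0.5, dt=0.05, steps=200, damping=0.1))  # with friction: below 1
```

With friction, each step multiplies the determinant by $1 - \gamma\,\mathrm{d}t$ (here $\gamma$ is `damping`), so phase-space areas shrink; Liouville’s theorem rules this out for Hamiltonian dynamics.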

Consider two trajectories. Since the system is deterministic, they cannot intersect; otherwise, at the intersection, the evolution of the system would not be determined. Two trajectories are either disjoint or identical. The ergodic hypothesis is that they are identical. Thus, if we prepare two samples with exactly the same macroscopic constraints (therefore two macroscopically identical samples) but at time $t = 0$ in two supposedly different phases, their trajectories will be the same, except that they will have started at different phase-points. Therefore, this single trajectory encompasses all possible phases of an isolated system. In other words, the set of phase-points of this trajectory and the ensemble of possibilities, namely the microcanonical ensemble, are one and the same. The probability density of phases in the microcanonical ensemble is thus uniform.
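In the simplest possible case the ergodic hypothesis holds trivially: for a one-dimensional harmonic oscillator (here with unit mass and stiffness, an illustrative choice), the energy shell is a single closed curve that one trajectory covers entirely. The sketch below samples one trajectory and measures the fraction of time spent in each angular sector of the shell; a uniform result is what the microcanonical ensemble predicts. This toy case says nothing about many-particle systems, for which ergodicity remains a hypothesis.

```python
import math


def angle_histogram(total_time: float, dt: float, bins: int) -> list[float]:
    """Fraction of time a 1D harmonic oscillator (m = k = 1) spends in each angular
    sector of its energy shell, estimated along a single trajectory sampled every dt."""
    counts = [0] * bins
    samples = int(total_time / dt)
    for k in range(samples):
        t = k * dt
        q, p = math.cos(t), -math.sin(t)           # exact solution, H = (q^2 + p^2) / 2 = 1/2
        angle = math.atan2(p, q) % (2 * math.pi)   # position on the energy shell (a circle)
        counts[min(int(angle / (2 * math.pi) * bins), bins - 1)] += 1
    return [c / samples for c in counts]


fractions = angle_histogram(total_time=1000.0, dt=0.01, bins=10)
print([round(f, 3) for f in fractions])
```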

3.3. The Link with Thermodynamics

Once the probability distribution for an isolated system is established, thanks to the ergodic hypothesis, other cases can be derived with much weaker hypotheses, in particular the canonical case of a closed system in contact with a temperature reservoir [33]. With these distributions, the next step of statistical mechanics is to calculate and express other quantities, particularly the one related to the evolution, or absence of evolution, of a system, namely its entropy. In other words, starting only from the atomic scale and probabilities, the questions are:

1. Is there a way, free from any a priori connection to thermodynamics, to derive a formula for entropy that allows us to define it?

2. Is there a way, free from any a priori connection to thermodynamics, to predict the time evolution of a dynamical system of particles?

3. Is there a way to define equilibrium not only as stationary (as in thermodynamics) but also as the stable state?

These three questions are closely related, and it is important to realize that they are absent from phenomenological thermodynamics and only introduced by statistical mechanics. 1) In phenomenological thermodynamics, there is no need for a formula to calculate entropy, because it is a measurable quantity (it is obtained from the heat exchanged in a reversible change). Measurement makes definition. 2) Phenomenological thermodynamics deals with states, which by definition are static. It deals with changes of state, but not with what happens during the change; only with what the state was before and what it is after. Dynamics and the time variable were introduced by statistical mechanics. 3) In phenomenological thermodynamics, equilibrium is stationary. Once at equilibrium, an isolated system remains there because nothing is supposed to move it away. Statistical mechanics introduces probabilities, and therefore fluctuations, into the theory. The system may spontaneously deviate from equilibrium, so the stationary state must be stabilized by something that must also be introduced into the theory.

In this section, these three questions are detailed with particular attention to the consistency of the answers they receive and to the reality of the emancipation from thermodynamics that they promise.

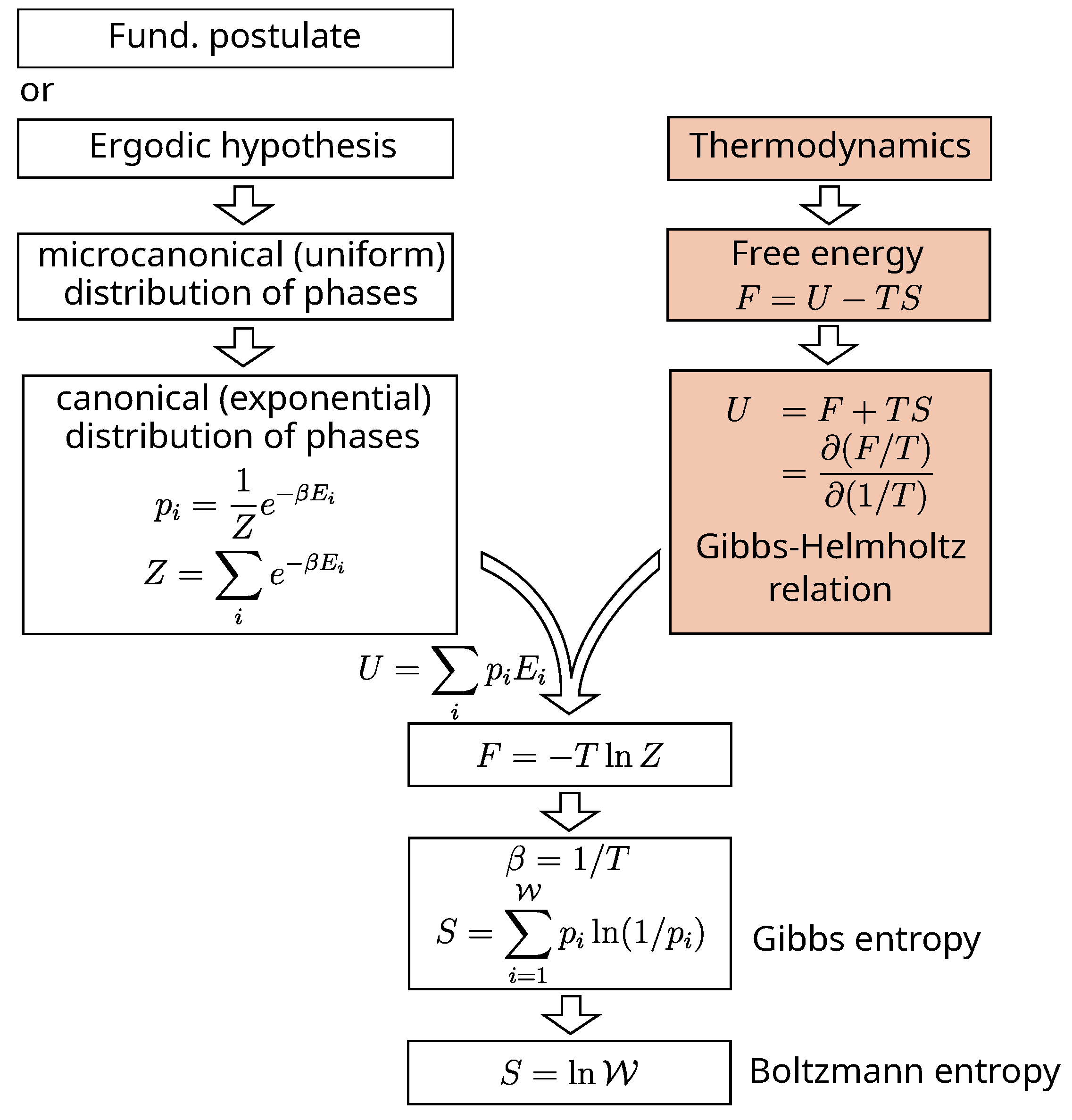

3.3.1. Gibbs and Boltzmann Entropies

Is there a way, free from any a priori connection to thermodynamics, to derive a formula for entropy that allows us to define it? The answer is no. At some point or another, thermodynamics comes into play in the derivation.

Figure 6 outlines one example of such a derivation [33]; other examples can be found in [34,35]. All methods amount to calculating, from the distribution of phases, certain statistical quantities which, by identification with classical equations of thermodynamics, lead to Gibbs’ statistical entropy formula for closed systems:

$$S_{\mathrm{G}} = -k_{\mathrm{B}} \sum_{i=1}^{\Omega} p_i \ln p_i$$

or to that of Boltzmann for isolated systems:

$$S_{\mathrm{B}} = k_{\mathrm{B}} \ln \Omega$$

where $\Omega$ is the cardinality of the discretized space $\Gamma$ of possible phases and $p_i$ the probability of phase $i$.
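The relation between the two formulas can be checked directly: for the uniform distribution $p_i = 1/\Omega$ prescribed by the fundamental postulate, Gibbs’ formula $S_{\mathrm{G}} = -k_{\mathrm{B}} \sum_i p_i \ln p_i$ reduces to Boltzmann’s $S_{\mathrm{B}} = k_{\mathrm{B}} \ln \Omega$, and any non-uniform distribution over the same $\Omega$ phases gives a lower value. The sketch below expresses both entropies in units of $k_{\mathrm{B}}$; the value of $\Omega$ and the random distribution are illustrative.

```python
import math
import random


def gibbs_entropy(probs: list[float]) -> float:
    """S_G / k_B = -sum_i p_i ln p_i (phases with p_i = 0 contribute nothing)."""
    return sum(-p * math.log(p) for p in probs if p > 0)


def boltzmann_entropy(omega: int) -> float:
    """S_B / k_B = ln(Omega)."""
    return math.log(omega)


omega = 1000
uniform = [1.0 / omega] * omega
print(gibbs_entropy(uniform), boltzmann_entropy(omega))

# A non-uniform distribution over the same Omega phases has a lower Gibbs entropy.
rng = random.Random(0)
weights = [rng.random() for _ in range(omega)]
total = sum(weights)
skewed = [w / total for w in weights]
print(gibbs_entropy(skewed) < boltzmann_entropy(omega))
```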

Compared to the initial ambition of statistical mechanics, the result is a little disappointing.

3.3.2. Towards Equilibrium and H-Theorem

Is there a way, free from any a priori connection to thermodynamics, to predict the time evolution of a dynamical system of particles? This question is implicitly related to entropy and to the Clausius inequality (Equation (2)). If a spontaneous change of an isolated system towards an equilibrium state ultimately corresponds to a positive change in entropy, it seems natural that, for a dynamical system of particles, there must exist a time-dependent statistical quantity that increases with time during the change. Boltzmann addressed this question of the evolution towards equilibrium and looked for a probabilistic rule that could substitute for the second law of thermodynamics [36].

Starting from Maxwell’s kinetic theory of gases [37], and in particular from the distribution of velocities he obtained, Boltzmann also approached the problem of collisions between two particles in terms of probabilities. Indeed, even if the result of a given collision is purely deterministic, the exact conditions in which this collision occurs are unpredictable and random. This is the hypothesis of “molecular chaos”. For his calculation [36,38], Boltzmann considers the 6-dimensional phase space $\mu$ of one single particle and denotes by $f(\mathbf{r}, \mathbf{v}, t)$ the corresponding probability density at time $t$. From the hypothesis of molecular chaos, he obtains a transport equation that allows him to argue that a certain statistical quantity $H$ always increases with time until it becomes constant at equilibrium (here written with the sign convention in which $H$ increases; Boltzmann’s original H-function has the opposite sign and decreases):

$$\frac{\mathrm{d}H}{\mathrm{d}t} \geq 0, \tag{8}$$

with

$$H(t) = -\int_{\mu} f(\mathbf{r}, \mathbf{v}, t) \, \ln f(\mathbf{r}, \mathbf{v}, t) \, \mathrm{d}^3 r \, \mathrm{d}^3 v. \tag{9}$$

These equations form the H-theorem. It was the first time that anything resembling statistical entropy had been written. The H-theorem seems natural and is frequently viewed [39,40,41] as an alternative statistical expression of the 2nd law of thermodynamics plus a definition of equilibrium (equality sign in Equation (8)) that ensures its stability.
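To make the statement "$H$ increases until it becomes constant" concrete, here is a toy sketch in Python. It is not Boltzmann's transport equation: a hypothetical stochastic "mixing" rule on a ring of 20 cells stands in for the molecular-chaos assumption, and the discrete quantity $-\sum_i p_i \ln p_i$ stands in for Equation (9). Because the rule is doubly stochastic (every row and column of its transition matrix sums to one), this quantity cannot decrease, and it levels off at its maximum $\ln 20$.

```python
import math

def entropy(p):
    """Discrete stand-in for H = -sum p ln p (natural logarithm)."""
    return -sum(x * math.log(x) for x in p if x > 0)

def mix_step(p, eps=0.2):
    """One stochastic step on a ring: each cell passes eps/2 of its weight to
    each neighbour. The transition matrix is doubly stochastic."""
    n = len(p)
    return [(1 - eps) * p[i] + 0.5 * eps * (p[i - 1] + p[(i + 1) % n])
            for i in range(n)]

p = [1.0] + [0.0] * 19          # all the weight in one cell: far from equilibrium
history = [entropy(p)]
for _ in range(2000):
    p = mix_step(p)
    history.append(entropy(p))

# H never decreases (up to rounding) and levels off at its maximum ln(20).
print(all(b >= a - 1e-12 for a, b in zip(history, history[1:])))  # True
print(history[-1], math.log(20))
```

The monotonic increase here comes entirely from the stochastic rule that was put in by hand, which is the point made below about molecular chaos.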

However, the mathematical proof of this theorem is still a work in progress [38,42]. Moreover, $H$ is not yet entropy: here, $f$ is a density of particles, which differs from a probability density of phases, so it is not yet related to measurable thermodynamic quantities. Thus, even if it may be interesting to avoid any a priori link with thermodynamics in the derivation of the 2nd law (and in this sense the H-theorem is a great success), the absence of an a posteriori link is problematic.

But let us leave these points aside and focus on another problem, raised by Loschmidt [20,43]. Newton’s equations of classical mechanics do not introduce any time irreversibility: if a motion is possible in one direction, it is also possible in the opposite direction. Loschmidt imagines a dynamical system of colliding particles that tends towards equilibrium. At a given moment, a demon reverses the velocities of all the particles. This new microscopic state is just as compatible as the previous one with the macroscopic properties of the system, but the system will now evolve backward and deviate from equilibrium. This is incompatible with the H-theorem. So by what sleight of hand does Boltzmann arrive at his result? How does irreversibility suddenly arise? It is actually put in at the very beginning of the reasoning, in the form of the hypothesis of “molecular chaos”. A collision is conceived as fundamentally asymmetric in time: random before, deterministic after. This is acknowledged by Boltzmann himself: “Any attempt to deduce [the Clausius inequality] from the nature of the bodies and from the law for the forces acting among them, without invoking the initial conditions, must therefore be in vain” [20].

The H-theorem is obtained because the 2nd law has been moved to the root of the proof. Therefore, considering the H-theorem as a proof of the 2nd law would clearly be circular reasoning.
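Loschmidt's objection can be reproduced with a minimal, hypothetical toy in Python: non-interacting particles streaming freely on a periodic 1-D lattice (no collisions, for simplicity). The dynamics is exactly reversible. A coarse-grained entropy, computed from a 10-bin histogram of positions (the observer's description), rises as the initially confined particles spread out; after the velocities are reversed and the system is run for the same number of steps, the initial microscopic state, and with it the initial zero coarse-grained entropy, is recovered exactly. Nothing in the reversible rule itself produces a monotonic increase.

```python
import math
import random

L = 100      # sites of a hypothetical 1-D periodic box
N = 1000     # non-interacting particles (no collisions, for simplicity)
STEPS = 37

def coarse_entropy(xs, n_bins=10):
    """Entropy of the coarse-grained position histogram (the observer's description)."""
    counts = [0] * n_bins
    for x in xs:
        counts[x * n_bins // L] += 1
    fractions = [c / len(xs) for c in counts]
    return sum(-q * math.log(q) for q in fractions if q > 0)

def evolve(xs, vs, steps):
    """Exactly reversible free streaming: x -> x + v (mod L) at each step."""
    for _ in range(steps):
        xs = [(x + v) % L for x, v in zip(xs, vs)]
    return xs

rng = random.Random(0)
x0 = [rng.randrange(L // 10) for _ in range(N)]          # all particles in the first bin
v0 = [rng.choice([-3, -2, -1, 1, 2, 3]) for _ in range(N)]

x1 = evolve(x0, v0, STEPS)                 # particles spread: coarse entropy rises
x2 = evolve(x1, [-v for v in v0], STEPS)   # Loschmidt's demon reverses every velocity

print(coarse_entropy(x0), coarse_entropy(x1), coarse_entropy(x2))
print(x2 == x0)  # True: the initial microscopic state is recovered exactly
```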

3.3.3. The Paradox of the Stability of Equilibrium

Probabilities introduce fluctuations. A system at equilibrium is stationary on average, but may transiently deviate from equilibrium. It is therefore imperative to introduce something into the theory, an additional postulate or principle, that would play the role of an internal restoring force. Otherwise, the equilibrium would theoretically not be stable. In mechanics, a body spontaneously follows a path of decreasing potential energy until it reaches the minimum. Similarly, in thermodynamics, we already have the 2nd law, according to which the entropy of an isolated system can only increase spontaneously. The postulate that entropy is maximal at equilibrium would ensure stability [44]. This postulate would make the equilibrium an attractor.

Here is the main problem. An attractor is a region of phase space where many trajectories converge, so that the local phase density increases. This is incompatible with volume-preserving dynamics and with a constant probability density along the trajectory (Equation (5)).

This contradiction is particularly apparent in light of Poincaré’s recurrence theorem, which states that any volume-preserving dynamical system whose trajectory is restricted to a bounded region of phase space (for instance, with a finite volume and a given total energy) behaves recurrently with arbitrary precision. In short, the system always returns, sooner or later, as close as desired to any phase already visited. This recurrence actually justifies considering probabilities as frequencies, but it leads to expected behaviors of the system that are radically opposed to observations and radically opposed to the conception of equilibrium as stable. This is the paradox raised by Zermelo: it cannot be excluded that all the gas particles from an open bottle that have escaped into the room will gather back into the bottle.
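The recurrence theorem has a simple discrete analogue. In a finite, discretized phase space, a volume-preserving dynamics becomes a bijection (a permutation) of the phases, and every phase then returns exactly to itself after a finite number of steps. The Python sketch below assumes this discretization and uses a randomly chosen permutation as a hypothetical dynamics; it is an illustration, not a proof of Poincaré's theorem. It also computes the time after which all phases have returned simultaneously (the least common multiple of the cycle lengths), which is typically enormous even for 10 000 phases.

```python
import math
import random

def recurrence_time(perm, start):
    """Steps needed for the bijective map `perm` to bring `start` back to itself."""
    state, steps = perm[start], 1
    while state != start:
        state, steps = perm[state], steps + 1
    return steps

def global_period(perm):
    """Smallest T such that T applications of `perm` give back every state
    (the least common multiple of the cycle lengths)."""
    seen, period = set(), 1
    for s in range(len(perm)):
        if s in seen:
            continue
        length = recurrence_time(perm, s)
        x = s
        for _ in range(length):        # mark the whole cycle as visited
            seen.add(x)
            x = perm[x]
        period = period * length // math.gcd(period, length)
    return period

rng = random.Random(1)
n_states = 10_000
perm = list(range(n_states))
rng.shuffle(perm)                       # hypothetical bijective ("volume-preserving") dynamics

print(recurrence_time(perm, 0))         # state 0 always comes back
print(len(str(global_period(perm))))    # number of digits of the full recurrence time
```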

The argument for resolving this paradox [36,45] is based on different time scales: that of observations, which is very short, compared with that of recurrence, which is geological (so that its probability tends to zero). This argument resolves the apparent contradiction that something possible in principle is never observed in practice. But it does not resolve the real contradiction of considering a possibility that never occurs as an intrinsic property of a system. It does not resolve the real contradiction of considering probabilities of events as frequencies while denying their recurrence. It does not resolve the real contradiction of considering equilibrium as stable while it is not an attractor.
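The time-scale argument can be given orders of magnitude. Assuming independent particles spread uniformly over the container (an ideal gas), the probability that a snapshot finds all $N$ particles in a region of relative volume $f$ is $f^{N}$; for an ergodic system, the mean return time to a region is inversely proportional to its probability (Kac's lemma). The Python sketch below works with base-10 logarithms to avoid underflow; the bottle and room volumes are hypothetical.

```python
import math

def log10_prob_all_inside(n_particles, volume_fraction):
    """log10 of the probability that n independent particles, each uniformly
    spread over the container, are all found in a region of relative volume
    `volume_fraction` (0.5 for one half of a box)."""
    return n_particles * math.log10(volume_fraction)

fraction = 1e-3 / 30.0   # hypothetical: a 1 L bottle in a 30 m^3 room
for n in (10, 100, 1000):
    print(n, log10_prob_all_inside(n, 0.5), log10_prob_all_inside(n, fraction))

# One litre of gas at room conditions holds roughly 2.5e22 molecules.
print(log10_prob_all_inside(2.5e22, fraction))   # of order -1e23
```

The exponent scales linearly with $N$, so the corresponding recurrence times become astronomically long long before macroscopic particle numbers are reached.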

3.4. Gibbs Paradox of Joining Two Volumes of Gas

A second paradox is introduced by statistical mechanics. It is related to the Gibbs paradox of mixing evoked in Section 2.3.2, so it is often called the second Gibbs paradox, even though Gibbs never mentioned it.

The first Gibbs paradox concerns the joining of two volumes of gas consisting of different or identical species. The second paradox concerns only the latter case, but considered from the point of view of thermodynamics versus that of statistical mechanics. The removal of the partition between two identical volumes $V$, filled with the same gas at the same temperature and pressure, is not accompanied by any measurable exchange of heat or work with the surroundings. When the partition is put back in position, the system is restored to its original state. The difference in Clausius entropy between the joined and disjoined states is thus zero:

$$\Delta S_{\mathrm{Clausius}} = S_{\mathrm{joined}} - S_{\mathrm{disjoined}} = 0. \tag{10}$$

From the point of view of phase probabilities, it is not so simple. In Equation (7), let us write $\Omega$ as:

$$\Omega = \Omega_q \, \Omega_p, \tag{11}$$

where $\Omega_q$ is the number of possibilities in position for $N$ particles and $\Omega_p$ the number of possibilities in momentum. For a volume $V$ with $n$ particles, the number of possibilities in position is $V^{n}$. Thus, for two equal disjoined volumes $V$ with $N/2$ particles each, one has:

$$\Omega_q^{\mathrm{dis}} = V^{N/2} \times V^{N/2} = V^{N}. \tag{12}$$

Once the partition is removed, the position of each particle can be anywhere in $2V$ (instead of anywhere in $V$), so $\Omega_q$ becomes $(2V)^{N}$:

$$\Omega_q^{\mathrm{join}} = (2V)^{N}. \tag{13}$$

In both cases, disjoined and joined, the distribution of particle momenta is the same (the total energy is unchanged). Thus, the difference in Boltzmann entropy between the two states is $k_B \ln\left(\Omega_q^{\mathrm{join}} / \Omega_q^{\mathrm{dis}}\right)$, leading to:

$$\Delta S_{\mathrm{Boltzmann}} = N k_B \ln 2, \tag{14}$$

to be compared with Equation (10). The puzzle is that both entropy variations should be the same, since Boltzmann’s entropy is derived from Clausius’s (Figure 6).

To this paradox, two solutions are usually proposed [46,47,48,49,50,51,52,53,54] (apart from the one involving the notion of information presented in the next section):

-

Quantum mechanics solution: according to this point of view, it is not possible to understand classically the difference between Equations (10) and (14); the solution is quantum ([47] p. 141). The numbers of possibilities for the joined and disjoined states are both overestimated because identical particles cannot be distinguished. In the disjoined case, the $(N/2)!$ permutations of a combination of $N/2$ particle positions must be counted as 1. Equation (12) must be replaced by:

$$\Omega_q^{\mathrm{dis}} = \left(\frac{V^{N/2}}{(N/2)!}\right)^{2}, \tag{15}$$

and, similarly, Equation (13) must be replaced by:

$$\Omega_q^{\mathrm{join}} = \frac{(2V)^{N}}{N!}. \tag{16}$$

These corrections give:

$$\Delta S_{\mathrm{Boltzmann}} = k_B \ln \frac{2^{N} \left[(N/2)!\right]^{2}}{N!} = N k_B \ln 2 - k_B \ln \frac{N!}{\left[(N/2)!\right]^{2}} \tag{17}$$

instead of Equation (14). This argument is known as “correct” Boltzmann counting, even though it is no more correct than the following one.

-

Classical mechanics solution: the number of possibilities in the disjoined state is actually underestimated in Equation (12) [46,49,50]. When partitioning, a particle can end up in either of the two compartments, leading to as many possibilities, which are not counted in Equation (12). The correct count should be increased by a factor accounting for the different possible combinations of partitioning $N$ particles into two sets of $N/2$ each:

$$\Omega_q^{\mathrm{dis}} = \frac{N!}{\left[(N/2)!\right]^{2}} \, V^{N}. \tag{18}$$

Using Equations (13) and (18), one obtains for the difference in Boltzmann entropy:

$$\Delta S_{\mathrm{Boltzmann}} = N k_B \ln 2 - k_B \ln \frac{N!}{\left[(N/2)!\right]^{2}}, \tag{19}$$

which is exactly the same as Equation (17) but with a completely different argument.

These two solutions, combined with an approximation of the Stirling formula ($\ln n! \approx n \ln n - n$) in Equation (17) or (19) for the calculation of the second term, give $\Delta S_{\mathrm{Boltzmann}} \approx 0$. The paradox is supposed to be resolved. In reality, as it stands, it is not, and it can be shown that these solutions benefit from the cancellation of two approximations [55]. The solution must be amended by considering fluctuations and by using the exact Stirling formula (rather than an approximation of it). But the purpose of this article is rather to highlight what exactly these solutions entail regarding the role of the observer.
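The effect of the crude Stirling approximation on Equations (17) and (19) can be checked numerically. The Python sketch below (an illustration, not the analysis of ref. [55]) evaluates $\Delta S / k_B$ in three ways: the naive result $N \ln 2$ of Equation (14); the corrected expression with factorials computed exactly through `math.lgamma` ($\ln n! = \mathrm{lgamma}(n+1)$); and the same expression with $\ln n! \approx n \ln n - n$. The crude approximation returns zero, while the exact value is positive and grows like $\tfrac{1}{2}\ln(\pi N/2)$, a known consequence of the $\sqrt{2\pi n}$ factor in the full Stirling formula.

```python
import math

def delta_s_naive(n):
    """Eq. (14): Delta S / k_B = N ln 2 (no factorial corrections)."""
    return n * math.log(2)

def delta_s_corrected_exact(n):
    """Eqs. (17)/(19): Delta S / k_B = N ln 2 - ln[N! / ((N/2)!)^2],
    with ln n! = lgamma(n + 1), i.e. without any Stirling approximation."""
    half = n // 2
    return n * math.log(2) - (math.lgamma(n + 1) - 2 * math.lgamma(half + 1))

def delta_s_corrected_stirling(n):
    """Same expression with the crude approximation ln n! ~ n ln n - n."""
    def ln_fact(m):
        return m * math.log(m) - m
    half = n / 2
    return n * math.log(2) - (ln_fact(n) - 2 * ln_fact(half))

for n in (10, 100, 10_000, 1_000_000):   # even particle numbers
    print(n, delta_s_naive(n), delta_s_corrected_exact(n),
          delta_s_corrected_stirling(n), 0.5 * math.log(math.pi * n / 2))
```

Double-precision `lgamma` is used only up to $N = 10^6$ here; for much larger $N$ the subtraction of two huge, nearly equal numbers would lose the small residual to rounding.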

The first point concerns the “indistinguishability” of particles. Actually, as soon as a particle is large enough to be traceable by means of, for instance, a microscope and a camera, it becomes distinguishable from the others. Single-particle tracking is now feasible for particles above 20 nm (see for a recent review [?]). Extending it to many particles is a question of computing power, and there is no conceptual impediment to this (for recent papers on these techniques, see e.g., [56,57,58]). The question is therefore not whether the particles are distinguishable or not, but whether they are distinguished or not. The difference is very important, because in the second case it depends on the observer: on the means and information available to the observer, and on what the observer considers to be relevant. Of course, before the age of atoms, thermodynamics did not care about tracking and distinguishing particles, because it simply did not care about particles. Despite this, the theory was and still is correct.

The second point concerns the solution proposed in the framework of classical mechanics. This time, particles can be distinguishable and distinguished, but in counting the number of possibilities offered to the system, we add the different possible combinations for the distribution of the particles between the two compartments. There is no problem with this, except that it must be understood that once the partition is in place in the disjoined state, the system has no possibility of modifying it. These possibilities are therefore not accessible to the partitioned system at equilibrium; they are possibilities accessible just before the partition is put in place. In this respect, the partitioned system at equilibrium (the disjoined system) is no longer ergodic [59]. This solution is actually incompatible with the ergodic hypothesis. The different combinations are not possibilities offered to the system; they simply contribute to the uncertainty that the observer has about it.

4. Shannon’s Information Theory

Shannon’s information theory [60] initially aimed to optimize physical media for the storage (optimization in terms of size) and transmission (optimization in terms of bandwidth) of information conceived as the random outcomes of a source. This concrete physical problem seemed far removed from those of thermodynamics. However, it turned out that this was not the case.

4.1. Quantity of Information

Consider a source that sequentially emits a message whose letters, from the receiver’s point of view, are as many random outcomes $x_k$, which have to be encoded and stored. For the encoding, the first thing to do is a mapping $\phi$ between the finite space $\mathcal{X}$ of possibilities for $x_k$ and a subset of natural numbers:

$$\phi : \mathcal{X} \to \{0, 1, \dots, M-1\}, \qquad M = |\mathcal{X}|.$$

With this mapping, since $n = \phi(x_k) < M$, $n$ requires at most $\lceil \log_2 M \rceil$ bits to be recorded. Thus, a first approach to the problem is to plan a fixed length equal to $\lceil \log_2 M \rceil$ bits for each outcome, to be sure not to lose information [61]. But this is clearly not always optimal (see Table 1), as it pads small numbers with unnecessary leading 0s in their binary code. An encoding procedure that uses just the necessary number of bits, $\lfloor \log_2 n \rfloor + 1$ for each outcome $n \geq 1$, is much more efficient.
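The difference between fixed-length and variable-length encoding can be counted directly. The Python sketch below uses a hypothetical 16-outcome source whose probabilities halve from one outcome to the next, and compares the average number of bits per outcome for a fixed length, for a mapping that sends frequent outcomes to small numbers, and for the reverse mapping. It only counts bits: a real variable-length code must also let the decoder tell where each code word ends (for instance, by being prefix-free), which this count ignores.

```python
def fixed_length_bits(m):
    """Bits per outcome if all indices 0..m-1 get codes of the same length."""
    return max(1, (m - 1).bit_length())

def variable_length_bits(n):
    """Bits needed to write index n in binary without leading zeros."""
    return max(1, n.bit_length())

M = 16
# Hypothetical source: outcome k has probability proportional to 2**-(k + 1).
weights = [2.0 ** -(k + 1) for k in range(M)]
total = sum(weights)
probs = [w / total for w in weights]

fixed = fixed_length_bits(M)
# Frequent outcomes mapped to small numbers ...
good = sum(p * variable_length_bits(n) for n, p in enumerate(probs))
# ... versus frequent outcomes mapped to large numbers.
bad = sum(p * variable_length_bits(M - 1 - n) for n, p in enumerate(probs))
print(fixed, round(good, 3), round(bad, 3))
```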

However, the storage space saved with a variable-length encoding rule depends largely on the mapping chosen. To optimize storage space, rare outcomes should be mapped to large numbers while frequent outcomes should be mapped to small numbers. Shannon [60] showed, however, that in no case can the minimum average number $H$ of bits per outcome be less than

$$\sum_{n} p_n \log_2 \frac{1}{p_n}, \tag{20}$$

where $p_n$ is the probability of outcome $n$. By writing $\log_2(1/p_n) = -\log_2 p_n$, we obtain the Shannon entropy of the dynamical source:

$$H = -\sum_{n} p_n \log_2 p_n. \tag{21}$$

$H$ is the minimum average number of bits per outcome needed to encode the random outcomes of the source. $H$ is called the quantity of information emitted by the source. It is the information we would need to describe the source, that is, information we do not necessarily have.
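As an illustration of the bound, the Python sketch below compares $H$ with the average length of a Huffman code, a standard optimal prefix-free code that is not discussed in the text and is used here only as one example of a concrete encoder. For probabilities that are powers of 1/2 the bound is reached exactly; otherwise the Huffman average lies between $H$ and $H + 1$. Function names are illustrative.

```python
import heapq
import math
from itertools import count

def shannon_entropy_bits(probs):
    """H = -sum p log2 p, in bits per outcome (Eq. (21))."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def huffman_code_lengths(probs):
    """Code-word lengths of a binary Huffman code (an optimal prefix-free code)."""
    if len(probs) == 1:
        return [1]
    tie = count()                                  # tie-breaker: never compare the lists
    heap = [(p, next(tie), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:                  # each merge adds one bit to these outcomes
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, next(tie), group1 + group2))
    return lengths

probs = [0.5, 0.25, 0.125, 0.0625, 0.0625]
lengths = huffman_code_lengths(probs)
average = sum(p * n for p, n in zip(probs, lengths))
print(lengths, average, shannon_entropy_bits(probs))  # [1, 2, 3, 4, 4] 1.875 1.875
```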

Here, let us focus on the meaning of the word “information” in Shannon’s theory. Imagine that the outcome is a random natural number lying in a given interval. Denote by $H_0$ the quantity of information of the source given by Equation (20). Imagine you learn, one way or another, that the outcomes are all even. This means that you know in advance that the last (least significant) bit of every binary-encoded outcome is 0, so you do not need to store it every time. You have saved one bit of information: $H_1 = H_0 - 1$. An important point is that this information about the distribution of outcomes remains valid until the parity changes. There is no need to give us this information twice. In fact, the same information cannot be given twice: the second time, it is no longer information. When we get information from reading a newspaper, the amount of information we have learned does not increase if we read the same newspaper a second time. The meaning of the word information in Shannon’s theory is in fact the usual meaning of the word. Shannon only found a way to quantify it. This will be a key point in what follows.
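The parity example can be checked under one explicit assumption: the outcomes are equiprobable over an interval of $2^{k}$ values. The Python sketch below computes the Shannon entropy before and after learning that all outcomes are even, and confirms that every even number ends with a 0 bit.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits, Eq. (21)."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

k = 10
outcomes = range(2 ** k)                 # 0 .. 1023, assumed equiprobable
h0 = entropy_bits([1 / len(outcomes)] * len(outcomes))

evens = [n for n in outcomes if n % 2 == 0]
h1 = entropy_bits([1 / len(evens)] * len(evens))
print(h0, h1, h0 - h1)                   # 10.0 9.0 1.0

# Every even outcome ends with a 0 bit, which therefore need not be stored.
print(all(format(n, "b").endswith("0") for n in evens))  # True
```

With a non-uniform prior the saving would in general differ from exactly one bit; the uniform case is the one in which the "drop the last bit" argument and the entropy difference coincide.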

Shannon entropy (Equation (21)) is nothing other than the Gibbs formula (Equation (6)), up to the constant factor $k_B \ln 2$, in the case where the outcomes considered are the phases of a dynamical system. With this result, the Gibbs formula is obtained without any connection to thermodynamics. This is interesting, but for now it only looks like an analogy. Just as with the H-theorem (see Section 3.3.2), a connection must be established for this analogy to be of any use in statistical mechanics. The difference is that here, it can be established without inconsistency. The connection begins with the maximum entropy theorem and continues with its application by Jaynes [62] to the problem of statistical mechanics, which led to the maximum entropy principle.

4.2. Maximum Shannon Entropy Theorem (Maxent)

Statistical mechanics faces the problem of determining from which distribution of phases to start calculations. Hence the two options of the fundamental postulate (Section 3.1) and the ergodic hypothesis (Section 3.2). The approach of information theory to this problem is the one commonly used in modeling any set of experimental measurements: we must take into account all available information, but no more. This is typically achieved by maximizing uncertainty subject to the constraints imposed by our knowledge.

In his paper [60], Shannon showed that the quantity of information $H$ (Equation (20)) is actually the only measure of uncertainty on the outcomes (up to a constant factor) that combines three interesting qualities: 1) it is continuous in $p$; 2) it is increasing in the number of possibilities for a uniform distribution; 3) it is additive over different independent sources of uncertainty. In addition, for the problem of seeking the probability distribution of a random variable, Shore and Johnson [63] showed that maximizing $H$ is the only procedure allowing one to obtain a single solution for the probability distribution. Hence the theorem:

Maximum Shannon entropy theorem (MaxEnt):

The best distribution that maximizes the uncertainty on the random variable, while accounting for our knowledge, is the one that maximizes Shannon entropy.

The a priori probability distribution of anything can thus be determined with a rational criterion, and a fortiori the distribution of phases of an isolated system. The distribution with which to start the calculations of statistical mechanics can thus be determined rigorously with the method of Lagrange multipliers, where the imposed constraints are the knowledge we have about the system. The result is exactly the same as that of the fundamental postulate or of the ergodic hypothesis: a uniform probability distribution of phases for an isolated system.
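The method of Lagrange multipliers mentioned above can be sketched on the die example used in Section 4.4. Maximizing $-\sum_i p_i \ln p_i$ subject to normalization and a known mean $\sum_i i \, p_i = \bar{x}$ gives $p_i \propto e^{-\lambda i}$, with $\lambda$ fixed by the mean. In the Python sketch below, $\lambda$ is found by bisection, and the mean value 4.5 is a hypothetical piece of knowledge. When the knowledge is compatible with a fair die (mean 3.5), the result is the uniform distribution, as stated in the text for an isolated system; additional knowledge changes the result only through the constraints.

```python
import math

FACES = range(1, 7)

def maxent_distribution(lam):
    """Stationary point of the Lagrangian for a mean constraint:
    p_i proportional to exp(-lam * i)."""
    weights = [math.exp(-lam * i) for i in FACES]
    z = sum(weights)
    return [w / z for w in weights]

def maxent_die(mean, lo=-50.0, hi=50.0, tol=1e-12):
    """Maximum-entropy distribution on faces 1..6 given normalisation and a known mean.
    The multiplier lam is found by bisection, since the mean decreases as lam grows."""
    if not 1.0 < mean < 6.0:
        raise ValueError("the mean must lie strictly between 1 and 6")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        current = sum(i * p for i, p in zip(FACES, maxent_distribution(mid)))
        if current > mean:
            lo = mid      # mean still too high: increase lam
        else:
            hi = mid
    return maxent_distribution(0.5 * (lo + hi))

fair = maxent_die(3.5)      # knowledge compatible with an unloaded die
loaded = maxent_die(4.5)    # hypothetical extra knowledge: the mean face is 4.5
print([round(p, 4) for p in fair])
print([round(p, 4) for p in loaded])
```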

4.3. Maximum Entropy Principle

The maximum Shannon entropy theorem provides a rational criterion to determine the prior probability distribution of a random variable. But it does not provide a criterion that could serve as a definition of equilibrium. It does not tell us which random variable would have a distribution maximizing Shannon entropy at equilibrium. It does not legitimize the use of the phase distribution. Jaynes [64] noted that, based on the basic postulate that equilibrium is unique, the variables to be considered must have a distribution that is invariant in form under similarities (rotation, translation and scaling), like the distribution of phases, but also like the number density of particles. With this postulate, plus the MaxEnt theorem, we can write a principle on which to base statistical mechanics:

This principle, together with the 2nd law, makes the equilibrium an attractor.

4.4. Representationalism

Shannon entropy is a measure of uncertainty. So one question about the previous definition of equilibrium may bother some readers: why would a system at equilibrium (an object) maximize the uncertainty we (subjects) have about it? This would be like a role reversal.

Actually, there is no role reversal because, with information theory applied to statistical mechanics, as in thermodynamics, there is no dualism. The observer is present from the outset in the problem to be solved. The maximum Shannon entropy principle can be paraphrased as follows: 1) all knowledge of equilibrium originates from observations; 2) this knowledge allows us to make a mental representation of the equilibrium; 3) this mental representation, to be rational, must maximize the uncertainty, so as not to invent knowledge that we do not have. In the statement of the above maximum entropy principle, “equilibrium” has to be understood as “the mental representation of the equilibrium”.

Let us take an example. Imagine we are rolling a die for a public game and are tasked with deciding the fair probabilities (the odds) of each outcome. In a first case, someone tells us that the die is normal, so that the probability of each outcome is 1/6 (uniform distribution). In a second case, someone tells us that the die is loaded but does not tell us how. We then have no other rational choice but to leave the previous betting odds unchanged (even though we know they are wrong), until the actual probability distribution has been measured. But such a measurement is not possible for phases.

The probability distribution of anything, which is to be rationally used as a basis for further calculations, is not an intrinsic property of the system. It is a property of the mental representation we have of it. Thus, the object of the calculations in question is this mental representation. Subject and object merge.

4.5. Statistical Mechanics Becomes Probabilistic Mechanics

The maximum Shannon entropy principle makes the equilibrium stable. Fine, but what difference does it make compared with establishing this principle directly, without information theory?

Without information theory, we would actually need two postulates: 1) the fundamental postulate (Section 3.1) or the ergodic hypothesis (Section 3.2); and 2) the maximum entropy principle. The first determines the distribution of phases at equilibrium, the second stabilizes equilibrium. Beyond the economy provided by information theory (one principle instead of two), it brings the consistency that was absent before. The MaxEnt theorem does not suffer from the criticisms that can be made against the principle of insufficient reason (fundamental postulate). Also, the maximum Shannon entropy principle does not suffer from the inconsistency of the ergodic hypothesis. This is because, with information theory, probabilities are expectations that depend on our knowledge (a priori probabilities); they are not frequencies (a posteriori probabilities, which are supposed to be measurable but cannot be measured; probabilities which are supposed to be frequencies but concern outcomes that are not recurrent in practice).

For the rest, by starting with the maximum Shannon entropy principle, everything in statistical mechanics is the same, but without dubious starting assumptions and with the added bonus of resolving some puzzling questions: the 2nd Gibbs paradox and the puzzles posed by demons.

4.6. Gibbs Paradoxes

The first Gibbs paradox (Section 2.3.2), concerning the mixing of identical or different gas species, was actually already solved by Gibbs himself: “It is to states of systems thus incompletely defined that the problems of thermodynamics relate” (J.W. Gibbs [16] p. 228). The second Gibbs paradox (Section 3.4), concerning the joining of two volumes of the same gas species, was introduced by the Boltzmann formula for entropy. With information theory, we find a solution for the second paradox that is in line with Gibbs’s solution to the first:

Clausius entropy (Equation (3)) concerns a system about which we have incomplete information.

Boltzmann (or Gibbs) statistical entropy (Equations (6) and (7)) concerns a system about which we are supposed to have all the information.

Shannon statistical entropy (Equations (20) and (21)) gives the same formula as Boltzmann or Gibbs, but the probabilities do not have the same meaning. They allow us to account for the actual information we have, or the information we consider relevant [17].

With information theory, we can consider fluctuations or not, isotope composition or not, impurities or not, etc., and we can obtain all intermediate cases between that of thermodynamics and that of a fully known system about which we have all the information. But how can we be sure to have all the information without closing the door to further progress in knowledge? Information theory avoids this paradox.

4.7. Brillouin Negentropy Principle of Information and Demons

The general formula for Shannon entropy makes the Gibbs formula (Equation (6)) a special case. But Shannon’s result does not invalidate the connection between Gibbs and Clausius entropy (Figure 6) that was previously established. This connection is simply made a posteriori, since it was not used for the derivation of the formula. In any case, Clausius entropy $S$ and the Shannon quantity of information are linked via Gibbs entropy, which allows us to write:

$$S = k_B \ln 2 \; H, \tag{22}$$

with $H$ given by Equation (20). It follows that the Clausius inequality (Equation (4)) can be rewritten as:

$$k_B \ln 2 \; \mathrm{d}H \geq \frac{\delta Q}{T}, \tag{23}$$

where $H$ is the uncertainty on the actual phases of the system.

Acquiring any information about the current phase of a dynamical system reduces the uncertainty we have about it. In other words, the information we get about a system makes a negative contribution to its entropy [65,66]. For one bit of acquired information, we have $\Delta H = -1$. By using Equation (23), one gets:

$$W \geq k_B T \ln 2, \tag{24}$$

where $W$ is the work that we have to provide (and that will be dissipated as heat) per bit of acquired information. Brillouin called this the “Negentropy principle of information” [65,66]. This is in fact nothing more than a reformulation of the Clausius inequality that takes into account Gibbs’s and Shannon’s mathematical results connecting Clausius entropy to statistical entropy and finally to the quantity of information.
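To give Equation (24) a scale, the Python sketch below evaluates $k_B T \ln 2$ at a few temperatures, using the exact SI values of $k_B$ and of the elementary charge (the latter only for the conversion to meV). The function name is illustrative.

```python
import math

K_B = 1.380649e-23           # Boltzmann constant, J/K (exact SI value)
E_CHARGE = 1.602176634e-19   # elementary charge, C (exact SI value), for J -> eV

def min_work_per_bit(temperature_k):
    """Lower bound of Eq. (24): W >= k_B T ln 2, in joules per bit."""
    return K_B * temperature_k * math.log(2)

for t in (4.2, 77.0, 300.0):
    w = min_work_per_bit(t)
    print(f"T = {t:6.1f} K: W_min = {w:.3e} J = {1e3 * w / E_CHARGE:.3f} meV per bit")
```

At room temperature the bound is a few times $10^{-21}$ J per bit, which is why it only matters for devices operating close to the thermodynamic limit.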

With Equation (24), the acquisition of information has a cost, so demons are no longer demoniac.

Acquiring information about a system is a complex process consisting of many elementary operations, which have to be performed cyclically if the system is dynamical. These operations include measuring and writing the measurement result, but also copying, overwriting, reading, erasing memory, etc. Equation (24) tells us the minimum work to be provided, or the minimal heat dissipated, at the end of one cycle for one bit of information. This equation concerns the net energy balance of the global cycle. It is general without exception, as is the 2nd law. But Equation (24) does not tell us precisely where, in the cycle of elementary operations, the inevitable dissipation occurs. It does not tell us which step causes this dissipation. Moreover, this equation does not tell us that such a particular dissipative step exists. There is no obligation for one: it is the entire cycle that dissipates a minimum of heat, not any particular step. The “location” of dissipation (if it is localized) will depend on each specific case: which system is considered, which information, which treatment exactly, etc. It is often mentioned (e.g., [67]) that Brillouin locates dissipation in the measurement stage. This is true. But this only comes after the statement of the principle of negentropy [66]. This principle remains free of any constraint regarding the location of the dissipation.

5. Landauer Erasure Principle

It is not possible to deny the advantages of introducing the notion of information into statistical mechanics, and in reality no one does. The criticism is situated at an epistemological level: “[This approach] is associated with a philosophical position in which statistical mechanics is regarded as a form of statistical inference rather than as a description of objective physical reality” (Penrose [68]). “Such a view, [...] would create some profound philosophical problems and would tend to undermine the objectivity of the scientific enterprise” (Denbigh & Denbigh [69]). “It is an approach which is mathematically faultless, however, you must be prepared to accept the anthropomorphic nature of entropy” (Lavis [70]). It could not be clearer that the problem lies in a conception of objective reality that is not Platonic enough.

For Platonists, the relationship between energy and information would be much more acceptable if we had something that would allow us to affirm that “information is physical” [71]. “Physical” here means “independent of us”, “objectively materialized by something”. This is the program of Landauer [72,73], followed by Bennett [74,75], who tackled the problem via that of demons.

Acquired information must be stored, at least temporarily (otherwise it is not acquired), in the form of data bits in memory. Thus, the finite size of the memory of any concrete implementation of a demoniac machine necessarily implies a cyclic erasure of data bits. Landauer’s claim is that the unavoidable heat dissipation for one bit of information (Equation (24)) discussed in Section 4.7 is precisely due to this erasure. “Landauer’s principle [...] makes it clear that information processing and acquisition have no intrinsic, irreducible thermodynamic cost whereas the seemingly humble act of information destruction does have a cost” (Bennett [75]).

In other words, Landauer’s erasure principle, by precisely locating where the dissipation occurs, makes the process of acquiring information (and in particular its energy cost) something that is thought to be intrinsic to the system, something that is thought to be independent of us. Let us see how Landauer achieved this result.

5.1. Landauer’s Derivation of His Principle

Landauer’s derivation rests on four items:

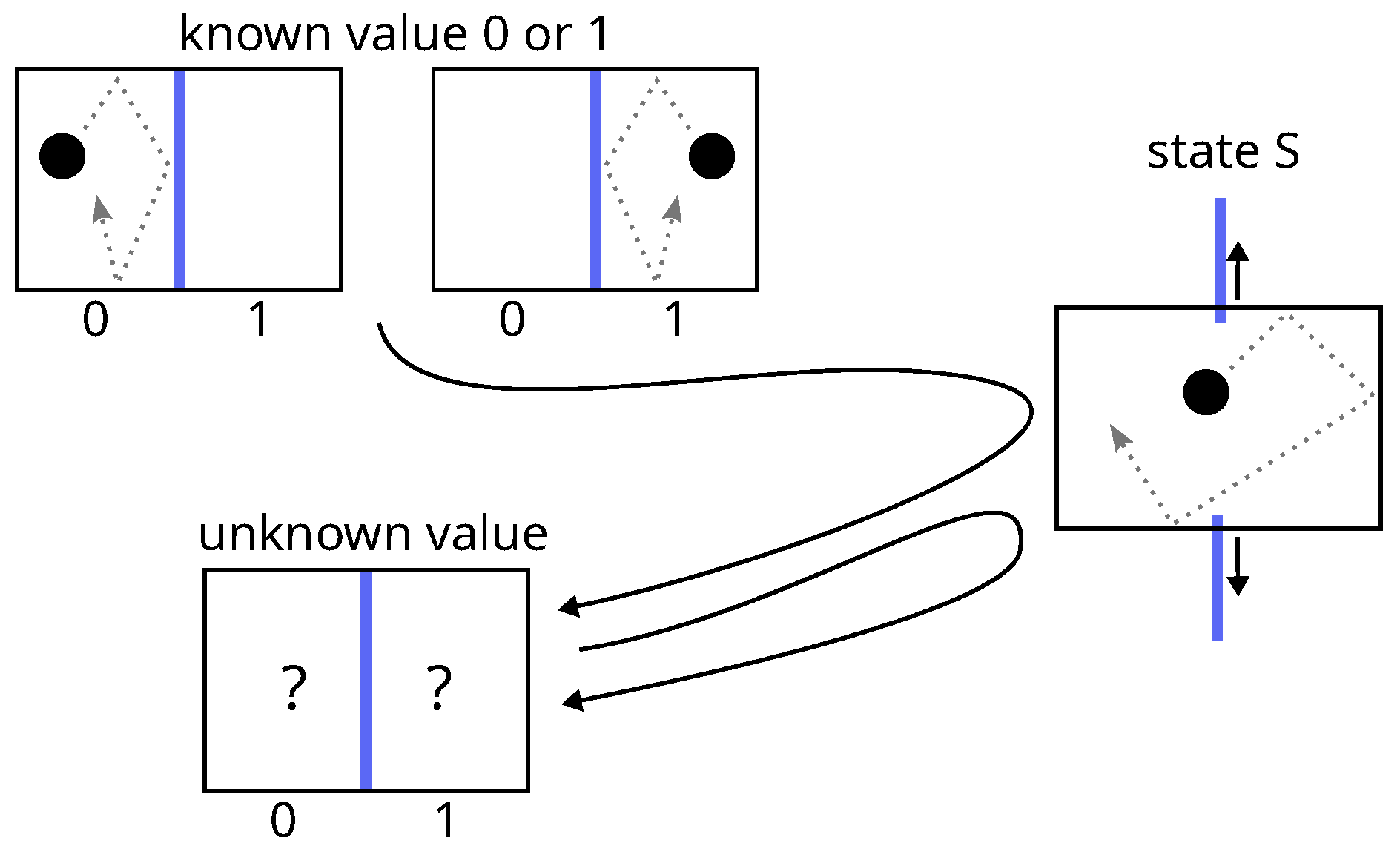

- 1.

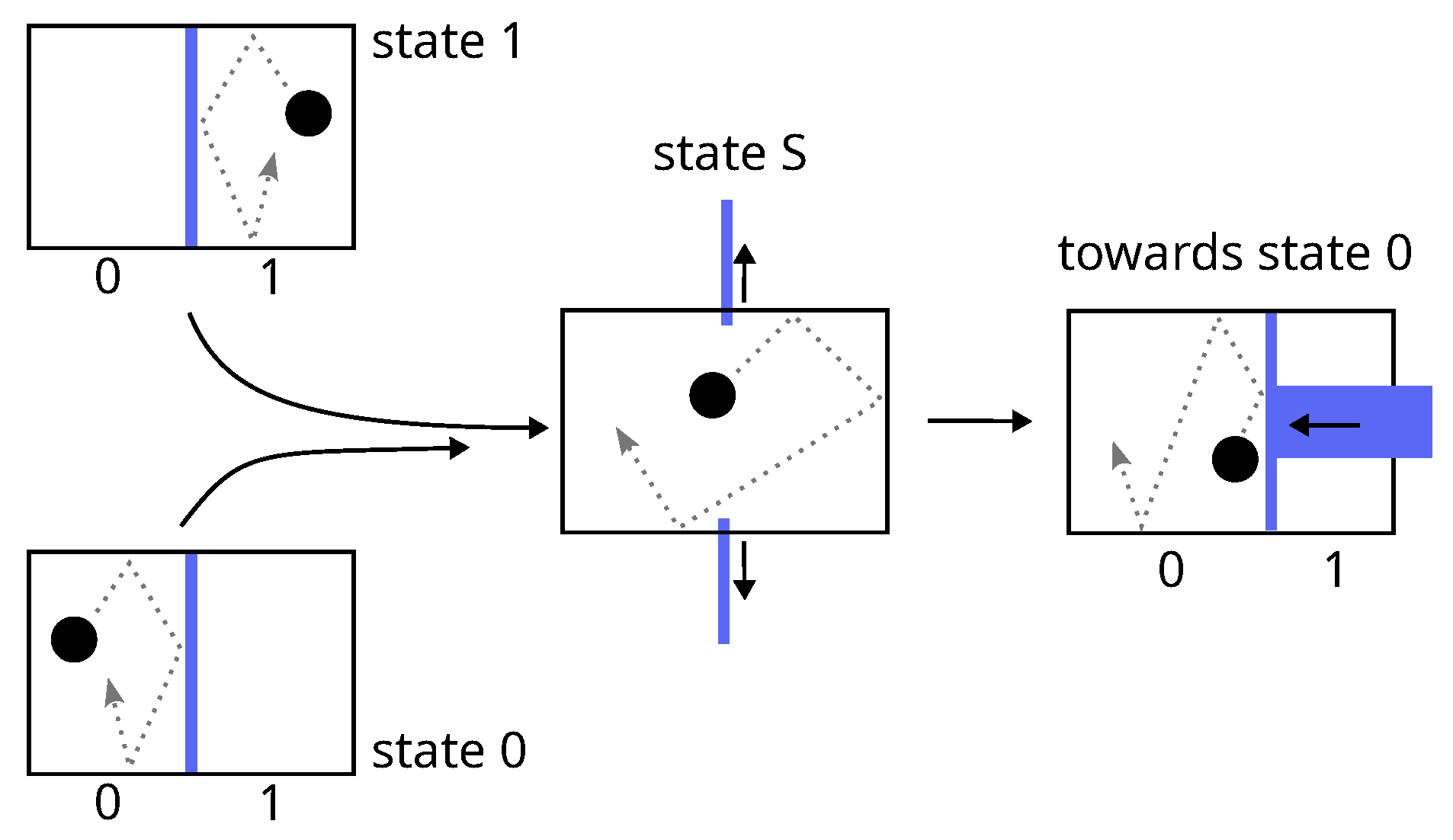

The concrete implementation of a bit of memory is necessarily achieved via a physical system which must exhibit two stable thermodynamic states of equilibrium denoted 0 and 1, e.g., a particle in a bistable potential or a particle in a box divided in two.

- 2.

The procedure for erasing a bit of memory (the erasure), say resetting the bit value to state 0, must be independent of its initial value (0 or 1), because it must work for an unknown value.

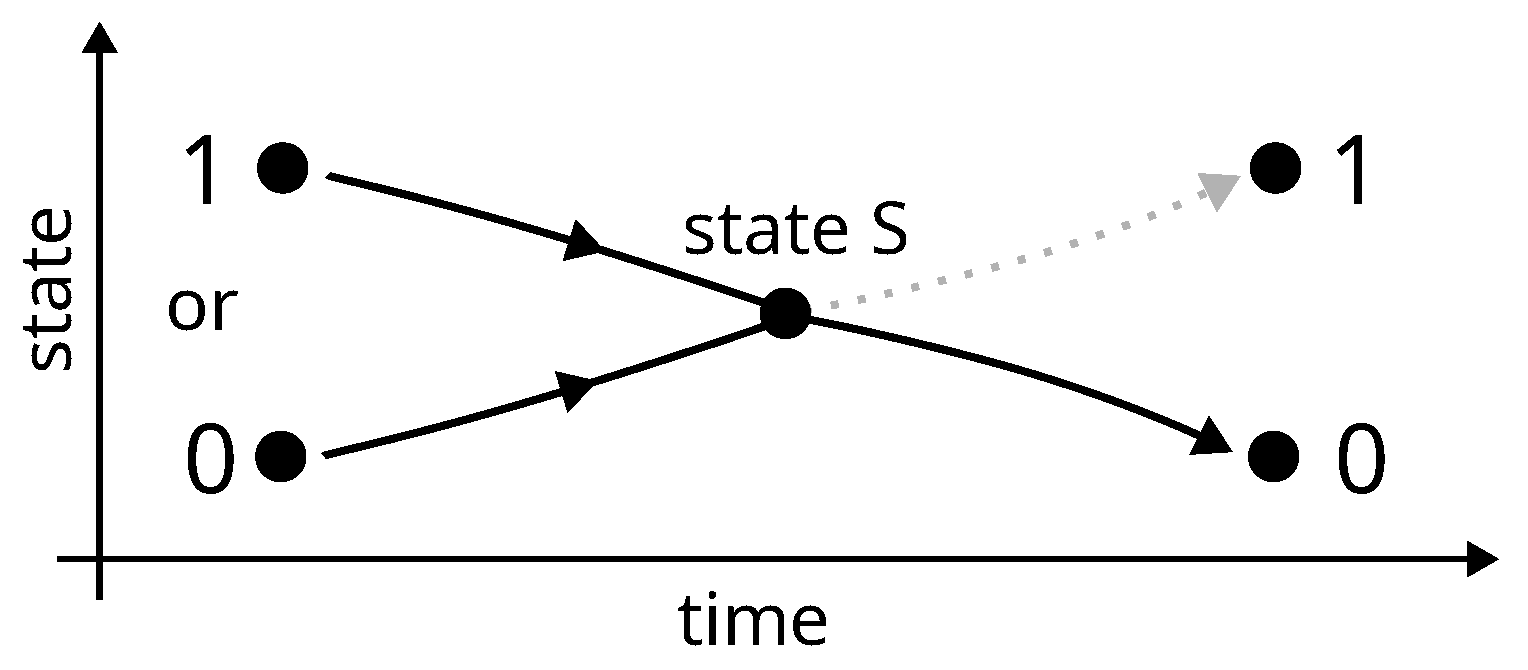

- 3.

The two different paths that take the bit from state 0 or from state 1 to state 0 necessarily merge into one at a point (called state S) where the value of the bit is undetermined. Before this point, both paths are judged necessarily uncontrolled and thermodynamically irreversible because, in the reverse direction, state S is a bifurcation, which is assumed not to be tractable by a controlled deterministic procedure. The consequence is that neither work nor heat can be obtained from the system during this step between state 0 or 1 and state S.

- 4.

According to the Clausius inequality (Equation (2) or (4)), the subsequent step, from state S to state 0, necessarily requires at least $k_B T \ln 2$ of work, which will be dissipated as heat in the surroundings.

The first step (item 3) is similar to the free expansion of a gas, while the second (item 4) resembles an isothermal compression. Together, they lead to the energy balance:

$$W_{\mathrm{erase}} \geq k_B T \ln 2.$$

This inequality is known as the Landauer bound and constitutes the core of Landauer’s principle.
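The energy balance of items 3 and 4 can be reproduced for the "particle in a box divided in two" of item 1. The Python sketch below assumes the single-particle ideal-gas law $PV = k_B T$ for the time-averaged pressure (a standard idealization) and integrates $-\int P \, \mathrm{d}V$ numerically for the quasi-static isothermal compression from $V$ to $V/2$; the free expansion contributes no work. Volumes are in arbitrary units, since only their ratio matters. This reproduces the bound for this model only; it does not test items 2 and 3, on which the generality of the principle depends.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K

def isothermal_work_on_gas(n_particles, temperature_k, v_initial, v_final, steps=100_000):
    """Work done ON an ideal gas in a quasi-static isothermal change,
    W = -integral of P dV with P = N k_B T / V (midpoint rule)."""
    dv = (v_final - v_initial) / steps
    work = 0.0
    for j in range(steps):
        v = v_initial + (j + 0.5) * dv
        work -= n_particles * K_B * temperature_k / v * dv
    return work

T = 300.0
# Item 3: removing the partition (free expansion V/2 -> V) involves no work and no heat.
w_item3 = 0.0
# Item 4: quasi-static isothermal compression V -> V/2 into the "0" half.
w_item4 = isothermal_work_on_gas(1, T, v_initial=1.0, v_final=0.5)

print(w_item3 + w_item4, K_B * T * math.log(2))
```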

Figure 7.

Landauer erasure: according to Landauer, the junction of two paths at a point called state S makes the process before this point necessarily uncontrolled and thermodynamically irreversible (just like the free expansion of gas).


Landauer’s principle and Brillouin’s negentropy principle of information (Section 4.7) are often confused. This point should be clarified.

5.2. Landauer Erasure Vs. Brillouin Negentropy

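Let us bring the Brillouin and Landauer inequalities together:

$$W \geq k_B T \ln 2 \quad \text{(Brillouin, per bit acquired)}, \qquad W_{\mathrm{erase}} \geq k_B T \ln 2 \quad \text{(Landauer, per bit erased)},$$

and see how they differ and how they resemble each other.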

A first major difference between the two principles, which is not immediately apparent, concerns their respective robustness. Brillouin’s negentropy principle comes: 1) from the 2nd law of thermodynamics and the Clausius inequality, which have been confirmed by all known experiments; and 2) from two mathematical results, Gibbs’s and Shannon’s. Since mathematical results are demonstrations that are not affected by the problem of induction posed in the natural sciences, it follows that the validity of the negentropy principle is ultimately conditioned only by the validity of the 2nd law. Brillouin’s negentropy principle will remain true as long as the 2nd law does. This makes us very confident. As for Landauer’s principle, it also uses the Clausius inequality (item 4, Section 5.1), but it is also conditioned by the validity of the three preceding hypothetical items. Landauer considered a special case, without any assurance that it could be generalized. This makes us less confident.

Brillouin negentropy principle can be considered a fundamental general principle of physics. The status of Landauer principle is more uncertain. This point cannot be denied and that is why the two principles should not be confused.

Brillouin principle concerns the acquisition of one bit of information (so that encoding the source outcomes requires one bit less). Landauer principle concerns the erasure of a data-bit. An in-depth comparison is made in the next section (Section 6), but here let us deal with just the following point. Although the two inequalities have very different meanings, both lead to the same result when the acquisition of information consists of a cyclic sequence of operations involving erasure, as, for example, in the cyclic operations performed by a Szilard demon with a finite memory size. In this case, both inequalities give the energy cost that the demon must pay for each cycle, so that the energy produced by the device does not come from nothing. Although conceptually different, the two solutions to the problem posed by the demon are equivalent. The only difference is that Brillouin inequality concerns the net balance of an overall acquisition cycle (which may include erasure), whereas Landauer inequality stipulates exactly where in the cycle the energy cost is paid (in the erasure). In other words, the difference is only a question of (non-)localization.
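The point about (non-)localization can be made concrete with a toy bookkeeping of one demon cycle, sketched in Python below. Everything in it is an assumption for illustration: the step names, the idea of booking a cost per step, and the two cost distributions. A one-bit Szilard cycle is taken to yield at most k_B T ln 2 of work; a Brillouin-style check constrains only the total cost of the cycle, while a Landauer-style check requires that cost to be paid at the erasure step itself.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
T = 300.0           # illustrative bath temperature, K
KT_LN2 = K_B * T * math.log(2)  # maximum work extractable per one-bit cycle


def brillouin_ok(costs: dict) -> bool:
    """Brillouin-style check (toy): only the net cost over the cycle matters."""
    return sum(costs.values()) >= KT_LN2


def landauer_ok(costs: dict) -> bool:
    """Landauer-style check (toy): the cost must be paid at the erasure step."""
    return costs.get("erase", 0.0) >= KT_LN2


def net_work(costs: dict) -> float:
    """Work extracted per cycle minus everything paid over the cycle."""
    return KT_LN2 - sum(costs.values())


# Two hypothetical ways of distributing the same total cost over one cycle.
localized = {"measure": 0.0, "extract": 0.0, "erase": KT_LN2}
spread = {"measure": KT_LN2 / 2, "extract": 0.0, "erase": KT_LN2 / 2}

for name, costs in (("localized", localized), ("spread", spread)):
    print(name, brillouin_ok(costs), landauer_ok(costs), net_work(costs))
```

Both distributions give the same net work (zero at best); they differ only in where the cost is booked, which is exactly what the Landauer-style check tests.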

According to this last point, one cannot consider that Landauer erasure principle solves problems posed by demons that would not be solved by Brillouin negentropy principle, unless one considers that the non-localization of the dissipation poses a problem. Here are a few recent quotes, among many others, on this matter:

“The Landauer principle is one of the cornerstone of the modern theory of information” (Herrera [76])

“Landauer’s principle was central to solving the paradox of Maxwell’s demon.” (Lutz & Ciliberto [77])

“Informational theory is usually supplied in a form that is independent of any physical embodiment. In contrast, Rolf Landauer in his papers argued that information is physical and it has an energy equivalent.” (Bormashenko [78]). In other words, Landauer principle is more physical than Brillouin’s.

“Since [Szilard’s work] in 1929, the story has remained much the same. The only major change was made by Landauer, who suggested that the erasure of information was specifically what generated heat.” (Witkowski et al. [79]). As a reminder, Shannon’s work dates from 1948, Brillouin’s from 1953 and Landauer’s from 1961.

Landauer’s solution is clearly preferred and the non-localization of dissipation therefore does indeed pose a problem.

Information acquisition cycles are cyclic sequences of operations; they are not periodic sequences of thermodynamic states, since the incoming data value varies from cycle to cycle. An acquisition cycle is actually a process that takes the acquisition device from a state A to a state B (which may or may not differ from A). Let us compare this with mechanical processes. Consider a body moved from point A to point B. The minimum mechanical work required is given by the difference in the body’s potential energy between these two points, regardless of the path taken. No law of mechanics, or of physics in general, tells us where along the path the work must be done. Despite this, potential energy is considered physical. The negentropy principle, and the way it solves the problem of demons, operate in exactly the same way.
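The mechanical analogy can be checked numerically. The Python sketch below assumes a hypothetical two-dimensional conservative field (a spring potential U = k(x² + y²)/2, chosen only for convenience) and computes the work supplied to move a body quasi-statically from A to B along two different paths, as a sum of F · dr over small steps.

```python
import math

K = 2.0  # hypothetical spring constant (N/m) of a conservative field


def potential(x: float, y: float) -> float:
    """Potential energy U = k (x^2 + y^2) / 2 of the body at (x, y), in J."""
    return 0.5 * K * (x * x + y * y)


def applied_force(x: float, y: float) -> tuple:
    """Force the operator applies to move the body quasi-statically: +grad U."""
    return K * x, K * y


def work_along(path, steps: int = 10_000) -> float:
    """Work done along path(t), t in [0, 1], via a midpoint sum of F . dr."""
    work = 0.0
    x0, y0 = path(0.0)
    for i in range(1, steps + 1):
        x1, y1 = path(i / steps)
        fx, fy = applied_force((x0 + x1) / 2, (y0 + y1) / 2)
        work += fx * (x1 - x0) + fy * (y1 - y0)
        x0, y0 = x1, y1
    return work


A, B = (1.0, 0.0), (0.0, 2.0)  # hypothetical end points, in m


def straight(t: float) -> tuple:
    return A[0] + t * (B[0] - A[0]), A[1] + t * (B[1] - A[1])


def detour(t: float) -> tuple:
    # Same end points as `straight`, but with a wide excursion in between.
    x, y = straight(t)
    return x + 0.7 * math.sin(3 * math.pi * t), y + 0.4 * math.sin(math.pi * t)


delta_u = potential(*B) - potential(*A)
print(delta_u, work_along(straight), work_along(detour))
```

Both sums come out equal to U(B) - U(A) = 3 J (up to rounding), although the work done on intermediate portions of the two paths differs; nothing in the calculation singles out where along the path the work is done.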

Why does something that poses no problem in mechanics and with energy pose a problem in thermodynamics and with information? Why is something that is not necessary for energy to be considered physical required for information?

The reason is that with energy we can easily forget that it is a pure abstraction. We can easily forget that energy is not “materialized” by something like “an energy particle” (an object) that could exist independently of us (see Section 2.1). We can easily forget that energy is not something that belongs to the Platonic objective reality. With information, we cannot. Localizing and materializing information is viewed as the solution.

The latest attempt at localizing and materializing information is the principle of information-mass equivalence [76,80,81,82,83,84]. In short: any piece of information is stored in the form of data-bits; these are supposed to store energy when set to a particular value; energy has a mass equivalent; thus, information has a mass. Information thereby becomes definitively physical, that is to say, tangible and materialized.

Landauer’s motivations in stating his principle were probably not of this nature, but in my opinion the reasons for its success certainly are, because no point in Landauer’s reasoning withstands close examination.

6. Invalidation of Landauer Erasure Principle

The literature on information and Landauer’s principle reveals a great deal of confusion in vocabulary, to the point that we no longer know what we are talking about. The confusion concerns information versus data-bit, known versus unknown, logically reversible versus irreversible, and so on. It is therefore imperative to clarify and untangle all these notions.

6.1. Piece of Information Versus Data-Bit

We give the word information its usual meaning, which is the primary sense in the Oxford Dictionary: “Information: The imparting of knowledge in general. Knowledge communicated concerning some particular fact, subject, or event”.

This usual meaning is the one retained in the literature on this subject: “But what is information? A simple, intuitive answer is “what you don’t already know”” [77]. “If someone tells you that the earth is spherical, you surely would not learn much” [85].

This meaning is also Shannon’s (see the end of Section 4.1), who additionally made information quantifiable. Thus, acquiring one bit of information about a dynamical source means that one bit less is required to encode its outcomes: the uncertainty we have in describing the system decreases. But information cannot be given twice. Acquiring the same piece of information about a source a second time saves no further encoding space.
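Shannon's point that one bit of information saves one bit of encoding, but only once, can be illustrated for a uniform source, where the number of bits still needed after some questions have been answered is the conditional entropy. In the Python sketch below, the four-outcome source, the helper name `remaining_bits` and the two yes/no questions are hypothetical examples.

```python
import math
from collections import Counter


def remaining_bits(outcomes, questions) -> float:
    """Hypothetical helper: average number of bits still needed to encode an
    outcome drawn uniformly from `outcomes` once the answers to `questions`
    are known, i.e. the conditional entropy H(X | answers)."""
    n = len(outcomes)
    # Outcomes giving identical answers remain indistinguishable.
    groups = Counter(tuple(q(o) for q in questions) for o in outcomes)
    return sum(c / n * math.log2(c) for c in groups.values())


source = [0, 1, 2, 3]  # hypothetical source with four equiprobable outcomes


def upper_half(o):  # hypothetical one-bit question
    return o >= 2


def is_odd(o):  # a second, independent one-bit question
    return o % 2 == 1


print(remaining_bits(source, []))                        # no information yet
print(remaining_bits(source, [upper_half]))              # one bit acquired
print(remaining_bits(source, [upper_half, upper_half]))  # same bit, twice
print(remaining_bits(source, [upper_half, is_odd]))      # a new bit
```

Asking the same question twice leaves 1 bit to encode, exactly as asking it once; only a genuinely new question reduces the encoding further.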

Any piece of information is necessarily stored in memory on a material support composed of material units, called data-bits for convenience. A data-bit is a material object that displays two distinguishable stationary configurations (0 and 1). A data-bit can be duplicated, or copied; this increases the amount of memory used but leaves the quantity of information unchanged. In other words, one bit of information is necessarily stored in the form of a data-bit set to a particular value. Conversely, however, a data-bit set to a particular value is not always information, because many copies of a data-bit contain no more information than the original alone.

A data-bit is necessarily located somewhere, while a piece of information is not. Consider a newly acquired piece of information stored in the form of a given data-bit. Make a copy of it, which is by definition located elsewhere. As far as the information is concerned, the original has no special status compared with the copy. The original and the copy are two distinct physical objects, but they do not encode two distinct pieces of information. Erase the original or the copy: the information is still there. Both must be erased for the information to be irretrievably destroyed. If many copies of an original data-bit storing a piece of information exist, the corresponding information is not “diluted” or shared among the copies. Duplicating or erasing one copy, or the original, leaves the amount of information unchanged, provided that one copy still exists. Neither the original data-bit nor the copies contain a small portion of the information that could therefore be considered located on that data-bit. The same is true if an original data-bit is unique.

In computer-science terms, a data-bit is identified by a pointer, whereas a piece of information is identified by a value.
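The distinction between data-bit (pointer) and information (value) can be mimicked with a toy memory in Python, sketched below. The class and function names are hypothetical; a reset data-bit is represented by `None`, and the piece of information is taken to survive as long as at least one data-bit that recorded it has not been reset.

```python
class Memory:
    """Toy memory (hypothetical): each address, i.e. pointer, names one data-bit."""

    def __init__(self):
        self.cells = {}  # address -> 0 or 1, or None once reset

    def write(self, addr, value):
        self.cells[addr] = value

    def copy(self, src, dst):
        self.cells[dst] = self.cells[src]  # a new data-bit holding the same value

    def erase(self, addr):
        self.cells[addr] = None  # resets this data-bit only

    def data_bits_in_use(self):
        return sum(v is not None for v in self.cells.values())


def still_recoverable(memory, holders):
    """Hypothetical criterion: the information survives while any data-bit
    that recorded it has not been reset."""
    return any(memory.cells.get(a) is not None for a in holders)


m = Memory()
m.write("original", 1)
m.copy("original", "copy")
holders = ["original", "copy"]
bits_after_copy = m.data_bits_in_use()                # two data-bits ...
distinct_values = len({m.cells[a] for a in holders})  # ... but one value
m.erase("original")
after_first_erase = still_recoverable(m, holders)     # the copy still holds it
m.erase("copy")
after_second_erase = still_recoverable(m, holders)    # now it is gone
print(bits_after_copy, distinct_values, after_first_erase, after_second_erase)
```

Copying doubles the number of data-bits in use while the stored value stays the same; erasing the original alone loses nothing, and only erasing every copy does.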

It is true that any piece of information needs a material support in the form of data-bits, but this material support does not make the piece of information localized on this support. Although information requires matter, it is non-material and remains intangible and abstract. This may seem paradoxical, but other abstract physical concepts also require matter without ever being materialized: energy, fields, time, interactions (we are still looking for the graviton), and so on. Information is physical and needs a material embodiment, but that does not mean it should be confused with its physical embodiment, that is, with its physical support. The two must be clearly dissociated. This is a first misunderstanding to avoid.

Landauer contributes to the confusion, for instance: “Information is not a disembodied abstract entity; it is always tied to a physical representation. It is represented by engraving on a stone tablet, a spin, a charge, a hole in a punched card, a mark on paper, or some other equivalent” [73]. The fact that information can be embodied in multiple ways proves precisely that it is abstract and cannot be confused with its embodiment: the embodiment changes, but the information does not. Moreover, the fact that information must be embodied also proves that it is abstract: matter does not need to be embodied, nor does a data-bit.

6.2. (Ir)Recoverable Loss of Information Versus Thermodynamic (Ir)Reversibility

Another way to think about the crucial difference between abstract information and material data-bit is that a data-bit is a thermodynamic system in itself, whereas a piece of information is not.

In particular, a data-bit can undergo thermodynamic processes such as erasure, that is, processes that involve only the data-bit (the thermodynamic system) and the part of the surroundings that interacts with it. Given an initial state and a final state of the data-bit, whether or not the process is thermodynamically reversible depends solely on the heat exchanged with the surroundings, via the Clausius inequality (Equation (2)). For these two given states, the thermodynamic (ir)reversibility of a process is a property of the path.

By contrast, the irreparable destruction of a piece of information is conditional not only on the erasure of a given local data-bit, but also on the non-existence of a copy somewhere in the global system. Whether or not a local process causes a global loss of information depends on the initial and final states of the global system. The irreparable destruction of a piece of information that a process can cause is a property of these two states; it is not a property of the path [86].

In other words, we can erase a given data-bit in a thermodynamically reversible or irreversible way, but in the end, whichever way is used, the initial and final states of the global system are the same. Likewise, we may or may not irretrievably lose a piece of information by erasing a given data-bit. But if a possible copy lies far away from the erased data-bit, the local erasing process does not “know” whether or not the copy exists. Hence, the thermodynamic (ir)reversibility of the erasure is independent of the existence of the copy (unless we consider that everything interacts with everything, but then not only thermodynamics but all of physics would have to be reconstructed).

The thermodynamic (ir)reversibility of a process undergone by a given local data-bit and the (ir)recoverable loss of information of the global system [86] are totally independent (to say otherwise is either a mistake or stems from an implicitly different meaning given to the word information). This is due to the mere fact that information is a non-material abstraction, whereas a data-bit is not.
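The independence of the two criteria can be illustrated with the toy Python sketch below. It assumes, as in Landauer's setup, that resetting the data-bit lowers its entropy by k_B ln 2 between the given initial and final states, and it uses the convention that Q is the heat received by the data-bit, so that the Clausius inequality reads ΔS ≥ Q/T. The `extra_dissipation` parameter is a hypothetical knob standing for a less careful path, and the piece of information is identified simply by the value 1 somewhere in the global state.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
T = 300.0           # illustrative bath temperature, K
# Assumption (Landauer's setup): the reset lowers the data-bit's entropy
# by k_B ln 2 between the given initial and final states.
DELTA_S = -K_B * math.log(2)


def erase(state, addr, extra_dissipation=0.0):
    """Reset data-bit `addr` to 0 and return (new global state, Q), where Q
    is the heat received by the data-bit. `extra_dissipation` (J) is a
    hypothetical knob: 0 models the reversible path, > 0 a wasteful one."""
    new_state = dict(state)
    new_state[addr] = 0
    return new_state, T * DELTA_S - extra_dissipation


def is_reversible(q_received):
    """Clausius: DELTA_S >= Q/T, with equality only for a reversible path."""
    if DELTA_S < q_received / T - 1e-35:
        raise ValueError("Clausius inequality violated")
    return math.isclose(DELTA_S, q_received / T, rel_tol=1e-9)


def information_lost(initial, final, value=1):
    """Global criterion: looks only at the initial and final global states."""
    before = any(v == value for v in initial.values())
    after = any(v == value for v in final.values())
    return before and not after


results = {}
for label, initial in (("copy elsewhere", {"A": 1, "B": 1}),
                       ("no copy", {"A": 1, "B": 0})):
    for path, extra in (("reversible", 0.0), ("irreversible", 0.5 * K_B * T)):
        final, q = erase(initial, "A", extra)
        results[(label, path)] = (is_reversible(q), information_lost(initial, final))

for key, (rev, lost) in results.items():
    print(key, "thermodynamically reversible:", rev, "| information lost:", lost)
```

The reversibility verdict changes only with the path, and the information-loss verdict only with the presence of the distant copy: all four combinations occur.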

6.3. Logical Irreversibility: Equivocal Operation or Irrecoverable Loss of Information?

This leads us to introduce and clarify the term “logical irreversibility”, which is often used in relation to information and thermodynamic irreversibility.

Let us consider mathematical functions that apply to a finite subset of integers and can be entirely defined by a table consisting of two columns with input and output values. Bijections (one-to-one functions) allow the input value to be determined unequivocally from knowledge of the output. But mathematical functions like or are not bijections, so that a given output value does not unequivocally define an input. The function falls into this latter category, which will be called “equivocal” functions.
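For functions defined by a finite table, being one-to-one can be checked directly, and the set of inputs compatible with a given output shows how equivocal a function is. The helper names and the three tables in the Python sketch below are our own hypothetical examples, not the functions the text refers to.

```python
def is_one_to_one(table: dict) -> bool:
    """True if no two inputs share an output, i.e. the input can always be
    recovered unequivocally from the output."""
    return len(set(table.values())) == len(table)


def preimages(table: dict, output) -> list:
    """All inputs that the table maps to `output`."""
    return sorted(x for x, y in table.items() if y == output)


domain = range(4)  # a finite subset of the integers
shift = {x: (x + 1) % 4 for x in domain}  # hypothetical bijection
parity = {x: x % 2 for x in domain}       # hypothetical equivocal function
to_zero = {x: 0 for x in domain}          # "set to 0" as a pure table

for name, table in (("shift", shift), ("parity", parity), ("to_zero", to_zero)):
    print(name, is_one_to_one(table), preimages(table, 0))
```

The shift is one-to-one, so each output has exactly one preimage; parity and "set to 0" are equivocal, with two and four preimages for the output 0, respectively.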

When considering a function like erase (or set to 0), we are implicitly not considering an abstract mathematical function that applies to numbers having a given value. A number can neither be erased nor set to a particular value. The number or the number 2 cannot be erased. When considering erase, we are considering the physical implementation of an equivocal mathematical function in a computer, which applies to material entities such as data-bits. erase does not apply to a value but to the data-bit pointed to by its argument. Although the erase function is equivocal, whether or not it causes a loss of information depends on the existence of a copy of the erased data-bit (see the previous section).