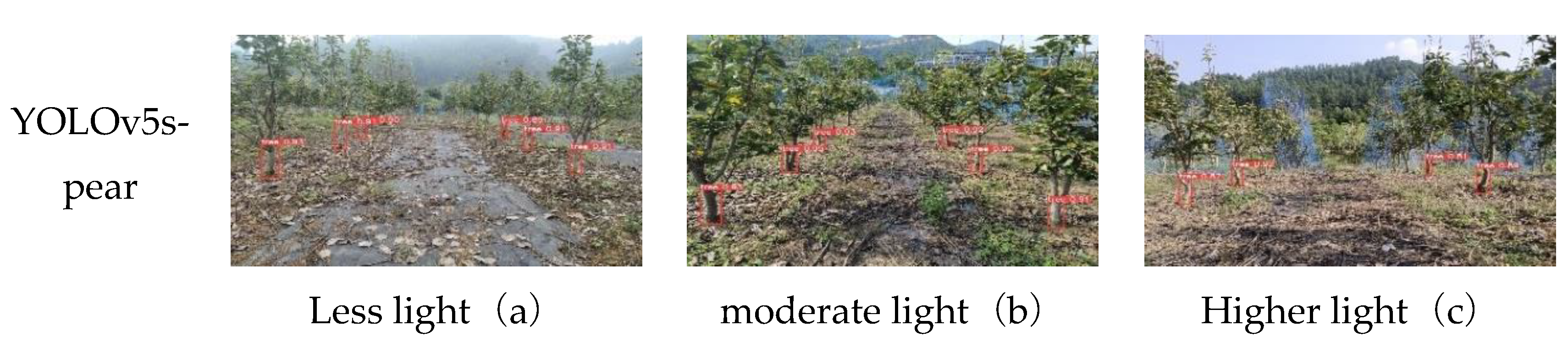

Based on the actual growth and distribution characteristics of pear tree trunks in the orchard, this paper selects YOLOv5s as the base network for pear tree trunk detection and further improves its detection accuracy by optimising the model structure. The trunk detection results are then used as inputs for locating the spatial coordinates of the pear tree trunks.

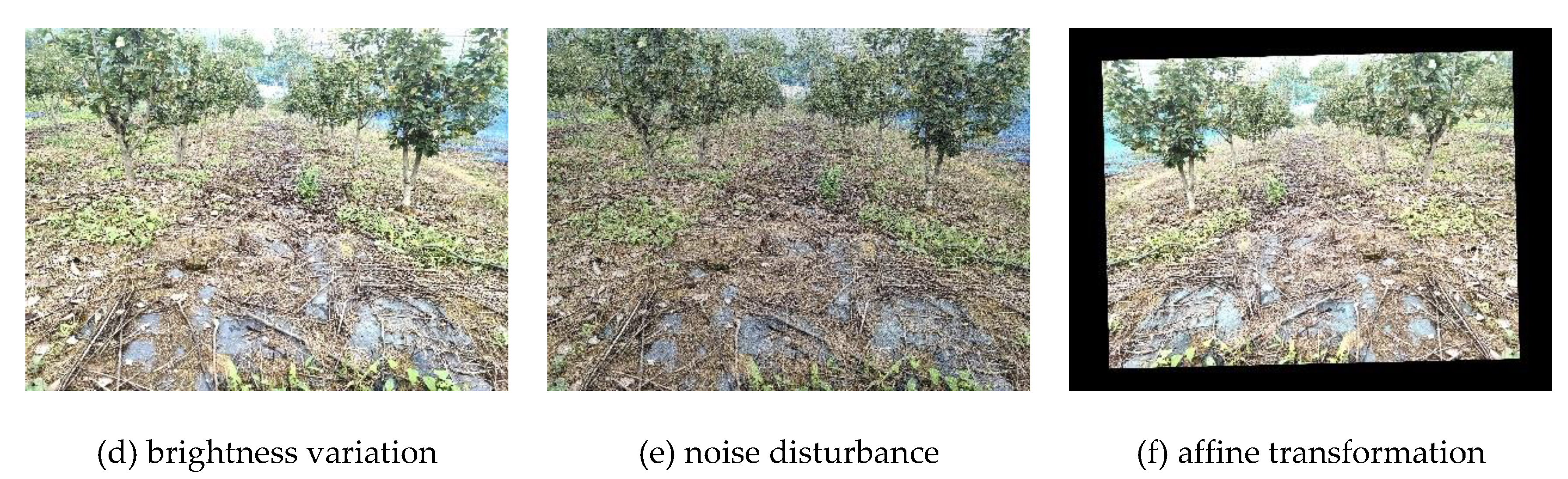

Combining the intrinsic and extrinsic parameters obtained from binocular camera calibration with the transformation between the pixel coordinate system and the world coordinate system, the spatial coordinates of pear tree trunks in the orchard environment can be obtained. Compared with the other YOLO-series models considered, YOLOv5s offers better detection accuracy and speed, so it is chosen as the base network for pear tree trunk detection; the structure of the improved model (YOLOv5s-pear) is shown in Figure 3. First, the C3 module in the penultimate layer of the backbone feature extraction network is replaced with the C3TR module to enhance the feature extraction capability of the backbone. Second, the CA attention mechanism is embedded to enhance the feature fusion and adaptation capability of the backbone. Third, the standard convolutions in the Neck are replaced with GSConv, and the standard convolutions inside the Neck's C3 modules are also replaced with GSConv (the resulting module is named C3GS); this reduces the number of model parameters and improves detection speed without affecting detection accuracy. Fourth, the feature pyramid structure in the Neck is replaced with a bidirectional feature pyramid network (BiFPN) to improve detection of multi-scale targets in complex environments. Next, the trunk detection results are used to obtain a positioning reference point for each pear tree trunk, and the actual distance between the binocular camera and the trunk is calculated using the calibrated intrinsic and extrinsic parameters of the binocular camera. Finally, using the transformation between the pixel coordinate system and the world coordinate system, the pixel coordinates of each trunk's positioning reference point are converted into the actual spatial coordinates of the trunk in the orchard environment.

2.2.1. Pear Tree Trunk Detection in Orchard Environment Based on Improved YOLOv5s

Among YOLO-series models, YOLOv5s balances detection accuracy and speed well and can be adapted to object detection in different environments. Its structure consists of the Input, Backbone, Neck and Head. In the improved model, the backbone comprises CBS, C3TR and CA modules and is responsible for extracting feature information of foreground objects in the image. To strengthen trunk feature extraction in large, complex scenes and to reduce the impact of branch and leaf occlusion, exposure interference and similar factors on trunk detection accuracy, targeted improvements were made to YOLOv5s. Images captured against the light in the open-air orchard show that, under strong exposure, trunk features cannot be clearly characterised, which seriously reduces the detection accuracy of YOLOv5s. Therefore, following the improvement scheme shown in Figure 4, the C3 module in the penultimate layer of the backbone is replaced by the C3TR module and the CA (Coordinate Attention) mechanism is added at the end of the backbone; the standard convolutions in the Neck are replaced by GSConv, and the standard convolutions in the Neck's C3 modules are also replaced by GSConv (named C3GS); and the feature pyramid structure in the Neck is replaced by a BiFPN structure. These changes enhance trunk feature extraction in the complex orchard environment and reduce the influence of occlusion and exposure interference on detection accuracy. The improved model is named YOLOv5s-pear.

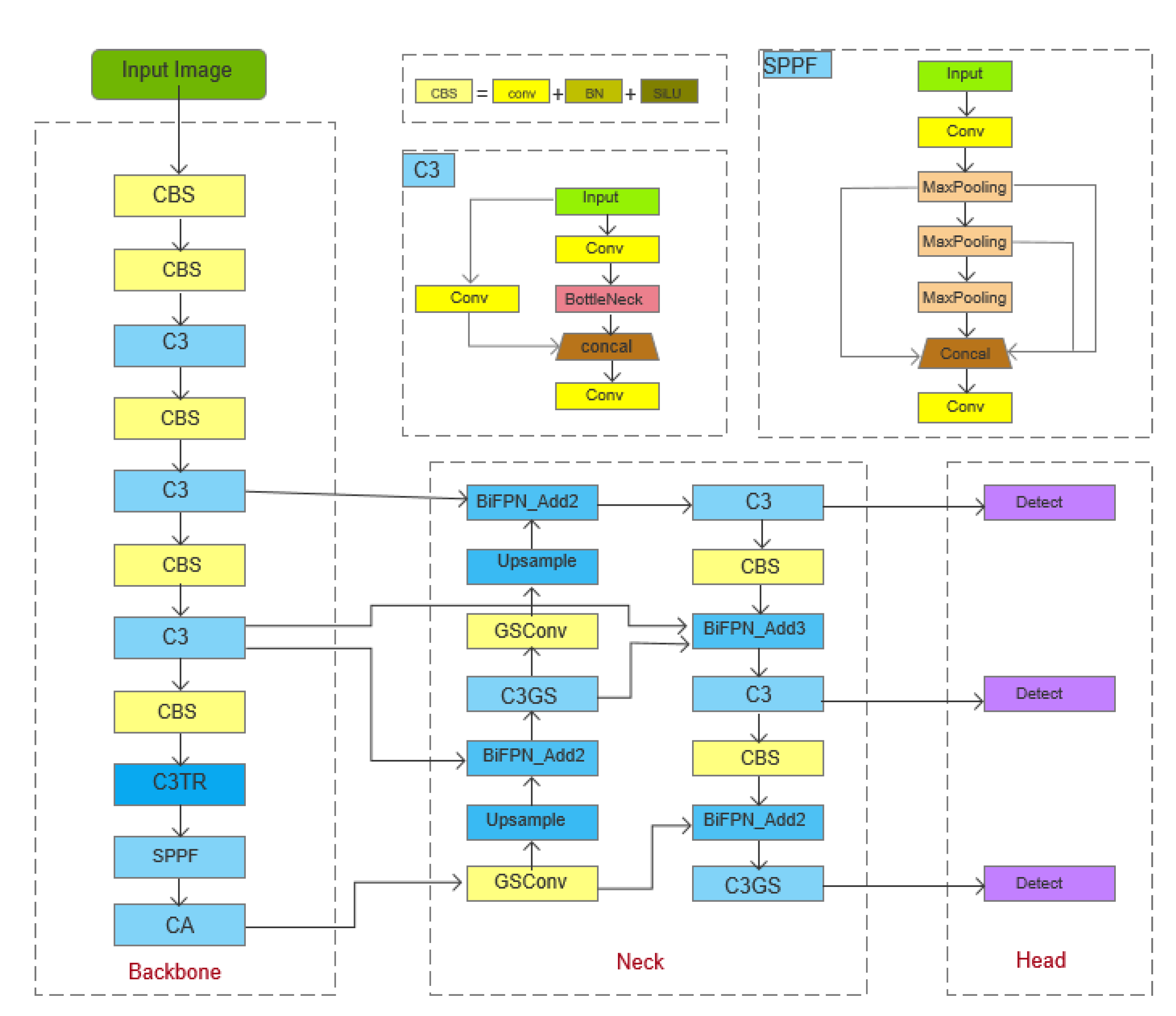

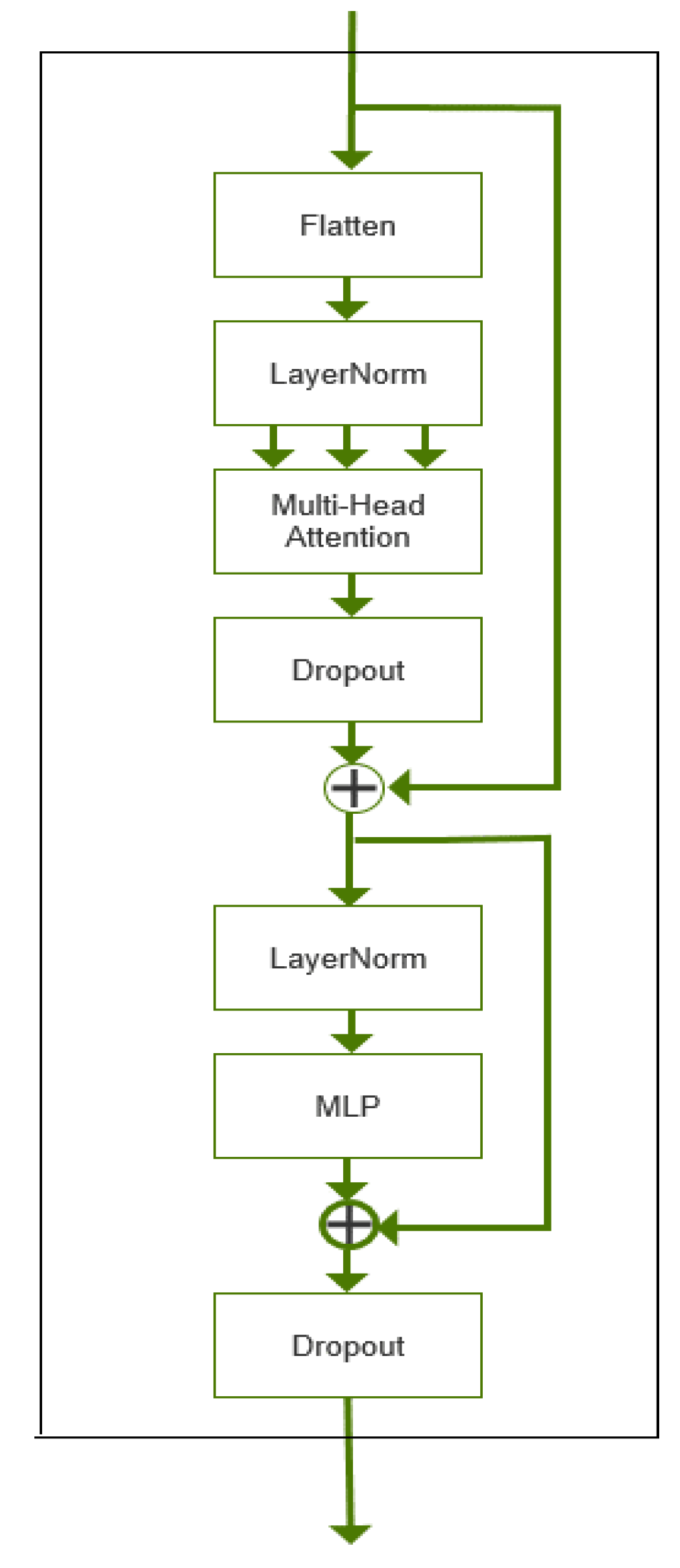

In this paper, the Bottleneck modules inside a C3 module are replaced with a Transformer module, forming the C3TR module; the structure of the Transformer module is shown in Figure 5. Replacing the convolutional Bottleneck with a Transformer encoder allows richer image information to be extracted. The Transformer encoder module is placed in the last C3 module of the YOLOv5s backbone, which improves the model's ability to extract features of the trunk region in complex environments with almost no increase in computational cost.
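To make the C3TR idea more concrete, the sketch below shows the core of a Transformer encoder layer applied to a convolutional feature map: the C × H × W map is flattened into H·W tokens of dimension C, passed through self-attention with a residual connection, and then through two stacked linear maps with another residual. It is a simplified single-head NumPy sketch with random, untrained weights; the weight names (`wq`, `wk`, `wv`, `wo`, `fc1`, `fc2`) and sizes are hypothetical, and it follows the general layout of the YOLOv5 `TransformerLayer` (which omits LayerNorm) only in outline, not as the authors' code.

```python
import numpy as np

def softmax(x, axis=-1):
    # numerically stable softmax
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def transformer_layer(fmap, params):
    """Single-head Transformer encoder layer on a (C, H, W) feature map (sketch)."""
    c, h, w = fmap.shape
    tokens = fmap.reshape(c, h * w).T                 # (H*W, C): one token per pixel
    q = tokens @ params["wq"]
    k = tokens @ params["wk"]
    v = tokens @ params["wv"]
    attn = softmax(q @ k.T / np.sqrt(c))              # (H*W, H*W): every pixel attends to every pixel
    tokens = attn @ v @ params["wo"] + tokens         # self-attention + residual
    tokens = tokens @ params["fc1"] @ params["fc2"] + tokens  # two linear maps (no activation) + residual
    return tokens.T.reshape(c, h, w)                  # back to (C, H, W)

rng = np.random.default_rng(0)
C, H, W = 8, 4, 5
params = {name: rng.standard_normal((C, C)) * 0.1     # hypothetical random weights
          for name in ("wq", "wk", "wv", "wo", "fc1", "fc2")}
x = rng.standard_normal((C, H, W))
y = transformer_layer(x, params)
print(y.shape)  # (8, 4, 5)
```

The global attention matrix is what gives the layer its whole-image receptive field; because it grows as (H·W)², placing it only in the last backbone C3 module, where the feature map is smallest, keeps the extra cost low.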

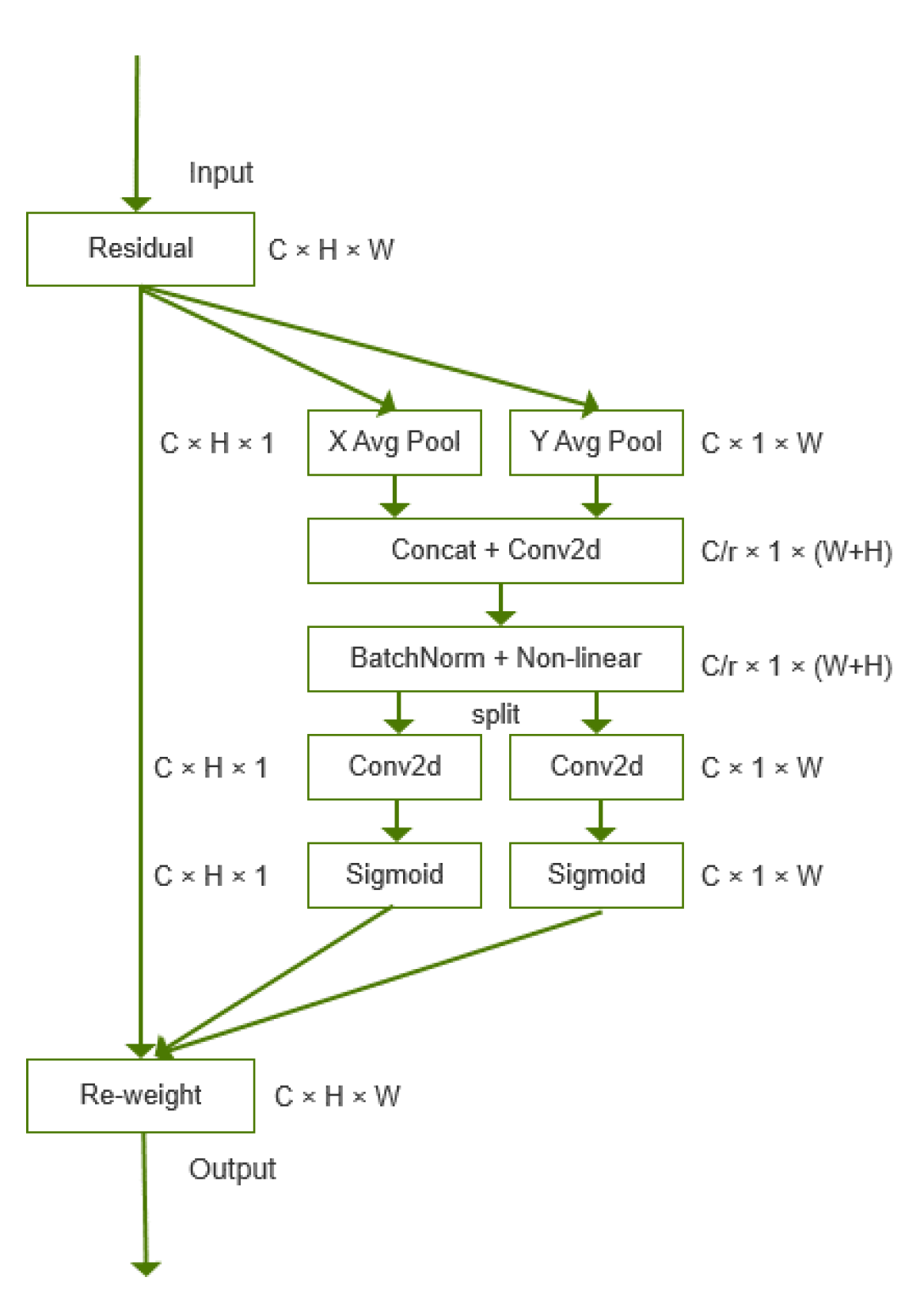

The CA attention mechanism is a hybrid-domain attention mechanism [16]. Adding the CA module to the last layer of the YOLOv5s backbone captures both cross-channel and location-sensitive information, which helps the model locate and identify objects more accurately. Compared with other attention mechanisms, CA is relatively lightweight, can easily be inserted into the YOLOv5s detection model, and does not significantly increase the computational overhead. The CA structure is shown in Figure 6.

The CA attention mechanism is implemented in two steps. In the first step, given an input feature map $X$ with $C$ channels, height $H$ and width $W$, average pooling is performed horizontally and vertically using pooling kernels of size $(H, 1)$ and $(1, W)$, respectively. The outputs of the $c$-th channel at height $h$ and at width $w$ are

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i), \qquad z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w)$$

In the second step, the feature maps in the height and width directions are concatenated and passed through a shared 1 × 1 convolution $F_1$ followed by a non-linear activation $\delta$, giving an intermediate feature map $f$ of shape $C/r \times 1 \times (H + W)$, where $C$ is the number of channels of the input feature map and $r$ is a reduction ratio used to reduce the number of channels:

$$f = \delta\left(F_1\left(\left[z^h, z^w\right]\right)\right)$$

The feature map $f$ is then split according to the original height and width into $f^h$ and $f^w$, and each part is passed through its own 1 × 1 convolution and a Sigmoid function to obtain the attention weights in the height and width directions:

$$g^h = \sigma\left(F_h\left(f^h\right)\right), \qquad g^w = \sigma\left(F_w\left(f^w\right)\right)$$

Finally, the original feature map is multiplied by the attention weights in both directions to obtain the output feature map:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$
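The two CA steps described above can be sketched in NumPy as follows. This is a minimal illustration under several assumptions: 1 × 1 convolutions are written as plain matrix products, batch normalisation is omitted, the intermediate activation is assumed to be hard-swish (as in the reference CA implementation), and the weights `w1`, `wh`, `ww` are hypothetical random values rather than trained parameters.

```python
import numpy as np

def h_swish(x):
    # hard-swish non-linearity: x * ReLU6(x + 3) / 6 (assumed choice of delta)
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def coordinate_attention(x, w1, wh, ww):
    """Coordinate Attention on a (C, H, W) map; 1x1 convs are plain matrices (sketch)."""
    h = x.shape[1]
    z_h = x.mean(axis=2)                       # (C, H): average over the width
    z_w = x.mean(axis=1)                       # (C, W): average over the height
    z = np.concatenate([z_h, z_w], axis=1)     # (C, H + W): concatenated descriptors
    f = h_swish(w1 @ z)                        # shared 1x1 conv, C -> C/r channels
    f_h, f_w = f[:, :h], f[:, h:]              # split back into the two directions
    g_h = sigmoid(wh @ f_h)                    # (C, H) attention weights along the height
    g_w = sigmoid(ww @ f_w)                    # (C, W) attention weights along the width
    return x * g_h[:, :, None] * g_w[:, None, :]

rng = np.random.default_rng(0)
C, H, W, r = 16, 6, 8, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1    # hypothetical weights
wh = rng.standard_normal((C, C // r)) * 0.1
ww = rng.standard_normal((C, C // r)) * 0.1
y = coordinate_attention(x, w1, wh, ww)
print(y.shape)  # (16, 6, 8)
```

Because every gate lies in (0, 1), CA can only re-weight positions and channels, never amplify them; the row and column gates together act like a soft mask that can emphasise a thin vertical object such as a trunk.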

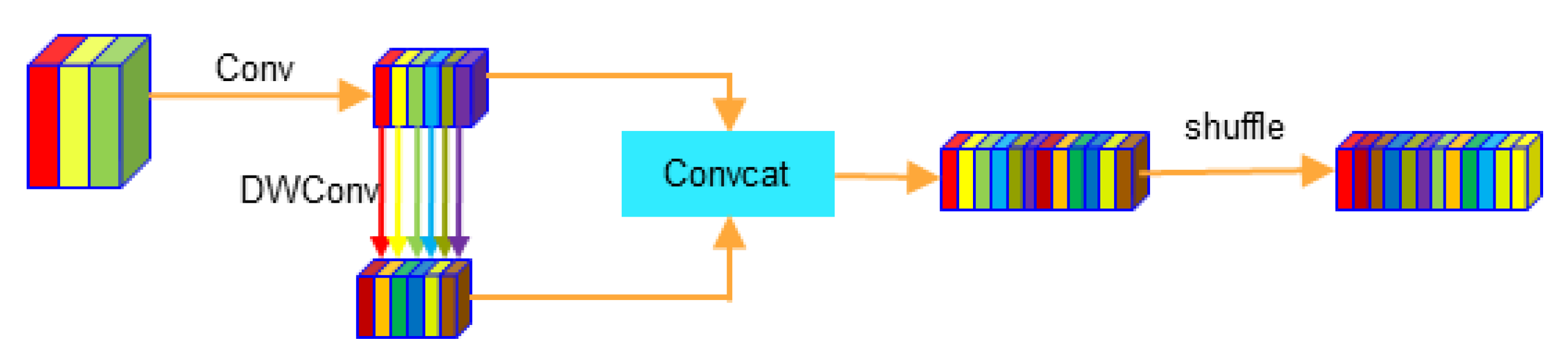

GSConv combines the advantages of standard convolution and depthwise separable convolution. Through a channel shuffle operation, GSConv lets information flow between different convolution groups, retaining much of the feature extraction and fusion ability of standard convolution while achieving a lightweight design [17]. GSConv is used in the Neck to replace standard convolution layers because, by the time feature maps reach the Neck, their channel dimension is at its largest and their width and height are at their smallest; processing these maps with GSConv leaves little redundant information and requires no further compression, so the efficiency of the convolution layers improves with little or no loss in feature quality [18]. In this paper, the standard convolution modules in the Neck are replaced with GSConv, whose network structure is shown in Figure 7.

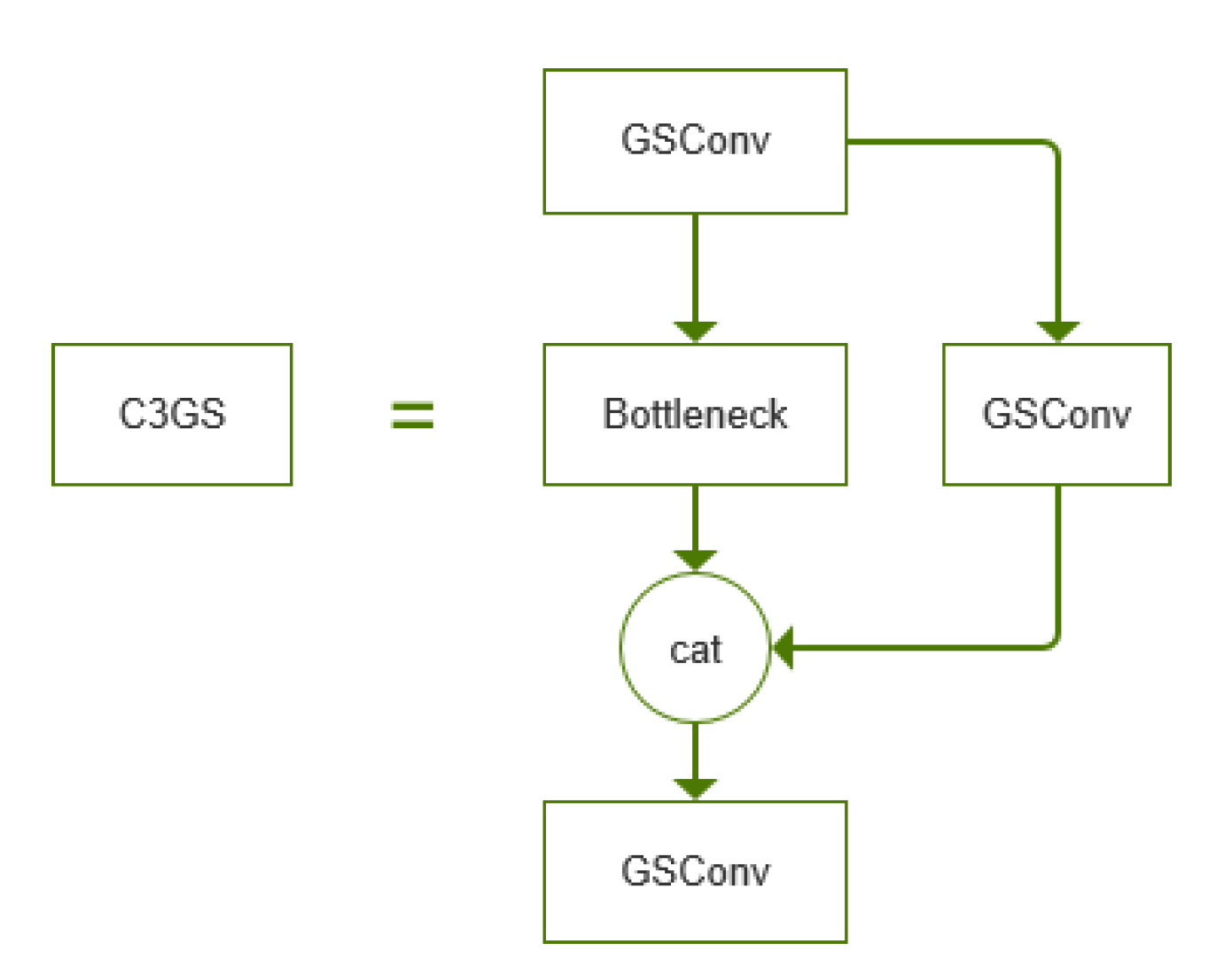

GSConv is implemented as follows. First, a standard convolution is applied to the input feature map, producing half of the target number of output channels. Second, a depthwise convolution is applied to the output of the standard convolution to produce the other half of the channels. Third, the results of the two convolutions are concatenated along the channel dimension. Finally, a shuffle operation is applied to the concatenated feature map so that the information from the standard convolution and the depthwise convolution is exchanged and fused across channels. To further improve the computational cost-effectiveness of the network, this paper also replaces the standard convolutions in the Neck's C3 modules with GSConv and names the result C3GS; the C3GS network structure is shown in Figure 8.
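The four GSConv steps above can be sketched in NumPy as follows. This is a simplified illustration, not the reference implementation: the standard convolution is reduced to a 1 × 1 convolution without stride, batch normalisation or activation; the depthwise kernel size of 3 × 3 is an assumption; and the shuffle is a ShuffleNet-style two-group interleave, which may differ in detail from the permutation used in the official GSConv code. All weights are hypothetical.

```python
import numpy as np

def pointwise_conv(x, w):
    # 1x1 "standard" convolution: x is (C_in, H, W), w is (C_out, C_in)
    return np.einsum("oc,chw->ohw", w, x)

def depthwise_conv(x, k):
    # one K x K filter per channel, zero 'same' padding; k is (C, K, K) with odd K
    c, h, w = x.shape
    p = k.shape[1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c, h, w))
    for i in range(k.shape[1]):
        for j in range(k.shape[2]):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out

def channel_shuffle(x, groups=2):
    # interleave channels: [a0..a(m-1), b0..b(m-1)] -> [a0, b0, a1, b1, ...]
    c, h, w = x.shape
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)

def gsconv(x, w_std, k_dw):
    x1 = pointwise_conv(x, w_std)          # step 1: standard conv -> half of the output channels
    x2 = depthwise_conv(x1, k_dw)          # step 2: depthwise conv on x1 -> the other half
    y = np.concatenate([x1, x2], axis=0)   # step 3: concatenate along the channel dimension
    return channel_shuffle(y, groups=2)    # step 4: mix standard and depthwise features

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))
w_std = rng.standard_normal((4, 4))        # 4 channels from step 1 -> 8 output channels in total
k_dw = rng.standard_normal((4, 3, 3))
print(gsconv(x, w_std, k_dw).shape)        # (8, 6, 6)
```

The saving comes from step 2: the depthwise convolution costs only K² multiplications per output pixel and channel, instead of K² × C_in for a full convolution, so only half of the output channels pay the full standard-convolution price.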

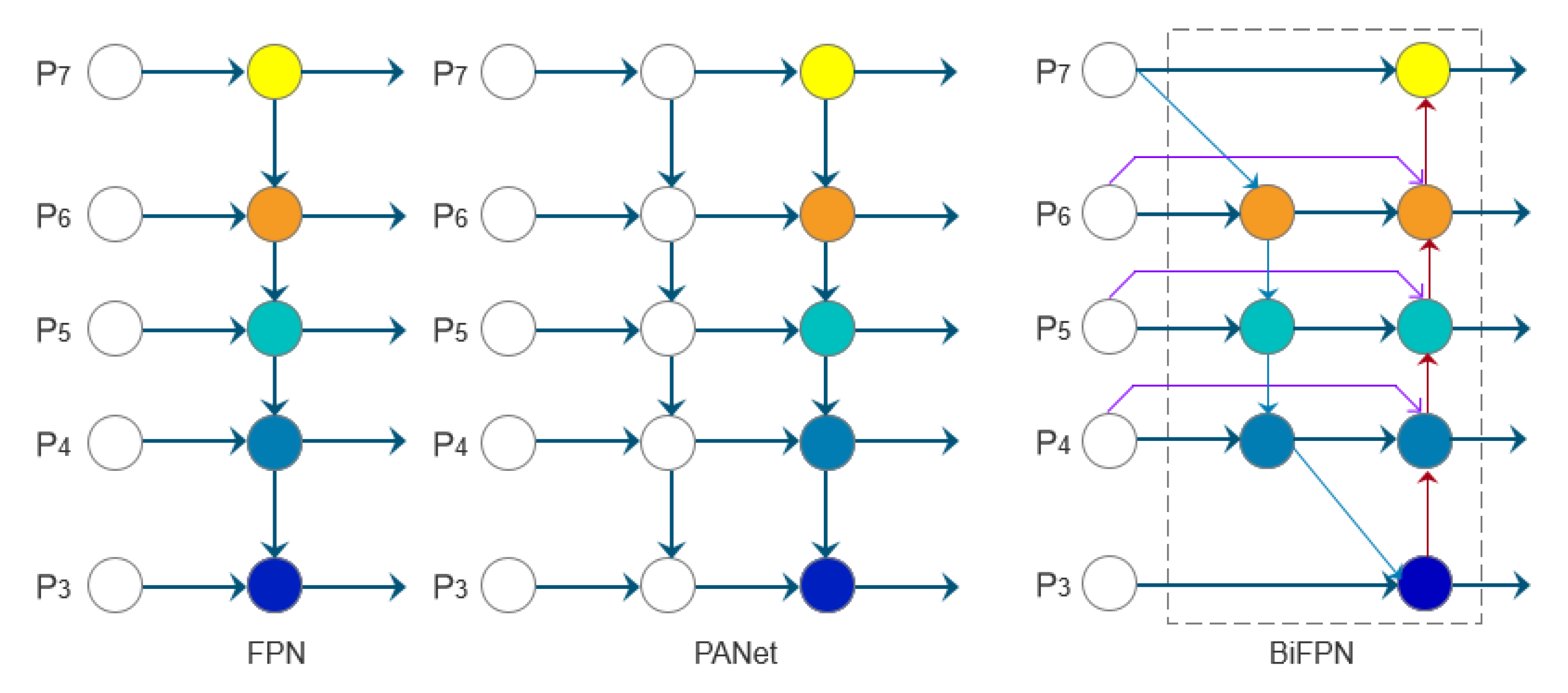

Because input features at different scales contribute differently to the final output, this paper introduces a bidirectional feature pyramid network (BiFPN) into the feature network to balance the contribution of each feature layer; its network structure is shown in Figure 9. The main optimisations are threefold: first, nodes with only one input edge, which perform no feature fusion, are removed to simplify the network without compromising performance; second, extra edges are added between the original input and output nodes at the same level to fuse more features without significantly increasing computational cost; and third, each bidirectional (top-down and bottom-up) path is treated as one feature network layer and repeated multiple times to achieve higher-level feature fusion. Compared with PANet, BiFPN uses fast normalised fusion, which introduces learnable weights to balance the contributions of different input features. These weights are normalised to the range [0, 1] by dividing each by their sum rather than by applying a softmax, which improves computational speed and efficiency.
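Fast normalised fusion, as defined for BiFPN in EfficientDet, computes O = Σ_i w_i · I_i / (ε + Σ_j w_j), where each learnable weight is passed through a ReLU so that it stays non-negative. The NumPy sketch below applies it to inputs that are assumed to have already been resized to a common shape; the weight values are arbitrary examples rather than trained ones, and ε = 1e-4 follows the value suggested in the EfficientDet paper.

```python
import numpy as np

def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """O = sum_i w_i / (eps + sum_j w_j) * I_i, with w_i kept non-negative by ReLU."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU keeps every weight >= 0
    w = w / (eps + w.sum())                                # each normalised weight lies in [0, 1]
    return sum(wi * xi for wi, xi in zip(w, inputs))

# two same-shape inputs, e.g. a lateral feature and an upsampled top-down feature
p_in = np.full((2, 2), 1.0)
p_up = np.full((2, 2), 5.0)
print(fast_normalized_fusion([p_in, p_up], weights=[1.0, 3.0]))
```

With weights 1 and 3 the output is close to (1 × 1 + 3 × 5) / 4 = 4, i.e. the upsampled feature dominates; during training these scalars are learned, which is how BiFPN lets each node decide how much each scale contributes.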

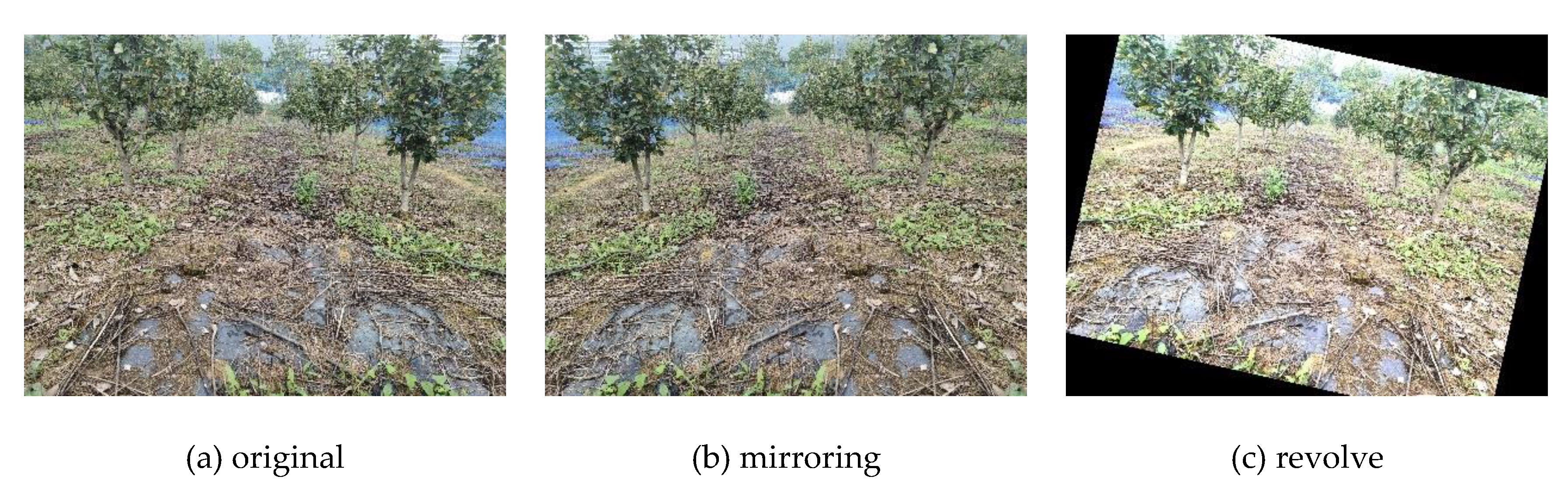

The pear tree trunk dataset was labelled manually, image by image, with the graphical annotation tool LabelImg and saved as XML files in PASCAL VOC format; each XML file contains the pixel coordinates of the trunk bounding boxes used for network training. To achieve accurate trunk detection, the following labelling rules were applied: the bounding box is the minimum enclosing rectangle of the trunk and matches the trunk's actual extent, which improves the accuracy of trunk positioning; and the complete trunk is enclosed in the box, with the junction between the base of the trunk and the soil lying close to the midpoint of the bottom edge of the box. Example labelling results are shown in Figure 10.
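The annotations described above can be read back with Python's standard library, for example to check the labelling rules before training. The sketch below assumes the usual PASCAL VOC layout written by LabelImg (`object` → `name`, `bndbox` → `xmin`/`ymin`/`xmax`/`ymax`); the class name `trunk`, the file name and the coordinates in the sample are hypothetical.

```python
import xml.etree.ElementTree as ET

def read_voc_boxes(xml_text, label="trunk"):
    """Return [(xmin, ymin, xmax, ymax), ...] for objects named `label` in a VOC XML string."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        if obj.findtext("name") != label:
            continue  # skip objects of other classes
        bb = obj.find("bndbox")
        boxes.append(tuple(int(float(bb.findtext(t))) for t in ("xmin", "ymin", "xmax", "ymax")))
    return boxes

# hypothetical annotation in the format LabelImg saves
sample = """<annotation>
  <filename>pear_0001.jpg</filename>
  <size><width>1280</width><height>720</height><depth>3</depth></size>
  <object>
    <name>trunk</name>
    <bndbox><xmin>412</xmin><ymin>180</ymin><xmax>468</xmax><ymax>655</ymax></bndbox>
  </object>
</annotation>"""
print(read_voc_boxes(sample))  # [(412, 180, 468, 655)]
```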

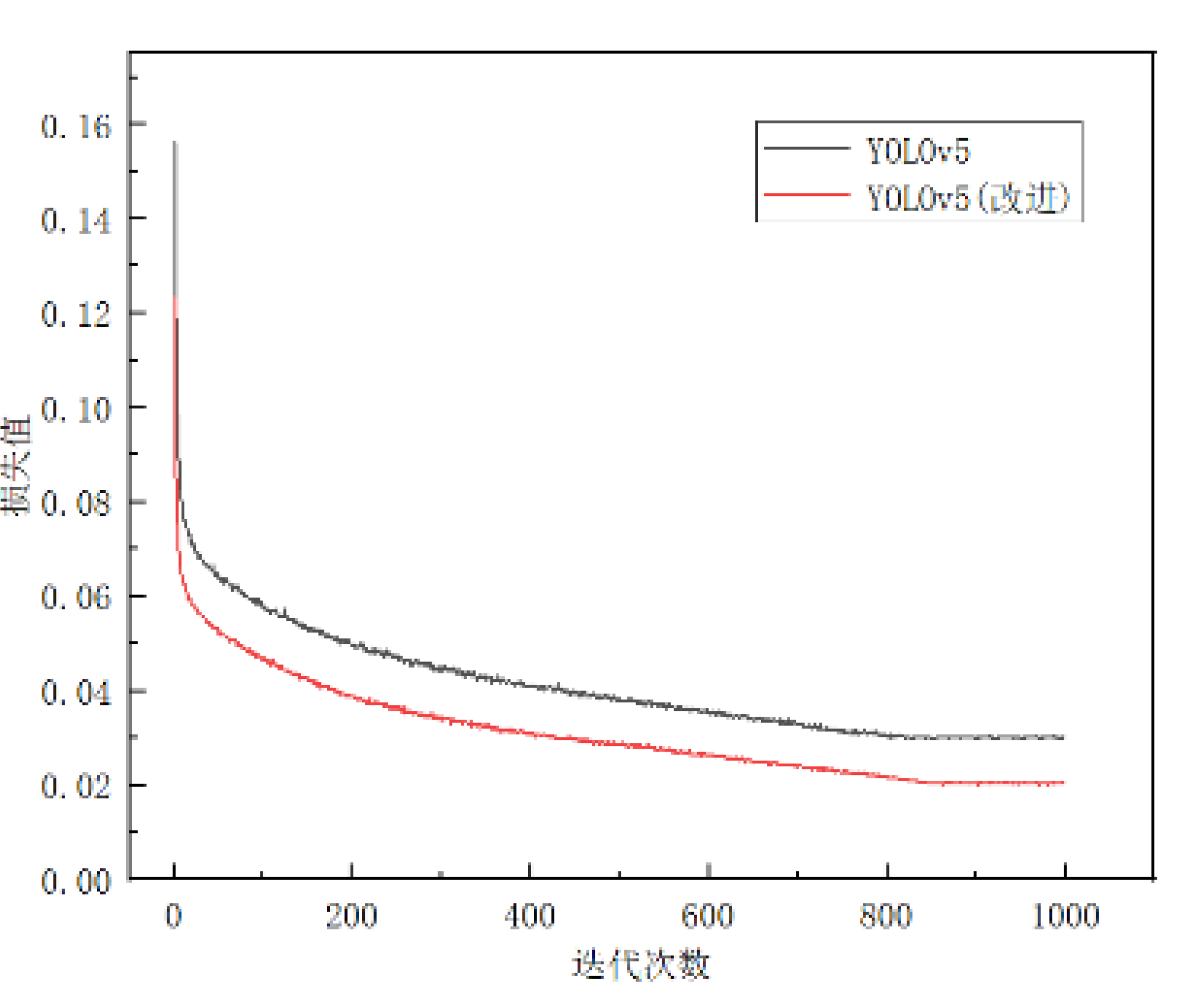

The deep learning experiments were run with Ubuntu 20.04, Python 3.9, PyTorch 2.0.0 and CUDA 11.7. The hardware configuration was an Intel Core 12400 CPU and a GeForce RTX 3060 graphics card with 12 GB of video memory. Training used a batch size of 16 and a stochastic gradient descent (SGD) optimiser with an initial learning rate of 0.01, a minimum learning rate of 0.0001, a momentum of 0.957 and a weight decay of 0.0005. As Figure 11 shows, the loss decreases sharply as the number of iterations increases early in training, is basically stable by about 300 epochs, and has fully stabilised by 800 epochs; the total loss of YOLOv5s converges to about 0.03, and that of YOLOv5s-pear to about 0.02.
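The text gives the start and end learning rates but not the decay schedule or the total number of epochs. The sketch below collects the stated hyperparameters and assumes a cosine decay between the two learning rates over a hypothetical `EPOCHS`; it is an illustration only. If the standard YOLOv5 training script was used, the end value would instead be expressed as lr0 × lrf with lrf = 0.01, and that script's default decay is linear unless cosine scheduling is enabled.

```python
import math

# Values stated in the text; EPOCHS is a hypothetical placeholder (not given).
HYP = {"batch_size": 16, "optimizer": "SGD", "lr0": 0.01, "lr_min": 0.0001,
       "momentum": 0.957, "weight_decay": 0.0005}
EPOCHS = 1000  # hypothetical

def cosine_lr(epoch, total_epochs, lr0, lr_min):
    """Assumed cosine decay from lr0 (epoch 0) to lr_min (last epoch)."""
    t = min(max(epoch / total_epochs, 0.0), 1.0)
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + math.cos(math.pi * t))

for epoch in (0, 300, 800, EPOCHS):
    print(epoch, f"{cosine_lr(epoch, EPOCHS, HYP['lr0'], HYP['lr_min']):.6f}")
```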

After training of the YOLOv5s-pear model was completed, its performance was verified on the validation set; the results are shown in Figure 12.

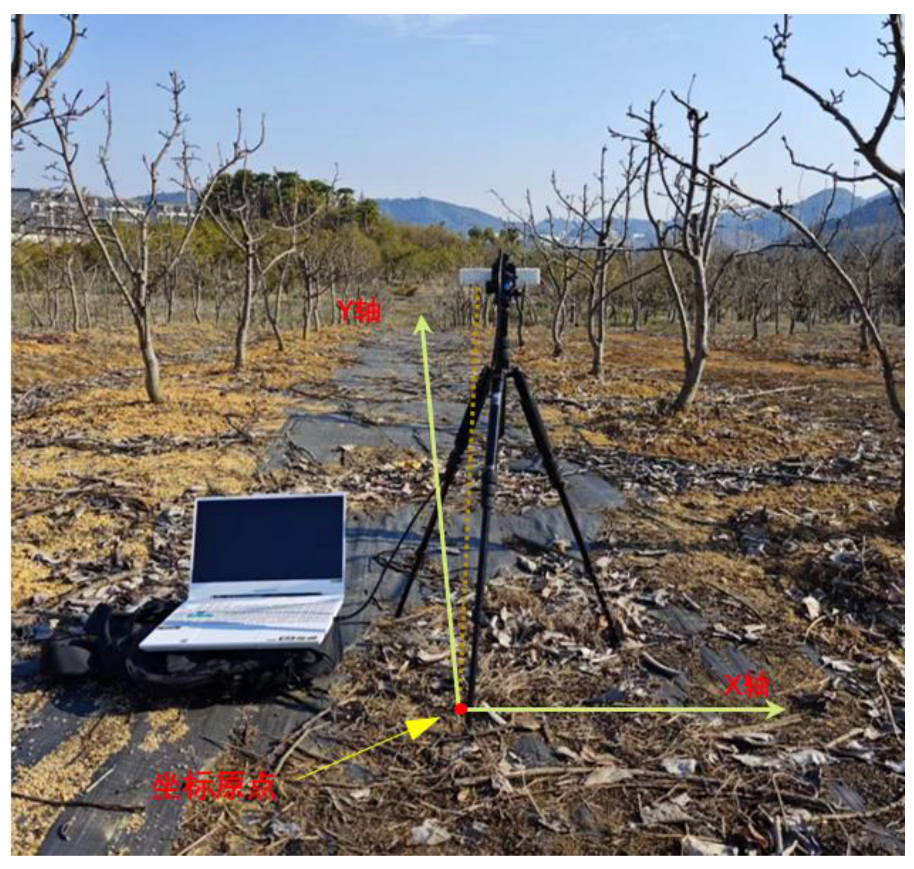

2.2.2. 3D Localisation of Pear Tree Trunks in an Orchard Environment

To achieve spatial localisation of pear tree trunks in complex background environments based on pear tree trunk target detection, it is necessary to find the coordinate information of pear tree trunks, determine the distance between pear tree trunks and the optical centre of the camera (depth information), and use the transformation relationship between the world coordinate system and the pixel coordinate system to convert the coordinates of the pear tree trunks in the pixel coordinate system to the coordinates in the world coordinate system. The 3D spatial coordinate information of the pear tree trunk is then obtained by combining the acquired depth information of the pear tree trunk with the stereo imaging principle of the binocular camera.

To obtain the distance (depth information) between the pear tree trunk and the optical centre of the camera, the binocular camera is used to acquire the trunk's depth following the stereo imaging principle of the binocular camera and the rule of similar triangles, as shown in Figure 13.
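For a rectified binocular pair, the similar-triangles relation can be written out explicitly. The symbols here are our own notation and may not match Figure 13: $f$ is the focal length in pixels, $B$ the baseline between the two optical centres, $X$ and $Z$ the lateral position and depth of a point in the left camera frame, and $x_l$, $x_r$ its horizontal image coordinates measured from each camera's principal point.

$$
\frac{x_l}{f} = \frac{X}{Z}, \qquad \frac{x_r}{f} = \frac{X - B}{Z}
\;\;\Longrightarrow\;\;
d = x_l - x_r = \frac{fB}{Z}
\;\;\Longrightarrow\;\;
Z = \frac{fB}{d}
$$

Depth is therefore inversely proportional to the disparity $d$. Differentiating gives $\lvert \Delta Z \rvert \approx \frac{Z^2}{fB}\lvert \Delta d \rvert$, so a fixed disparity error produces a depth error that grows with the square of the distance, which is why accurate calibration matters more for distant trunks.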

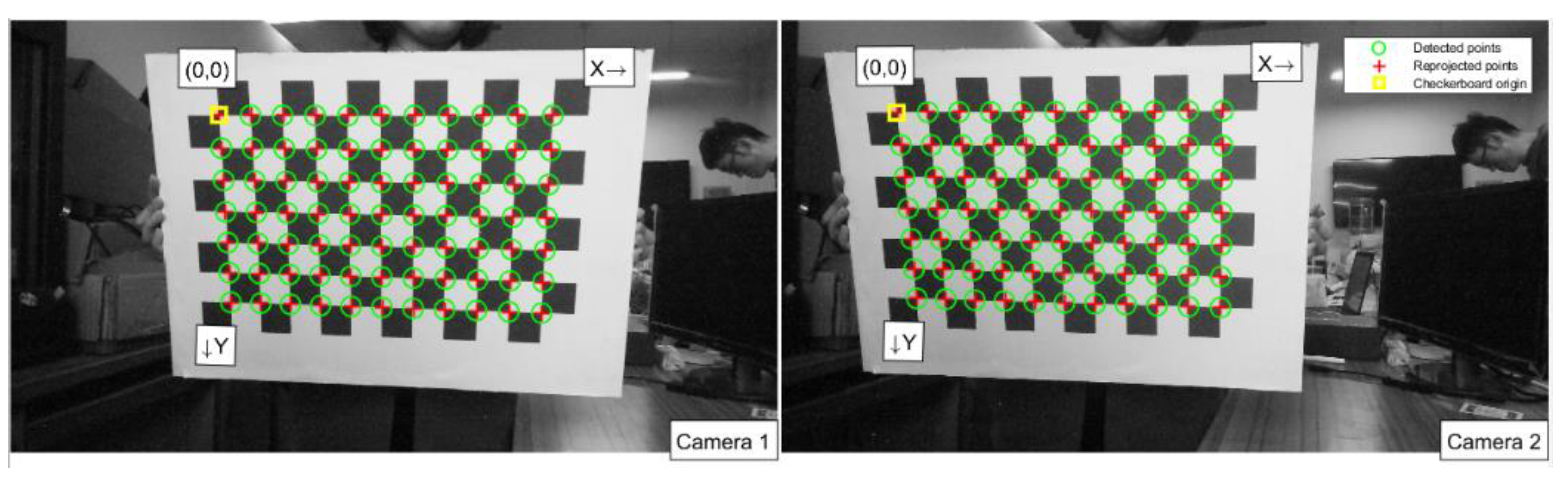

In this paper, the binocular camera is calibrated with the Camera Calibration Toolbox in MATLAB R2021b, which offers higher accuracy and greater robustness than manual calibration. Because the measurement distance in the experiments is relatively large, a larger calibration board was used to ensure trunk positioning accuracy: an 8 × 12 checkerboard with a side length of 40 mm per square, roughly A3 in size. An example of the calibration is shown in Figure 14.
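Checkerboard calibration needs the 3D coordinates of the board's inner corners in the board's own plane. The sketch below generates them, assuming that "8 × 12" counts squares (so the board has 7 × 11 inner corners) and using the stated 40 mm square size; if the count instead refers to inner corners, the arguments change accordingly. A list like this, converted to a float32 array, is the kind of object-point input that OpenCV's `cv2.stereoCalibrate` expects if the calibration were reproduced outside MATLAB.

```python
def checkerboard_object_points(inner_cols, inner_rows, square_mm):
    """3D corner coordinates (mm) on the board plane Z = 0, listed row by row."""
    return [(c * square_mm, r * square_mm, 0.0)
            for r in range(inner_rows) for c in range(inner_cols)]

# Assumption: "8 x 12" counts squares, so the board has 7 x 11 inner corners.
pts = checkerboard_object_points(inner_cols=11, inner_rows=7, square_mm=40.0)
print(len(pts), pts[-1])            # 77 (400.0, 240.0, 0.0)
print(8 * 40, "x", 12 * 40, "mm")   # 320 x 480 mm pattern area
```

Under this reading the printed pattern covers 320 × 480 mm, slightly larger than an A3 sheet (297 × 420 mm), which is consistent with the "roughly A3" description.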

The binocular camera calibration results are shown in Table 2.

After the intrinsic and distortion parameters of the binocular camera are obtained, the three-dimensional spatial information of the pear tree trunk is recovered by converting between the pixel, image, camera and world coordinate systems using the camera's pinhole imaging model and matrix operations. A schematic diagram of the coordinate system conversion is shown in Figure 15.
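Under the pinhole model, a pixel (u, v) with known depth Z maps to camera coordinates X = (u − c_x)Z / f_x and Y = (v − c_y)Z / f_y, and camera coordinates map to world coordinates by inverting the extrinsic transform. The sketch below assumes undistorted (rectified) pixel coordinates and the convention P_cam = R · P_world + t; the intrinsic values are hypothetical stand-ins for the calibrated ones in Table 2.

```python
def pixel_to_camera(u, v, z, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth z into camera coordinates (pinhole model)."""
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)

def camera_to_world(p_cam, R, t):
    """Invert P_cam = R @ P_world + t, i.e. P_world = R^T (P_cam - t); R is a 3x3 rotation."""
    d = [p_cam[i] - t[i] for i in range(3)]
    return tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3))

# Hypothetical intrinsics (pixels); real values come from calibration.
fx = fy = 1000.0
cx, cy = 640.0, 360.0
p_cam = pixel_to_camera(740.0, 560.0, 2000.0, fx, fy, cx, cy)
print(p_cam)  # (200.0, 400.0, 2000.0)
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # hypothetical extrinsics: identity
t = [0.0, 0.0, 0.0]
print(camera_to_world(p_cam, R, t))
```

Using R^T instead of a general matrix inverse relies on R being a rotation (orthonormal), which holds for calibrated extrinsics.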

In this paper, the midpoint of the lower border of the trunk bounding box output by target recognition is chosen as the positioning point of the pear tree trunk. With the upper-left corner of the box at $(x_1, y_1)$ and the lower-right corner at $(x_2, y_2)$, the coordinates $(u_0, v_0)$ of the midpoint of the lower border are calculated as follows:

$$u_0 = \frac{x_1 + x_2}{2}, \qquad v_0 = y_2 \tag{1}$$
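The bottom-midpoint rule can be written as a one-line helper. The sketch assumes boxes in (x1, y1, x2, y2) corner format, as commonly produced by YOLOv5 post-processing, in pixel coordinates where y grows downward; the box values are hypothetical.

```python
def bottom_midpoint(box):
    """Positioning point of a trunk: midpoint of the lower edge of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    # image y grows downward, so the lower edge of the box is at the larger y value
    return ((x1 + x2) / 2.0, max(y1, y2))

print(bottom_midpoint((412, 180, 468, 655)))  # (440.0, 655)
```

Taking `max(y1, y2)` rather than `y2` alone makes the helper tolerant of boxes whose corners arrive in the opposite order; with well-formed boxes it reduces to the lower-right y value.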

The extracted trunk positioning points are marked with blue dots, as shown in Figure 16.

To locate a pear tree trunk, the binocular camera uses the pixel coordinates of the midpoint of the lower border of the detection box output by the improved YOLOv5s model. After the SGBM algorithm successfully matches the same trunk in the left and right images and yields its disparity, the trunk's position in the camera coordinate system is calculated by binocular triangulation.
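The text names SGBM but gives no matcher settings. Assuming OpenCV's `StereoSGBM` (created with `cv2.StereoSGBM_create` and run on rectified images with `minDisparity = 0`), `compute()` returns a 16-bit disparity map scaled by 16, with unmatched pixels set to negative values. The sketch below reads that map around a trunk's positioning point and triangulates it. Taking the median over a small window, rather than the single pixel, is our own assumption to tolerate holes at the trunk base; all numeric values are hypothetical, and the baseline unit (here mm) sets the unit of the result.

```python
import numpy as np

def trunk_point_from_disparity(disp16, u, v, fx, fy, cx, cy, baseline, win=5):
    """Triangulate pixel (u, v) from a fixed-point (x16) SGBM disparity map; None if unmatched."""
    h, w = disp16.shape
    r = win // 2
    u_i, v_i = int(round(u)), int(round(v))
    patch = disp16[max(v_i - r, 0):min(v_i + r + 1, h), max(u_i - r, 0):min(u_i + r + 1, w)]
    valid = patch[patch > 0].astype(float) / 16.0   # to pixels; drop invalid (<= 0) entries
    if valid.size == 0:
        return None                                 # no reliable match near the point
    d = float(np.median(valid))                     # robust disparity estimate
    z = fx * baseline / d                           # depth from Z = f * B / d
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)

# synthetic map: 50 px disparity everywhere (800 in x16 units) with one invalid pixel
disp = np.full((720, 1280), 800, dtype=np.int16)
disp[655, 440] = -16
print(trunk_point_from_disparity(disp, 440.0, 655.0, 1000.0, 1000.0, 640.0, 360.0, 120.0))
# (-480.0, 708.0, 2400.0)
```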