Submitted: 19 May 2025

Posted: 20 May 2025

Abstract

Keywords:

1. Introduction

2. Related Work

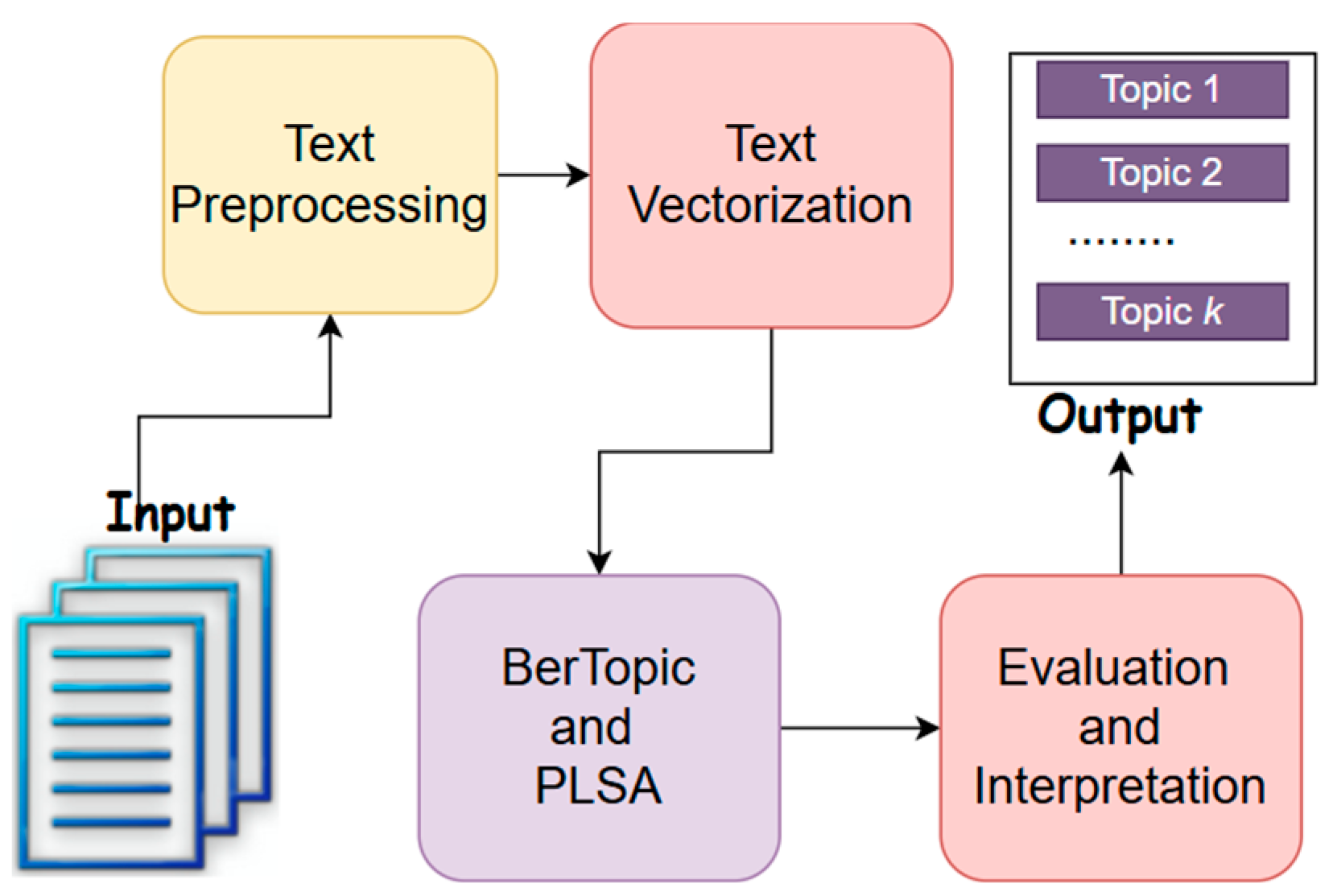

3. Materials and Methods

A. Data Collection

B. Data Processing

C. Topic Modeling Procedure

D. Evaluation Metrics

E. Experimental Setup

4. Results

4.1. Model Performance Metrics

4.2. Comparative Topic Analysis

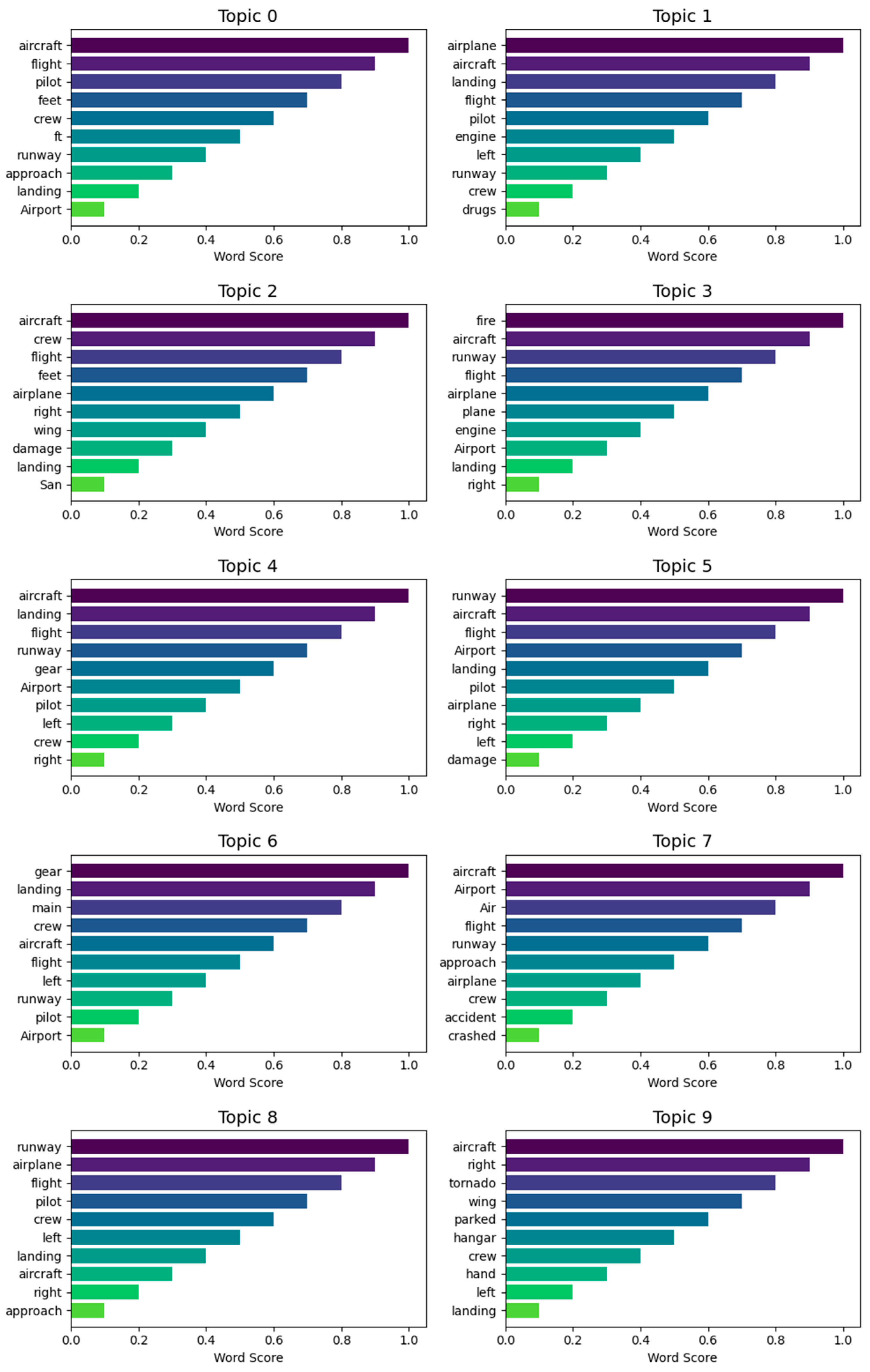

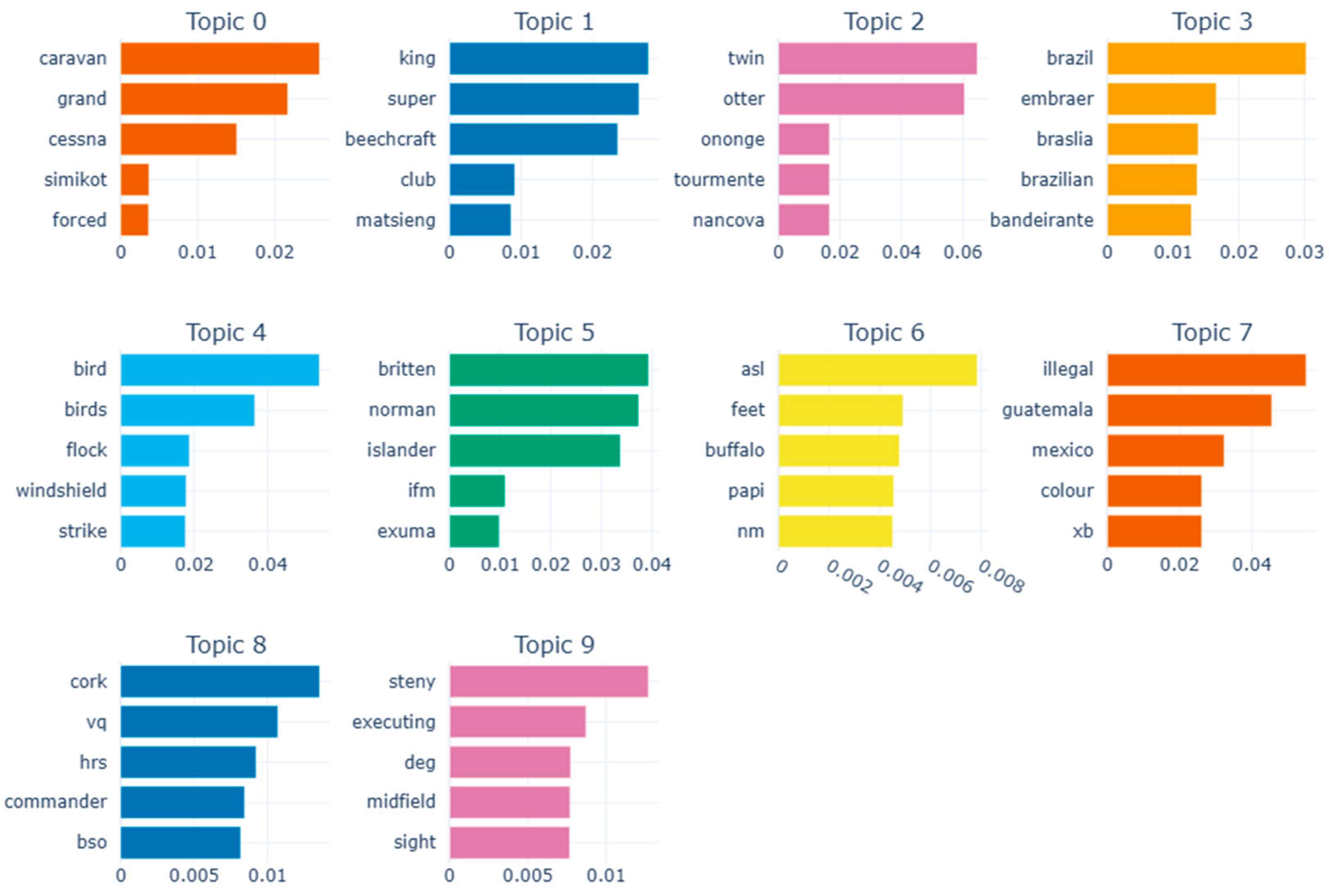

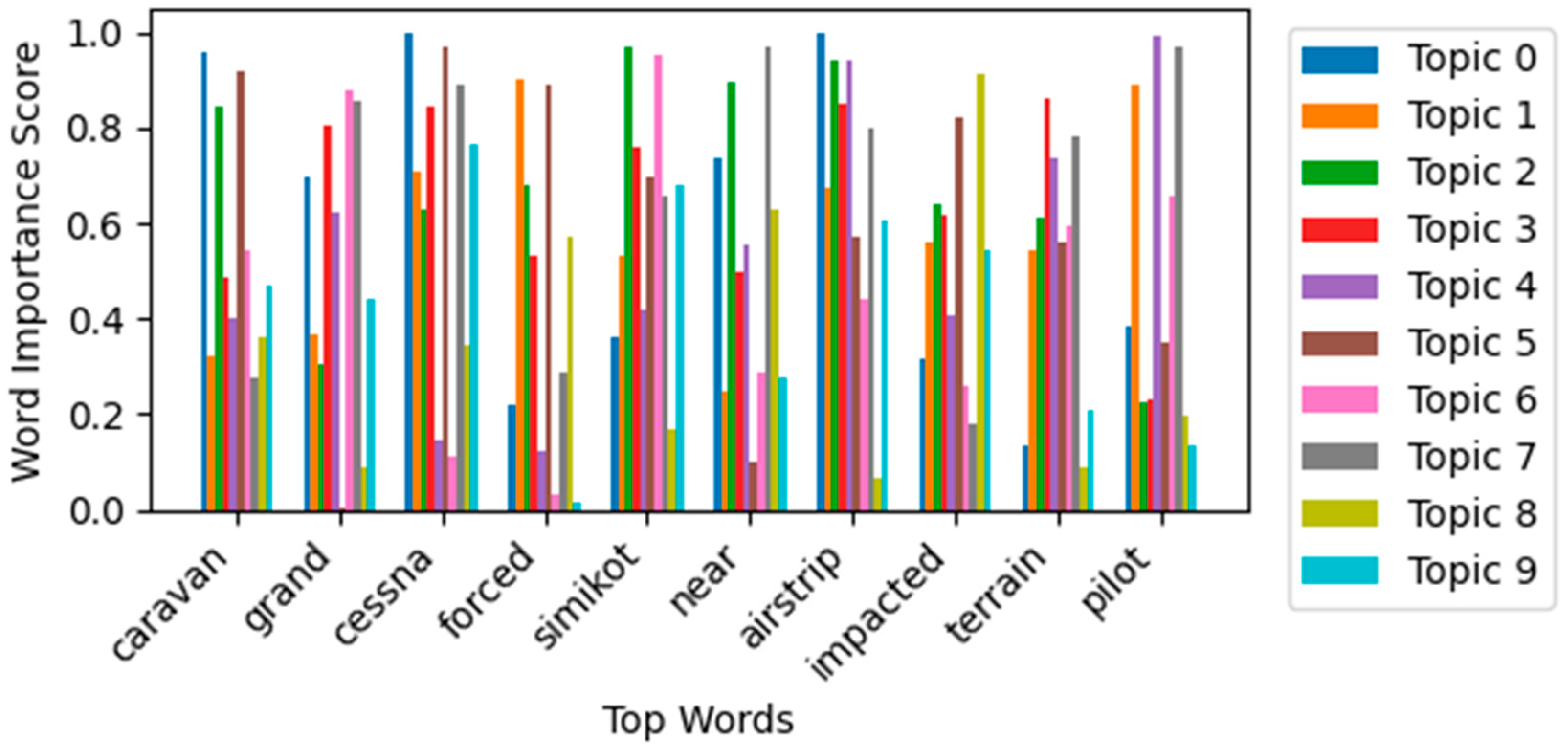

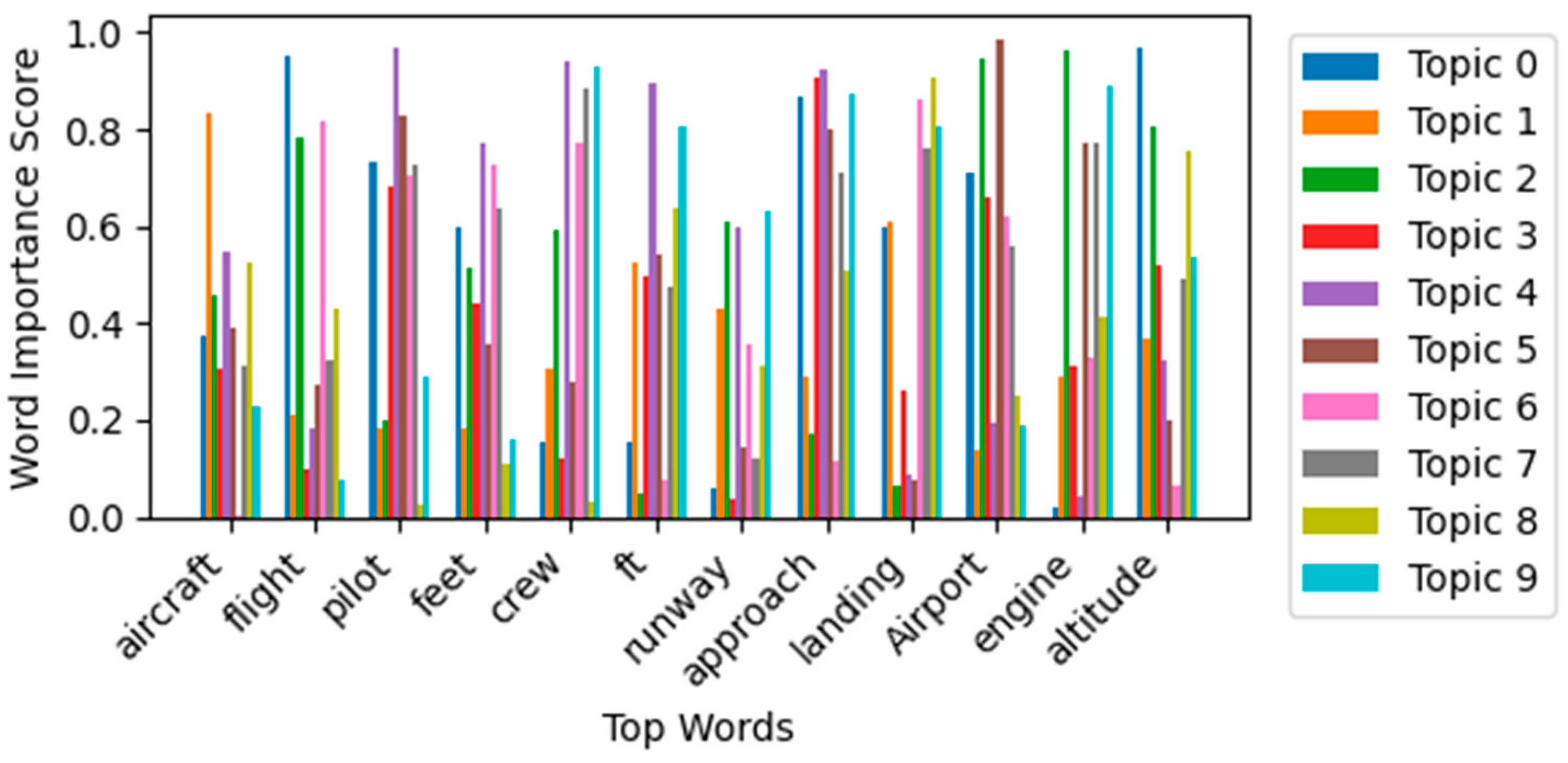

4.2.1. Topic Words and Thematic Labels

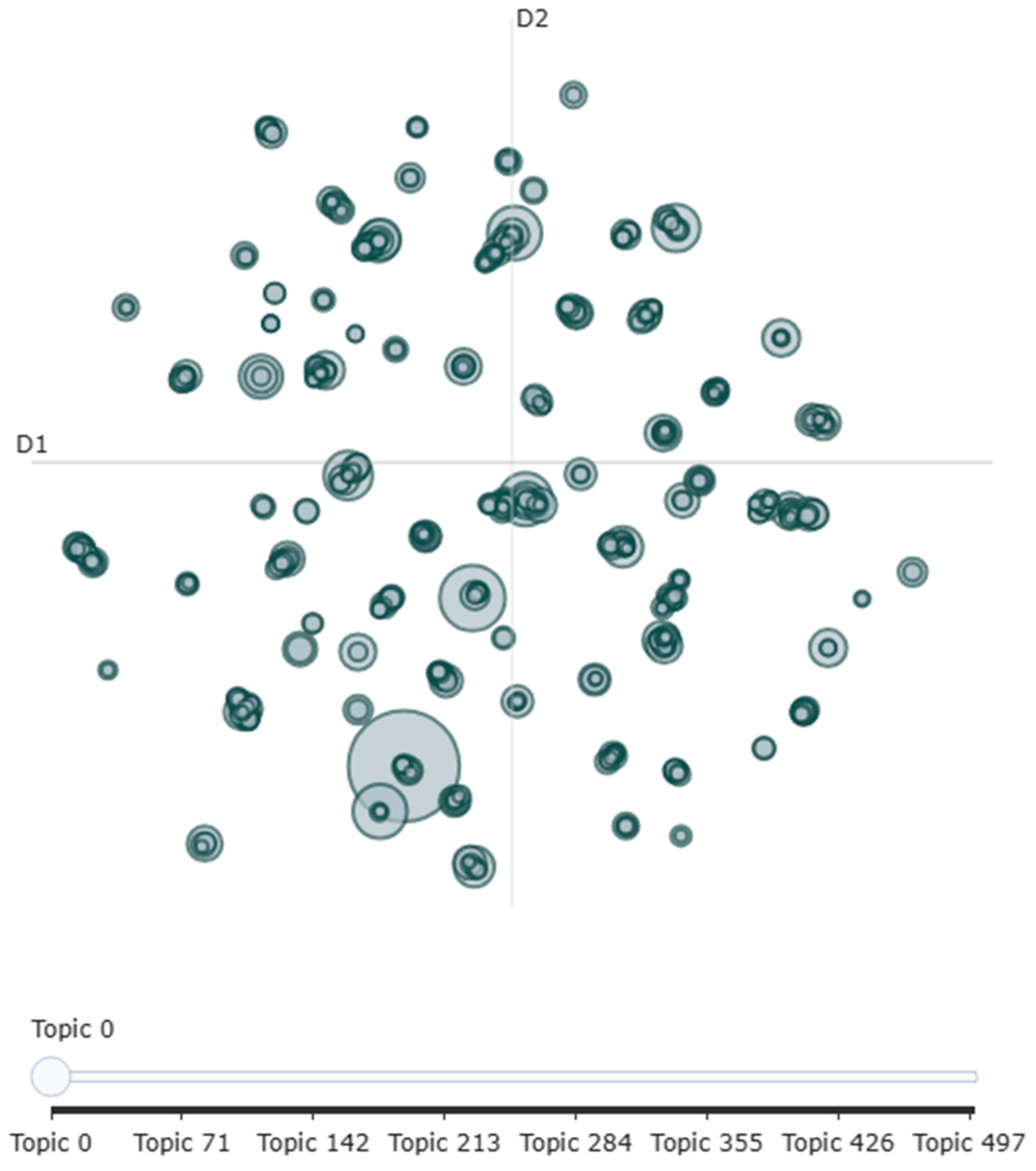

4.2.2. Visualization and Interpretability

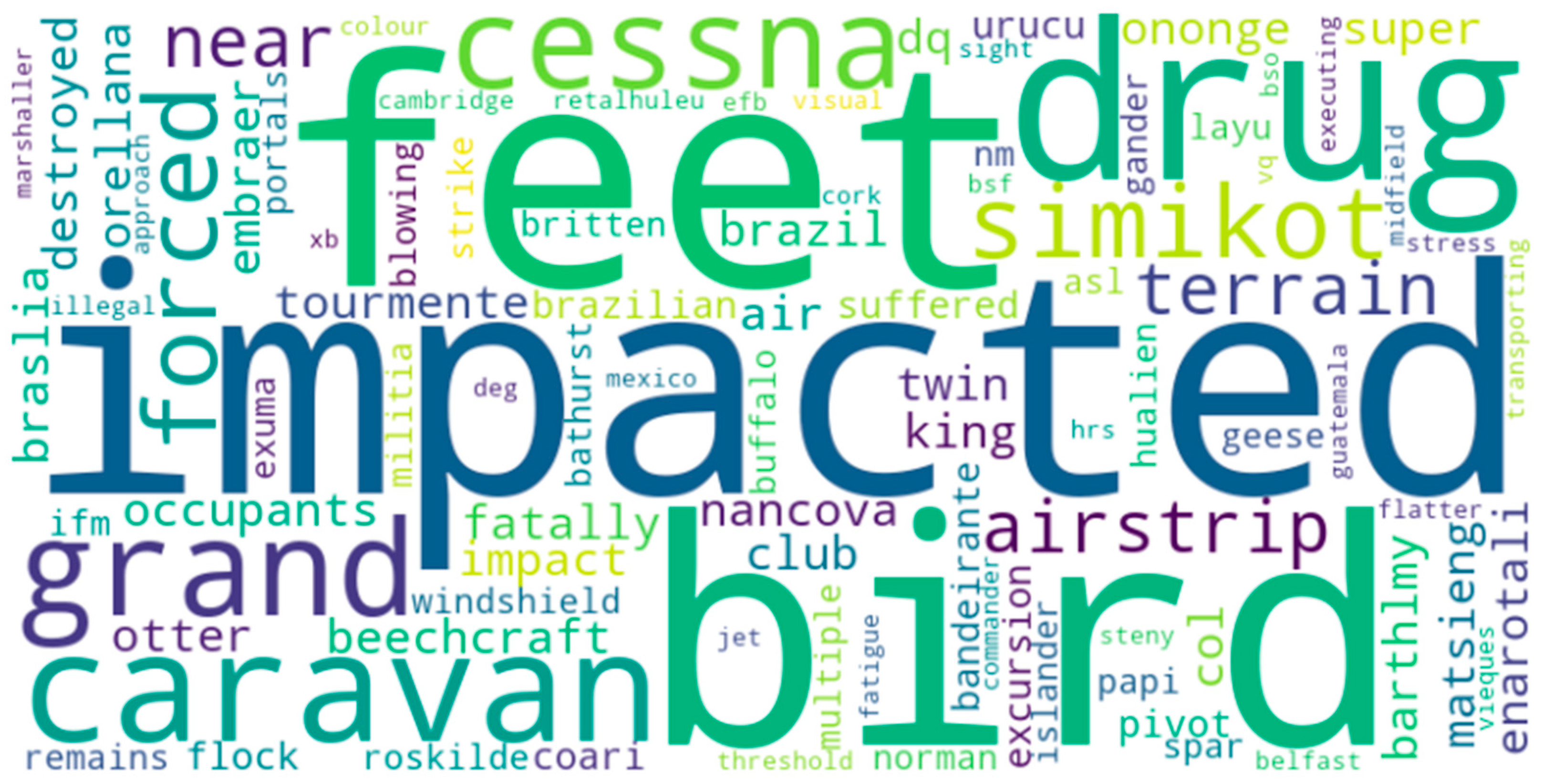

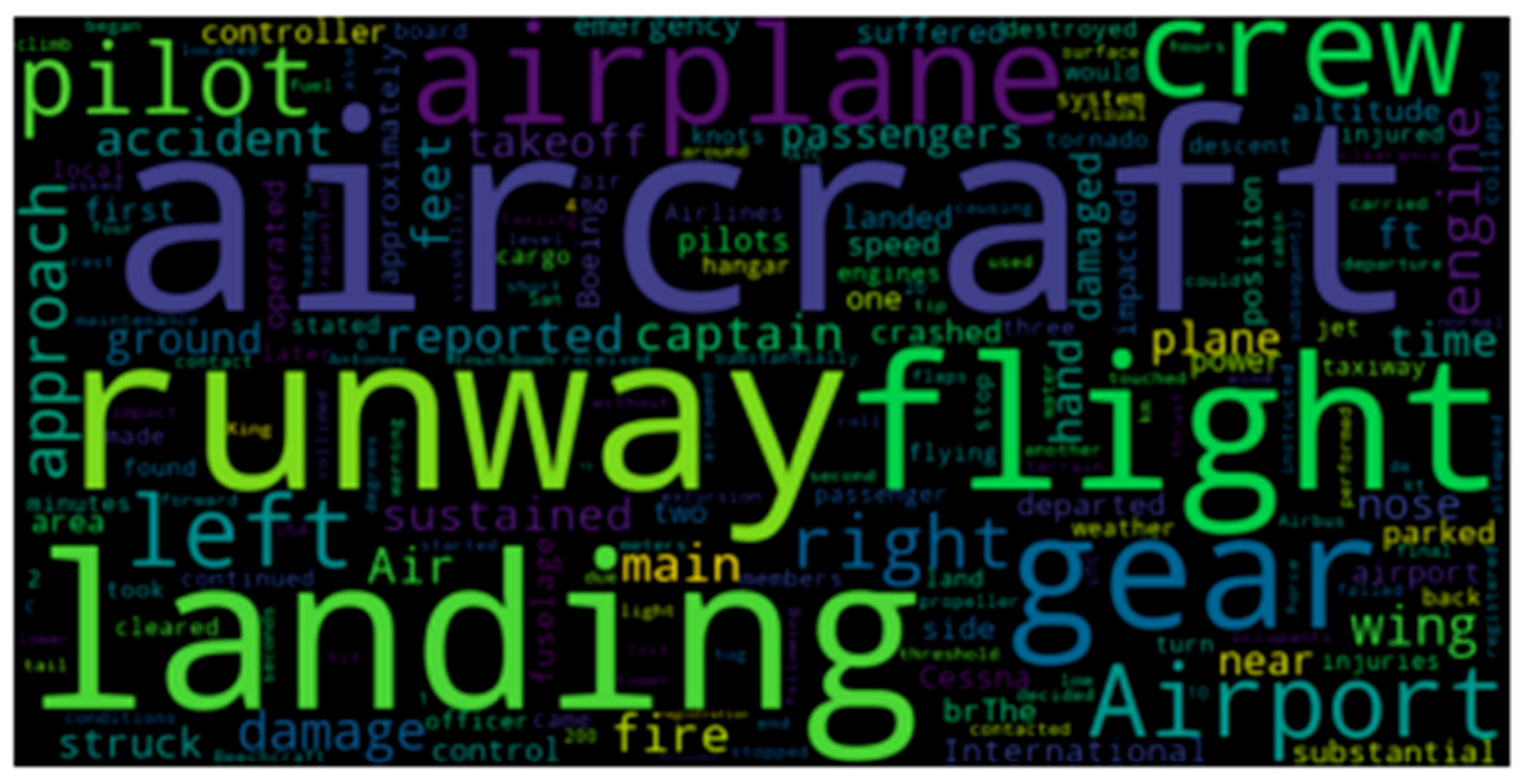

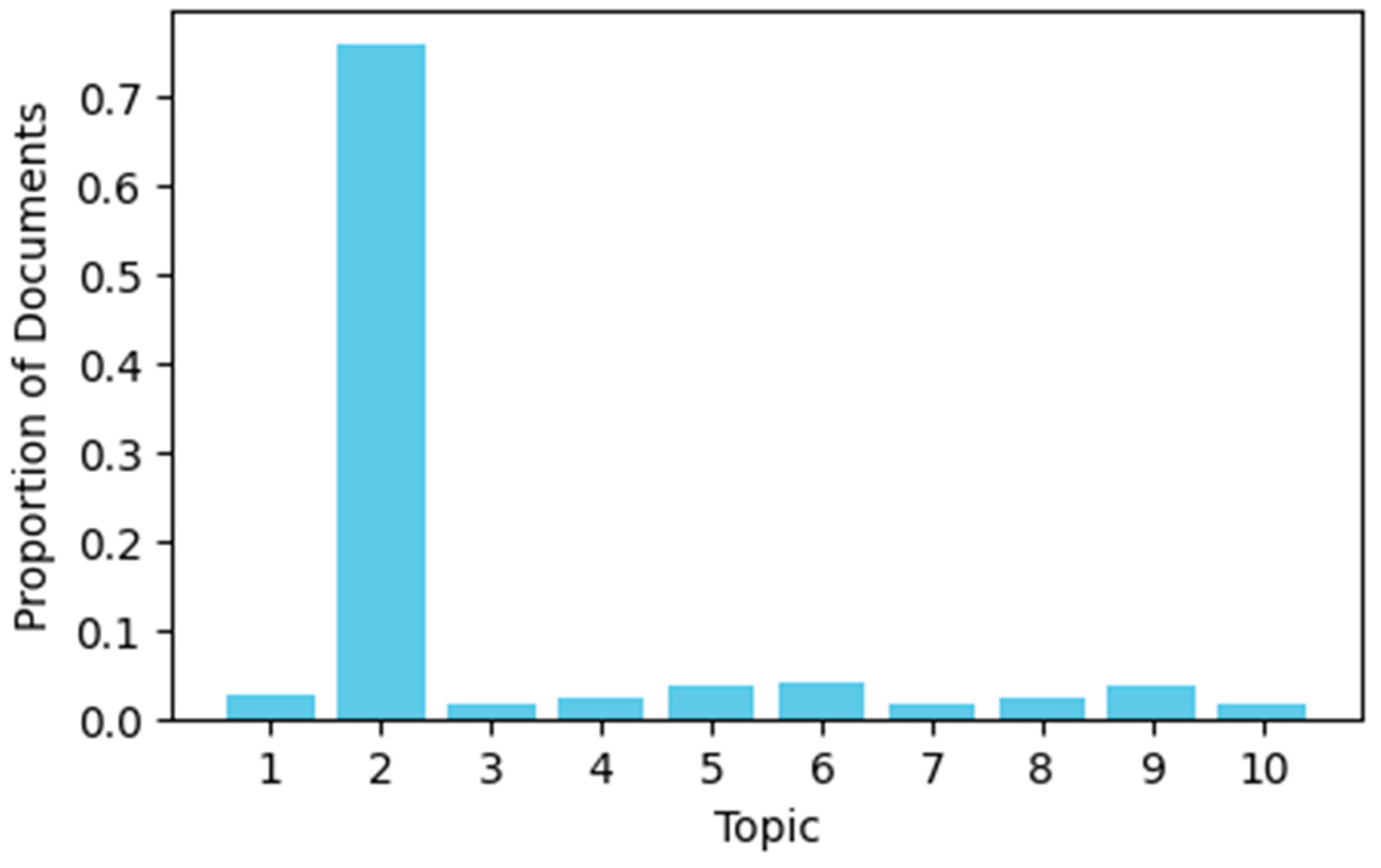

4.3. Word Clouds and Topic Distribution

4.4. Evaluation of Model Properties

5. Ablation Study

5.1. Discussion

5.2. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Form |
|---|---|
| ASN | Aviation Safety Network |
| ATSB | Australian Transport Safety Bureau |
| BERTopic | Bidirectional Encoder Representations from Transformers Topic Modeling |
| DL | Deep Learning |
| HDBSCAN | Hierarchical Density-Based Spatial Clustering of Applications with Noise |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| NTSB | National Transportation Safety Board |
| pLSA | Probabilistic Latent Semantic Analysis |
| TF-IDF | Term Frequency-Inverse Document Frequency |
| UMAP | Uniform Manifold Approximation and Projection |

| Topic | BERTopic Top 10 Words | pLSA Top 10 Words | Theme / Single Word |
|---|---|---|---|
| 0 | caravan, grand, cessna, forced, simikot, near, airstrip, impacted, terrain, pilot | aircraft, flight, pilot, feet, crew, ft, runway, approach, landing, Airport | Small Aircraft and Flight Basics |
| 1 | illegal, venezuelan, drugs, venezuela, mexican, mexico, colombian, jet, guatemala, xb | airplane, aircraft, landing, flight, pilot, engine, left, runway, crew, drugs | Drug Trafficking and Flight Landing |
| 2 | otter, twin, servo, elevator, nancova, tourmente, dq, ononge, hinge, col | aircraft, crew, flight, feet, airplane, right, wing, damage, landing, San | Aircraft Parts and Flight Damage |
| 3 | fire, smoke, extinguished, parked, fireball, cargo, bottles, heat, emanating, rescue | fire, aircraft, runway, flight, airplane, plane, engine, Airport, landing, right | Fire Incident and Flight |
| 4 | caught, fire, canadair, erupted, repair, huatulco, hockey, arson, forced, providence | aircraft, landing, flight, runway, gear, Airport, pilot, left, crew, right | Fire Event and Landing Gear |
| 5 | tornado, blown, substantially, tune, storm, hangered, nashville, damaged, tennessee, struck | runway, aircraft, flight, Airport, landing, pilot, airplane, right, left, damage | Tornado Damage and Runway Incident |
| 6 | learjet, paso, toluca, mateo, olbia, iwakuni, mexico, cancn, vor, michelena | gear, landing, main, crew, aircraft, flight, left, runway, pilot, Airport | Jet and Airports and Gear and Emergency |
| 7 | bird, birds, flock, strike, windshield, geese, remains, roskilde, spar, multiple | aircraft, Airport, Air, flight, runway, approach, airplane, crew, accident, crashed | Bird Strike and Accidents |
| 8 | havana, cuba, bogot, medelln, rionegro, permission, carreo, tulcn, haiti, lamia | runway, airplane, flight, pilot, crew, left, landing, aircraft, right, approach | Latin America and Runway and Flight |
| 9 | medan, tower, supervisor, acted, rendani, indonesia, pk, controller, ende, jalaluddin | aircraft, right, tornado, wing, parked, hangar, crew, hand, left, landing | Air Traffic Control and Tornado Damage |

| Property | BERTopic | pLSA |
|---|---|---|
| Interpretability | High | Moderate |
| Coherence | 0.531 | 0.7634 |
| Perplexity | −4.532 | −4.6237 |
| Granularity Control | Adjustable | Fixed |
| Computational Cost | Higher | Lower |
| Visualization | Strong (via UMAP) | Limited |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).