1. Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental condition characterized by difficulties in social interaction and communication, stereotypic behaviors, and sensory abnormalities [1,2]. In the field of medical imaging, deep learning models have made significant strides in ASD diagnosis, leveraging their ability to identify complex patterns directly from imaging data [3,4].

Magnetic resonance imaging (MRI)-based studies have shown altered patterns of gray matter in individuals with autism compared with the normal control population [5,6]. For example, increased gray matter has been reported in the right angular gyrus, the prefrontal cortex, the left superior and middle frontal gyri, the left precuneus and inferior occipital gyrus, and the right inferior temporal gyrus of autism subjects. The same work also reported diminished gray matter in the left postcentral gyrus and cerebellar regions [7]. Another study compared three groups, normal controls (CN), participants with attention deficit hyperactivity disorder (ADHD), and participants with ASD, and found that gray matter volume (GMV) was significantly higher in the ASD group than in the ADHD and CN groups (p = 0.004). Total brain volume (TBV) was also significantly higher in the ASD group (p = 0.015) [8]. A longitudinal volumetric study with 156 participants also found statistically significant increases in GMV and TBV in ASD subjects [9]. Even though the sample sizes in the three-group study were relatively small (33 CN, 44 ADHD, and 19 ASD), the observed group differences in GMV and TBV were statistically validated using Bonferroni correction [8]. These findings suggest that gray matter tissue alone could potentially serve as a useful biomarker for classifying ASD through deep learning approaches.

Another study of 295 participants examined MRI-based brain changes associated with ASD with respect to gender differences. Males with ASD displayed increased gray matter volumes in the insula and superior frontal gyrus and diminished volumes in the inferior frontal gyrus and thalamus, whereas females with ASD exhibited increased gray matter volume in the right cuneus [10]. In addition to gray matter biomarkers, other works have reported white matter changes, including changes in white matter connectivity, which also provide significant biomarkers for the ASD brain [11,12,13,14,15]. For example, a diffusion tensor imaging (DTI)-based work reported a 99% classification accuracy for ASD using fivefold cross-validation [16].

Previous studies have used various deep learning approaches to identify ASD from functional MRI (fMRI) and structural MRI (sMRI) data, reporting a wide range of classification accuracies. A study using a 3D Residual Network (ResNet-18) and a multilayer perceptron (MLP) achieved 74% accuracy using fMRI and region of interest (ROI) data [17]. Another study applying a Deep Neural Network (DNN) to fMRI data reported a 70% classification accuracy, with ROIs selected based on co-activation levels of brain regions [18]. A hybrid Deep Belief Network (DBN) model that combined fMRI and sMRI data, including gray and white matter tissues, achieved 65% accuracy with 116 ROIs from both imaging modalities [19]. A connectivity-based study using 7266 gray matter ROIs from the Blood Oxygen Level Dependent (BOLD) signal, tested on 964 subjects from the Autism Brain Imaging Data Exchange (ABIDE) dataset, achieved a 60% classification accuracy [20].

Higher accuracies have been reported with smaller samples: a study of 80 subjects using a leave-one-out classifier achieved 79% accuracy, which rose to 89% for subjects under 20 years of age [21]. A DNN classifier on fMRI data from 866 subjects (402 ASD and 464 control subjects) reached a classification accuracy of 88%, using ROIs based on several functional and structural atlases, including the Bootstrap Analysis of Stable Clusters (BASC) and the Craddock 200 (CC200) atlas [22]. A convolutional neural network (CNN) approach using 126 subjects from the ABIDE database achieved 99.39% accuracy over 50 epochs, with 20% of the data reserved for validation [23]. Additionally, a multimodal fusion approach incorporating both fMRI and sMRI for 1383 male participants aged 5 to 40 years achieved an accuracy of 85%, compared with 75% for the structural model alone and 83% for the functional model alone [24]. These findings highlight the effectiveness of different deep learning models and imaging modalities in ASD classification, with the multimodal approach in [24] outperforming either of its single-modality models.

While fMRI provides valuable physiological information about brain regions, it has lower spatial resolution and greater attenuation of structural regions. In contrast, sMRI offers higher resolution and less attenuation of structural regions, making it a promising tool for studying brain anatomy. However, its application in ASD prediction using deep learning models remains relatively underexplored. In this work, we aim to use sMRI images alone to train a deep learning model and predict outcomes. For this purpose, we used the VGG network, introduced by Simonyan and Zisserman in 2014 for the ImageNet Challenge. The VGG network has proven effective in large-scale image recognition [25]. Previously, a study used the VGG16 model to distinguish papillary thyroid carcinoma from benign thyroid nodules in cytological images, achieving 97.66% accuracy in cancer detection [26].

In our study, we introduce a modified VGG model, implemented in TensorFlow and Keras, that builds on the strengths of the base VGG model with multiple weighted layers to improve ASD identification in large datasets. To the best of our knowledge, this is the first study to apply the VGG model for ASD identification based solely on sMRI. While various conventional deep learning models in the literature have used multimodal data or multiple tissue types for ASD classification, our approach focuses on identifying ASD using GM maps alone, minimizing computational complexity in terms of storage and learning.

2. Materials and Methods

2.1. Dataset

The present study utilized T1-weighted MRI data from the ABIDE database. ABIDE is a consortium that shares previously collected sMRI and resting-state fMRI (rs-fMRI) data from individuals with ASD and matched healthy controls with the scientific community [27]. We included a total of 272 subjects in our analysis. The age difference between the ASD and CN groups was assessed using an independent t-test from the SciPy Python library, run within the PyCharm environment.
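As an illustration of the age comparison, the following minimal sketch computes the pooled-variance (Student) independent t statistic, which is what `scipy.stats.ttest_ind` reports by default. The age values are hypothetical placeholders, not ABIDE data, and the function name is ours.

```python
import math
import statistics


def independent_t_statistic(group_a, group_b):
    """Student's t statistic with pooled variance (equal variances assumed)."""
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.fmean(group_a), statistics.fmean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    dof = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / dof
    std_err = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (mean_a - mean_b) / std_err, dof


# Hypothetical ages in years, for illustration only (not ABIDE data).
asd_ages = [12.0, 15.5, 14.0, 17.0, 13.5]
cn_ages = [13.0, 14.5, 16.0, 12.5, 15.0]

t_stat, dof = independent_t_statistic(asd_ages, cn_ages)
print(f"t = {t_stat:.3f}, degrees of freedom = {dof}")
```

With SciPy installed, `scipy.stats.ttest_ind(asd_ages, cn_ages)` returns the same statistic together with the two-sided p-value.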

2.2. Preprocessing of T1-Weighted MRI Images

For data preprocessing, we employed the statistical parametric mapping package SPM12 (Wellcome Department of Cognitive Neurology, UK) and MATLAB R2019b (The MathWorks Inc., Natick, MA) with custom software. The preprocessing steps followed those described earlier [28]. The Diffeomorphic Anatomical Registration Through Exponentiated Lie Algebra (DARTEL) toolbox was used to improve inter-subject registration of the input images [29]. We segmented gray matter (GM), white matter (WM), cerebrospinal fluid (CSF), skull, and other brain regions using the 'new segment' option in the DARTEL toolbox. The GM probability maps computed for each scan were spatially normalized to Montreal Neurological Institute (MNI) space (unmodulated, re-sliced to 1 × 1 × 1 mm) and smoothed with a Gaussian filter (9-mm full width at half maximum) [30,31,32,33].
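The 9-mm smoothing kernel is specified by its full width at half maximum (FWHM); the equivalent Gaussian standard deviation follows from FWHM = 2·sqrt(2 ln 2)·σ. The short sketch below performs this conversion. The function name is illustrative and is not part of SPM12.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian kernel's FWHM to its standard deviation (same units)."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(9.0)
print(f"sigma = {sigma_mm:.2f} mm")  # sigma = 3.82 mm
```

Because the maps are re-sliced to 1 × 1 × 1 mm, the same value applies in voxel units.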

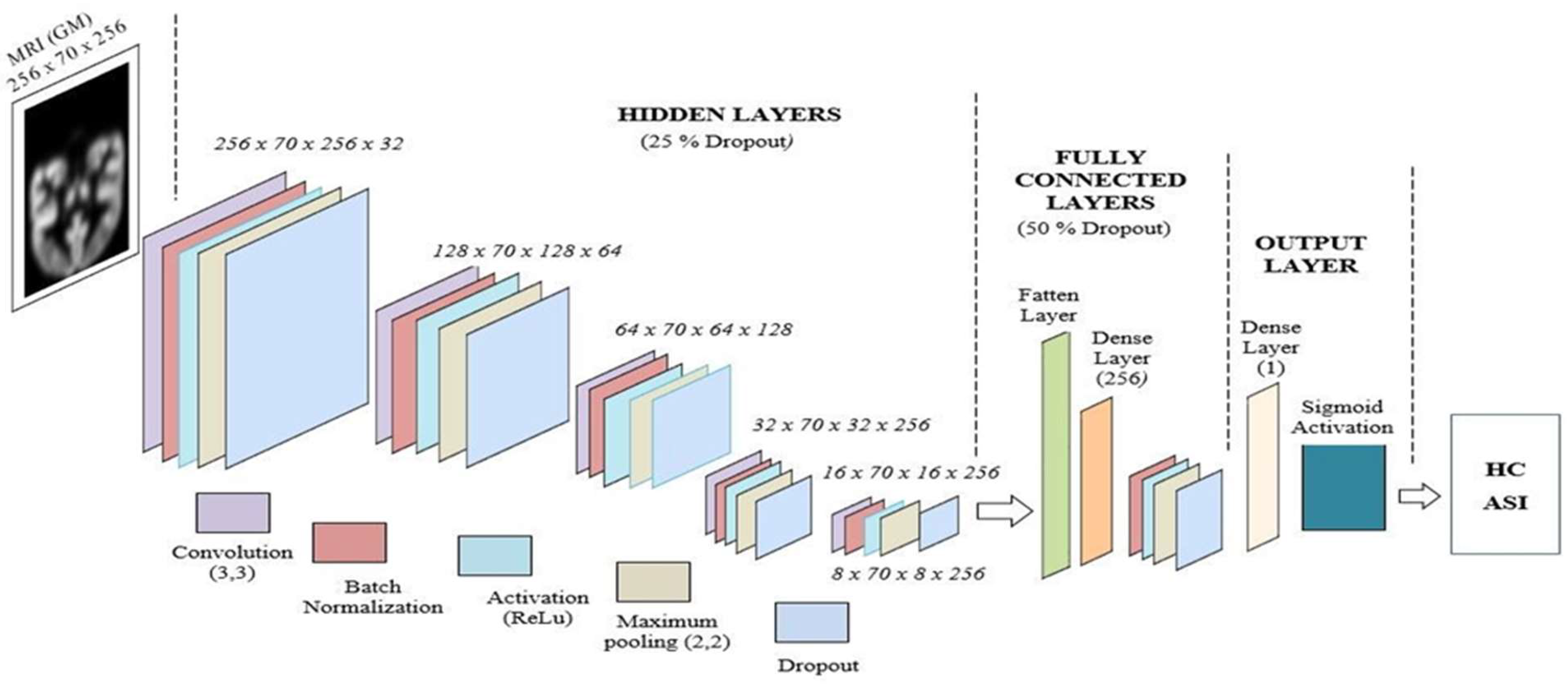

2.3. Proposed Deep Learning VGG Architecture

The proposed deep learning network is implemented using the TensorFlow and Keras platforms. Skull-stripped image data (segmented and normalized) provide a higher probability of achieving valid MNI coordinates for functional activations than skull-included input images [34]. The proposed deep learning VGG network architecture is shown in Fig. 1. In the conventional VGG-16 network, convolutional layers are followed by a pooling layer in each hidden layer unit. The network starts with 64 filters in the first layer unit, then increases to 128 and 256 filters, and finally reaches 512 filters in the deeper hidden layers. Each convolutional layer uses a Rectified Linear Unit (ReLU) activation. Finally, the network incorporates three fully connected layers: the first two with 4,096 channels and the third with 1,000 channels, one for every class. We have significantly changed the filter layout and layer structure in our version of this deep neural network [35,36,37,38]. Our architecture starts with 32 filters, followed by 64 and 128 filters, and ends with two final units, each containing 256 filters. We have also added batch normalization units, as described in the following subsections.

Figure 1. Proposed deep learning VGG network for ASD and HC classification.


2.3.1. Input Layer Unit

The input layer consists of preprocessed gray matter (GM) maps from T1-weighted MRI images. To reduce complexity, we selected the 70 slices that contain the most brain regions (256 × 70 × 256). Our network was designed with five sequential hidden layer units. Each hidden layer unit consists of the following layers: convolution filters (3 × 3), an activation unit (ReLU), a maximum pooling layer (2 × 2), and a dropout layer with a rate of 25%.

2.3.2. Hidden Layer Unit

The first hidden layer unit consists of 32 convolution filters with a kernel size of 3 × 3, producing 32 feature maps. Its maximum pooling layer halves the two in-plane dimensions, giving 128 × 70 × 128 × 32 feature maps. The second hidden layer unit consists of 64 convolution filters with a kernel size of 3 × 3, producing 64 feature maps, and its maximum pooling layer gives 64 × 70 × 64 × 64 feature maps. The third hidden layer unit uses 128 convolution filters with a kernel size of 3 × 3, producing 128 feature maps, and its maximum pooling layer gives 32 × 70 × 32 × 128 feature maps. The fourth hidden layer unit uses 256 convolution filters with a kernel size of 3 × 3, producing 256 feature maps, and its maximum pooling layer gives 16 × 70 × 16 × 256 feature maps. The fifth (final) hidden layer unit also uses 256 convolution filters with a kernel size of 3 × 3, and its maximum pooling layer gives 8 × 70 × 8 × 256 feature maps. In our proposed deep learning network, each hidden layer unit also includes a rectified linear unit (ReLU) activation, a dropout layer with a rate of 25%, and batch normalization.
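The feature-map sizes listed above can be traced with a few lines of Python. This is a sketch under the assumption that each convolution preserves the in-plane size (that is, 'same' padding, which the text does not state) and that each 2 × 2 pooling halves the first and third dimensions while the 70-slice dimension is unchanged.

```python
FILTERS_PER_UNIT = [32, 64, 128, 256, 256]  # filters in hidden units 1-5


def trace_feature_maps(rows=256, slices=70, cols=256, filters=FILTERS_PER_UNIT):
    """Return the (rows, slices, cols, channels) shape after each hidden unit."""
    shapes = []
    for n_filters in filters:
        # Convolution keeps the in-plane size (assumed 'same' padding);
        # 2 x 2 max pooling with stride 2 then halves rows and columns.
        rows, cols = rows // 2, cols // 2
        shapes.append((rows, slices, cols, n_filters))
    return shapes


for unit, shape in enumerate(trace_feature_maps(), start=1):
    print(f"hidden unit {unit}: {shape}")
```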

2.3.3. Fully Connected Layer Unit

Our proposed fully connected (FC) layer unit is designed with a flatten layer, a fully connected layer, a batch normalization layer, a ReLU-based activation, a maximum pooling layer, and a 50% dropout layer. The FC layer connects the hidden layers to the output layer unit.

2.3.4. Output Layer Unit

The output layer unit is designed with a dense layer and a sigmoid activation function. The output unit classifies the input images into ASD and HC classes.
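To make the output stage concrete, the sketch below maps a single logit through a sigmoid to a class label. The 0.5 decision threshold and the encoding of ASD as the positive class are assumptions for illustration; the text does not specify either.

```python
import math


def sigmoid(logit):
    """Logistic function mapping a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))


def predict_label(logit, threshold=0.5):
    """Return 'ASD' if the sigmoid probability reaches the threshold, else 'HC'."""
    return "ASD" if sigmoid(logit) >= threshold else "HC"


print(predict_label(2.0))   # ASD
print(predict_label(-1.0))  # HC
```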

3. Results

3.1. Demographic Data

We included a total of 272 subjects: 132 individuals diagnosed with ASD and 140 matched healthy controls. The mean age of the CN group was 14.62 years (SD = 4.34), and the mean age of the ASD group was 14.89 years (SD = 4.29). The CN group consisted of 68 male and 72 female subjects, while the ASD group consisted of 67 male and 65 female subjects. The mean age of males in the CN group was 14.97 years (SD = 4.14), and in the ASD group, it was 15.75 years (SD = 3.77). The mean age of females in the CN group was 13.57 years (SD = 4.56), and in the ASD group, it was 14.02 years (SD = 4.60). No significant age differences were observed between the two groups (p = 0.23), as shown in Table 1.

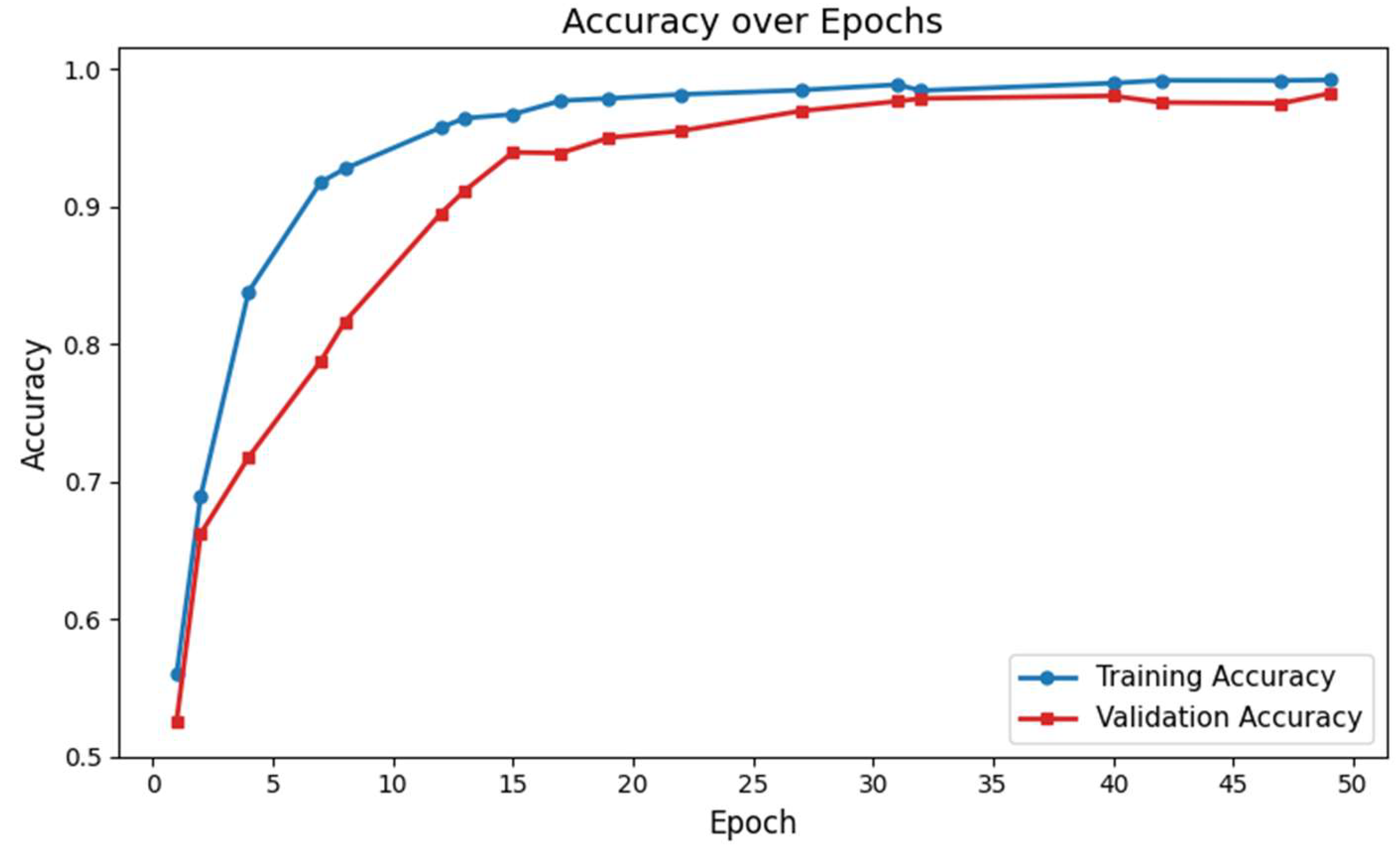

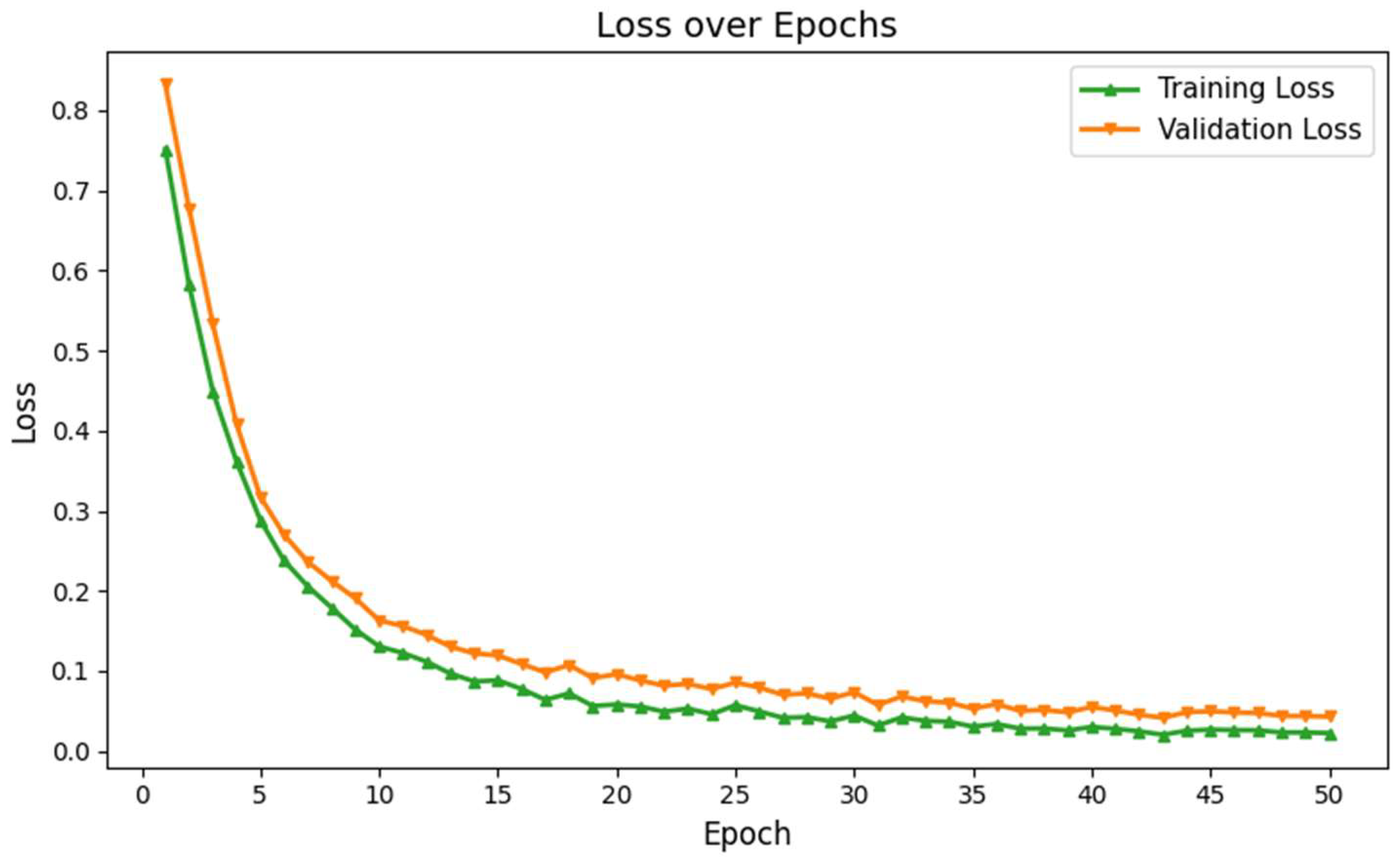

3.2. Performance Evaluation of the GM-VGG-Net Classifier

The classification performance of our proposed GM-VGG-Net was evaluated based on loss and accuracy. The training and validation accuracy and loss of our deep network are shown in Figs. 2 and 3, respectively. Our proposed deep learning network was validated over 50 epochs. The training and validation accuracies of our network were 97% and 96%, respectively, and the corresponding loss values were 0.0204 for training and 0.0696 for validation. We used the TensorFlow-Keras platform with the Adam optimizer and kept the default learning rate of 0.001. We fine-tuned the structure based on the loss and accuracy performance to avoid overfitting. The total number of parameters was 5,176,705, of which 5,174,721 were trainable. The model summary is given in Table 2.
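The reported parameter totals can be cross-checked by hand. The sketch below counts parameters under assumptions that are ours rather than stated in the text: a single-channel 256 × 256 input, one 3 × 3 convolution with bias per hidden unit, size-preserving ('same') padding, Keras-style batch normalization with four parameters per channel (two of them non-trainable), a 256-unit dense layer, and a single sigmoid output unit. Under these assumptions the totals agree with the figures above.

```python
def count_parameters(input_size=256, in_channels=1,
                     filters=(32, 64, 128, 256, 256), dense_units=256):
    """Return (total, trainable) parameter counts for the assumed layout."""
    total = 0
    non_trainable = 0
    size, channels = input_size, in_channels
    for n_filters in filters:
        total += 3 * 3 * channels * n_filters + n_filters  # conv weights + biases
        total += 4 * n_filters          # batch norm: gamma, beta, moving mean/var
        non_trainable += 2 * n_filters  # moving mean and variance are not trained
        size //= 2                      # 2 x 2 max pooling with stride 2
        channels = n_filters
    flattened = size * size * channels
    total += flattened * dense_units + dense_units  # dense layer
    total += 4 * dense_units                        # batch norm after dense layer
    non_trainable += 2 * dense_units
    total += dense_units + 1                        # single sigmoid output unit
    return total, total - non_trainable


total, trainable = count_parameters()
print(total, trainable)  # 5176705 5174721
```

Most of the parameters sit in the dense layer that follows the flatten step (8 × 8 × 256 inputs to 256 units), so the choice of input size and pooling depth largely determines the model size.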

Figure 2. Training and validation accuracy of the proposed deep learning network for ASD identification.


Figure 3. Training and validation loss of the proposed deep learning network for ASD identification.


4. Discussion

In this study, we developed a deep learning network for ASD identification based on the VGG16 architecture and using only structural GM tissue images. We systematically evaluated the network's performance and achieved high accuracy on sMRI data after 50 epochs of training. To the best of our knowledge, the proposed deep learning model outperformed existing networks for ASD identification using structural GM tissues alone. By using the deep learning network with GM maps exclusively, we were able to reduce the complexity of the training process.

A previous study on ASD classification using the ABIDE dataset reported a classification accuracy of 63.89% for gray matter (GM) tissue alone, using ten-fold cross-validation with a DBN [19]. The classification accuracy improved to 65% when fMRI data were used along with GM tissue. Finally, by combining features from white matter (WM) tissues, GM, and fMRI, the authors achieved an accuracy of 65.56% for ASD classification. Our model demonstrates superior classification performance compared to this model [19]. We tested our model on a dataset of 272 samples, whereas their model was evaluated on 185 samples. In that work, the fMRI-based model showed lower performance than the model using sMRI GM tissue alone, possibly due to the low temporal resolution of the hemodynamic response, as well as susceptibility artifacts from signal dropout [39]. Our GM-VGG-Net model therefore has lower computational complexity while achieving greater accuracy, as it relies solely on GM tissues.

Another study on male participants from the ABIDE dataset, which incorporated 1383 subjects, reported higher performance with an fMRI model than with sMRI [24]. Accuracy reached 75% with sMRI alone and 83% with fMRI alone, while the fused data reached an accuracy of 85%. The previously reported model [19] showed lower accuracy, possibly due to the high spatial resolution of its method or to its fusion approach, which used early fusion to combine sMRI and fMRI before classification. In contrast, late fusion approaches integrate features based on the classification performance during label testing. However, these feature extraction models required more manual involvement, leading to a semi-automated approach. Our method, on the other hand, does not rely on feature selection from the images; instead, our model is trained to identify ASD patterns directly from the whole GM maps.

The architecture of our deep learning network is based on the VGG network, which was developed by Karen Simonyan and Andrew Zisserman for the ImageNet Challenge in 2014 [25]. The conventional VGG addressed the challenges of training deep neural networks for large-scale image recognition and reached higher accuracy. Furthermore, VGG16 has demonstrated 97.66% accuracy on cytological images of papillary thyroid carcinomas [26]. As in VGG, we employed small 3 × 3 convolution filters for feature map generation. Our network consists of five sequential hidden layers, each with 2 × 2 max pooling and a stride of 2. As with the VGG architecture, the number of convolution filters increases across the hidden layers, starting with 32 filters in the first hidden layer and reaching 256 filters in the final hidden layer. Unlike conventional neural networks, which typically use smaller input sizes (e.g., 32 × 32 pixels) (Li & Liu, 2018), the VGG network is designed to handle larger input sizes effectively [26]. Larger input sizes preserve more substantial brain regions, generating more active feature maps.

However, unlike the original VGG network, we incorporated batch normalization in all five hidden layers, which improved training accuracy. Each hidden layer in our network uses a rectified linear unit (ReLU) activation function, and we applied a uniform 25% dropout rate to prevent overfitting. This dropout rate was chosen by trial and error, as without dropout the network learned poorly and started to memorize the training data. Our deep learning architecture showed a small difference between training and validation loss over 50 epochs, as shown in Fig. 3. These results indicate that our network overcame the overfitting limitations and enabled better learning.

In contrast to the conventional VGG network, which includes three fully connected layers and a dropout layer with a rate of 0.5 [25], our network features a single fully connected (FC) unit and an output layer (OL). In our work, the FC unit is designed with a flatten layer, a dense layer (256 units), a batch normalization layer, an activation layer, and a dropout layer with a rate of 50%. Moreover, we used a sigmoid activation in our output layer, while the conventional VGG network uses a softmax activation. Our newly introduced gray matter-based deep learning network achieved higher performance in terms of validation accuracy and loss than the existing ASD identification models.
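For a two-class problem, the sigmoid and softmax output activations are closely related. As a brief worked identity, with z_ASD and z_HC denoting the hypothetical logits of a two-unit softmax layer (symbols introduced here for illustration only):

```latex
\mathrm{softmax}(z)_{\mathrm{ASD}}
  = \frac{e^{z_{\mathrm{ASD}}}}{e^{z_{\mathrm{ASD}}} + e^{z_{\mathrm{HC}}}}
  = \frac{1}{1 + e^{-(z_{\mathrm{ASD}} - z_{\mathrm{HC}})}}
  = \sigma\!\left(z_{\mathrm{ASD}} - z_{\mathrm{HC}}\right)
```

A single sigmoid unit therefore represents the same family of two-class probabilities as a two-unit softmax while using one fewer output unit.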

However, there are some limitations in our study. Our deep learning network was trained solely on the ABIDE dataset, and future work should incorporate additional datasets to further validate our approach. Furthermore, our model was tested on 272 MRI images, and a larger dataset is needed to enhance the model's generalization. Although our model does not require feature extraction during training and validation, our preprocessing, which involved segmenting the GM tissues, was performed semi-automatically using the SPM12 toolbox. In the future, a fully automated approach for GM tissue segmentation should be integrated into the deep learning network, alongside the classification model. Despite these limitations, our model achieved the highest performance, minimizing classification loss over 50 epochs.

5. Conclusions

The developed deep learning network, a modified VGG architecture named the gray matter network (GM-VGG-Net), provides an effective method for classifying ASD from sMRI brain scans based exclusively on gray matter (GM) tissues. Our modified GM-VGG-Net showed a training accuracy of 97% and a validation accuracy of 96% over 50 epochs. This methodology is significant because it is based on sMRI GM maps alone, which streamlines the training process, reduces computational complexity, and outperforms previous models that required multi-modality or whole-brain data.

Author Contributions

All authors contributed to the work presented in this paper. E.D. was responsible for conceptualization, investigation, validation, and writing—original draft preparation; A.G. contributed to validation and writing—review & editing; S.S. contributed to validation and writing—review & editing; and C.L. was responsible for conceptualization, investigation, validation, and writing—review & editing. All authors have read and agreed to the published version of the manuscript.

Funding

The research presented in this manuscript received no external funding. The data used were obtained from the publicly available ABIDE database, which may have been supported by separate funding sources not related to this study.

Institutional Review Board Statement

Not applicable for this study, as it involved the use of publicly available, de-identified data from the ABIDE database.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data for this study were obtained from the ABIDE database, which operates under defined accessibility protocols as outlined by the source.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- H. Hodges, C. Fealko, and N. Soares, "Autism spectrum disorder: definition, epidemiology, causes, and clinical evaluation," Transl Pediatr, vol. 9, no. Suppl 1, pp. 2020; s65.

- C. Lord et al., "Autism spectrum disorder," Nature Reviews Disease Primers, vol. 6, no. 1, p. 2020; 5.

- Y. Ding, H. Zhang, and T. Qiu, "Deep learning approach to predict autism spectrum disorder: a systematic review and meta-analysis," BMC Psychiatry, vol. 24, no. 1, p. 2024; 28.

- M. Z. Uddin, M. A. Shahriar, M. N. Mahamood, F. Alnajjar, M. I. Pramanik, and M. A. R. Ahad, "Deep learning with image-based autism spectrum disorder analysis: A systematic review," Engineering Applications of Artificial Intelligence, vol. 127, p. 1071; 85.

- F. Rafiee, R. Rezvani Habibabadi, M. Motaghi, D. M. Yousem, and I. J. Yousem, "Brain MRI in Autism Spectrum Disorder: Narrative Review and Recent Advances," J Magn Reson Imaging, vol. 55, no. 6, pp. 1613.

- M. Wang, D. Xu, L. Zhang, and H. Jiang, "Application of Multimodal MRI in the Early Diagnosis of Autism Spectrum Disorders: A Review," Diagnostics, vol. 13, no. 19, p. 3027, 2023. [Online]. Available: https://www.mdpi.com/2075-4418/13/19/3027.

- J. Liu et al., "Gray matter abnormalities in pediatric autism spectrum disorder: a meta-analysis with signed differential mapping," Eur Child Adolesc Psychiatry, vol. 26, no. 8, pp. 2017.

- L. Lim et al., "Disorder-specific grey matter deficits in attention deficit hyperactivity disorder relative to autism spectrum disorder," Psychol Med, vol. 45, no. 5, pp. 2015; -76.

- N. Lange et al., "Longitudinal volumetric brain changes in autism spectrum disorder ages 6-35 years," Autism Res, vol. 8, no. 1, pp. 2015; -93.

- D. Zhou et al., "Gender and age related brain structural and functional alterations in children with autism spectrum disorder," Cerebral Cortex, vol. 34, no. 2024; 7.

- C. R. Gibbard, J. Ren, K. K. Seunarine, J. D. Clayden, D. H. Skuse, and C. A. Clark, "White matter microstructure correlates with autism trait severity in a combined clinical–control sample of high-functioning adults," NeuroImage: Clinical, vol. 3, pp. 2013.

- H. Ohta et al., "White matter alterations in autism spectrum disorder and attention-deficit/hyperactivity disorder in relation to sensory profile," Molecular Autism, vol. 11, no. 1, p. 2020; 77.

- D. Dimond et al., "Reduced White Matter Fiber Density in Autism Spectrum Disorder," Cereb Cortex, vol. 29, no. 4, pp. 1778.

- H. Ohta et al., "White matter alterations in autism spectrum disorder and attention-deficit/hyperactivity disorder in relation to sensory profile," Mol Autism, vol. 11, no. 1, p. 2020; 77.

- M. Zhang et al., "Brain white matter microstructure abnormalities in children with optimal outcome from autism: a four-year follow-up study," Scientific Reports, vol. 12, no. 1, p. 2015; 1.

- Y. ElNakieb et al., "The Role of Diffusion Tensor MR Imaging (DTI) of the Brain in Diagnosing Autism Spectrum Disorder: Promising Results," Sensors, vol. 21, no. 24, p. 8171, 2021. [Online]. Available: https://www.mdpi.com/1424-8220/21/24/8171.

- M. Tang, P. Kumar, H. Chen, and A. Shrivastava, "Deep Multimodal Learning for the Diagnosis of Autism Spectrum Disorder," J Imaging, vol. 6, no. 2020; 6.

- A. S. Heinsfeld, A. R. Franco, R. C. Craddock, A. Buchweitz, and F. Meneguzzi, "Identification of autism spectrum disorder using deep learning and the ABIDE dataset," NeuroImage: Clinical, vol. 17, pp. 2018; -23.

- M. Akhavan Aghdam, A. Sharifi, and M. M. Pedram, "Combination of rs-fMRI and sMRI Data to Discriminate Autism Spectrum Disorders in Young Children Using Deep Belief Network," J Digit Imaging, vol. 31, no. 6, pp. 2018.

- J. A. Nielsen et al., "Multisite functional connectivity MRI classification of autism: ABIDE results," Front Hum Neurosci, vol. 7, p. 2013.

- J. S. Anderson et al., "Functional connectivity magnetic resonance imaging classification of autism," Brain, vol. 134, no. Pt 12, pp. 3742; -54.

- F. Z. Subah, K. Deb, P. K. Dhar, and T. Koshiba, "A Deep Learning Approach to Predict Autism Spectrum Disorder Using Multisite Resting-State fMRI," Applied Sciences, vol. 11, no. 8, p. 3636, 2021. [Online]. Available: https://www.mdpi.com/2076-3417/11/8/3636.

- M. Feng and J. Xu, "Detection of ASD Children through Deep-Learning Application of fMRI," Children (Basel), vol. 10, no. 2023; 10.

- S. Saponaro et al., "Deep learning based joint fusion approach to exploit anatomical and functional brain information in autism spectrum disorders," Brain Inform, vol. 11, no. 1, p. 2024; 2.

- K. Simonyan and A. Zisserman, "Very Deep Convolutional Networks for Large-Scale Image Recognition," CoRR, vol. abs/1409.1556, 2014.

- Q. Guan et al., "Deep convolutional neural network VGG-16 model for differential diagnosing of papillary thyroid carcinomas in cytological images: a pilot study," J Cancer, vol. 10, no. 20, pp. 4876-4882, 2019.

- A. Di Martino et al., "The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism," Mol Psychiatry, vol. 19, no. 6, pp. 2014; -67.

- J. Ashburner and K. J. Friston, "Computing average shaped tissue probability templates," NeuroImage, vol. 45, no. 2, pp. 2009.

- J. Ashburner, "A fast diffeomorphic image registration algorithm," NeuroImage, vol. 38, no. 1, pp. 2007.

- J. Ashburner, "Computational anatomy with the SPM software," Magn Reson Imaging, vol. 27, no. 8, pp. 1163; -74.

- E. Daniel et al., "Cortical thinning in chemotherapy-treated older long-term breast cancer survivors," Brain Imaging Behav, vol. 17, no. 1, pp. 2023; -76.

- J. L. Whitwell, "Voxel-based morphometry: an automated technique for assessing structural changes in the brain," J Neurosci, vol. 29, no. 31, pp. 9661; -4.

- J. Ashburner and K. J. Friston, "Voxel Based Morphometry," in Encyclopedia of Neuroscience, L. R. Squire Ed. Oxford: Academic Press, 2009, pp. 471-477.

- F. P. S. Fischmeister et al., "The benefits of skull stripping in the normalization of clinical fMRI data," NeuroImage: Clinical, vol. 3, pp. 2013.

- D. A. Muhtasim, M. I. Pavel, and S. Y. Tan, "A Patch-Based CNN Built on the VGG-16 Architecture for Real-Time Facial Liveness Detection," Sustainability, vol. 14, no. 16, p. 10024, 2022. [Online]. Available: https://www.mdpi.com/2071-1050/14/16/10024.

- X. Fei, S. Wu, J. Miao, G. Wang, and L. Sun, "Lightweight-VGG: A Fast Deep Learning Architecture Based on Dimensionality Reduction and Nonlinear Enhancement for Hyperspectral Image Classification," Remote Sensing, vol. 16, no. 2, p. 259, 2024. [Online]. Available: https://www.mdpi.com/2072-4292/16/2/259.

- K. Simonyan and A. Zisserman, "Very Deep Convolutional Networks for Large-Scale Image Recognition," arXiv preprint arXiv:1409.1556, 2014.

- R. Klangbunrueang, P. Pookduang, W. Chansanam, and T. Lunrasri, "AI-Powered Lung Cancer Detection: Assessing VGG16 and CNN Architectures for CT Scan Image Classification," Informatics, vol. 12, no. 1, p. 18, 2025. [Online]. Available: https://www.mdpi.com/2227-9709/12/1/18.

- G. H. Glover, "Overview of functional magnetic resonance imaging," Neurosurg Clin N Am, vol. 22, no. 2, pp. 2011; -9.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).