Submitted: 01 April 2025

Posted: 02 April 2025

Abstract

Keywords:

1. Introduction

1.1. Why Is Data Crucial to LLM Performance?

- Without strong data governance, a main issue in LLMs is "hallucination" in the responses generated for input queries.

- Another vital issue is "data misuse", which leads to ethical violations when data usage policies are unclear or data is used without authorization.

- "Bias in data" is a major concern because it leads to biased behavior in the LLM.

- The lack of a data governance framework leads to "data breaches and weak data security", which increases the risk of various adversarial attacks (backdoor attacks, data-poisoning attacks, model inversion attacks, transfer-based black-box attacks, etc.).

- The lack of a data governance framework also raises "ethical implications and legal concerns" for LLMs.

- Avoiding LLM failures in a production pipeline requires a strong LLMOps pipeline supported by a solid data governance approach.

1.2. Addressing Data Misuse, Biases, and Ethical Challenges in the Digital Era of LLMs

1.3. Problem Statement

1.4. Objectives of the Survey

- The paper proposed the use of an AI data governance framework in the context of LLMs to improve the detection of suspicious transactions (money laundering, anomaly detection in financial transactions) [77].

- An AI-driven intelligent data framework substantially improves operational efficiency, compliance accuracy, and data integrity for the future development of AI-based work [78].

- The author stresses the importance of centering AI data governance in LLM implementation, arguing that it is more effective for model performance [79].

- The study recommends a robust data governance framework for AI-enabled healthcare systems to address ethical challenges and privacy concerns and to build trust among users of healthcare services [80].

- AI data governance frameworks automate data quality management in the banking sector to improve model performance [81].

- Data-centric governance throughout the model learning life cycle supports the responsible deployment of AI systems: it reduces the risk of deployment failure, streamlines the deployment process, and improves the solution design approach [82].

- Integrating an AI-driven data governance framework with banking systems helps ensure that data is accurate, reliable, and secure, which builds trust and accountability in the financial sector [83].

- AI is evolving rapidly in daily life, and automation is reducing many manual tasks. Trust in AI systems is therefore needed, and it should be addressed through co-governance techniques such as regulation, standards, and principles. The use of data governance frameworks improves AI maturity [84].

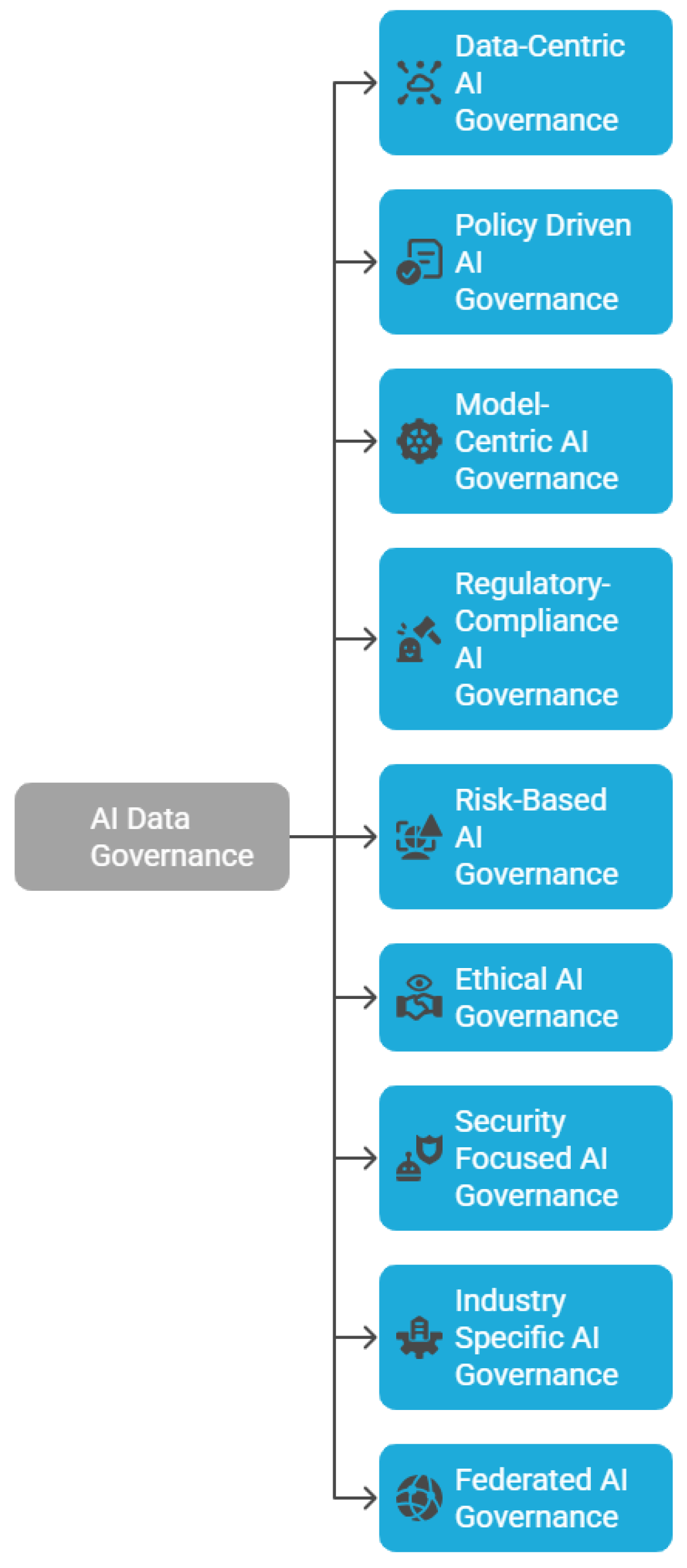

2. Foundations of AI Data Governance

2.1. Core Principles of AI Data Governance and Their Relevance to LLMs

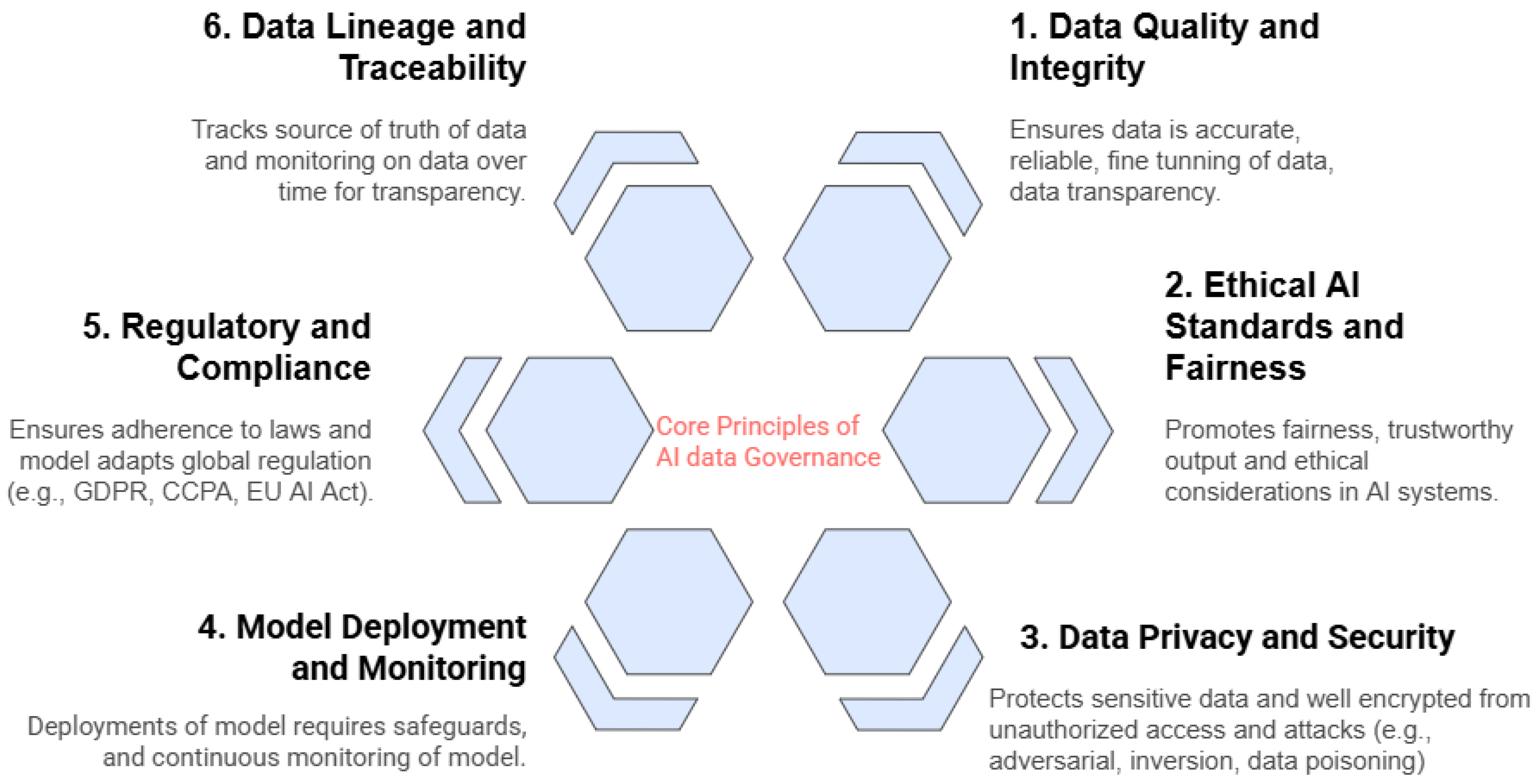

2.2. Core Principles of AI Data Governance

2.2.1. Data Quality and Integrity

2.2.2. Ethical AI Standards and Fairness

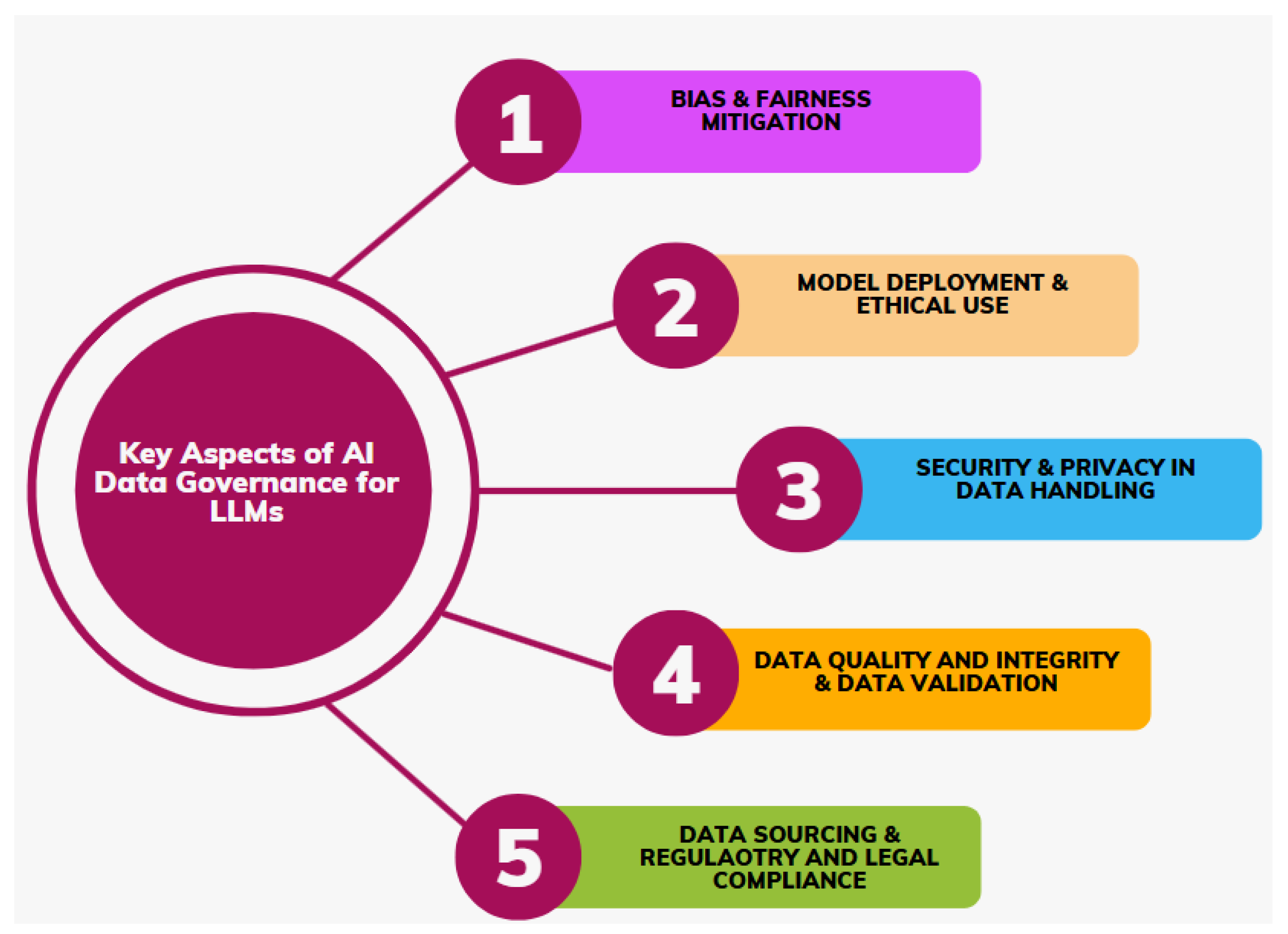

2.2.3. Data Privacy and Security

2.2.4. Model Deployment and Monitoring

2.2.5. Regulatory and Compliance

2.2.6. Data Lineage and Traceability

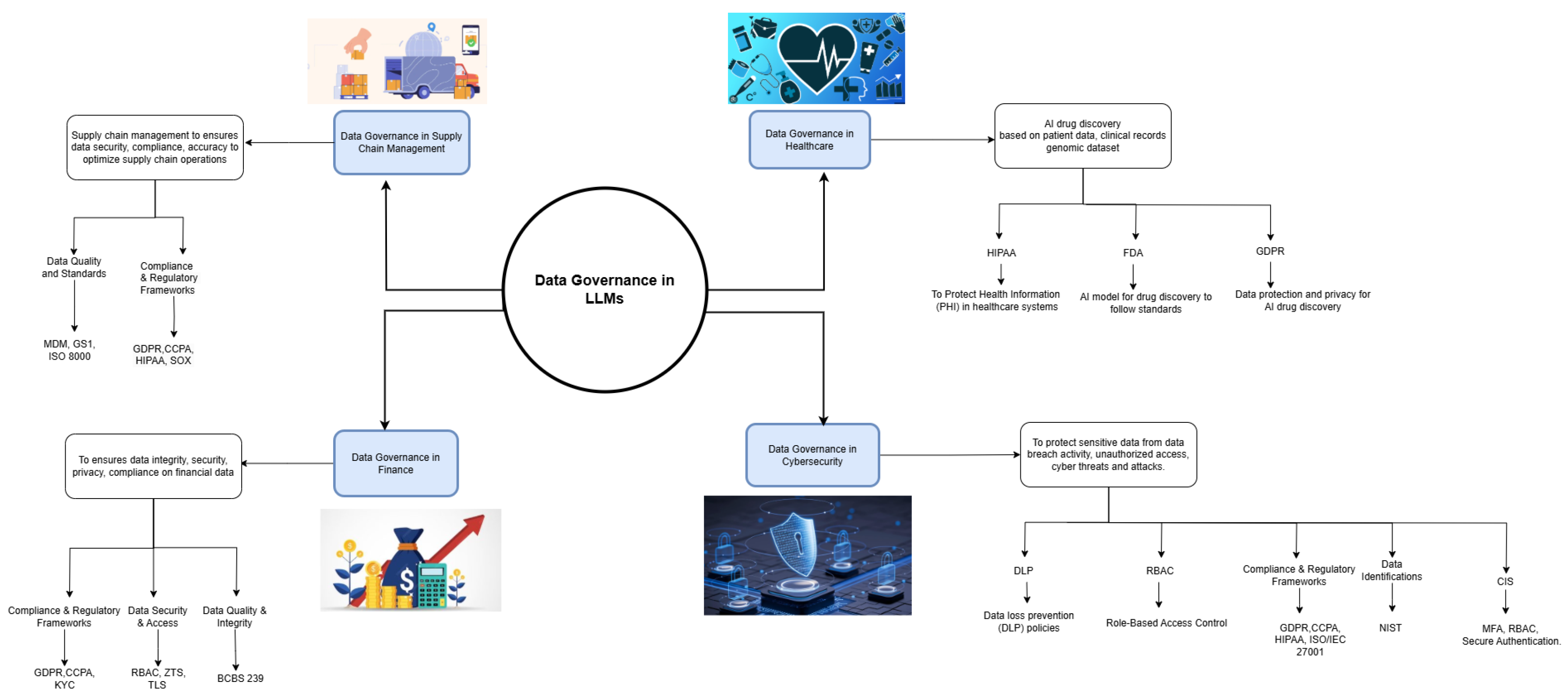

3. Use of AI Data Governance in Various Domains

3.1. AI Data Governance in Supply Chain Management

3.2. AI Data Governance in Healthcare

3.3. AI Data Governance in Cybersecurity

3.4. AI Data Governance in Finance

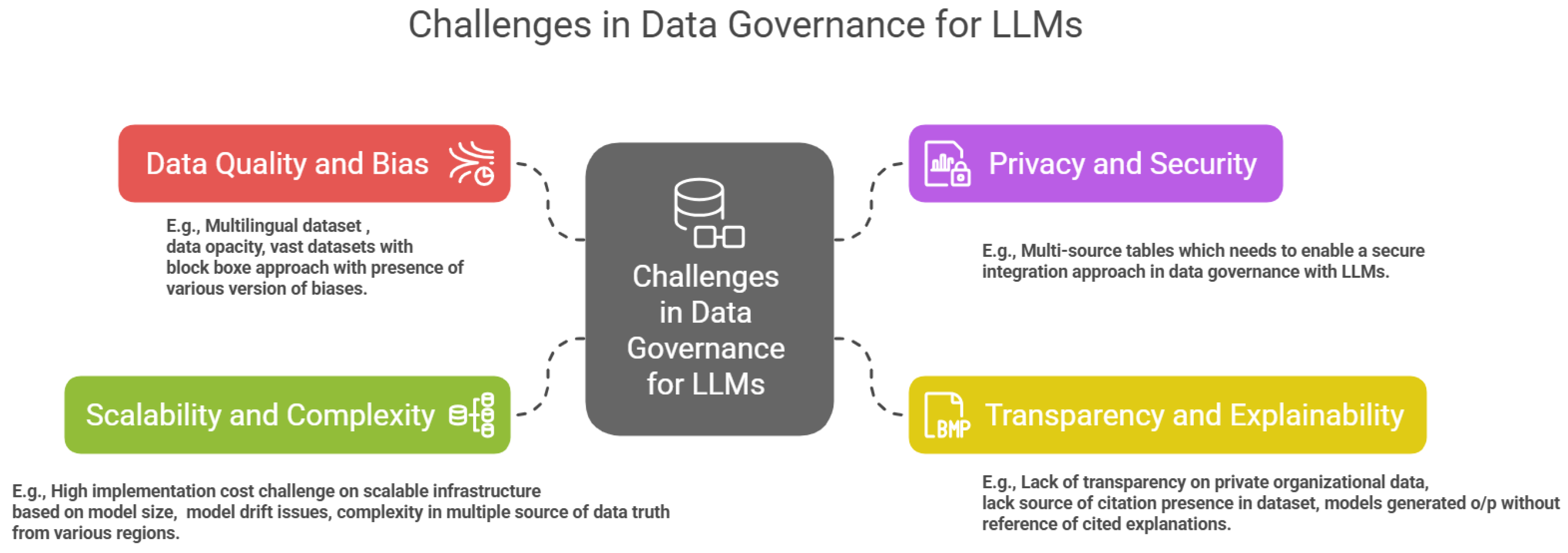

4. Challenges in Data Governance for LLMs

4.1. Data Quality and Bias

4.2. Privacy and Security

- Because LLMs are trained on large datasets, they can inadvertently memorize training data and regenerate sensitive personal information such as patient details, medical records, and financial information; they are also exposed to threats such as data-poisoning attacks [178].

- LLMs are also targets of inference attacks, in which a malicious actor compromises the vector database and extracts private data through queries. This is a major security concern across a range of attacks (e.g., privacy breaches in the model training and prediction phases, membership inference attacks, model inference attacks) [179].

- With the rapid increase in data volumes, implementing a data governance framework for LLMs is a major challenge, because data comes from multiple source tables and requires a secure integration approach [182].
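One way to make the membership inference risk above measurable during a governance audit is a loss-threshold test. The following is a minimal sketch, not a method taken from the cited works: it assumes per-example losses are already available for records known to be in the training set and for held-out records, and the loss values and the 0.5 threshold are hypothetical.

```python
def predict_members(losses, threshold):
    """Guess 'was in the training set' for every example whose loss is below the threshold."""
    return [loss < threshold for loss in losses]


def attack_advantage(member_losses, nonmember_losses, threshold):
    """Return the true-positive rate minus the false-positive rate of the threshold test.

    A value near 0 means the losses do not separate members from non-members;
    a value near 1 means membership leaks strongly through the loss.
    """
    member_hits = predict_members(member_losses, threshold)
    nonmember_hits = predict_members(nonmember_losses, threshold)
    tpr = sum(member_hits) / len(member_hits)
    fpr = sum(nonmember_hits) / len(nonmember_hits)
    return tpr - fpr


# Hypothetical per-example losses (illustrative numbers, not measured results).
member_losses = [0.05, 0.10, 0.20, 0.90]
nonmember_losses = [0.80, 1.20, 0.15, 1.50]

advantage = attack_advantage(member_losses, nonmember_losses, threshold=0.5)
print(f"membership advantage: {advantage:.2f}")
```

A governance process could track this number across model versions and flag a release when it rises, since a larger gap suggests the model treats training records differently from unseen ones.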

4.3. Transparency and Explainability

- Most LLMs are trained on private enterprise data, much of which is dark data that is not accessible to the public. This makes it difficult to establish trust in the data sources and the model output, which creates challenges for transparency, regulation, and trust [183].

- Healthcare chatbots for patient recommendations are trained on public healthcare datasets, research papers, medical literature (PubMed, WHO guidelines), and EHRs. However, such a chatbot cannot provide references for its output, which makes the model's decisions opaque [187].

- LLMs such as GPT-4, BERT, and others remain black-box systems (e.g., they have millions or billions of parameters and generate output without citing source details). This makes it very challenging to implement a data governance framework; for example, the EU AI Act and the US AI Bill of Rights call for transparency and explainability behind each decision [188,189,190].

4.4. Scalability and Complexity

- LLMs need substantial infrastructure for the safe deployment of AI models. Implementation cost rises with the scale of the architecture and the size of the model, which makes it harder to implement a data governance approach at the enterprise level [191].

- The model generates output after training on large datasets drawn from multiple sources in various regions (e.g., the US, Europe, and others). However, each region has its own AI regulations, including cross-border data compliance requirements, which makes implementing a data governance methodology challenging [194,195].

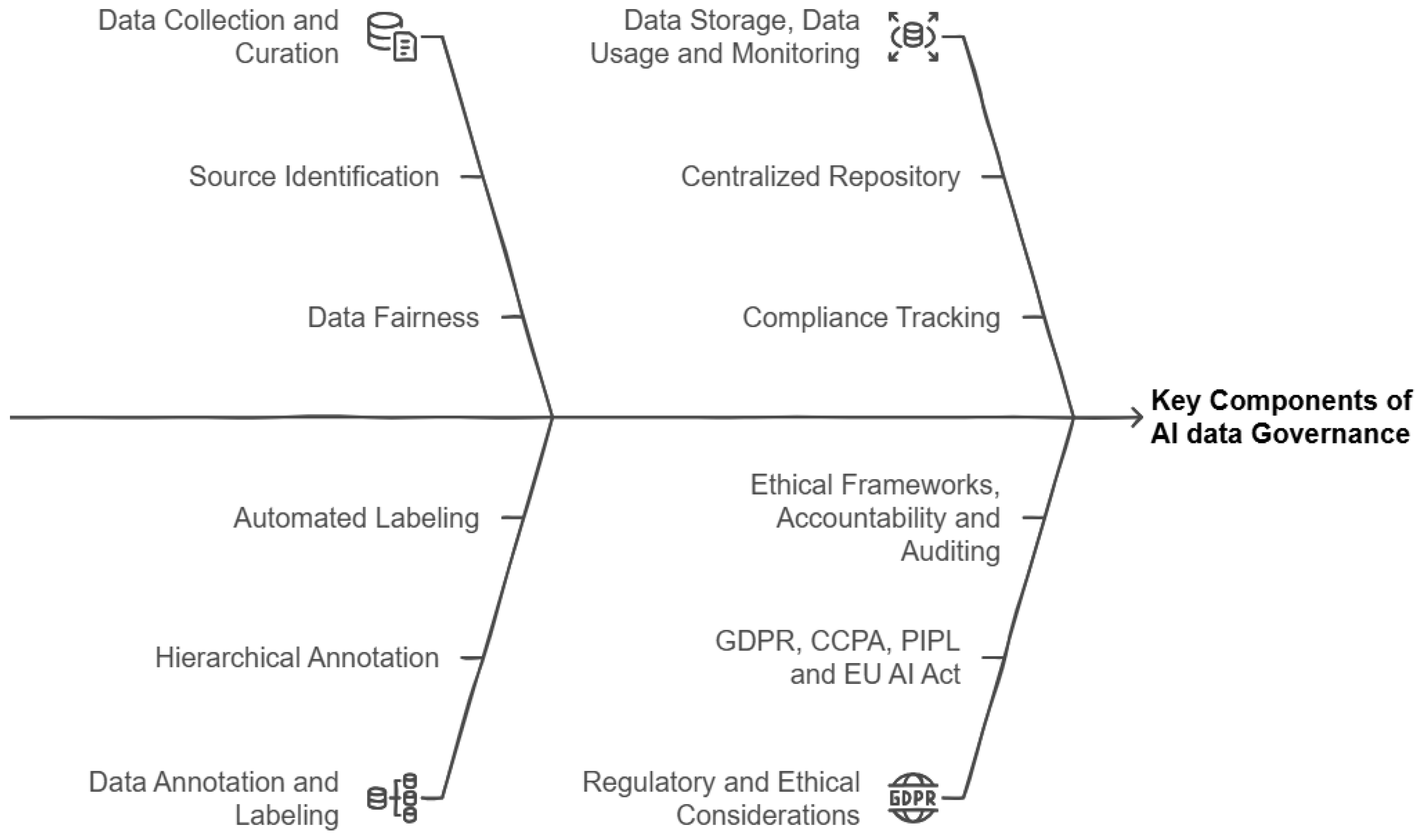

5. Key Components of AI Data Governance for LLMs

5.1. Data Collection and Curation

- Source Identification: Effective governance ensures that the data used for training and fine-tuning is of high quality [196]. Diversity is crucial when selecting data samples to enhance model training [197]; data must come from ethical sources that comply with data protection laws (e.g., GDPR, HIPAA, CCPA, and others) [198]; and human-curated data is an indicator of high data quality [199,200]. Data fairness is also a core component of data governance for LLMs, while data cataloging supports data lineage tracking and metadata management [201].
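The source-identification checks described above (licensing, regulatory clearance, human curation, lineage) can be captured in a small data catalog. The sketch below is illustrative only: the `SourceRecord` schema, the field names, and the example sources are assumptions made for this example, not a structure defined in the cited works.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class SourceRecord:
    """Catalog entry for one training or fine-tuning data source (hypothetical schema)."""
    name: str
    license: str                        # empty string means the license is unknown
    cleared_for: FrozenSet[str]         # regulations the source was reviewed against
    human_curated: bool
    derived_from: Optional[str] = None  # name of the upstream source, if any


def is_admissible(record: SourceRecord, required: FrozenSet[str]) -> bool:
    """Admit a source only if its license is known and it covers every required regulation."""
    return bool(record.license) and required <= record.cleared_for


def lineage(catalog: Dict[str, SourceRecord], name: str) -> List[str]:
    """Follow derived_from links from a source back to its root."""
    chain: List[str] = []
    current: Optional[str] = name
    while current is not None:
        if current in chain:
            raise ValueError(f"cycle in lineage at {current!r}")
        chain.append(current)
        current = catalog[current].derived_from
    return chain


# Hypothetical catalog entries.
catalog = {
    "clinical_notes_raw": SourceRecord(
        "clinical_notes_raw", "internal", frozenset({"HIPAA"}), human_curated=False),
    "clinical_notes_deidentified": SourceRecord(
        "clinical_notes_deidentified", "internal", frozenset({"HIPAA", "GDPR"}),
        human_curated=True, derived_from="clinical_notes_raw"),
    "web_scrape": SourceRecord("web_scrape", "", frozenset(), human_curated=False),
}

required = frozenset({"HIPAA", "GDPR"})
admitted = [name for name, rec in catalog.items() if is_admissible(rec, required)]
print(admitted)
print(lineage(catalog, "clinical_notes_deidentified"))
```

Keeping the upstream link on every record means that a problem found in a raw source can be traced to every dataset derived from it.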

5.2. Data Annotation and Labeling

- LLMs applied to enterprise data can benefit from hierarchical annotation of structured data, in which each item is classified at multiple levels of label granularity instead of receiving a single label. This tree structure helps LLMs improve scalability and output quality [183,202]. Hierarchical annotation also allows complex relationships and patterns within the dataset to be identified.

- Automated labeling is a crucial component of AI data governance for LLMs, because it relies on dynamic label schema integration techniques to improve the ability to understand and classify data with high precision [203]. Dynamic label schemas allow labels to evolve with data inputs and requirements, so the system adjusts automatically and labels remain accurate without manual effort [204,205].
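To make the two ideas above concrete, the following sketch stores labels in a tree (hierarchical annotation) and lets new labels be added after annotation has started (a dynamic schema). The `LabelSchema` class, the label names, and the document identifiers are hypothetical and serve only as an illustration.

```python
class LabelSchema:
    """A tree of labels that can grow after annotation has started."""

    def __init__(self):
        self._parent = {}  # label -> parent label, or None for a root

    def add(self, label, parent=None):
        """Register a label under an existing parent, or as a new root."""
        if label in self._parent:
            raise ValueError(f"label already defined: {label}")
        if parent is not None and parent not in self._parent:
            raise KeyError(f"unknown parent label: {parent}")
        self._parent[label] = parent

    def path(self, label):
        """Return the labels from the root down to `label`."""
        chain = []
        while label is not None:
            chain.append(label)
            label = self._parent[label]
        return chain[::-1]


schema = LabelSchema()
schema.add("finance")
schema.add("invoice", parent="finance")
schema.add("legal")

# Each annotation stores only the most specific label; coarser levels are derived.
annotations = {"doc-001": "invoice", "doc-002": "legal"}

# The schema evolves later without touching existing annotations.
schema.add("credit_note", parent="invoice")
annotations["doc-003"] = "credit_note"

paths = {doc: schema.path(label) for doc, label in annotations.items()}
print(paths)
```

Because only the leaf label is stored, refining the tree does not require relabeling older items; their coarser labels are recomputed from the current schema.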

5.3. Data Storage and Management

- Data storage and management are crucial to AI data governance for maintaining data quality and data integrity. A centralized data repository plays a significant role in securing storage for vast datasets [206], minimizing the risk of data leakage and helping to maintain data security.

- This work emphasizes the role of the data governance framework in mitigating data leaks by centralizing the data repository for vast datasets, transforming enterprise data management through a unified data governance methodology [207].

- This paper proposed a qualified compliance approach to align the ISO/IEC 5259 standards with Article 10 of the EU AI Act. This alignment is a key component of data governance: it improves data management and compliance tracking and helps organizations demonstrate compliance with both legal and technical standards [208].

- Current research discusses how AI data governance can support data management by monitoring compliance, making data more effective, and mitigating risk [209]. Advanced machine learning technologies can enhance data governance capabilities across multisource data integration patterns by using reference tools for data quality checks, data profiling, data cleaning, and continuous monitoring [210].
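The data quality checks and profiling mentioned above can start from very simple counts. The sketch below is a minimal illustration under assumed inputs: records are plain dictionaries, the field names are hypothetical, and a "missing" value means `None` or an empty string. It does not represent the tooling used in the cited works.

```python
def profile(rows, required_fields):
    """Count rows, missing required values per field, and exact duplicate rows."""
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required_fields
    }
    seen = set()
    duplicates = 0
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the row
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}


# Hypothetical records merged from several source tables.
rows = [
    {"id": 1, "text": "hello", "source": "crm"},
    {"id": 2, "text": "", "source": "crm"},
    {"id": 1, "text": "hello", "source": "crm"},
    {"id": 3, "text": "bye", "source": None},
]

report = profile(rows, required_fields=["text", "source"])
print(report)
```

Running such a profile on every ingestion batch and comparing the report against agreed limits is one simple form of continuous monitoring.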

5.4. Data Usage and Monitoring

- Data usage and monitoring are critical components of AI data governance frameworks: they mitigate the risk of data misuse and support compliance with applicable regulations and guidelines. Effective data governance applies data filtering and monitoring consistently across training, testing, and the secure deployment pipeline of large models [154].

- A recommended data governance mechanism is based on detecting unauthorized data access and protecting patient data in healthcare by closely monitoring processes with transparency and accountability to prevent harm. Within a conceptual data governance framework, data encryption, masking, and hashing can protect patient health information [43,211].

- The OECD recommendation on the governance of health data underlines the need to establish national governance frameworks that protect personal health data while facilitating their use for public policy purposes. It encompasses measures to identify unwanted data access to protect patient data security and privacy [212].
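The masking and hashing techniques mentioned above can be sketched with the Python standard library. This is an illustration under stated assumptions, not the framework from the cited works: the record fields and the key are hypothetical, and encryption is left out because it would need a third-party library. Keyed hashing of this kind is pseudonymization rather than anonymization, since anyone holding the key can recompute the tokens.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a value."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def protect(record: dict, key: bytes) -> dict:
    """Return a copy of the record that is safer to pass to a training pipeline."""
    return {
        "patient_token": pseudonymize(record["patient_id"], key),
        "phone": mask(record["phone"]),
        "diagnosis_code": record["diagnosis_code"],
    }


# Hypothetical key and record; a real key would come from a secret store.
key = b"demo-key-load-from-a-secret-store"
record = {"patient_id": "MRN-0042", "phone": "5551234567", "diagnosis_code": "E11.9"}

protected = protect(record, key)
print(protected)
```

Using a keyed digest instead of a plain hash keeps the same patient linked across records while making it harder to recover identifiers by hashing guessed values.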

5.5. Regulatory and Ethical Considerations

5.5.1. Global Regulatory Landscape

- General Data Protection Regulation (GDPR): The GDPR is a European Union law that sets a high standard for data protection and aims to mitigate data privacy and security vulnerabilities. It sets strict requirements for organizations regarding data handling, data processing, data breach notification, and data storage to ensure transparency and accountability. The key components of the GDPR are data protection rights, breach notification, lawful processing, extraterritorial reach, and data subject rights (e.g., Right of Access, Right to Rectification) [215,216]. Integrating the GDPR and the EU AI Act within the global regulatory landscape enhances compliance strategies and strengthens data protection and trustworthy AI systems [217].

- California Consumer Privacy Act (CCPA): The CCPA is a privacy law that governs data collection and data sharing and lets consumers control their data. It gives people in California certain rights over their personal information, such as the right to know what information is being collected, the right to see and delete that information, and the right not to have their information sold [218,219]. The CCPA protects specific groups of people, with a focus on consumer rights when it comes to data sales. It has made significant advances in protecting privacy and illustrates one of the different ways of handling privacy problems in today's digital world [220]. This law was subsequently revised and expanded by the California Privacy Rights Act (CPRA), which introduced enhanced consumer protections and enforcement mechanisms [221].

- China’s Personal Information Protection Law (PIPL): The PIPL is a comprehensive framework and a significant step in China toward data protection, data classification, and user rights on digital platforms [222]. Its main components are informed consent, data classification, and user rights. The law protects personal data by emphasizing informed consent, data classification, and remedies for data violations, and it is based on the principle of proportionality to improve data security and privacy rights [223]. It is designed to regulate the use of personal data by digital platforms, with a focus on the state’s authority over user data control and privacy practices [224].

5.5.2. Ethical Frameworks

- Stakeholder Engagement in Ensuring Ethical Data Practices: Stakeholders play a critical role in ethical data practices. Government and regulatory involvement in data risk assessment fosters trust through regulatory frameworks (e.g., CCPA, GDPR, EU AI Act, and others) and supports sustainable development [225]. Stakeholder participation in brainstorming sessions and consultations helps clarify ethical responsibilities and address ethical conflicts [226]. Ethical considerations in data analysis give researchers and developers best practices for building fair, ethical AI models and fostering trust throughout the model development lifecycle [227]. An enhanced enterprise data ethics framework fosters strategic decision-making and legitimate engagement in higher education data management by emphasizing transparency, fairness, accountability, and a stakeholder-centric approach [228].

- The Fundamental Principles of Responsible AI Development: These principles must guide the end-to-end machine learning lifecycle so that AI systems are built securely, deployed with ethical safeguards, and kept free of bias and discrimination across model iterations. A comprehensive framework and data protection laws are important for responsible AI development, which includes fairness, transparency, privacy, security, accountability, and system robustness [229]. This report highlights the importance of stakeholder participation, comprehensive monitoring systems, and structured ethical frameworks as fundamental principles for responsible AI development. These components help ensure that technological advances are consistent with ethical principles and human values, ultimately addressing issues such as accountability and algorithmic bias in line with the requirements of responsible AI governance [230,231].

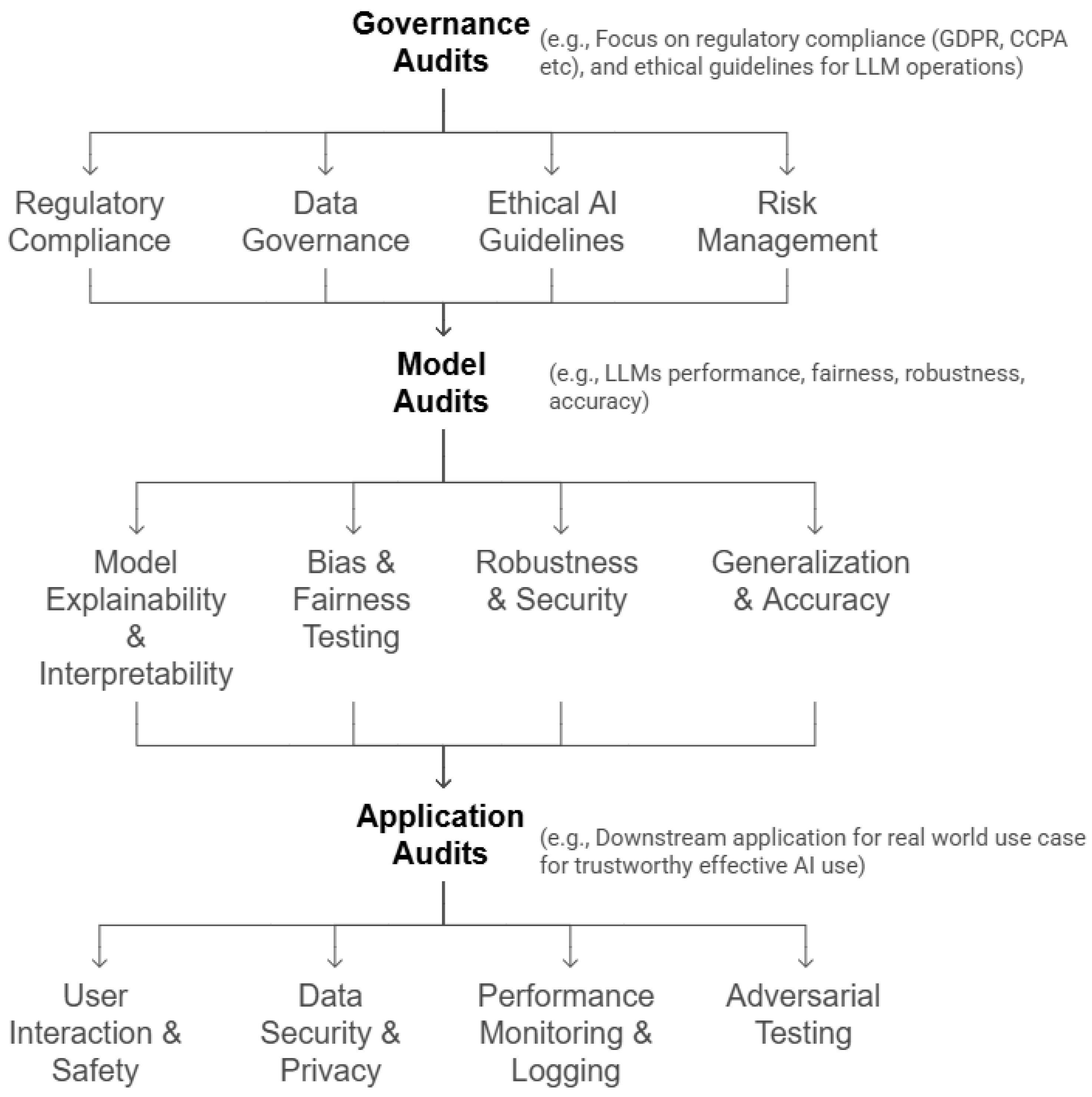

5.5.3. Accountability and Auditing

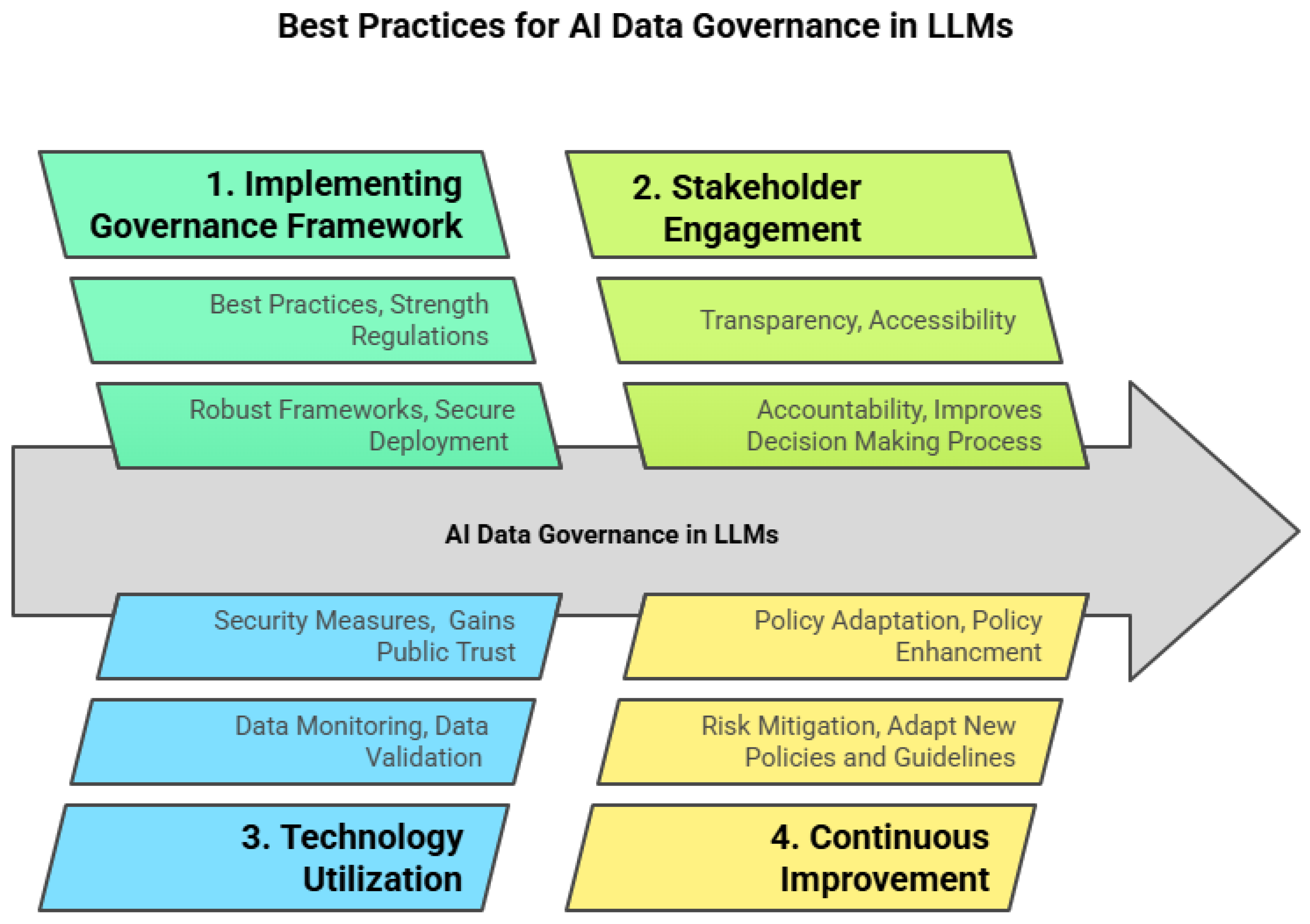

6. Best Practices for AI Data Governance in LLMs

6.1. Developing a Governance Framework

6.2. Stakeholder Engagement

6.3. Leveraging Technology

6.4. Continuous Improvement

7. Case Studies and Real-World Applications on Implementing Data Governance in LLMs

8. Open Challenges and Future Research Opportunities

8.1. Scalability of Data Governance Framework

8.2. Security Risks and Data Breaches

8.3. Data Privacy and Compliance

8.4. Data Provenance and Traceability

8.5. Human-AI Collaboration in Data Governance

8.6. Data Quality and Bias Mitigation

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| GDPR | General Data Protection Regulation |
| HIPAA | Health Insurance Portability and Accountability Act |
| CCPA | California Consumer Privacy Act |
| FCRA | Fair Credit Reporting Act |
| MDM | Master Data Management |
| FSA | Financial Sentiment Analysis |
| IT | Information Technology |
| OECD | Organisation for Economic Co-operation and Development |
| ENISA | European Union Agency for Cybersecurity |
| NIST | National Institute of Standards and Technology |
| CRS | Conversational Recommender System |
| BCBS | Basel Committee on Banking Supervision |
| SCM | Supply Chain Management |
| LLMDB | LLM-enhanced data management paradigm |
| FDA | Food and Drug Administration |
| MLOps | Machine Learning Operations |
| LLMOps | Large Language Model Operations |
| GPU | Graphics Processing Unit |
| TPU | Tensor Processing Unit |
| LLM | Large Language Model |
| AIRMF | AI Risk Management Framework |
| DLP | Data Loss Prevention |
| RBAC | Role-Based Access Control |
| ISO/IEC | International Organization for Standardization / International Electrotechnical Commission |
| MFA | Multi-Factor Authentication |
| KYC | Know Your Customer |
| TLS | Transport Layer Security |
| ML | Machine Learning |
| ZTS | Zero Trust Security |
| GS1 | Global Standards for Supply Chain and Data Management |
| SOX | Sarbanes-Oxley Act |
| PHI | Protected Health Information |
| CTI | Cyber Threat Intelligence |
| EHR | Electronic Health Records |
| EU | European Union |
| PIPL | Personal Information Protection Law |
| CPRA | California Privacy Rights Act |
| AI | Artificial Intelligence |
| SOC | Service Organization Control |
| TKG | Telecom Knowledge Governance |
| RAG | Retrieval-Augmented Generation |
| LGPD | Lei Geral de Proteção de Dados (General Data Protection Law) |
| HHH | Helpful, Honest, and Harmless |
| PII | Personally Identifiable Information |
| DVD | Data Validation Document |
| EAI | Explainable Artificial Intelligence |
| WHO | World Health Organization |
| AU | African Union |
| DPK | Data Prep Kit |
| DLG | Data lineage graphs |
| ETHOS | Ethical Technology and Holistic Oversight System |
| DataBOM | Data Bill of Materials |
| HAIRA | Healthcare AI Governance Readiness Assessment |
| GRC | Governance, Risk, and Compliance |

References

- Haque, M.A. LLMs: A Game-Changer for Software Engineers? arXiv preprint arXiv:2411.00932 2024. [CrossRef]

- Meduri, S. Revolutionizing Customer Service: The Impact of Large Language Models on Chatbot Performance. International Journal of Scientific Research in Computer Science Engineering and Information Technology 2024, 10, 721–730. [Google Scholar] [CrossRef]

- Pahune, S.; Chandrasekharan, M. Several categories of large language models (llms): A short survey. arXiv preprint arXiv:2307.10188 2023. [CrossRef]

- Vavekanand, R.; Karttunen, P.; Xu, Y.; Milani, S.; Li, H. Large Language Models in Healthcare Decision Support: A Review 2024.

- Veigulis, Z.P.; Ware, A.D.; Hoover, P.J.; Blumke, T.L.; Pillai, M.; Yu, L.; Osborne, T.F. Identifying Key Predictive Variables in Medical Records Using a Large Language Model (LLM) 2024.

- Yuan, M.; Bao, P.; Yuan, J.; Shen, Y.; Chen, Z.; Xie, Y.; Zhao, J.; Li, Q.; Chen, Y.; Zhang, L.; et al. Large language models illuminate a progressive pathway to artificial intelligent healthcare assistant. Medicine Plus 2024, p. 100030. [CrossRef]

- Zhang, K.; Meng, X.; Yan, X.; Ji, J.; Liu, J.; Xu, H.; Zhang, H.; Liu, D.; Wang, J.; Wang, X.; et al. Revolutionizing Health Care: The Transformative Impact of Large Language Models in Medicine. Journal of Medical Internet Research 2025, 27, e59069. [Google Scholar] [CrossRef]

- Acosta, J.N.; Falcone, G.J.; Rajpurkar, P.; Topol, E.J. Multimodal biomedical AI. Nature Medicine 2022, 28, 1773–1784. [Google Scholar] [CrossRef]

- Huang, K.; Altosaar, J.; Ranganath, R. Clinicalbert: Modeling clinical notes and predicting hospital readmission. arXiv preprint arXiv:1904.05342 2019. [CrossRef]

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240. [Google Scholar] [CrossRef] [PubMed]

- Santos, T.; Tariq, A.; Das, S.; Vayalpati, K.; Smith, G.H.; Trivedi, H.; Banerjee, I. PathologyBERT-pre-trained vs. a new transformer language model for pathology domain. In Proceedings of the AMIA annual symposium proceedings, 2023, Vol. 2022, p. 962.

- Christophe, C.; Kanithi, P.K.; Raha, T.; Khan, S.; Pimentel, M.A. Med42-v2: A suite of clinical llms. arXiv preprint arXiv:2408.06142 2024. [CrossRef]

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. Bloomberggpt: A large language model for finance. arXiv preprint arXiv:2303.17564 2023.

- Araci, D. FinBERT: Financial Sentiment Analysis with Pre-trained Language Models. arXiv preprint arXiv:1908.10063 2019. [CrossRef]

- Yang, H.; Liu, X.Y.; Wang, C.D. Fingpt: Open-source financial large language models. arXiv preprint arXiv:2306.06031 2023. [CrossRef]

- Zhao, Z.; Welsch, R.E. Aligning LLMs with Human Instructions and Stock Market Feedback in Financial Sentiment Analysis. arXiv preprint arXiv:2410.14926 2024. [CrossRef]

- Yu, Y.; Yao, Z.; Li, H.; Deng, Z.; Cao, Y.; Chen, Z.; Suchow, J.W.; Liu, R.; Cui, Z.; Xu, Z.; et al. Fincon: A synthesized llm multi-agent system with conceptual verbal reinforcement for enhanced financial decision making. arXiv preprint arXiv:2407.06567 2024.

- Shah, S.; Ryali, S.; Venkatesh, R. Multi-Document Financial Question Answering using LLMs. arXiv preprint arXiv:2411.07264 2024. [CrossRef]

- Wei, Q.; Yang, M.; Wang, J.; Mao, W.; Xu, J.; Ning, H. Tourllm: Enhancing llms with tourism knowledge. arXiv preprint arXiv:2407.12791 2024. [CrossRef]

- Banerjee, A.; Satish, A.; Wörndl, W. Enhancing Tourism Recommender Systems for Sustainable City Trips Using Retrieval-Augmented Generation. arXiv preprint arXiv:2409.18003 2024. [CrossRef]

- Wang, J.; Shalaby, A. Leveraging Large Language Models for Enhancing Public Transit Services. arXiv preprint arXiv:2410.14147 2024. [CrossRef]

- Zhang, Z.; Sun, Y.; Wang, Z.; Nie, Y.; Ma, X.; Sun, P.; Li, R. Large language models for mobility in transportation systems: A survey on forecasting tasks. arXiv preprint arXiv:2405.02357 2024. [CrossRef]

- Zhai, X.; Tian, H.; Li, L.; Zhao, T. Enhancing Travel Choice Modeling with Large Language Models: A Prompt-Learning Approach. arXiv preprint arXiv:2406.13558 2024. [CrossRef]

- Mo, B.; Xu, H.; Zhuang, D.; Ma, R.; Guo, X.; Zhao, J. Large language models for travel behavior prediction. arXiv preprint arXiv:2312.00819 2023. [CrossRef]

- Nie, Y.; Kong, Y.; Dong, X.; Mulvey, J.M.; Poor, H.V.; Wen, Q.; Zohren, S. A Survey of Large Language Models for Financial Applications: Progress, Prospects and Challenges. arXiv preprint arXiv:2406.11903 2024. [CrossRef]

- Papasotiriou, K.; Sood, S.; Reynolds, S.; Balch, T. AI in Investment Analysis: LLMs for Equity Stock Ratings. In Proceedings of the 5th ACM International Conference on AI in Finance, 2024, pp. 419–427.

- Fatemi, S.; Hu, Y.; Mousavi, M. A Comparative Analysis of Instruction Fine-Tuning LLMs for Financial Text Classification. arXiv preprint arXiv:2411.02476 2024. [CrossRef]

- Gebreab, S.A.; Salah, K.; Jayaraman, R.; ur Rehman, M.H.; Ellaham, S. Llm-based framework for administrative task automation in healthcare. In Proceedings of the 2024 12th International Symposium on Digital Forensics and Security (ISDFS). IEEE, 2024, pp. 1–7. [CrossRef]

- Cascella, M.; Montomoli, J.; Bellini, V.; Bignami, E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. Journal of medical systems 2023, 47, 33. [Google Scholar] [CrossRef] [PubMed]

- Palen-Michel, C.; Wang, R.; Zhang, Y.; Yu, D.; Xu, C.; Wu, Z. Investigating LLM Applications in E-Commerce. arXiv preprint arXiv:2408.12779 2024. [CrossRef]

- Fang, C.; Li, X.; Fan, Z.; Xu, J.; Nag, K.; Korpeoglu, E.; Kumar, S.; Achan, K. Llm-ensemble: Optimal large language model ensemble method for e-commerce product attribute value extraction. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2024, pp. 2910–2914.

- Yin, M.; Wu, C.; Wang, Y.; Wang, H.; Guo, W.; Wang, Y.; Liu, Y.; Tang, R.; Lian, D.; Chen, E. Entropy law: The story behind data compression and llm performance. arXiv preprint arXiv:2407.06645 2024. [CrossRef]

- Kumar, R.; Kakde, S.; Rajput, D.; Ibrahim, D.; Nahata, R.; Sowjanya, P.; Kumar, D. Pretraining Data and Tokenizer for Indic LLM. arXiv preprint arXiv:2407.12481 2024. [CrossRef]

- Lu, K.; Liang, Z.; Nie, X.; Pan, D.; Zhang, S.; Zhao, K.; Chen, W.; Zhou, Z.; Dong, G.; Zhang, W.; et al. Datasculpt: Crafting data landscapes for llm post-training through multi-objective partitioning. arXiv e-prints 2024, pp. arXiv–2409. [CrossRef]

- Choe, S.K.; Ahn, H.; Bae, J.; Zhao, K.; Kang, M.; Chung, Y.; Pratapa, A.; Neiswanger, W.; Strubell, E.; Mitamura, T.; et al. What is Your Data Worth to GPT? LLM-Scale Data Valuation with Influence Functions. arXiv preprint arXiv:2405.13954 2024. [CrossRef]

- Jiao, F.; Ding, B.; Luo, T.; Mo, Z. Panda llm: Training data and evaluation for open-sourced chinese instruction-following large language models. arXiv preprint arXiv:2305.03025 2023. [CrossRef]

- Gan, Z.; Liu, Y. Towards a Theoretical Understanding of Synthetic Data in LLM Post-Training: A Reverse-Bottleneck Perspective. arXiv preprint arXiv:2410.01720 2024. [CrossRef]

- Wood, D.; Lublinsky, B.; Roytman, A.; Singh, S.; Adam, C.; Adebayo, A.; An, S.; Chang, Y.C.; Dang, X.H.; Desai, N.; et al. Data-Prep-Kit: getting your data ready for LLM application development. arXiv preprint arXiv:2409.18164 2024. [CrossRef]

- Liu, Z. Cultural Bias in Large Language Models: A Comprehensive Analysis and Mitigation Strategies. Journal of Transcultural Communication 2024.

- Khola, J.; Bansal, S.; Punia, K.; Pal, R.; Sachdeva, R. Comparative Analysis of Bias in LLMs through Indian Lenses. In Proceedings of the 2024 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), 2024, pp. 1–6. [CrossRef]

- Talboy, A.N.; Fuller, E. Challenging the appearance of machine intelligence: Cognitive bias in LLMs and Best Practices for Adoption. arXiv preprint arXiv:2304.01358 2023. [CrossRef]

- Faridoon, A.; Kechadi, M.T. Healthcare Data Governance, Privacy, and Security-A Conceptual Framework. In Proceedings of the EAI International Conference on Body Area Networks. Springer, 2024, pp. 261–271.

- Gavgani, V.Z.; Pourrasmi, A. Data Governance Navigation for Advanced Operations in Healthcare Excellence. Depiction of Health 2024, 15, 249–254. [Google Scholar] [CrossRef]

- Raza, M.A. Cyber Security and Data Privacy in the Era of E-Governance. Social Science Journal for Advanced Research 2024, 4, 5–9. [Google Scholar] [CrossRef]

- Du, X.; Xiao, C.; Li, Y. Haloscope: Harnessing unlabeled llm generations for hallucination detection. arXiv preprint arXiv:2409.17504 2024.

- Li, R.; Bagade, T.; Martinez, K.; Yasmin, F.; Ayala, G.; Lam, M.; Zhu, K. A Debate-Driven Experiment on LLM Hallucinations and Accuracy. arXiv preprint arXiv:2410.19485 2024. [CrossRef]

- Liu, X. A Survey of Hallucination Problems Based on Large Language Models. Applied and Computational Engineering 2024, 97, 24–30. [Google Scholar] [CrossRef]

- Reddy, G.P.; Pavan Kumar, Y.V.; Prakash, K.P. Hallucinations in Large Language Models (LLMs). In Proceedings of the 2024 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), April 2024, pp. 1–6. [CrossRef]

- Zhui, L.; Fenghe, L.; Xuehu, W.; Qining, F.; Wei, R. Ethical considerations and fundamental principles of large language models in medical education. Journal of Medical Internet Research 2024, 26, e60083. [Google Scholar] [CrossRef]

- Shah, S.B.; Thapa, S.; Acharya, A.; Rauniyar, K.; Poudel, S.; Jain, S.; Masood, A.; Naseem, U. Navigating the Web of Disinformation and Misinformation: Large Language Models as Double-Edged Swords. IEEE Access 2024, pp. 1–1. [CrossRef]

- Ma, T. LLM Echo Chamber: personalized and automated disinformation. arXiv preprint arXiv:2409.16241 2024. [CrossRef]

- Pahune, S.; Akhtar, Z. Transitioning from MLOps to LLMOps: Navigating the Unique Challenges of Large Language Models. Information 2025, 16, 87. [Google Scholar] [CrossRef]

- Tie, J.; Yao, B.; Li, T.; Ahmed, S.I.; Wang, D.; Zhou, S. LLMs are Imperfect, Then What? An Empirical Study on LLM Failures in Software Engineering. arXiv preprint arXiv:2411.09916 2024. [CrossRef]

- Menshawy, A.; Nawaz, Z.; Fahmy, M. Navigating Challenges and Technical Debt in Large Language Models Deployment. In Proceedings of the 4th Workshop on Machine Learning and Systems, New York, NY, USA, 2024; EuroMLSys ’24, pp. 192–199. [CrossRef]

- Chen, T. Challenges and Opportunities in Integrating LLMs into Continuous Integration/Continuous Deployment (CI/CD) Pipelines. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), 2024, pp. 364–367. [CrossRef]

- Fakeyede, O.G.; Okeleke, P.A.; Hassan, A.; Iwuanyanwu, U.; Adaramodu, O.R.; Oyewole, O.O. Navigating data privacy through IT audits: GDPR, CCPA, and beyond. International Journal of Research in Engineering and Science 2023, 11. [Google Scholar]

- Hu, S.; Wang, P.; Yao, Y.; Lu, Z. " I Always Felt that Something Was Wrong.": Understanding Compliance Risks and Mitigation Strategies when Professionals Use Large Language Models. arXiv preprint arXiv:2411.04576 2024. [CrossRef]

- Hassani, S. Enhancing legal compliance and regulation analysis with large language models. In Proceedings of the 2024 IEEE 32nd International Requirements Engineering Conference (RE). IEEE, 2024, pp. 507–511. [CrossRef]

- Berger, A.; Hillebrand, L.; Leonhard, D.; Deußer, T.; De Oliveira, T.B.F.; Dilmaghani, T.; Khaled, M.; Kliem, B.; Loitz, R.; Bauckhage, C.; et al. Towards Automated Regulatory Compliance Verification in Financial Auditing with Large Language Models. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), 2023, pp. 4626–4635. [CrossRef]

- Aaronson, S.A. Data Dysphoria: The Governance Challenge Posed by Large Learning Models. Available at SSRN 4554580 2023.

- Cheong, I.; Caliskan, A.; Kohno, T. Envisioning legal mitigations for LLM-based intentional and unintentional harms. Adm. Law J 2022.

- Glukhov, D.; Han, Z.; Shumailov, I.; Papyan, V.; Papernot, N. Breach By A Thousand Leaks: Unsafe Information Leakage in ‘Safe’ AI Responses. arXiv preprint arXiv:2407.02551 2024. [CrossRef]

- Madhavan, D. Enterprise Data Governance: A Comprehensive Framework for Ensuring Data Integrity, Security, and Compliance in Modern Organizations. International Journal of Scientific Research in Computer Science, Engineering and Information Technology 2024. [CrossRef]

- Rejeleene, R.; Xu, X.; Talburt, J. Towards trustable language models: Investigating information quality of large language models. arXiv preprint arXiv:2401.13086 2024. [CrossRef]

- Yang, J.; Wang, Z.; Lin, Y.; Zhao, Z. Problematic Tokens: Tokenizer Bias in Large Language Models. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData). IEEE, 2024, pp. 6387–6393. [CrossRef]

- Balloccu, S.; Schmidtová, P.; Lango, M.; Dušek, O. Leak, cheat, repeat: Data contamination and evaluation malpractices in closed-source LLMs. arXiv preprint arXiv:2402.03927 2024. [CrossRef]

- Abdelnabi, S.; Fay, A.; Cherubin, G.; Salem, A.; Fritz, M.; Paverd, A. Are you still on track!? Catching LLM Task Drift with Activations. arXiv preprint arXiv:2406.00799 2024. [CrossRef]

- Würsch, M.; David, D.P.; Mermoud, A. Monitoring Emerging Trends in LLM Research. Large 2024, p. 153.

- Mannapur, S.B. Machine Learning Drift Detection and Concept Drift Analysis: Real-time Monitoring and Adaptive Model Maintenance. CSEIT 2024. [CrossRef]

- Pai, Y.T.; Sun, N.E.; Li, C.T.; Lin, S.d. Incremental Data Drifting: Evaluation Metrics, Data Generation, and Approach Comparison. ACM Trans. Intell. Syst. Technol. 2024, 15. [Google Scholar] [CrossRef]

- Sharma, V.; Mousavi, E.; Gajjar, D.; Madathil, K.; Smith, C.; Matos, N. Regulatory framework around data governance and external benchmarking. Journal of Legal Affairs and Dispute Resolution in Engineering and Construction 2022, 14, 04522006. [Google Scholar] [CrossRef]

- Vardia, A.S.; Chaudhary, A.; Agarwal, S.; Sagar, A.K.; Shrivastava, G. Cloud Security Essentials: A Detailed Exploration. Emerging Threats and Countermeasures in Cybersecurity 2025, pp. 413–432. [CrossRef]

- Sainz, O.; Campos, J.A.; García-Ferrero, I.; Etxaniz, J.; de Lacalle, O.L.; Agirre, E. NLP evaluation in trouble: On the need to measure LLM data contamination for each benchmark. arXiv preprint arXiv:2310.18018 2023. [CrossRef]

- Perełkiewicz, M.; Poświata, R. A Review of the Challenges with Massive Web-mined Corpora Used in Large Language Models Pre-Training. arXiv preprint arXiv:2407.07630 2024. [CrossRef]

- Jiao, J.; Afroogh, S.; Xu, Y.; Phillips, C. Navigating LLM ethics: Advancements, challenges, and future directions. arXiv preprint arXiv:2406.18841 2024. [CrossRef]

- Peng, B.; Chen, K.; Li, M.; Feng, P.; Bi, Z.; Liu, J.; Niu, Q. Securing large language models: Addressing bias, misinformation, and prompt attacks. arXiv preprint arXiv:2409.08087 2024. [CrossRef]

- Mhammad, A.F.; Agarwal, R.; Columbo, T.; Vigorito, J. Generative & responsible AI-LLMs use in differential governance. In Proceedings of the 2023 International Conference on Computational Science and Computational Intelligence (CSCI). IEEE, 2023, pp. 291–295. [CrossRef]

- Kumari, B. Intelligent Data Governance Frameworks: A Technical Overview. International Journal of Scientific Research in Computer Science, Engineering and Information Technology 2024. [CrossRef]

- Gupta, R.; Walker, L.; Corona, R.; Fu, S.; Petryk, S.; Napolitano, J.; Darrell, T.; Reddie, A.W. Data-Centric AI Governance: Addressing the Limitations of Model-Focused Policies. arXiv preprint arXiv:2409.17216 2024. [CrossRef]

- Arigbabu, A.T.; Olaniyi, O.O.; Adigwe, C.S.; Adebiyi, O.O.; Ajayi, S.A. Data governance in AI-enabled healthcare systems: A case of the project nightingale. Asian Journal of Research in Computer Science 2024, 17, 85–107. [Google Scholar] [CrossRef]

- Yandrapalli, V. AI-Powered Data Governance: A Cutting-Edge Method for Ensuring Data Quality for Machine Learning Applications. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE). IEEE, 2024, pp. 1–6. [CrossRef]

- McGregor, S.; Hostetler, J. Data-centric governance. arXiv preprint arXiv:2302.07872 2023. [CrossRef]

- Khan, I. AI-powered data governance: ensuring integrity in banking’s technological frontier 2023.

- Tjondronegoro, D.W. Strategic AI Governance: Insights from Leading Nations. arXiv preprint arXiv:2410.01819 2024. [CrossRef]

- Schiff, D.; Biddle, J.; Borenstein, J.; Laas, K. What’s next for AI ethics, policy, and governance? A global overview. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 2020, pp. 153–158.

- Arnold, G.; Ludwick, S.; Mohsen Fatemi, S.; Krause, R.; Long, L.A.N. Policy entrepreneurship for transformative governance. European Policy Analysis 2024. [CrossRef]

- Jakubik, J.; Vössing, M.; Kühl, N.; Walk, J.; Satzger, G. Data-centric artificial intelligence. Business & Information Systems Engineering 2024, pp. 1–9.

- Majeed, A.; Hwang, S.O. Technical analysis of data-centric and model-centric artificial intelligence. IT Professional 2024, 25, 62–70. [Google Scholar] [CrossRef]

- Gisolfi, N. Model-centric verification of artificial intelligence. PhD thesis, Carnegie Mellon University, 2022.

- Currie, N. Risk based approaches to artificial intelligence. Crowe Data Management 2019. [Google Scholar]

- Lütge, C.; Hohma, E.; Boch, A.; Poszler, F.; Corrigan, C. On a Risk-Based Assessment Approach to AI Ethics Governance 2022.

- Lee, C.A.; Chow, K.; Chan, H.A.; Lun, D.P.K. Decentralized governance and artificial intelligence policy with blockchain-based voting in federated learning. Frontiers in Research Metrics and Analytics 2023, 8, 1035123. [Google Scholar] [CrossRef]

- Pencina, M.J.; McCall, J.; Economou-Zavlanos, N.J. A federated registration system for artificial intelligence in health. JAMA 2024, 332, 789–790. [Google Scholar] [CrossRef]

- Lim, H.Y.F. Regulatory compliance. In Artificial Intelligence; Edward Elgar Publishing, 2022; pp. 85–108.

- Aziza, O.R.; Uzougbo, N.S.; Ugwu, M.C. The impact of artificial intelligence on regulatory compliance in the oil and gas industry. World Journal of Advanced Research and Reviews 2023, 19, 1559–1570. [Google Scholar] [CrossRef]

- Eitel-Porter, R. Beyond the promise: implementing ethical AI. AI and Ethics 2021, 1, 73–80. [Google Scholar] [CrossRef]

- Daly, A.; Hagendorff, T.; Hui, L.; Mann, M.; Marda, V.; Wagner, B.; Wang, W.; Witteborn, S. Artificial intelligence governance and ethics: global perspectives. arXiv preprint arXiv:1907.03848 2019. [CrossRef]

- Sidhpurwala, H.; Mollett, G.; Fox, E.; Bestavros, M.; Chen, H. Building Trust: Foundations of Security, Safety and Transparency in AI. arXiv preprint arXiv:2411.12275 2024. [CrossRef]

- Singh, K.; Saxena, R.; Kumar, B. AI Security: Cyber Threats and Threat-Informed Defense. In Proceedings of the 2024 8th Cyber Security in Networking Conference (CSNet). IEEE, 2024, pp. 305–312. [CrossRef]

- Bowen, G.; Sothinathan, J.; Bowen, R. Technological Governance (Cybersecurity and AI): Role of Digital Governance. In Cybersecurity and Artificial Intelligence: Transformational Strategies and Disruptive Innovation; Springer, 2024; pp. 143–161.

- Savaş, S.; Karataş, S. Cyber governance studies in ensuring cybersecurity: an overview of cybersecurity governance. International Cybersecurity Law Review 2022, 3, 7–34. [Google Scholar] [CrossRef]

- Lal, S.; Singh, B.; Kaunert, C. Role of Artificial Intelligence (AI) and Intellectual Property Rights (IPR) in Transforming Drug Discovery and Development in the Life Sciences: Legal and Ethical Concerns. Library of Progress-Library Science, Information Technology & Computer 2024, 44. [Google Scholar]

- Mirakhori, F.; Niazi, S.K. Harnessing the AI/ML in Drug and Biological Products Discovery and Development: The Regulatory Perspective. Pharmaceuticals 2025, 18, 47. [Google Scholar] [CrossRef]

- Price, W.N., II. Distributed governance of medical AI. SMU Sci. & Tech. L. Rev. 2022, 25, 3. [Google Scholar]

- Han, Y.; Tao, J. Revolutionizing Pharma: Unveiling the AI and LLM Trends in the Pharmaceutical Industry. arXiv preprint arXiv:2401.10273 2024. [CrossRef]

- Tripathi, S.; Gabriel, K.; Tripathi, P.K.; Kim, E. Large language models reshaping molecular biology and drug development. Chemical Biology & Drug Design 2024, 103, e14568. [Google Scholar]

- Liu, J.; Liu, S.; et al. Applications of Large Language Models in Clinical Practice: Path, Challenges, and Future Perspectives 2024.

- Dou, Y.; Zhao, X.; Zou, H.; Xiao, J.; Xi, P.; Peng, S. ShennongGPT: A Tuning Chinese LLM for Medication Guidance. In Proceedings of the 2023 IEEE International Conference on Medical Artificial Intelligence (MedAI). IEEE, 2023, pp. 67–72. [CrossRef]

- Zhao, H.; Liu, Z.; Wu, Z.; Li, Y.; Yang, T.; Shu, P.; Xu, S.; Dai, H.; Zhao, L.; Mai, G.; et al. Revolutionizing finance with LLMs: An overview of applications and insights. arXiv preprint arXiv:2401.11641 2024. [CrossRef]

- Li, Y.; Wang, S.; Ding, H.; Chen, H. Large language models in finance: A survey. In Proceedings of the Fourth ACM International Conference on AI in Finance, 2023, pp. 374–382.

- Kong, Y.; Nie, Y.; Dong, X.; Mulvey, J.M.; Poor, H.V.; Wen, Q.; Zohren, S. Large Language Models for Financial and Investment Management: Models, Opportunities, and Challenges. Journal of Portfolio Management 2024, 51. [Google Scholar] [CrossRef]

- Yuan, Z.; Wang, K.; Zhu, S.; Yuan, Y.; Zhou, J.; Zhu, Y.; Wei, W. FinLLMs: A Framework for Financial Reasoning Dataset Generation with Large Language Models. arXiv preprint arXiv:2401.10744 2024. [CrossRef]

- Febrian, G.F.; Figueredo, G. KemenkeuGPT: Leveraging a Large Language Model on Indonesia’s Government Financial Data and Regulations to Enhance Decision Making. arXiv preprint arXiv:2407.21459 2024. [CrossRef]

- Clairoux-Trepanier, V.; Beauchamp, I.M.; Ruellan, E.; Paquet-Clouston, M.; Paquette, S.O.; Clay, E. The use of large language models (LLM) for cyber threat intelligence (CTI) in cybercrime forums. arXiv preprint arXiv:2408.03354 2024. [CrossRef]

- Shafee, S.; Bessani, A.; Ferreira, P.M. Evaluation of LLM chatbots for OSINT-based cyber threat awareness. arXiv preprint arXiv:2401.15127 2024. [CrossRef]

- Wang, F. Using large language models to mitigate ransomware threats 2023.

- Zangana, H.M. Harnessing the Power of Large Language Models. Application of Large Language Models (LLMs) for Software Vulnerability Detection 2024, p. 1.

- Yang, J.; Chi, Q.; Xu, W.; Yu, H. Research on adversarial attack and defense of large language models. Applied and Computational Engineering 2024, 93, 105–113. [Google Scholar] [CrossRef]

- Abdali, S.; Anarfi, R.; Barberan, C.; He, J. Securing Large Language Models: Threats, Vulnerabilities and Responsible Practices. arXiv preprint arXiv:2403.12503 2024. [CrossRef]

- Ashiwal, V.; Finster, S.; Dawoud, A. LLM-based vulnerability sourcing from unstructured data. In Proceedings of the 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW). IEEE, 2024, pp. 634–641. [CrossRef]

- Srivastava, S.K.; Routray, S.; Bag, S.; Gupta, S.; Zhang, J.Z. Exploring the Potential of Large Language Models in Supply Chain Management: A Study Using Big Data. Journal of Global Information Management (JGIM) 2024, 32, 1–29. [Google Scholar] [CrossRef]

- Wang, S.; Zhao, Y.; Hou, X.; Wang, H. Large language model supply chain: A research agenda. ACM Transactions on Software Engineering and Methodology 2024. [Google Scholar] [CrossRef]

- Xu, W.; Xiao, J.; Chen, J. Leveraging large language models to enhance personalized recommendations in e-commerce. In Proceedings of the 2024 International Conference on Electrical, Communication and Computer Engineering (ICECCE). IEEE, 2024, pp. 1–6. [CrossRef]

- Zhu, J.; Lin, J.; Dai, X.; Chen, B.; Shan, R.; Zhu, J.; Tang, R.; Yu, Y.; Zhang, W. Lifelong personalized low-rank adaptation of large language models for recommendation. arXiv preprint arXiv:2408.03533 2024. [CrossRef]

- Mohanty, I. Recommendation Systems in the Era of LLMs. In Proceedings of the 15th Annual Meeting of the Forum for Information Retrieval Evaluation, 2023, pp. 142–144.

- Li, C.; Deng, Y.; Hu, H.; Kan, M.Y.; Li, H. Incorporating External Knowledge and Goal Guidance for LLM-based Conversational Recommender Systems. arXiv preprint arXiv:2405.01868 2024. [CrossRef]

- Alhafni, B.; Vajjala, S.; Bannò, S.; Maurya, K.K.; Kochmar, E. LLMs in education: Novel perspectives, challenges, and opportunities. arXiv preprint arXiv:2409.11917 2024. [CrossRef]

- Leinonen, J.; MacNeil, S.; Denny, P.; Hellas, A. Using Large Language Models for Teaching Computing. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 2, 2024, p. 1901. [CrossRef]

- Zdravkova, K.; Dalipi, F.; Ahlgren, F. Integration of Large Language Models into Higher Education: A Perspective from Learners. In Proceedings of the 2023 International Symposium on Computers in Education (SIIE). IEEE, 2023, pp. 1–6. [CrossRef]

- Jadhav, D.; Agrawal, S.; Jagdale, S.; Salunkhe, P.; Salunkhe, R. AI-Driven Text-to-Multimedia Content Generation: Enhancing Modern Content Creation. In Proceedings of the 2024 8th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC). IEEE, 2024, pp. 1610–1615. [CrossRef]

- Li, S.; Li, X.; Chiariglione, L.; Luo, J.; Wang, W.; Yang, Z.; Mandic, D.; Fujita, H. Introduction to the Special Issue on AI-Generated Content for Multimedia. IEEE Transactions on Circuits and Systems for Video Technology 2024, 34, 6809–6813. [Google Scholar] [CrossRef]

- Yao, Y.; Duan, J.; Xu, K.; Cai, Y.; Sun, Z.; Zhang, Y. A survey on large language model (LLM) security and privacy: The good, the bad, and the ugly. High-Confidence Computing 2024, p. 100211. [CrossRef]

- Nazi, Z.A.; Peng, W. Large language models in healthcare and medical domain: A review. In Proceedings of the Informatics. MDPI, 2024, Vol. 11, p. 57. [CrossRef]

- Huang, J.; Chang, K.C.C. Citation: A key to building responsible and accountable large language models. arXiv preprint arXiv:2307.02185 2023. [CrossRef]

- Liu, Y.; Yao, Y.; Ton, J.F.; Zhang, X.; Guo, R.; Cheng, H.; Klochkov, Y.; Taufiq, M.F.; Li, H. Trustworthy LLMs: a survey and guideline for evaluating large language models’ alignment. arXiv preprint arXiv:2308.05374 2023. [CrossRef]

- Carlini, N.; Tramèr, F.; Wallace, E.; Jagielski, M.; Herbert-Voss, A.; Lee, K.; Roberts, A.; Brown, T.; Song, D.; Erlingsson, Ú.; et al. Extracting Training Data from Large Language Models. In Proceedings of the 30th USENIX Security Symposium, 2021, pp. 2633–2650.

- Pan, X.; Zhang, M.; Ji, S.; Yang, M. Privacy risks of general-purpose language models. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP). IEEE, 2020, pp. 1314–1331. [CrossRef]

- Zimelewicz, E.; Kalinowski, M.; Mendez, D.; Giray, G.; Santos Alves, A.P.; Lavesson, N.; Azevedo, K.; Villamizar, H.; Escovedo, T.; Lopes, H.; et al. ML-enabled systems model deployment and monitoring: Status quo and problems. In Proceedings of the International Conference on Software Quality. Springer, 2024, pp. 112–131. [CrossRef]

- Bodor, A.; Hnida, M.; Najima, D. From Development to Deployment: An Approach to MLOps Monitoring for Machine Learning Model Operationalization. In Proceedings of the 2023 14th International Conference on Intelligent Systems: Theories and Applications (SITA), 2023, pp. 1–7. [CrossRef]

- Roberts, T.; Tonna, S.J. Extending the Governance Framework for Machine Learning Validation and Ongoing Monitoring. In Risk Modeling: Practical Applications of Artificial Intelligence, Machine Learning, and Deep Learning; Wiley, 2022; chapter 7. [CrossRef]

- Nogare, D.; Silveira, I.F. Experimentation, Deployment and Monitoring Machine Learning Models: Approaches for Applying MLOps. Revistaft 2024, 28, 55. [Google Scholar] [CrossRef]

- Mehdi, A.; Bali, M.K.; Abbas, S.I.; Singh, M. Unleashing the Potential of Grafana: A Comprehensive Study on Real-Time Monitoring and Visualization. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), 2023, pp. 1–8. [CrossRef]

- Samudrala, L.N.R. Automated SLA Monitoring in AWS Cloud Environments - A Comprehensive Approach Using Dynatrace. Journal of Artificial Intelligence & Cloud Computing 2024.

- Menon, V.; Jesudas, J.; S.B, G. Model Monitoring with Grafana and Dynatrace: A Comprehensive Framework for Ensuring ML Model Performance. International Journal of Advanced Research 2024, pp. 54–63.

- Yadav, S. Balancing Profitability and Risk: The Role of Risk Appetite in Mitigating Credit Risk Impact. International Scientific Journal of Economics and Management 2024. [Google Scholar] [CrossRef]

- Anil, V.K.S.; Babatope, A. Global Journal of Engineering and Technology Advances. Global Journal of Engineering and Technology Advances 2024, 21, 190–202. [Google Scholar] [CrossRef]

- Zhang, S.; Ye, L.; Yi, X.; Tang, J.; Shui, B.; Xing, H.; Liu, P.; Li, H. "Ghost of the past": identifying and resolving privacy leakage from LLM’s memory through proactive user interaction. arXiv preprint arXiv:2410.14931 2024. [CrossRef]

- Asthana, S.; Mahindru, R.; Zhang, B.; Sanz, J. Adaptive PII Mitigation Framework for Large Language Models. arXiv preprint arXiv:2501.12465 2025. [CrossRef]

- Kalinin, M.; Poltavtseva, M.; Zegzhda, D. Ensuring the Big Data Traceability in Heterogeneous Data Systems. In Proceedings of the 2023 International Russian Automation Conference (RusAutoCon), 2023, pp. 775–780. [CrossRef]

- Falster, D.; FitzJohn, R.G.; Pennell, M.W.; Cornwell, W.K. Versioned data: why it is needed and how it can be achieved (easily and cheaply). PeerJ PrePrints 2017, 5, e3401v1. [Google Scholar]

- Mirchandani, S.; Xia, F.; Florence, P.; Ichter, B.; Driess, D.; Arenas, M.G.; Rao, K.; Sadigh, D.; Zeng, A. Large language models as general pattern machines. arXiv preprint arXiv:2307.04721 2023. [CrossRef]

- Chen, Y.; Zhao, Y.; Li, X.; Zhang, J.; Long, J.; Zhou, F. An open dataset of data lineage graphs for data governance research. Visual Informatics 2024, 8, 1–5. [Google Scholar] [CrossRef]

- Kramer, S.G. Artificial Intelligence in the Supply Chain: Legal Issues and Compliance Challenges. Journal of Supply Chain Management, Logistics and Procurement 2024, 7. [Google Scholar] [CrossRef]

- Hausenloy, J.; McClements, D.; Thakur, M. Towards Data Governance of Frontier AI Models. arXiv preprint arXiv:2412.03824 2024. [CrossRef]

- Liu, Y.; Zhang, D.; Xia, B.; Anticev, J.; Adebayo, T.; Xing, Z.; Machao, M. Blockchain-Enabled Accountability in Data Supply Chain: A Data Bill of Materials Approach. In Proceedings of the 2024 IEEE International Conference on Blockchain (Blockchain). IEEE, 2024, pp. 557–562. [CrossRef]

- Azari, M.; Arif, J.; Moustabchir, H.; Jawab, F. Navigating Challenges and Leveraging Future Trends in AI and Machine Learning for Supply Chains. In AI and Machine Learning Applications in Supply Chains and Marketing; Masengu, R.; Tsikada, C.; Garwi, J., Eds.; IGI Global Scientific Publishing, 2025; pp. 257–282. [CrossRef]

- Hussein, R.; Zink, A.; Ramadan, B.; Howard, F.M.; Hightower, M.; Shah, S.; Beaulieu-Jones, B.K. Advancing Healthcare AI Governance: A Comprehensive Maturity Model Based on Systematic Review. medRxiv 2024, pp. 2024–12. [CrossRef]

- Singh, B.; Kaunert, C.; Jermsittiparsert, K. Managing Health Data Landscapes and Blockchain Framework for Precision Medicine, Clinical Trials, and Genomic Biomarker Discovery. In Digitalization and the Transformation of the Healthcare Sector; Wickramasinghe, N., Ed.; IGI Global Scientific Publishing, 2025; pp. 283–310. [CrossRef]

- Hassan, M.; Borycki, E.M.; Kushniruk, A.W. Artificial intelligence governance framework for healthcare. Healthcare Management Forum 2024, 38, 125–130. [Google Scholar] [CrossRef]

- Chakraborty, A.; Karhade, M. Global AI Governance in Healthcare: A Cross-Jurisdictional Regulatory Analysis. arXiv preprint arXiv:2406.08695 2024. [CrossRef]

- Kim, J.; Kim, S.Y.; Kim, E.A.; et al. Developing a Framework for Self-regulatory Governance in Healthcare AI Research: Insights from South Korea. Asia-Pacific Biotech Research (ABR) 2024, 16, 391–406. [Google Scholar] [CrossRef]

- Olimid, A.P.; Georgescu, C.M.; Olimid, D.A. Legal Analysis of EU Artificial Intelligence Act (2024): Insights from Personal Data Governance and Health Policy. Access to Just. E. Eur. 2024, p. 120.

- Kolade, T.M.; Aideyan, N.T.; Oyekunle, S.M.; Ogungbemi, O.S.; Dapo-Oyewole, D.L.; Olaniyi, O.O. Artificial Intelligence and Information Governance: Strengthening Global Security, through Compliance Frameworks, and Data Security. Available at SSRN 5044032 2024.

- Mbah, G.O.; Evelyn, A.N. AI-powered cybersecurity: Strategic approaches to mitigate risk and safeguard data privacy. World Journal of Advanced Research and Reviews 2024, 24, 310–327. [Google Scholar] [CrossRef]

- Folorunso, A.; Adewumi, T.; Adewa, A.; Okonkwo, R.; Olawumi, T.N. Impact of AI on cybersecurity and security compliance. Global Journal of Engineering and Technology Advances 2024, 21, 167–184. [Google Scholar] [CrossRef]

- Jabbar, H.; Al-Janabi, S.; Syms, F. AI-Integrated Cyber Security Risk Management Framework for IT Projects. In Proceedings of the 2024 International Jordanian Cybersecurity Conference (IJCC), 2024, pp. 76–81. [CrossRef]

- Muhammad, M.H.B.; Abas, Z.B.; Ahmad, A.S.B.; Sulaiman, M.S.B. AI-Driven Security: Redefining Security Information Systems within Digital Governance. International Journal of Research in Information Security and Systems (IJRISS) 2024, 8090245. [Google Scholar] [CrossRef]

- Effoduh, J.; Akpudo, U.; Kong, J. Toward a trustworthy and inclusive data governance policy for the use of artificial intelligence in Africa. Data & Policy 2024, 6, e34. [Google Scholar] [CrossRef]

- Jyothi, V.E.; Sai Kumar, D.L.; Thati, B.; Tondepu, Y.; Pratap, V.K.; Praveen, S.P. Secure Data Access Management for Cyber Threats using Artificial Intelligence. In Proceedings of the 2022 6th International Conference on Electronics, Communication and Aerospace Technology; 2022; pp. 693–697. [Google Scholar] [CrossRef]

- Boggarapu, N.B. Modernizing Banking Compliance: An Analysis of AI-Powered Data Governance in a Hybrid Cloud Environment. CSEIT 2024, 10, 2434. [Google Scholar] [CrossRef]

- Akokodaripon, D.; Alonge-Essiet, F.O.; Aderoju, A.V.; Reis, O. Implementing Data Governance in Financial Systems: Strategies for Ensuring Compliance and Security in Multi-Source Data Integration Projects. CSI Transactions on ICT Research 2024, 5, 1631. [Google Scholar] [CrossRef]

- Chukwurah, N.; Ige, A.B.; Adebayo, V.I.; Eyieyien, O.G. Frameworks for Effective Data Governance: Best Practices, Challenges, and Implementation Strategies Across Industries. Computer Science & IT Research Journal 2024, 5, 1666–1679. [Google Scholar] [CrossRef]

- Zhou, X.; Zhao, X.; Li, G. LLM-Enhanced Data Management. arXiv preprint arXiv:2402.02643 2024. [CrossRef]

- Gorti, A.; Chadha, A.; Gaur, M. Unboxing Occupational Bias: Debiasing LLMs with US Labor Data. In Proceedings of the AAAI Symposium Series, 2024, Vol. 4, pp. 48–55. [CrossRef]

- de Dampierre, C.; Mogoutov, A.; Baumard, N. Towards Transparency: Exploring LLM Trainings Datasets through Visual Topic Modeling and Semantic Frame. arXiv preprint arXiv:2406.06574 2024. [CrossRef]

- Yang, J.; Wang, Z.; Lin, Y.; Zhao, Z. Global Data Constraints: Ethical and Effectiveness Challenges in Large Language Model. arXiv preprint arXiv:2406.11214 2024. [CrossRef]

- Li, C.; Zhuang, Y.; Qiang, R.; Sun, H.; Dai, H.; Zhang, C.; Dai, B. Matryoshka: Learning to Drive Black-Box LLMs with LLMs. arXiv preprint arXiv:2410.20749 2024. [CrossRef]

- Alber, D.A.; Yang, Z.; Alyakin, A.; Yang, E.; Rai, S.; Valliani, A.A.; Zhang, J.; Rosenbaum, G.R.; Amend-Thomas, A.K.; Kurland, D.B.; et al. Medical large language models are vulnerable to data-poisoning attacks. Nature Medicine 2025, pp. 1–9. [CrossRef]

- Wu, F.; Cui, L.; Yao, S.; Yu, S. Inference Attacks in Machine Learning as a Service: A Taxonomy, Review, and Promising Directions. arXiv e-prints 2024, pp. arXiv–2406. [CrossRef]

- Subramaniam, P.; Krishnan, S. Intent-Based Access Control: Using LLMs to Intelligently Manage Access Control. arXiv preprint arXiv:2402.07332 2024. [CrossRef]

- Mehra, T. The Critical Role of Role-Based Access Control (RBAC) in securing backup, recovery, and storage systems. International Journal of Science and Research Archive 2024, 13, 1192–1194. [Google Scholar] [CrossRef]

- Li, L.; Chen, H.; Qiu, Z.; Luo, L. Large Language Models in Data Governance: Multi-source Data Tables Merging. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData). IEEE, 2024, pp. 3965–3974. [CrossRef]

- Kayali, M.; Wenz, F.; Tatbul, N.; Demiralp, Ç. Mind the Data Gap: Bridging LLMs to Enterprise Data Integration. arXiv preprint arXiv:2412.20331 2024. [CrossRef]

- Erdem, O.; Hassett, K.; Egriboyun, F. Evaluating the Accuracy of Chatbots in Financial Literature. arXiv preprint arXiv:2411.07031 2024. [CrossRef]

- Ruke, A.; Kulkarni, H.; Patil, R.; Pote, A.; Shedage, S.; Patil, A. Future Finance: Predictive Insights and Chatbot Consultation. In Proceedings of the 2024 4th Asian Conference on Innovation in Technology (ASIANCON), 2024, pp. 1–5. [CrossRef]

- Zheng, Z. ChatGPT-style Artificial Intelligence for Financial Applications and Risk Response. International Journal of Computer Science and Information Technology 2024, 3, 179–186. [Google Scholar] [CrossRef]

- Kushwaha, P.K.; Kumar, R.; Kumar, S. AI Health Chatbot using ML. International Journal of Scientific Research in Engineering and Management (IJSREM) 2024, 8, 1. [Google Scholar] [CrossRef]

- Hassani, S. Enhancing Legal Compliance and Regulation Analysis with Large Language Models. In Proceedings of the 2024 IEEE 32nd International Requirements Engineering Conference (RE), June 2024, pp. 507–511. [CrossRef]

- Kumar, B.; Roussinov, D. NLP-based Regulatory Compliance–Using GPT 4.0 to Decode Regulatory Documents. arXiv preprint arXiv:2412.20602 2024. [CrossRef]

- Kaur, P.; Kashyap, G.S.; Kumar, A.; Nafis, M.T.; Kumar, S.; Shokeen, V. From Text to Transformation: A Comprehensive Review of Large Language Models’ Versatility. arXiv preprint arXiv:2402.16142 2024. [CrossRef]

- Zhu, H. Architectural Foundations for the Large Language Model Infrastructures. arXiv preprint arXiv:2408.09205 2024. [CrossRef]

- Koppichetti, R.K. Framework of Hub and Spoke Data Governance Model for Cloud Computing. Journal of Artificial Intelligence & Cloud Computing 2024.

- Li, D.; Sun, Z.; Hu, X.; Hu, B.; Zhang, M. CMT: A Memory Compression Method for Continual Knowledge Learning of Large Language Models. arXiv preprint arXiv:2412.07393 2024. [CrossRef]

- Folorunso, A.; Babalola, O.; Nwatu, C.E.; Ukonne, U. Compliance and Governance issues in Cloud Computing and AI: USA and Africa. Global Journal of Engineering and Technology Advances 2024, 21, 127–138. [Google Scholar] [CrossRef]

- Alsaigh, R.; Mehmood, R.; Katib, I.; Liang, X.; Alshanqiti, A.; Corchado, J.M.; See, S. Harmonizing AI governance regulations and neuroinformatics: perspectives on privacy and data sharing. Frontiers in Neuroinformatics 2024, 18, 1472653. [Google Scholar] [CrossRef]

- Li, Y.; Yu, X.; Koudas, N. Data Acquisition for Improving Model Confidence. Proceedings of the ACM on Management of Data 2024, 2, 1–25. [Google Scholar] [CrossRef]

- Zhang, C.; Zhong, H.; Zhang, K.; Chai, C.; Wang, R.; Zhuang, X.; Bai, T.; Qiu, J.; Cao, L.; Fan, J.; et al. Harnessing Diversity for Important Data Selection in Pretraining Large Language Models. arXiv preprint arXiv:2409.16986 2024. [CrossRef]

- Rajasegar, R.; Gouthaman, P.; Ponnusamy, V.; Arivazhagan, N.; Nallarasan, V. Data Privacy and Ethics in Data Analytics. In Data Analytics and Machine Learning: Navigating the Big Data Landscape; Springer, 2024; pp. 195–213. [CrossRef]

- Pang, J.; Wei, J.; Shah, A.P.; Zhu, Z.; Wang, Y.; Qian, C.; Liu, Y.; Bao, Y.; Wei, W. Improving data efficiency via curating LLM-driven rating systems. arXiv preprint arXiv:2410.10877 2024. [CrossRef]

- Seedat, N.; Huynh, N.; van Breugel, B.; van der Schaar, M. Curated LLM: Synergy of LLMs and data curation for tabular augmentation in ultra low-data regimes 2023.

- Oktavia, T.; Wijaya, E. Strategic Metadata Implementation: A Catalyst for Enhanced BI Systems and Organizational Effectiveness. HighTech and Innovation Journal 2025, 6, 21–41. [Google Scholar] [CrossRef]

- Tan, Z.; Li, D.; Wang, S.; Beigi, A.; Jiang, B.; Bhattacharjee, A.; Karami, M.; Li, J.; Cheng, L.; Liu, H. Large language models for data annotation and synthesis: A survey. arXiv preprint arXiv:2402.13446 2024. [CrossRef]

- Walshe, T.; Moon, S.Y.; Xiao, C.; Gunawardana, Y.; Silavong, F. Automatic Labelling with Open-source LLMs using Dynamic Label Schema Integration. arXiv preprint arXiv:2501.12332 2025. [CrossRef]

- Cholke, P.C.; Patankar, A.; Patil, A.; Patwardhan, S.; Phand, S. Enabling Dynamic Schema Modifications Through Codeless Data Management. In Proceedings of the 2024 IEEE Region 10 Symposium (TENSYMP). IEEE, 2024, pp. 1–9. [CrossRef]

- Edwards, J.; Petricek, T.; van der Storm, T.; Litt, G. Schema Evolution in Interactive Programming Systems. arXiv preprint arXiv:2412.06269 2024. [CrossRef]

- Strome, T. Data governance best practices for the AI-ready airport. Journal of Airport Management 2024, 19, 57–70. [Google Scholar] [CrossRef]

- Suhra, R. Unified Data Governance Strategy for Enterprises. International Journal of Computer Applications 2024, 186, 36. [Google Scholar] [CrossRef]

- Aiyankovil, K.G.; Lewis, D. Harmonizing AI Data Governance: Profiling ISO/IEC 5259 to Meet the Requirements of the EU AI Act. Frontiers in Artificial Intelligence and Applications 2024, 395, 363–365. [Google Scholar] [CrossRef]

- Gupta, P.; Parmar, D.S. Sustainable Data Management and Governance Using AI. World Journal of Advanced Engineering Technology and Sciences 2024, 13, 264–274. [Google Scholar] [CrossRef]

- Idemudia, C.; Ige, A.; Adebayo, V.; Eyieyien, O. Enhancing data quality through comprehensive governance: Methodologies, tools, and continuous improvement techniques. Computer Science & IT Research Journal 2024, 5, 1680–1694. [Google Scholar]

- Comeau, D.S.; Bitterman, D.S.; Celi, L.A. Preventing unrestricted and unmonitored AI experimentation in healthcare through transparency and accountability. npj Digital Medicine 2025, 8, 42. [Google Scholar] [CrossRef]

- Organisation for Economic Co-operation and Development. Towards an Integrated Health Information System in the Netherlands. Technical report, Organisation for Economic Co-operation and Development (OECD), 2022. Report, 16 February 2022.

- Musa, M.B.; Winston, S.M.; Allen, G.; Schiller, J.; Moore, K.; Quick, S.; Melvin, J.; Srinivasan, P.; Diamantis, M.E.; Nithyanand, R. C3PA: An Open Dataset of Expert-Annotated and Regulation-Aware Privacy Policies to Enable Scalable Regulatory Compliance Audits. arXiv preprint arXiv:2410.03925 2024. [CrossRef]

- Islam, M.; Sourav, M.; Reza, J. The impact of data protection regulations on business analytics 2024.

- Eshbaev, G. GDPR vs. Weakly Protected Parties in Other Countries. Uzbek Journal of Law and Digital Policy 2024, 2, 55–65. [Google Scholar] [CrossRef]

- Borgesius, F.Z.; Asghari, H.; Bangma, N.; Hoepman, J.H. The GDPR’s Rules on Data Breaches: Analysing Their Rationales and Effects. SCRIPTed 2023, 20, 352. [Google Scholar] [CrossRef]

- Musch, S.; Borrelli, M.C.; Kerrigan, C. Bridging Compliance and Innovation: A Comparative Analysis of the EU AI Act and GDPR for Enhanced Organisational Strategy. Journal of Data Protection & Privacy 2024, 7, 14–40. [Google Scholar] [CrossRef]

- Aziz, M.A.B.; Wilson, C. Johnny Still Can’t Opt-out: Assessing the IAB CCPA Compliance Framework. Proceedings on Privacy Enhancing Technologies 2024, 2024, 349–363. [Google Scholar] [CrossRef]

- Rao, S.D. The Evolution of Privacy Rights in the Digital Age: A Comparative Analysis of GDPR and CCPA. International Journal of Law 2024, 2, 40. [Google Scholar] [CrossRef]

- Harding, E.L.; Vanto, J.J.; Clark, R.; Hannah Ji, L.; Ainsworth, S.C. Understanding the scope and impact of the California Consumer Privacy Act of 2018. Journal of Data Protection & Privacy 2019, 2, 234–253. [Google Scholar]

- Charatan, J.; Birrell, E. Two Steps Forward and One Step Back: The Right to Opt-out of Sale under CPRA. Proceedings on Privacy Enhancing Technologies 2024, 2024, 91–105. [Google Scholar] [CrossRef]

- Wang, G. Administrative and Legal Protection of Personal Information in China: Disadvantages and Solutions. Courier of Kutafin Moscow State Law University (MSAL) 2024, pp. 189–197. [CrossRef]

- Yang, L.; Lin, Y.; Chen, B. Practice and Prospect of Regulating Personal Data Protection in China. Laws 2024, 13, 78. [Google Scholar] [CrossRef]

- Bolatbekkyzy, G. Comparative Insights from the EU’s GDPR and China’s PIPL for Advancing Personal Data Protection Legislation. Groningen Journal of International Law 2024, 11, 129–146. [Google Scholar] [CrossRef]

- Yalamati, S. Ensuring Ethical Practices in AI and ML Toward a Sustainable Future. In Artificial Intelligence and Machine Learning for Sustainable Development, 1st ed.; CRC Press, 2024; p. 15. [CrossRef]

- Ethical Governance and Implementation Paths for Global Marine Science Data Sharing. Frontiers in Marine Science 2024, 11. [CrossRef]

- Sharma, K.; Kumar, P.; Özen, E. Ethical Considerations in Data Analytics: Challenges, Principles, and Best Practices. In Data Alchemy in the Insurance Industry; Taneja, S., Kumar, P., Reepu, Kukreti, M., Özen, E., Eds.; Emerald Publishing Limited: Leeds, 2024; pp. 41–48. [Google Scholar] [CrossRef]

- McNicol, T.; Carthouser, B.; Bongiovanni, I.; Abeysooriya, S. Improving Ethical Usage of Corporate Data in Higher Education: Enhanced Enterprise Data Ethics Framework. Information Technology & People 2024, 37, 2247–2278. [Google Scholar] [CrossRef]

- Kottur, R. Responsible AI Development: A Comprehensive Framework for Ethical Implementation in Contemporary Technological Systems. Computer Science and Information Technology 2024. [Google Scholar] [CrossRef]

- Sharma, R.K. Review Article. International Journal of Science and Research Archive 2025, 14, 544–551. [Google Scholar] [CrossRef]

- Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; de Prado, M.L.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Information Fusion 2023, 99, 101896. [Google Scholar] [CrossRef]

- Zisan, T.I.; Pulok, M.M.K.; Borman, D.; Barmon, R.C.; Asif, M.R.H. Navigating the Future of Auditing: AI Applications, Ethical Considerations, and Industry Perspectives on Big Data. European Journal of Theoretical and Applied Sciences 2024, 2, 324–332. [Google Scholar] [CrossRef] [PubMed]

- Sari, R.; Muslim, M. Accountability and Transparency in Public Sector Accounting: A Systematic Review. AMAR: Accounting and Management Review 2023, 3, 1440. [Google Scholar] [CrossRef]

- Felix, S.; Morais, M.G.; Fonseca, J. The role of internal audit in supporting the implementation of the general regulation on data protection — Case study in the intermunicipal communities of Coimbra and Viseu. In Proceedings of the 2018 13th Iberian Conference on Information Systems and Technologies (CISTI), 2018, pp. 1–7. [CrossRef]

- Weaver, L.; Imura, P. System and method of conducting self assessment for regulatory compliance, 2016. US Patent App. 14/497,436.

- Križman, I.; Tissot, B. Data Governance Frameworks for Official Statistics and the Integration of Alternative Sources. Statistical Journal of the IAOS 2022, 38, 947–955. [Google Scholar] [CrossRef]

- Malatji, M. Management of enterprise cyber security: A review of ISO/IEC 27001: 2022. In Proceedings of the 2023 International conference on cyber management and engineering (CyMaEn). IEEE, 2023, pp. 117–122. [CrossRef]

- Segun-Falade, O.D.; Leghemo, I.M.; Odionu, C.S.; Azubuike, C. A Review on [Insert Paper Topic]. International Journal of Science and Research Archive 2024, 12, 2984–3002. [Google Scholar] [CrossRef]

- Janssen, M.; Brous, P.; Estevez, E.; Barbosa, L.S.; Janowski, T. Data governance: Organizing data for trustworthy Artificial Intelligence. Government information quarterly 2020, 37, 101493. [Google Scholar] [CrossRef]

- Olateju, O.; Okon, S.U.; Olaniyi, O.O.; Samuel-Okon, A.D.; Asonze, C.U. Exploring the concept of explainable AI and developing information governance standards for enhancing trust and transparency in handling customer data. Available at SSRN 2024.

- Friha, O.; Ferrag, M.A.; Kantarci, B.; Cakmak, B.; Ozgun, A.; Ghoualmi-Zine, N. LLM-based edge intelligence: A comprehensive survey on architectures, applications, security and trustworthiness. IEEE Open Journal of the Communications Society 2024. [CrossRef]

- Leghemo, I.M.; Azubuike, C.; Segun-Falade, O.D.; Odionu, C.S. Data governance for emerging technologies: A conceptual framework for managing blockchain, IoT, and AI. Journal of Engineering Research and Reports 2025, 27, 247–267. [Google Scholar] [CrossRef]

- O’Sullivan, K.; Lumsden, J.; Anderson, C.; Black, C.; Ball, W.; Wilde, K. A Governance Framework for Facilitating Cross-Agency Data Sharing. International Journal of Population Data Science 2024, 9. [Google Scholar] [CrossRef]

- Bammer, G. Stakeholder Engagement. In Sociology, Social Policy and Education 2024; Edward Elgar Publishing, 2024; pp. 487–491. [CrossRef]

- Demiris, G. Stakeholder Engagement for the Design of Generative AI Tools: Inclusive Design Approaches. Innovation in Aging 2024, 8, 585–586. [Google Scholar] [CrossRef]

- Siew, R. Stakeholder Engagement. In Sustainability Analytics Toolkit for Practitioners; Palgrave Macmillan: Singapore, 2023. [Google Scholar] [CrossRef]

- Arora, A.; et al. Data-Driven Decision Support Systems in E-Governance: Leveraging AI for Policymaking. In Artificial Intelligence: Theory and Applications; Sharma, H., Chakravorty, A., Hussain, S., Kumari, R., Eds.; Springer: Singapore, 2024; Vol. 844, Lecture Notes in Networks and Systems. [Google Scholar] [CrossRef]

- Luo, J.; Luo, X.; Chen, X.; Xiao, Z.; Ju, W.; Zhang, M. SemiEvol: Semi-supervised Fine-tuning for LLM Adaptation. arXiv preprint arXiv:2410.14745 2024. [CrossRef]

- Uuk, R.; Brouwer, A.; Schreier, T.; Dreksler, N.; Pulignano, V.; Bommasani, R. Effective Mitigations for Systemic Risks from General-Purpose AI. SSRN, 2024. Available online: https://ssrn.com/abstract=5021463 or http://dx.doi.org/10.2139/ssrn.5021463.

- AIMultiple Research Team. Data Governance Case Studies, 2024. Accessed: 2025-03-14.

- Google Cloud. Data Governance in Generative AI - Vertex AI, 2024. Accessed: 2025-03-14.

- Microsoft. AI Principles and Approach, 2024. Accessed: 2025-03-14.

- Microsoft. Introducing Modern Data Governance for the Era of AI, 2024. Accessed: 2025-03-14.

- Majumder, S.; Bhattacharjee, A.; Kozhaya, J.N. Enhancing AI Governance in Financial Industry through IBM watsonx.governance. Authorea Preprints 2024.

- Schneider, J.; Kuss, P.; Abraham, R.; Meske, C. Governance of generative artificial intelligence for companies. arXiv preprint arXiv:2403.08802 2024. [CrossRef]

- Mhammad, A.F.; Agarwal, R.; Columbo, T.; Vigorito, J. Generative & Responsible AI - LLMs Use in Differential Governance. In Proceedings of the 2023 International Conference on Computational Science and Computational Intelligence (CSCI), 2023, pp. 291–295. [CrossRef]

- Kumari, B. Intelligent Data Governance Frameworks: A Technical Overview. International Journal of Scientific Research in Computer Science, Engineering and Information Technology 2024, 10, 141–154. [Google Scholar] [CrossRef]

- Mökander, J.; Schuett, J.; Kirk, H.R.; Floridi, L. Auditing large language models: a three-layered approach. AI and Ethics 2024, 4, 1085–1115. [Google Scholar] [CrossRef]

- Cai, H.; Wu, S. TKG: Telecom Knowledge Governance Framework for LLM Application 2023.

- Asthana, S.; Zhang, B.; Mahindru, R.; DeLuca, C.; Gentile, A.L.; Gopisetty, S. Deploying Privacy Guardrails for LLMs: A Comparative Analysis of Real-World Applications. arXiv 2025, [arXiv:cs.CY/2501.12456]. [CrossRef]

- Mamalis, M.; Kalampokis, E.; Fitsilis, F.; Theodorakopoulos, G.; Tarabanis, K. A Large Language Model based legal assistant for governance applications, 2024.

- Zhao, L. Artificial Intelligence and Law: Emerging Divergent National Regulatory Approaches in a Changing Landscape of Fast-Evolving AI Technologies. In Law 2023; Edward Elgar Publishing, 2023; pp. 369–399. [CrossRef]

- Imam, N.M.; Ibrahim, A.; Tiwari, M. Explainable Artificial Intelligence (XAI) Techniques To Enhance Transparency In Deep Learning Models. IOSR Journal of Computer Engineering (IOSR-JCE) 2024, 26, 29–36. [Google Scholar] [CrossRef]

- Butt, A.; Junejo, A.Z.; Ghulamani, S.; Mahdi, G.; Shah, A.; Khan, D. Deploying Blockchains to Simplify AI Algorithm Auditing. In Proceedings of the 2023 IEEE 8th International Conference on Engineering Technologies and Applied Sciences (ICETAS), 2023, pp. 1–6. [CrossRef]

- Leghemo, I.M.; Azubuike, C.; Segun-Falade, O.D.; Odionu, C.S. Data Governance for Emerging Technologies: A Conceptual Framework for Managing Blockchain, IoT, and AI. Journal of Engineering Research and Reports 2025, 27, 247–267. [Google Scholar] [CrossRef]

- Yang, F.; Abedin, M.Z.; Qiao, Y.; Ye, L. Toward Trustworthy Governance of AI-Generated Content (AIGC): A Blockchain-Driven Regulatory Framework for Secure Digital Ecosystems. IEEE Transactions on Engineering Management 2024, 71, 14945–14962. [Google Scholar] [CrossRef]

- Zhao, Y. Audit Data Traceability and Verification System Based on Blockchain Technology and Deep Learning. In Proceedings of the 2024 International Conference on Telecommunications and Power Electronics (TELEPE), 2024, pp. 77–82. [CrossRef]

- Chaffer, T.J.; von Goins II, C.; Cotlage, D.; Okusanya, B.; Goldston, J. Decentralized Governance of Autonomous AI Agents. arXiv preprint arXiv:2412.17114 2024. [CrossRef]

- Nweke, O.C.; Nweke, G.I. Legal and Ethical Conundrums in the AI Era: A Multidisciplinary Analysis. International Law Research Archives 2024, 13, 1–10. [Google Scholar] [CrossRef]

- Van Rooy, D. Human–machine collaboration for enhanced decision-making in governance. Data & Policy 2024, 6, e60. [Google Scholar] [CrossRef]

- Abeliuk, A.; Gaete, V.; Bro, N. Fairness in LLM-Generated Surveys. arXiv preprint arXiv:2501.15351 2025. [CrossRef]

- Alipour, S.; Sen, I.; Samory, M.; Mitra, T. Robustness and Confounders in the Demographic Alignment of LLMs with Human Perceptions of Offensiveness. arXiv preprint arXiv:2411.08977 2024. [CrossRef]

- Agarwal, S.; Muku, S.; Anand, S.; Arora, C. Does Data Repair Lead to Fair Models? Curating Contextually Fair Data To Reduce Model Bias. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2022, pp. 3898–3907. [CrossRef]

- Simpson, S.; Nukpezah, J.; Brooks, K.; et al. Parity benchmark for measuring bias in LLMs. AI Ethics 2024. [Google Scholar] [CrossRef]

| Application | References |
|---|---|
| Cultural and algorithmic biases in LLMs | [39,40,41] |
| Data privacy and security concerns | [42,43,44] |
| Hallucination in LLMs | [45,46,47,48] |
| Ethical implications and misinformation | [49,50,51] |
| Deployment failures of LLMs | [52,53,54,55] |
| Regulatory compliance and legal concerns | [56,57,58,59] |
| Unintended destructive outputs | [60,61,62] |
| Lack of data validation and data quality control | [63,64,65,66] |
| Data evolution and drift that degrade performance | [67,68,69,70] |

| Aspect | Description |
|---|---|
| Data Lifecycle Management | Intelligent data governance applies across the end-to-end AI model lifecycle, from the development phase through the end of the deployment phase. |
| Regulatory Compliance and Legal Frameworks | A scalable and flexible governance model adapts to global regulations such as GDPR, CCPA, HIPAA, the AI Act, and the AI RMF. |
| Ethical and Fair AI Practices | AI data governance ensures that AI models and systems operate with transparency and fairness, without discrimination on the basis of race, gender, religion, age, or other attributes. |
| Data Privacy and Security | Intelligent data governance strengthens data privacy and uses encryption mechanisms to mitigate data breaches. It also protects against cyber threats and attacks (e.g., adversarial, model inversion, inference, and data poisoning attacks). |
| Data Quality, Integrity, and Validation | Data quality, integrity, and validation are essential elements of data governance. These three factors directly affect the trustworthiness of AI models. |
| Data Lineage and Traceability | Data lineage and traceability are vital components of data governance: they help auditors trace data usage and support debugging and root cause analysis. |
| More Secure End-to-End Model Deployment | This data governance approach supports secure and confident deployment of AI models through various pipelines (DevOps, MLOps, LLMOps), covering the initial phase, robust model training, testing and validation, deployment, and post-deployment. |
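
The "Data Quality, Integrity, and Validation" and "Data Lineage and Traceability" aspects in the table above can be made concrete with a small sketch. The code below is illustrative only and is not taken from any of the surveyed frameworks: the record schema (`id`, `text`, `source`), the `LineageLog` class, and the step names are all hypothetical. It shows one possible way to check records before training and to keep a hash-linked trail that an auditor could walk back to the ingestion step.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical minimal schema for a training record (an assumption, not from the survey).
REQUIRED_FIELDS = ("id", "text", "source")


def validate_record(record: dict) -> list:
    """Return a list of data-quality problems (empty if the record passes)."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in record]
    if "text" in record:
        text = record["text"]
        if not isinstance(text, str) or not text.strip():
            problems.append("empty or non-string text")
    return problems


def fingerprint(record: dict) -> str:
    """Stable SHA-256 digest of a record, used here as its lineage identifier."""
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class LineageLog:
    """Append-only log linking each processing step to the digest it was derived from."""
    entries: list = field(default_factory=list)

    def record_step(self, step: str, record: dict, parent: Optional[str] = None) -> str:
        digest = fingerprint(record)
        self.entries.append({"step": step, "digest": digest, "parent": parent})
        return digest

    def trace(self, digest: str) -> list:
        """Walk parent links from a digest back to the first recorded step."""
        by_digest = {entry["digest"]: entry for entry in self.entries}
        steps, seen, current = [], set(), digest
        # The `seen` set guards against cycles, e.g. a step that leaves a record unchanged.
        while current in by_digest and current not in seen:
            seen.add(current)
            entry = by_digest[current]
            steps.append(entry["step"])
            current = entry["parent"]
        return list(reversed(steps))


log = LineageLog()
raw = {"id": 1, "text": "  example document  ", "source": "source_a"}
raw_id = log.record_step("ingest", raw)
clean = {**raw, "text": raw["text"].strip()}
clean_id = log.record_step("normalize", clean, parent=raw_id)

print(validate_record(clean))   # []
print(log.trace(clean_id))      # ['ingest', 'normalize']
```

Hashing a canonical JSON form keeps the identifier independent of key order, so the same record always maps to the same lineage entry. A production system would more likely rely on a dedicated metadata store or catalog than on an in-memory list.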

| Types of AI Governance | Focus | Key Aspects | References |
|---|---|---|---|
| Policy-Driven Governance | Regulations and Compliance: focuses on policies and data protection laws. | GDPR, HIPAA, AI Act, and CCPA. | [85,86] |
| Data-Centric Governance | Data Quality and Integrity: ensures data fairness, accuracy, and bias mitigation. | Master Data Management, Data Encryption, Third-Party Data Sharing Policies. | [79,82,87] |
| Model-Centric Governance | Model Explainability: focuses on the model lifecycle from the initial phase to secure deployment. | Model Lifecycle Management (MLOps, LLMOps), Model Performance and Accuracy. | [87,88,89] |
| Risk-Based Governance | AI Risk Management: identifies potential AI risks (e.g., algorithmic bias, data privacy breaches, security vulnerabilities) and applies data governance controls. | Financial and Operational Risk, Security and Cyber Risk Management, Algorithmic Risk Management. | [90,91] |
| Federated AI Governance | Decentralized AI Systems: trains AI models while keeping confidential data secure (e.g., training a model without sharing confidential patient data). | Decentralized Model Governance and Accountability, Security and Trust in Federated Systems. | [92,93] |
| Regulatory-Compliance Governance | Adherence to Laws: ensures that models do not violate rights, privacy, or laws. | Healthcare AI must comply with HIPAA regulations. | [94,95] |