1. Introduction

Recent advances in portable ultrasound technology are transforming the care of critically ill patients, including the use of bedside echocardiography. Echocardiography is a vital diagnostic tool in cardiovascular medicine, providing non-invasive insights into the heart’s structure and function [1]. Yet, as the demand for high-quality “echo” examinations continues to grow, the human and technological resources needed to deliver such exams are finite [2]. Point-of-care ultrasound (POCUS) is an approach whereby treating clinicians apply focused echocardiography to quickly evaluate gross cardiac function, identify pericardial effusions, and assess response to fluids [3]. However, the effectiveness of POCUS in such settings hinges on the operator’s ability to obtain diagnostic-quality images and accurately interpret the findings [4]. In settings such as the emergency department or intensive care, practitioners of varying experience levels encounter numerous obstacles to acquiring high-quality images [5,6,7].

The left ventricular ejection fraction (LVEF) is a key metric for cardiovascular assessment on echocardiography [8], measuring the percentage of blood ejected from the left ventricle during systole. LVEF offers critical insights into overall cardiac function and is essential for diagnosing and managing various cardiac conditions. The two principal echocardiographic windows used to measure LVEF are the parasternal window (parasternal long axis view, PLAX; parasternal short axis view, PSAX) and the apical window (apical 4-chamber view, A4C; apical 2-chamber view, A2C). Obtaining these views traditionally requires a high level of technical expertise and dependable equipment to ensure diagnostic-quality images [8]. In emergency and critical care, factors such as patient instability, wounds, dressings, and invasive devices combine with body habitus and limited positioning to challenge even the most skilled sonographer [9,10,11].
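To make the definition of LVEF concrete, the following minimal Python sketch computes it from end-diastolic and end-systolic volumes using the standard volumetric definition. The function name and the example volumes are illustrative assumptions; the sketch does not describe how ExoAI or any other tool derives its estimate.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Return LVEF (%) from end-diastolic (EDV) and end-systolic (ESV) volumes in mL.

    Standard volumetric definition: LVEF = (EDV - ESV) / EDV * 100.
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must lie between 0 and EDV")
    stroke_volume_ml = edv_ml - esv_ml  # volume ejected during systole
    return 100.0 * stroke_volume_ml / edv_ml


# Hypothetical volumes, for illustration only.
print(ejection_fraction(120.0, 60.0))  # 50.0
```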

To help circumvent operator variability and patient limitations, one technology is well positioned to accelerate advances in ultrasound imaging: artificial intelligence (AI). By automating key aspects of image acquisition and interpretation, AI has the potential to enable users at any skill level to perform complex cardiac assessments, including LVEF assessment, with minimal training [4,12]. Previous studies have also demonstrated that AI-based echocardiography tools can effectively calculate LVEF from both parasternal and apical views [13,14]. However, no research to date has evaluated the application of POCUS-AI tools in acute care settings. Automated LVEF assessment is particularly important in high-stakes environments like the intensive care unit (ICU), where rapid and accurate decision-making is needed to positively impact patient outcomes. If done well, the integration of AI-driven tools such as LVEF calculation could reduce the barriers to the performance and interpretation of echocardiography, and better inform acute care.

This study seeks to evaluate the feasibility, reliability and accuracy of POCUS-AI systems in an intensive-care setting by addressing two key hypotheses: (1) novice users can reliably acquire diagnostic-quality PLAX and A4C views in ICU patients, and (2) the accuracy of AI-calculated LVEF derived from both of these views is not inferior to conventional echocardiography performed concurrently by an expert sonographer.

2. Materials and Methods

2.1. Study design

We performed a prospective observational study with institutional ethics approval in place (University of Alberta HREB Pro00119711). Ethical protocols ensured that the novice users of the ultrasound-AI tool provided informed consent to participate, while scans of ICU patients (who were generally unconscious and intubated) were integrated into routine care on a waiver-of-consent basis, as echo is routinely performed in the ICU setting with minimal potential harm.

2.2. Hardware and software

We scanned patients using the Exo Iris portable ultrasound probe with ExoAI software, a commercially available tool developed by Exo Inc. (Santa Clara, CA, USA, version 2.1.0). ExoAI has two different LVEF analysis packages, one for PLAX and one for A4C views.

2.3. Study participants

Participants performing ultrasound scans included an expert professional echo sonographer with 26 years of experience, who also collected our gold-standard images, and multiple novice learners. Inclusion criteria for learners included: healthcare professionals (typically nurses, medical students, or physicians in training), or graduate students/research assistants with a health-sciences project focus; ability to provide written informed consent to be trained to perform basic echocardiography; and sufficient time to perform multiple scans. We excluded learners who had prior formal imaging experience (e.g., sonographers, radiologists). We recruited 30 novice learners (7 medical students, 2 graduate students, 21 resident physicians).

2.4. Patients

We included 75 consecutive ICU patients who were receiving conventional echocardiography as part of their care. These were divided into a small cohort (Cohort 1, n=10), where novice scanners were available to provide measurements in addition to an expert sonographer, and a larger cohort (Cohort 2, n=65), where only the expert sonographer provided measurements. We included adult patients with a wide range of cardiac and intrathoracic pathologies, of any age [mean (SD) Cohort 1: 57.6 (19.7) years; Cohort 2: 64.3 (10.8) years], sex (Cohort 1: 70% M; Cohort 2: 71% M), ethnicity, height, weight, and body mass index (BMI) [mean (SD) Cohort 1: 27.9 (4.8) kg/m²; Cohort 2: 27.2 (5.0) kg/m²]. We excluded patients too unstable to safely delay care for a 15-minute research ultrasound, those with congenital structural cardiac anomalies, and those currently in isolation for COVID-19 or other communicable diseases.

2.5. Training

Training was intentionally kept brief. Novice learners underwent a standardized two-hour training session that included a comprehensive presentation on ultrasound cardiac imaging and techniques for obtaining parasternal and apical views. This session covered basic ultrasound principles, cardiac anatomy, and imaging landmarks. Additionally, the training included one hour of hands-on practice, during which learners received direct guidance from an expert sonographer to refine their skills and confidence.

2.6. Scan protocol

Most echocardiographic examinations in this study were conducted with the patient in the supine, semi-supine, or partial left decubitus position, depending on patient-related factors (e.g., clinical stability, spinal stabilization, external fixators).

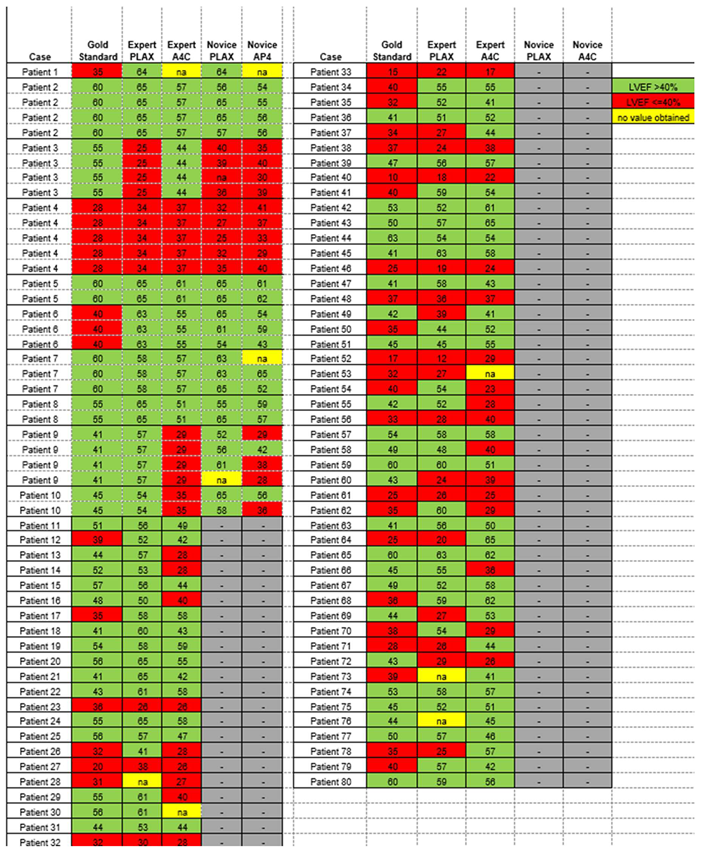

To assess feasibility and inter-user reliability of the AI-assisted echo, we scanned a small cohort of patients (Cohort 1) multiple times. In these patients, the expert and all available novices independently acquired their best attempts at PLAX and A4C views suitable for LVEF calculation using the AI-assisted tool on the Exo Iris probe (Figure 1). Time to achieve diagnostic-quality images was recorded for the expert and novices. If any novice learner was unable to obtain views of sufficient quality to generate AI LVEF estimates within 5 minutes, the expert would step in to verbally assist until images were captured.

To evaluate accuracy of the AI-assisted echo, the expert then scanned a larger cohort of patients (Cohort 2), obtaining AI-assisted PLAX and A4C views in any patient who was also undergoing a contrast echocardiogram with quantitative LVEF calculation. This cohort did not involve novice learners performing scans. The gold-standard contrast echo performed by an expert sonographer in Cohort 2 patients was a complete conventional echo including (among other views) PLAX and A4C, performed without any AI guidance. All patients in Cohort 2 had this echo done with contrast. Conventional echo scan was also performed in all Cohort 1 patients, but for logistical reasons we were not able to administer contrast in all these patients.

2.7. Data analysis

Data were systematically captured in the Exo Iris PACS system and a REDCap database. Collected metrics included scan times, image quality ratings, and LVEF classifications. Post-scan analysis involved AI-based LVEF calculations and expert evaluations of image quality and functionality.

2.8. Statistical Methods

ExoAI provides an estimated LVEF and a confidence interval with an upper and lower bound (Figure 1b,d).

We mainly focused our analysis on the mean estimate. Since the number of novice scanners varied between patients and each novice scanner was unique, a mean LVEF score from all novice scanners was calculated for each patient and used when relevant.
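The per-patient averaging of novice measurements described above can be sketched as follows. This is a minimal illustration only: the record layout, the patient identifiers and the choice to skip failed scans (recorded here as `None`) are assumptions, not details reported for this study.

```python
from collections import defaultdict
from statistics import mean


def mean_novice_lvef(scans):
    """Average novice AI LVEF estimates per patient.

    scans: iterable of (patient_id, lvef_percent) pairs, where lvef_percent is
    None when no AI estimate could be generated (assumed convention).
    Returns a dict mapping patient_id -> mean LVEF over the available scans.
    """
    by_patient = defaultdict(list)
    for patient_id, lvef in scans:
        if lvef is not None:  # assumption: failed scans are excluded from the mean
            by_patient[patient_id].append(lvef)
    return {pid: mean(values) for pid, values in by_patient.items()}


# Hypothetical records, for illustration only.
novice_scans = [("P01", 50.0), ("P01", 54.0), ("P02", 35.0), ("P02", None)]
print(mean_novice_lvef(novice_scans))
```

Averaging to one value per patient keeps the number of novice observations equal across patients, regardless of how many novices scanned each one.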

Non-parametric descriptive statistics were performed on continuous LVEF scores and scan times. Differences between expert and novice scanners and A4C and PLAX views in Cohort 1 were assessed using the Friedman test, while differences in LVEF between A4C and PLAX views acquired by the expert scanner in Cohort 2 were evaluated by Wilcoxon and McNemar’s tests. Inter-rater reliability of continuous LVEF measurements between expert and novice scanners was evaluated by intraclass correlation coefficient (ICC).
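For readers unfamiliar with the ICC, a minimal pure-Python sketch of one common form is given below. The text does not state which ICC model was used, so the two-way random-effects, absolute-agreement, single-measure form, often written ICC(2,1), is an assumption here, as are the function name and the example values.

```python
def icc_2_1(ratings):
    """Two-way random-effects, absolute-agreement, single-measure ICC.

    ratings: list of rows, one row per patient and one column per rater
    (e.g., [expert_lvef, novice_mean_lvef]). The table must be complete.
    """
    n = len(ratings)                 # number of patients
    k = len(ratings[0]) if n else 0  # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >=2 patients and >=2 raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: patients (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical expert vs. novice-mean LVEF values (%), for illustration only.
table = [[55.0, 52.0], [38.0, 41.0], [62.0, 60.0], [30.0, 33.0]]
print(round(icc_2_1(table), 2))
```

Other ICC forms (for example consistency rather than absolute agreement) would give different values on the same data, so the model should be reported alongside the coefficient.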

LVEF percentages were also converted to categorical data: normal/borderline (LVEF >40%) and markedly reduced (LVEF ≤40%). Inter-rater reliability of categorical assignment between expert and novice scanners was assessed using Cohen’s kappa. Sensitivity and specificity of AI-extracted ultrasound LVEF vs. gold-standard classification by contrast echocardiography were calculated.
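The dichotomization and agreement analysis can be illustrated with the short sketch below, which applies the 40% threshold and computes an unweighted Cohen's kappa from two lists of labels. The function names and the example LVEF values are hypothetical; the study's own statistical software is not specified here.

```python
def is_reduced(lvef_percent, threshold=40.0):
    """Classify LVEF as markedly reduced (True) when at or below the threshold."""
    return lvef_percent <= threshold


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of category labels."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must label the same non-empty set of cases")
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    labels = set(rater_a) | set(rater_b)
    # Agreement expected by chance from each rater's marginal label frequencies.
    expected = sum((rater_a.count(lab) / n) * (rater_b.count(lab) / n) for lab in labels)
    if expected == 1.0:
        return float("nan")  # kappa is undefined when only one label is ever used
    return (observed - expected) / (1.0 - expected)


# Hypothetical LVEF values (%), for illustration only.
expert = [is_reduced(v) for v in [55.0, 38.0, 62.0, 30.0]]
novice = [is_reduced(v) for v in [52.0, 41.0, 60.0, 33.0]]
print(cohen_kappa(expert, novice))
```

Note that a small difference in continuous LVEF (38% vs. 41% in the second hypothetical case) is enough to change the category, which is one reason kappa can be low even when continuous agreement is high.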

3. Results

3.1. Feasibility

We averaged 3 novice learners per patient (range: 1 to 5). With 2 hours of instruction and AI assistance, novice users were able to obtain images of sufficient quality for AI to measure LVEF in nearly all patients. Out of 60 scans by novice readers on n=10 patients, measurements could not be obtained by A4C in 2 (6.7%) cases, and by PLAX in 1 (3.3%) case.

The expert had similar rates of scan failure: Out of 80 patients scanned by the expert, measurements could be obtained by PLAX but not A4C in 3 (3.8%) cases, and A4C but not PLAX in 3 (3.8%) cases.

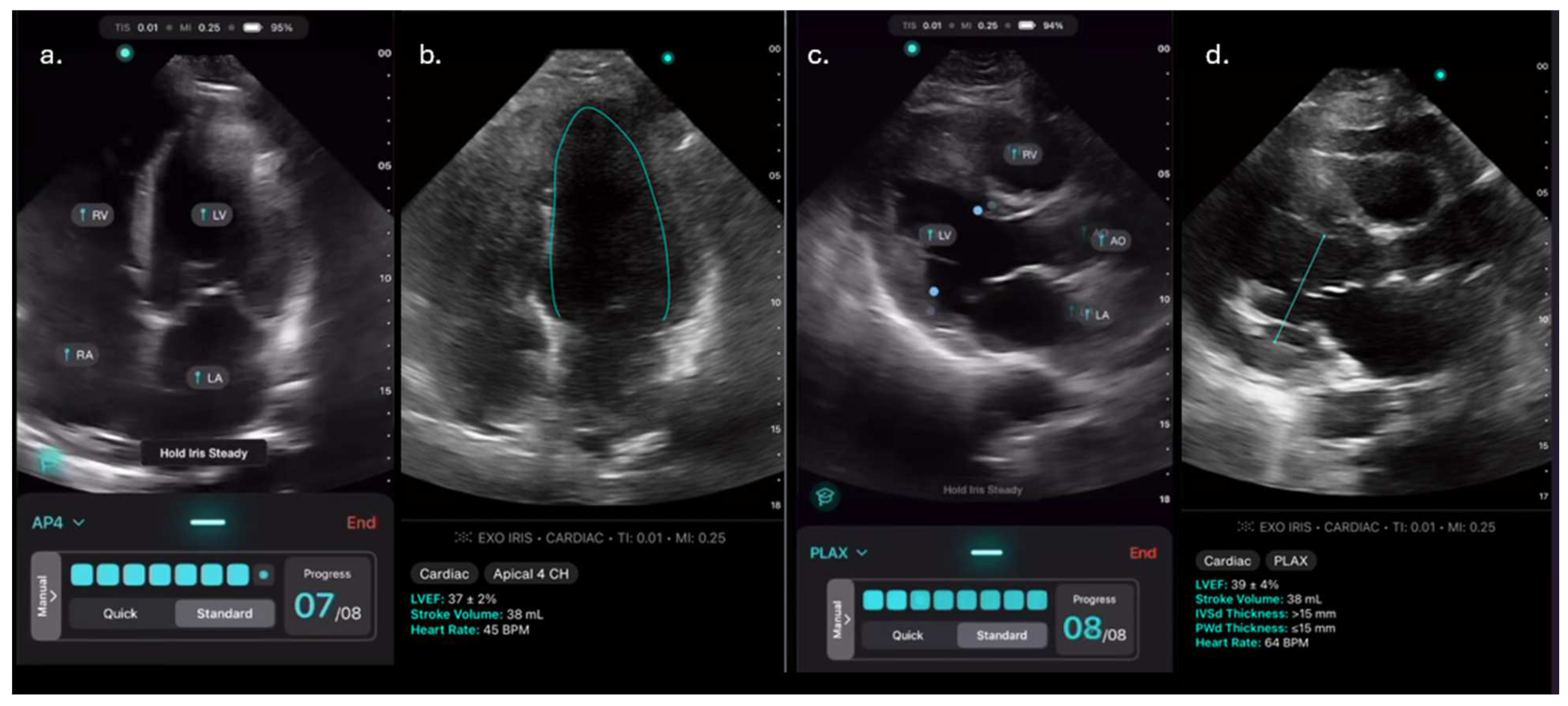

Scan times were significantly longer for novices than experts for both A4C and PLAX views (Figure 2a,b) [scan time for A4C: 142 (67-264) s for novices vs. 36 (25-56) s for experts; PLAX: 92 (76-140) s vs. 28 (15-40) s; mean (IQR); p<0.00001]. Scan times were less than 5 minutes for every PLAX scan and for all but 3 A4C scans. Although A4C scan times were often substantially longer than PLAX, for a given patient the difference between time to scan A4C and PLAX was not significant, either for experts or novices.

3.2. Reliability

Inter-rater reliability of continuous LVEF values between novice and expert scanners was high for both A4C and PLAX [ICC (95% CI): 0.88 (0.57-0.97) and 0.9 (0.67-0.97), respectively], and very high when considering the mean LVEF taken by averaging A4C and PLAX views [ICC (95% CI): 0.94 (0.77-0.99)] (Table 1).

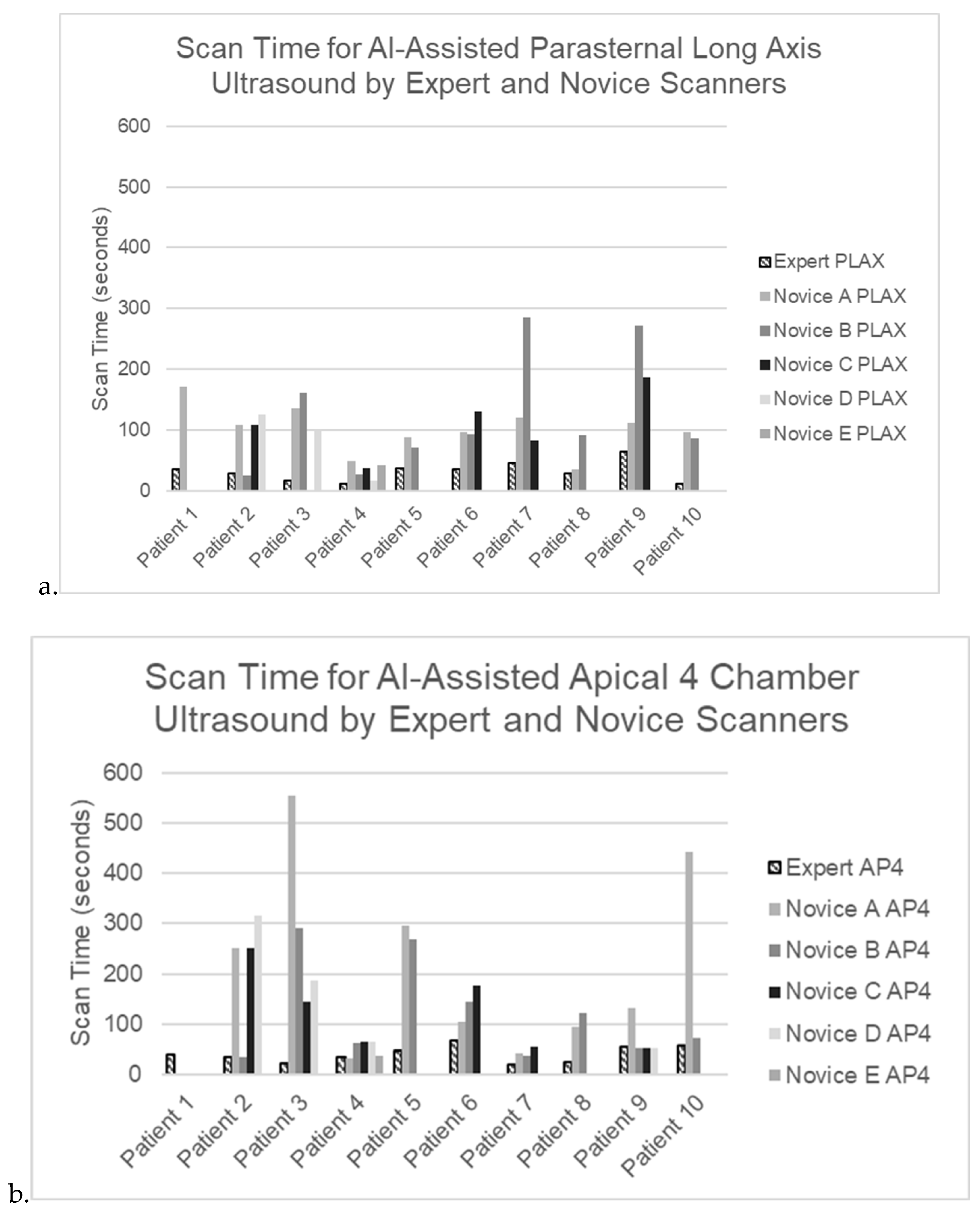

When reviewing scan-by-scan data, the expert and novices generally had similar LVEF results, even when these diverged from the gold-standard conventionally-measured LVEF (Figure 3a,b).

Considering LVEF classification by novice and expert raters at a threshold between normal/borderline (>40%) vs. markedly reduced (≤40%), there was perfect agreement between novices and experts using PLAX or the mean of A4C and PLAX [kappa (95% CI): 1.0 (1.0-1.0) for both], but only fair agreement when considering A4C values alone [kappa (95% CI): 0.5 (-0.10-1.0)].

3.3. Accuracy

We focused our evaluation of accuracy of AI LVEF determination on Cohort 2, for whom a high-quality contrast-echo gold standard was consistently available. Reduced LVEF was present by gold-standard contrast echo in 27 (41.5%) of these cases.

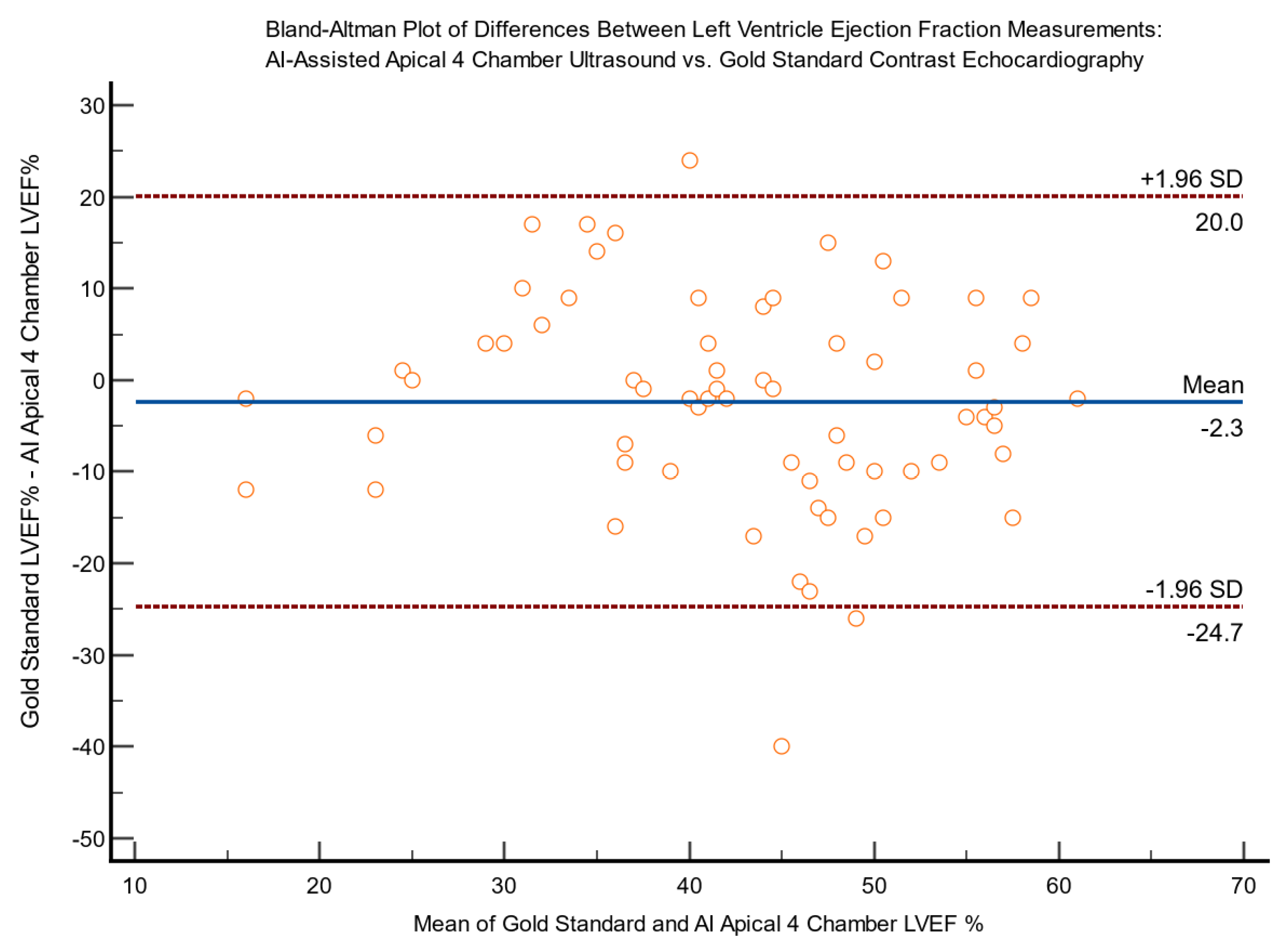

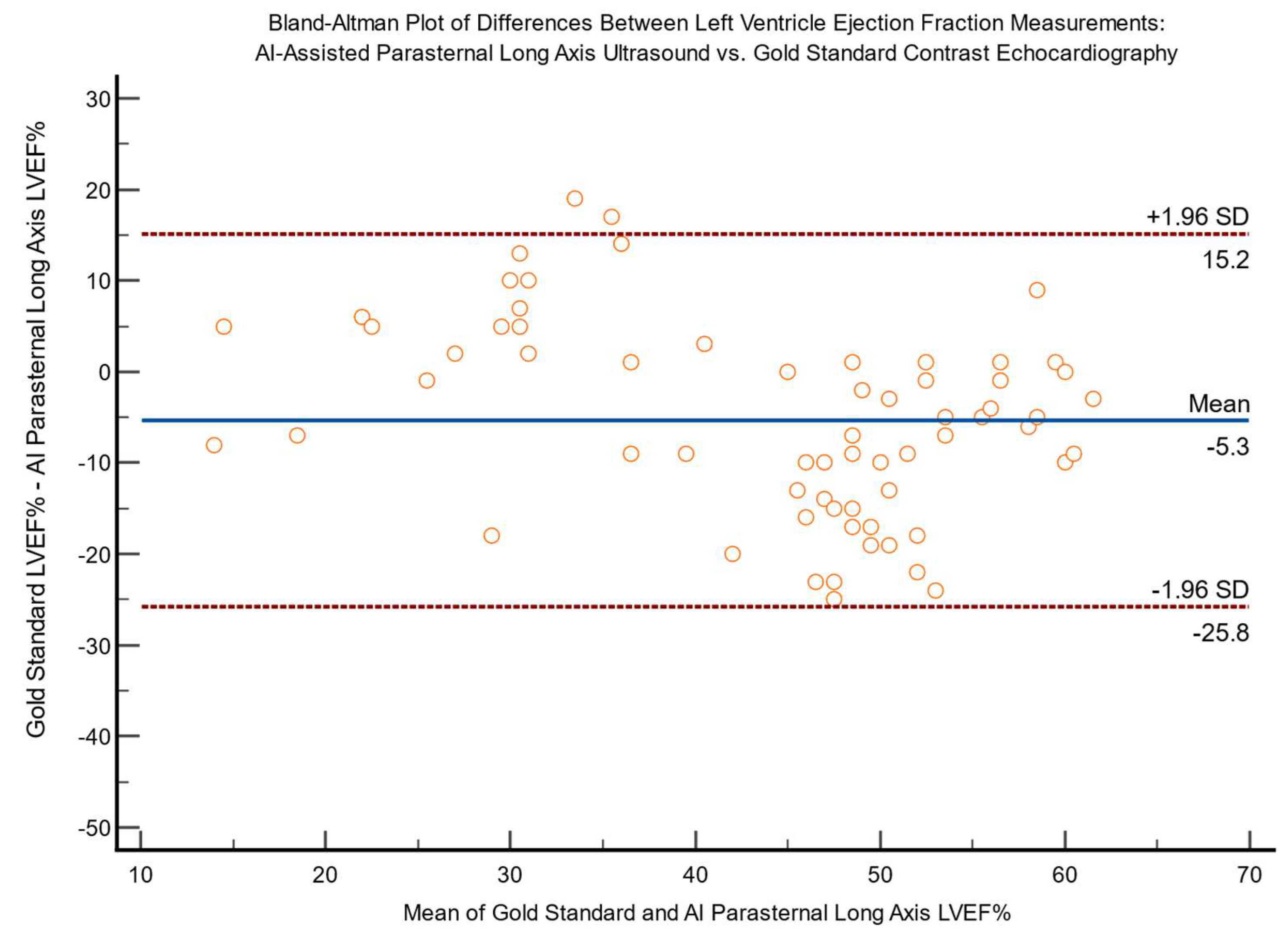

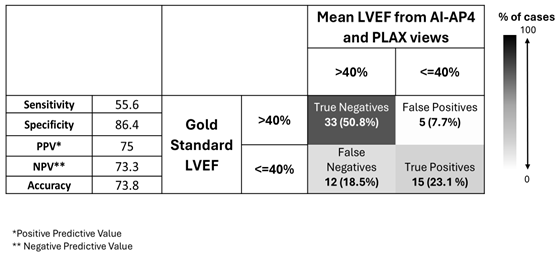

LVEF values measured by AI on A4C and PLAX views vs. gold-standard in Cohort 2 are shown in Table 2a-b. We found LVEF values obtained via A4C were significantly lower than those obtained via PLAX [44% (35-55%) vs. 53% (34-58%) respectively, median (IQR), p=0.042] (Table 2). This led to a slightly greater proportion of LVEF values being categorized by AI as reduced when measured from A4C than from PLAX (Table 3). This was not statistically significant when considering AI mean values [24 (37%) cases for A4C vs. 19 (29%) for PLAX, p=0.332], but became significant for AI ‘lower bound’ values [33 (51%) vs. 20 (31%), p=0.007]. Regardless of which view(s) were obtained, the AI generally detected fewer cases of reduced LVEF ≤40% (19-24 patients) than the gold-standard test did (27 patients).

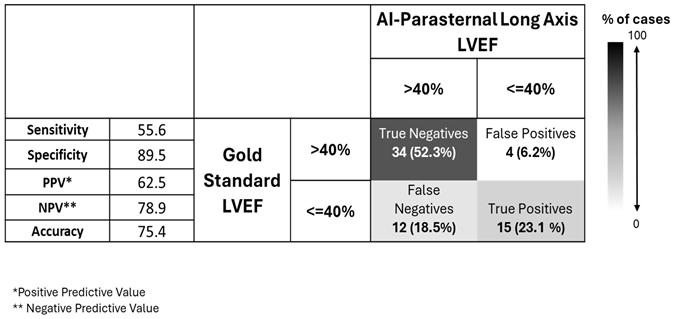

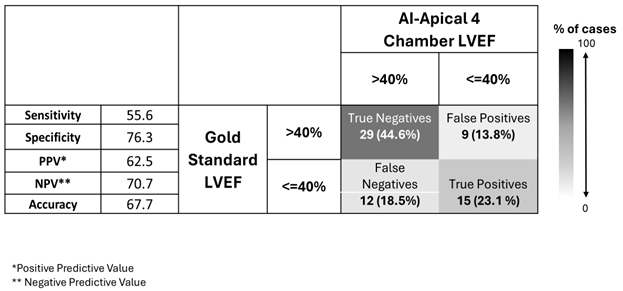

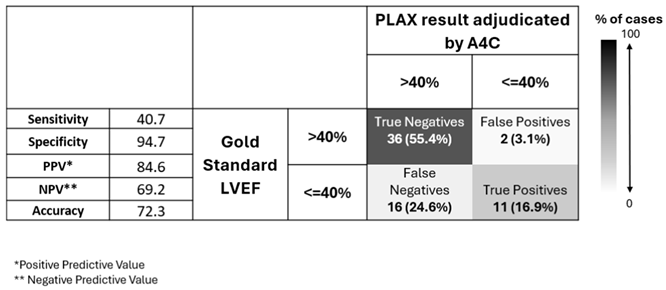

We computed confusion matrices for diagnostic strategies where the gold-standard LVEF is estimated clinically by performing only AI-PLAX, only AI-A4C, both, and a strategy where A4C is only added when LVEF on AI-PLAX view is reduced (Table 4, Table 5, Table 6 and Table 7).
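The four diagnostic strategies compared above can be written down explicitly as classification rules. The sketch below is one possible reading of those strategies, with "reduced" defined as LVEF at or below 40%; the function name, dictionary keys and example values are illustrative assumptions.

```python
def classify_strategies(plax_lvef, a4c_lvef, threshold=40.0):
    """Return, for each strategy, whether a patient is classified as reduced LVEF."""
    plax_reduced = plax_lvef <= threshold
    a4c_reduced = a4c_lvef <= threshold
    return {
        # Single-view strategies.
        "plax_only": plax_reduced,
        "a4c_only": a4c_reduced,
        # Both views acquired in every patient and averaged.
        "mean_of_both": (plax_lvef + a4c_lvef) / 2.0 <= threshold,
        # PLAX in every patient; A4C is added, and decides the class,
        # only when PLAX is reduced.
        "plax_then_a4c": a4c_reduced if plax_reduced else False,
    }


# Hypothetical AI estimates (%), for illustration only.
print(classify_strategies(plax_lvef=38.0, a4c_lvef=45.0))
```

Under this reading, the staged strategy can only remove positive calls relative to PLAX alone, which is why it would be expected to trade sensitivity for specificity.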

From these confusion matrices, we see that AI-PLAX alone was highly specific for a reduced LVEF ≤40% (SP=90%, PPV=63%), but missed cases of reduced LVEF (SN=56%). The AI-A4C view alone was equally sensitive (SN=56%) and less specific (SP=76%), conferring no advantage. Routinely combining AI-PLAX and AI-A4C views and averaging the LVEF obtained gave a profile fairly similar to just performing AI-PLAX alone. A strategy of performing AI-PLAX in all patients, and performing AI-A4C and using the AI-A4C LVEF for classification only if the AI-PLAX found an abnormal LVEF, was highly specific (SP=95%, PPV=85%) at the cost of sensitivity (SN=41%). It was difficult to find an algorithm in which AI-PLAX and/or AI-A4C views could give high sensitivity for abnormal LVEF. If we used the AI “lower-bound” LVEF measurement rather than the mean, sensitivity did increase to 70%, 59%, and 70% (for A4C, PLAX, and mean of A4C+PLAX respectively), while mildly compromising specificity (to SP=63%, 89% and 82% respectively).
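The diagnostic metrics quoted above follow directly from the four cells of a confusion matrix, as the sketch below shows. The example counts are an assumption: they are back-calculated from figures reported in the text for AI-A4C (65 patients, 27 with reduced LVEF by gold standard, SN=56%, SP=76%) and are not copied from Table 5, so they should be treated as illustrative only.

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Sensitivity, specificity, PPV and NPV from confusion-matrix counts.

    A "positive" is a reduced LVEF (<=40%) according to the index test;
    truth is the gold-standard classification.
    """
    return {
        "sensitivity": tp / (tp + fn),  # reduced cases correctly flagged
        "specificity": tn / (tn + fp),  # non-reduced cases correctly cleared
        "ppv": tp / (tp + fp),          # flagged cases that are truly reduced
        "npv": tn / (tn + fn),          # cleared cases that are truly not reduced
    }


# Assumed counts, reconstructed from the reported AI-A4C figures (see lead-in).
a4c_counts = {"tp": 15, "fn": 12, "fp": 9, "tn": 29}
for name, value in diagnostic_metrics(**a4c_counts).items():
    print(f"{name}: {value:.1%}")
```

With these assumed counts the sketch reproduces the reported AI-A4C sensitivity (56%) and specificity (76%) after rounding, and the 24 AI-positive cases (15 + 9) match the number of A4C classifications listed as reduced in Table 3.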

3.4. Agreement and Bias

Bland-Altman plots for differences between expert AI-assisted ultrasound and gold standard echocardiography LVEF measurements are shown in Figure 4 and Figure 5, demonstrating a lack of bias.
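As a reminder of what a Bland-Altman analysis summarizes, the sketch below computes the mean difference (bias) and the conventional 95% limits of agreement (bias ± 1.96 SD of the differences) between paired measurements. The function name and example values are hypothetical, and the sketch is not the code used to generate Figures 4 and 5.

```python
from statistics import mean, stdev


def bland_altman(ai_lvef, reference_lvef):
    """Return (bias, lower_limit, upper_limit) for paired LVEF measurements.

    bias is the mean of (AI - reference); the limits of agreement are
    bias -/+ 1.96 times the sample standard deviation of the differences.
    """
    if len(ai_lvef) != len(reference_lvef) or len(ai_lvef) < 2:
        raise ValueError("need at least two paired measurements")
    differences = [a - r for a, r in zip(ai_lvef, reference_lvef)]
    bias = mean(differences)
    spread = 1.96 * stdev(differences)
    return bias, bias - spread, bias + spread


# Hypothetical paired LVEF values (%), for illustration only.
ai = [50.0, 40.0, 60.0, 30.0]
reference = [48.0, 42.0, 58.0, 32.0]
bias, lower, upper = bland_altman(ai, reference)
print(f"bias={bias:.1f}, limits of agreement=({lower:.1f}, {upper:.1f})")
```

A bias near zero indicates no systematic over- or underestimation, but wide limits of agreement can still mean large errors in individual patients, so both quantities are worth reporting.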

3.5. Accuracy in Cohort 1

Although Cohort 1 was designed to test reliability, we also evaluated accuracy in this cohort. In the 10 patients who were scanned (a total of 80 times) by the expert and multiple novices, mean LVEF on gold-standard echo was 50% (40-60%) [mean (IQR)] and reduced LVEF (≤40%) was present in 2 (22.2%). Median AI-generated LVEF values were not significantly different between novice and expert scanners, nor between A4C and PLAX views (p>0.05). This 10-patient cohort was too small for us to meaningfully evaluate diagnostic performance, but we did note that the SN and SP values in this cohort were identical for novice and expert scanners for both PLAX and A4C views.

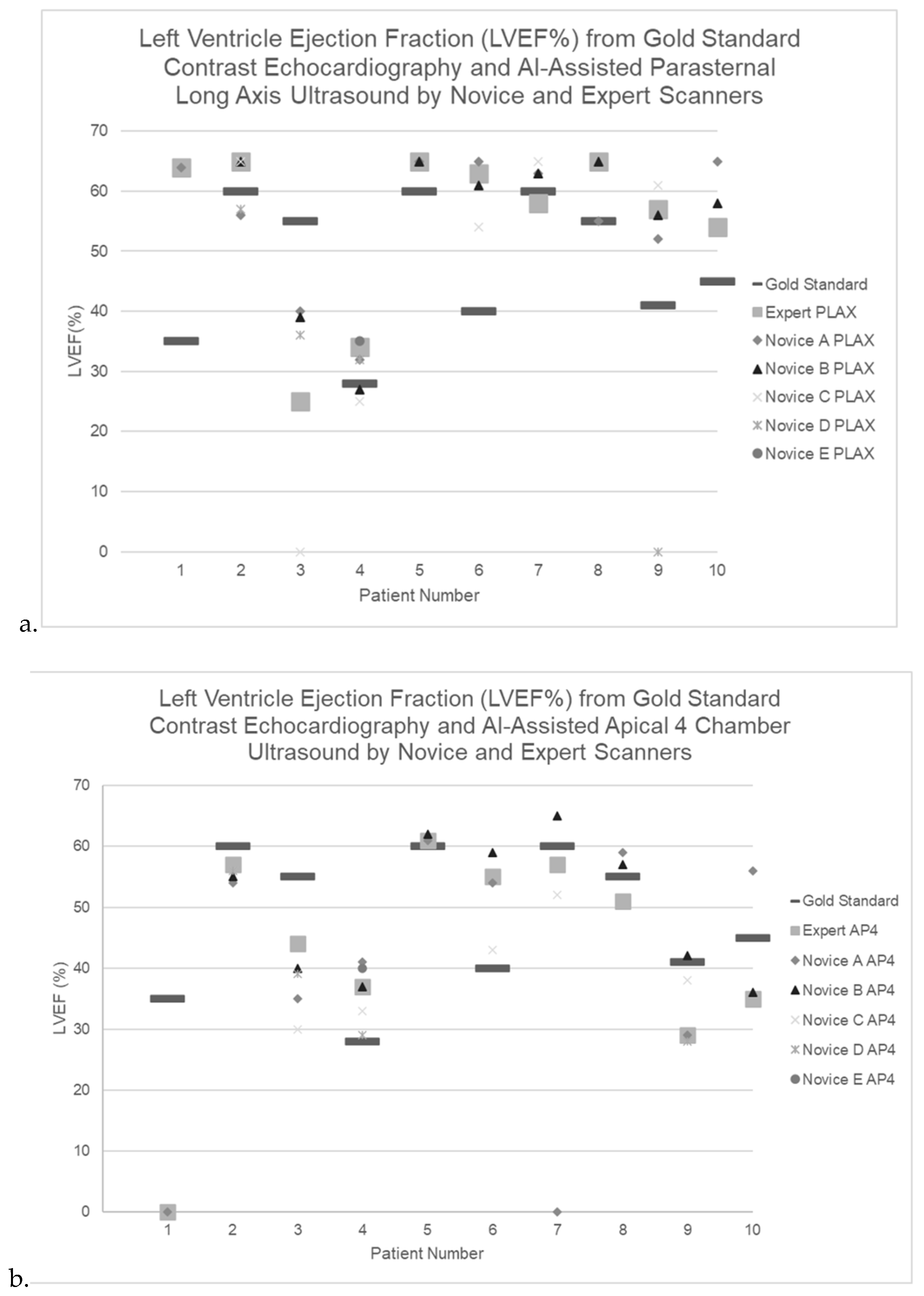

Case-by-case LVEF measurements by gold standard contrast echocardiography as well as by expert and novice AI-assisted ultrasound are shown for both cohorts in Appendix Table A1.

4. Discussion

This prospective study investigated the feasibility, reliability and accuracy of determining the cardiac LVEF from AI-enhanced ultrasound in an ICU setting. There are two related steps in this process: non-expert users must acquire diagnostic-quality images (with AI assistance) in these challenging patients, and AI must interpret the often-suboptimal images accurately. We had several key findings.

Feasibility of AI-enhanced echo was strong. We found that novice users with only 2 hours of training could generate echo images of quality adequate for AI analysis in ~96% of ICU scans, with a 3-4% scan failure rate similar to that of experts. These results align with previous studies demonstrating that AI-enhanced ultrasound, utilized by both novice and expert users, consistently produces diagnostic quality images from PLAX and A4C views [13,15]. This result is particularly impressive considering the factors limiting echo in ICU patients: immobility (especially the inability to turn into decubitus position), potentially unstable clinical status, irregular and/or rapid heart rhythms, shadowing from abnormal lungs, large body habitus, uncooperative partially sedated or delirious patients, and artifacts from machinery such as mechanical ventilation.

While scan times were roughly 3-4 times as long for novices as for experts, nearly all scans could be obtained in less than 5 minutes even by novices. The PLAX view was easier and substantially faster for novices to obtain: their scan times averaged ~1.5 minutes for the PLAX view and ~2.5 minutes for A4C.

Inter-observer reliability was high. Novices and experts generated images that led to similar AI calculations of LVEF (ICC=0.88-0.94 across the 2 views and their mean). This is expected, since a key advantage of AI is that in many applications it ‘levels the playing field’, enabling novices to perform tasks nearly as well as experts.

Accuracy of the AI LVEF calculations in these challenging ICU patients was more mixed. Concordant with frequent real-world practice [16], we used a threshold of 40% to differentiate between a substantially reduced LVEF (true-positive result) and a normal/minimally-reduced LVEF. When AI detected an LVEF ≤40%, it was generally correct, with high specificity (90-95%). The A4C view had more false-positive AI results than PLAX, potentially due to the increased difficulty of acquiring the A4C view and the effects of foreshortening in a suboptimal A4C view. Sensitivity was low at 56% when using the AI “mean LVEF” prediction, rising to 70% when using the AI “lower-bound LVEF” prediction. Overall, in our ICU patients the AI tool could be considered useful to confirm a suspected abnormal LVEF, due to its high specificity and positive predictive value, but a normal LVEF on this test would not exclude decreased cardiac function.

When comparing A4C and PLAX views, while AI-derived measurements from the two views were generally similar, A4C LVEF estimates were significantly lower than those from PLAX in our cohort, leading to an underestimation of LVEF when relying on A4C alone. There is controversy in the literature regarding which view allows the most accurate AI-enhanced LVEF estimation, with some studies reporting that A4C outperforms PLAX [4,17], and other studies supporting our findings that PLAX was superior [14,18]. The differences may relate to patient cohorts and user experience. Since PLAX is more easily obtained by non-experts, these images may be higher-quality in more patients. However, because the PLAX view does not include the cardiac apex, patients with focal pathology affecting the apex (as is frequent in myocardial infarctions) may be best assessed by A4C views obtained by experts.

Given these discrepancies, many studies emphasize the value of integrating multiple echocardiographic views to improve the diagnostic accuracy of LVEF [4,14,17,18]. Consistent with this, we found that sensitivity and specificity for detecting reduced LVEF were highest when combining measurements from both A4C and PLAX. Performing the more easily-obtained PLAX view in all patients and only adding A4C when PLAX was abnormal would have improved specificity slightly. Future larger studies could evaluate the validity of AI-enhanced single-view vs. multi-view approaches for LVEF assessment across different patient populations and clinical settings.

Our study had limitations. Although a strength of our study was the large number of novice scanners in an ICU setting (30 learners), we had only a small number of patients in Cohort 1 scanned by these novices (n=10). This is because it was logistically difficult to have an ICU patient stable enough to be scanned by many learners when they were available. We also did not have a concurrent contrast-echo gold-standard in all of these patients, again for logistical reasons. Another limitation is that the larger series in Cohort 2 (n=65) assessing LVEF accuracy was scanned only by our expert, without learners; this is again due to logistical constraints in the hospital setting. However, since our results in Cohort 1 showed that the AI LVEF estimates were very similar whether the scan was performed by an expert or novice, the accuracy of the AI tool in Cohort 2 is likely to be broadly similar to that obtained by less-experienced users.

5. Conclusions

AI-enhanced echocardiography is feasible in ICU patients. After just 2 hours of training, novices were able to obtain images of sufficient quality to produce an AI result in 96% of scans. The PLAX view was faster for novices to obtain than A4C (92 vs. 142 s), and nearly all scans of either view could be obtained in <5 minutes. AI LVEF estimates were similar whether the scan was performed by a novice or an expert. When AI detected a low LVEF it was usually correct (high specificity), but AI had limited sensitivity for low LVEF, implying a need for caution when the AI estimates LVEF to be normal in ICU patients.

This study highlights the potential of AI-enhanced ultrasound to improve cardiac assessment across diverse healthcare settings. In the ICU, it could improve efficiency by streamlining evaluations, quickly confirming suspected poor cardiac function and reducing the burden on specialist sonographers. In areas lacking experts, such as small communities, AI-enhanced ultrasound could enable primary care providers to quickly conduct cardiac triage, facilitating timely referrals to specialist care for those most in need.

Appendix A

Table A1.

Per-patient measurement of left ventricle ejection fraction (LVEF) % by gold standard contrast echocardiography, expert-acquired AI-assisted A4C and PLAX ultrasound, and novice-acquired AI-assisted A4C and PLAX ultrasound.

Author Contributions

LB was the expert sonographer and curated all novice, expert, and gold standard imaging data. CG, LB, JJ, HB, BB, CK and SW were major contributors to the writing of the manuscript. SW performed statistical analysis. JJ, BB, MN and HB were deeply involved in the conception of the study. All authors read and approved the final manuscript.

Funding

This research was funded by Alberta Innovates and CIFAR grants.

Institutional Review Board Statement

Institutional ethics approval was in place under University of Alberta HREB Pro00119711.

Informed Consent Statement

Novice users of the ultrasound-AI tool provided informed consent to participate, while scans of ICU patients (who were generally unconscious and intubated) were integrated into routine care on a waiver-of-consent basis.

Data Availability Statement

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgments

We gratefully acknowledge Alberta Innovates for project funding, as well as CIFAR. Dr. Jaremko’s academic time is partially supported by Medical Imaging Consultants. We thank the ABACUS lab and the University of Alberta Hospital Intensive Care Unit staff for logistical support.

Conflicts of Interest

JJ holds equity in Exo Inc, but is not paid or employed by Exo. All other authors have no conflicts of interest to declare.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ICU | Intensive Care Unit |
| LVEF | Left Ventricular Ejection Fraction |
| PLAX | Parasternal Long Axis |
| A4C | Apical 4 Chamber |
| A2C | Apical 2 Chamber |
| AI | Artificial Intelligence |
| POCUS | Point-of-care Ultrasound |
| BMI | Body Mass Index |
| ICC | Intraclass Correlation Coefficient |
| SN | Sensitivity |
| SP | Specificity |
| PPV | Positive Predictive Value |
| NPV | Negative Predictive Value |

References

1. Chen, X.; Yang, F.; Zhang, P.; Lin, X.; Wang, W.; Pu, H.; Chen, X.; Chen, Y.; Yu, L.; Deng, Y.; et al. Artificial Intelligence–Assisted Left Ventricular Diastolic Function Assessment and Grading: Multiview Versus Single View. J. Am. Soc. Echocardiogr. 2023, 36, 1064–1078.

2. Dietrich, C.F.; Goudie, A.; Chiorean, L.; Cui, X.W.; Gilja, O.H.; Dong, Y.; Abramowicz, J.S.; Vinayak, S.; Westerway, S.C.; Nolsøe, C.P.; et al. Point of Care Ultrasound: A WFUMB Position Paper. Ultrasound Med. Biol. 2017, 43, 49–58.

3. Kirkpatrick, J.N.; Grimm, R.; Johri, A.M.; Kimura, B.J.; Kort, S.; Labovitz, A.J.; Lanspa, M.; Phillip, S.; Raza, S.; Thorson, K.; et al. Recommendations for Echocardiography Laboratories Participating in Cardiac Point of Care Cardiac Ultrasound (POCUS) and Critical Care Echocardiography Training: Report from the American Society of Echocardiography. J. Am. Soc. Echocardiogr. 2020, 33, 409–422.e4.

4. Schneider, M.; Bartko, P.; Geller, W.; Dannenberg, V.; König, A.; Binder, C.; Goliasch, G.; Hengstenberg, C.; Binder, T. A machine learning algorithm supports ultrasound-naïve novices in the acquisition of diagnostic echocardiography loops and provides accurate estimation of LVEF. Int. J. Cardiovasc. Imaging 2020, 37, 577–586.

5. Dykes, J.C.; Kipps, A.K.; Chen, A.; Nourse, S.; Rosenthal, D.N.; Selamet Tierney, E.S. Parental acquisition of echocardiographic images in pediatric heart transplant patients using a handheld device: A pilot tele-health study. J. Am. Soc. Echocardiogr. 2019, 32, 404–411.

6. Mor-Avi, V.; Khandheria, B.; Klempfner, R.; Cotella, J.I.; Moreno, M.; Ignatowski, D.; Guile, B.; Hayes, H.J.; Hipke, K.; Kaminski, A.; et al. Real-Time Artificial Intelligence–Based Guidance of Echocardiographic Imaging by Novices: Image Quality and Suitability for Diagnostic Interpretation and Quantitative Analysis. Circ. Cardiovasc. Imaging 2023, 16, 905–913.

7. Jaji, A.; Loomba, R.S. Hocus POCUS! Parental quantification of left-ventricular ejection fraction using point of care ultrasound: Fiction or reality? Pediatr. Cardiol. 2024, 45, 1289–1294.

8. Olaisen, S.; Smistad, E.; Espeland, T.; Hu, J.; Pasdeloup, D.; Østvik, A.; Aakhus, S.; Rösner, A.; Malm, S.; Stylidis, M.; et al. Automatic measurements of left ventricular volumes and ejection fraction by artificial intelligence: clinical validation in real time and large databases. Eur. Heart J. Cardiovasc. Imaging 2023, 25, 383–395.

9. Labovitz, A.J.; Noble, V.E.; Bierig, M.; Goldstein, S.A.; Jones, R.; Kort, S.; Porter, T.R.; Spencer, K.T.; Tayal, V.S.; Wei, K. Focused cardiac ultrasound in the emergent setting: a consensus statement of the American Society of Echocardiography and American College of Emergency Physicians. J. Am. Soc. Echocardiogr. 2010, 23, 1225–1230.

10. Aldaas, O.M.; Igata, S.; Raisinghani, A.; Kraushaar, M.; DeMaria, A.N. Accuracy of left ventricular ejection fraction determined by automated analysis of handheld echocardiograms: A comparison of experienced and novice examiners. Echocardiography 2019, 36, 2145–2151.

11. Barry, T.; Farina, J.M.; Chao, C.-J.; Ayoub, C.; Jeong, J.; Patel, B.N.; Banerjee, I.; Arsanjani, R. The Role of Artificial Intelligence in Echocardiography. J. Imaging 2023, 9, 50.

12. Egan, M.; Ionescu, A. The pocket echocardiograph: a useful new tool? Eur. J. Echocardiogr. 2008, 9, 721–725.

13. Lau, T.; Ahn, J.S.; Manji, R.; Kim, D.J. A Narrative Review of Point of Care Ultrasound Assessment of the Optic Nerve in Emergency Medicine. Life 2023, 13, 531.

14. Vega, R.; Kwok, C.; Hareendranathan, A.R.; Nagdev, A.; Jaremko, J.L. Assessment of an Artificial Intelligence Tool for Estimating Left Ventricular Ejection Fraction in Echocardiograms from Apical and Parasternal Long-Axis Views. Diagnostics 2024, 14, 1719.

15. Lohese, O.; Garberich, R.; Stanberry, L.; Sorajja, P.; Cavalcante, J.; Gossl, M. Adoptability and Accuracy of Point-of-Care Ultrasound in Screening for Valvular Heart Disease in the Primary Care Setting. Circ. 2021, 77, 1711.

16. Murphy, S.P.; Ibrahim, N.E.; Januzzi, J.L., Jr. Heart failure with reduced ejection fraction: A review. JAMA 2020, 324, 488–504.

17. Asch, F.M.; Mor-Avi, V.; Rubenson, D.; Goldstein, S.; Saric, M.; Mikati, I.; Surette, S.; Chaudhry, A.; Poilvert, N.; Hong, H.; et al. Deep Learning–Based Automated Echocardiographic Quantification of Left Ventricular Ejection Fraction: A Point-of-Care Solution. Circ. Cardiovasc. Imaging 2021, 14, 528–537.

18. Siliv, N.S.; Yamanoglu, A.; Pınar, P.; Yamanoglu, N.G.C.; Torlak, F.; Parlak, I. Estimation of Cardiac Systolic Function Based on Mitral Valve Movements: An Accurate Bedside Tool for Emergency Physicians in Dyspneic Patients. J. Ultrasound Med. 2018, 38, 1027–1038.

Figure 1.

a-d. Images obtained using the AI-assisted tool on the Exo Iris probe: a) apical 4 chamber (A4C) view, b) AI-assisted LVEF of A4C, c) parasternal long axis (PLAX) view, d) AI-assisted LVEF of PLAX.

Figure 2.

a-b. Scan time for AI-assisted ultrasound by expert and novice scanners on n=10 ICU patients for a) parasternal long axis view and b) apical 4 chamber view.

Figure 3.

a-b. Left ventricle ejection fraction (LVEF%) from AI-assisted ultrasound by expert and novice scanners for n=10 ICU patients via a) parasternal long axis view and b) apical 4 chamber view.

Figure 4.

Bland-Altman Plot of differences between left ventricle ejection fraction (LVEF%) measurements via AI-assisted apical 4 chamber ultrasound (A4C) and gold standard contrast echocardiography (n=65).

Figure 5.

Bland-Altman Plot of differences between left ventricle ejection fraction measurements (LVEF%) via AI-assisted parasternal long axis ultrasound (PLAX) vs. gold standard contrast echocardiography (n=65).

Table 1.

Intra-class correlation coefficients (ICC) for continuous AI-derived LVEF measurements obtained by expert vs. novice scanners from both A4C and PLAX views (n=9 cases with all measurements available).

| Measurement | ICC | 95% CI |
| --- | --- | --- |
| AI A4C | 0.88 | 0.57-0.97 |
| AI PLAX | 0.9 | 0.67-0.97 |
| Mean A4C and PLAX | 0.94 | 0.77-0.99 |
| AI A4C lower bound | 0.85 | 0.50-0.96 |
| AI PLAX lower bound | 0.9 | 0.67-0.97 |
| Mean A4C and PLAX lower bound | 0.92 | 0.72-0.98 |

Table 2.

Descriptive statistics on cohort 2 data, n=65 cases where gold standard and expert AI-generated LVEF data are available. The AI provides a mean and “lower bound” estimate of LVEF.

| | Gold standard: median (IQR) | Gold standard: range | AI A4C: median (IQR) | AI A4C: range | AI PLAX: median (IQR) | AI PLAX: range | AI A4C and PLAX: median (IQR) | AI A4C and PLAX: range | p-value |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| LVEF% mean | 42 (35-50) | 10-63 | 44 (35-55) | 17-65 | 53 (35-58) | 12-65 | 49 (38-55) | 20-63 | 0.042 |
| LVEF% lower bound | - | - | 40 (31-52) | 15-62 | 50 (31-54) | 7-63 | 60 (34-52) | 16-60 | 0.062 |

Table 3.

LVEF classification in cohort 2 data, n=65 cases where gold standard and expert AI-generated A4C and PLAX ultrasound data are available.

| | Gold standard, N (%) | AI A4C, N (%) | AI PLAX, N (%) | Mean AI A4C and PLAX, N (%) | p-value* |
| --- | --- | --- | --- | --- | --- |
| LVEF <=40% | 27 (41.5%) | 24 (36.9%) | 19 (29.2%) | 20 (30.8%) | 0.3323 |
| LVEF lower bound <=40% | - | 33 (50.8%) | 20 (30.8%) | 26 (40%) | 0.0072 |

Table 4.

LVEF classification by AI-assisted parasternal long axis (PLAX) ultrasound compared to gold standard echocardiography in n=65 expert-scanned cases.

Table 5.

LVEF classification by AI-assisted apical 4 chamber (A4C) ultrasound compared to gold standard echocardiography in n=65 expert-scanned cases.

Table 6.

Mean LVEF classification by averaging LVEF measured from AI-assisted A4C and PLAX views compared to gold standard echocardiography in n=65 expert-scanned cases.

Table 7.

LVEF classification by AI-assisted ultrasound according to PLAX, adjudicated by A4C in cases where PLAX returned LVEF value <=40%.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).