Submitted: 10 March 2025

Posted: 11 March 2025


Abstract

Keywords:

1. Introduction

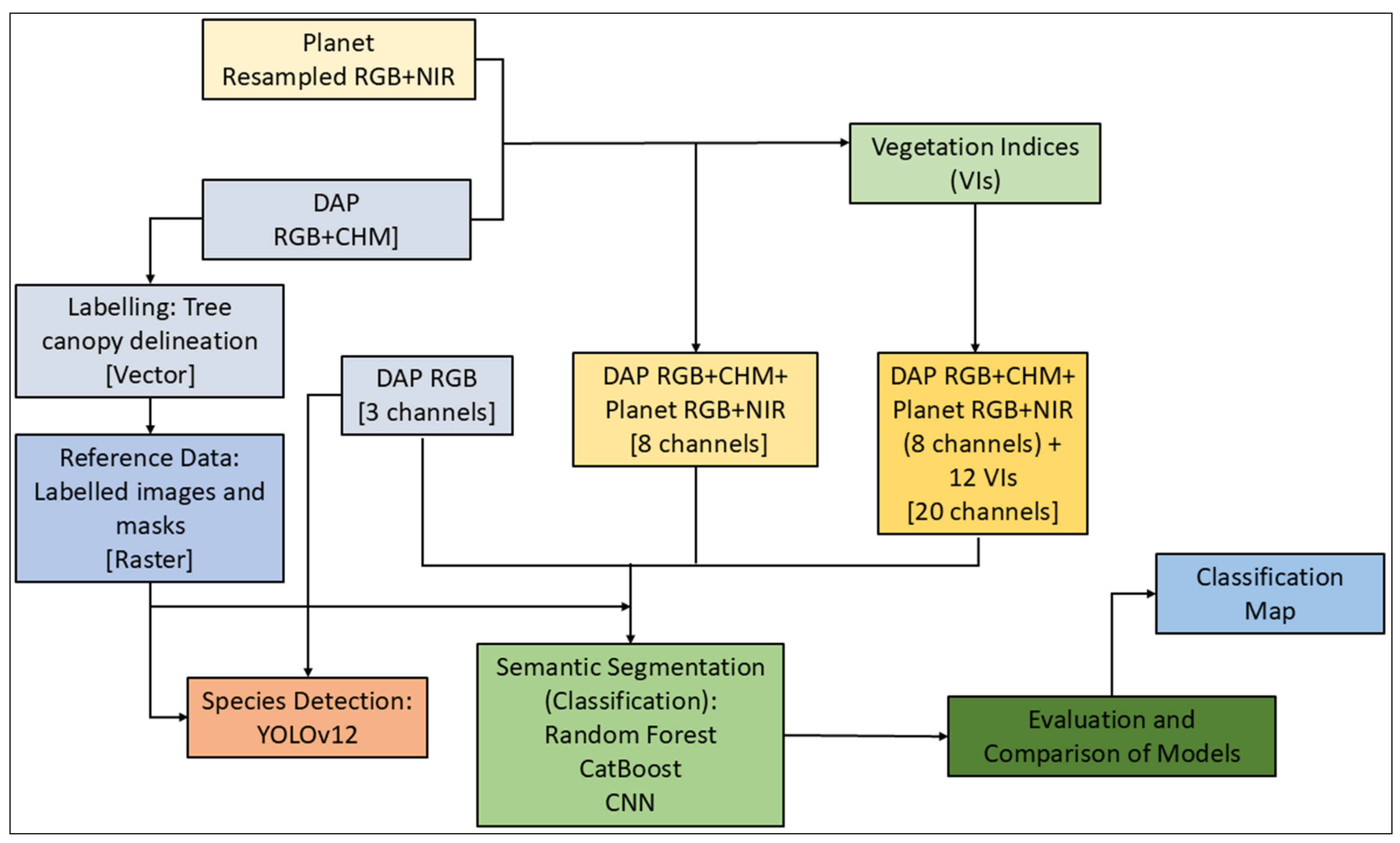

2. Materials and Methods

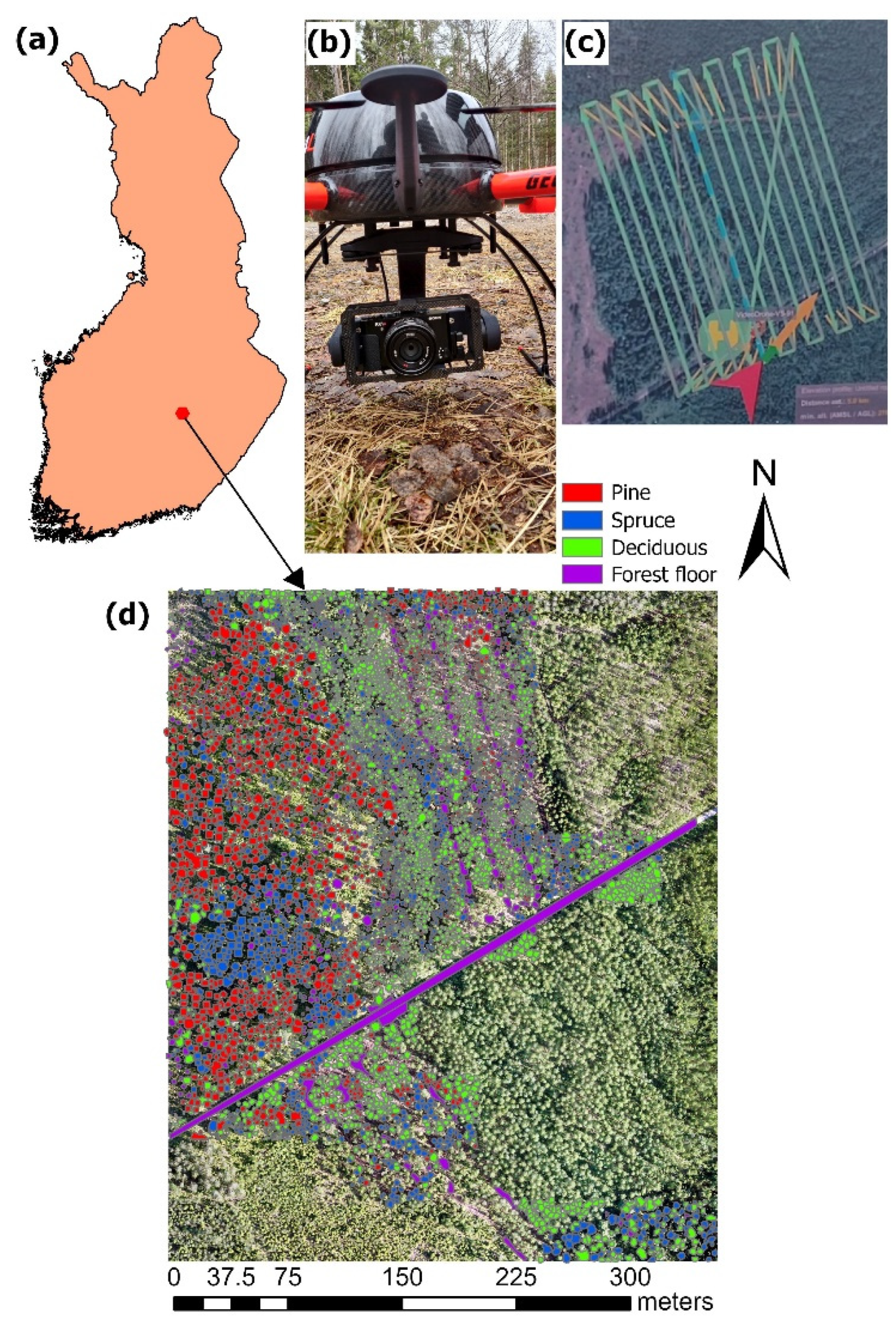

2.1. Study Area

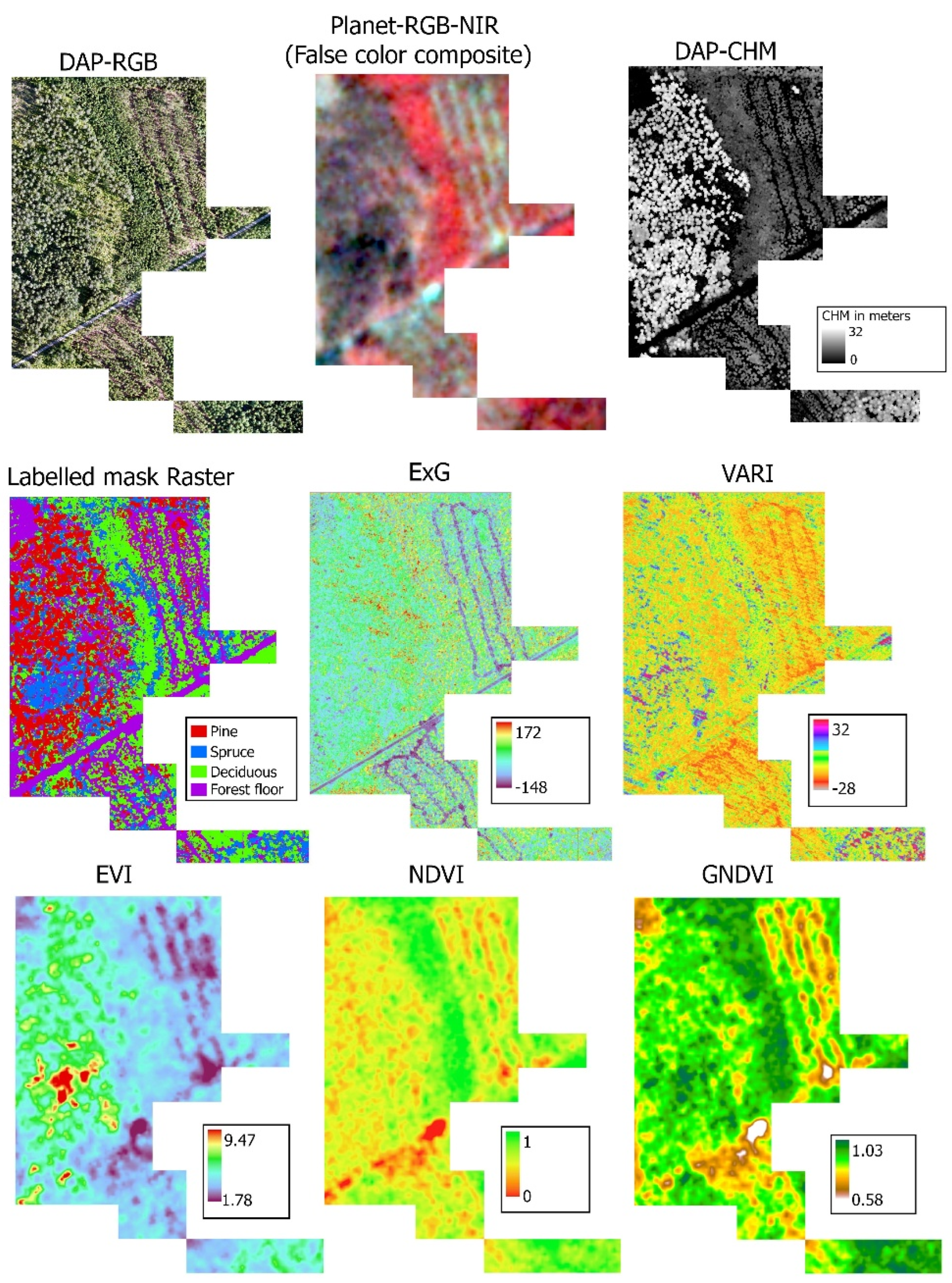

2.2. Digital Aerial Photogrammetry

2.3. Preparation of Reference Data

2.4. Planet Data

2.5. Vegetation Indices

2.6. Preparation of the Input Data

2.7. Tree Species Detection and Classification

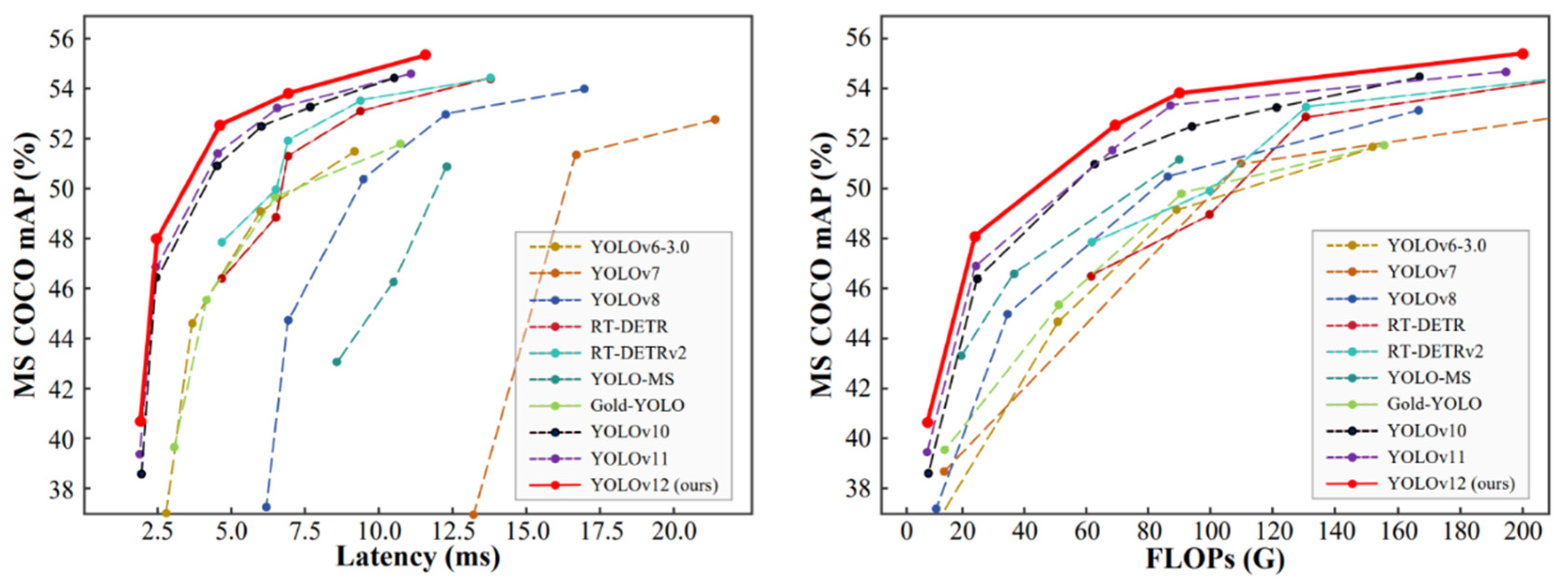

2.7.1. YOLOv12

2.7.2. Random Forest

2.7.3. Categorical Boosting

2.7.4. Convolutional Neural Networks

2.8. Model Evaluation

3. Results

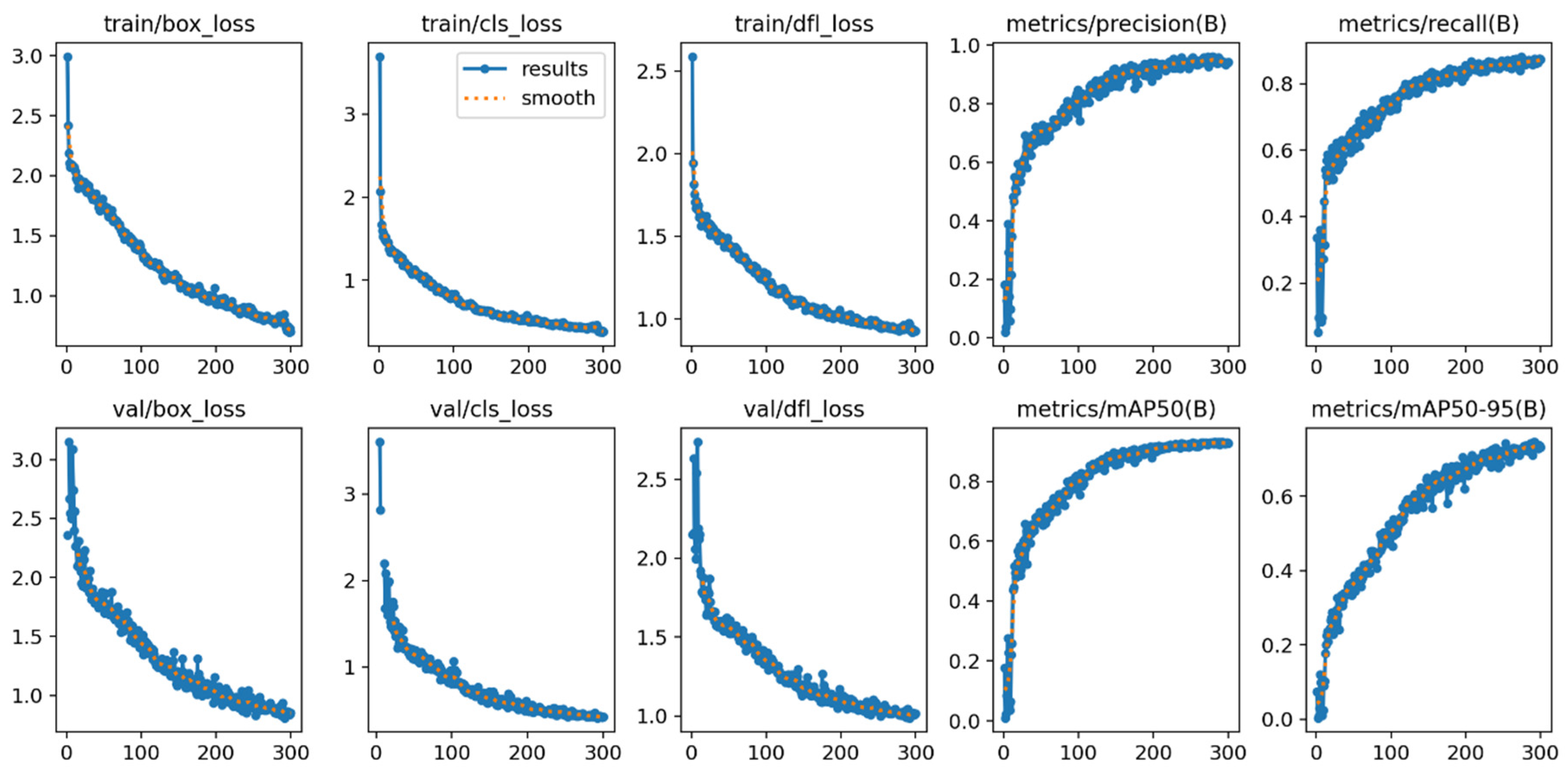

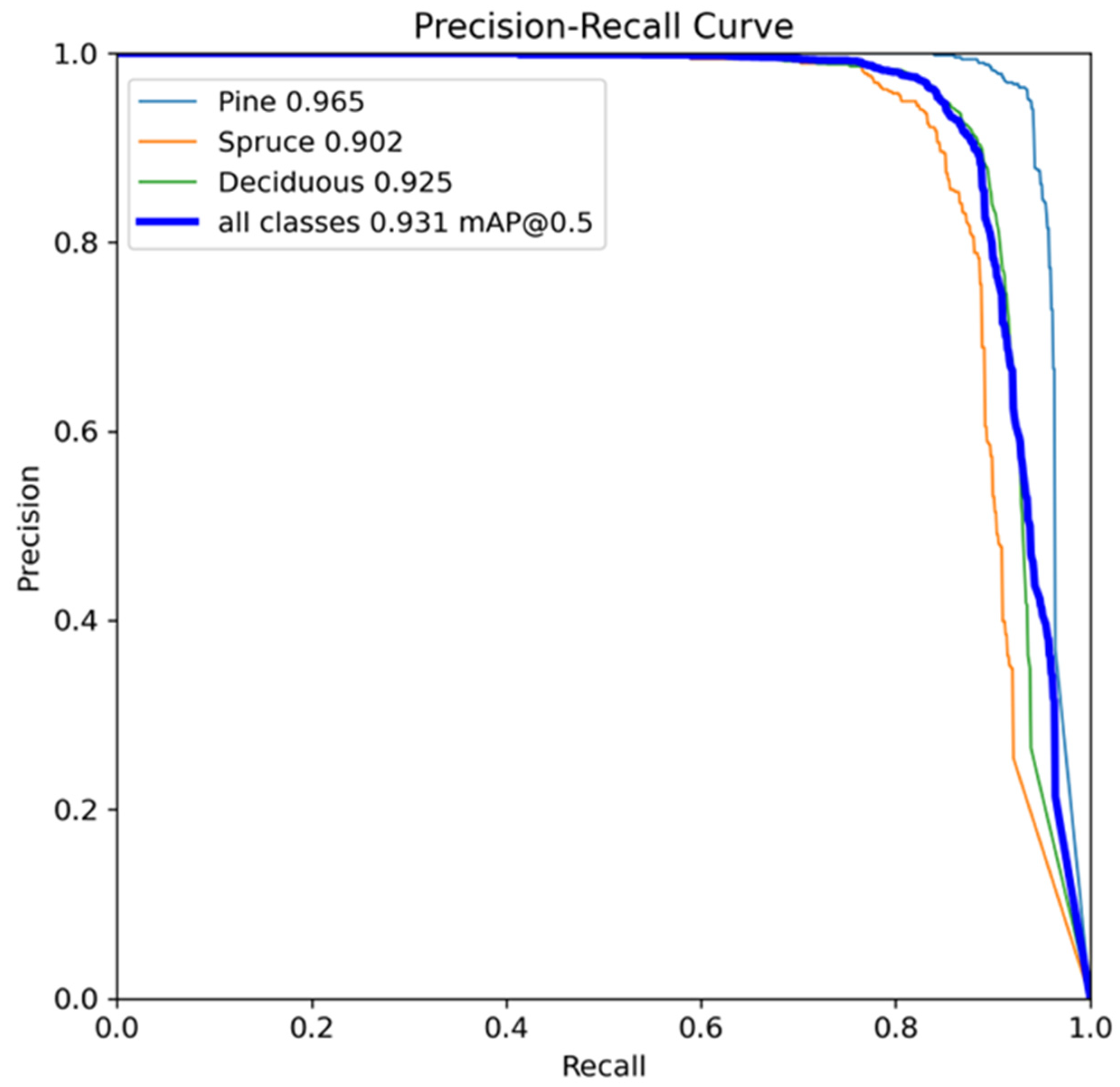

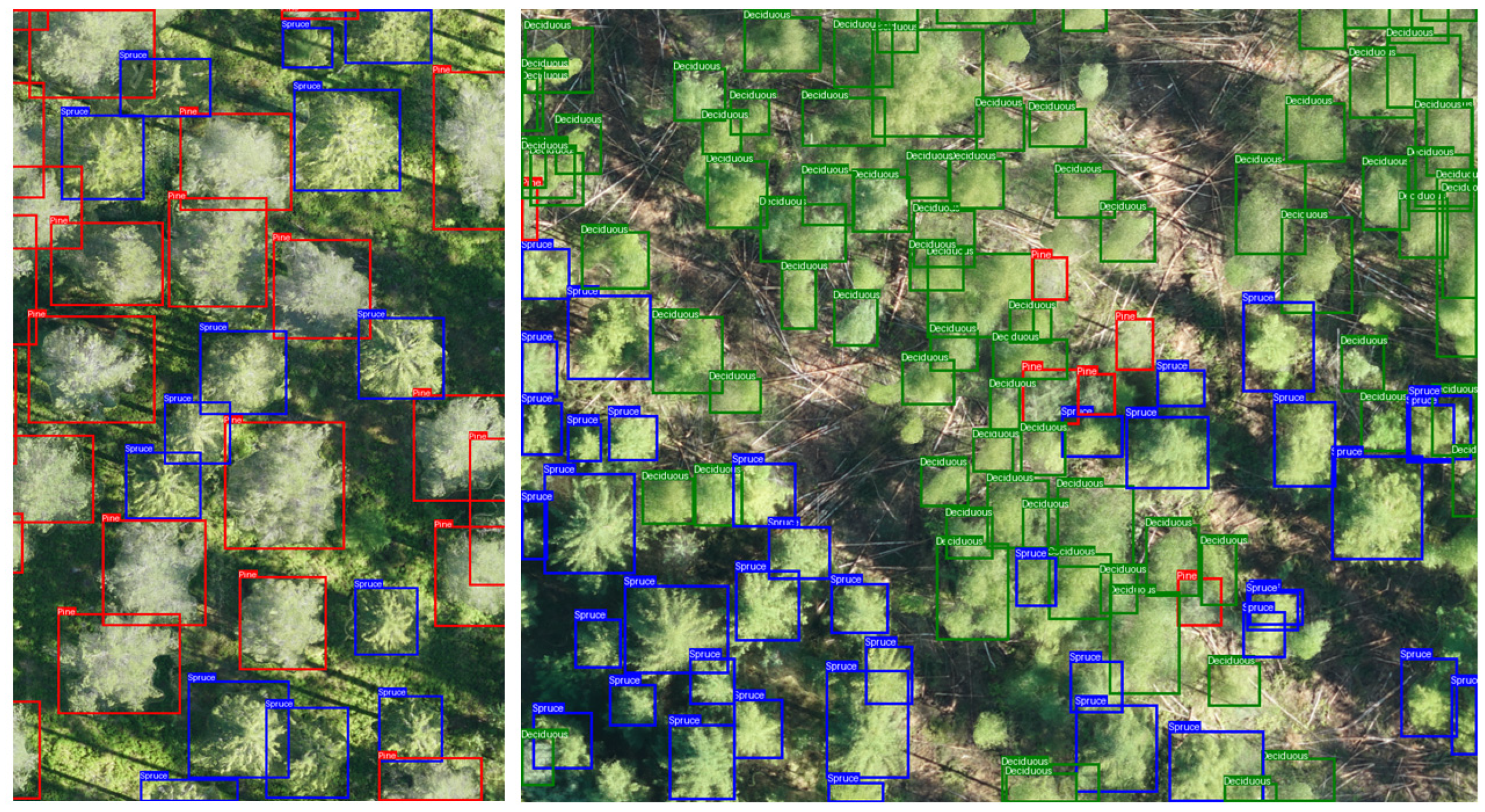

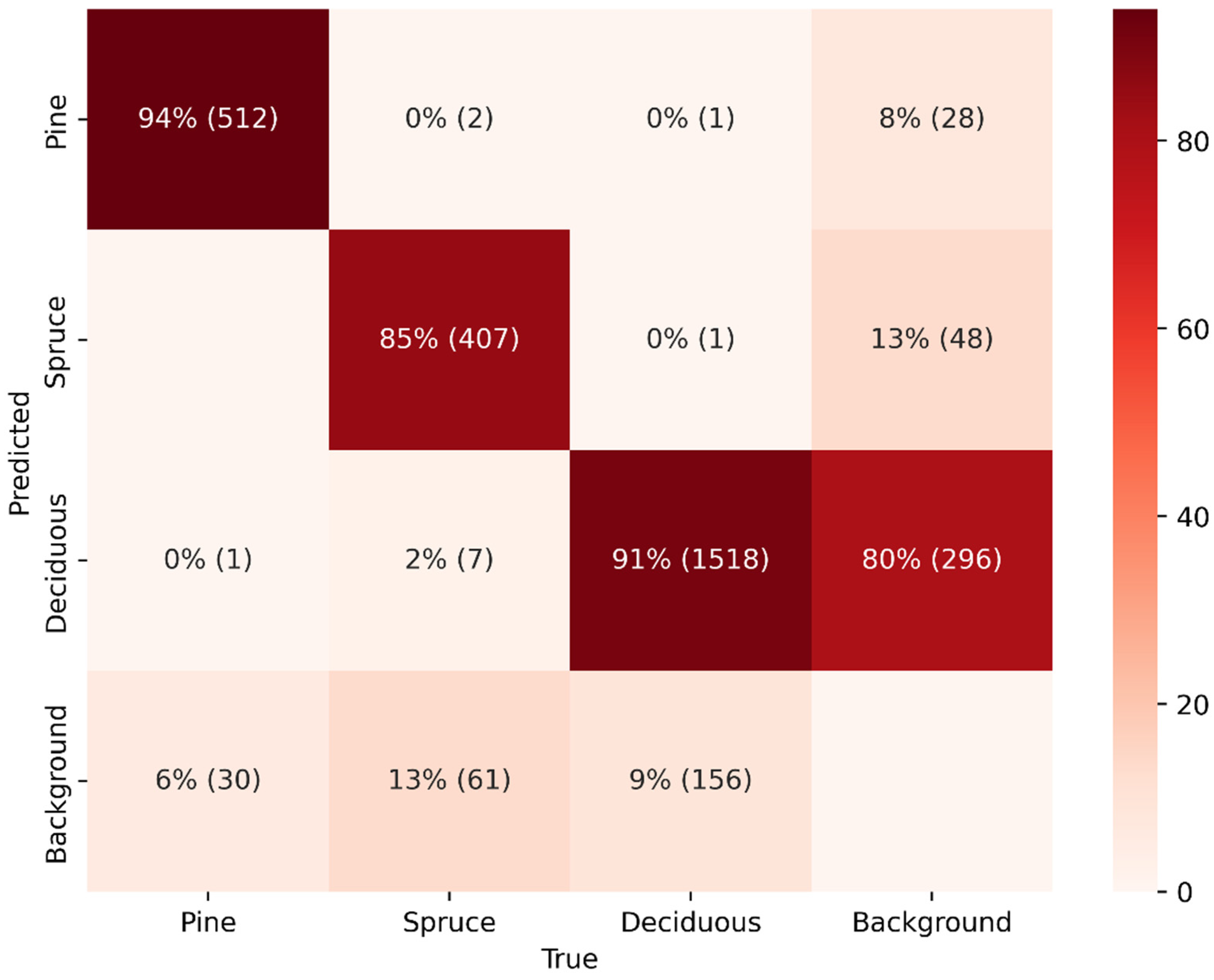

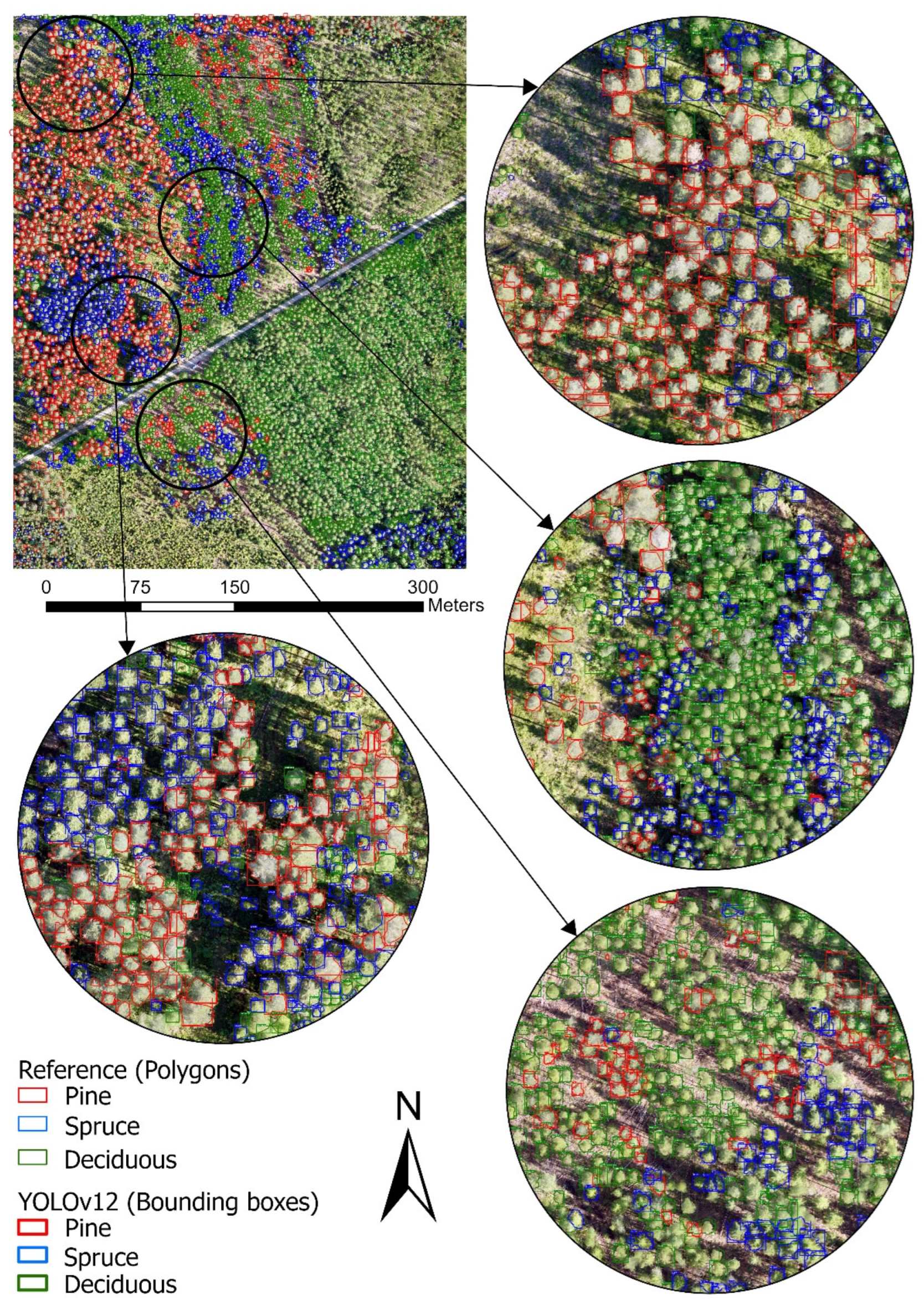

3.1. Tree Species Detection

3.2. Classification

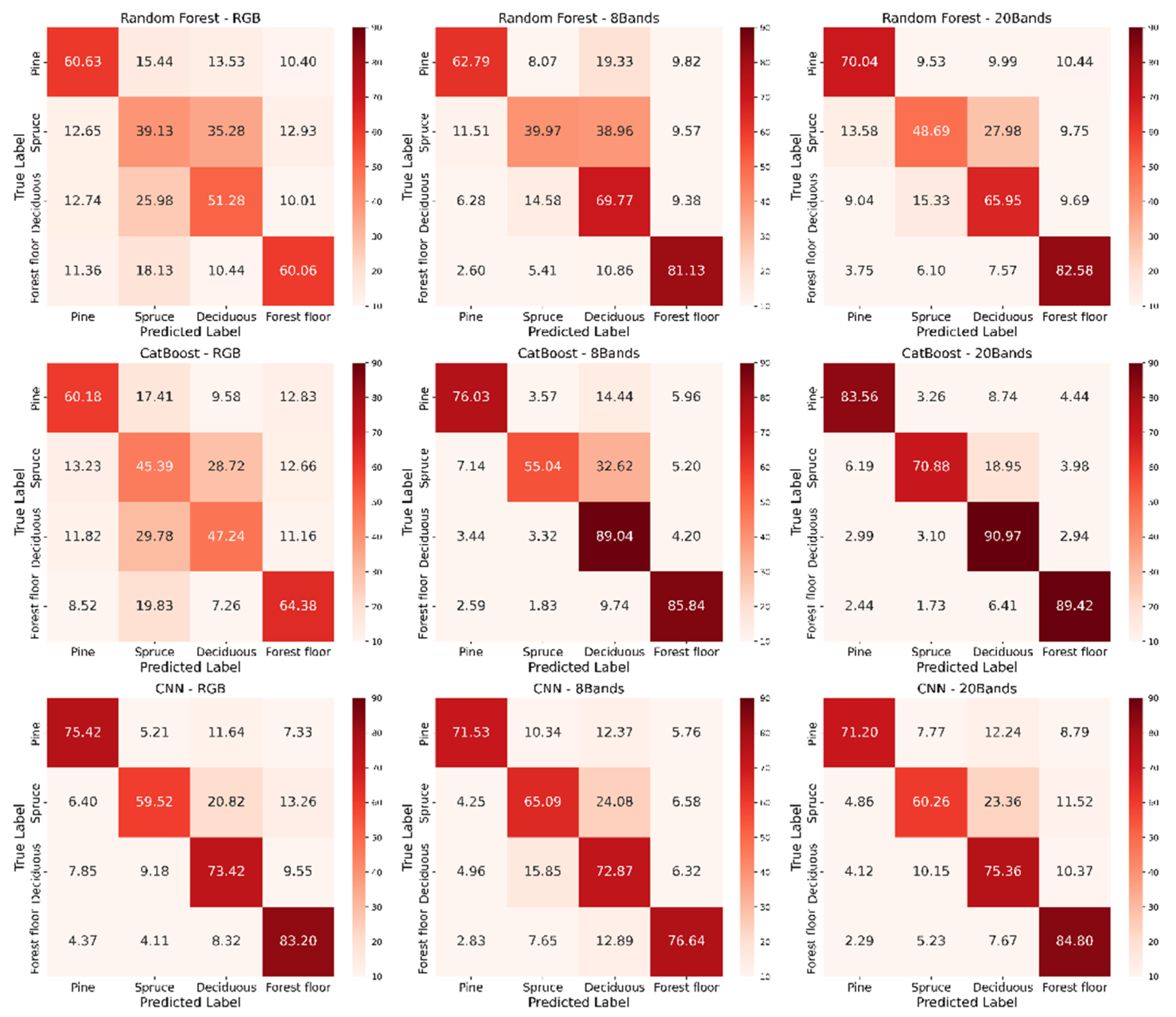

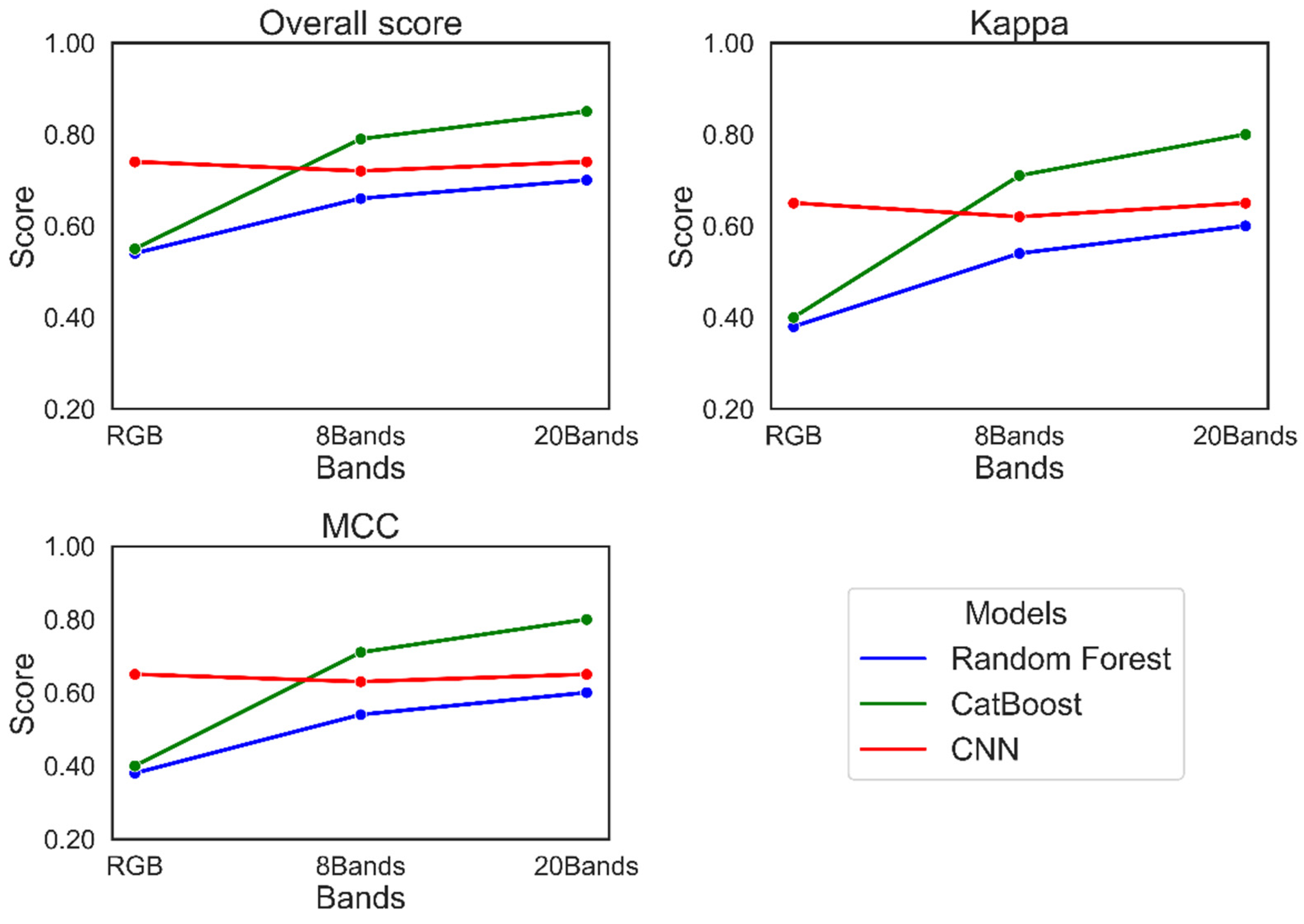

3.2.1. Performance of the Models

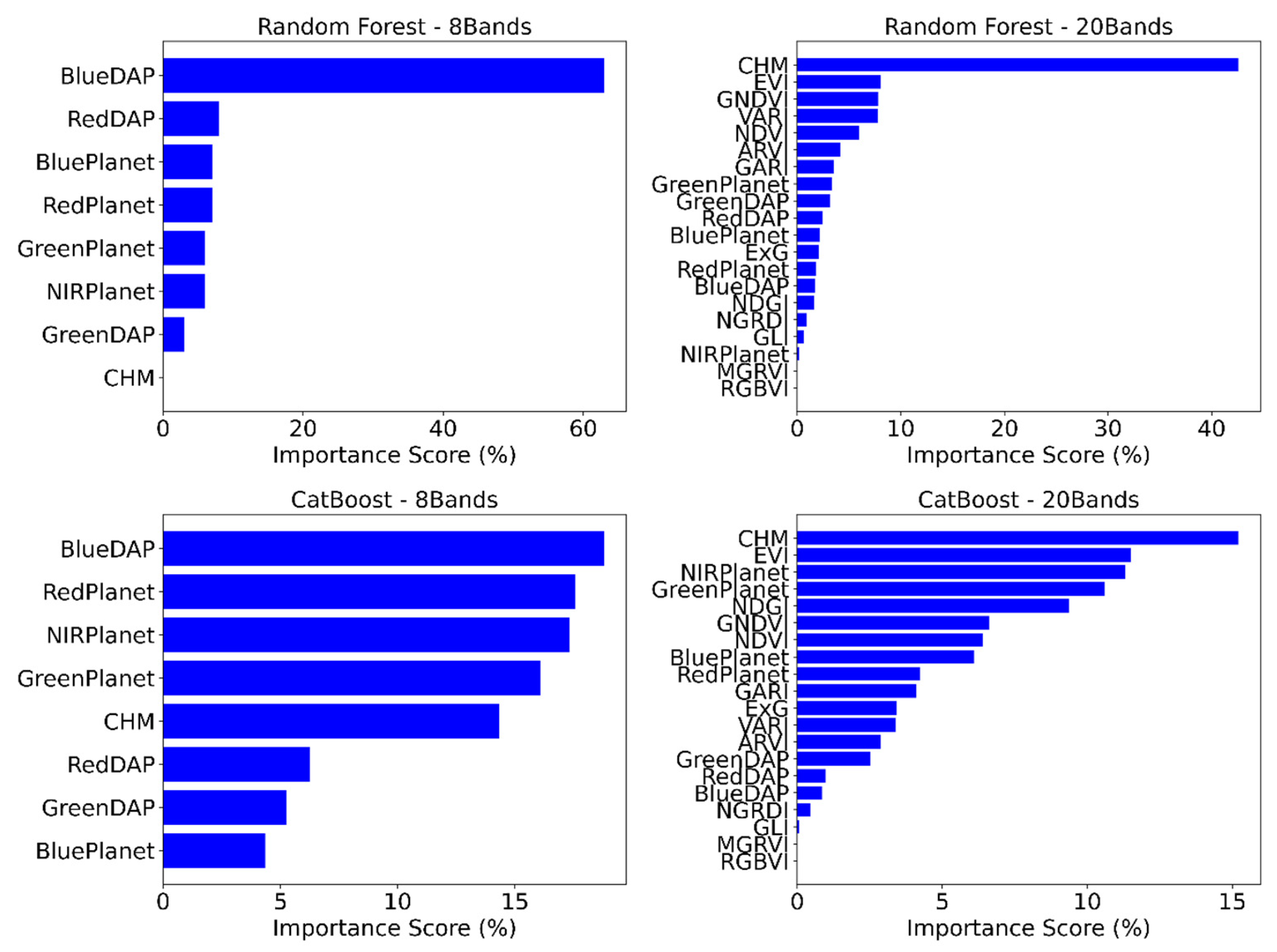

3.2.2. Feature Importance

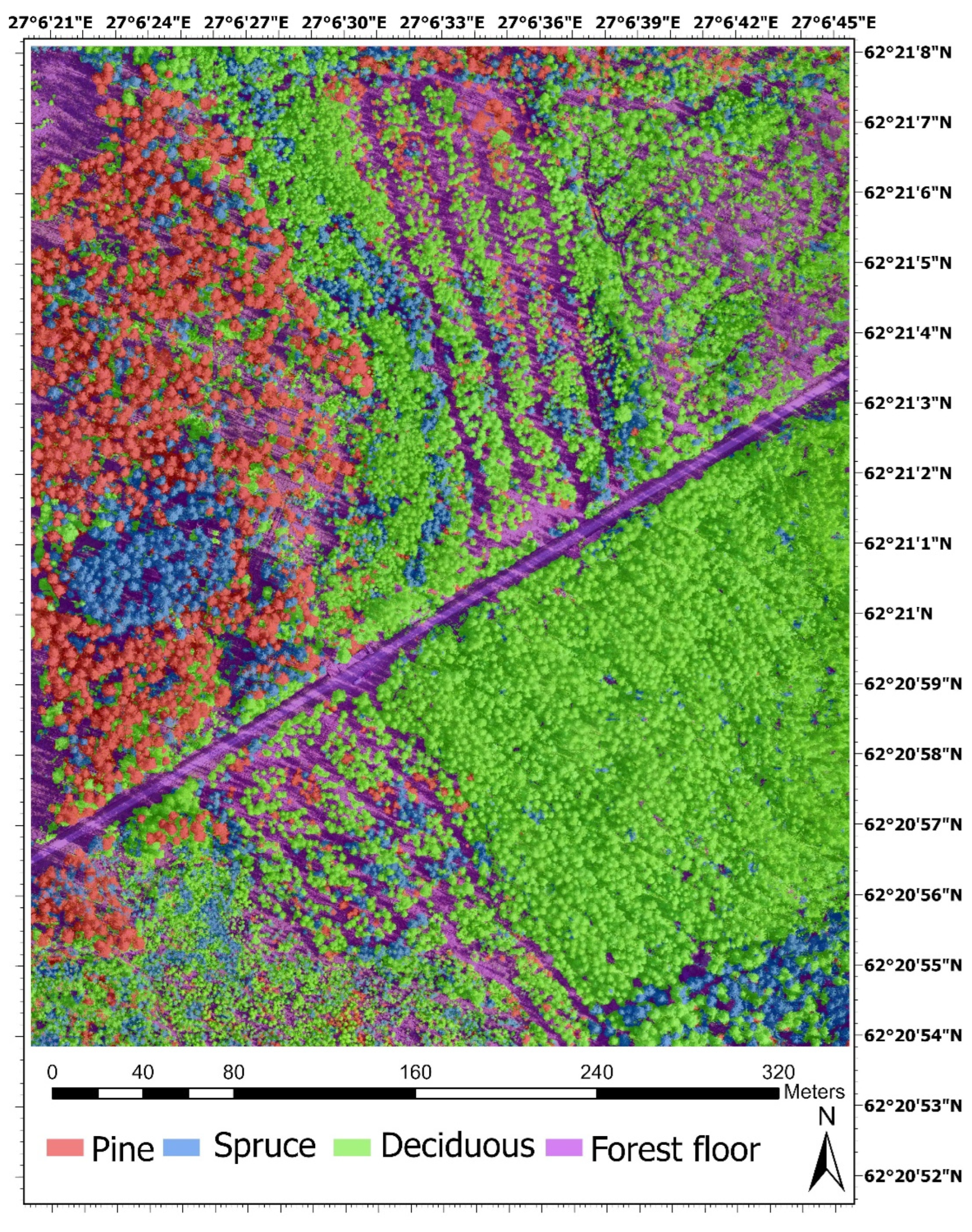

3.3. Classification Map

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Liu, M.; Han, Z.; Chen, Y.; Liu, Z.; Han, Y. Tree species classification of airborne LiDAR data based on 3D deep learning. Guofang Keji Daxue Xuebao/Journal Natl. Univ. Def. Technol. 2022, 44, 123–130. [Google Scholar] [CrossRef]

- Shang, X.; Chisholm, L.A. Classification of Australian native forest species using hyperspectral remote sensing and machine-learning classification algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2481–2489. [Google Scholar] [CrossRef]

- Weinstein, B.G.; Marconi, S.; Bohlman, S.A.; Zare, A.; White, E.P. Cross-site learning in deep learning RGB tree crown detection. Ecol. Inform. 2020, 56, 101061. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision.

- Ferreira, M.P.; Almeida, D.R.A. de; Papa, D. de A.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manage. 2020, 475, 118397. [Google Scholar] [CrossRef]

- Hao, Z.; Lin, L.; Post, C.J.; Mikhailova, E.A.; Li, M.; Chen, Y.; Yu, K.; Liu, J. Automated tree-crown and height detection in a young forest plantation using mask region-based convolutional neural network (Mask R-CNN). ISPRS J. Photogramm. Remote Sens. 2021, 178, 112–123. [Google Scholar] [CrossRef]

- Yang, M.; Mou, Y.; Liu, S.; Meng, Y.; Liu, Z.; Li, P.; Xiang, W.; Zhou, X.; Peng, C. Detecting and mapping tree crowns based on convolutional neural network and Google Earth images. Int. J. Appl. Earth Obs. Geoinf. 2022, 108. [Google Scholar] [CrossRef]

- Beloiu, M.; Heinzmann, L.; Rehush, N.; Gessler, A.; Griess, V.C. Individual Tree-Crown Detection and Species Identification in Heterogeneous Forests Using Aerial RGB Imagery and Deep Learning. Remote Sens. 2023, 15. [Google Scholar] [CrossRef]

- Korznikov, K.; Kislov, D.; Petrenko, T.; Dzizyurova, V.; Doležal, J.; Krestov, P.; Altman, J. Unveiling the Potential of Drone-Borne Optical Imagery in Forest Ecology: A Study on the Recognition and Mapping of Two Evergreen Coniferous Species. Remote Sens. 2023, 15. [Google Scholar] [CrossRef]

- Gan, Y.; Wang, Q.; Iio, A. Tree Crown Detection and Delineation in a Temperate Deciduous Forest from UAV RGB Imagery Using Deep Learning Approaches: Effects of Spatial Resolution and Species Characteristics. Remote Sens. 2023, 15. [Google Scholar] [CrossRef]

- Zhong, H.; Zhang, Z.; Liu, H.; Wu, J.; Lin, W. Individual Tree Species Identification for Complex Coniferous and Broad-Leaved Mixed Forests Based on Deep Learning Combined with UAV LiDAR Data and RGB Images. Forests 2024, 15, 293. [Google Scholar] [CrossRef]

- Santos, A.A. dos; Marcato Junior, J.; Araújo, M.S.; Di Martini, D.R.; Tetila, E.C.; Siqueira, H.L.; Aoki, C.; Eltner, A.; Matsubara, E.T.; Pistori, H. Assessment of CNN-based methods for individual tree detection on images captured by RGB cameras attached to UAVs. Sensors 2019, 19, 3595. [Google Scholar] [CrossRef]

- Itakura, K.; Hosoi, F. Automatic tree detection from three-dimensional images reconstructed from 360 spherical camera using YOLO v2. Remote Sens. 2020, 12, 988. [Google Scholar] [CrossRef]

- Safonova, A.; Hamad, Y.; Alekhina, A.; Kaplun, D. Detection of Norway Spruce Trees (Picea Abies) Infested by Bark Beetle in UAV Images Using YOLOs Architectures. IEEE Access 2022, 10, 10384–10392. [Google Scholar] [CrossRef]

- Liu, Y.; Zhao, Q.; Wang, X.; Sheng, Y.; Tian, W.; Ren, Y. A tree species classification model based on improved YOLOv7 for shelterbelts. Front. Plant Sci. 2024, 14, 1265025. [Google Scholar] [CrossRef]

- Dong, C.; Cai, C.; Chen, S.; Xu, H.; Yang, L.; Ji, J.; Huang, S.; Hung, I.K.; Weng, Y.; Lou, X. Crown Width Extraction of Metasequoia glyptostroboides Using Improved YOLOv7 Based on UAV Images. Drones 2023, 7, 336. [Google Scholar] [CrossRef]

- Xu, S.; Wang, R.; Shi, W.; Wang, X. Classification of Tree Species in Transmission Line Corridors Based on YOLO v7. Forests 2024, 15, 61. [Google Scholar] [CrossRef]

- Jarahizadeh, S.; Salehi, B. Advancing tree detection in forest environments: A deep learning object detector approach with UAV LiDAR data. Urban For. Urban Green. 2025, 105, 128695. [Google Scholar] [CrossRef]

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar]

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215. [Google Scholar] [CrossRef]

- Gyawali, A.; Adhikari, H.; Aalto, M.; Ranta, T. From simple linear regression to machine learning methods: Canopy cover modelling of a young forest using planet data. Ecol. Inform. 2024, 82, 102706. [Google Scholar] [CrossRef]

- Morais, T.G.; Domingos, T.; Teixeira, R.F.M. Semantic Segmentation of Portuguese Agri-Forestry Using High-Resolution Orthophotos. Agronomy 2023, 13, 2741. [Google Scholar] [CrossRef]

- Kemker, R.; Salvaggio, C.; Kanan, C. Algorithms for semantic segmentation of multispectral remote sensing imagery using deep learning. ISPRS J. Photogramm. Remote Sens. 2018, 145, 60–77. [Google Scholar] [CrossRef]

- Haq, M.A.; Rahaman, G.; Baral, P.; Ghosh, A. Deep Learning Based Supervised Image Classification Using UAV Images for Forest Areas Classification. J. Indian Soc. Remote Sens. 2021, 49, 601–606. [Google Scholar] [CrossRef]

- Hızal, C.; Gülsu, G.; Akgün, H.Y.; Kulavuz, B.; Bakırman, T.; Aydın, A.; Bayram, B. Forest Semantic Segmentation Based on Deep Learning Using Sentinel-2 Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. - ISPRS Arch. 2024, 48, 229–236. [Google Scholar] [CrossRef]

- You, H.; Huang, Y.; Qin, Z.; Chen, J.; Liu, Y. Forest Tree Species Classification Based on Sentinel-2 Images and Auxiliary Data. Forests 2022, 13, 1416. [Google Scholar] [CrossRef]

- Ma, Y.; Zhao, Y.; Im, J.; Zhao, Y.; Zhen, Z. A deep-learning-based tree species classification for natural secondary forests using unmanned aerial vehicle hyperspectral images and LiDAR. Ecol. Indic. 2024, 159, 111608. [Google Scholar] [CrossRef]

- Zagajewski, B.; Kluczek, M.; Raczko, E.; Njegovec, A.; Dabija, A.; Kycko, M. Comparison of random forest, support vector machines, and neural networks for post-disaster forest species mapping of the Krkonoše/Karkonosze transboundary biosphere reserve. Remote Sens. 2021, 13, 2581. [Google Scholar] [CrossRef]

- Raczko, E.; Zagajewski, B. Comparison of support vector machine, random forest and neural network classifiers for tree species classification on airborne hyperspectral APEX images. Eur. J. Remote Sens. 2017, 50, 144–154. [Google Scholar] [CrossRef]

- Immitzer, M.; Atzberger, C.; Koukal, T. Tree species classification with Random forest using very high spatial resolution 8-band WorldView-2 satellite data. Remote Sens. 2012, 4, 2661–2693. [Google Scholar] [CrossRef]

- Yan, S.; Jing, L.; Wang, H. A new individual tree species recognition method based on a convolutional neural network and high-spatial resolution remote sensing imagery. Remote Sens. 2021, 13, 479. [Google Scholar] [CrossRef]

- Los, H.; Mendes, G.S.; Cordeiro, D.; Grosso, N.; Costa, H.; Benevides, P.; Caetano, M. Evaluation of XGBoost and LGBM Performance in Tree Species Classification with Sentinel-2 Data. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS); IEEE; Vol. 2021, pp. 5803–5806.

- Vanguri, R.; Laneve, G.; Hościło, A. Mapping forest tree species and its biodiversity using EnMAP hyperspectral data along with Sentinel-2 temporal data: An approach of tree species classification and diversity indices. Ecol. Indic. 2024, 167, 112671. [Google Scholar] [CrossRef]

- Usman, M.; Ejaz, M.; Nichol, J.E.; Farid, M.S.; Abbas, S.; Khan, M.H. A Comparison of Machine Learning Models for Mapping Tree Species Using WorldView-2 Imagery in the Agroforestry Landscape of West Africa. ISPRS Int. J. Geo-Information 2023, 12, 142. [Google Scholar] [CrossRef]

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143. [Google Scholar] [CrossRef]

- Sun, Y.; Huang, J.; Ao, Z.; Lao, D.; Xin, Q. Deep learning approaches for the mapping of tree species diversity in a tropical wetland using airborne LiDAR and high-spatial-resolution remote sensing images. Forests 2019, 10, 1047. [Google Scholar] [CrossRef]

- Xu, Z.; Shen, X.; Cao, L.; Coops, N.C.; Goodbody, T.R.H.; Zhong, T.; Zhao, W.; Sun, Q.; Ba, S.; Zhang, Z.; et al. Tree species classification using UAS-based digital aerial photogrammetry point clouds and multispectral imageries in subtropical natural forests. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102173. [Google Scholar] [CrossRef]

- Quan, Y.; Li, M.; Hao, Y.; Liu, J.; Wang, B. Tree species classification in a typical natural secondary forest using UAV-borne LiDAR and hyperspectral data. GIScience Remote Sens. 2023, 60. [Google Scholar] [CrossRef]

- Planet Team. Planet Application Program Interface: In Space for Life on Earth; San Francisco, CA, USA. Available online: https://api.planet.com (accessed on 20 September 2022).

- Kluczek, M.; Zagajewski, B.; Zwijacz-Kozica, T. Mountain Tree Species Mapping Using Sentinel-2, PlanetScope, and Airborne HySpex Hyperspectral Imagery. Remote Sens. 2023, 15, 844. [Google Scholar] [CrossRef]

- Hovi, A.; Raitio, P.; Rautiainen, M. A spectral analysis of 25 boreal tree species. Silva Fenn. 2017, 51, 7753. [Google Scholar] [CrossRef]

- FMI. Finnish Meteorological Institute (FMI). Available online: https://en.ilmatieteenlaitos.fi/ (accessed on 15 January 2025).

- Gyawali, A.; Aalto, M.; Peuhkurinen, J.; Villikka, M.; Ranta, T. Comparison of Individual Tree Height Estimated from LiDAR and Digital Aerial Photogrammetry in Young Forests. Sustainability 2022, 14, 3720. [Google Scholar] [CrossRef]

- Pix4D S.A. Pix4D Drone Mapping Software; Pix4D S.A.: Prilly, Switzerland, 2014. Available online: http://pix4d.com (accessed on 25 May 2021).

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. Am. Soc. Agric. Eng. 1995, 38, 259–269. [Google Scholar] [CrossRef]

- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70. [Google Scholar] [CrossRef]

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Vina, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30. [Google Scholar] [CrossRef]

- Huete, A.R.; Liu, H.Q.; Batchily, K.; Van Leeuwen, W. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Yang, W.; Kobayashi, H.; Wang, C.; Shen, M.; Chen, J.; Matsushita, B.; Tang, Y.; Kim, Y.; Bret-Harte, M.S.; Zona, D.; et al. A semi-analytical snow-free vegetation index for improving estimation of plant phenology in tundra and grassland ecosystems. Remote Sens. Environ. 2019, 228, 31–44. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS (Earth Resources Technology Satellites). Goddard Sp. Flight Cent. 3d ERTS-1 1974, 1, 309–317. [Google Scholar]

- ESRI. Train Deep Learning Model (Image Analyst). Available online: https://pro.arcgis.com/en/pro-app/latest/tool-reference/image-analyst/train-deep-learning-model.htm (accessed on 10 September 2024).

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar]

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. Adv. Neural Inf. Process. Syst. 2018, 2018, 6638–6648. [Google Scholar]

- Odeh, A.; Al-Haija, Q.A.; Aref, A.; Taleb, A.A. Comparative Study of CatBoost, XGBoost, and LightGBM for Enhanced URL Phishing Detection: A Performance Assessment. J. Internet Serv. Inf. Secur. 2023, 13, 1–11. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer, 2016; Vol. 9908 LNCS, pp. 630–645. [Google Scholar]

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46. [Google Scholar] [CrossRef]

- Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. BBA - Protein Struct. 1975, 405, 442–451. [Google Scholar] [CrossRef]

- Shen, L.; Lang, B.; Song, Z. DS-YOLOv8-Based Object Detection Method for Remote Sensing Images. IEEE Access 2023, 11, 125122–125137. [Google Scholar] [CrossRef]

- Avtar, R.; Chen, X.; Fu, J.; Alsulamy, S.; Supe, H.; Pulpadan, Y.A.; Louw, A.S.; Tatsuro, N. Tree Species Classification by Multi-Season Collected UAV Imagery in a Mixed Cool-Temperate Mountain Forest. Remote Sens. 2024, 16, 4060. [Google Scholar] [CrossRef]

- Sothe, C.; De Almeida, C.M.; Schimalski, M.B.; La Rosa, L.E.C.; Castro, J.D.B.; Feitosa, R.Q.; Dalponte, M.; Lima, C.L.; Liesenberg, V.; Miyoshi, G.T.; et al. Comparative performance of convolutional neural network, weighted and conventional support vector machine and random forest for classifying tree species using hyperspectral and photogrammetric data. GIScience Remote Sens. 2020, 57, 369–394. [Google Scholar] [CrossRef]

- Sothe, C.; La Rosa, L.E.C.; De Almeida, C.M.; Gonsamo, A.; Schimalski, M.B.; Castro, J.D.B.; Feitosa, R.Q.; Dalponte, M.; Lima, C.L.; Liesenberg, V.; et al. Evaluating a convolutional neural network for feature extraction and tree species classification using UAV-hyperspectral images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 193–199. [Google Scholar] [CrossRef]

- Maschler, J.; Atzberger, C.; Immitzer, M. Individual tree crown segmentation and classification of 13 tree species using Airborne hyperspectral data. Remote Sens. 2018, 10, 1218. [Google Scholar] [CrossRef]

- Yao, Y.; Wang, X.; Qin, H.; Wang, W.; Zhou, W. Mapping Urban Tree Species by Integrating Canopy Height Model with Multi-Temporal Sentinel-2 Data. Remote Sens. 2025, 17, 790. [Google Scholar] [CrossRef]

| Species | Number of polygons | Total area (m²) | % of total delineated area | % of total study area |
|---|---|---|---|---|
| Scots pine | 1261 | 11650 | 29.45 | 7.33 |
| Norway spruce | 1622 | 8850 | 22.37 | 5.57 |
| Deciduous spp. | 2755 | 13816 | 34.93 | 8.69 |
| Forest floor | 373 | 5240 | 13.25 | 3.30 |
| Total | 6011 | 39556 | 100 | 24.89 |
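The percentage columns of the table above follow directly from the polygon areas. The short Python check below reproduces them, assuming values are rounded to two decimals. The study-area size is not stated in this section, so the sketch back-calculates it from the reported 24.89 % total; that figure (roughly 15.9 ha) is an inference, not a reported value.

```python
# Per-class delineated area in m^2, copied from the table above.
areas_m2 = {
    "Scots pine": 11650,
    "Norway spruce": 8850,
    "Deciduous spp.": 13816,
    "Forest floor": 5240,
}

total_delineated = sum(areas_m2.values())  # 39556 m^2

# Share of each class in the delineated reference area, in percent.
share_of_delineated = {
    name: round(100 * a / total_delineated, 2) for name, a in areas_m2.items()
}

# Assumption: the study area is inferred from the reported 24.89 % coverage,
# because its size is not given in the table itself.
study_area_m2 = total_delineated / 0.2489

# Share of each class in the whole study area, in percent.
share_of_study_area = {
    name: round(100 * a / study_area_m2, 2) for name, a in areas_m2.items()
}

if __name__ == "__main__":
    for name in areas_m2:
        print(f"{name:15s} {share_of_delineated[name]:6.2f} %  {share_of_study_area[name]:5.2f} %")
```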

| Data | Vegetation index | Formula | Reference |
|---|---|---|---|
| DAP | ExG | | [50] |
| | GLI | | [51] |
| | MGRVI | | [52] |
| | NGRDI | | [53] |
| | RGBVI | | [52] |
| | VARI | | [54] |
| Planet | ARVI | | [55] |
| | EVI | | [56] |
| | GARI | | [57] |
| | GNDVI | | [57] |
| | NDGI | | [58] |
| | NDVI | | [59] |
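The table lists the indices without their formulas. As a hedged sketch, the Python below computes several of them in the forms commonly published in the literature; it is an assumption that the study used exactly these forms. For example, ExG is sometimes computed on normalized chromatic coordinates rather than raw band values, EVI assumes surface reflectance scaled to 0 to 1, and the ARVI weight `gamma = 1.0` is an assumed default. GARI and NDGI are left out because their coefficients would need to be checked against [57] and [58].

```python
# Sketch: commonly published forms of the DAP (RGB) and Planet indices in the table.
# Assumption: inputs are reflectance-like floats (Planet bands scaled 0-1 for EVI).
# Only arithmetic operators are used, so the same expressions also work
# element-wise on NumPy arrays of band values.


def rgb_indices(r, g, b):
    """RGB-based indices from the red, green and blue DAP bands."""
    return {
        "ExG": 2 * g - r - b,                      # excess green
        "GLI": (2 * g - r - b) / (2 * g + r + b),  # green leaf index
        "MGRVI": (g**2 - r**2) / (g**2 + r**2),    # modified green-red vegetation index
        "NGRDI": (g - r) / (g + r),                # normalized green-red difference index
        "RGBVI": (g**2 - b * r) / (g**2 + b * r),  # red-green-blue vegetation index
        "VARI": (g - r) / (g + r - b),             # visible atmospherically resistant index
    }


def planet_indices(blue, green, red, nir, gamma=1.0):
    """Multispectral indices; gamma is the ARVI blue-correction weight (assumed 1.0)."""
    rb = red - gamma * (blue - red)  # ARVI's atmospherically corrected red term
    return {
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "ARVI": (nir - rb) / (nir + rb),
    }


if __name__ == "__main__":
    # Illustrative reflectance values only, not measurements from the study.
    print(rgb_indices(r=0.10, g=0.20, b=0.05))
    print(planet_indices(blue=0.05, green=0.20, red=0.10, nir=0.50))
```

Keeping the RGB and multispectral indices in separate functions mirrors the DAP/Planet split in the table, so each data source can be processed on its own raster grid.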

| Class | Images | Instances | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| All | 30 | 2696 | 0.95 | 0.87 | 0.93 | 0.75 |
| Pine | 20 | 543 | 0.97 | 0.92 | 0.97 | 0.80 |
| Spruce | 25 | 477 | 0.94 | 0.82 | 0.90 | 0.71 |
| Deciduous | 30 | 1676 | 0.93 | 0.87 | 0.93 | 0.73 |
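Both mAP columns rest on an intersection-over-union (IoU) test between a predicted crown box and a reference box. mAP50 uses a single IoU threshold of 0.50, and mAP50-95 averages AP over the ten thresholds 0.50, 0.55, ..., 0.95. The minimal Python sketch below shows only that matching step. The `(x_min, y_min, x_max, y_max)` box layout and the example boxes are hypothetical, and the full AP computation (ranking detections by confidence and integrating the precision-recall curve) is omitted. It illustrates why mAP50-95 is lower than mAP50 for every class: a box that passes at 0.50 can fail at stricter thresholds.

```python
# Sketch of the IoU test behind the mAP50 and mAP50-95 columns.
# Assumption: boxes are (x_min, y_min, x_max, y_max) in pixels.


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# mAP50 evaluates at IoU 0.50 only; mAP50-95 averages AP over these ten thresholds.
THRESHOLDS_50_95 = [round(0.50 + 0.05 * k, 2) for k in range(10)]


def thresholds_passed(pred_box, gt_box, thresholds=THRESHOLDS_50_95):
    """IoU thresholds at which the predicted box would count as a true positive."""
    overlap = iou(pred_box, gt_box)
    return [t for t in thresholds if overlap >= t]


if __name__ == "__main__":
    crown_ref = (0, 0, 10, 10)   # hypothetical reference crown box
    crown_pred = (2, 0, 12, 10)  # hypothetical prediction shifted by 2 px
    print(round(iou(crown_ref, crown_pred), 3))      # 0.667
    print(thresholds_passed(crown_ref, crown_pred))  # [0.5, 0.55, 0.6, 0.65]
```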

| Model | Channels | Species | Precision | Recall | F1 Score | Overall score | Kappa | MCC |
|---|---|---|---|---|---|---|---|---|
| Random forest | RGB | Pine | 0.59 | 0.61 | 0.60 | 0.54 | 0.38 | 0.38 |
| | | Spruce | 0.29 | 0.39 | 0.33 | | | |
| | | Deciduous | 0.56 | 0.51 | 0.53 | | | |
| | | Forest floor | 0.69 | 0.60 | 0.64 | | | |
| | 8 Bands | Pine | 0.76 | 0.63 | 0.69 | 0.66 | 0.54 | 0.54 |
| | | Spruce | 0.47 | 0.40 | 0.43 | | | |
| | | Deciduous | 0.60 | 0.70 | 0.64 | | | |
| | | Forest floor | 0.77 | 0.81 | 0.79 | | | |
| | 20 Bands | Pine | 0.72 | 0.70 | 0.71 | 0.70 | 0.60 | 0.60 |
| | | Spruce | 0.49 | 0.49 | 0.49 | | | |
| | | Deciduous | 0.68 | 0.66 | 0.67 | | | |
| | | Forest floor | 0.77 | 0.83 | 0.80 | | | |
| CatBoost | RGB | Pine | 0.62 | 0.60 | 0.61 | 0.55 | 0.40 | 0.40 |
| | | Spruce | 0.29 | 0.45 | 0.36 | | | |
| | | Deciduous | 0.61 | 0.47 | 0.53 | | | |
| | | Forest floor | 0.68 | 0.64 | 0.66 | | | |
| | 8 Bands | Pine | 0.85 | 0.76 | 0.80 | 0.79 | 0.71 | 0.72 |
| | | Spruce | 0.80 | 0.55 | 0.65 | | | |
| | | Deciduous | 0.70 | 0.89 | 0.78 | | | |
| | | Forest floor | 0.87 | 0.86 | 0.87 | | | |
| | 20 Bands | Pine | 0.88 | 0.84 | 0.86 | 0.85 | 0.80 | 0.81 |
| | | Spruce | 0.85 | 0.71 | 0.77 | | | |
| | | Deciduous | 0.80 | 0.91 | 0.85 | | | |
| | | Forest floor | 0.91 | 0.89 | 0.90 | | | |
| CNN | RGB | Pine | 0.81 | 0.75 | 0.78 | 0.74 | 0.65 | 0.65 |
| | | Spruce | 0.65 | 0.60 | 0.62 | | | |
| | | Deciduous | 0.74 | 0.73 | 0.74 | | | |
| | | Forest floor | 0.73 | 0.83 | 0.78 | | | |
| | 8 Bands | Pine | 0.86 | 0.72 | 0.78 | 0.72 | 0.62 | 0.63 |
| | | Spruce | 0.52 | 0.65 | 0.58 | | | |
| | | Deciduous | 0.69 | 0.73 | 0.71 | | | |
| | | Forest floor | 0.80 | 0.77 | 0.78 | | | |
| | 20 Bands | Pine | 0.88 | 0.71 | 0.79 | 0.74 | 0.65 | 0.65 |
| | | Spruce | 0.60 | 0.60 | 0.60 | | | |
| | | Deciduous | 0.73 | 0.75 | 0.74 | | | |
| | | Forest floor | 0.73 | 0.85 | 0.79 | | | |
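Most cells in the classification table can be derived from a confusion matrix of reference versus predicted classes. The minimal Python sketch below computes per-class precision, recall and F1, overall accuracy, Cohen's kappa and the multiclass Matthews correlation coefficient using their usual textbook definitions. This section does not state which implementation the study used or how "Overall score" is defined, so overall accuracy is shown only as one standard summary. The toy matrix is invented for illustration. As a consistency check, the harmonic mean of a reported precision/recall pair reproduces the reported F1: 0.85 and 0.71 give 0.77 for CatBoost on 20 bands (spruce).

```python
# Sketch: per-class and overall scores from a confusion matrix.
# cm[i][j] counts samples whose reference class is i and predicted class is j.


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def per_class_scores(cm):
    """List of (precision, recall, F1) tuples, one per class."""
    k = len(cm)
    scores = []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[i][c] for i in range(k))  # column total
        actual = sum(cm[c])                          # row total
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        scores.append((p, r, f1(p, r)))
    return scores


def overall_accuracy(cm):
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


def cohen_kappa(cm):
    k, n = len(cm), sum(map(sum, cm))
    po = overall_accuracy(cm)
    # Chance agreement from reference (row) and predicted (column) totals.
    pe = sum(sum(cm[i]) * sum(cm[j][i] for j in range(k)) for i in range(k)) / n**2
    return (po - pe) / (1 - pe) if pe < 1 else float("nan")  # undefined when pe == 1


def mcc(cm):
    """Multiclass MCC (Gorodkin's generalization); equals Matthews' formula for two classes."""
    k, s = len(cm), sum(map(sum, cm))
    c = sum(cm[i][i] for i in range(k))
    t = [sum(cm[i]) for i in range(k)]                       # reference totals
    p = [sum(cm[j][i] for j in range(k)) for i in range(k)]  # predicted totals
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = ((s**2 - sum(x * x for x in p)) * (s**2 - sum(x * x for x in t))) ** 0.5
    return num / den if den else 0.0


if __name__ == "__main__":
    print(round(f1(0.85, 0.71), 2))  # 0.77, as reported for CatBoost, 20 bands, spruce
    # Toy 4-class matrix (pine, spruce, deciduous, forest floor); not study data.
    toy = [[40, 5, 4, 1], [6, 30, 3, 1], [5, 4, 45, 1], [1, 0, 2, 20]]
    print(per_class_scores(toy))
    print(overall_accuracy(toy), cohen_kappa(toy), mcc(toy))
```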

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).