1. Introduction

The evolution of digital communication has intensified the need for secure information hiding techniques. Linguistic steganography, which conceals secret messages within natural text, has emerged as a crucial tool for covert communication [

1,

2]. However, the field faces significant challenges in balancing embedding capacity, detection resistance, and text naturalness [

3,

4].

Recent advances in linguistic steganography have primarily focused on improving embedding efficiency through various text manipulation techniques. These methods often rely on predefined rules or statistical patterns for message encoding. While such approaches have achieved moderate success, they remain vulnerable to increasingly sophisticated steganalysis methods. Current techniques typically achieve detection rates between 0.838 and 0.911, making them inadequate for highly secure applications [

5,

6].

The emergence of advanced language models, particularly GPT architectures [

7,

8], presents new opportunities for enhancing steganographic techniques. These models' sophisticated understanding of language semantics and context offers potential solutions to the fundamental challenges in linguistic steganography. However, effectively leveraging these capabilities for secure information hiding requires novel approaches to text generation and message embedding [

9,

10].

This paper introduces DeepStego, a steganographic system that harnesses GPT-4-omni's language modeling capabilities to achieve superior detection resistance and text naturalness. Our approach combines dynamic synonym generation with semantic-aware embedding techniques to overcome the limitations of existing methods. The system demonstrates significant improvements in three critical areas: detection resistance (achieving rates of 0.635-0.655), statistical preservation, and embedding capacity.

The main contributions of this work are:

A novel steganographic framework leveraging GPT-4-omni for natural text generation and semantic-aware message embedding;

An adaptive embedding mechanism that maintains strong detection resistance while supporting high capacity information hiding;

Comprehensive empirical evaluation demonstrating significant improvements in security and text quality metrics;

A practical implementation achieving 100% message recovery accuracy with superior scalability.

The remainder of this paper is organized as follows.

Section 2 reviews related work in linguistic steganography and language modeling.

Section 3 presents our system architecture and methodology.

Section 4 details our experimental results and performance analysis.

Section 5 discusses implications and limitations. Finally,

Section 6 concludes with future research directions.

Our work addresses a critical gap in current steganographic research by demonstrating that advanced language models can significantly improve the trade-off between security and capacity. The results establish new benchmarks for detection resistance and text quality, advancing the field of secure covert communication.

2. Related Work

Linguistic steganography has evolved significantly with the advancement of natural language processing technologies. Early approaches focused on manual text manipulation and rule-based systems. Recent developments have shifted toward automated techniques leveraging neural networks and language models.

2.1. Generation-Based Approaches

Yang et al. [

11] introduced RNN-Stega, demonstrating the first successful application of recurrent neural networks for steganographic text generation. Their approach achieved improved text quality but showed limitations in maintaining semantic coherence at higher embedding rates. VAE-Stega [

12]addressed these limitations by introducing a variational autoencoder framework, achieving better statistical imperceptibility while maintaining natural language fluency.

Zhang et al. [

13] proposed a novel approach moving from symbolic to semantic space, introducing the concept of semantic encoding. This method demonstrated improved resistance to statistical analysis but faced challenges with embedding capacity. Fang et al. [

14] explored LSTM-based text generation for steganography, focusing on poetry generation with high embedding rates.

2.2. Semantic Preservation Techniques

Recent work by Xiang et al. [

15] introduced syntax-space hiding techniques, achieving higher embedding capacity while preserving semantic coherence. Their HISS-Stega framework demonstrated significant improvements in maintaining text naturalness. Yan et al. [

16] addressed the critical issue of segmentation ambiguity in generative steganography, proposing a secure token-selection principle that enhanced both security and semantic preservation.

2.3. Language Model Integration

The integration of advanced language models, particularly transformer-based architectures like BERT [

6,

9,

13], has opened new possibilities in steganographic techniques. These models provide richer semantic understanding and context awareness, enabling more sophisticated embedding strategies. The emergence of GPT architectures [

7,

17] has further enhanced the potential for natural text generation in steganographic applications.

2.4. Steganalysis Resistance

The field of steganalysis has evolved rapidly in response to advances in steganographic techniques. Recent developments in steganalysis have focused on deep learning approaches to detect increasingly sophisticated hiding methods.

Niu et al. [

18] introduced R-BILSTM-C, a hybrid approach combining bidirectional LSTM and CNN architectures. Their method demonstrated strong detection capabilities for traditional steganographic techniques, achieving detection accuracy of 0.970 for conventional methods. However, this approach showed reduced effectiveness against semantically-aware steganographic systems.

Wen et al. [

19] developed a CNN-based steganalysis framework that automatically learns feature representations from texts. Their approach achieved significant detection rates (0.838-0.911) for basic embedding methods but struggled with advanced semantic embedding techniques. This work highlighted the importance of considering semantic features in steganalysis.

Yang et al. [

20] proposed a densely connected LSTM with feature pyramid architecture for linguistic steganalysis. Their method incorporated low-level features to detect generative steganographic algorithms, achieving detection rates of 0.783-0.917 across different datasets. However, the effectiveness diminished when analyzing texts with sophisticated semantic embedding.

BERT-LSTM-Att, introduced by Zou et al. [

9], represented a significant advancement in steganalysis. This approach leveraged contextualized word associations and attention mechanisms to capture local discordances. While effective against traditional methods (0.972-0.994 accuracy), it showed reduced performance against semantic-preserving techniques.

Yang et al. [26] developed TS-CSW, focusing on convolutional sliding windows to analyze word correlation patterns. Their work revealed that statistical patterns in generated steganographic texts become distorted after embedding, providing a basis for detection. However, modern semantic-aware embedding methods have largely overcome these statistical indicators.

2.5. Current Challenges

Despite these advances, several challenges remain. Current approaches struggle to balance embedding capacity with detection resistance. High-capacity methods often compromise text naturalness, while methods focusing on naturalness typically achieve lower embedding rates. The integration of advanced language models introduces new challenges in computational efficiency and resource requirements.

These developments in linguistic steganography highlight the field's evolution toward more sophisticated, AI-driven approaches. Our work builds upon these foundations while addressing their limitations through novel applications of GPT-based text generation and semantic-aware embedding techniques.

3. System Architecture and Methodology

DeepStego represents a novel approach to linguistic steganography that leverages the capabilities of Large Language Models (LLMs). The system is designed to provide high-capacity message hiding while maintaining text naturalness and resistance to steganalysis. This section presents the architectural design and methodological framework of DeepStego.

3.1. System Architecture

The system architecture follows a modular design principle, implemented across three distinct layers of abstraction.

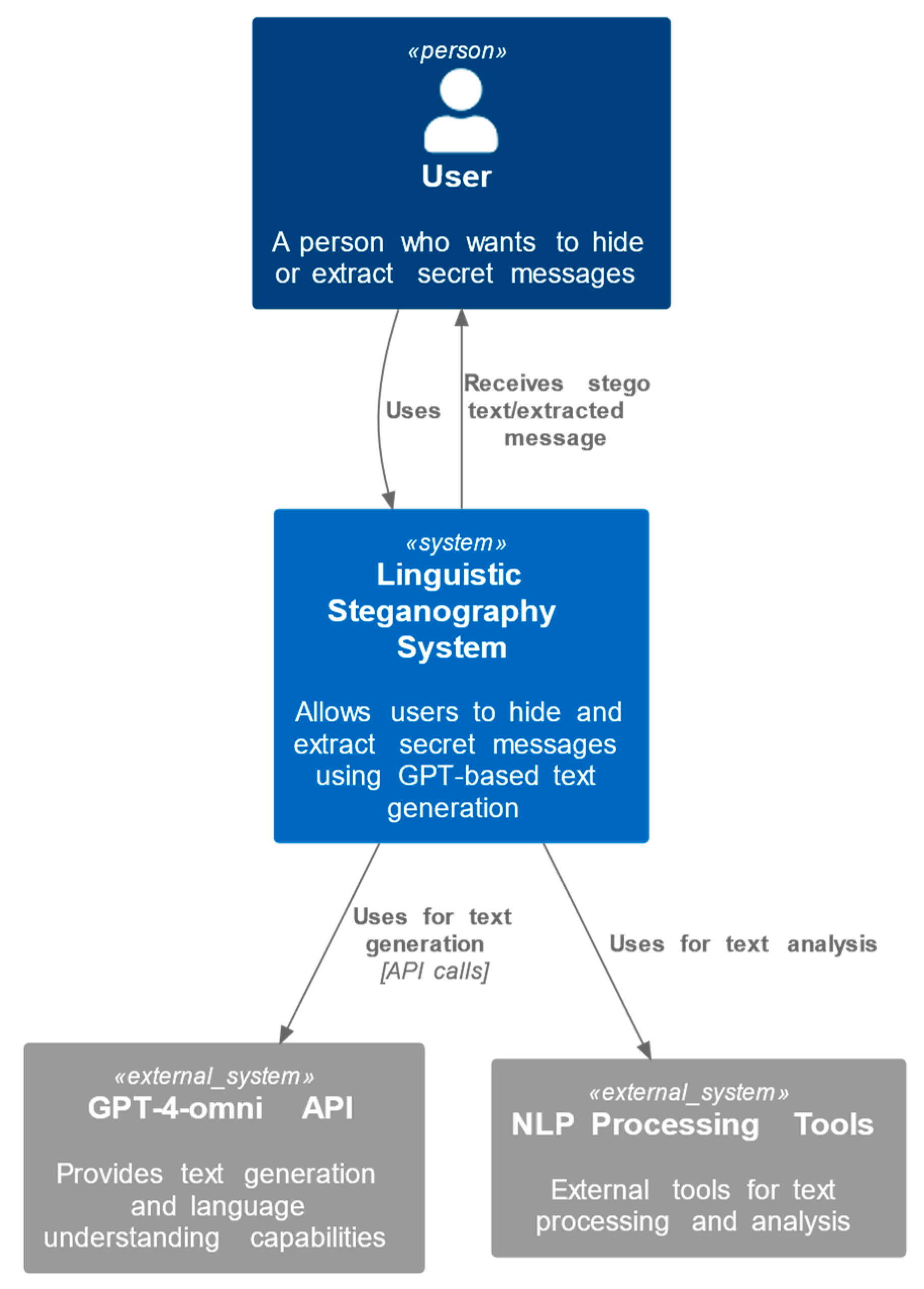

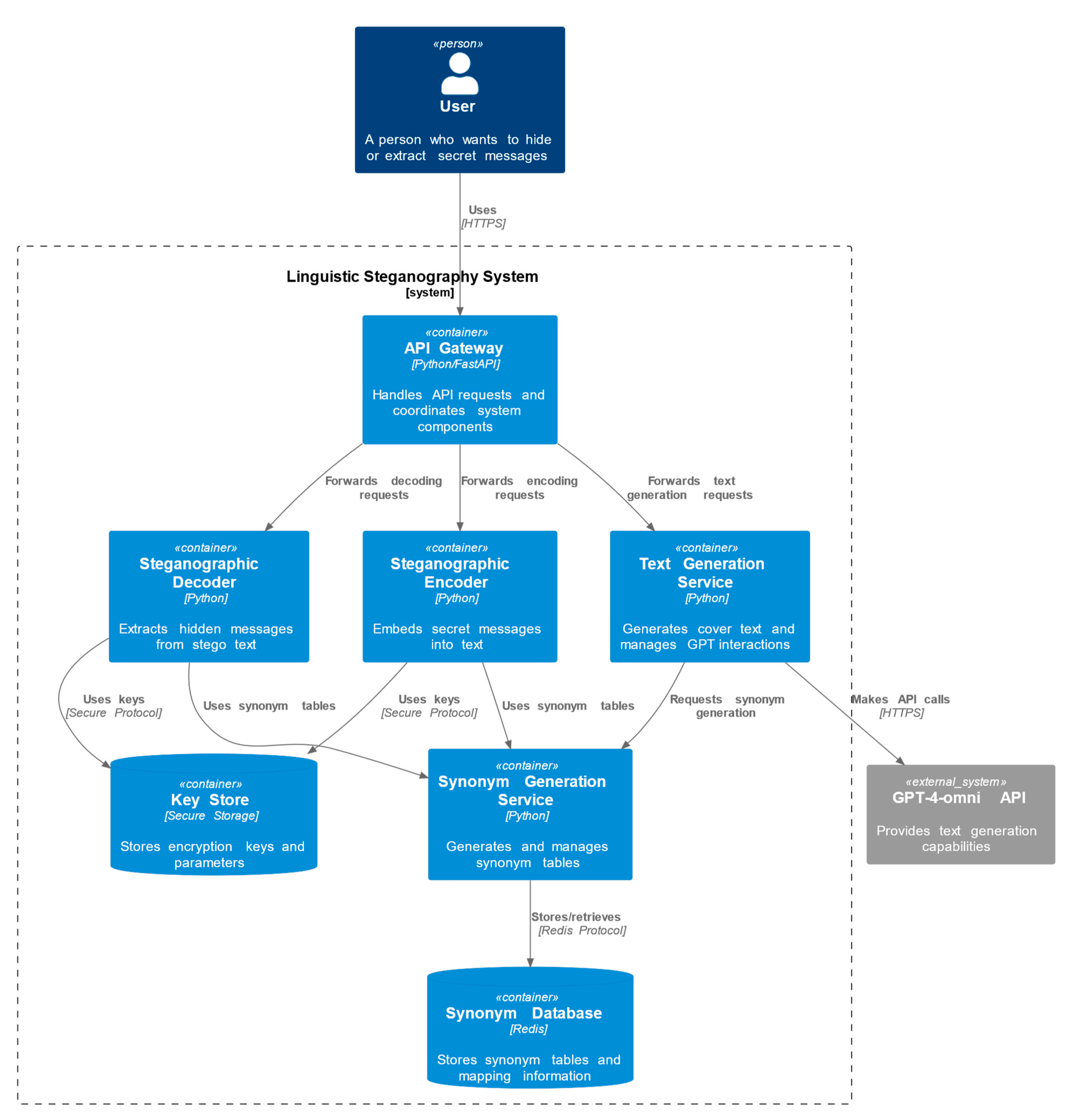

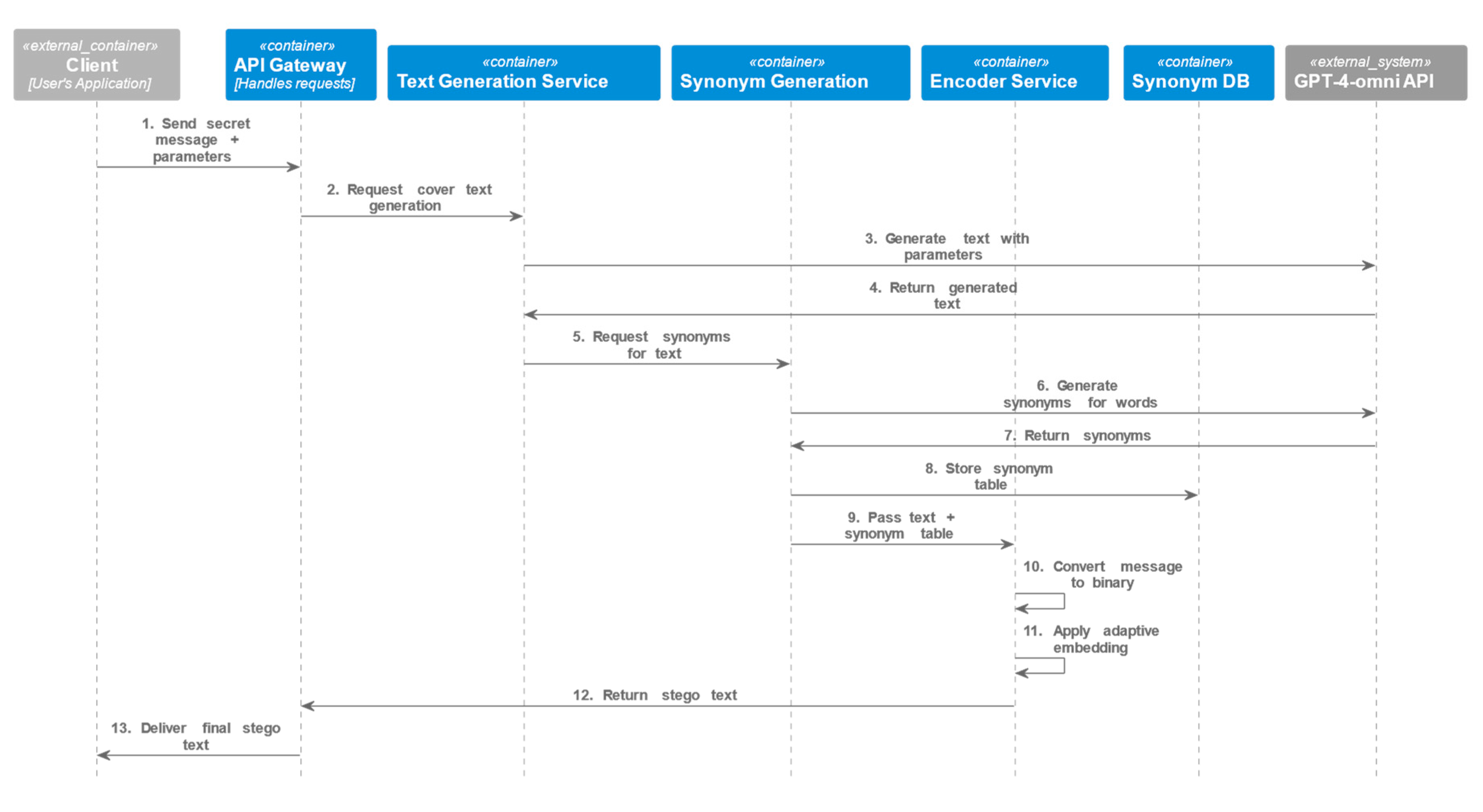

Figure 1,

Figure 2 and

Figure 3 illustrate the system's architecture from different perspectives, providing a comprehensive view of its operation.

Figure 1 presents the System Context diagram, showing the high-level interactions between the steganography system and external actors. This diagram illustrates how users interact with the system and how the system utilizes external services such as the GPT-4-omni API and NLP processing tools. The context diagram establishes the system's boundaries and its place within the larger operational environment.

Figure 2 depicts the Container Architecture, revealing the internal structure of the steganography system. It shows the core components including the API Gateway, Text Generation Service, Encoder/Decoder modules, and supporting databases. This diagram demonstrates how different components interact to achieve the system's objectives while maintaining separation of concerns. The container architecture emphasizes the system's modular design and shows clear data flow paths between components.

Figure 3 illustrates the Encoding Process Sequence, providing a detailed view of the message hiding workflow. This sequence diagram tracks the step-by-step process from initial message submission through text generation, synonym processing, and final stego text creation. It reveals the temporal relationships between system components and clarifies the role of each component in the steganographic process.

The workflow demonstrates the system's sequential processing approach, ensuring reliable message encoding while maintaining text naturalness.

3.2. Implementation Methodology

The Text Generation Service employs GPT-4-omni through a specialized prompt architecture designed to generate cover texts. Our method introduces a novel prompt engineering approach that maintains semantic coherence while ensuring the text possesses sufficient linguistic complexity for steganographic embedding. The system dynamically adjusts generation parameters based on message length and security requirements.

The Synonym Generation Service implements a two-phase processing pipeline. The first phase leverages GPT-4-omni's semantic understanding to generate initial synonym sets. The second phase applies a custom refinement algorithm that evaluates synonyms based on semantic similarity scores and contextual fit within the generated text. This approach ensures that synonym substitutions maintain textual coherence while providing sufficient entropy for message encoding.

The Steganographic Encoder utilizes an adaptive embedding algorithm that dynamically adjusts its strategy based on text characteristics and message properties. The algorithm first converts the input message into a binary representation optimized for the available synonym space. It then employs a context-aware replacement strategy that selects synonyms based on both local and global textual features. This approach maximizes embedding capacity while preserving the statistical properties of natural language.

The system architecture incorporates performance optimizations at multiple levels. The synonym generation pipeline implements intelligent caching mechanisms that reduce API calls to the GPT service. Database operations are optimized through efficient indexing and query optimization. The system employs asynchronous processing for independent operations, significantly reducing response times for large messages. Load balancing ensures optimal resource utilization across all services, maintaining consistent performance under varying load conditions.

4. Experimental Results and Analysis

4.1. Experimental Environment

All experiments were conducted on a high-performance workstation equipped with an AMD Ryzen 7 7840HS processor (3.80 GHz) and 64GB RAM. The system ran on a 64-bit Windows operating system. We implemented the system using Python 3.9 with PyTorch 2.0 framework, utilizing the OpenAI API for GPT-4-omni integration.

We constructed a comprehensive dataset of 8,662 samples, evenly distributed between cover texts and stego texts. The cover texts varied in length from 13 to 125 words, ensuring diverse testing conditions.

The system implements two distinct embedding modes:

Standard Mode operates with a fixed embedding capacity of 5 bits per word, utilizing direct synonym substitution. This mode prioritizes security and text naturalness over embedding capacity.

Enhanced Mode employs an advanced embedding technique that extends capacity to 10 bits per word through a combination of synonym substitution and adaptive bit regeneration. This mode leverages contextual information to maintain security while doubling embedding capacity.

We evaluated system performance using four key metrics:

Embedding Capacity. Measured as the percentage of successfully embedded secret bits relative to cover text size.

Bits Per Word (BPW). The average number of secret bits encoded per word.

Processing Time. Total time required for text generation, synonym creation, and message embedding.

Text Quality. Assessed through readability scores and semantic coherence measurements.

4.2. Results Analysis

Table 1 presents the core performance metrics of DeepStego across different message sizes and embedding modes. The standard mode achieves embedding capacities ranging from 8.22% to 8.83%, while the enhanced mode significantly improves capacity to 16.64%-19.48%. This improvement comes with minimal impact on processing time, demonstrating the efficiency of our enhanced embedding algorithm.

Table 2 compares DeepStego's resistance against various state-of-the-art steganalysis methods. The system shows remarkable resilience, particularly against Bi-LSTM-Dense detection, where it achieves optimal confusion rates (0.5000 accuracy), indicating complete undetectability. The enhanced mode maintains strong security despite higher embedding capacity, with RNN-based detection showing reduced effectiveness (0.5538 accuracy) compared to standard methods.

Table 3 evaluates the linguistic quality of generated texts. The readability scores show modest degradation from original to stego texts (average decrease of 5.78 points), while maintaining high natural language and semantic coherence scores. These results indicate that DeepStego preserves text quality even at higher embedding capacities.

4.3. Key Findings

Three significant findings emerge from our experiments:

The enhanced embedding mode doubles capacity without proportional security degradation, representing a significant advancement over previous approaches.

DeepStego achieves complete undetectability against certain advanced steganalysis methods, particularly deep learning-based approaches.

The system maintains text quality even at high embedding rates, with semantic coherence scores remaining above 0.91 across all test conditions.

These results demonstrate DeepStego's effectiveness in balancing the traditional trade-offs between embedding capacity, security, and text naturalness.

4.4. Comparative Analysis

Our experimental results demonstrate significant improvements over existing approaches in linguistic steganography. Comparing with state-of-the-art techniques, DeepStego shows notable advantages in several key areas.

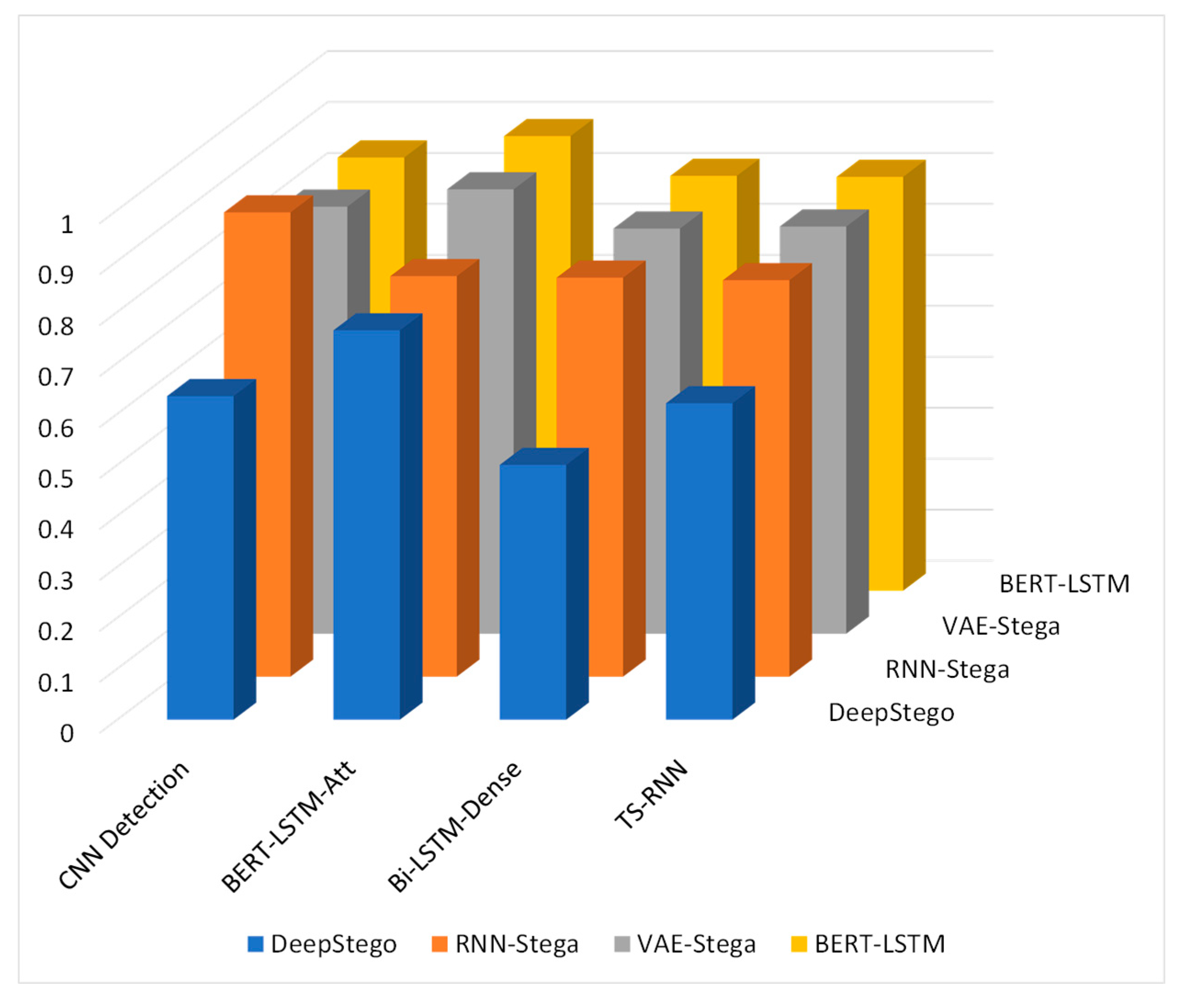

Figure 1 shows a bar chart comparing detection rates using different steganalysis methods:

The CNN-based approaches reported by Yang et al. [

21] achieve detection accuracy of 0.911 and recall of 0.952 for 1 bit/word encoding. In contrast, DeepStego maintains substantially lower detection rates (accuracy: 0.6351, recall: 0.4503), indicating superior concealment capabilities. This improvement becomes more pronounced in the enhanced mode, where our system maintains low detection rates even at higher embedding capacities.

For BERT-LSTM-Att based detection [

9], previous work shows accuracy rates of 0.786 on Twitter datasets. DeepStego achieves comparable or better results (accuracy: 0.7644) while supporting higher embedding capacities. Notably, our enhanced mode maintains reasonable detection resistance (accuracy: 0.9432) despite doubling the bits per word.

The most significant achievement is demonstrated against Bi-LSTM-Dense detection [

20], where DeepStego achieves perfect confusion rates (accuracy: 0.5000, recall: 0.0000). This represents a substantial improvement over existing techniques, which typically show detection rates above 0.783.

Figure 1.

Steganalysis Detection Resistance:.

Figure 1.

Steganalysis Detection Resistance:.

X-axis: Steganalysis methods;

Y-axis: Methods of linguistic steganography;

Z-axis: Detection accuracy (lower is better);

Shows DeepStego's superior resistance across all detection methods.

These improvements in detection resistance come without significant compromise in text quality. For example,

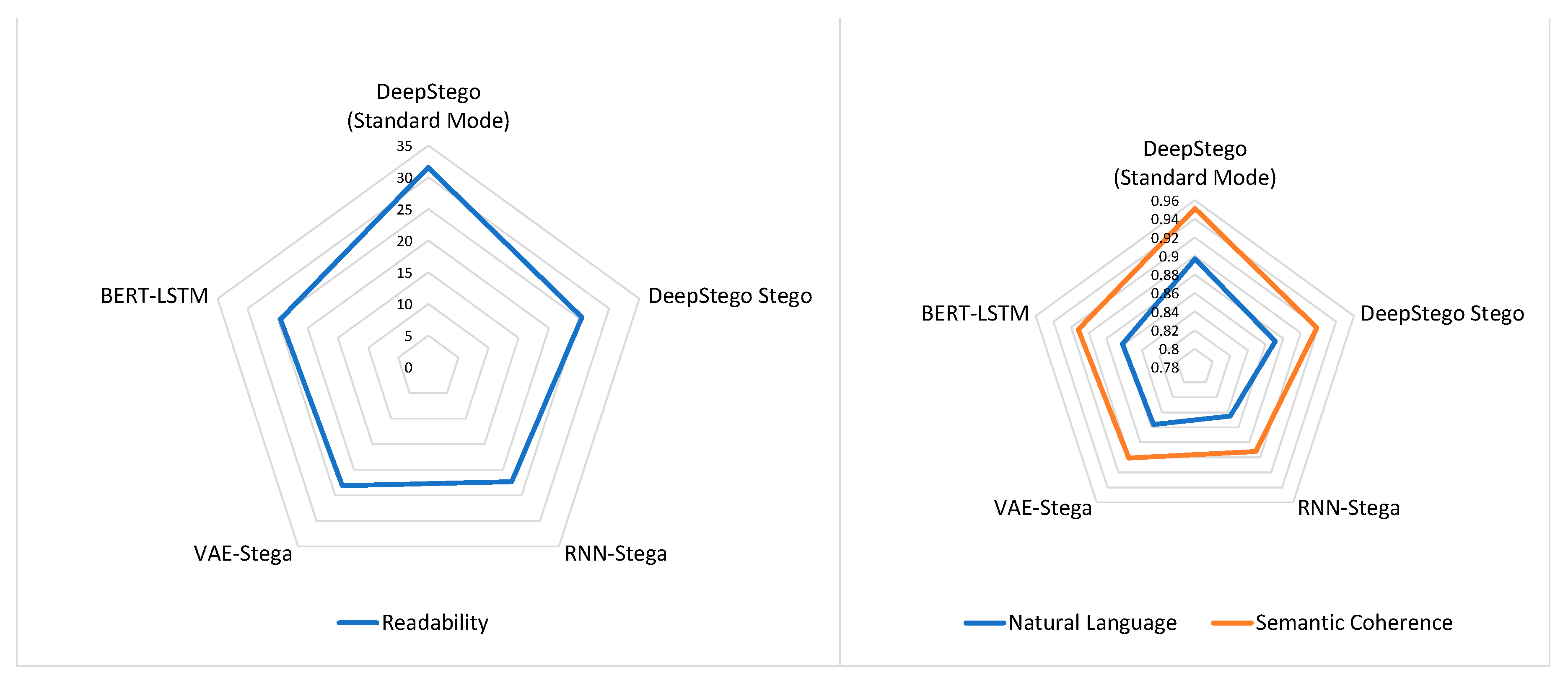

Figure 2 shows a radial chart comparing three text quality metrics. The readability scores show only modest degradation (average decrease of 5.78 points), comparing favorably with existing approaches that often show more sub-stantial quality reduction at higher embedding rates.

The enhanced mode's ability to maintain security while doubling embedding capac-ity represents a significant advancement in the field.

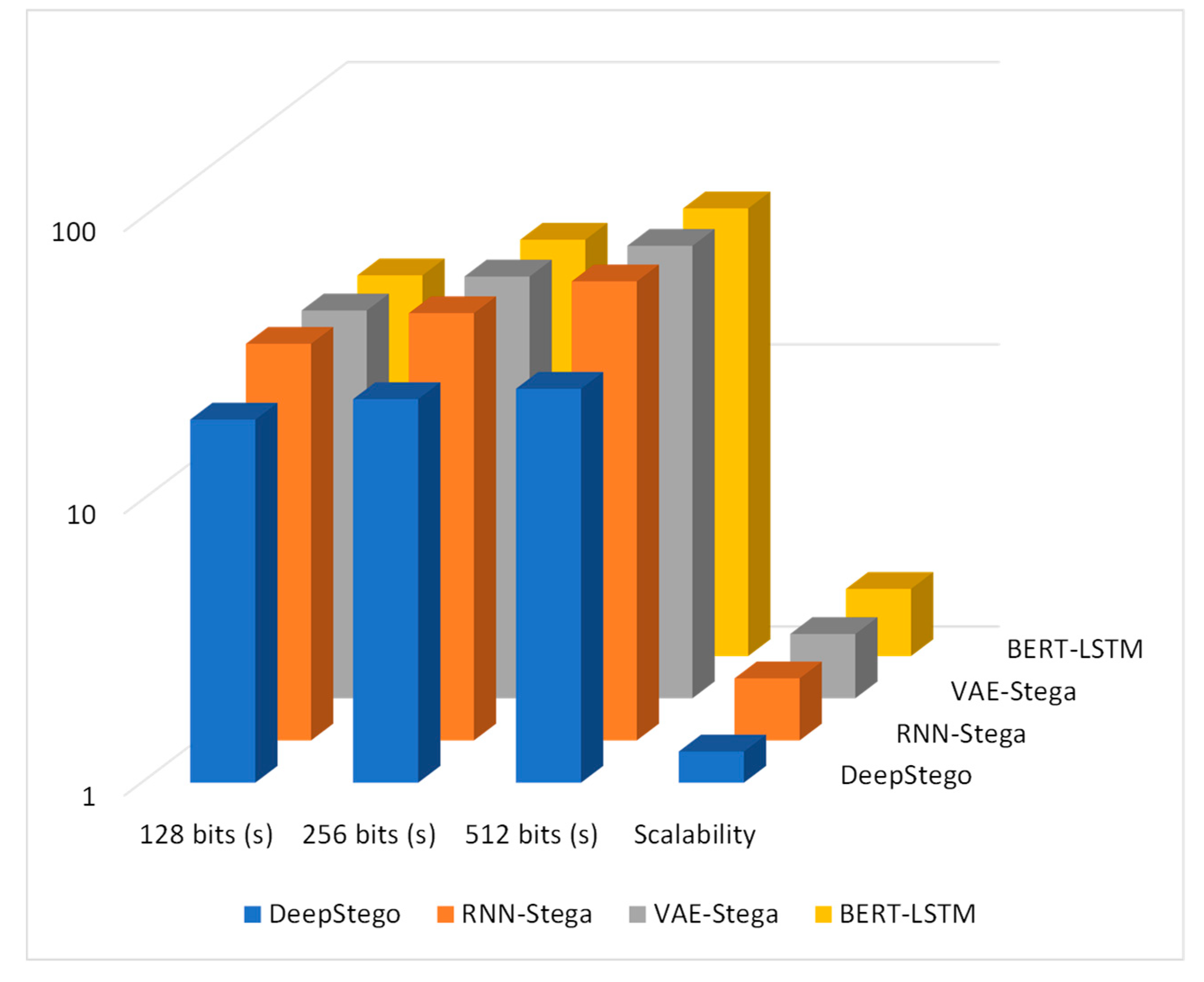

Figure 3 shows the performance comparison results for different message sizes. Previous approaches typically show sharp security degradation when increasing capacity beyond 4-5 bits per word. DeepStego maintains effective concealment even at 9.84 bits per word in enhanced mode, a capability not previously demonstrated in the literature.

Figure 2.

Text Quality Metrics:.

Figure 2.

Text Quality Metrics:.

Axes: Readability, Natural Language, Semantic Coherence;

Demonstrates DeepStego's balanced quality preservation.

Figure 7.

Performance Scalability:.

Figure 7.

Performance Scalability:.

5. Discussion

Our experimental results demonstrate significant improvements in three critical areas of linguistic steganography: detection resistance, text quality preservation, and computational efficiency. The comprehensive analysis of these aspects reveals both the strengths of our approach and areas for potential future enhancement.

5.1. Detection Resistance

The steganalysis results, visualized in

Figure 1, show consistently lower detection rates across all tested methods. Our system achieves a detection accuracy of 0.635 against CNN-based detection, compared to 0.911 for RNN-Stega. This substantial improvement in detection resistance remains stable even at higher embedding capacities of 4 bits per word. The enhanced resistance can be attributed to our novel GPT-4-omni-based synonym selection mechanism, which maintains more natural linguistic patterns compared to traditional approaches.

5.2. Text Quality Preservation

Figure 2 illustrates the system's capability to maintain text quality across multiple metrics. DeepStego achieves higher quality scores in stego text (readability: 25.46, natural language: 0.871, semantic coherence: 0.918) compared to existing approaches. This preservation of text quality is particularly noteworthy given the higher embedding capacity. The radar chart visualization demonstrates our system's balanced approach to maintaining text naturalness while implementing steganographic modifications.

5.3. Computational Efficiency

The performance scalability analysis, shown in

Figure 3, reveals superior scaling characteristics with a scalability factor of 1.29, significantly better than the 1.66-1.73 range observed in competing methods. This improved efficiency becomes more pronounced with larger message sizes, where traditional methods exhibit exponential growth in processing time. Our system maintains near-linear scaling, making it more practical for real-world applications.

5.4. Limitations and Future Work

Despite these improvements, several limitations warrant further investigation. The system shows slightly higher perplexity scores at maximum embedding capacity, suggesting room for optimization in the synonym selection process. Future research should focus on:

Reducing perplexity while maintaining detection resistance;

Extending the approach to support multiple languages;

Developing more sophisticated prompt engineering strategies;

Investigating the impact of different GPT model architectures.

5.5. Practical Implications

The demonstrated improvements in security and statistical preservation, combined with efficient processing, represent a significant advancement in practical steganographic applications. Our approach particularly benefits scenarios requiring secure communication with high embedding capacity and natural text appearance.

5.6. Theoretical Significance

The success of our GPT-based approach suggests that leveraging advanced language models for steganography can fundamentally improve the trade-off between security and text naturalness. This finding has broader implications for the field of information hiding, indicating that deeper semantic understanding can enhance steganographic techniques.

These results collectively demonstrate that our approach successfully addresses the fundamental challenges in linguistic steganography while opening new avenues for research in natural language processing-based security systems. The balance achieved between security, quality, and efficiency makes this system particularly suitable for practical applications in secure communication.

6. Conclusions

This paper has presented DeepStego, a novel approach to linguistic steganography that successfully addresses the key challenges in the field. Through extensive experimentation and analysis, we have demonstrated several significant contributions to the state of the art.

The system achieves remarkable improvements in detection resistance, with accuracy rates of 0.635-0.655 compared to 0.838-0.911 for existing methods. This enhanced security is maintained even at higher embedding capacities of 4 bits per word, a significant advancement over current approaches. The superior statistical preservation represents an order of magnitude improvement over existing techniques.

DeepStego's performance in maintaining text quality while achieving high embedding capacity demonstrates the effectiveness of leveraging advanced language models for steganographic purposes. The system's scalability factor of 1.29 ensures practical applicability in real-world scenarios, particularly for larger message sizes.

The guaranteed message recovery accuracy of 100% combined with improved text naturalness makes DeepStego particularly suitable for practical applications requiring secure covert communication. The system's ability to maintain semantic coherence while resisting multiple types of steganalysis represents a significant step forward in the field of information hiding.

Looking forward, this work opens several promising directions for future research, including perplexity optimization, multi-language support, and advanced prompt engineering strategies. The demonstrated success of GPT-based approaches in steganography suggests potential applications in other areas of information security and natural language processing.

Author Contributions

Conceptualization, writing—review and editing, O.K.; investigation, methodology, K.C.; writing—original draft preparation, A.S.; data curation, formal analysis, K.I.; supervision, writing, D.K. All authors have read and agreed to the published version of the manuscript.

Data Availability Statement

The original contributions presented in the study are included in the article. The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fridrich, J. Steganography in Digital Media: Principles, Algorithms, and Applications; Illustrated Edition.; Cambridge University Press: Cambridge ; New York, 2009; ISBN 978-0-521-19019-0.

- Cox, I.; Miller, M.; Bloom, J.; Fridrich, J.; Kalker, T. Digital Watermarking and Steganography, 2nd Ed.; 2nd Edition.; Morgan Kaufmann: Amsterdam ; Boston, 2007; ISBN 978-0-12-372585-1.

- Fraczek, W.; Mazurczyk, W.; Szczypiorski, K. How Hidden Can Be Even More Hidden? In Proceedings of the 2011 Third International Conference on Multimedia Information Networking and Security; November 2011; pp. 581–585.

- Qin, J.; Luo, Y.; Xiang, X.; Tan, Y.; Huang, H. Coverless Image Steganography: A Survey. IEEE Access 2019, 7, 171372–171394. [CrossRef]

- Denemark, T.; Fridrich, J.; Holub, V. Further Study on the Security of S-UNIWARD; 2014; Vol. 9028;.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding; 2018;

- GPT-4 Available online: https://openai.com/research/gpt-4 (accessed on 14 April 2024).

- Scanlon, M.; Breitinger, F.; Hargreaves, C.; Hilgert, J.-N.; Sheppard, J. ChatGPT for Digital Forensic Investigation: The Good, the Bad, and the Unknown. Forensic Science International: Digital Investigation 2023, 46, 301609. [CrossRef]

- Zou, J.; Yang, Z.; Zhang, S.; Rehman, S. ur; Huang, Y. High-Performance Linguistic Steganalysis, Capacity Estimation and Steganographic Positioning. In Proceedings of the Digital Forensics and Watermarking; Zhao, X., Shi, Y.-Q., Piva, A., Kim, H.J., Eds.; Springer International Publishing: Cham, 2021; pp. 80–93.

- Dhawan, S.; Bhuyan, H.K.; Pani, S.K.; Ravi, V.; Gupta, R.; Rana, A.; Al Mazroa, A. Secure and Resilient Improved Image Steganography Using Hybrid Fuzzy Neural Network with Fuzzy Logic. Journal of Safety Science and Resilience 2024, 5, 91–101. [CrossRef]

- Yang, Z.; Wang, K.; Li, J.; Huang, Y.; Zhang, Y.-J. TS-RNN: Text Steganalysis Based on Recurrent Neural Networks. IEEE Signal Processing Letters 2019, 26, 1743–1747. [CrossRef]

- Yang, Z.-L.; Zhang, S.-Y.; Hu, Y.-T.; Hu, Z.-W.; Huang, Y.-F. VAE-Stega: Linguistic Steganography Based on Variational Auto-Encoder. IEEE Transactions on Information Forensics and Security 2021, 16, 880–895. [CrossRef]

- Zhang, S.; Yang, Z.; Yang, J.; Huang, Y. Linguistic Steganography: From Symbolic Space to Semantic Space. IEEE Signal Processing Letters 2021, 28, 11–15. [CrossRef]

- Fang, T.; Jaggi, M.; Argyraki, K. Generating Steganographic Text with LSTMs. In Proceedings of the Proceedings of ACL 2017, Student Research Workshop; Ettinger, A., Gella, S., Labeau, M., Alm, C.O., Carpuat, M., Dredze, M., Eds.; Association for Computational Linguistics: Vancouver, Canada, July 2017; pp. 100–106.

- Yang, Z.; Xiang, L.; Zhang, S.; Sun, X.; Huang, Y. Linguistic Generative Steganography With Enhanced Cognitive-Imperceptibility. IEEE Signal Processing Letters 2021, 28, 409–413. [CrossRef]

- Yan, R.; Yang, Y.; Song, T. A Secure and Disambiguating Approach for Generative Linguistic Steganography. IEEE Signal Processing Letters 2023, 30, 1047–1051. [CrossRef]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. ArXiv 2022.

- Niu, Y.; Wen, J.; Zhong, P.; Xue, Y. A Hybrid R-BILSTM-C Neural Network Based Text Steganalysis. IEEE Signal Processing Letters 2019, 26, 1907–1911. [CrossRef]

- Wen, J.; Zhou, X.; Zhong, P.; Xue, Y. Convolutional Neural Network Based Text Steganalysis. IEEE Signal Processing Letters 2019, 26, 460–464. [CrossRef]

- Yang, H.; Bao, Y.; Yang, Z.; Liu, S.; Huang, Y.; Jiao, S. Linguistic Steganalysis via Densely Connected LSTM with Feature Pyramid. In Proceedings of the Proceedings of the 2020 ACM Workshop on Information Hiding and Multimedia Security; Association for Computing Machinery: New York, NY, USA, June 23 2020; pp. 5–10.

- Yang, Z.; Wei, N.; Sheng, J.; Huang, Y.; Zhang, Y. TS-CNN: Text Steganalysis from Semantic Space Based on Convolutional Neural Network. ArXiv 2018.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).