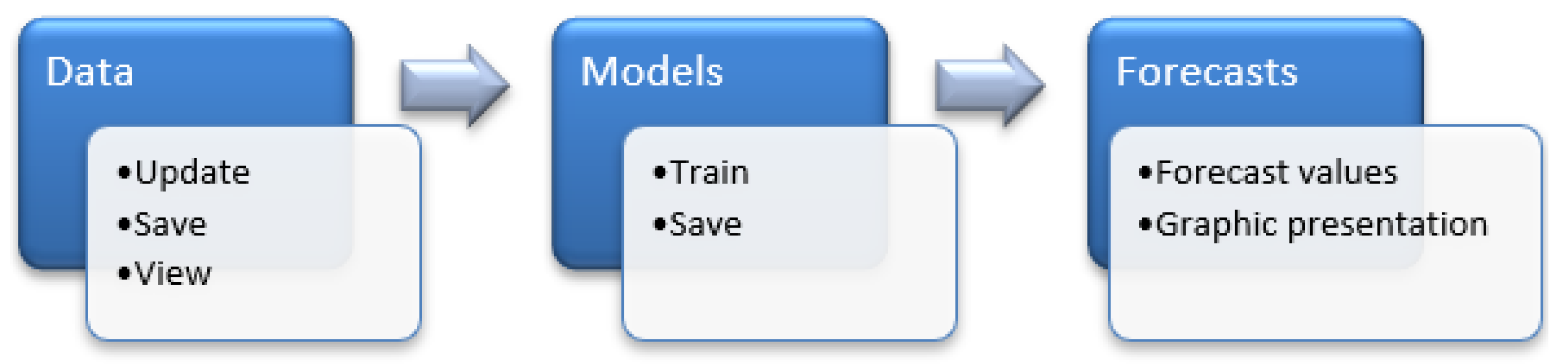

The models were developed in Python, a simple and widely used programming language for developing software that uses machine learning libraries. Additionally, a vast amount of documentation and code examples is available for reference.

3.1. Datasets

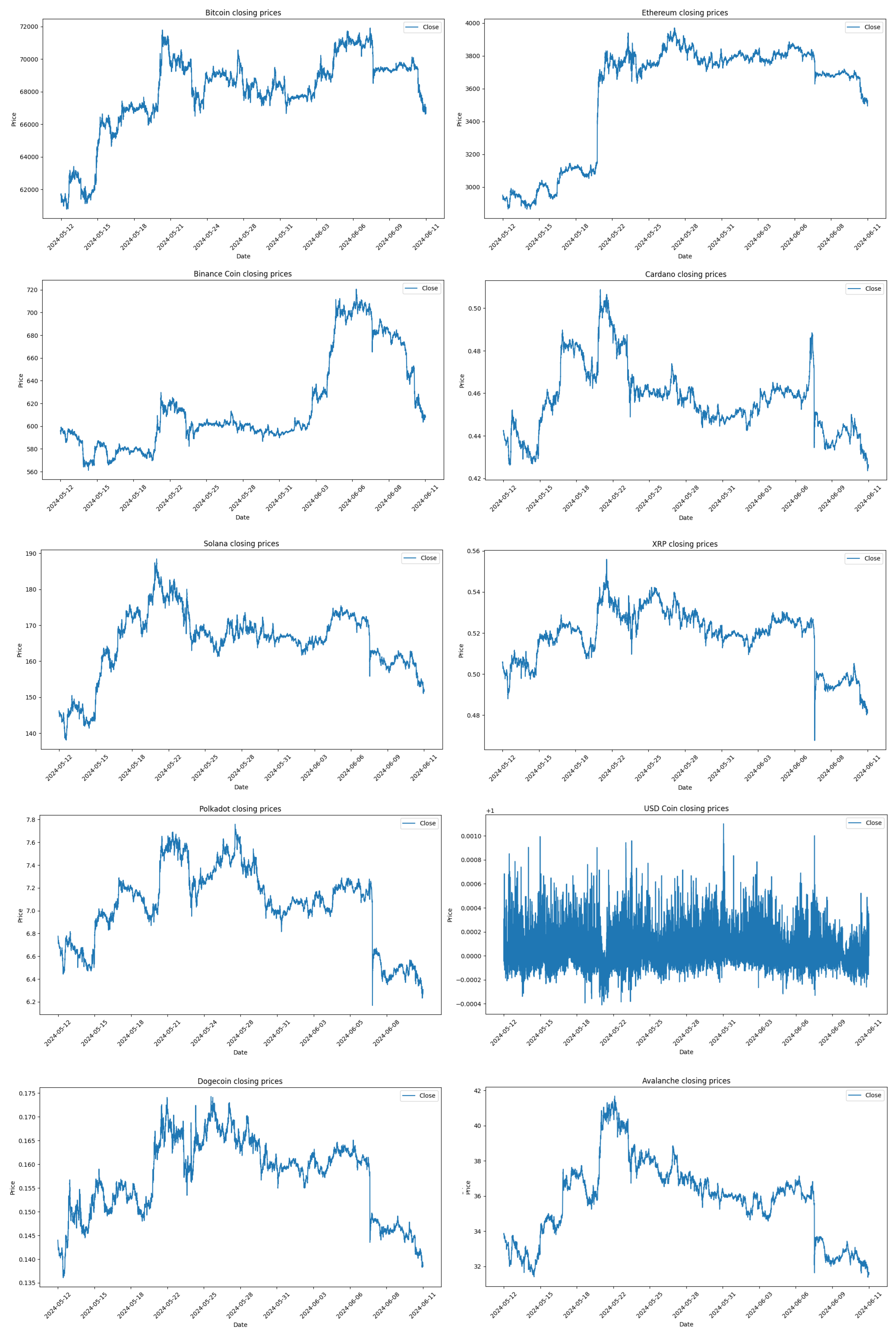

For training and testing the models, datasets were collected containing the minute-level price values of Bitcoin, Ethereum, Binance Coin, Cardano, Solana, XRP, Polkadot, USD Coin, Dogecoin, and Avalanche. The data was obtained using the Python library YFinance, which allows the extraction of prices of cryptocurrencies and other financial assets available on finance.yahoo.com.

Minute-level price data was collected over a 30-day period, from 12/05/2024 to 11/06/2024, to train and test the models.

The records include the attributes Datetime, Open, High, Low, Close, Adj. Close, and Volume, corresponding to the date and time of the price, opening value of the period, highest value traded during the period, lowest value traded during the period, closing value of the period, adjusted closing value of the period, and volume traded during the period. These fields, or attributes, are common across all financial asset quotations, from cryptocurrencies to stock market or Forex prices.

As can be seen in Table 2 and Table 3, although the same function with the same parameters is used to retrieve the data, the number of records is not the same for all cryptocurrencies, varying between 31,670 for Polkadot and 38,216 for Ethereum.

All values are in USD, and the prices vary significantly depending on the cryptocurrency. The average value for Dogecoin is 0.16 USD, the cryptocurrency with the lowest price, while the average value for Bitcoin is 67,948.15 USD, the highest-priced cryptocurrency. In terms of trading volume per minute, Polkadot has the lowest average value, at 76,259.87 USD, while Bitcoin has the highest, at 8,879,468.76 USD.

Figure 7 shows the closing price of all cryptocurrencies over the data collection period.

3.2. Data Preparation

For the datasets obtained via the YFinance API, little work is required at this stage, as the data is retrieved in the same format for all cryptocurrencies and contains no null or empty values. Nevertheless, a check is performed to ensure that no null values are passed to model training and testing.

After retrieval, the records are stored in a CSV file so that they can be reused throughout modeling and testing. This ensures that all models are trained and evaluated on exactly the same data, so that differences in results are not caused by differences in the input data.

The Datetime attribute is used as the index for the records, and its sorting is ensured so that the records are always sequential based on date and time.

The Adj. Close column is removed from all cryptocurrency datasets, as it contains the same values as the Close attribute and is therefore redundant.
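The preparation steps described above can be sketched with pandas as follows. This is a minimal illustration, not the authors' code: the function name `prepare_prices` and the CSV file name are hypothetical, the column names are assumed to match the yfinance output (`Datetime`, `Open`, `High`, `Low`, `Close`, `Adj Close`, `Volume`), and rows with null values are assumed to be dropped rather than imputed.

```python
import pandas as pd

def prepare_prices(df: pd.DataFrame) -> pd.DataFrame:
    """Index by Datetime, sort chronologically, drop Adj Close and null rows."""
    df = df.copy()
    df["Datetime"] = pd.to_datetime(df["Datetime"])
    # Use the timestamp as the index and guarantee chronological order
    df = df.set_index("Datetime").sort_index()
    # Adj Close duplicates Close for cryptocurrencies, so it is redundant
    df = df.drop(columns=["Adj Close"], errors="ignore")
    # Safety check (assumption: drop, not impute): no nulls reach the models
    return df.dropna()

# Usage (hypothetical file name): the same CSV is reloaded for every model
#   prepared.to_csv("btc_usd_1m.csv")
#   prepared = pd.read_csv("btc_usd_1m.csv", index_col="Datetime", parse_dates=True)
```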

With the exception of the SARIMA model, which only uses the Close attribute, the data is scaled using the MinMaxScaler class from the scikit-learn (sklearn) library so that all attribute values are on the same scale for model training. The scaling is then reversed for model testing and validation.
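A minimal sketch of the scale-then-reverse cycle with scikit-learn's `MinMaxScaler`. It assumes the scaler is fitted on the training rows only and then applied to the test rows, which the text does not state explicitly; `scale_train_test` is a hypothetical helper and the random matrices stand in for OHLCV data.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

def scale_train_test(train, test):
    """Fit MinMaxScaler on the training rows only and apply it to both sets."""
    scaler = MinMaxScaler()  # default feature_range=(0, 1)
    train_scaled = scaler.fit_transform(train)
    test_scaled = scaler.transform(test)
    return scaler, train_scaled, test_scaled

rng = np.random.default_rng(0)
train = rng.uniform(60_000, 70_000, size=(100, 5))  # toy OHLCV-like matrix
test = rng.uniform(60_000, 70_000, size=(20, 5))
scaler, train_scaled, test_scaled = scale_train_test(train, test)

# Reverse the scaling before computing test/validation metrics in USD
restored = scaler.inverse_transform(test_scaled)
```

When a model predicts only the closing price, a separate scaler fitted on the Close column alone makes inverting the predictions straightforward; this is a design suggestion, not something the text describes.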

The tests and comparison of the models were based on a 60-minute forecast horizon, and the data was divided as follows:

The last 60 records are used for validation.

80% of the remaining records are used for training.

20% of the remaining records are used for testing.
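The split above can be sketched as follows, assuming a chronological split without shuffling (the usual practice for time series, though the text does not state it). `chronological_split` is a hypothetical helper name.

```python
import numpy as np

def chronological_split(data, val_size=60, train_frac=0.8):
    """Hold out the last `val_size` rows, then split the rest 80/20 in time order."""
    validation = data[-val_size:]
    remaining = data[:-val_size]
    cut = int(len(remaining) * train_frac)
    return remaining[:cut], remaining[cut:], validation

records = np.arange(1_060)  # stand-in for 1,060 minute-level records
train, test, validation = chronological_split(records)
# len(train) == 800, len(test) == 200, len(validation) == 60
```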

3.4. Models

First, we compare the performance of the eight regression algorithms using the Bitcoin records for 60-minute forecasts. Bitcoin was chosen because it is the most relevant and most commonly used cryptocurrency in the analyzed studies. The 60-minute horizon was selected because it is the shortest horizon under analysis and is therefore expected to yield the best performance.

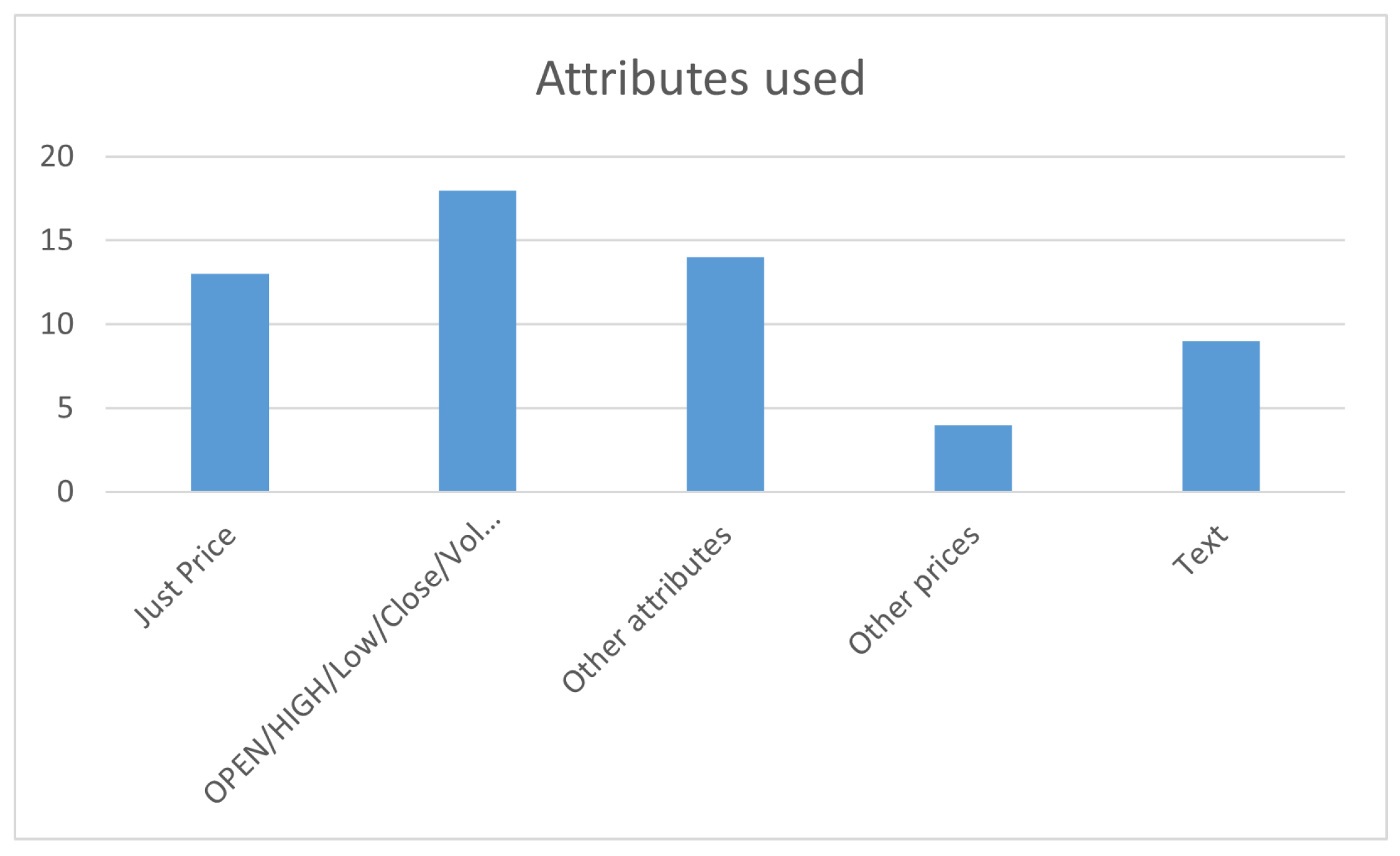

The comparative tests used two input configurations: all attributes (Open, High, Low, Close, and Volume) and the Close attribute alone. The SARIMA model was trained and tested only with the Close attribute because of particularities that distinguish it from the other models, which are explained below.

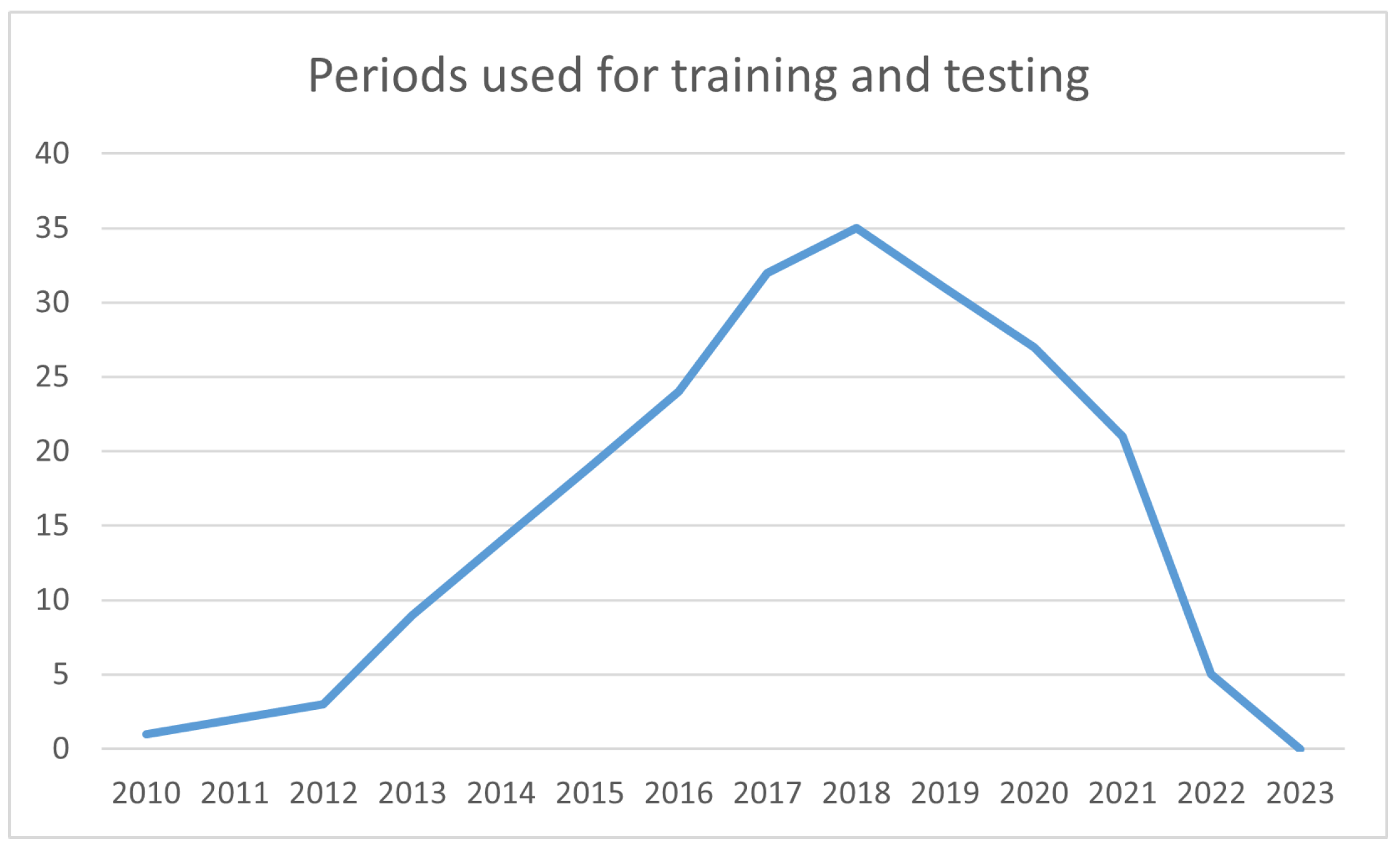

In general, the models can be used with data from different time intervals. The records are available through the YFinance API, which allows downloading records by the minute, hour, or day. For minute-level data, the last 30 days of data can be retrieved. For hourly intervals, data from the last 365 days can be retrieved, and for daily records, there are no restrictions.

The decision to use minute-level data allows for a much larger amount of records for model training. Some models, such as neural networks, require a large amount of data to achieve better performance. In 30 days, there can be up to 43,200 records, while in one year of hourly data, there can be up to 8,760, and in five years of daily records, there could only be up to 1,825 records.
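The upper bounds quoted above follow from the fact that cryptocurrencies trade around the clock, so every minute, hour, or day can yield one record. A small illustration of the arithmetic; it ignores leap days and missing bars, so it gives upper bounds only.

```python
MINUTES_PER_DAY = 24 * 60  # markets never close, so every minute can have a bar

max_records = {
    "1-minute bars, 30 days": 30 * MINUTES_PER_DAY,
    "1-hour bars, 1 year": 365 * 24,
    "daily bars, 5 years": 5 * 365,
}
for label, n in max_records.items():
    print(f"{label}: {n:,}")
```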

Another relevant aspect of minute-step records is that the variation in values between records is less significant, which also helps to make predictions more accurate.

Except for the SARIMA model, which is trained directly on the minute-by-minute values, all models use a function that builds 60-minute sequences, also known as sliding windows. The window length is adjusted to the forecast horizon; for the 60-minute horizon, the last 60 minutes are used to predict the next 60 minutes.
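The windowing step can be sketched with NumPy. `make_windows` is a hypothetical helper; it assumes all feature columns are fed as inputs, that the forecast target is a single column (for example Close) selected by `target_col`, and that consecutive windows overlap with a stride of one minute.

```python
import numpy as np

def make_windows(data, target_col, lookback=60, horizon=60):
    """Build (X, y) pairs: `lookback` past rows -> next `horizon` target values."""
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]  # treat a single series as one feature column
    n_samples = len(data) - lookback - horizon + 1
    if n_samples <= 0:
        raise ValueError("series too short for the requested windows")
    X = np.stack([data[i:i + lookback] for i in range(n_samples)])
    y = np.stack([data[i + lookback:i + lookback + horizon, target_col]
                  for i in range(n_samples)])
    return X, y  # X: (n_samples, lookback, n_features), y: (n_samples, horizon)
```

For a 240-minute horizon, for instance, one would pass `horizon=240` (and a matching `lookback`), which is how the same function can be adjusted to each forecast horizon.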

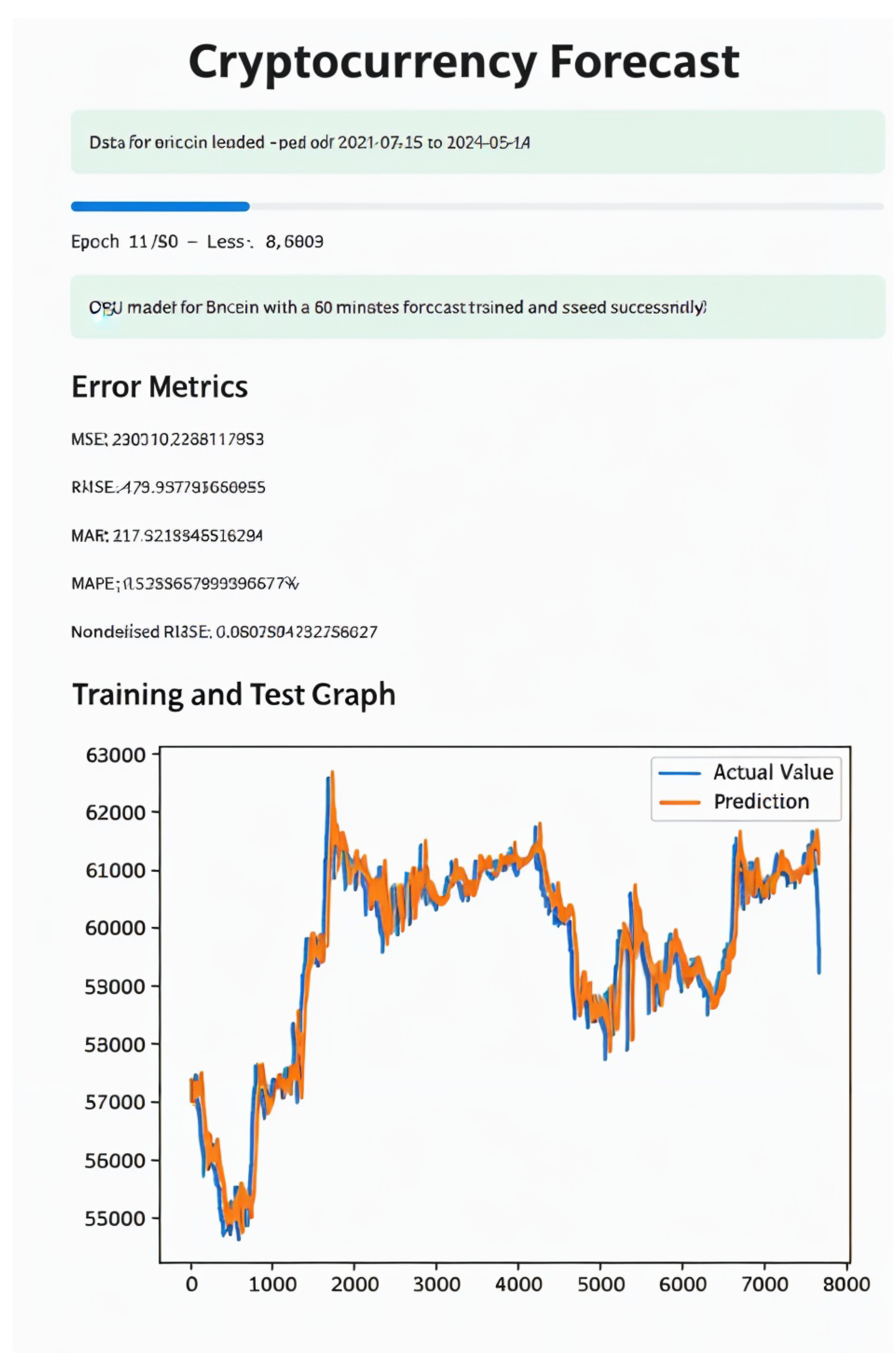

Model performance is measured with the previously mentioned metrics: MSE, RMSE, MAE, and MAPE. In addition, the nRMSE metric is introduced; it is the RMSE computed on normalized values, which allows performance to be compared across cryptocurrencies whose prices differ greatly in magnitude.
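A compact sketch of the five metrics. The text does not specify which range is used for normalization, so this assumes min-max normalization with the range of the actual values; under that assumption nRMSE equals RMSE divided by (max - min), because normalizing both series with the same range scales every error by the same factor. `forecast_metrics` is a hypothetical helper.

```python
import numpy as np

def forecast_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, MAPE (%) and nRMSE for one forecast."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = np.mean(err ** 2)
    rmse = np.sqrt(mse)
    mae = np.mean(np.abs(err))
    mape = np.mean(np.abs(err / y_true)) * 100  # percent; assumes no zero prices
    # Assumed nRMSE: RMSE after min-max normalizing with the actual values' range
    nrmse = rmse / (y_true.max() - y_true.min())
    return {"MSE": mse, "RMSE": rmse, "MAE": mae, "MAPE": mape, "nRMSE": nrmse}
```

If the normalization instead reuses the range of the training scaler, the denominator changes accordingly, but the comparison across cryptocurrencies works the same way.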

From the Keras library of the TensorFlow API, the Long Short-Term Memory (LSTM) [14] and Gated Recurrent Unit (GRU) [15] models were used. Both were tested with 50 neurons, two layers, and the Adam optimizer, with the loss calculated as the MSE. These models are trained for up to 50 epochs, and training can stop earlier through the EarlyStopping callback if the loss does not improve for 5 consecutive epochs. In terms of Python code, the GRU model is very similar to the LSTM; however, it is a simpler and faster model, and its smaller number of parameters can make it less prone to overfitting.
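A minimal Keras sketch of this configuration, under stated assumptions: "50 neurons, two layers" is read as two stacked recurrent layers of 50 units each, a `Dense` output layer produces all 60 forecast minutes at once, and EarlyStopping monitors the validation loss. `build_recurrent_model` and the `validation_split` value are hypothetical.

```python
from tensorflow import keras

def build_recurrent_model(cell="gru", lookback=60, n_features=5, horizon=60, units=50):
    """Two stacked recurrent layers of `units` cells, Adam optimizer, MSE loss."""
    recurrent = keras.layers.GRU if cell == "gru" else keras.layers.LSTM
    model = keras.Sequential([
        keras.Input(shape=(lookback, n_features)),
        recurrent(units, return_sequences=True),  # pass the full sequence on
        recurrent(units),                         # keep only the last state
        keras.layers.Dense(horizon),              # one output per forecast minute
    ])
    model.compile(optimizer="adam", loss="mse")
    return model

# Stop when the monitored loss has not improved for 5 consecutive epochs
early_stopping = keras.callbacks.EarlyStopping(monitor="val_loss", patience=5)

# Typical training call (X_train, y_train built with sliding windows):
# model = build_recurrent_model("gru")
# model.fit(X_train, y_train, epochs=50, validation_split=0.1,
#           callbacks=[early_stopping])
```

Switching between LSTM and GRU only changes the layer class, which illustrates why the two models are so similar in code.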

The Auto-Regressive Integrated Moving Average (ARIMA) [16] model is obtained through the Statsmodels library. The model actually used is SARIMAX, which extends ARIMA with seasonality and exogenous variables. In this case, there are no exogenous variables in the data, so the model is referred to as SARIMA rather than ARIMA or SARIMAX. This model is very resource-intensive, and on the machine where it was tested, it was not possible to train it on the entire dataset. Therefore, subsets of the records were used, and the best results were achieved with only 360 records, that is, the prices of the last 360 minutes. Even so, the model takes a long time to train, which makes it impractical for deployment in an application: it must be retrained with new data before each prediction, unlike the other models, which can be saved and reused to make predictions on new data without retraining.

Linear Regression [17] is the simplest regression model tested, yet its performance was noteworthy. The previous models were observed to broadly follow price trends without predicting the major peaks in price variation, and Linear Regression forecasts are even closer to a straight line when plotted. Its main advantage is that it is very simple and fast to train.
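A sketch of how a plain regressor can map a 60-minute window to a 60-minute forecast, using scikit-learn's `LinearRegression`, which fits all 60 output columns at once. The random-walk series is toy data, and using only closing prices here is a simplification of the "Close only" configuration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

lookback, horizon = 60, 60
rng = np.random.default_rng(42)
close = 100 + np.cumsum(rng.normal(size=1_000))  # toy minute-level closes

# Each row: 60 past closes (features) followed by the next 60 closes (targets)
windows = np.lib.stride_tricks.sliding_window_view(close, lookback + horizon)
X, y = windows[:, :lookback], windows[:, lookback:]

model = LinearRegression().fit(X, y)  # multi-output: one coefficient set per minute
forecast = model.predict(close[-lookback:].reshape(1, -1))  # next 60 minutes
```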

The Random Forest [18] model is less commonly used for time series forecasting, and its training is computationally heavy on large amounts of data. To limit the training cost, a model with 100 decision trees was used. Its performance was slightly worse than that of the previous models.
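The same windowing can feed scikit-learn's `RandomForestRegressor`, which supports multi-output targets natively. The 100 trees follow the text; `n_jobs=-1` (use all CPU cores) and `random_state` are assumptions added to reduce training time and make the sketch reproducible, and the series is toy data.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

lookback, horizon = 60, 60
rng = np.random.default_rng(0)
close = 100 + np.cumsum(rng.normal(size=500))  # toy minute-level closes
windows = np.lib.stride_tricks.sliding_window_view(close, lookback + horizon)
X, y = windows[:, :lookback], windows[:, lookback:]

# 100 trees as in the text; all 60 forecast minutes are predicted jointly
model = RandomForestRegressor(n_estimators=100, n_jobs=-1, random_state=0)
model.fit(X, y)
forecast = model.predict(close[-lookback:].reshape(1, -1))
```

Because each prediction is an average of training targets, a random forest cannot extrapolate beyond the price range seen in training, which may contribute to its weaker results on trending prices.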

The Support Vector Machine (SVM) [19] model, in its regression variant (Support Vector Regressor, SVR), was tested with the kernel parameter set to RBF. This model is also heavy to train on large datasets, but it performs well, especially when trained with all attributes.
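scikit-learn's `SVR` predicts a single target, so a 60-minute forecast needs one SVR per forecast minute; the sketch below assumes this is done with `MultiOutputRegressor`, which the text does not specify. For brevity the scaler is fitted on the whole toy series, whereas in practice it would be fitted on the training portion only.

```python
import numpy as np
from sklearn.multioutput import MultiOutputRegressor
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVR

lookback, horizon = 60, 60
rng = np.random.default_rng(1)
close = 100 + np.cumsum(rng.normal(size=500))  # toy minute-level closes

# The RBF kernel is distance-based, so prices are scaled to [0, 1] first
scaler = MinMaxScaler()
scaled = scaler.fit_transform(close.reshape(-1, 1)).ravel()
windows = np.lib.stride_tricks.sliding_window_view(scaled, lookback + horizon)
X, y = windows[:, :lookback], windows[:, lookback:]

# One SVR(kernel="rbf") is fitted per forecast minute
model = MultiOutputRegressor(SVR(kernel="rbf"))
model.fit(X, y)
pred_scaled = model.predict(scaled[-lookback:].reshape(1, -1))
forecast = scaler.inverse_transform(pred_scaled.reshape(-1, 1)).ravel()  # back to price units
```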

The XGBoost [20] model is quite fast to train; however, its validation performance fell well short of the previous models, especially when trained with all attributes. It was configured with 100 decision trees (estimators) and a learning rate of 0.1.

Lastly, LightGBM [21], a gradient-boosted decision tree framework similar to XGBoost, trains faster than XGBoost. With the default configuration values, its performance, although not as good as that of the neural networks, is better than that of XGBoost when all predictor attributes are used, but worse when only the closing price is used.

3.4.1. Analysis of Model Performance

Table 4 provides a performance comparison of the eight different machine learning models developed.

The GRU model with all attributes outperformed all other models across all metrics, achieving the lowest MSE (5,954.89), RMSE (77.17), MAE (60.20), and MAPE (0.09%). Its normalized RMSE (nRMSE) was also the lowest (0.19), highlighting its robustness in handling Bitcoin’s price fluctuations. The GRU’s performance declined slightly when only the Close attribute was used, with an RMSE of 96.47 and a MAPE of 0.11%, which indicates that the model benefits from access to a wider range of input features.

The LSTM model also performed competitively. With all attributes, it achieved an MSE of 8,163.73 and a MAPE of 0.11%. Unlike the GRU, its errors were slightly lower when only the Close attribute was used, with an MSE of 7,237.85 and a MAPE of 0.10%. The LSTM’s higher computational complexity may explain its lower performance compared to the GRU on this dataset.

The SARIMA model, tested only with the Close attribute, showed significantly worse results than the GRU and LSTM. With an RMSE of 139.56 and a MAPE of 0.16%, SARIMA struggled with the volatile nature of Bitcoin prices. This may be due to its reliance on linear assumptions and its inability to capture complex patterns in the data, unlike the neural network models.

Surprisingly, Linear Regression performed relatively well considering its simplicity. With all attributes, it achieved an RMSE of 83.54 and a MAPE of 0.10%, making it a lightweight yet effective option for basic price forecasting. However, its inability to predict extreme price peaks and valleys limits its applicability in highly volatile markets such as cryptocurrencies.

The ensemble and decision tree-based models performed worse than both the GRU and LSTM, especially when trained with all attributes. For instance, XGBoost had an RMSE of 2,050.87 and a MAPE of 1.57%, highlighting its difficulty in adapting to the intricate time-series patterns of cryptocurrency data. LightGBM, while faster to train than XGBoost, also had higher errors than the neural network models.

The superiority of the GRU model, as demonstrated by its consistently lower error metrics, can be attributed to its ability to model sequential data effectively with fewer computational resources than the LSTM, without compromising accuracy. The GRU’s simpler architecture allows faster training while retaining the ability to capture long-term dependencies, which makes it particularly useful for real-time applications such as cryptocurrency forecasting. In contrast, SARIMA and the tree-based ensemble models, while still useful for certain financial forecasting tasks, struggled to adapt to the rapidly fluctuating nature of cryptocurrency prices. This reinforces the need for models that can accommodate non-linear and non-stationary behavior, which is intrinsic to cryptocurrencies.

In conclusion, the GRU model is not only the most accurate model tested but also the most practical for deployment in real-time forecasting systems, where computational efficiency is critical. Its performance highlights the potential of deep learning models to provide accurate short-term predictions in highly volatile markets such as cryptocurrencies.

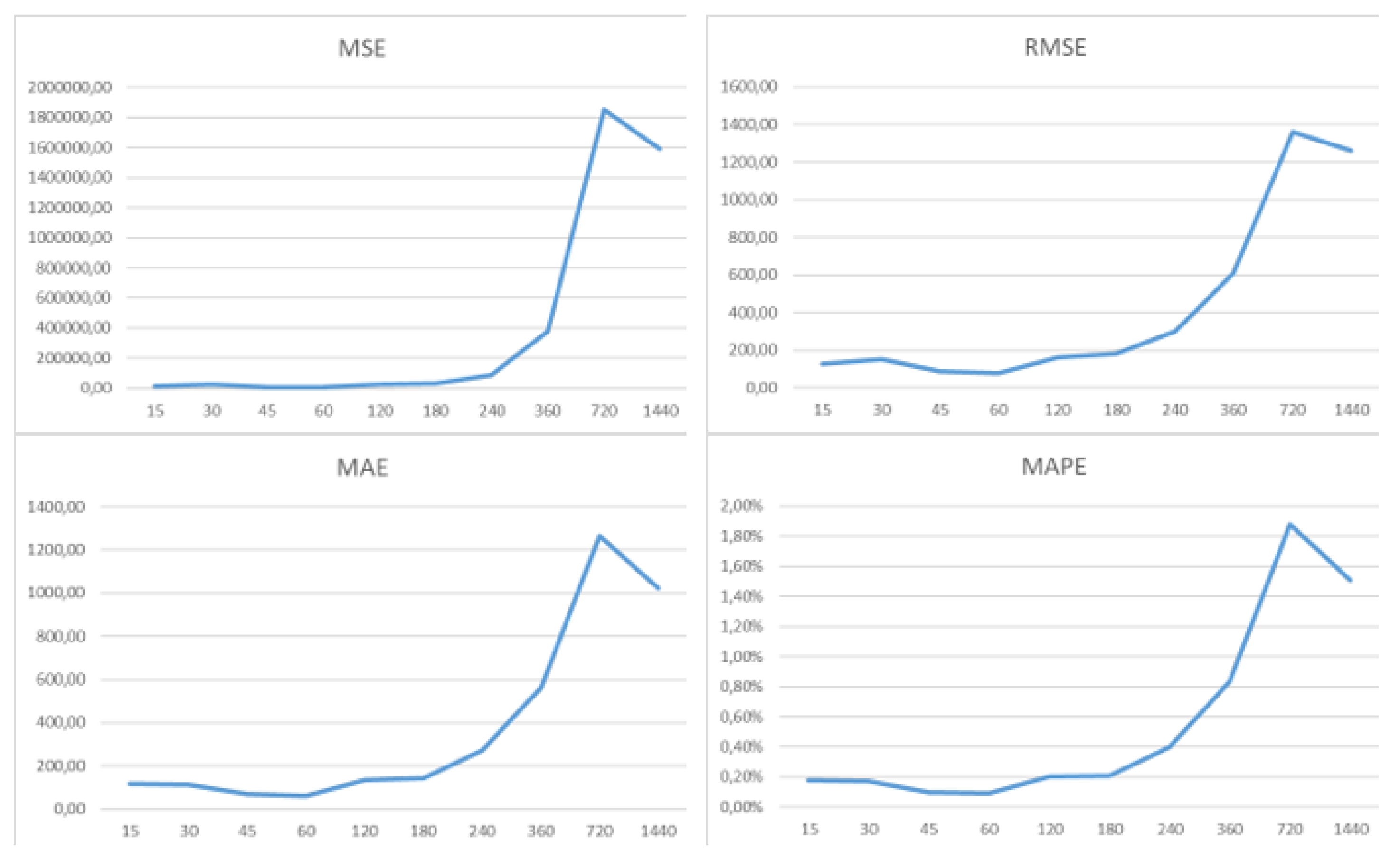

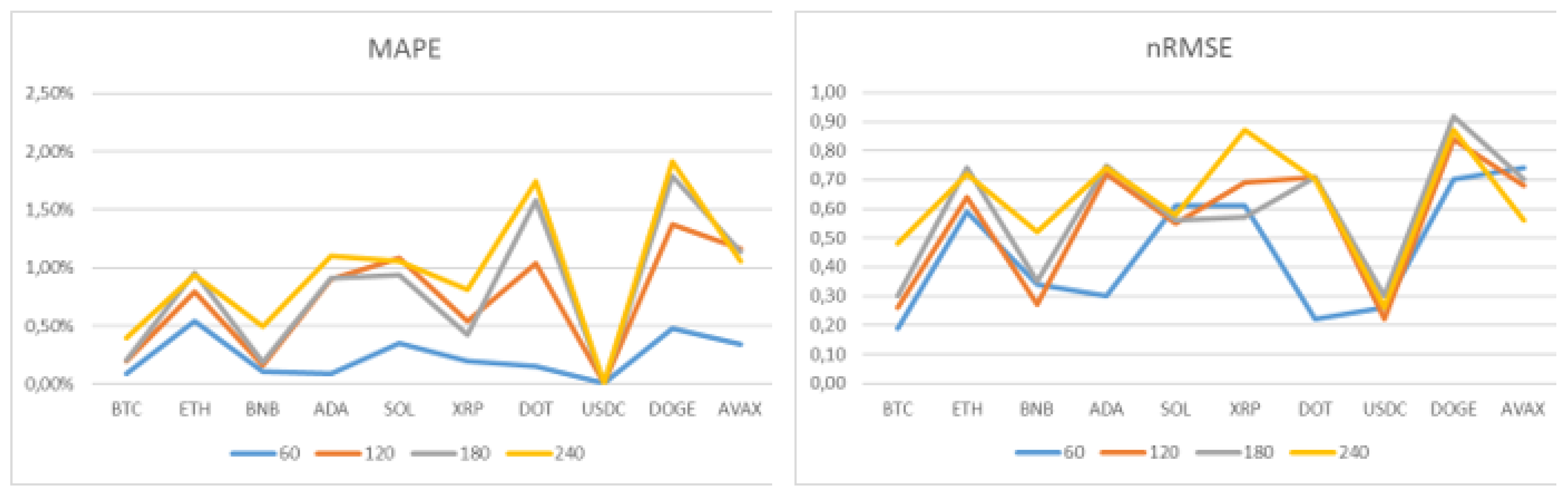

3.4.2. Time Horizons

Performance decreases significantly as the forecast horizon increases when using high-frequency data. This was expected, as forecasting so many steps ahead is much more difficult, especially because the model is unable to predict major drops or peaks in value or to anticipate changes in trend direction. In other words, if a cryptocurrency is in an upward trend, the model will keep predicting in the same direction, and if the trend reverses, the model will not anticipate it, because none of the predictor attributes indicate that such a change might occur.

To study the performance of the GRU network across different forecast horizons, the GRU was configured to run for up to 50 training epochs; however, because EarlyStopping halts training once the loss has not improved for 5 consecutive epochs, training generally did not exceed 20 epochs.

Shorter forecast horizons of 30 and 45 minutes were initially planned, but their performance did not improve enough to justify offering them in the web application. Longer horizons of 6, 12, and 24 hours were also considered, but performance dropped too much. For these longer horizons, the models would most likely train better on hourly data than on minute-level data.

The model’s performance was compared across all time horizons for Bitcoin. As can be seen in Figure 8 and Table 5, the performance metric values remain stable up to the 240-minute forecast. However, when the horizon is extended to 1440 minutes, these values increase considerably.