Submitted: 27 February 2025

Posted: 28 February 2025

Abstract

Multimodal image analysis is an important research direction in computer vision and underpins tasks such as image captioning and visual question answering (VQA). However, existing visual-language fusion methods often fail to capture fine-grained interactions between the visual and language modalities, which leads to suboptimal fusion. To address this issue, this paper proposes a visual-language fusion model based on a Cross-Attention Transformer, which uses cross-attention to build deep interactions between the two modalities and thereby fuse their features effectively. The model first extracts visual features with a convolutional neural network (CNN) and language features with a pre-trained language model (e.g., BERT), and then applies cross-attention modules to capture the mutual dependencies between the two feature sequences, producing a unified multimodal representation vector. In experiments on image captioning and VQA, the proposed model outperforms the baseline methods; on COCO Caption, for example, it reaches a CIDEr score of 145.3 compared with 134.7 for the strongest baseline, M4C. Visualization analysis and ablation experiments further examine the contribution of the cross-attention mechanism to model performance, and the paper also discusses the model's limitations and potential future improvements.

1. Introduction

2. Related Concepts and Applications

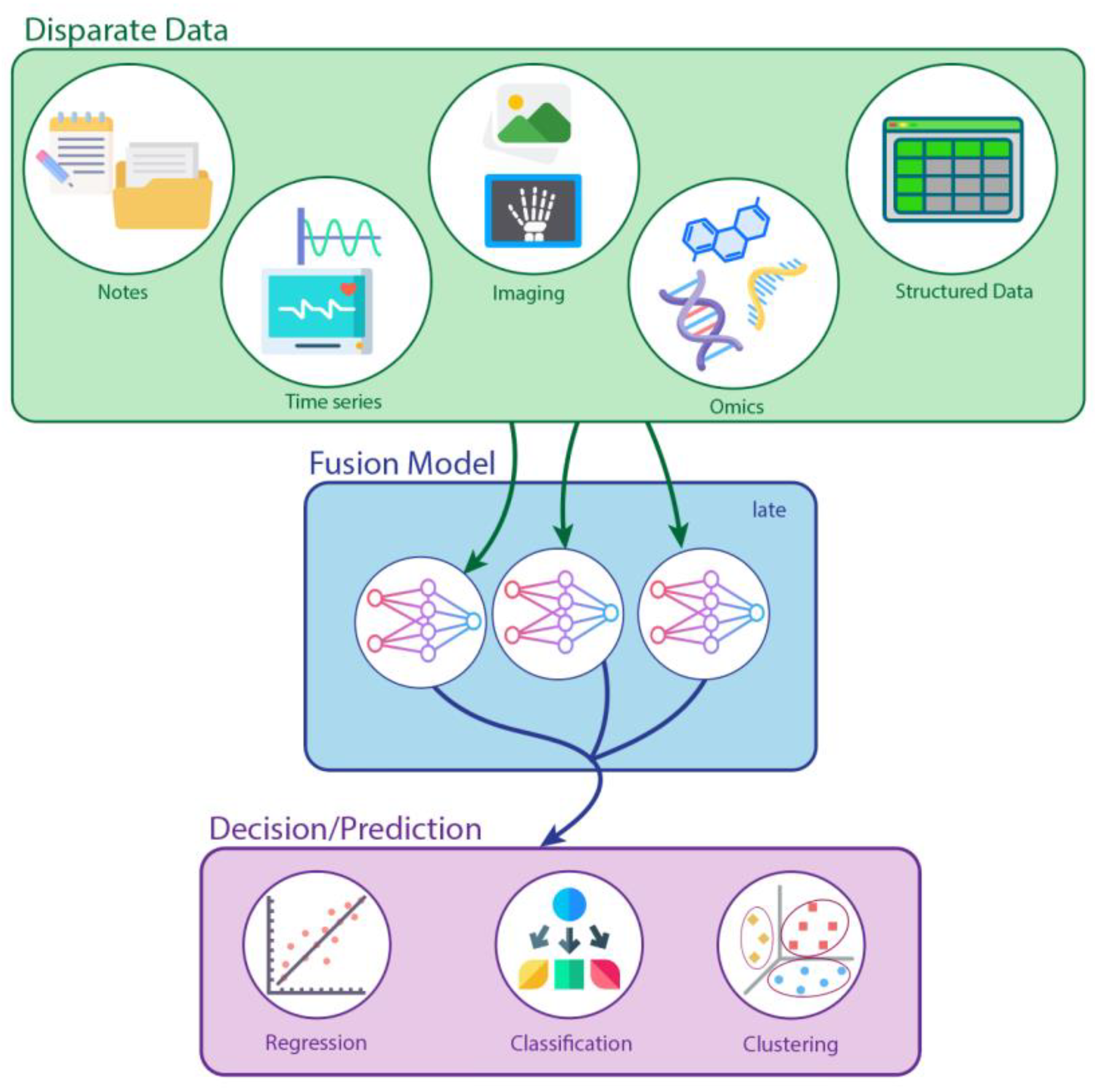

2.1. Multimodal Learning and Fusion Methods

2.2. Application of Cross-Modal Attention Mechanism in Visual-Language Fusion

3. Architecture and Strategy

3.1. Model Architecture Design

3.2. Cross-Attention Mechanism and Fusion Strategy
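
The abstract describes this stage as applying cross-attention modules to the CNN and BERT feature sequences to obtain a unified multimodal representation vector. The following is a minimal single-head sketch of that idea in NumPy, not the authors' implementation. The random arrays stand in for real CNN and BERT outputs, and the feature sizes, the separate projection matrices per direction, and the mean-pool-and-concatenate fusion step are all assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head scaled dot-product attention where `queries` attend to `context`."""
    q = queries @ w_q                         # (n_q, d)
    k = context @ w_k                         # (n_c, d)
    v = context @ w_v                         # (n_c, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (n_q, n_c)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
d_model = 16                                       # assumed shared feature width
visual_feats = rng.normal(size=(49, d_model))      # stand-in for 49 CNN region features
text_feats = rng.normal(size=(12, d_model))        # stand-in for 12 BERT token features

def random_projections():
    # Hypothetical untrained projection matrices (W_q, W_k, W_v).
    return [rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(3)]

# Text tokens attend to image regions, and image regions attend to text tokens.
text_attended, text_to_visual = cross_attention(text_feats, visual_feats, *random_projections())
visual_attended, visual_to_text = cross_attention(visual_feats, text_feats, *random_projections())

# Assumed fusion step: mean-pool each attended sequence and concatenate.
fused = np.concatenate([text_attended.mean(axis=0), visual_attended.mean(axis=0)])
print(fused.shape)
```

Here `fused` has `2 * d_model = 32` entries. A trained model would learn the projection matrices and would typically use multiple heads, residual connections, and layer normalization, none of which are shown in this sketch.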

3.3. Loss Function and Optimization Strategy

4. Experiments and Analysis

5. Conclusion

| Model | Dataset | BLEU-1 | BLEU-2 | CIDEr | METEOR |
|---|---|---|---|---|---|
| LSTM-Attention | COCO Caption | 73.2 | 55.4 | 112.8 | 27.1 |
| Dual-Attention | COCO Caption | 76.5 | 58.9 | 123.4 | 28.3 |
| M4C | COCO Caption | 79.1 | 61.2 | 134.7 | 30.2 |
| Proposed Model | COCO Caption | 82.4 | 64.7 | 145.3 | 32.5 |
| LSTM-Attention | Flickr30k | 68.9 | 52.1 | 97.6 | 26.2 |
| Dual-Attention | Flickr30k | 72.8 | 55.4 | 108.3 | 27.4 |
| M4C | Flickr30k | 74.5 | 57.1 | 115.7 | 29.1 |
| Proposed Model | Flickr30k | 77.8 | 60.2 | 126.5 | 30.8 |

| Model | Dataset | Top-1 Accuracy | Top-5 Accuracy |
|---|---|---|---|
| LSTM-Attention | COCO Caption | 53.1 | 78.4 |
| Dual-Attention | COCO Caption | 58.7 | 81.3 |
| M4C | COCO Caption | 59.2 | 83.5 |
| Proposed Model | COCO Caption | 64.3 | 87.2 |
| LSTM-Attention | Flickr30k | 48.7 | 73.9 |
| Dual-Attention | Flickr30k | 52.9 | 76.5 |
| M4C | Flickr30k | 55.1 | 79.8 |
| Proposed Model | Flickr30k | 60.4 | 83.1 |

| Model Configuration | CIDEr Score |
|---|---|
| Full Model | 145.3 |
| Remove Cross-Attention | 136.8 |
| Remove CBAM Module | 140.0 |
| Visual Features Only | 121.4 |
| Text Features Only | 118.9 |
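
To make the ablation easier to read, the CIDEr drop of each configuration relative to the full model can be worked out directly from the table. The short script below is only an arithmetic aid over the reported scores; the variable names are illustrative and it does not reproduce any experiment.

```python
# CIDEr scores copied from the ablation table above.
ablation_cider = {
    "Full Model": 145.3,
    "Remove Cross-Attention": 136.8,
    "Remove CBAM Module": 140.0,
    "Visual Features Only": 121.4,
    "Text Features Only": 118.9,
}

full = ablation_cider["Full Model"]

# Absolute drop in CIDEr for every ablated configuration.
drops = {
    name: round(full - score, 1)
    for name, score in ablation_cider.items()
    if name != "Full Model"
}

# Print configurations from smallest to largest drop.
for name, drop in sorted(drops.items(), key=lambda item: item[1]):
    print(f"{name}: -{drop:.1f} CIDEr ({100 * drop / full:.1f}% of the full model's score)")
```

Removing cross-attention costs 8.5 CIDEr points and removing the CBAM module costs 5.3, while the single-modality configurations lose 23.9 (visual only) and 26.4 (text only).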

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).